Day 3 (by my counting; HPA calls this Day 2, because Tuesday’s Super Session doesn’t count) covered LTO-5, LTFS, IMF, HDSLR, OLED, FIMS, SOA, SLA, monitors vs. displays, file-based mastering, Hollywood in the cloud, and Disney restorations.

These Tech Retreat posts are barely-edited stream-of-consciousness note-taking; there’s no other way to grab all this info in a timely manner, get it published, and still get enough sleep for the next day’s sessions!

I often use “distro” as shorthand for “distribution”, and “b’cast” for “broadcast”. You have been warned.

Washington Update – Jim Burger, Dow Lohnes

The year in copyright law and communications

Viacom vs YouTube: Viacom claiming > $1 billion in damages from YouTube’s “actual knowledge” of infringement. YouTube says it’s OK in the DMCA’s “safe harbor” since it takes down infringers as soon as notified. Judge agreed; you need to have “specific knowledge” of infringing content to be liable. YouTube did not go beyond “storage at the discretion of users”, with no financial benefit directly attributable to that specific content. Viacom appealed in Dec 2010.

DMCA anti-circumvention case, MDY vs Blizzard. MDY made a software bot for World of Warcraft. Blizzard alleged that the bot circumvented its bot-protection software. MDY says it’s not a copyright violation; Blizzard simply boots off bots. The 9th Circuit agreed with Blizzard: a DMCA anti-circumvention violation doesn’t require copyright infringement.

Broadcasters vs FilmOn and ivi for live streaming of OTA (over the air) content over the ‘net. They claim they have the same rights as cable / satellite companies for retransmission. Temporary injunction against FilmOn.

The “IP Czarina”: Victoria Espinel appointed as Obama’s IP Enforcement Coordinator.

“Website seizures” – DoJ and ICE seizing the domain names of websites that allegedly contribute to copyright infringement, e.g., www.pirates.com, and displaying a copyright-violation warning in their place. Controversial; unilateral action; jurisdiction issues; DNS pollution. “Let’s get headlines.”

National Broadband Plan – 4 key areas: competition, availability of spectrum, enhancing use of broadband, maximizing broadband use. Long term goal: 100 Mbps down / 50 Mbps up. “Looming spectrum shortage”, claims that by 2014 mobile traffic will be 35x 2009 levels (but with no data to back this up). Plan to free 300 MHz spectrum in 5 years, 500 MHz in 10 years; 120 MHz from broadcast bands. Reallocate broadcast spectrum via “voluntary” auctions, channel-sharing, “improved VHF”, MDTV.
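To put the 35x projection in perspective, here is the compound annual growth rate it implies, as a small Python sketch (Python is used here only for illustration; the function name is hypothetical):

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Compound annual growth rate implied by growing `multiple`-fold over `years`."""
    return multiple ** (1.0 / years) - 1.0

# 2009 -> 2014 is five years; 35x over that span is roughly doubling every year.
rate = implied_annual_growth(35, 2014 - 2009)
print(f"{rate:.0%} per year")  # prints "104% per year"
```

In other words, the claim amounts to mobile traffic a bit more than doubling every year, five years running.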

Net Neutrality – Wireline can’t discriminate, block, or tier service. Wireless has no anti-discrimination provision. Verizon jumps the gun; “they’ve changed our license.”

21st Century Communications and Video Accessibility Act – Oct. 2010 law reinstating video descriptive audio [see yesterday’s VI discussion]; mobile devices and web need to be able to display closed captions and display EAS alerts (even on iPods???), smartphone web browsers need to be accessible and all phones have to be hearing-aid compatible, etc. Jim: with all the problems we’re having in the country, this is what Congress is focusing on???

HD SLRs: Really? – Michael Guncheon, HDVideoPro/HDMG

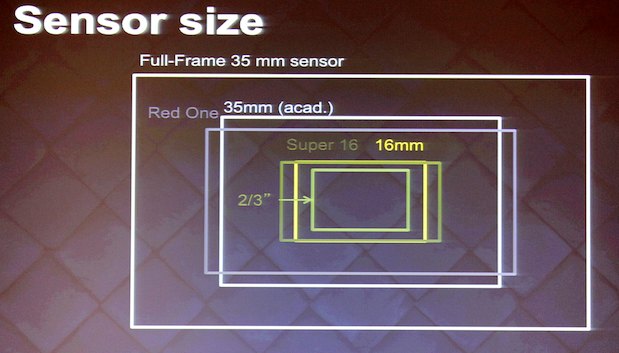

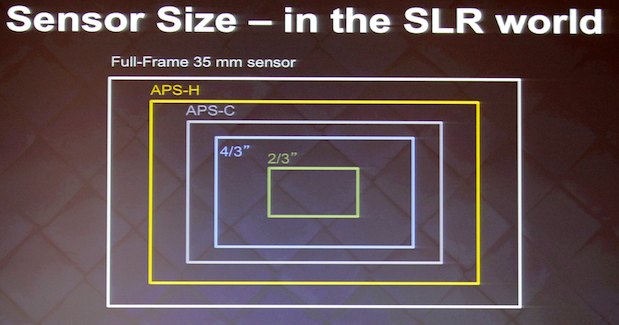

Traditional DSLRs can’t do video. Live view came in around 2004 (in an astrophotography camera), in 2007 for Canon. Mechanical focal-plane shutters would wear out after about 104 minutes of 24fps operation. Why HDSLRs? Large sensors with shallow depth of field capability.
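The 104-minute figure falls out of simple arithmetic if you assume one shutter actuation per video frame and a rating of 150,000 actuations. That rating is an assumption (a typical figure; the talk didn’t give one, and ratings vary by body):

```python
def shutter_life_minutes(rated_actuations: int, fps: float) -> float:
    """Minutes of continuous shooting before a mechanical shutter reaches its
    rated life, assuming one actuation per video frame."""
    return rated_actuations / fps / 60.0

# Assumed rating of 150,000 actuations, shooting 24 fps.
print(round(shutter_life_minutes(150_000, 24)))  # prints 104
```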

2008: Nikon D90, Canon 5D Mk II; autoexposure only; D90 720/24p and 5D 1080/30p (really 30, not 29.97). No external audio on the Nikon; both cameras auto-gain audio only. Canon users would set exposure, twist the lens half off to decouple aperture control and fix the setting, or buy Nikon manual lenses to use with adapter. Magic Lantern hackware. Canon updated firmware for manual exposure, 29.97, AGC off. Magic Lantern no longer being developed.

2nd gen cameras: 7D, T2i, GH1, various Nikons, etc.

Problems / learning curves: frame rate vs. shutter angle; auto exposure; lens noise pickup; ISO vs. aperture vs. shutter speed (depth of field changes are so much more dramatic on large-sensor cameras). Filters: Singh-Ray variable ND. Image settings: can’t shoot RAW video, so you have to be careful: get the look in-camera vs. shoot a bit conservatively vs. shoot totally flat. What’s your goal / what’s your workflow? Canon Picture Style Editor on Mac and PC; pre-made styles on web (search for “5D picture styles”).
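For shooters coming from film, the frame-rate vs. shutter-angle translation is just exposure time = (angle / 360) / fps. A minimal Python sketch (helper names are hypothetical) that uses exact fractions so 1/48 stays 1/48:

```python
from fractions import Fraction

def exposure_time(shutter_angle_deg, fps):
    """Exposure per frame, in seconds, for a rotary-shutter angle at a given frame rate.
    Pass ints or Fractions to keep the result exact."""
    return Fraction(shutter_angle_deg) / 360 / Fraction(fps)

def equivalent_angle(exposure_s, fps):
    """Shutter angle, in degrees, equivalent to a DSLR shutter speed at a given frame rate."""
    return 360 * Fraction(exposure_s) * Fraction(fps)

print(exposure_time(180, 24))                   # 1/48
print(exposure_time(180, Fraction(30000, 1001)))  # 1001/60000 at 29.97
print(float(equivalent_angle(Fraction(1, 50), 24)))  # 172.8
```

Since DSLRs offer shutter speeds like 1/50 rather than 1/48, a common 24p choice works out to a 172.8° equivalent, close to the classic 180° motion look.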

Audio: built-in mics not great; Canon 3.5mm minijack, manual level on 5D (but not 7D), no live level monitoring or headphone monitoring. sounddevices.com review of 5D audio. BeachTek, JuicedLink audio adapters.

Record time: memory card limitations. CF cards use FAT32, with a 4 GB max file size, hence the 12-minute limit for Canon 1080p.
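The 12-minute ceiling is roughly what the FAT32 cap implies at Canon’s H.264 data rates. A quick check in Python, where the ~45 Mbit/s average bitrate is an assumption (actual rates vary with scene content and camera model):

```python
# FAT32 stores file size in a 32-bit field, so one file tops out at 2**32 - 1 bytes.
FAT32_MAX_FILE_BYTES = 2**32 - 1

def max_clip_minutes(bitrate_mbps: float) -> float:
    """Longest single clip, in minutes, that fits under the FAT32 file-size cap."""
    return FAT32_MAX_FILE_BYTES * 8 / (bitrate_mbps * 1e6) / 60.0

# Assumed average bitrate; not a published camera spec.
print(f"{max_clip_minutes(45):.1f} min")
```

At that assumed rate one file holds a little under 13 minutes; lower average rates stretch it, busier scenes shrink it.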

Heat limitations; cameras overheat when run in live view / video recording for too long.

HDMI monitor issues: using HDMI kills the built-in LCD; resolution drops in record mode (5D drops from 1080i to 480p). Monitors from Ikan, SmallHD, etc.

Ergonomics: all sorts of rigs (Redrock Micro, Zacuto, Cinevate), LCD loupes.

Michael Guncheon shows off a 7D in a Redrock carbon-fiber rod rig.

Focus: be careful what you wish for; focus is very shallow. Still lenses don’t work well with follow-focus mechanisms, often aren’t repeatable. Adapted cine lenses (Zeiss CP.2s) about $4k each.

Other limits: CMOS rolling shutter. The Foundry’s Rolling Shutter fix-it software. Aliasing. Dust on the sensor when lenses are changed. Color fringing, highlight issues. Codecs and on-card file structures. FCP uses Log and Transfer. PluralEyes for double-system audio sync.

Question: compression artifacts noticeable? It’s more the chroma artifacts that cause problems.

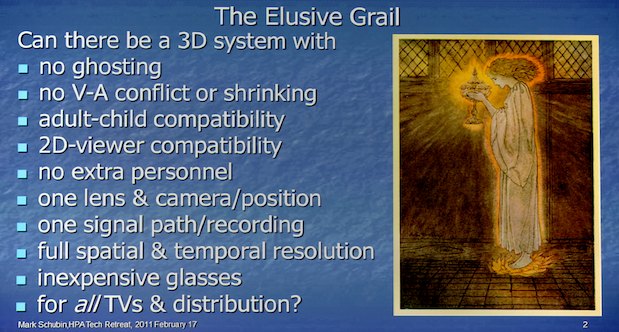

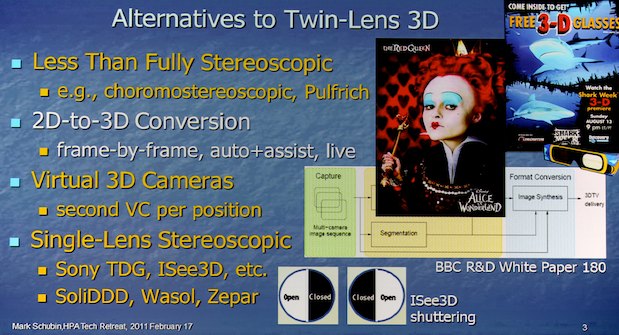

Alternatives to Two-Lens 3D – Mark Schubin, SchubinCafe.com

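Pulfrich Illusion: brighter images are perceived in real time, while darker images are processed with a slight delay. Put a dark filter over one eye and anything moving sideways appears displaced in depth; you can use this to see depth via motion.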
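The resulting depth cue is just motion times delay: if the filtered eye’s view is perceived a few milliseconds late, that eye sees a moving object slightly behind where the other eye sees it, producing horizontal disparity. A toy Python calculation, where the 15 ms lag and 600 px/s speed are made-up illustrative numbers:

```python
def pulfrich_disparity_px(speed_px_per_s: float, delay_ms: float) -> float:
    """Horizontal offset between the two eyes' views of a moving object when the
    darker (filtered) eye's image is perceived `delay_ms` later than the other.
    A negative speed (opposite direction) flips the offset, and the apparent depth."""
    return speed_px_per_s * delay_ms / 1000.0

# Illustrative numbers only: an object crossing at 600 px/s, an assumed 15 ms lag.
print(pulfrich_disparity_px(600, 15))  # 9.0 px of disparity
print(pulfrich_disparity_px(0, 15))    # stationary object: 0.0, no depth cue
```

Stationary objects get no disparity, and reversing the direction of motion flips the apparent depth, which is why Pulfrich material depends on steady lateral motion.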

View shifting, or “wiggle vision”: constant motion of the viewpoint (V3 lens adapter); see www.inv3.com/examples_section.html.

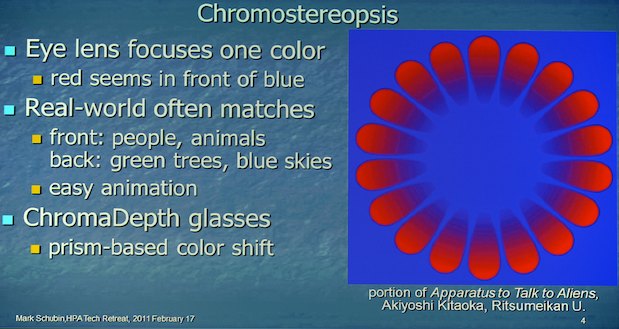

Chromostereopsis:

ChromaDepth used on VH1; Pulfrich on Discovery; view shift on CBS, anaglyph all over.

Virtual camera 3D: Imec, BBC research; use multiple cameras to capture and define a 3D space, then you can fly a virtual camera through that space.

The “other stereo” (audio): widely-spaced mics used as early as 1881 for exaggerated effects; now we typically use “single-source” stereo with coincident mics; less dramatic but more realistic.

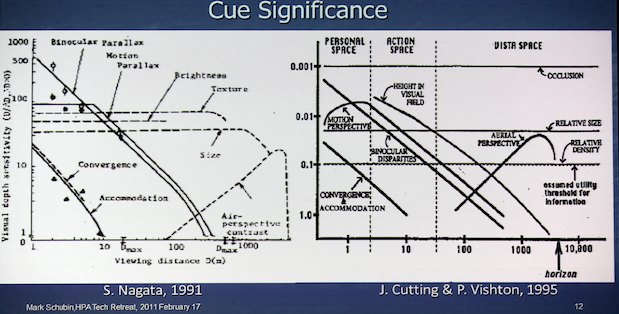

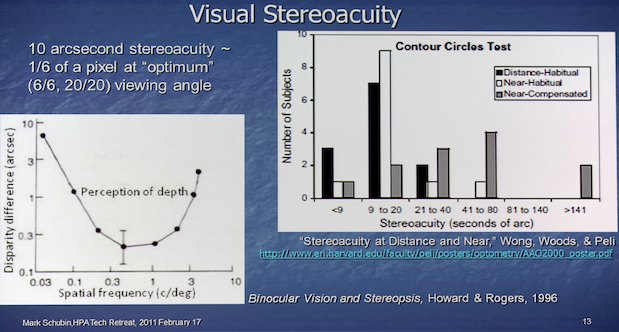

Visual cue significance:

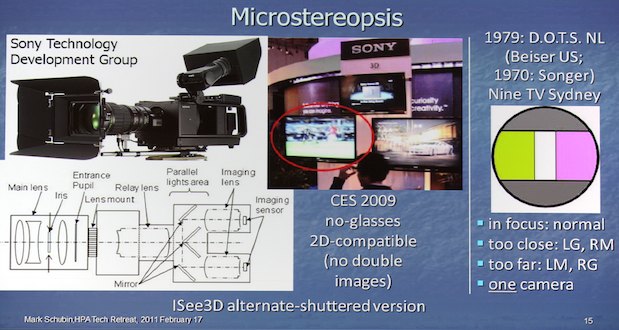

Lots of 3D cameras have been shown or sold with small interaxials (microstereopsis).

The upside of these alternatives: the patents have expired, they’ve been broadcast successfully, and the 3D is watchable as 2D with no ghosting. The downside: no real wow factor.

More details: www.schubincafe.com, “Some Aspects of 3D in Depth”

Single-Lens Stereoscopy – Neal Weinstock, SoliDDD

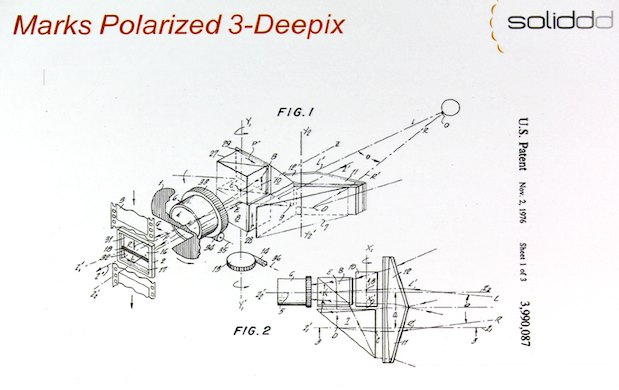

Single-lens 3D began in 1948 with “Queen Juliana”, using the Dutch Veri-Vision system. The “silver age” of single-camera 3D was the 1960s: the 1966 SpaceVision over/under lens, Marks Polarized 3-Deepix.

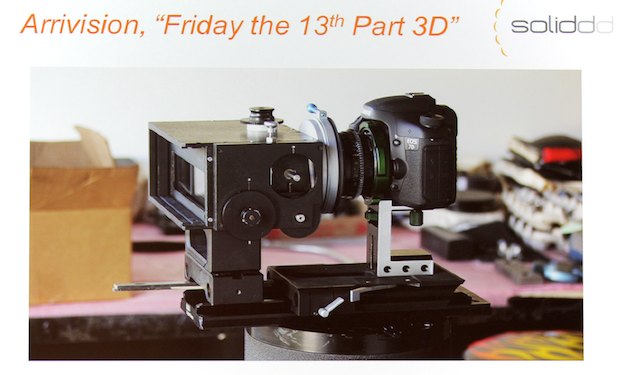

StereoVision and “The Stewardesses”. Arrivision for “Jaws 3D”, “Friday the 13th”. Dimension 3, D.O.T.S., Trumbull Digital Cyberscope, etc.

Basically, these systems use prisms or mirrors to combine two images onto one piece of film. The Arrivision adapter sat in front of the lens, with fixed IO (interocular distance) and adjustable convergence and vertical alignment.

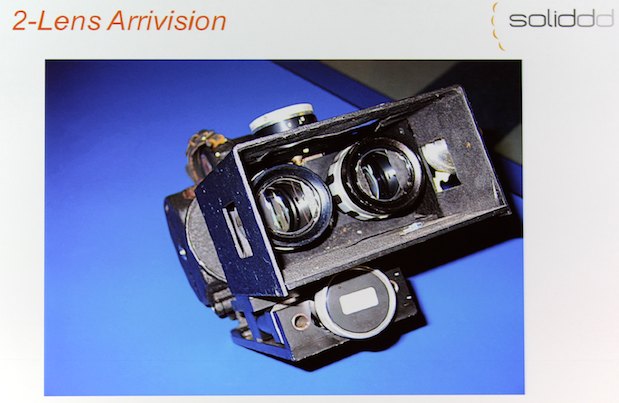

Arrivision two-lens system put the combiner between lenses and camera.

Problems: keystoning, vignetting, sensor utilization, stray light bounces, repositioned focal length and distance of prime lenses, light sensitivity, eyeballing the alignment of the adapter.

Advantages: no alignment issues between views, lighter and smaller, one camera is always matched to itself, theoretically easier to rack focus, convergence, IO, integrated lens control.

Problems that have gone away: old C-mount lenses (now we have PL), design issues solved with new optical design software; existing 3D rig infrastructure and general camera tech advances help out overall.

Current attempts: the Sony single-lens design with split light paths and two sensors internally. ISee3D, shuttered alternating views, single sensor and lens, consumer prototype at CES. Loreo, two-lens adapter for consumer SLRs, over/under or side-by-side, about $150.

Things we can now achieve: single camera/lens rig preferred operationally, minimal light loss, adjustable IO, convergence, focus, zoom; precise optics with no polarizing effects; minimal resolution loss; use with film; output RT data in standard formats.

Questions: where will we be in 5 years? Would be nice for more intercamera communication, smart switchers, etc., to match convergence for multicamera shoots for example.

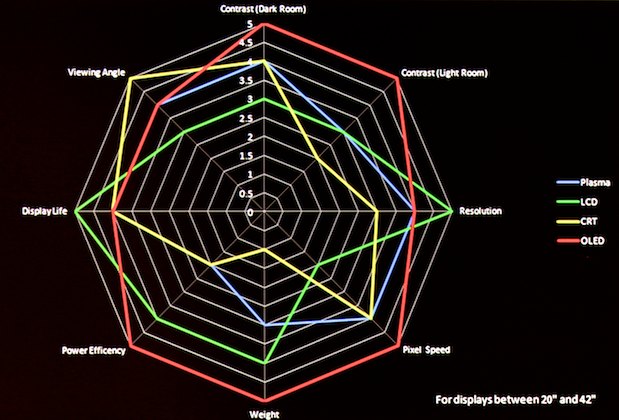

OLED & the Status of Its Development – Gary Mandle, Sony

In 2005, Sony CRT production shut down. OLED development started back in 1965: Sigmatron developed thin-film electroluminescence (TFEL); 1987, QVGA-resolution prototype by Planar, “bi-layer diode”, etc. 1994, Sony started OLED work; 2001, 13″ prototype; 2007, first Sony OLED TV; 2010, 7.4″ OLED pro monitor; now the 17″ and 25″ OLEDs.

How it works (“it’s not made out of banana peels”): [several eye-straining charts shown; too detailed to reproduce here. Discussion of electron/hole pairs, emissive layers, top emission architecture. -AJW]

TOLED (transparent, HUDs, aviation), SOLED (stacked, for lighting), P-LED (polymeric, flexible), AMOLED (active matrix OLED displays).

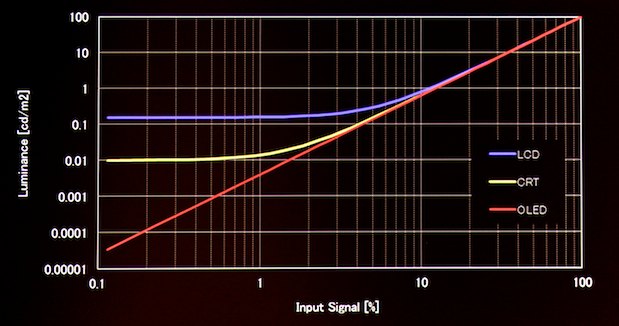

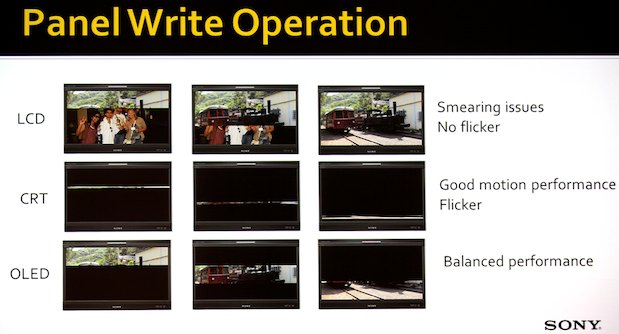

Advantages: far superior black levels and contrast ranges; “quite simply, OLED goes to black”. New signal processing with nonlinear cubic LUT to better render dark areas. OLED response/decay times are a lot quicker; virtually instantaneous, so no blur or smear.

OLED “on times” are controllable; monitors are set for about a 50% duty cycle to balance flicker against smear.
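Why the duty cycle matters: on any hold-type display, the eye tracks a moving object while each frame stays lit, so the perceived smear is roughly tracking speed times on-time. A first-order Python estimate; the 960 px/s pan and 60 Hz refresh are illustrative assumptions, and the model ignores the panel’s own (very fast) response time:

```python
def hold_blur_px(speed_px_per_s: float, refresh_hz: float, duty_cycle: float) -> float:
    """First-order smear estimate for a sample-and-hold display: each frame stays lit
    for duty_cycle / refresh_hz seconds while the eye keeps tracking the motion."""
    if not 0 < duty_cycle <= 1:
        raise ValueError("duty_cycle must be in (0, 1]")
    return speed_px_per_s * duty_cycle / refresh_hz

print(hold_blur_px(960, 60, 1.0))  # 16.0 px: full-frame hold
print(hold_blur_px(960, 60, 0.5))  # 8.0 px: 50% duty cycle
```

Halving the on-time halves the smear, but the shorter the on-time, the more visible the flicker, hence the compromise at about 50%.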

Current panels: 10-bit drivers, 1920×1080, P3 gamut, top emission, 30,000-hour lifetime (like BVM CRT).

Trimaster EL monitors have 3G SDI, HDMI, DisplayPort, also accessory slots for other inputs.

Understanding Reference Monitoring – Charles Poynton

The session name is wrong: we should retire the word “monitor”. It’s a passive word. Approving material is active, so we should use the active word “display”. BVM Broadcast Video Monitor? A lot of content isn’t broadcast, much of it isn’t video, and it’s a display, not a monitor!

Studio reference displays – ten random ideas:

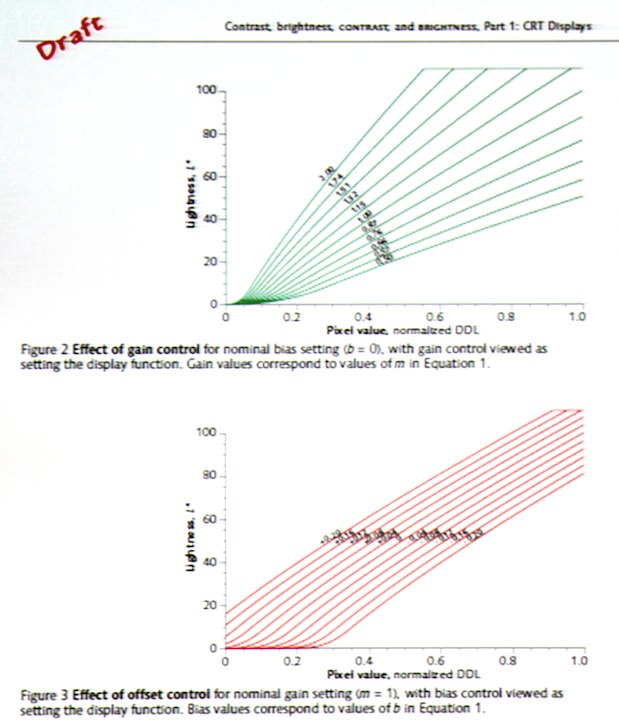

1. Displays have a nonlinear response to “voltage.” It’s a key point for getting good images from a limited number of bits (8 or 10); it scales like the eye’s response to light.

2. A consumer display, or a pro display, cannot “improve” or “enhance” the picture. Yes, electrical pre-compensation for display technology issues is OK, but you can’t make the picture “better.”

3. The correct EOCF (electro-optical conversion function) is almost never the inverse of the OECF (opto-electrical conversion function). For typical rather dim displays (80-320 nits) in dim environments (1-50 lux) picture rendering or “appearance mapping” is necessary to make the image “visually correct”. Creative intent intervenes; you want the display to show what the image creator intended. The Rec.709 document is screwed up: it standardizes the camera (and Charles signed the document, too!). The document should have standardized the display instead. It’s more important to standardize how a stored picture is rendered than to specify how the camera makes that stored picture in the first place; any DP will muck about with the camera settings to generate the look he or she wants. The only thing standardized on displays is the 2.4 gamma, but not white or black levels.

4. HD gamma is not 2.2: BT.709 OECF approximates a 0.5 power (not 0.45); studio class CRTs exhibit a 2.4 power.

5. See #3 about the 709 standards mistake.

6. “Brightness” and “contrast” are highly ambiguous terms. Sometimes each control seems to do what the other should. The controls aren’t even calibrated on most displays. Contrast is really gain, brightness really controls DC offset.

Tweaking “brightness” (black level) markedly affects perceived contrast ratio; adjusting “contrast” (gain) changes the average brightness of the image. There are papers from half a century ago that complain about these ambiguous names.

7. Black is set using PLUGE but it’s slightly above absolute black. So don’t clip your blacks… also, don’t clip your whites; we don’t need legalizers in the digital age. Doing wide-gamut requires turning off the clippers anyway.

8. [Something Charles skipped past too fast for me to record. -AJW]

9. Poynton’s Third Law: “After approval and mastering, creative intent can’t be separated from faulty production.” Thus “enhancers” that denoise, change color/luma mapping, change frame rates are damaging. Example: removing picture noise from “Blair Witch” breaks the show.

10. We’ve never had a realistic standard for code-to-light mapping – the EOCF.
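Three of these points (1, 4, and 6) are easy to check numerically. The Python sketch below models an idealized display as a pure power law; the 1% test luminance, the 0.02 and 0.05 offsets, and all function names are illustrative assumptions, and real displays (and the full BT.709 document) have more going on:

```python
import math

def relative_step(luminance, bits, gamma):
    """Point 1: relative luminance jump between adjacent code values near `luminance`
    (fraction of peak) on a pure power-law display L = V**gamma.
    Treats the code value as continuous, so this is an approximation."""
    max_code = 2**bits - 1
    code = max_code * luminance ** (1.0 / gamma)  # code value that yields this luminance
    return ((code + 1) / max_code) ** gamma / luminance - 1.0

def bt709_oecf(L):
    """Point 4: Rec. 709 camera curve, scene-linear L (0..1) to signal V."""
    return 4.5 * L if L < 0.018 else 1.099 * L ** 0.45 - 0.099

def effective_exponent(f, L):
    """Exponent p such that f(L) == L**p at this one point."""
    return math.log(f(L)) / math.log(L)

def displayed_luminance(V, gain, offset, gamma=2.4):
    """Point 6: 'contrast' as gain, 'brightness' as DC offset, ahead of the power law.
    Negative drive is clipped, since a display can't emit less than nothing."""
    return max(gain * V + offset, 0.0) ** gamma

def contrast_ratio(gain, offset):
    black = displayed_luminance(0.0, gain, offset)
    return math.inf if black == 0 else displayed_luminance(1.0, gain, offset) / black

# At 1% of peak: linear 8-bit coding jumps ~39% per code; a 2.4 power law ~6.5%.
print(relative_step(0.01, 8, 1.0), relative_step(0.01, 8, 2.4))
# At mid-grey: the 709 OECF acts like a ~0.52 power; with a 2.4 display, ~1.25 end to end.
print(effective_exponent(bt709_oecf, 0.18),
      effective_exponent(lambda L: bt709_oecf(L) ** 2.4, 0.18))
# A small black-level (offset) change costs roughly 8x in contrast ratio.
print(contrast_ratio(1.0, 0.02), contrast_ratio(1.0, 0.05))
```

The end-to-end exponent a bit above 1 is the picture-rendering boost point 3 describes for dim viewing: it arises because camera and display curves are not inverses of each other.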

Reference Monitoring Panel

– Moderator: Paul Chapman, Fotokem

– Charles Poynton

– Dave Schnuelle, Dolby

– Jim Noecker, Panasonic

– Gary Mandle, Sony

Things were well standardized in the CRT world. Now, with home video mastering on a plasma, what do we do with room setup? CRTs had a lot of reflectance off the faceplate and phosphor (about 60%). With LCD or Plasma, only 1% reflectance, so can turn up the lights. But with a different surround brightness, that changes gamma (that’s why d-cine is 2.6, sRGB is 2.2). Your perception of contrast increases as surround lighting goes up. If the reflectance of the monitor is low (Dolby monitor is about 0.5%), you can see a lot more in the shadows even with brighter surround lighting.
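The reflectance figures translate directly into usable contrast. Treating the screen as a Lambertian reflector, room light adds reflectance × illuminance / π cd/m² to every pixel, black and white alike. A Python sketch with a hypothetical display (100-nit white, 0.05-nit black) in a hypothetical 50-lux room, using the reflectances quoted above:

```python
import math

def ambient_contrast(white_nits, black_nits, reflectance, ambient_lux):
    """Effective contrast ratio under room light, assuming a Lambertian screen:
    reflected luminance = reflectance * illuminance / pi, added to white and black."""
    reflected = reflectance * ambient_lux / math.pi
    return (white_nits + reflected) / (black_nits + reflected)

# Hypothetical display and room; reflectances as quoted by the panel.
for name, r in [("CRT-like", 0.60), ("LCD/plasma", 0.01), ("Dolby-like", 0.005)]:
    print(name, round(ambient_contrast(100, 0.05, r, 50)))
```

Under those assumptions the CRT-like faceplate drops to roughly 11:1, 1% reflectance gives roughly 480:1, and 0.5% roughly 770:1, which is why low-reflectance panels let you turn the room lights up.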

But consumer viewing environments are all over the map; you need to have benchmarks for consistent mastering environments. Yes, calibration changes based on viewing environment. Concern: if you’re mastering in a dark room, how does that translate to the brighter home environment? How do we characterize that? How do we standardize that? Easier with the controlled environment of a cinema. Well, how far do you want to chase that? TVs and iPods both? Is it even worth trying to accommodate everything?

How is reflectivity controlled on the new monitors? Various antireflection films are incorporated in the display panels.

Calibration for all these different displays? Do we need different calibrators for the different display types (panels, projectors, etc.)? There’s no standard that says you should master HD content at 100 nits, so it’s all up for grabs… Another parameter belongs in the standard: we don’t master stills, we master mopix, so we need a standard for dynamic picture changes.

Some displays emit narrow bands of color; will all colorimeters work properly on narrow-band displays? Tristimulus calibrators trace back to incandescent standards at NIST, but red phosphors especially are all over the map. Use spectroradiometers? The closer you get to wide gamut, the narrower spectrum the emitters need to be. Some calibrators respond to polarization, and will give different results mounted vertically or horizontally. The master calibrator needs to be a spectroradiometer. Most tristimulus probes can’t handle wide-gamut devices (again, energy in bands outside what the probes are looking for). Colorimeters OK for CCFL, but not OLED, tristimulus RGB backlighting. Everyone on panel agrees: you need a spectroradiometer.

High dynamic range monitoring? Content creation community has got to decide whether to take control of the consumer viewing experience for wide gamut, or let the CE guys drive it. You can go to any CE store and find wide-gamut color, but they’re warping your Rec.709 masters into something they think will sell more TVs. Same for HDR: spatially-modulated backlight (a.k.a. local dimming), but nobody is mastering content on those displays. If you’re mastering in P3, and doing a “trim pass” for 709, then the CE guys are warping it back to their idea of wide gamut and HDR. OTOH, cameras are shooting wider ranges, and ACES allows holding wide DR and wide gamut through the chain. Consumer displays with brightnesses of 500-600 nits but with good blacks; we’ll see where this goes professionally. Standards organizations need to tell the manufacturers what’s needed for pro displays.

Support for IIF ACES ODT in new monitors? Sony is looking into it. Panasonic can’t say; plasma can generate greater bit depths, but can’t say when that will happen. Dolby has 12-bit RGB inputs, working with Academy on “log ACES format” for the display.

Metamerism is when different spectra produce the same perceived color. With spiky, narrow-band display primaries, individual observers’ color matches diverge (observer metamerism), so different people will see different colors on the same display.

Interoperable Mastering/Interoperable Media Panel

– Moderator: Howard Lukk, Disney

– Annie Chang, Disney

– Brad Gilmer, Advanced Media Workflow Association

IMF is the Interoperable Master Format. What is it? The problem for nontheatrical distro (Blu-ray, iTunes, PPV, etc.): need a lot of masters in different codecs, frame rates, resolutions, aspect ratios, 42 different languages… it gets insane. Too much to store. Solution: IMF. Single file format, standardized. Minimize storage, uses mezzanine codec, main content plus version deltas. USC ETC draft in September, version 1.0 published next week.

Normally, take DIs and create film-outs, DCPs, and all the nontheatrical masters (typically a Rec.709 master from which others are derived). The IMF replaces that Rec.709 master. (IIF ACES for production, output made into IMF; some elements of the DCP may be used in the IMF.) Not designed for archive, but could be used in archiving.

Key Concepts: high quality final master. Components wrapped in track files: essence (AV), data essence (captioning / subtitles), dynamic metadata (pan ‘n’ scan). Composition playlists. Security.

Image essence up to 8K 60P stereo; 8-, 10-, 12-, or 16-bit; multiple color spaces and gamuts. Mezzanine compression or uncompressed. Compression requirements: MXF-wrapped, VBR or CBR, intraframe, spatial resolution scalability, an industry standard; hence JPEG 2000 was chosen.

Uncompressed audio only, 24 bit, 48-96 kHz including fractional “NTSC” rates.

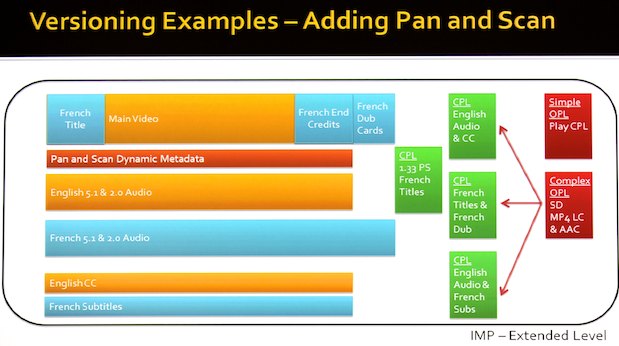

Dynamic metadata based on timecode, color correction changes, 3D data, pan ‘n’ scan. Pan ‘n’ scan info for full x/y moves, zooms, squeezes, using a single full-aperture image. Different pan ‘n’ scan data for different output aspect ratios.
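A sketch of the pan ‘n’ scan idea in Python: keep one full-aperture image, and derive each output aspect ratio’s crop from timecoded keys. The key structure and names are hypothetical, not IMF’s actual metadata schema; keys are step-held here (no interpolation between them), and the 2048×858 scope source is an illustrative assumption:

```python
import bisect
from dataclasses import dataclass

@dataclass(frozen=True)
class PanScanKey:
    frame: int             # frame at which this framing takes effect
    center_x: float = 0.5  # crop center as a fraction of source width
    zoom: float = 1.0      # 1.0 = largest crop of the target aspect that fits

def crop_for(src_w, src_h, target_aspect, key):
    """Crop rectangle (x, y, w, h) in source pixels for one output aspect ratio."""
    if src_w / src_h > target_aspect:   # source is wider: use full height
        w, h = src_h * target_aspect, src_h
    else:                               # source is taller: use full width
        w, h = src_w, src_w / target_aspect
    w, h = w / key.zoom, h / key.zoom
    x = min(max(key.center_x * src_w - w / 2, 0.0), src_w - w)  # keep crop inside frame
    y = (src_h - h) / 2
    return round(x), round(y), round(w), round(h)

def key_at(keys, frame):
    """Framing in effect at `frame`: the last key at or before it (keys sorted by frame)."""
    i = bisect.bisect_right([k.frame for k in keys], frame) - 1
    if i < 0:
        raise ValueError("no pan 'n' scan key at or before this frame")
    return keys[i]

keys = [PanScanKey(0), PanScanKey(100, center_x=0.0)]
print(crop_for(2048, 858, 16 / 9, key_at(keys, 50)))   # centered 16:9 extraction
print(crop_for(2048, 858, 16 / 9, key_at(keys, 100)))  # panned hard left
```

A different target aspect (4:3, say) reuses the same image with its own key list, which is the point of carrying pan ‘n’ scan as data rather than as extra masters.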

Based on MXF OP1a / AS-02 wrappers.

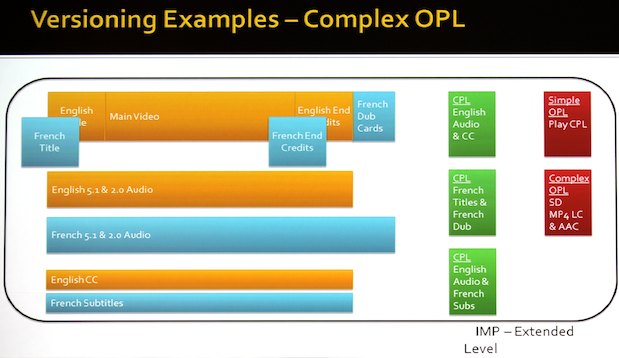

CPL: Composition playlist, like D-cinema CPL, XML, UUIDs (universally unique IDs) instead of paths. CPL points to essence segments in the IMF with one or more track files, creates entire program. Hierarchy: composition > sequence > segment > resource > track file. Track files can have handles, frames with repeat counts. OPL: Output Profile List to define things for certain devices, XML, linked to CPL. Simple OPL: play this CPL. OPL can add preprocessing parameters, like color space transforms, transcoding setups.
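To make the playlist idea concrete, here is a deliberately simplified Python model: resources point at track files by UUID, an asset map turns UUIDs into locations, and handles and repeat counts affect what actually plays. Class and field names are hypothetical and this is not the SMPTE CPL schema; it nests parallel sequences (picture, sound) inside each segment and assumes a single edit rate (frames) for every track:

```python
import uuid
from dataclasses import dataclass

@dataclass
class Resource:
    """One reference into a track file, by UUID rather than by path."""
    track_file_id: uuid.UUID
    entry_point: int       # first frame used; earlier frames act as a head handle
    duration: int          # frames played from entry_point
    repeat_count: int = 1  # e.g. a single frame repeated to make a hold

    def played(self) -> int:
        return self.duration * self.repeat_count

@dataclass
class Sequence:
    kind: str                  # "image", "audio", "subtitle", ...
    resources: list[Resource]

@dataclass
class Segment:
    sequences: list[Sequence]  # parallel tracks that play together

@dataclass
class Composition:
    id: uuid.UUID
    segments: list[Segment]

def segment_duration(seg: Segment) -> int:
    lengths = {sum(r.played() for r in s.resources) for s in seg.sequences}
    if len(lengths) != 1:
        raise ValueError(f"sequences in a segment disagree on duration: {sorted(lengths)}")
    return lengths.pop()

def composition_duration(cpl: Composition) -> int:
    return sum(segment_duration(seg) for seg in cpl.segments)

def resolve_paths(cpl: Composition, asset_map: dict[uuid.UUID, str]) -> list[str]:
    """Turn track-file UUIDs into locations via an asset map (KeyError if missing)."""
    return [asset_map[r.track_file_id]
            for seg in cpl.segments for seq in seg.sequences for r in seq.resources]
```

For example, 240 picture frames starting after a 24-frame head handle, followed by one frame held for 48 repeats, plays 288 frames; the matching audio sequence must also total 288 or the segment is rejected.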

Packaging and security: IMP Interoperable Master Package, containing composition (CPL + tracks), OPL, packing list, asset map. Security: digital signature for CPL and OPL; encryption TBD.

Basic and Extended levels: basic level is mostly what we do now; extended level(s) for future proofing: new standards? Basic: essence up to HD resolution, 30p or 30i; stereo, up to 10-bit; captions and subtitles; CPL and simple OPL. Extended: up to 8K 60P, audio with channel labeling; pan ‘n’ scan, color correction, stereo subtitle Z-axis info, 3D data; complex OPL.

Versioning:

Working group at SMPTE, ad hoc groups approved in March, should have Basic level this fall. SMPTE 10E Committee and 10E50 IMF Working Group. Join up and get involved!

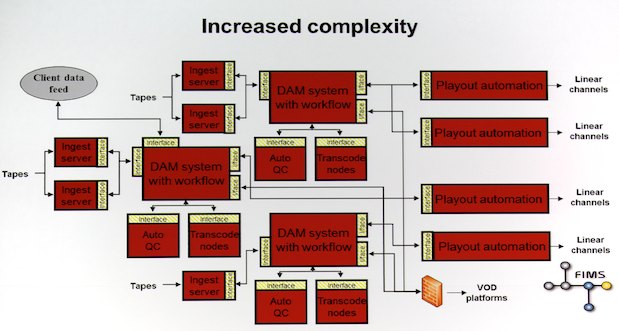

FIMS – Framework for Interoperable Media Services (AMWA, EBU). What problem is being solved? Around 2006, you’d have tapes going into ingest servers; a DAM (digital asset management) system; scheduling; playout automation; editing; a transcoder; it’s all great, right? But you wind up adding more DAMs (“one DAM system after another…”) each with its own workflow.

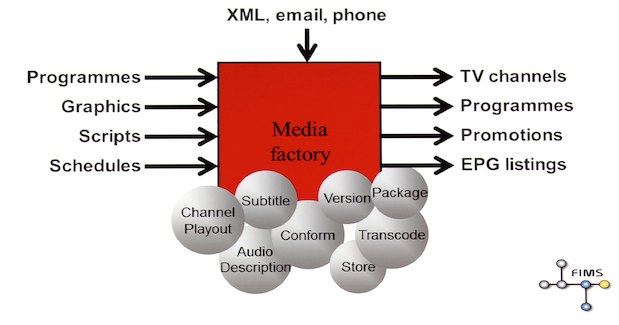

Instead: “Media Factory” with many services; requests and essence come in; finished products go out.

How to do it? Abstracted services, not custom APIs (for QC [Quality Control], transcode, etc.). Initial implementations: DAM service and Client service. DAM service: create placeholder, search for assets, insert and copy assets, get asset data and metadata.
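The “abstracted service” idea in miniature: client code is written against an interface, and any vendor’s DAM can sit behind it. A Python sketch whose method names mirror the DAM operations listed above; it is a hypothetical illustration, not the FIMS specification’s actual service definitions:

```python
import uuid
from abc import ABC, abstractmethod

class DamService(ABC):
    """Hypothetical abstract DAM service: clients depend on this, not on a vendor API."""

    @abstractmethod
    def create_placeholder(self, title: str) -> str: ...

    @abstractmethod
    def insert_asset(self, asset_id: str, essence_uri: str, metadata: dict) -> None: ...

    @abstractmethod
    def copy_asset(self, asset_id: str) -> str: ...

    @abstractmethod
    def search(self, **criteria) -> list: ...

    @abstractmethod
    def get_asset(self, asset_id: str) -> dict: ...

class InMemoryDam(DamService):
    """Toy implementation; a vendor's product would sit behind the same interface."""

    def __init__(self):
        self._assets = {}

    def create_placeholder(self, title):
        asset_id = str(uuid.uuid4())
        self._assets[asset_id] = {"title": title, "essence_uri": None, "metadata": {}}
        return asset_id

    def insert_asset(self, asset_id, essence_uri, metadata):
        record = self._assets[asset_id]
        record["essence_uri"] = essence_uri
        record["metadata"].update(metadata)

    def copy_asset(self, asset_id):
        new_id = str(uuid.uuid4())
        self._assets[new_id] = self.get_asset(asset_id)  # get_asset returns a copy
        return new_id

    def search(self, **criteria):
        return [aid for aid, rec in self._assets.items()
                if all(rec["metadata"].get(k) == v for k, v in criteria.items())]

    def get_asset(self, asset_id):
        rec = self._assets[asset_id]
        return {"title": rec["title"], "essence_uri": rec["essence_uri"],
                "metadata": dict(rec["metadata"])}

def ingest(dam: DamService, title: str, essence_uri: str, **metadata) -> str:
    """Client-side workflow step that works with any DamService implementation."""
    asset_id = dam.create_placeholder(title)
    dam.insert_asset(asset_id, essence_uri, metadata)
    return asset_id
```

Swapping `InMemoryDam` for another implementation leaves `ingest` untouched, which is the vendor-lock-in argument made below in code form.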

Challenges: common service definitions not easy; proprietary interfaces are, well, proprietary; etc. FIMS created to address these challenges.

Why is FIMS important to users? (Hans Hoffmann via video playback): FIMS uses SOA to achieve cost effectiveness and higher efficiency through standardized software interfaces.

Work started in parallel by both EBU and AMWA, looking at existing SOAs (service-oriented architectures) in large businesses. Existing work didn’t fully address needs. AMWA and EBU combining forces made sense.

Requirements: For manufacturers: reduces costs, risks, lets ’em concentrate on innovation instead of interfaces. For users: faster integration, lower cost and risk, reduces vendor lock-in, easier cross-platform delivery, flexibility. For IT: modularity, loose coupling, interoperability, scalability, upgradeability. For media industry: must support high-bandwidth transfers, human workflow, time synchronization.

First phase: focus on framework, transcoding and ingest. Contributors: IBM, Sony, BBC, Cinegy, Avid, metaglue, cube-tec, etc. FIMS initial demo at NAB 2011.

Join FIMS: wiki.amwa.tv/ebu

Questions: rationalization of AS-02 and IMF? AS-02 is AMWA’s application specification for multiversioning, very close to IMF’s goals. May just take AS-02 and give to IMF, so that they will have a common methodology. It’s important not to reinvent the wheel (applause). We want to adopt standards we can utilize. Custom plumbing is just not sustainable in large facilities. It’s not even cost reduction, it’s just keeping costs under control! Can things be abstracted one level higher, to the workflow level? Talked about it, something they’re thinking about.


File-Based Mastering

– Moderator: Leon Silverman, Disney

– Brian Kenworthy, Deluxe Digital Studios

– Dave Register, Laser Pacific

– Timor Insepov, Sony DADC

– Greg Head, Walt Disney Studios

– Annie Chang, Walt Disney Studios

“Tapelessness is next to Godliness.” What happens when we master tapelessly? And why? Disney found that file-based saves money; easier to automate.

Annie: Disney moved feature mastering to file-based from HDCAM-SR and Digibeta. Had been making 24 different masters on HDCAM-SR. Narrowed the stakeholders’ needs down to 2 formats: DPX files with a DVS Clipster playlist, and ProRes QT (QuickTime). Then dropped SD masters after testing downconversion methods. Had to implement a naming convention and MD5 checksums, and deal with storage issues. Plan is to continue file-based mastering, though it’s not the easiest thing (slow movement of large files is still a problem; occasional file corruption issues).

Laser Pacific: biggest issue was getting past not having a master tape on the shelf; getting the confidence back when just using files. Create MD5s when you know the file is good in QC, then the MD5 travels with the file everywhere for verification. The MD5 goes a long way to restore confidence. Also, getting a Clipster made creating masters a lot easier; also had to use FCP in ways we hadn’t before. Mostly applying DI processes (color correction, dustbusting) to mastering. Reading 4K DPXes off a SAN is a lot slower than working from tape!
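A minimal Python sketch of the “checksum at QC, verify everywhere” practice, using the standard library’s `hashlib` and an md5sum-style sidecar file. The `.md5` sidecar naming is an assumption, not any facility’s convention. Note that MD5 catches accidental corruption, not deliberate tampering, and it only proves the file matches whatever was hashed:

```python
import hashlib
from pathlib import Path

CHUNK = 8 * 1024 * 1024  # read 8 MiB at a time so multi-GB files never sit in RAM

def md5_of(path: Path) -> str:
    h = hashlib.md5()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(CHUNK), b""):
            h.update(chunk)
    return h.hexdigest()

def write_sidecar(path: Path) -> Path:
    """Record the checksum at QC sign-off, md5sum-style: '<hex>  <filename>'."""
    sidecar = path.with_name(path.name + ".md5")
    sidecar.write_text(f"{md5_of(path)}  {path.name}\n")
    return sidecar

def verify(path: Path) -> bool:
    """Re-hash on arrival and compare with the sidecar that traveled with the file."""
    sidecar = path.with_name(path.name + ".md5")
    expected = sidecar.read_text().split()[0]
    return md5_of(path) == expected
```

The sidecar format matches what `md5sum -c` reads, so a receiving site without this script can still check the file.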

Greg: We didn’t anticipate the timing (of the shift to files) would sync up with all the distro chains changing. More than ever, we need to keep the speed up, with all the day-and-date releases. File delivery times, bandwidth; we need to get the files faster. Driving a Digibeta across town takes 20 minutes; file transfer and checksumming can take two days; six days if you need to retransmit. Why not just carry drives around? Unreliable drives, viruses; it doesn’t work too well.

Deluxe: Started out doing testing to ensure files looked as good as HDCAM-SR. Just handling the capacity and volume is challenging. Changed our infrastructure significantly, with more storage, higher bandwidth links. Depending on file size, say 300 files due in a certain time frame, it’s really tight… MD5 checksums are crucial.

Sony: looking at the tail end: digital distro. Need that Media Factory from the last panel, see what we can take from the physical supply chain process and bring into digital. Barrier to entry is high; we don’t want multiple DAMs, will take some time for all the industry to clean up libraries and metadata (since it’s not standardized right now). Need to have all assets, all services well defined and well described. Deliverable SKU proliferation as various vendors ask for differentiation.

Leon: automated QC? What has this new workflow done to QC?

Annie: What’s nice about tape is that if you need to make an insert fix, you fix it and the rest of the show is fine. With files, the same fix means re-exporting the entire file and QCing it again (less so with frame-based DPX, more so with QTs). Would be nice to have good automated tools. Disney is doing more QC on files due to the distrust factor: vendor QC, title manager QC, “anyone who wants to look at it can, too.”

Dave: with tape, just pick any machine in the machine room and go; with files, you need a high-bandwidth connection to the SAN but even then, if the SAN is loaded you’ll get stutters. So you have to move files to local storage for QC, and those reliable DPX players are expensive. The Clipster is often busy doing something else, too.

Greg: automated QC is the future, needs development, can complement traditional QC, not replace it. Files that pass checksum sometimes have dropped frames; need to refine the process a bit more.

Brian: agree, it’s the future. Depends on the toolsets. If you miss errors and then checksum the file, then depend on the checksum, that’s a problem.

Timor: automated QC supplements, doesn’t replace manual QC. Can be done remotely, not using edit bays (web-based QC). QC operator needs tool to jump to places in file with reported issues. Making sure infrastructure supports checksum checking.

Leon: transferring over networks; how are you using networks now, and what do we need?

Annie: we have fiber to vendors and a system called Transfer Manager (TM). Files from Laser Pacific come over fiber, and TM knows what to expect from the inventory system. Faster network, more speed, more speed! Files are checksummed before storage. ProRes is OK, but DPX is slow: 8-10 hours to move a DPX show, plus checksumming.
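Why DPX is slow to move: it’s uncompressed. A back-of-the-envelope Python sketch, assuming 10-bit RGB DPX packed at 4 bytes per pixel, a ~2 KB header per frame (header sizes vary by writer), a hypothetical 2-hour HD show at 24 fps, and an assumed 40% of gigabit line rate actually achieved:

```python
DPX_HEADER_BYTES = 2048  # assumed header size; actual image-data offsets vary

def dpx_frame_bytes(width: int, height: int) -> int:
    """10-bit RGB DPX with three 10-bit samples packed per 32-bit word (4 bytes/pixel)."""
    return width * height * 4 + DPX_HEADER_BYTES

def transfer_hours(total_bytes: int, link_gbps: float, efficiency: float = 1.0) -> float:
    """Wall-clock hours to move total_bytes, given the usable fraction of line rate."""
    return total_bytes * 8 / (link_gbps * 1e9 * efficiency) / 3600

# Hypothetical 2-hour HD feature at 24 fps.
frames = 2 * 60 * 60 * 24
show = frames * dpx_frame_bytes(1920, 1080)
print(f"{show / 1e12:.2f} TB")
print(f"{transfer_hours(show, 1.0, 0.4):.1f} h over 1 GbE at 40% efficiency")
```

That’s about 1.4 TB and around 8 hours of transfer before checksumming even starts, in line with the 8-10 hours quoted above; a 4K DPX show is four times the pixels.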

Dave: 4-10 hours is manageable; we prefer TM to any other method. Transfer time comparable to writing to FireWire drive, but a lot more reliable. Prefer LTOs to FireWire drives; 10-20% failure rate on drives (unreadable at far end for whatever reason; has to be redone).

Brian: TM is good. 10GigE network in-house.

Tibor: 10Gig lines cost a lot. Just because it’s file based, there’s the perception of “just send it.” Point-to-point is great, looking at delivering to the replicators, to Hulu; important to optimize bandwidth. Expectation is so high, “just push a button”, so need to provide better education, set expectations realistically.

Dave: 300 files in a week looks easy until you do the math.

Tibor: Sometimes it just makes sense to put a lot of drives in a car and drive it there fast.

Leon: maybe cost effective 10Gig pipes will be here soon, but it looks like we’re outgrowing ’em already… Talk about the importance of metadata.

Annie: HAM not DAM (Human Asset Management). We did our own naming convention. Just trying to put something in that works for you. Looking forward to the day where there’s some UUID in the file. For now we need the filenames. Our inventory was based on physical assets, working to convert; there’s so much more metadata on files.

Tibor: trying to future-proof things yet also trying to make things work now. Hoping for a standard. Consulting with stakeholders to get broad set of metadata, without it we can’t be cost-effective. Before we go into automated systems, we need to make sure we have the correct data. Timeline-based metadata (where do we swap out title cards, logos). More detailed program description than simple synopsis.

Challenges: We really need standardized codecs, standardized metadata, more interoperability, more bandwidth.

Questions: How are DPX frames moved? In whatever folder structure you want; the Clipster project ties ’em together. Why so many checksums? Is there really that much corruption? Errors can creep in at any step: a transfer, an edit. It’s a challenge to track all this. The good thing about DPX is that if a frame is bad, just replace that frame. With all that ProRes and QT in use, any chance that Disney can convince their largest shareholder to come to the party? No, that shareholder treats Disney like he treats everyone else!

Hollywood in the Cloud Panel

– Moderator: Steve Poehlein, HP

– Al Kovalick, Avid

– Dan Lin, Deluxe

– Howard Lukk, Disney

– Steve Mannel, Salesforce.com

– Kurt Kyle, SAP America

Steve: Consumers want to control how and where they consume content, and the industry is responding by migrating to multiplatform digital delivery. Media is moving to the network edge, more network-centric devices. Should the supporting services move to the network edge too? (Film formats: 150 formats in 120 years.) Cloud computing: on-demand, flexible… can be private, public, or a hybrid.

Howard: Stakes getting higher and higher as time goes on; more deliverables all the time. An explosion of media.

Should entertainment companies leverage the cloud? Howard: Sure. The internet, the cloud, not just external clouds but internal clouds.

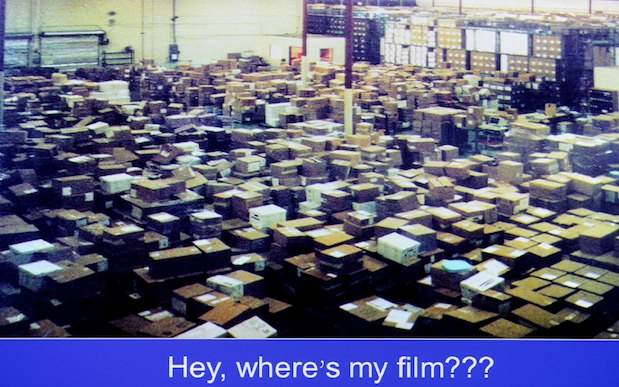

How should vendors respond? Dan: was at Ascent, now at Deluxe. We decided early on to store on tape, was economical (LTO-2, now LTO-4, soon LTO-5). But now we have huge catalog on tape, need to retrieve from tape, huge time penalty. Cloud may provide elasticity for storage without incurring huge CapEx (capital expense, as opposed to OpEx, operational expense).

How can studios leverage these tools? Steve: observation looking back, lots of work on the supply side, but then demand gets greater. Users and collaboration can also be leveraged. At IBM, evaluating telco work order management. Best solutions planned for failure and enabled collaboration. With Chatter [Twitter-like chat service; spots on Super Bowl, remember?], open collaboration but with security. Some solutions we’ve deployed in enterprise (stolen from consumer web!) solve problems through simple contextual collaboration.

Al: 20% of all software sales will be web apps by 2012. I don’t like installing/patching/maintaining software. As Salesforce’s Benioff says, “software is dead,” because it’s all moving to the cloud. You gotta love it: automatically upgradeable, pay for only what you use, no platform wars.

Kurt: SAP has all kinds of private / public clouds. Customers want to have in-house, OK, want to use on web, OK. Enabling collaboration in the cloud is wonderful. Mobility as a cloud, deployed to any device. I don’t see compute power or storage as limitations, the problem is who is going to manage all these formats, all these deliverables? How does the ordering system work? If I use a cloud service, say for a transcode, will that new asset enter the ordering system? Data management and supply-chain management may come to post. It’s a pressure on post to be more integrated in the business process. More about managing the business components of the process.

What about piracy, security, and other inhibitors to using the cloud? Network speed and latency are the real enemy. One of the myths is that you can do all this file movement through the cloud: rendering in the cloud, storing work in progress in the cloud. Lots of technical gaps, not enough critical mass; still a long way to go. How about a mezzanine in the cloud? DreamWorks renders in the cloud today. Most providers do not have tight coupling between computing and storage. Avid just announced some cloud capability: basically cloud-based editing, review, and approval. Turing test for the cloud: can I tell the difference between media under the desk and media in the cloud? Jog and shuttle; if it’s smooth, that’s the test. Avid is using Amazon cloud services. Strata of resolutions: for TV, the cloud for graphics and editing with proxies; not everything is 4K DIs. The big benefit of the cloud is collaboration, review, and approval. Example: a Google Docs spreadsheet for organizing a photo shoot, where you see cells fill in in real time as people sign up for their tasks.

We look at a lot of what’s being labeled cloud, and it’s just virtualization.

As distro moves into cloud-like models, as with UltraViolet, rights management and rights systems are very interesting. Everybody treats their rights significantly differently, all have a different take on it. Sounds great, “we’ll create the ultimate rights service”, but no one will use it. If you’re a large customer, you can get what you want in security; small guys, not so much. Cloud data centers may be the most secure data centers in the world; that’s the trend, as SLAs (service level agreements) require security.

Where do clouds provide the most impact? Collaboration is huge. Rendering and storage are more difficult. Distro may come into play. B2B different from B2C. DRM is a tricky thing. Look what happened with iTunes.

Yes, collaboration is big. More time spent on Facebook, wall postings outpace email, most traffic from mobile devices. Fundamental shift in behavior, and we’ll all be hiring these people (the Facebook users). To the extent we make our systems more friendly, more social, more mobile, it’ll make everything easier.

It’s nice to say that using the cloud will save money. But collaboration is much more important. If I decide to send all my cam original to the post house; to keep it all in-house and enable remote editors… The idea we can take mobile devices and do work on ’em, on tablets. The cloud becomes the enabler between companies and processes. Enabling a more efficient process, and igniting creative processes. Take individual problems to the cloud now, more comprehensive movement later. In private clouds, more flexibility, more momentum.

Al: cloud impacts my engineering (at Avid). We’re not building stuff for the cloud, but we’re not building things that can’t be put in the cloud. Thin interfaces, portability. As a vendor, I build for just one target, not Linux + Mac + Windows… Sweet spots are low-bandwidth apps, SaaS apps; we won’t have DI in the cloud any time soon, but it’ll trickle up eventually.

Is there a sense that some sort of central repository makes sense? Would it be considered a cloud? Sounds like a private cloud. Not one model fits everything. Such a proliferation of storage models, some centralized general model probably won’t work. “Should we put everything into the cloud? If we took all the data in the USA, it would take 18 months using every ounce of bandwidth we have.”

Questions: who is the customer for buying these services? Production-based accounting: studios rarely buy gear and services, producers do. Got to understand how the production business works (and is paid for) today. Business problem and the interfaces of how we’re moving stuff around (as material comes in for a show, then flushes out when the show is done). Some studios are paying monthly services for some productions. Show in Jordan right now, no high-speed bandwidth: the connection is a G-Tech drive on the producer’s desk, so the cloud doesn’t work for this one.

Is there an effort under way to get certified security so that studios feel comfortable putting assets in cloud? Developing APIs for security. Achilles heel for cloud work right now: all this prep to be able to say “I need 1600 CPUs right now”, can it reliably turn around big renders, that sort of thing.

Where are the clouds coming from? Will the provider be able to guarantee me all the power I need when it’s crunch time? Amazon has a team ensuring that the capacity is there.

The New Role of LTO-5 Technologies in Media Workflows

– Moderator: George Anderson, Media Technology Market Partners

– LTO-5 Technology – Ed Childers, Ultrium LTO Project

– Linear Tape File System (LTFS) – Michael Richmond, IBM Almaden

– LTFS vs. tar – Tom Goldberg, Cache-A

– LTFS Demystified – Tridib Chakravarty, StorageDNA

LTO-5 Technology: from an IT perspective, traditional storage tech doesn’t map well: deduplication and compression aren’t useful for the media industries (media files don’t dedupe much, and media files are already heavily compressed). LTO-5 has partitioning, which enables LTFS. Tape capacity / speed growth will be sustained for at least 10 years. Storage systems are always defined by the tech. LTO-5 / LTFS allow hybrid storage systems: SSD emulating disk or caching for disk, disk caching for tape, etc. Cost per gigabyte and power are important. Proprietary systems and non-self-describing file systems are inhibitors of tape use; you need to move stuff around, and LTO-5 and LTFS are designed to solve these.
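A minimal sketch of why dedupe and compression buy little for media: random bytes stand in for already-compressed essence, and one repeated block stands in for typical IT data. zlib and fixed-size SHA-256 chunking are my stand-ins, not what any particular storage product uses.

```python
import hashlib
import os
import zlib

def compression_ratio(data: bytes) -> float:
    """Compressed size / original size (lower is better)."""
    return len(zlib.compress(data, 9)) / len(data)

def dedupe_ratio(data: bytes, chunk: int = 4096) -> float:
    """Unique fixed-size chunks / total chunks (lower means more duplication)."""
    digests = [hashlib.sha256(data[i:i + chunk]).digest()
               for i in range(0, len(data), chunk)]
    return len(set(digests)) / len(digests)

if __name__ == "__main__":
    # Stand-in for already-compressed media: 1 MiB of high-entropy bytes.
    media_like = os.urandom(1 << 20)
    # Stand-in for office/VM data: the same 4 KiB block repeated 256 times.
    block = (b"quarterly report " * 241)[:4096]
    office_like = block * 256
    for label, data in (("media-like", media_like), ("office-like", office_like)):
        print(f"{label}: compression {compression_ratio(data):.3f}, "
              f"dedupe {dedupe_ratio(data):.4f}")
```

The media-like data comes out at roughly 1.0 on both measures (no savings), while the repetitive data collapses on both.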

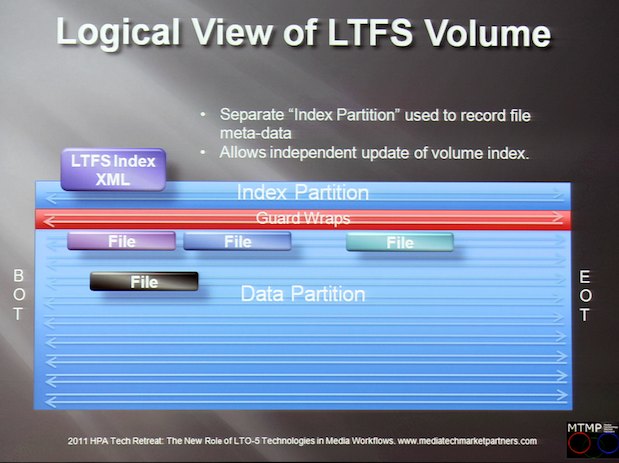

LTO-5: 5th-gen LTO, 5 gens to go on the roadmap. Self-describing data container. Partitioning is the key. 2U rack with 24 carts = 36 TB (24 × 1.5 TB). LTFS fits atop LTO; LTO itself doesn’t define a file format on tape, so many use tar, or their own proprietary format. LTFS is a standardized format, with drivers for Linux / Mac / Windows (POSIX interface); seeks, partial restores, etc. Drag and drop files; it’s a mountable filesystem like any other. Think 1.5 TB thumb drive. It needed to be an open standard, not IBM proprietary. All vendors are working on it; a joint code thread is something the industry can trust as a standard.
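Because LTFS presents a POSIX interface, archiving can be an ordinary file copy. A minimal sketch: `archive_to_mount` and the `/mnt/ltfs` mount point are hypothetical, and a temp directory stands in for the mounted tape so the example runs without a drive.

```python
import shutil
import tempfile
from pathlib import Path

def archive_to_mount(src: Path, mount_point: Path) -> Path:
    """Copy a file onto a mounted filesystem using ordinary POSIX calls."""
    dest = mount_point / src.name
    shutil.copy2(src, dest)  # same call you'd use for any disk (keeps mtime)
    return dest

if __name__ == "__main__":
    # Stand-in for a real LTFS mount such as /mnt/ltfs (hypothetical path).
    with tempfile.TemporaryDirectory() as mount, tempfile.TemporaryDirectory() as work:
        clip = Path(work) / "A001_C002.mov"
        clip.write_bytes(b"\x00" * 1024)
        archive_to_mount(clip, Path(mount))
        print(sorted(p.name for p in Path(mount).iterdir()))
```

The point is what's absent: no tape-specific API, no proprietary container; the application only sees a directory.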

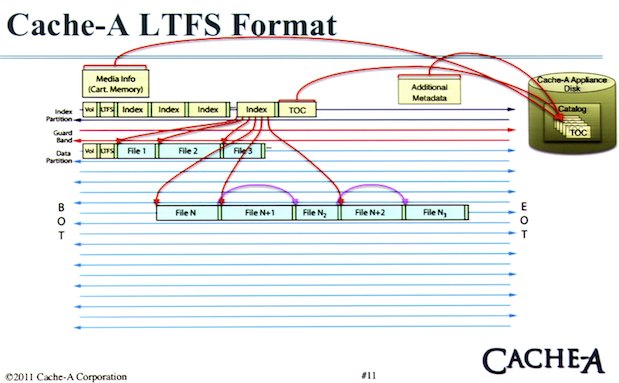

LTFS: XML structures are open and published. LTFS consortium: IBM, 1Beyond, Quantum, StorageDNA, ThoughtEquity, HP, FOR-A. Most files written to data partition, but you can define things so that certain small files can be stored in the index partition for speed. Library mode: mount a library as opposed to a tape; tapes appear as subdirectories. Index is cached so you don’t need to load a cart to see its index. Includes single-drive operation mode.
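The index-partition option amounts to a placement policy. The exact rule syntax LTFS uses isn't in these notes, so this is a hypothetical sketch: the 1 MiB threshold, the patterns, and `choose_partition` are all illustrative assumptions.

```python
from fnmatch import fnmatch

# Hypothetical placement policy: small files matching a pattern go to the
# index partition so they can be read without seeking across the data partition.
INDEX_MAX_BYTES = 1 * 1024 * 1024             # assumed 1 MiB threshold
INDEX_PATTERNS = ("*.xml", "*.jpg", "*.txt")  # assumed patterns (metadata, thumbnails)

def choose_partition(name: str, size: int) -> str:
    """Return 'index' or 'data' for a file about to be written."""
    small = size <= INDEX_MAX_BYTES
    matches = any(fnmatch(name.lower(), p) for p in INDEX_PATTERNS)
    return "index" if small and matches else "data"

if __name__ == "__main__":
    for name, size in (("thumb.JPG", 50_000), ("reel1.dpx", 50_000), ("poster.jpg", 5_000_000)):
        print(name, choose_partition(name, size))
```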

Single-drive mode available today, library mode beta testing Q1 2011, general availability in Q2.

LTFS vs. tar: tape issues: portability, ease of use, self-describing, linear nature.

Portability: tar is portable, nothing else has been. LTFS is portable; HP and 1Beyond (IBM drive) interoperated in demo room here at HPA.

Ease of use: tar isn’t easy! LTFS is GUI driven, looks like a file system.

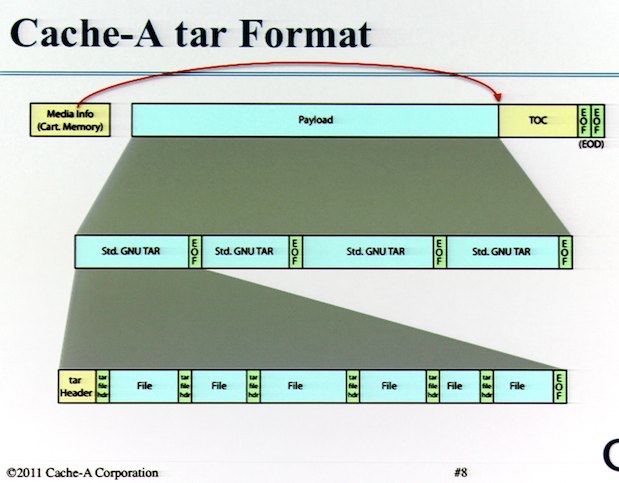

Self-describing tape file systems: SuperMac DataStream in 1987 (Mac), 1992’s QIC with QFA (DOS); 1996 DatMan (Windows); 2004-2007 Quantum A-series S-DLT. In 2008, Cache-A used the tar format with added intelligence, but unless you have a Cache-A drive you need to untar to see the contents.

tar history: 1979 Unix program (Tape ARchive, introduced in Seventh Edition Unix), POSIX standardized in 1988 and 2001.

tar on tape: sequential blocks (tarballs) with one or more files.
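Strictly speaking, tar can pull out one member, but only by reading headers in order until it finds it, because there's no central index. A minimal sketch using Python's `tarfile` in stream mode (`"r|"`, which forbids seeking, like a tape); `build_tar` and `restore_one` are illustrative names.

```python
import io
import tarfile

def build_tar(files: dict[str, bytes]) -> bytes:
    """Write an uncompressed tar archive into memory."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name, payload in files.items():
            info = tarfile.TarInfo(name)
            info.size = len(payload)
            tar.addfile(info, io.BytesIO(payload))
    return buf.getvalue()

def restore_one(archive: bytes, wanted: str) -> tuple[bytes, int]:
    """Stream the archive front to back until `wanted` turns up.
    Returns the payload and the number of headers read to get there."""
    headers_read = 0
    with tarfile.open(fileobj=io.BytesIO(archive), mode="r|") as tar:
        for member in tar:
            headers_read += 1
            if member.name == wanted:
                return tar.extractfile(member).read(), headers_read
    raise FileNotFoundError(wanted)

if __name__ == "__main__":
    archive = build_tar({"reel1.mov": b"1" * 10, "reel2.mov": b"2" * 10, "reel3.mov": b"3" * 10})
    data, n = restore_one(archive, "reel3.mov")
    print(data, "after reading", n, "headers")
```

On a real tape, every header skipped is tape that has to pass under the head.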

Cache-A keeps a master index, stored in the MIC (memory in cassette, a flash chip) and at the end of the current data, and also kept in a SQL database in the Cache-A appliance. LTFS maintains its indexes in the index partition on tape.
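An appliance-side catalog answers "which tape holds this?" without loading a cart (the same idea as LTFS library mode caching indexes). I don't know Cache-A's actual schema; this is a hypothetical sketch using SQLite, with made-up barcodes and a `files` table of my own design.

```python
import sqlite3

def make_catalog() -> sqlite3.Connection:
    """In-memory catalog; a real appliance would persist this to disk."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE files ("
        " barcode TEXT NOT NULL,"   # which cartridge
        " path TEXT NOT NULL,"      # file path on that cartridge
        " size INTEGER NOT NULL,"   # bytes
        " PRIMARY KEY (barcode, path))"
    )
    return db

def record(db: sqlite3.Connection, barcode: str, entries: list[tuple[str, int]]) -> None:
    """Record (path, size) pairs written to a cartridge."""
    with db:  # commits on success, rolls back on error
        db.executemany(
            "INSERT OR REPLACE INTO files (barcode, path, size) VALUES (?, ?, ?)",
            [(barcode, path, size) for path, size in entries],
        )

def find(db: sqlite3.Connection, pattern: str) -> list[tuple[str, str]]:
    """Look up files by glob pattern without touching any tape."""
    return db.execute(
        "SELECT barcode, path FROM files WHERE path GLOB ? ORDER BY barcode, path",
        (pattern,),
    ).fetchall()

if __name__ == "__main__":
    db = make_catalog()
    record(db, "TAPE0001", [("show1/reel1.mxf", 900), ("show1/reel2.mxf", 850)])
    record(db, "TAPE0002", [("show2/reel1.mxf", 700)])
    print(find(db, "show1/*"))
```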

LTFS issues: no tape spanning, not all filenames work (certain characters can cause cross-platform problems), LTO-5 only; long delays updating index when you eject the tape; some ops thrash the tape. Looks like a random-access filesystem, but it’s still sequential.

Cache-A additions: adds a TOC (table of contents) with URL encoding for filenames.
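URL (percent) encoding sidesteps cross-platform filename trouble by turning every risky character into plain ASCII. Cache-A's exact rules aren't in these notes; this sketch uses Python's standard `urllib.parse.quote` as a stand-in, and `toc_name` / `original_name` are illustrative names.

```python
from urllib.parse import quote, unquote

def toc_name(name: str) -> str:
    """Percent-encode everything outside the unreserved URL set (A-Z a-z 0-9 _ . - ~).
    safe="" means '/' gets encoded too, so a name can't be mistaken for a path."""
    return quote(name, safe="")

def original_name(encoded: str) -> str:
    """Reverse the encoding (UTF-8) to recover the original filename."""
    return unquote(encoded)

if __name__ == "__main__":
    for name in ("Take 1: A/B?.mov", "场景.mov"):
        enc = toc_name(name)
        print(enc, "->", original_name(enc))
```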

LTFS is self-describing and easy to use; tar is not. LTFS can do single file restores; tar cannot.

LTFS Demystified: StorageDNA builds local/remote media synchronization apps, e.g., SAN-to-SAN. LTO-5 with LTFS makes tape play well in this arena.

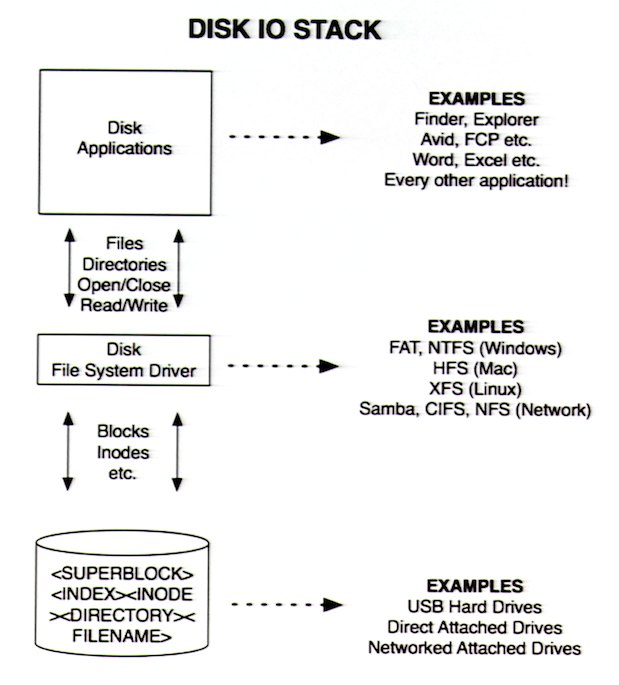

Disk IO stack: the file system buffers apps from dealing with the hardware, and disks are portable between systems:

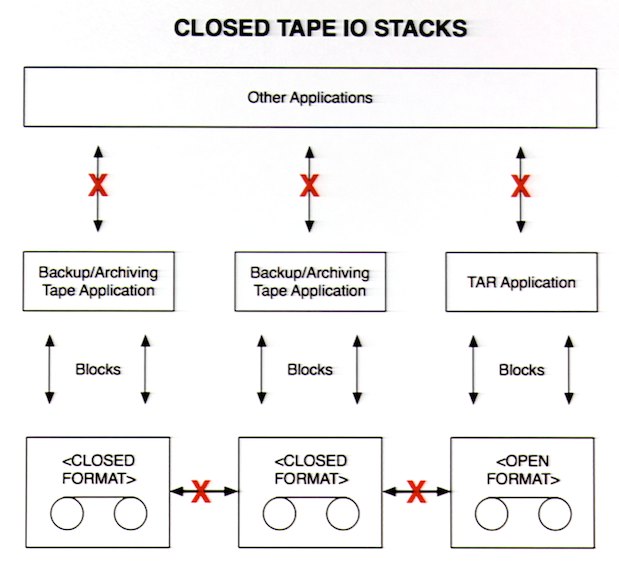

Tape IO stack is like the bronze age by comparison:

And none of these systems is easy to use, or portable. LTFS essentially replicates the disk IO stack, with its portability and openness.

However, it’s still sequential: if you open an LTFS tape with the Finder, the Finder treats it like a disk, creating all sorts of desktop files, reading metadata, etc. Vendors need to be made aware of tape to avoid thrashing: Microsoft, Apple, Avid, Autodesk, Adobe, etc.
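One simple way an application can be tape-aware: when asked for several files, read them in on-tape order instead of request order. A hypothetical sketch; the start-block numbers are made up, how you'd obtain them depends on the software stack, and linear head travel is only a crude proxy for real locate time.

```python
def seek_distance(order: list[str], start_block: dict[str, int], head: int = 0) -> int:
    """Total head travel (in blocks) to visit files in `order`.
    Simplification: after each read the head is assumed to sit at that
    file's start block, and travel is treated as linear."""
    total = 0
    for name in order:
        total += abs(start_block[name] - head)
        head = start_block[name]
    return total

def tape_order(names: list[str], start_block: dict[str, int]) -> list[str]:
    """Reorder requests to follow the tape: ascending start block."""
    return sorted(names, key=lambda n: start_block[n])

if __name__ == "__main__":
    # Hypothetical start positions for three clips.
    blocks = {"a.mov": 100, "b.mov": 5000, "c.mov": 9000}
    requested = ["c.mov", "a.mov", "b.mov"]  # e.g., the order a file browser asks
    print(seek_distance(requested, blocks))                      # 22800
    print(seek_distance(tape_order(requested, blocks), blocks))  # 9000
```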

Questions: “Is this a step forwards or back? Reminds me of using cassettes with my old Radio Shack computer.” What about the Quantum MXF format? LTFS does add great value; if you’re writing a backup application, for example, you don’t need to write code for each new drive type, and you can restore using the Finder (or Windows Explorer). You can use rsync on LTFS tapes. What happens if the system crashes while writing a tape? How does the index get updated in that case? When you mount LTFS, you can specify how frequently to write the index: on every file close, for example. You may need to run an LTFS recovery tool to resync/reposition a tape after a power failure, but the last fully written file will be there. What about future-proofing? One reason we have so many tar variants is that limits were found… what about Chinese characters? Any Unicode character can be used in an LTFS filename except three (forward slash, null, one other), and ten more are recommended against due to cross-platform issues, e.g., you can create files on Linux whose names Windows can’t read (backslash, colon, etc.; any character that’s used as a path or drive separator on one OS or another).
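A pre-flight filename check catches these problems before a file is written to tape. A minimal sketch: the forbidden set holds only the two characters named above (the third isn't recorded in these notes), and the "risky" set is an assumption, the Windows-reserved characters rather than the talk's exact list of ten.

```python
# Per the talk: '/' and NUL can't appear in LTFS names. A third character is
# also disallowed, but it isn't recorded in these notes, so it's omitted here.
FORBIDDEN = {"/", "\0"}
# Assumption: the Windows-reserved set stands in for the discouraged list.
RISKY = set('\\:*?"<>|')

def check_name(name: str) -> list[str]:
    """Return a list of problems; an empty list means the name looks safe.
    Non-ASCII (e.g., Chinese) characters are fine."""
    problems = []
    bad = sorted(FORBIDDEN & set(name))
    if bad:
        problems.append(f"not allowed: {bad!r}")
    risky = sorted(RISKY & set(name))
    if risky:
        problems.append(f"cross-platform risk: {risky!r}")
    return problems

if __name__ == "__main__":
    for name in ("场景 1.mov", "a:b?.mov", "dir/file.mov"):
        print(repr(name), check_name(name) or "ok")
```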

Avid’s Al Kovalick: looks great, we love it, we support it, you don’t need to convince us. We just haven’t released any products yet that use it.

When you delete a file on LTFS, it’s marked as gone, but the space isn’t reclaimed; it’s sequential, remember?
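A toy model of why deletes don't free space on a sequential medium: data is only ever appended, and a delete just edits the index. This is an illustration, not how LTFS is implemented internally; as I understand it, getting the space back means copying the live files off and reformatting the tape.

```python
class AppendOnlyTape:
    """Toy append-only medium: writes go at the end; deletes edit the index only."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.end = 0      # next write position (end of data)
        self.index = {}   # name -> (offset, size)

    def write(self, name: str, size: int) -> None:
        if self.end + size > self.capacity:
            raise OSError("tape full")
        self.index[name] = (self.end, size)
        self.end += size

    def delete(self, name: str) -> None:
        del self.index[name]  # the bytes before self.end are NOT reclaimed

    def live_bytes(self) -> int:
        return sum(size for _, size in self.index.values())

    def free_bytes(self) -> int:
        return self.capacity - self.end

if __name__ == "__main__":
    tape = AppendOnlyTape(100)
    tape.write("a", 40)
    tape.write("b", 40)
    tape.delete("a")
    print("live:", tape.live_bytes(), "free:", tape.free_bytes())
```

After deleting "a", only 40 bytes are live, yet just 20 are writable.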

Partial restores should be possible; have demoed scrubbing in a video clip from tape, so you can seek to a byte within a file.
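From the application's side, a partial restore is just a seek and a bounded read; with a filesystem underneath, the drive can locate instead of the app reading everything before the offset. A minimal sketch: the fixed-size-frame layout and `frame_offset` are hypothetical (real clip formats need their own index to map frames to bytes).

```python
import tempfile
from pathlib import Path

def read_range(path: str, offset: int, length: int) -> bytes:
    """Read `length` bytes starting at `offset`, skipping what precedes it."""
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)

def frame_offset(frame: int, frame_bytes: int, header_bytes: int = 0) -> int:
    """Byte offset of a frame in a file of fixed-size frames (hypothetical layout)."""
    return header_bytes + frame * frame_bytes

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        clip = Path(d) / "clip.raw"
        # Ten hypothetical 4-byte "frames": frame n is four copies of byte n.
        clip.write_bytes(b"".join(bytes([n]) * 4 for n in range(10)))
        print(read_range(str(clip), frame_offset(7, 4), 4))  # b'\x07\x07\x07\x07'
```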

What about spanning tapes, for backup? If you have more than 1.5 TB as multiple files, split your directory across multiple tapes. Spanning single files across tapes is a problem.
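Splitting a directory across tapes is a bin-packing problem. A sketch using first-fit decreasing (my choice of heuristic, not something the panel prescribed), with 1.5 TB as the LTO-5 native capacity; in practice you'd leave headroom for the index. Any single file larger than one tape is rejected, since LTFS can't span it.

```python
TAPE_BYTES = 1_500 * 10**9  # LTO-5 native capacity, 1.5 TB (decimal)

def split_across_tapes(files: dict[str, int], capacity: int = TAPE_BYTES) -> list[list[str]]:
    """Assign files (name -> size in bytes) to tapes, first-fit decreasing."""
    tapes: list[list[str]] = []
    free: list[int] = []
    for name, size in sorted(files.items(), key=lambda kv: kv[1], reverse=True):
        if size > capacity:
            raise ValueError(f"{name} is larger than one tape; it can't be split by directory")
        for i, room in enumerate(free):
            if size <= room:        # first tape with enough room wins
                tapes[i].append(name)
                free[i] -= size
                break
        else:                       # no existing tape fits: start a new one
            tapes.append([name])
            free.append(capacity - size)
    return tapes

if __name__ == "__main__":
    TB = 10**12
    show = {"reel1": int(0.9 * TB), "reel2": int(0.8 * TB), "reel3": int(0.6 * TB), "audio": int(0.5 * TB)}
    for n, tape in enumerate(split_across_tapes(show), 1):
        print(f"tape {n}: {tape}")
```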

Disney Film Library Restoration and Remastering – Sara Duran-Singer and Kevin Schaeffer, Disney

To date, have done over 200 titles, 60 in 2010.

Third full restoration of Fantasia. But which one? The 1940, ’41, or ’46 version? Originally released in 1940, 125 minutes in Fantasound. In 1941, removed many scenes (Deems Taylor narration, orchestra, Bach Toccata). 1946 (the popular version) restores some narration, and that’s the version the cut negative was stored in. Decided to restore to 1940 version, Walt’s vision.

Original Deems Taylor dialog tracks have been lost. Issues finding other elements:

(Similar issues as today: what do we keep? What do we toss? What has value? Not enough to keep finished product; elements needed for repurposing. Will the greenscreen shots from “Tron: Legacy” have value in the future? Dunno, but don’t want to be the one to throw it away!) In this case, re-recorded and replaced all the narration with Corey Burton, so quality will remain consistent throughout. Fortunately had all the visual elements on three-strip Technicolor nitrate negs! First time Disney restoration had all the original negs. Scanned, then did dirt and scratch removal, degraining, and paint fixes.

Audio restoration: 35mm masters, digital cleanup, noise reduction, equalization, and remix.

Also: 4K scan and cleanup on “The Love Bug” and all the following Herbie films. Blackbeard’s Ghost, Redford’s Quiz Show.

1st CinemaScope Disney film “20,000 Leagues Under the Sea”. Elements almost 60 years old; not sure what condition they’ll be in. Six months researching just to get started, reading production files and transfer logs, looking for a good dye-transfer print for reference. Original master had faded too much to restore color. Looked for camera negs, transferred those. 27 reels of neg at 4K on NorthLight, plus some YCM (yellow/cyan/magenta) protection master, to 2.55:1. Also found old stills, D1 master for a home-vid release. The squid sequence: shot at night in a storm to hide the wires and mechanisms, but the 4K scans made ’em visible. Had to paint ’em out. “Anamorphic mumps” in the lens due to inconsistent squeeze, a slightly overstretched image for stuff close to the camera. A 0.5% digital squeeze to the entire image fixed it; as the show was shot full-aperture 2.66:1, there was room to bring the edges in a bit.
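The "room to bring the edges in" is simple arithmetic: a horizontal squeeze with height unchanged scales the aspect ratio by the same factor, and the result must still cover the 2.55:1 extraction. A sketch that treats the fix as one uniform horizontal scale; the actual correction may have been more nuanced.

```python
def squeezed_aspect(aspect: float, squeeze_pct: float) -> float:
    """Aspect ratio after a uniform horizontal squeeze (height unchanged)."""
    return aspect * (1 - squeeze_pct / 100)

scan_aspect = 2.66    # full-aperture shooting ratio, per the talk
target_aspect = 2.55  # restoration's target ratio, per the talk

after = squeezed_aspect(scan_aspect, 0.5)
margin = after / target_aspect - 1  # spare width beyond the 2.55:1 extraction

print(f"after squeeze: {after:.4f}:1, spare width: {margin:.1%}")
```

Since the squeezed frame is still wider than 2.55:1, the extraction never runs out of picture.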

TRON: shot in B&W and then blown up into 16×20 transparencies for retouching. They shot 5-perf 65mm and 8-perf VistaVision and… we’re out of time!

[No pix taken due to copyright issues; don’t want an angry mouse coming after me. The restorations were very clean looking. -AJW]

What Just Happened? – A Review of the Day by Jerry Pierce & Leon Silverman

DSLRs are cool, but not easy to use. “DSLRs in video are like taking my Prius and making it a dump truck.” (applause).

Demo room favorites?

Sarnoff sequential disparity on 3D. 3D is captured time-synchronized, but displayed one eye before the other with shutter glasses; caused a “rotational” effect; needs more research.

“As an old VTR guy I thought the solid-state SR was cool.”

“I never thought I’d see a CRT look that bad” (compared to the OLED). Both the Dolby and OLED monitors looked great.

The future of the tapeless workflow apparently has a lot of tape.

Lots of HPA attendees have cut the cord.

When will packaged media not be in your local store? A few say in 5 years, half in 10 years. Very few say “never.”

What should we be covering next year? More cameras, UltraViolet, optics, OpenEXR and ACES. What about the Super session? “When will 1.33 die?” (applause). More round-table time, please.

IBM’s Watson. Virtual production. Jim Burger needs to teach his PowerPoint techniques! NBCu/Comcast: one year later!

Day 4’s writeup will be posted Sunday night.

Disclaimer: I’m attending the HPA Tech Retreat on a press pass, which saves me the registration fee. I’m paying for my own transport, meals, and hotel. No material connection exists between myself and the Hollywood Post Alliance; aside from the press pass, HPA has not influenced me with any compensation to encourage favorable coverage.

The HPA logo and motto were borrowed from the HPA website. The HPA is not responsible for my graphical additions.

All off-screen graphics and photos are copyrighted by their owners, and used by permission of the HPA.
