Band Pro Film & Digital is celebrating their 25th anniversary this year, and they put on three days of free events in Los Angeles to mark the occasion: F35 training with Jeff Cree, a 3D Symposium, and an Open House with gear, food, and conversation—and some new PL-mount primes, too.

I received an invitation saying, “Band Pro is happy to offer verified press who are flying in for the event two free nights of lodging and complementary breakfast at the event hotel.” I inquired and found that I counted as “verified press”, and that was enough to push me over the edge; I went down to LA for four nights total.

Lest you think that the following coverage was unduly influenced by this generous offer, I should say that I paid my own way on Southwest Airlines and picked up two nights of hotel lodging myself, and that the hotel denied that breakfast was complimentary. Anyone familiar with journalists will instantly recognize that the denial of free food is the second most deadly insult possible to a member of the press; only denial of free drinks is worse [grin].

December 15: F35 Digital Imaging Technician Training

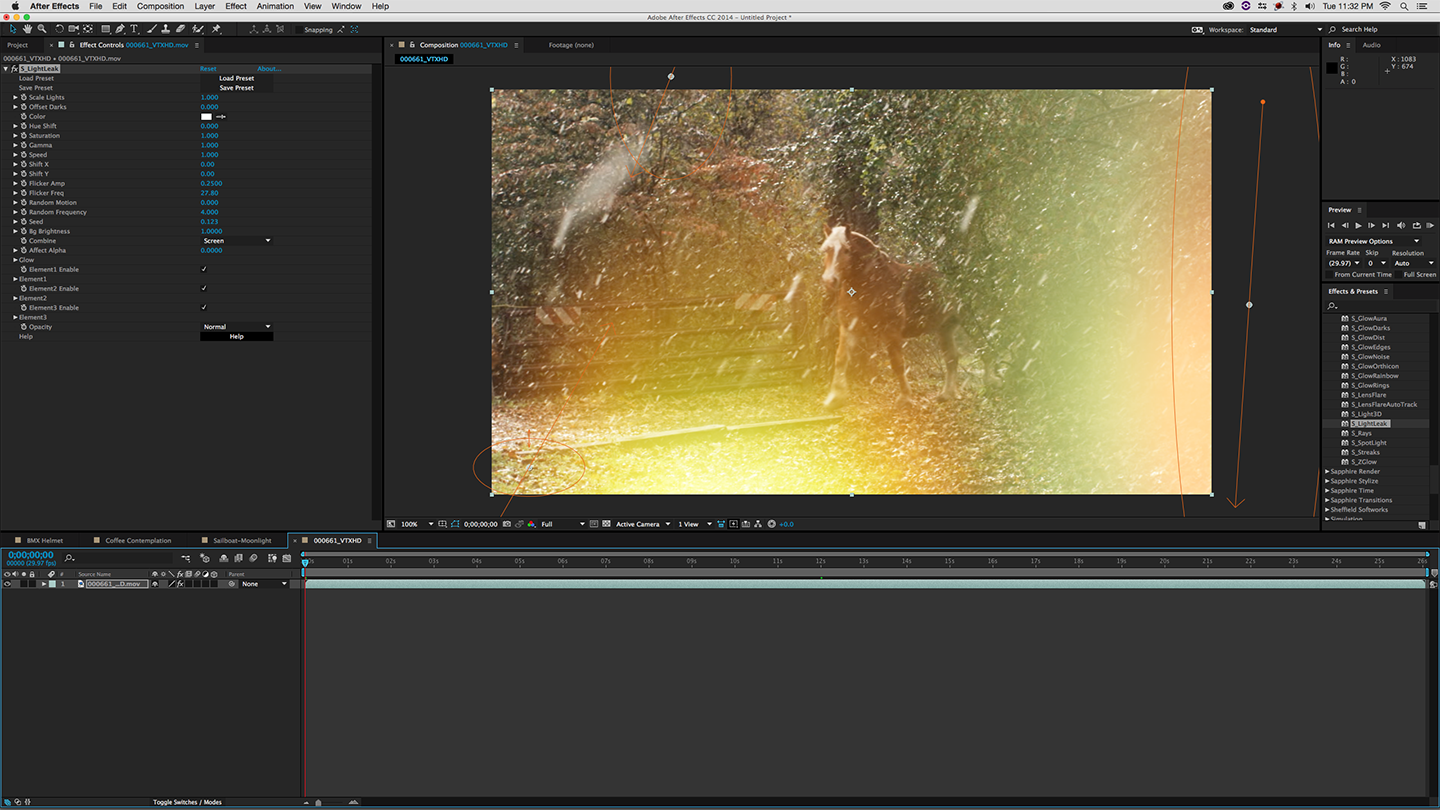

Band Pro’s first event was a day of Sony F35 DIT (Digital Imaging Technician) training at the Harmony Gold Theater in Hollywood.

F35 DIT training at the Harmony Gold Theater in Hollywood.

The F35 is Sony’s top-end digital cinema camera; it uses a single 35mm-sized sensor, PL-mount lenses, and HDCAM-SR recording. The quarter-million-dollar package shoots 1080i and 1080p at variable frame rates up to 50fps, and it’s one of the few digital cameras that offers full ramping: speed changes during a shot.

The F35 with Fujinon 18-85mm T2.0 zoom and SRW-1 recorder.

The session was given by Band Pro’s Jeff Cree. Jeff was with Sony for many years (I first met him about ten years ago, when he gave a colleague some training on the HDW-700, before Sony had any progressive-scan cameras) and he’s possibly the foremost expert in the country when it comes to Sony’s high-end CineAlta equipment. He certainly knows the F35; he filled every minute of the day-long session (about six hours, plus lunch and a couple of breaks) with useful information, and was never at a loss when it came to answering audience questions.

The indefatigable Jeff Cree spoke for six solid hours.

I can’t possibly relay everything Jeff talked about (where’s Mike Curtis and his fast-flying typing fingers when you need him?), but I can hit some of the highlights.

Jeff started with some brief history of the F35 and its 2/3″ 3-CCD brother, the F23. Both cameras share the same bodywork and internal processing, differing only in their sensors, front plates, and lens mounts. The processor board is shared between the cameras, and has two input connectors on it: one for the F23 sensor block, and one for the F35. The 36-bit floating-point processor has “about three times the bandwidth needed” for HD video, and that power, combined with a 14-bit video A/D, lets these cameras capture and cleanly process about 14 stops of dynamic range without the need for any “pre-knee” analog precompression to keep highlights under control. These cameras have highlight headroom extending to 800%, compared to the 600% max headroom of most other cameras, including the F900 series.
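
For a feel of what those headroom percentages mean in stops, here is a rough back-of-the-envelope sketch. It assumes the percentages are linear signal levels relative to nominal 100% white, which is my reading of the figures rather than anything Jeff spelled out; the function name is made up for illustration.

```python
import math

def stops_above_white(headroom_percent):
    """Stops of highlight headroom above nominal 100% white.

    Assumption: the percentage is a linear level relative to 100% white,
    so every doubling of the percentage is one more stop.
    """
    return math.log2(headroom_percent / 100.0)

f35_stops = stops_above_white(800)      # the F23/F35 figure quoted above
typical_stops = stops_above_white(600)  # the figure quoted for most other cameras

print(f"800%: {f35_stops:.2f} stops over white")
print(f"600%: {typical_stops:.2f} stops over white")
print(f"difference: {f35_stops - typical_stops:.2f} stops")
```

On that reading, the extra headroom buys a bit under half a stop of additional highlight latitude.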

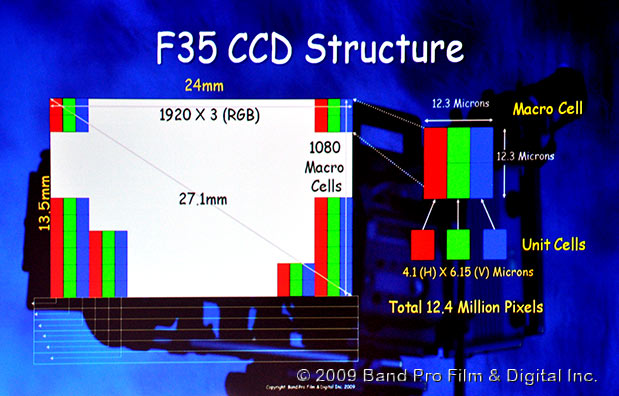

The F35’s CCD sensor is about the size of a 3-perf 35mm frame and it has a 16×9 aspect ratio. CCDs may be more costly and more power-hungry than CMOS, but they still offer better dynamic range and lower noise. This CCD, basically the same one used in the Panavision Genesis (though Jeff took pains to emphasize that only the sensor is shared between the two cameras; everything else is different), has 5760 x 2160 active photosites and vertical RGB striping—not a Bayer pattern. In practice, a “pixel” of HD video consists of a “macro cell” of three photosite columns (R, G, and B) and two rows.

How the F35’s CCD sensor is set up: each “pixel” is a 2×3 macro cell.
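
The macro-cell arithmetic is easy to check for yourself. This little sketch uses only the photosite counts and cell shape quoted above; the function and constant names are mine.

```python
# Figures quoted in the text above.
PHOTOSITES_H = 5760  # active photosite columns
PHOTOSITES_V = 2160  # active photosite rows
CELL_COLS = 3        # one R, one G, and one B column per macro cell
CELL_ROWS = 2        # two rows per macro cell

def hd_raster(photosites_h, photosites_v, cell_cols, cell_rows):
    """Return (width, height) of the image counted in macro cells."""
    return photosites_h // cell_cols, photosites_v // cell_rows

width, height = hd_raster(PHOTOSITES_H, PHOTOSITES_V, CELL_COLS, CELL_ROWS)
photosites_per_pixel = CELL_COLS * CELL_ROWS

print(f"{width} x {height} pixels, {photosites_per_pixel} photosites per pixel")
```

In other words, the sensor divides evenly into a 1920 x 1080 raster, with six photosites behind every HD pixel.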

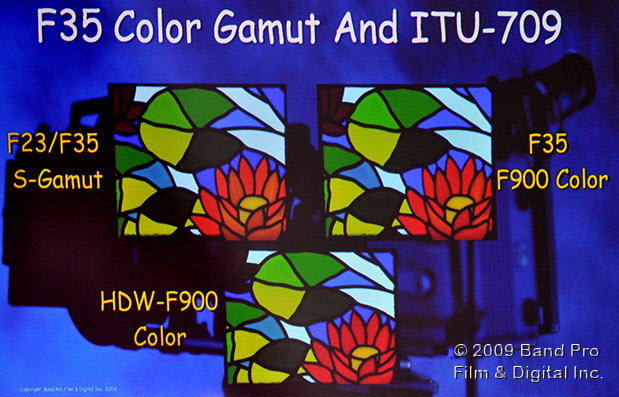

The F35 and F23 use special, wide-band (and very expensive) color-separation filters for their three channels. The filtration gives the cameras extended red responses, and more primary color overlap than normal, 709-compliant camera colors have. This allows the F23 and F35 to see finer hue discriminations than 709-compliant cameras are capable of, allowing them to more accurately reproduce different colors that lesser cameras would simply merge together.

The overlapping spectral sensitivities of the R, G, & B channels let the F23/F35 see more subtle color gradations than normal cameras do.

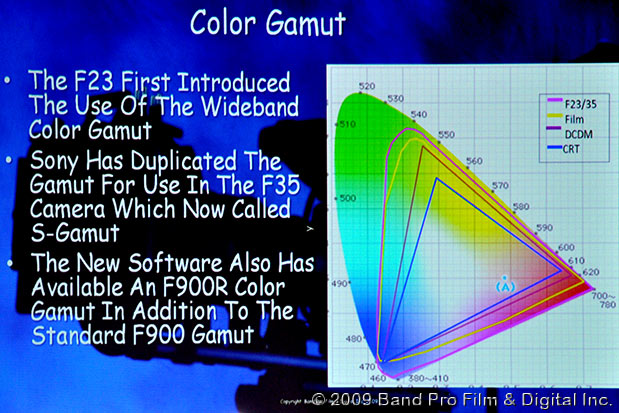

The filters also allow for a wider gamut overall: wider than 709, wider than Digital Cinema Initiative Distribution Master (DCDM) gamut, even wider for the most part than film (film guys: no death threats, please; I’m just reporting what Jeff said).

The F35 and F23 can see colors beyond 709, beyond DCDM, and even (mostly) beyond film.

These cameras thus allow recording wider color spaces than most other video cameras do, and you can choose to record the wide gamut, or restrict the camera’s response to DCDM or 709 gamuts. Even if you do restrict the color, the discrimination afforded by the fancy filtration captures more subtlety in the color.

The camera’s gamuts are labeled S-Gamut (the wide gamut), DCDM, F900, and F900R; the latter two are used to match the HDCAM cameras of the same names. Amusingly, the F900-compatible gamut is really a Rec.709-compliant gamut, but since the matrix used to generate it doesn’t use the Rec.709 coefficients (seeing as the incoming R, G, and B signals aren’t 709-constrained colors to begin with), the ITU wouldn’t let Sony use “709” as the gamut’s name.

The F35’s EL panel in ramp mode (here, a ramp from 24fps to 24fps; probably not what you’d normally do).

There’s a side-mounted panel on the camera, which Jeff referred to as the EL (electro-luminescent) panel and Sony calls the subdisplay. In my few brief outings with the F23, I had found the allocation of tasks between the viewfinder menus and the EL display to be a bit confusing, but Jeff made the distinction clear: the viewfinder menus are used to set up the camera in all its engineering details, while the EL panel (and the detachable assistant’s panel on the camera’s right side) are normally used for operational control. The clever DIT customizes the EL panel menus so that only the items needed on the current job are available; that way the operator and the 1st AC aren’t distracted by stuff they don’t need to see (and can’t screw up things they shouldn’t be mucking about with).

The SRW-1’s detachable ops panel.

The SRW-1 deck can be attached to the back of the camera or to the top; the F35’s Interface Box mounts in whichever location the deck isn’t. When mounted on the camera, the deck lets the camera reach its full potential: in-shot speed ramping, and overcranking to 1080/50p (the deck will handle 1080/60p, but the F35’s sensor can’t be read any faster than 50fps). If the deck is detached, you can’t ramp, and you can’t go any faster than 1080/30p since that’s the limit of dual-link 4:4:4 HD-SDI.
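
To see roughly why dual-link runs out of room, here is a simplified sketch. The assumptions are mine: 10 bits per color channel, active picture only (blanking ignored), and the nominal 1.485 Gbit/s rate of a single HD-SDI link. The real limits come from the formats the standards define, not from this arithmetic alone.

```python
HD_SDI_LINK_BPS = 1.485e9  # nominal bit rate of one HD-SDI link
LINKS = 2                  # dual-link
WIDTH, HEIGHT = 1920, 1080
BITS_PER_PIXEL = 3 * 10    # assumption: 10 bits each for R, G, and B (4:4:4)

def active_payload_bps(fps):
    """Bits per second for the active picture only; blanking is ignored."""
    return WIDTH * HEIGHT * BITS_PER_PIXEL * fps

capacity = LINKS * HD_SDI_LINK_BPS

for fps in (24, 30, 50):
    payload = active_payload_bps(fps)
    verdict = "fits" if payload <= capacity else "does not fit"
    print(f"{fps}p: {payload / 1e9:.2f} Gbit/s of picture, {verdict} in {capacity / 1e9:.2f} Gbit/s")
```

Even before counting blanking, 50p 4:4:4 needs more bits than two links can carry, which squares with the deck having to be docked for the higher frame rates.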

The deck can also be connected via dual HD-SDI links from the Interface Box, or via a fiber adaptor (which lets the deck sit up to 1600 meters away; that’s nearly a mile!). The dual HD-SDI outputs on the Interface Box are the only “true” outputs for the camera; they run at the exact frame rate set, whereas the monitoring outputs on the camera itself run at the camera’s current timebase, and drop frames or repeat frames as needed. For example, when shooting 12 fps with a timebase of 24fps, the Interface Box will output 12 frames per second on its dual-link outputs, while the monitoring outputs will double each frame to spit out 24fps.
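
The repeat-or-drop behavior of the monitoring outputs can be sketched as a simple index mapping. This is only an illustration: the function name is invented, and the "show the most recent captured frame" rule is my assumption, since the exact cadence the camera uses wasn't covered.

```python
def monitor_frames(capture_fps, timebase_fps, n_out):
    """For each monitoring-output frame, return the index of the captured frame shown.

    Assumption: the output shows the most recently captured frame at each
    timebase tick. Integer frame rates only, to keep the sketch simple.
    """
    return [(j * capture_fps) // timebase_fps for j in range(n_out)]

undercranked = monitor_frames(12, 24, 8)  # shooting 12 fps on a 24 fps timebase
overcranked = monitor_frames(48, 24, 4)   # shooting 48 fps on a 24 fps timebase

print(undercranked)  # each captured frame appears twice
print(overcranked)   # every other captured frame is skipped
```

The Interface Box outputs, by contrast, would simply carry every captured frame once, at the capture rate.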

There’s a 4-pin power input on the Interface Box, but it will only power the camera, not the deck: the deck and camera combined draw more amps than the single pair of power pins on the 4-pin connector can supply. If you want to drive the deck, you need to use the 8-pin Lemo connector on the camera body itself.

Right side of the F35: note dual power status LEDs, for 12v and 24v feeds.

That Lemo has doubled-up pins to carry the amps, and it also has pins for 24 volt power. While the camera itself wants (and requires) 12 volts, many cine accessories run on 24 volts. The F35 accepts 24v on the Lemo and makes it available on accessory power ports (like the one on the right side of the picture above).

The EVF’s design moves its bulk out away from the lens.

The F35 can drive two viewfinders at once, each with its own selection of markers and overlays. EVFs for the F35 are designed to avoid interference with fat cine lenses; instead of putting the bulk of their electronics over the lens, they stick their workings off to the side.

Some other tidbits:

- Not all PL-mount lenses are suitable for the F35; those that stick back more than 30mm behind their flange will run into the IR filter in front of the sensor.

- You can set up ramps using shutter-angle compensation or gain compensation. Gain comp uses only negative gains, up to -42dB, so there’s never an increase in noise, but you can lose some highlight headroom at the extreme negative gain settings.

- The newest firmware, version 1.43, has eight hypergammas, up from four in previous versions. The four new ones fully exploit the 800% headroom the F35 is capable of. Hypergammas are labeled HGrrrwGgg, where rrr is the dynamic range (325, 460, or 800), w is the white limit (0=100%, 9=109%), and gg is the video level for 18% gray in this HG setting. For example, HG8009G40 is an 800% DR hypergamma, going to 109% on the output, with a midgray at 40%. It may look confusing at first, but it’s better than having to remember what all eight gammas do by memorization alone.

- S-Log is a “hands-off” gamma curve to accurately capture every scrap of dynamic range for grading in post; it’s not WYSIWYG. However, firmware 1.43 lets you apply an S-Log MLUT (monitoring LUT) to the VF and monitoring outputs so that you can see a normal image while shooting S-Log.

- S-Log isn’t designed to be “painted” in camera, though the user gammas are preloaded with S-Log curves. There are two ways you can get into irreparable trouble with S-Log: (a) change the black point (the fixed S-Log curve has a fixed black point, but the user gammas let you change it), and (b) get clever and try to tweak your levels so that S-Log looks correct. These are problems especially if you paint the camera with MLUTs loaded; small changes in the monitored look can hide huge changes in the underlying curve, changes that are very hard to correct in post. If you must paint S-Log, use the Interface Box outputs, since they’re LUT-free.

- If you connect up the CVP gamma-editing program (Windows-only, alas) to the F35’s Ethernet port, you can tweak gamma curves live, instead of having to put ’em on Memory Sticks and sneakernet them to the camera for testing.

- When you’re in variable frame rate recording mode, you can still record audio, but it won’t be in sync! Audio is going directly to the deck (live) instead of through the frame-cache card, and the A/V delay will be randomly offset. Don’t make the mistake of having the system in VFR mode when you want to record sync sound; even if you’re shooting 23.98 fps with a 23.98 fps timebase, audio will be randomly synced, and your editor will hate you.

- If you need to mask out lit pixels, black-balance the camera three times. Each black balance does one channel per run (R, G, or B), so three passes will hit all three channels.

- There’s an air filter on the front panel of the camera. When it gets dirty, vacuum it—don’t blow the dust off! If you blow, the dust goes into the camera, and collects on the processor heatsink. Then you’ll have to pull the whole side off the camera to get at the dust, under the disapproving gaze of the operator, the DP, the director, and everyone else on the set.
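
The hypergamma naming scheme in the list above is regular enough to decode mechanically. Here is a small sketch that does so; the function name and the returned field names are my own, and it only accepts the two white-limit codes mentioned (0 and 9), since those are the only ones Jeff described.

```python
import re

# HGrrrwGgg: rrr = dynamic range in percent, w = white-limit code,
# gg = video level for 18% gray in percent.
_PATTERN = re.compile(r"HG(\d{3})(\d)G(\d{2})")
_WHITE_LIMIT = {"0": 100, "9": 109}  # only the two codes described in the text

def parse_hypergamma(name):
    """Split a hypergamma label such as 'HG8009G40' into its three fields."""
    match = _PATTERN.fullmatch(name)
    if match is None:
        raise ValueError(f"not a hypergamma label: {name!r}")
    dynamic_range, white_code, mid_gray = match.groups()
    if white_code not in _WHITE_LIMIT:
        raise ValueError(f"unknown white-limit code: {white_code!r}")
    return {
        "dynamic_range_pct": int(dynamic_range),
        "white_limit_pct": _WHITE_LIMIT[white_code],
        "mid_gray_pct": int(mid_gray),
    }

print(parse_hypergamma("HG8009G40"))
```

Feeding it the example from the list gives back 800% dynamic range, a 109% white limit, and mid-gray at 40%.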

Want more? Band Pro lets you download manuals for most of the gear they sell, including the F35 and the SRW-1; a great help, since Sony doesn’t make it easy to find these manuals on Sony’s own websites.

After the training, I went to dinner with a friend, and saw this nearby:

Panavision’s North Hollywood facility.

It has style. Isn’t that enough reason to photograph it?

Next: Highlights of the 3D Symposium…

December 16: The 3D Symposium

On this day, Mike Curtis and his fast-flying typing fingers did show up, and you can read all about it in his posts. I commend his writeups to you for the blow-by-blow details; I’ll simply highlight a few notable things and give you my impressions of where things stand.

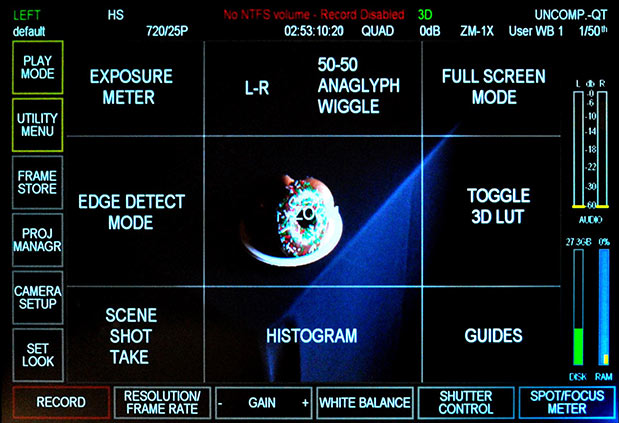

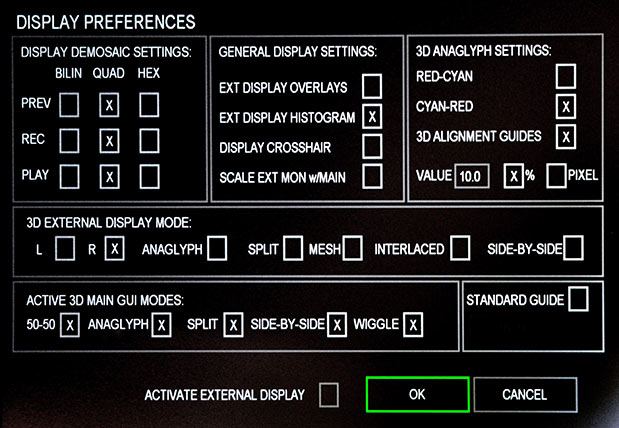

The triumvirate of Silicon Imaging, Iridas, and Cineform really have the 3D production and post workflow dialed in. The SI-2K camera, with Iridas and Cineform on-board, has stereo monitoring neatly integrated into its already-excellent interface:

The SI-2K’s touchscreen UI, with on-picture hotspots revealed. The top-center hotspot toggles 3D monitoring modes.

The SI-2K’s stereo monitoring setup options.

The camera does everything it can to make aligning two SI-2Ks as a stereo pair as easy as possible, with a multitude of monitoring possibilities individually selectable for the EVF / control screen and the monitoring outputs.

Iridas, likewise, is hip to the 3D jive: it even supports the use of the Tangent Wave control panel for “depth grading” while a clip is playing. Of course, the depth grading—changing the interaxial, correcting alignment, etc.—is stored as metadata, just like every other image tweak.

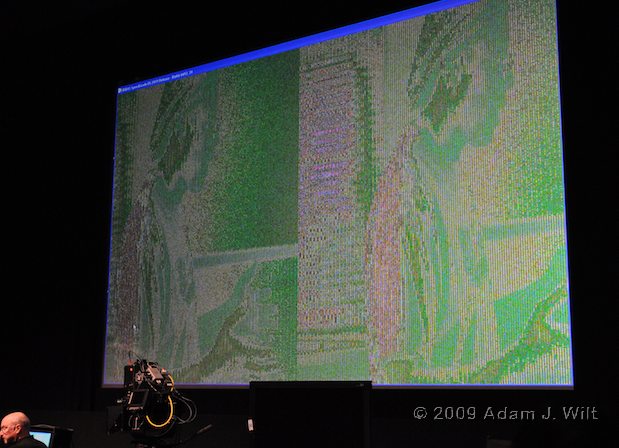

Iridas’s Steve Crouch shows raw Bayer-mask data from an Arri D21 frame.

Steve Crouch of Iridas likens decoding raw footage (whether in 2D or 3D) to a video game: in a video game, there isn’t a pre-built image of a scene; there’s a compact bunch of instructions. When you command your character to move, to turn, or to jump, the computer takes the resulting viewpoint and renders the scene on the fly to create your image. Similarly, working with raw takes a small scene description (the raw data) and rendering instructions (your color grade and depth grade) and renders each frame on the fly.

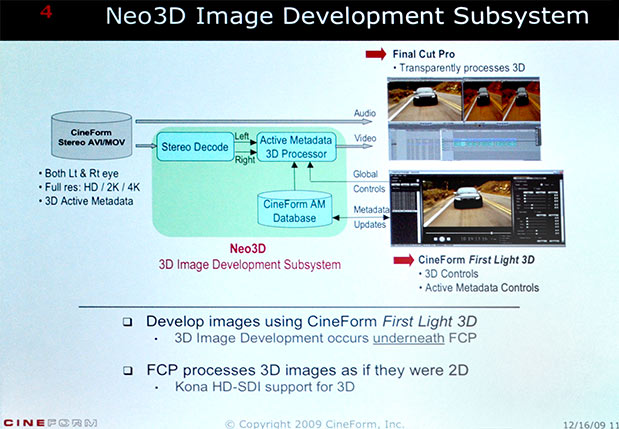

What makes Cineform so clever: you color-grade and depth-grade independent of the NLE.

Cineform has it nailed when it comes to a well-thought-through raw subsystem for Macs and PCs. Their codec works like any other QuickTime or AVI codec as far as an application is concerned: an app asks for a frame, and Cineform hands one over. The clever bit is that behind the scenes, Cineform runs a system-wide database that the codec queries for decoding parameters on a frame-by-frame basis. The separate Cineform FirstLight 3D application writes metadata into this database and allows both color grading and depth grading to happen in parallel with NLE playback, whether it’s FCP on Mac or Premiere on Windows.
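
The idea of a codec that consults a shared database at decode time can be sketched in a few lines. To be clear, this is a toy illustration of the concept only: every name here is hypothetical, the "grade" is just a gain and a lift, and none of it reflects Cineform's actual software or file formats.

```python
# Hypothetical stand-ins; none of these names come from Cineform's software.
grade_db = {}  # clip id -> decode parameters, visible to every "application"

def set_grade(clip_id, gain=1.0, lift=0.0):
    """What a grading tool would do: write parameters, never touch the media."""
    grade_db[clip_id] = {"gain": gain, "lift": lift}

def decode_frame(clip_id, raw_frame):
    """What the codec would do: look up the current parameters at decode time."""
    params = grade_db.get(clip_id, {"gain": 1.0, "lift": 0.0})
    return [sample * params["gain"] + params["lift"] for sample in raw_frame]

raw = [0.1, 0.2, 0.4]                # pretend raw samples stored in the file
before = decode_frame("clip_a", raw)  # the "NLE" asks for a frame: ungraded
set_grade("clip_a", gain=2.0)         # the "grading app" updates the database
after = decode_frame("clip_a", raw)   # the same request now returns graded data

print(before, after)
```

The stored samples never change; only the instructions for rendering them do, which is why the grade can be adjusted while the NLE keeps playing.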

The fact that Cineform’s raw format and codec technology give better than HDCAM-SR quality doesn’t hurt, either; nor does their clever combining of two video streams into a single file, encoding one eye’s frame as metadata in the other eye’s frame data.

Of course Cineform can be used as a mezzanine codec for any 3D project, and Iridas’s technology isn’t limited to the SI-2K either. But the combination of Silicon Imaging’s camera with Cineform’s codec infrastructure and Iridas’s grading and look-management capabilities gives the SI-2K a unique synergy; it’s as if the camera had been designed from the ground up to make 3D almost as easy as 2D to shoot, edit, and grade.

If that’s all there was to it, we could declare victory and go home. But that’s the easy part. The hard part is what happens before the light hits the lens.

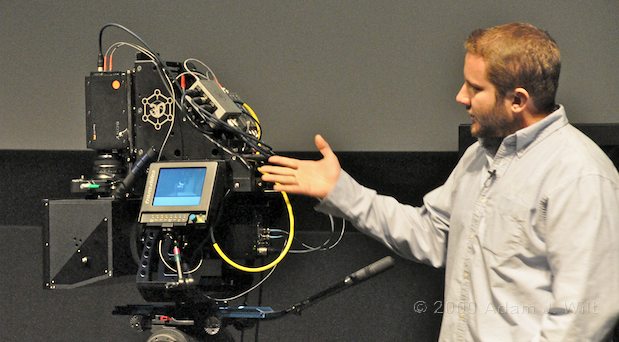

Element Technica’s Chris Burket shows off the “Quasar” beamsplitter rig.

Even those clever chaps at Element Technica can’t hide the fact that shooting stereo with large cameras is more akin to the old Technicolor three-strip cameras than an Arri 235, Aaton Penelope, or any decent shoulder-mount ENG camera. The human interocular distance is about 2.5 inches; if you’re going to replicate that viewpoint, you have to match that with your interaxial spacing: the centerlines of your lenses can’t be much more than 2.5″ or 62mm apart, otherwise the binocular depth cues will make your set look like a miniature. Unless you’re using very small-diameter lenses on very narrow camera bodies, you’re stuck with a beamsplitter rig like the “Quasar” shown in the photo above. Wanna go handheld, or put this puppy on a Steadicam? You’re braver than I am!

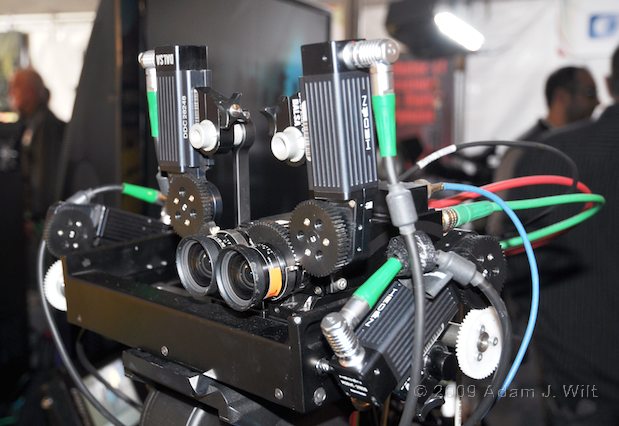

You can go with a parallel rig if you’re using tinycams, like 1/3″ to 2/3″ box cameras with small prime lenses:

A parallel 3D rig from the Band Pro open house: note the cut-off sector gears on the lenses, allowing a very tight interaxial.

But even there, your problems aren’t over: you have to make sure the cameras are perfectly aligned angularly and rotationally (that is, in pitch, roll, and yaw simultaneously), as well as being lined up so their optical axes are on the same level. Zoom lenses have to track identically through their zoom ranges; focus settings have to match and be controlled in sync.

Yes, you can fix these in post to a certain extent, using the grading tools from Iridas, Cineform, Quantel, and others, but you’ll be facing both a time hit and a quality hit if you try to fix it in post; things like roll (Dutch tilt) correction can really degrade resolution in a hurry—and things like vertical misalignment of the cameras, with their corresponding different views of the world, can’t be fixed in post at all.

It should go without saying that both left and right cams need to roll in perfect sync; even a scanline’s difference in their timing can shatter the simultaneity of motion. Thus I found it disturbing how much time the panelists in the discussion spent discussing sync: apparently it’s not been obvious to the folks they’ve had to deal with.

Consider also that unless you’re shooting parallel cameras, you’ll need a convergence puller alongside your focus puller. The Quasar rig has motorized interaxial and convergence adjustments set up for a Cmotion or Preston controller.

Given all this on-set complexity, is it any wonder that most recent 3D releases have been animated, not live-action?

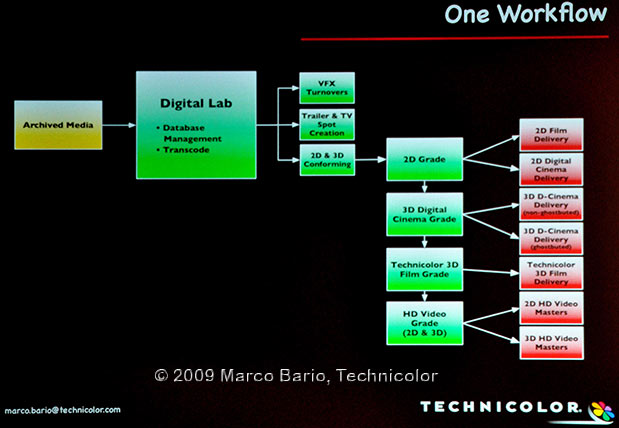

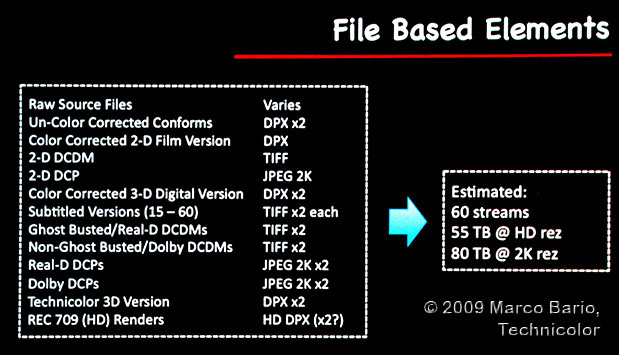

Why 3D is hard: some (not all) of the separate grades that need to be generated for 2D and 3D release.

If that’s not enough, look at the added complexity on the back end. A 2D color grade; a re-grade for digital 3D with its lowered light levels; another re-grade for filmed-out 3D; additional grades for 2D and 3D video releases.

“Now how much would you pay? But wait, there’s more…”

And let’s not forget that there are at least two digital 3D distribution formats (Dolby and Real-D), and some need ghostbusting and some don’t, and for overseas distribution you’ll need burned-in 3D subtitles in up to 60 different languages and dialects (something that Iridas will be able to render out in their next software version, fortunately).

Still with us? Now consider that 2D and 3D are fundamentally different beasts. 2D wants shallow depth of field for subject isolation and suppression of distracting elements; 3D wants deep focus so your eye can rove at will. How do you shoot both at the same time? Furthermore, your 3D cut and your 2D cut of the same material will be different (3D plays scenes longer and wider; 2D wants more cutaways and closeups), so after making your 3D edit, plan on going back and recutting the show for 2D presentation. Can you even make a show that will work as both a 2D and a 3D presentation, and do equal justice to both?

Clearly 3D isn’t something to be attempted by merely “nailing a couple of HVXs to a 2×4,” as one panelist put it.

Not that people won’t try, of course.

Next: The Band Pro open house, and a new line of PL-mount primes…

December 17: The Band Pro Open House

Band Pro’s open house (which they’ve been doing for several years now) isn’t, at first glance, any different from other reseller-sponsored mini trade shows, like the ones that Professional Products puts on in the Washington DC area, Snader presents in the San Francisco area, or Alpha Video holds in the midwest (I mention these specifically because I’ve attended them either as an exhibitor or as a customer). If anything, it’s a bit smaller than those other shows; Band Pro doesn’t rent an exhibition hall or convention center, they simply set up a tent in their parking lot and have their vendors arrayed within.

The big tent stayed full well into the night.

Two things set the Band Pro event apart: the food and the toys.

Band Pro doesn’t merely have a snack table, they turn their showroom into an all-day catered buffet, with plenty of sitting and standing room (and a bar), so that attendees can eat their fill and network with each other.

In the Band Pro showroom: Middle Eastern food and drink, lots of discussion.

This year the food was Middle Eastern. Everything I sampled was top-notch, and the dolmas were quite possibly the best I’ve ever had. [Disclaimer: remember what I said about journalists and free food!]

As to the toys: Band Pro has the unfair advantage of being the exclusive US distributor for a lot of fancy digital cine stuff (like 2/3″ Zeiss DigiPrimes and DigiZooms) and being situated next door to Hollywood, so there’s a lot of high-end production gear at Band Pro’s event that isn’t available for those other shows and/or doesn’t make a lot of sense to demo in their local markets.

Band Pro’s Randy Wedick shows Director / DP Joe Murray and DP Jordan Valenti the F35.

For example, how many places will you find Sony F35s sitting around? Band Pro had a couple. Band Pro was also showing off the SRW-9000 camcorder, which has seen some changes since it was unveiled at NAB.

The SRW-9000 at Band Pro in December…

…compare to the prototype SRW-9000 at NAB last April.

Something else you don’t see every day: Astro’s 56″ 4K LCD monitor (which I don’t have a picture of, because, really, with a 619-pixel-wide photo, what am I gonna show?), which with the right material—a wide shot of a cherry tree in bloom, where you could see each petal of every blossom—made 1080p HD look as bad as HD makes SD look.

Cine-tal was showing their 42″ Cinemage monitor, which is the on-set display I want on my next shoot… heck, it’s only $18,000-$22,000!

Randy Wedick demonstrates the SI-2K’s touchscreen interface for Joe Murray.

The SI-2K was there, too, so folks could get a first-hand look at its UI and its integrated copy of Iridas SpeedGrade for look creation.

And I don’t mean to dismiss the other vendors, a list of which may still be available here.

A New Line of PL-mount Primes

At about 2pm, Band Pro’s founder Amnon Band called for quiet…

Michael Bravin and Amnon Band stand ready to make an announcement.

He introduced Dr. Andreas Kaufmann of ACM, a company that may be best known in the USA as the majority owner of Leica.

Dr. Andreas Kaufmann of ACM describes developments.

ACM has been working for several years on a new series of lenses, for which Band Pro will be the worldwide distributor.

Finally, the unveiling…

…Mystery Primes revealed!

The crowd gathers ’round.

The “Mystery Primes” are so called because of certain legal waiting periods that need to be observed (trademark registration or the like); we should learn their real names in mid February.

Michael Bravin told me that the goal of the Mystery Prime project was “Master Prime quality at a lower price”. It’s encouraging to see new lenses coming out aiming primarily at high performance instead of striving first for a low cost. The market isn’t entirely caught up in “a race to the bottom”, as many doomsayers keep saying (but make no mistake: I don’t dismiss or disrespect what Red, UniQoptics, Cooke, and Zeiss are doing to make PL-mount primes more affordable).

The 40mm T1.4 Mystery Prime on an F35.

From 6 feet to infinity, all Mystery Prime distance scales are the same, making focus-pulling easier.

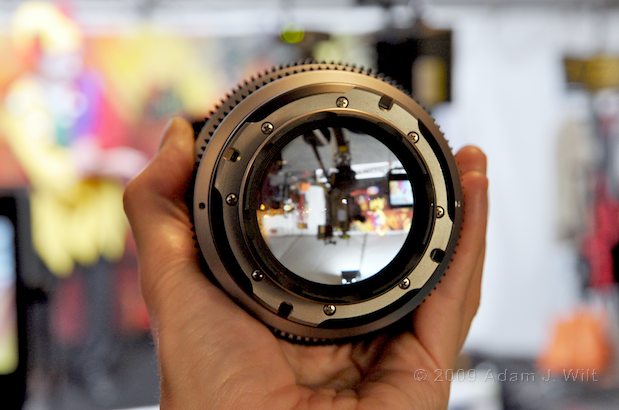

With T1.4 max apertures, these lenses have large exit pupils.

The lenses are compact, dense units with huge exit pupils—think of lightweight titanium skins shrink-wrapped around large hunks of fancy aspheric glass.

40mm of T1.4 goodness in the hand: three pounds of glass and titanium.

The prototypes I handled had silky-smooth iris and focus rings, with no trace of slop or binding. Sweet! I’ll be very interested to see how the lenses perform once they start shipping next summer.

Three more prototype Mystery Primes.

Here, unedited, is Band Pro’s press release about the new lenses:

Band Pro offers new set of 4K “Mystery Prime” Lenses

Burbank California, December 17, 2009–At the One World on HD event, Band Pro Film and Digital introduces a groundbreaking new brand of ultra-high performance PL mount prime lenses, designed to deliver optical performance for true 4K imaging and beyond.

After three years of design and prototyping, the new T1.4 lenses, still code named “Mystery Primes” within Band Pro, are fully developed and 3 focal lengths are due to be demonstrated on the F35 camera at the event. The series of prime lenses, available exclusively worldwide from Band Pro, will eventually total 15 different focal lengths, ranging from 12mm to 150mm. Delivery of production models of eight of the lenses will begin in early summer of 2010.

“A unique use of aspheric technology and cutting-edge mechanical cine lens design provides the “Mystery Primes” with unmatched evenness of illumination across the entire 35mm frame and into the corners with no discernable breathing” said Michael Bravin, Chief Technologist of Band Pro. “Suppression of color fringing into the farthest corners of the frame is superior to any lenses I have ever seen.”

The entire set of “Mystery Primes” features unified distance focus scales, common size and location of focus and iris rings, and a 95mm threaded (for filters) lens front–all allowing quick interchange of lenses in a busy production environment. Another unique feature is an integrated threaded net ring in the rear of the PL mount.

Designed to be light in weight yet rugged on the set, the mount and lens barrel are manufactured using lightweight high strength titanium materials. For example, a typical Mystery Prime weighs just 3 lbs (1.4kg).

The core set of “Mystery Primes”, which will start delivering by June 2010, includes 16mm, 18mm, 21mm, 25mm, 35mm, 40mm, 50mm, 65mm, 75mm, and 100mm lenses. Additional focal lengths will be delivered in a second phase.

The first 25 sets of lenses will be delivered to Otto Nemenz International. Their experienced team provided invaluable user input from the beginning of the design process.

Contact Band Pro for more information.

16 CFR Part 255 Disclosure

Band Pro offered me (and other out-of-town journos) two free nights at the Burbank Courtyard Marriott hotel to come and cover their open house. I paid my own flight costs from San Jose to Burbank, covered two additional nights at the hotel to attend the additional events, and rented a car for one day to get to the F35 training (the other events were within walking distance), a sum totaling $454.04. It’s not like I got a free trip out of the deal.

Jeff Cree reviewed and approved my use of some of the slides from his presentation, which I photographed off the screen, but he hasn’t reviewed my writeup. Any errors are mine alone.

I have known Michael Bravin for about 20 years, since we were both at Abekas Video Systems. I have known Jeff Cree for about 10 years, since he was with Sony and taught a colleague all the ins and outs of the HDW-700. I have met other Band Pro folks at shows (NAB, CineGear), but I haven’t yet been a customer of the company, just a tire-kicker.

Aside from the two free nights in the hotel, Band Pro has not influenced me with payments, discounts, or other blandishments to encourage a favorable article.
