What a year it’s been for Generative AI technology and the tools that have been developed! When I first started the AI Tools series with Part 1 back in January of 2023, I could only see a sliver of what was to come in the months ahead. I then decided we needed a central place where we could reference all these emerging tools and their updates, so I created AI Tools: The List You Need Now in February. By the time I got to AI Tools Part 2 in March, it was apparent that developers were moving full-steam ahead, releasing updates and new tools every week. I had to pull back from doing such rapid updates, which were becoming obsolete as soon as I published them, and put out AI Tools Part 3 in June to give us a sense of where we were mid-year. Then, focusing primarily on video and animation tools, I published AI Tools Part 4 in August, along with a smattering of how-to articles and tutorials for various AI Tools (which I’ll cover in this wrap-up). That brings us to here, at the end of the year.

And here we stand, looking into the possibilities of our future.

Where we started… Where we are going.

The biggest fears about Generative AI in January were that it was cheating; theft; it’s not real art; it’s going to take our jobs away; it’s going to steal your soul, etc. Well, only some of that has happened so far. I’ve seen many of my nay-saying design, imaging and photography colleagues, who were actually angry about it then, embrace the capabilities and adapt their own style in how they use it in their workflows and compositions. Some have made their work a lot more interesting and creative as a result! I always applaud them and encourage them in the advancements, because they’re trying something new and the results are beautiful.

And I see how many folks are jumping on the bandwagon, fully abandoning their past limitations, genuinely having a lot of fun and unleashing the creative beast. I think I’ve fallen into this category, as I’m always interested to see what I can do with the next tool that opens up a portal to creativity. I’m an explorer and I get bored really quickly.

In reality, nobody has lost their soul to AI yet, and the jury is still out as to whether any real theft or plagiarism has cost anyone money in actual losses for IP, though there have been some class action suits filed*. But where there has been actual loss of jobs and threats of loss of IP is in the entertainment industry – where voices, faces and likenesses can be cloned or synthesized and the actors are no longer needed for certain roles. We’ve witnessed the strikes against the big studios over the unfair use, or threatened use, of people’s likenesses in other productions without compensation.

*Note that as of December 27, 2023, the first major lawsuit against OpenAI and Microsoft was filed by the New York Times for copyright infringement.

As an independent producer, VFX artist and corporate/industrial video guy, I have seen both sides of this coin in a very real and very scary way, because I can do these things with a desktop computer and a web browser – today. We can clone someone’s voice, their likeness, motion, etc. and create video avatars, translate what they’re saying into another language, or make them say things they probably wouldn’t (which raises a long list of ethical issues that we will undoubtedly experience in the coming election year, sadly).

We are using synthesized voices for most of our voiceover productions now, so we’re not hiring outside talent (or recording ourselves) anymore for general how-to videos. This gives us ultimate flexibility and consistency throughout a production or series, and we can instantly fix mispronunciations or change the inflection of the artist’s speech to match the project’s needs. It’s not perfect and can’t really replace the human element in most dramatic reads that require emotion and energy (yet), but for industrial and light commercial work, it does the trick. And I can’t help but feel a little guilty for not hiring voice actors now.

For cloning/video avatar work, we independent producers MUST take the initiative to protect the rights of the actors whom we hire for projects. We are striving for fair compensation for their performance and a buy-out of projected use with strict limitations – just like commercial works. They agree to participate, both on camera and in a written contract, before we can even engage. We need the talent to give us the content needed to clone and produce realistic results, but we’re also not a big studio that’s going to make a 3D virtual actor it can use for anything it wants. If there’s a wardrobe change or pose, etc., then it’s a new shoot and a new agreement. There are still limitations as to what we can do with current technology, but there will be a day soon when these limitations will be lifted, even at the prosumer level. I’m not sure what that even looks like right now…

All we can do is stay alert, be honest and ethical and fair, and try to navigate these fast and crazy waters we’ve entered like the digital pioneers that we are. These are tools and some tools can kill you if mishandled, so let’s try not to lose a limb out there!

The AI Tool roadmap to here…

Let’s look back at this past year and track the development of some of these AI Tools and technologies.

Let’s start with the original inspiration that got me hooked: Text-to-Image tools. I’ve been using Midjourney since June of 2022 and it has evolved an insane amount since then. We’re currently at version 6.0 (Alpha).

Since I wanted to keep it a fair test all along, I used the same text prompt and only varied the version that Midjourney was running at the time. It was a silly prompt when I first wrote it back in June of 2022, but back then we were lucky if we got only 2 eyes on every face that it output, so we tried everything crazy that popped into our heads! (Well, I still do!)

Text prompt: Uma Thurman making a sandwich Tarantino style

I really have no idea what kind of sandwich Quentin prefers and none of these ended up with a Kill Bill vibe, but you’ll notice that the 4-image cluster produced in 6/22 had a much smaller resolution output than subsequent versions. The cluster in the 4th quadrant was generated in 12/23 with v5.2 using the same text prompt. (Check out the 4X Upscaling with v6.0 directly below @ 4096×4096)

This is the upscaled image at full resolution (4096×4096), downloaded straight from Midjourney in Discord with no retouching or further enhancements, and with no other prompts to provide details, lighting, textures, etc. – just the original prompt upscaled 4x. (Cropped detail below if you don’t want to download the full 4K image to view at 100%)

The difference with Midjourney v6.0 Alpha

Using the exact same text prompt gave me a very different result without any other prompts or settings changes. The results were often quite different (many don’t look like the subject at all) and they have a painterly style by default. But the biggest thing is, the AI understands that she’s actually MAKING a sandwich – not just holding or eating a sandwich. I think this is a big step for the text-to-image generator, and while I upscaled the one that did look most like Uma, I didn’t try to change any parameters or prompts to make it more photorealistic or anything; I’m still quite pleased with the results!

We’ve all seen the numerous demos and posts about Adobe Photoshop’s Generative Fill AI (powered by Firefly) and I’ve shared examples using it with video in a couple of my articles and in my workshops. It’s really become a useful tool for designers and image editors to extend scenes to “zoom out” or fit a design profile – like these examples from my AI Tools Part 4 article in August:

(For demonstration purposes only. All rights to the film examples are property of the studios that hold rights to them.)

Of course there are numerous ways to just have fun with it too! Check out some of the work that Russell Brown from Adobe has created with Generative Fill on his Instagram channel. Russ does really creative composites with a professional result – much of which he does on a mobile device.

For the featured image in this Year in Review article, I used the same Midjourney prompt that I used for the image in my original AI Tools Part 1 article a year ago, and then expanded the image with Adobe Photoshop’s Generative Fill to enhance the outer part of this “world”. The tools really do work well together, and that allows for more creativity and flexibility in your design work.

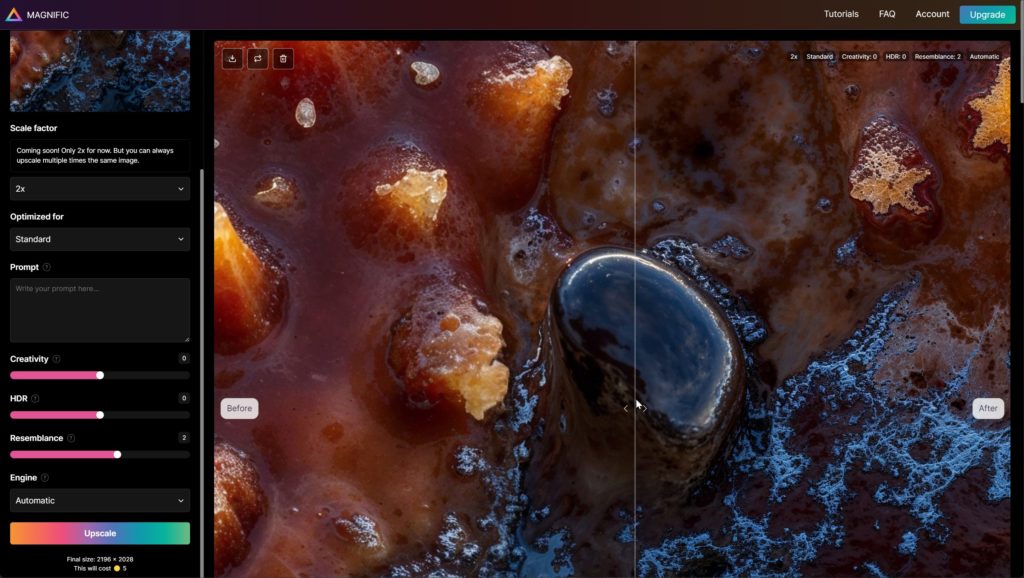

And of course there have been other great advancements in AI image enhancement and generative AI tool development this year, including updates for Remini AI and Topaz Labs, and a newcomer, Magnific, that’s making some waves in the forums.

Magnific is a combination of an enhancement tool and a generative AI creator – but starts with an image to enhance, along with additional text prompting and adjustments in the tool’s interface.

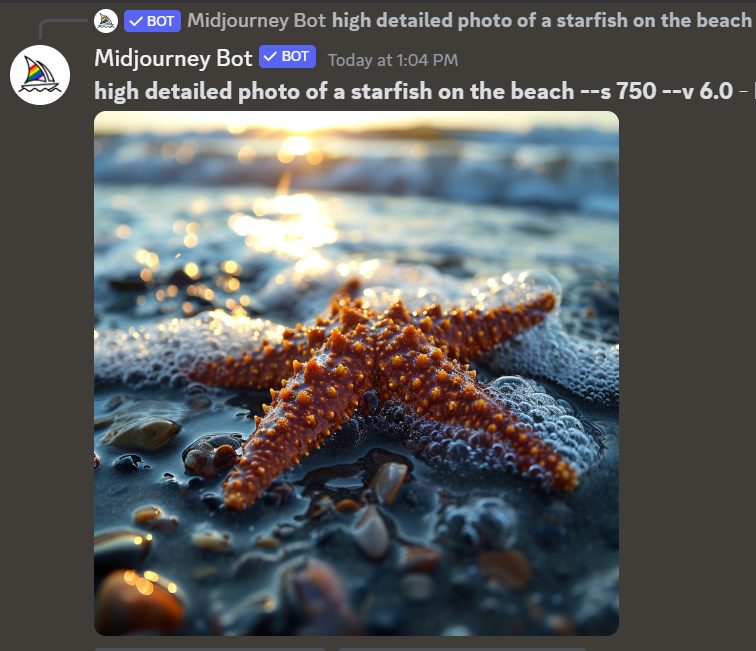

Since I just gained access to the tool, I thought I’d start with a Midjourney image that we could zoom into by using Magnific. I used a very simple prompt to get this lovely AI generated starfish on the beach.

I then added it to Magnific and used the same text from my Midjourney prompt to double the upscaling while adding more detail. (Note that currently the maximum upscaling is limited to 2x, with a resulting file resolution of 4K.)

That means you will need to download your rendered results and re-upload them for further upscaling until you max out. Then crop the image to the area where you want more detail, and upload and render that. Repeat until you get to a result you like. I’m sure we’re going to be literally going down a rabbit hole as we experiment with this tool in the coming weeks, so stay tuned!
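To get a feel for how many rounds of this crop-and-upscale loop a given zoom might take, here is a rough Python sketch. It assumes (my assumption, not something Magnific documents) that each round crops to half the width and height and then upscales 2x, so the image returns to its original pixel size while the effective zoom doubles. The function names `passes_for_zoom` and `crop_box` are hypothetical helpers for illustration and are not part of any tool’s API.

```python
def passes_for_zoom(target_zoom, factor_per_pass=2):
    """Count the crop-and-upscale passes needed to reach at least target_zoom.

    Assumes each pass multiplies the effective zoom by factor_per_pass.
    """
    if target_zoom < 1 or factor_per_pass < 2:
        raise ValueError("target_zoom must be >= 1 and factor_per_pass >= 2")
    passes = 0
    zoom = 1
    while zoom < target_zoom:
        zoom *= factor_per_pass
        passes += 1
    return passes


def crop_box(width, height, center_x, center_y, factor=2):
    """Return (left, top, right, bottom) for a crop that is 1/factor of the
    image size, centered on the point of interest and kept inside the image."""
    crop_w = width // factor
    crop_h = height // factor
    # Clamp so the crop never runs past the image edges.
    left = min(max(center_x - crop_w // 2, 0), width - crop_w)
    top = min(max(center_y - crop_h // 2, 0), height - crop_h)
    return (left, top, left + crop_w, top + crop_h)


if __name__ == "__main__":
    # Hypothetical example: a 16x zoom into the center of a 4096x4096 render.
    print(passes_for_zoom(16))
    print(crop_box(4096, 4096, 2048, 2048))
```

Under these assumptions, a 16x zoom works out to four passes, and the crop box tells you which region to cut out in your image editor before each re-upload. The real number of rounds will depend on how tightly you crop each time.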

But while image enhancement and detail generation are powerful tools, content creators and designers online are already getting creative with them to generate stunning simulated extreme “zooms”. For instance, check out this post from Dogan Ural, which not only showcases this amazing zoom-in video made from his renders, but also explains the steps he took to create it in the thread.

Magnificent 128x zoom

NanoLand: Day 05

What is real?

Sound on

Prompts and settings are in the thread

pic.twitter.com/kH69BIlqB8

— Dogan Ural (@doganuraldesign) December 22, 2023

That’s kind of the reverse of the process I created for my Zoom Out animation using Midjourney and After Effects in my full article AI Tools: Animations with Midjourney & After Effects earlier this summer. I’m looking forward to experimenting with this new process as well!

Audio tools

There have been some advancements in audio tools as well. Take Adobe Podcast for instance.

When it was first released as a beta, you just dragged and dropped your audio and hoped it would help clean it up (and it usually did pretty well). But now it has not only the Enhance Speech tool, but also a good Mic Check tool that will determine if your setup is good enough quality to record your voiceover. The Studio allows you to record, edit and enhance your audio right in your browser and has tools for transcription and pre-edited music beds.

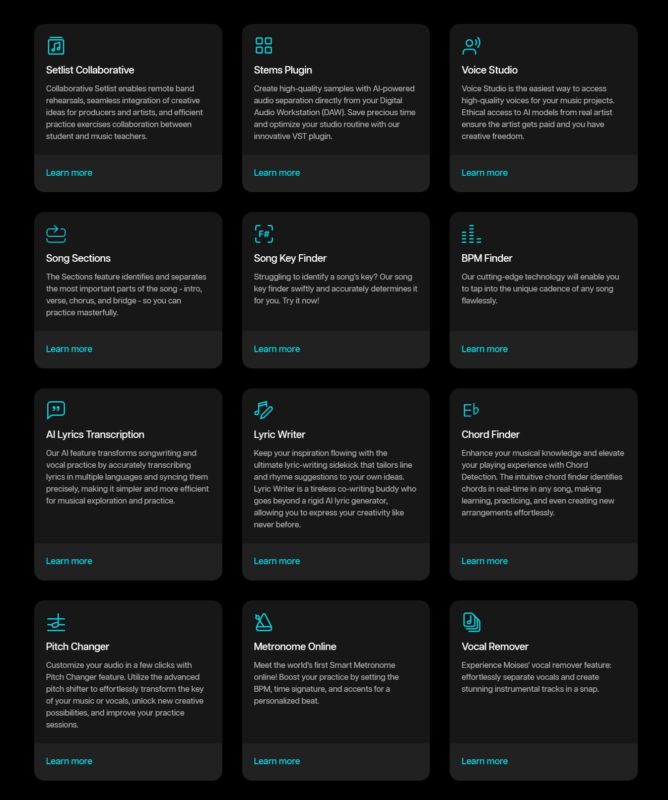

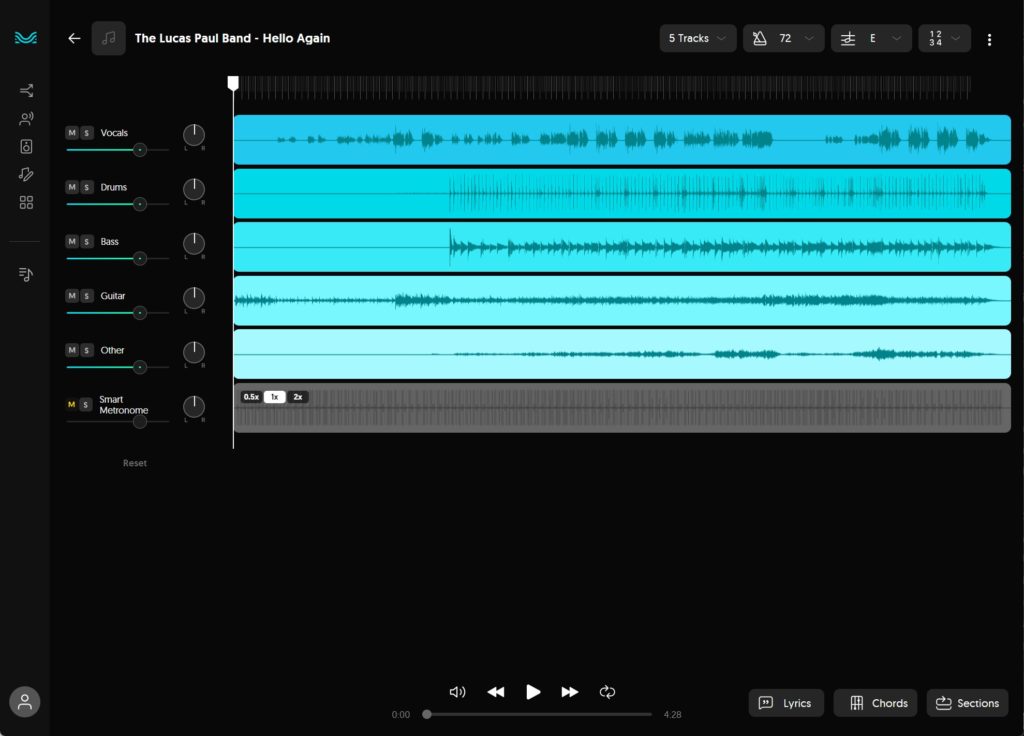

A surprising recent discovery was Moises.ai, a series of AI tools developed for musicians for your desktop computer, web browser or mobile apps. It has several features I’ve yet to explore fully, such as Voice Studio, Lyric Writer, Audio Mastering and Track Separation.

With the Track Separation feature, you can upload a recorded song and specify how you want the AI to break it down into individual tracks, such as vocals, bass, drums, guitar, strings, etc. It does a pretty remarkable job and lets you isolate and control the volume of the different tracks, so you can learn your guitar riffs or sing along with the vocals removed.

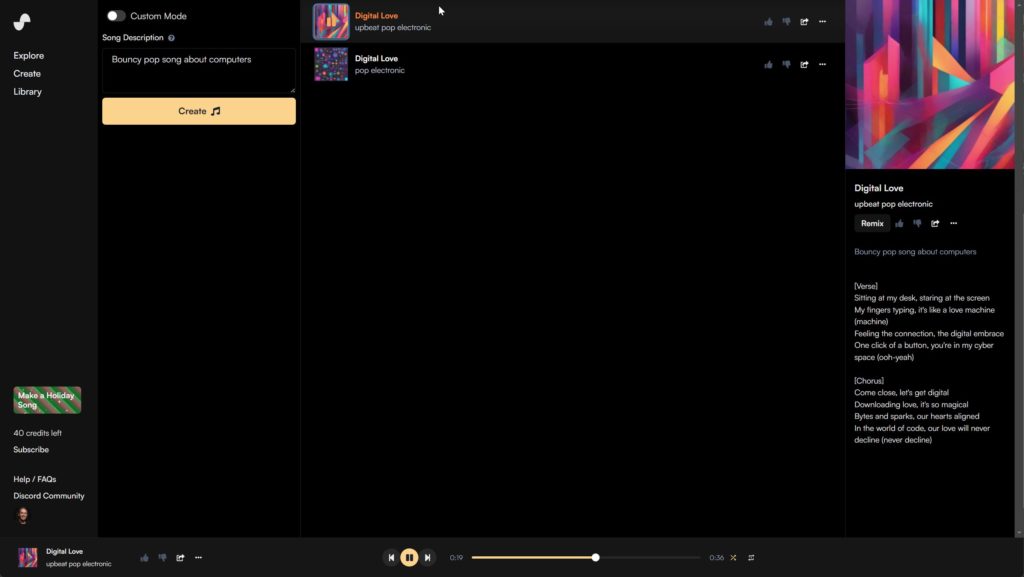

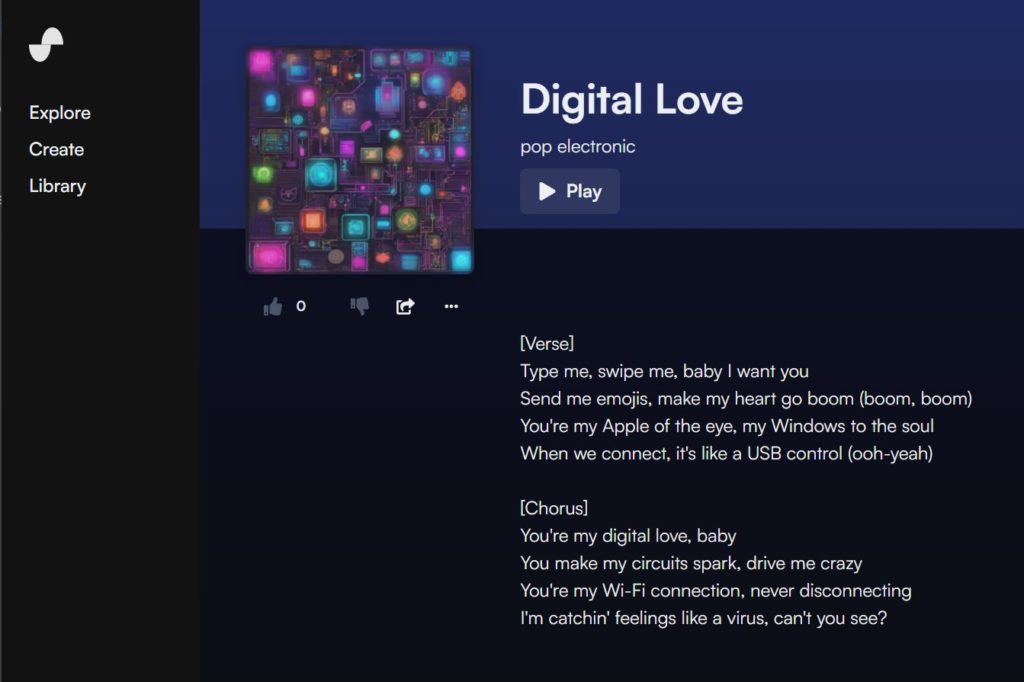

And for fun, you can use Suno.ai where you can generate a short song with just a text description. In this example, I simply wrote “Bouncy pop song about computers” and it generated two different examples, including the lyrics in just seconds.

Here’s a link to the first song it generated (links to a web page)

And here’s the second song (with lyrics shown below):

I’ve covered a lot about ElevenLabs AI in several of my articles, and how it has been part of our production workflows for how-to videos and marketing shorts on social media. I’ve even used it in conjunction with my video avatars covered below.

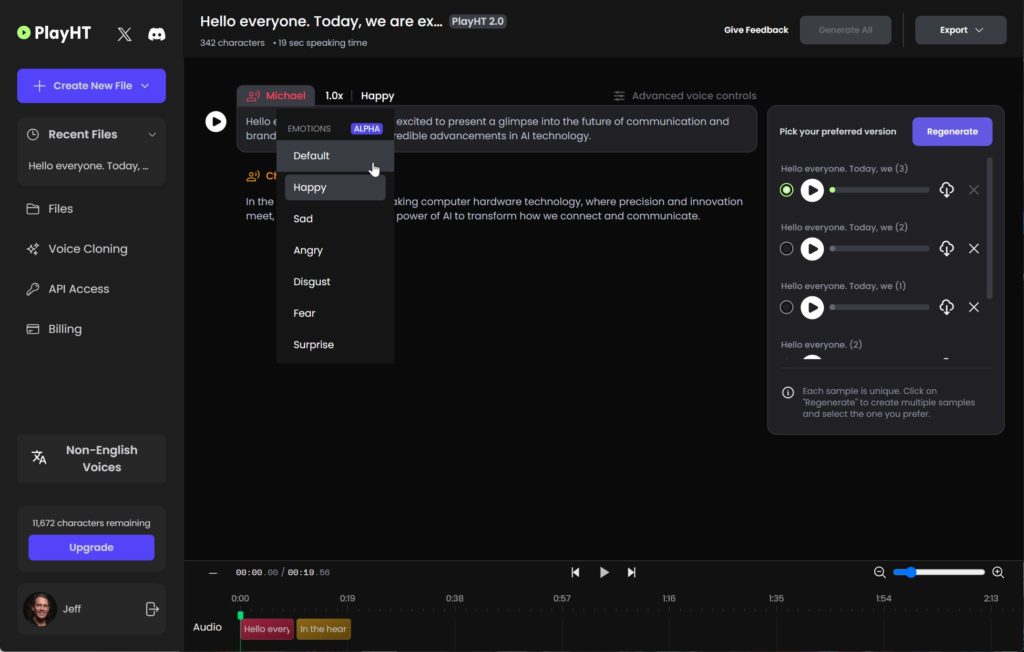

But there are new AI tools that are up and coming to challenge it with more features besides cloning and synthesis, such as adjusting for a range of emotions and varying the delivery of the text. One such tool is PlayHT. You can start with hundreds of synthesized voices and apply various emotions to the read, or clone your own voices and utilize the tool the same way.

Video & Animation tools

I’ve been mostly interested in this area of development as you can see from some of my other AI Tools articles, including AI Tools Part 4 where I shared workflows and technology for video and animation production back in August.

I’ve been experimenting more with available updates to various AI software tools, such as HeyGen, which I featured in an article and tutorial on the production workflow for producing AI Avatars from your video and cloned voice.

Since then, I’ve been working at further developing the process and have been producing AI Avatar videos for various high-end tech clients (I can’t divulge who here). I did create this fun project that utilized 100% Generative AI for the cloned voice, the video avatars and all the background images/animations. It’s tongue in cheek and probably offensive to many, but it’s gained attention, so it’s an effective marketing piece!

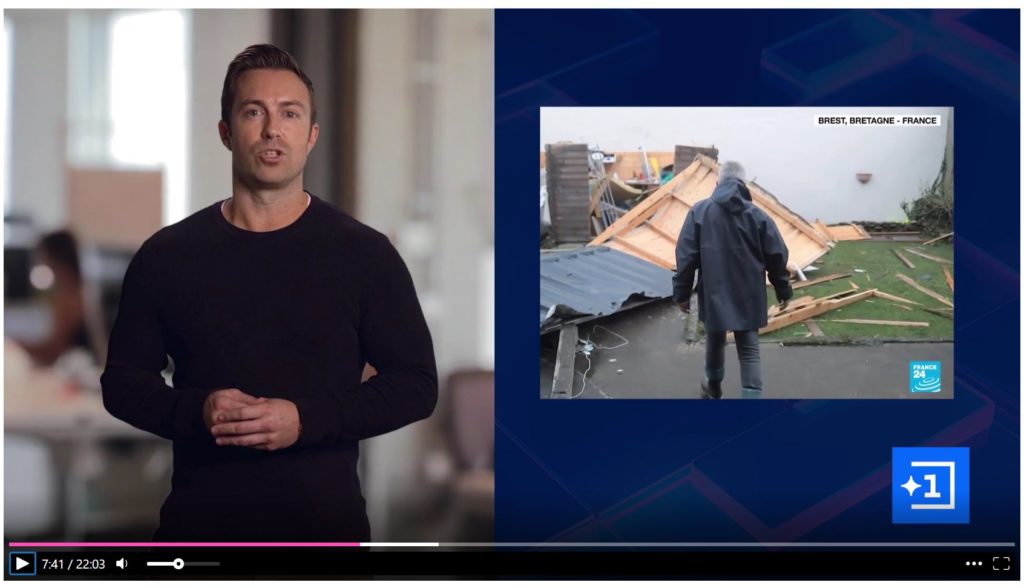

On that note, there are already business models lining up to utilize this technology for commercial applications, such as this model for a personalized news channel: https://www.channel1.ai/. They’re mixing AI Avatars for the news anchors and reporters and feeding collected reels to stream stories tailored to your region and interests.

Another tool that’s been making great strides in video production is Runway AI. I’ve featured it in past articles, but the tools and workflows for producing some creative content have been shared around social media and the community is really getting creative with it.

I featured a how-to article/tutorial on how to make “AI World Jump” videos like this one:

I’ve also demonstrated several of these tools and techniques at virtual conferences where I’ve taught, such as the Design + AI Summit from CreativePro earlier this month. Here was a teaser I produced for the session on LinkedIn:

You will be seeing much more work that utilizes this incredible technology in the coming months. I’m really excited about its development and the projects I’ll be working on.

New Technological Developments

So what’s next?

I won’t be continuing these category-laden industry articles into the new year, so expect to see more short, individual AI Tools articles and tutorials, which will include updates and, most likely, project workflows. I also won’t be continuing to update the massive “AI Tools: The List” as it’s nearly a full-time job keeping up, plus there are so many “lists” out there from various tech portals that it’s all becoming redundant. I may do some kind of smaller list for reference, but we’ll have to see what the industry does, as things change literally daily.

What I WILL commit to is bringing you exciting new tech as it happens, sharing it as I discover it too. The best way to find up-to-the-minute announcements and shared white papers is to follow my LinkedIn account.
