I know this! I just use H.264 for everything!

Whoa there. Let’s take a step back and go through this one piece at a time. There are a lot of codecs (methods for COmpression-DECompression of video data) out there, and a lot of myths and outdated opinions. What was true ten years ago is no longer the case, and though distribution seemed to be standardizing around H.264 a few years ago, things have changed. Different codecs are often used in production, for delivery to web platforms and for delivery from streaming services, and the choices can vary quite a bit.

Starting with an easy one, can I tell what codec a file uses from its file extension?

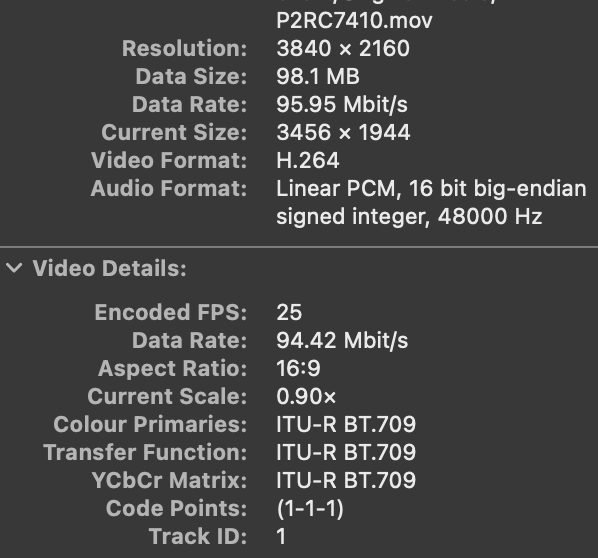

No. You can only tell the type of container, not the codec used to compress the video information inside. Containers (including those with the extensions MOV, MP4, MXF and MKV) describe how the information is stored and what kinds of information can be included, but they aren’t an exciting part of the codec story. Most containers don’t cause any problems, but they do differ: you can’t put a timecode track in an MP4 like you can in a MOV. (Incidentally, MP4 is actually an ISO standard based on MOV.)
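Because the extension only names the container, the reliable way to find the codec is to ask a tool that reads the streams inside. Here is a minimal Python sketch, assuming FFmpeg’s `ffprobe` is installed and on your PATH; the helper names (`ffprobe_command`, `parse_streams`, `describe`) are hypothetical, not part of any library.

```python
from __future__ import annotations

import json
import subprocess
from pathlib import Path


def ffprobe_command(path: str) -> list[str]:
    """Build an ffprobe call that lists each stream's type and codec as JSON."""
    return [
        "ffprobe", "-v", "error",
        "-show_entries", "stream=index,codec_type,codec_name",
        "-of", "json",
        path,
    ]


def parse_streams(ffprobe_json: str) -> list[tuple[str, str]]:
    """Turn ffprobe's JSON output into (codec_type, codec_name) pairs."""
    data = json.loads(ffprobe_json)
    return [
        (stream.get("codec_type", "unknown"), stream.get("codec_name", "unknown"))
        for stream in data.get("streams", [])
    ]


def describe(path: str) -> str:
    # The extension only tells us the container...
    container = Path(path).suffix.lstrip(".").upper()
    # ...so ask ffprobe which codecs are actually inside.
    result = subprocess.run(ffprobe_command(path), capture_output=True, text=True, check=True)
    streams = ", ".join(f"{kind}: {codec}" for kind, codec in parse_streams(result.stdout))
    return f"{container} container with {streams}"
```

Running `describe()` on two different MP4 files can report different video codecs (for example `h264` for one and `hevc` for the other), which is exactly why the extension alone isn’t enough.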

What are a few common codecs?

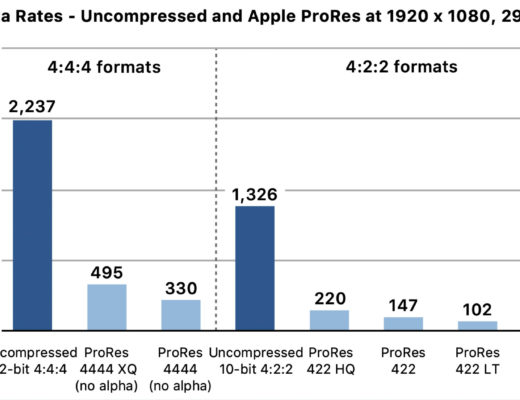

H.264 (or AVC), HEVC, ProRes and DNxHD are a few you may have encountered. AV1 and VP9 are heavily used in distribution, but they’re not yet widely used in production, for reasons that will become clear later. Some of these codecs are usually heavily compressed (H.264, HEVC) and some less so (ProRes, DNxHD, DNxHR). It’s also important to realize that most heavily compressed codecs use variable data rates (to hit a specific size target), while less compressed intermediate codecs like ProRes have (more or less) fixed data rates at a given frame size and frame rate.
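Hitting a size target with a variable-data-rate codec comes down to simple arithmetic: divide the size you want by the running time to get the average rate the encoder should aim for. A minimal Python sketch, assuming decimal units (1 MB = 8,000 kbit), a 128 kbit/s audio track, and no allowance for container overhead; `target_video_kbps` is a hypothetical helper name.

```python
def target_video_kbps(target_mb: float, duration_s: float, audio_kbps: float = 128.0) -> float:
    """Average video data rate (kbit/s) that fits a clip into target_mb megabytes.

    Assumes decimal units (1 MB = 8,000 kbit) and ignores container overhead,
    so real files will come out slightly larger than the target.
    """
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    total_kbps = target_mb * 8_000 / duration_s   # whole-file budget per second
    video_kbps = total_kbps - audio_kbps          # what's left for the picture
    if video_kbps <= 0:
        raise ValueError("target size is too small for this duration and audio rate")
    return video_kbps


# A 10-minute (600 s) video that must fit in 500 MB:
rate = target_video_kbps(500, 600)
print(f"Aim for about {rate:.0f} kbit/s of video")
```

That works out to roughly 6.5 Mbit/s of video. An intermediate codec like ProRes doesn’t work this way: its data rate is set by the flavor, frame size and frame rate, so the file size simply follows from the running time.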

Is data rate important?

Hugely: data rate is the main reason a file is small or large. In general, a newer compressed codec will be more efficient, delivering higher image quality than an older codec at the same data rate. Put another way, older compressed codecs need higher data rates to achieve the same image quality as newer ones. Also remember that different encoding apps can achieve different results, even with the same codec and data rate.

I’m in production. Why should I prefer one codec over another?

Different kinds of shoots have different needs. If you’re an event shooter, you probably need moderate file sizes at high quality (H.264 or HEVC). A feature cinematographer needs pristine quality at any file size (ProRes 422 HQ or better, a camera-native format, or a RAW format like BRAW, ARRIRAW or ProRes RAW). A game streamer needs screen recordings in high resolution, with high frame rates but moderate data rates. A social media content creator probably just wants quick transfers. One size does not fit all.

But no matter how you record, remember to keep post-production happy. Shooting in a codec that needs conversion before playback means extra work in post.

So what about post-production?

If you’re an editor, you want to work with files in a codec that’s high quality, and easy to play back, so you might be able to use camera original files (H.264, HEVC, or ProRes, depending on the shoot) if your computer can handle them. But if your production shot on a codec that looks great but is hard to work with, you might convert to a high quality intermediate (ProRes, DNxHD, DNxHR) or a low quality offline proxy format (ProRes Proxy or H.264) depending on how much storage you want to use.
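If you want to script that conversion rather than use a GUI app, FFmpeg can write ProRes through its `prores_ks` encoder. A minimal Python sketch, assuming FFmpeg is installed; `prores_transcode_cmd` and `transcode` are hypothetical helpers, and the 10-bit 4:2:2 pixel format and uncompressed PCM audio are choices, not requirements. Note that FFmpeg’s ProRes output comes from a third-party implementation, not Apple’s own encoder.

```python
from __future__ import annotations

import subprocess

# Profile names accepted by FFmpeg's prores_ks encoder for the 4:2:2 family.
PRORES_422_PROFILES = ("proxy", "lt", "standard", "hq")


def prores_transcode_cmd(src: str, dst: str, profile: str = "proxy",
                         height: int | None = None) -> list[str]:
    """Build an FFmpeg command that converts src to a ProRes 422 .mov at dst."""
    if profile not in PRORES_422_PROFILES:
        raise ValueError(f"unsupported ProRes profile: {profile!r}")
    cmd = ["ffmpeg", "-i", src]
    if height is not None:
        # Optional downscale for lighter proxies; -2 keeps the width even.
        cmd += ["-vf", f"scale=-2:{height}"]
    cmd += [
        "-c:v", "prores_ks", "-profile:v", profile,
        "-pix_fmt", "yuv422p10le",   # 10-bit 4:2:2
        "-c:a", "pcm_s16le",         # uncompressed 16-bit audio
        dst,
    ]
    return cmd


def transcode(src: str, dst: str, **options) -> None:
    subprocess.run(prores_transcode_cmd(src, dst, **options), check=True)
```

For example, `transcode("A001_C003.mp4", "A001_C003_proxy.mov", profile="proxy", height=540)` would make a light offline proxy, while `profile="hq"` with no `height` would make a high-quality intermediate (file names are hypothetical).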

If you’re in VFX or color, you probably want the absolute highest quality files regardless of file size, so pick the nicest flavor of the intermediate codec your provider prefers. (In fact, many VFX pipelines use still image sequences rather than video files.)

What about video consumers?

Most consumers don’t care about codecs directly, but they do care about their effects. Smooth playback and battery life on mobile devices are probably the biggest issues, with quality a distant third for most people.

If a file can be made smaller, it’s less likely to be interrupted by a slow network, and if the chosen codec is hardware-accelerated on most devices (hello, H.264), it will save battery life. Providers always strike a balance between quality and file size, but may have to choose a codec based on what their customers’ devices can easily play back.

If you want the best possible image quality, purchase your content explicitly. Paid streaming is higher quality than mainstream “free with your plan” streaming, and Blu-ray is (usually) better again.

So, streaming providers always choose a codec that’s easy for consumers to play back?

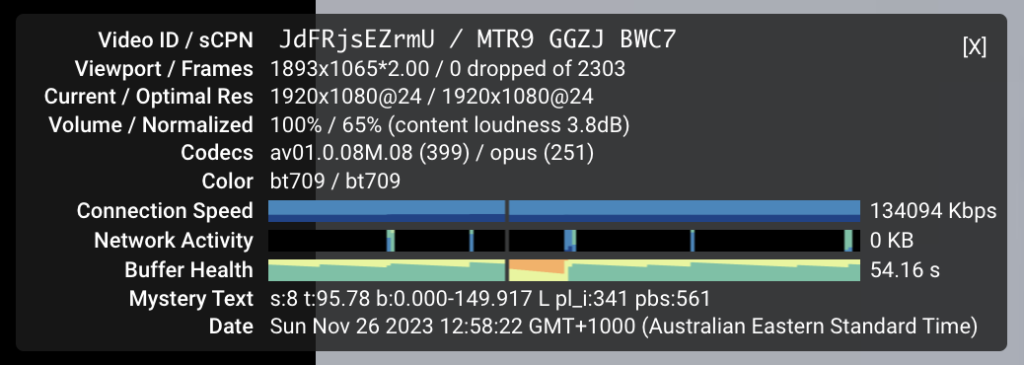

Not necessarily. YouTube largely sends video in the VP9 codec to desktop computers, and though it looks good, VP9 isn’t as widely hardware-accelerated as H.264 (and, to a lesser extent, HEVC). This increases CPU usage and, on a computer that isn’t plugged in, reduces battery life. However, because iOS and iPadOS devices are far more likely to be unplugged, YouTube still sends them video in the hardware-accelerated H.264 codec.

Why not stick with H.264 across the board?

Simply put, H.264 doesn’t look as good as more modern codecs at the same data rate. Also, H.264 hardware decoders mostly can’t cope with 10-bit data (needed for HDR) or with resolutions above 4K. To move forward, the same standards process that produced H.264 created HEVC (also known as H.265) as its successor, offering the same quality at a lower data rate. That process has since produced H.266, or VVC, which promises even better compression. But YouTube doesn’t use either HEVC or VVC.

So why doesn’t YouTube use HEVC?

Some codecs, including HEVC, are patent-encumbered, meaning that the algorithms and methods used to compress video aren’t all free to use. In general, large companies that use H.264 and HEVC choose to join patent pools, paying fees, sharing relevant patents with one another and managing the patent licensing process. There are multiple HEVC patent pools, and HEVC is more expensive to support than H.264 was.

Also, YouTube is a massive target. If YouTube were to use HEVC for the colossal amount of footage they distribute, a previously unknown patent holder outside the existing pools could perhaps make a claim over some obscure part of the encoding process, leaving YouTube on the hook for an enormous amount of money.

To avoid these issues, and keep data rates similar while increasing resolution, they moved to the open VP9 codec for resolutions larger than 1080p. Another open codec, AV1, is available for some videos and some resolutions, though it’s more demanding on the playback device.

So to avoid patent issues and high costs, everyone else moved to VP9 too?

Nope. VP9 is not hardware-accelerated on most devices, and some see it as a Google invention. There are questions about how patent-free it truly is, too. Instead, some companies (like Apple) use HEVC, pay the fees, and rely on the patent pools to protect them. Apple has even adopted MV-HEVC, a multiview extension of HEVC, to efficiently store the separate left- and right-eye streams used by Spatial Video.

Others (like Netflix) have skipped VP9 and moved to AV1, a newer open, royalty-free codec designed to avoid patent issues and minimize costs while compressing data even further. The Alliance for Open Media, which created AV1, has many high-profile members, including Google, Netflix, Microsoft, Intel and (though it joined late) Apple.

Hang on, royalties? Do I have to pay per-video with some codecs?

Codecs like H.264 are only truly free to use when the videos are delivered free to consumers. In theory, if you’re delivering paid training courses or other video, then your delivery platform should probably be paying a royalty on your behalf — but I’m not a lawyer and this is certainly not legal advice.

Royalties are at least part of the reason why so many video games use the ancient-but-royalty-free Bink Video codec instead of something more modern. Standards are great, but they won’t always be widely adopted if they’re not free.

So should we all be moving to AV1?

Probably not yet, because working with complex codecs purely in software can be slow and intensive. Hardware-accelerated AV1 decoding only just arrived with the latest M3 chips, and there’s no accelerated AV1 encoding on Macs yet. On PCs, DaVinci Resolve added AMD-based hardware encoding of AV1 only in the last year or so, but no other major NLE has followed suit, and playback is still not universal: Windows, for example, needs a plug-in for support.

Let the streaming services worry about bleeding-edge codecs for now, and stay with things that are easy to work with. Use a higher data rate to keep quality high, or switch to an encoding app that does a better job with existing codecs. Eventually it’ll become much easier to make and play back AV1, but don’t worry about it until it turns up on a spec sheet with your name on it.

What programs should I use for converting between one codec and another?

Compressor and Adobe Media Encoder are popular and widely used for production tasks, like making proxies or converting to intermediate codecs. Note that some NLEs can do this for you too.

What codecs should I be using for editing?

Whatever your system plays back efficiently, which means whatever your system has hardware acceleration support for. On a modern Mac, that’s H.264, HEVC or ProRes, and on a PC, your CPU and/or your GPU should accelerate H.264 and HEVC at least, but check actual footage against your workflow — not all files are equal. AV1 decode acceleration just turned up on the M3 Macs, but it’s not really a codec intended for editing.
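One way to check actual footage is to time how quickly a clip decodes with hardware acceleration requested and with it disabled. A rough Python sketch, assuming FFmpeg and ffprobe are installed; the helper names are hypothetical, and this measures FFmpeg’s decoding rather than your NLE’s, so treat the result as a first hint, not a verdict.

```python
from __future__ import annotations

import subprocess
import time


def decode_cmd(path: str, hwaccel: str = "auto") -> list[str]:
    # Decode every frame and throw the result away ("-f null -"),
    # so the timing reflects decoding only.
    return ["ffmpeg", "-hide_banner", "-v", "error", "-hwaccel", hwaccel,
            "-i", path, "-f", "null", "-"]


def duration_cmd(path: str) -> list[str]:
    # Ask ffprobe for the clip's running time in seconds, as a bare number.
    return ["ffprobe", "-v", "error", "-show_entries", "format=duration",
            "-of", "default=noprint_wrappers=1:nokey=1", path]


def realtime_factor(clip_seconds: float, elapsed_seconds: float) -> float:
    """How many times faster than real time the clip decoded (>1 is faster)."""
    return clip_seconds / elapsed_seconds


def benchmark_decode(path: str, hwaccel: str = "auto") -> float:
    probe = subprocess.run(duration_cmd(path), capture_output=True, text=True, check=True)
    clip_seconds = float(probe.stdout.strip())
    start = time.perf_counter()
    subprocess.run(decode_cmd(path, hwaccel), check=True)
    return realtime_factor(clip_seconds, time.perf_counter() - start)
```

Comparing `benchmark_decode(path, "auto")` with `benchmark_decode(path, "none")` on the same clip gives a quick sense of whether hardware decoding is helping; since `auto` may quietly fall back to software, two similar numbers suggest it isn’t.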

What codecs should I be using for collaboration?

Start by taking the list of codecs everyone in the pipeline can work with, then figure out what needs to happen to that footage. If it’s copied once, processed in an NLE, then output, camera-native files might be fine, assuming they’re hardware accelerated in that NLE.
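That first step is just a set intersection. A toy Python sketch; the pipeline stages and the codecs listed for each are invented for illustration, not claims about any particular software.

```python
from __future__ import annotations

# Hypothetical: the codecs each stage of a pipeline can handle smoothly.
pipeline = {
    "editor": {"h264", "hevc", "prores"},
    "colorist": {"prores", "dnxhr", "h264"},
    "client review": {"h264", "hevc"},
}


def common_codecs(capabilities: dict[str, set[str]]) -> set[str]:
    """Codecs every stage supports; empty if there is no overlap."""
    stages = list(capabilities.values())
    if not stages:
        return set()
    return set.intersection(*stages)


print(sorted(common_codecs(pipeline)))
```

In this made-up example only H.264 is shared by everyone. If the overlap is empty, or holds only a codec that’s hard to edit, that’s the signal to plan a conversion step.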

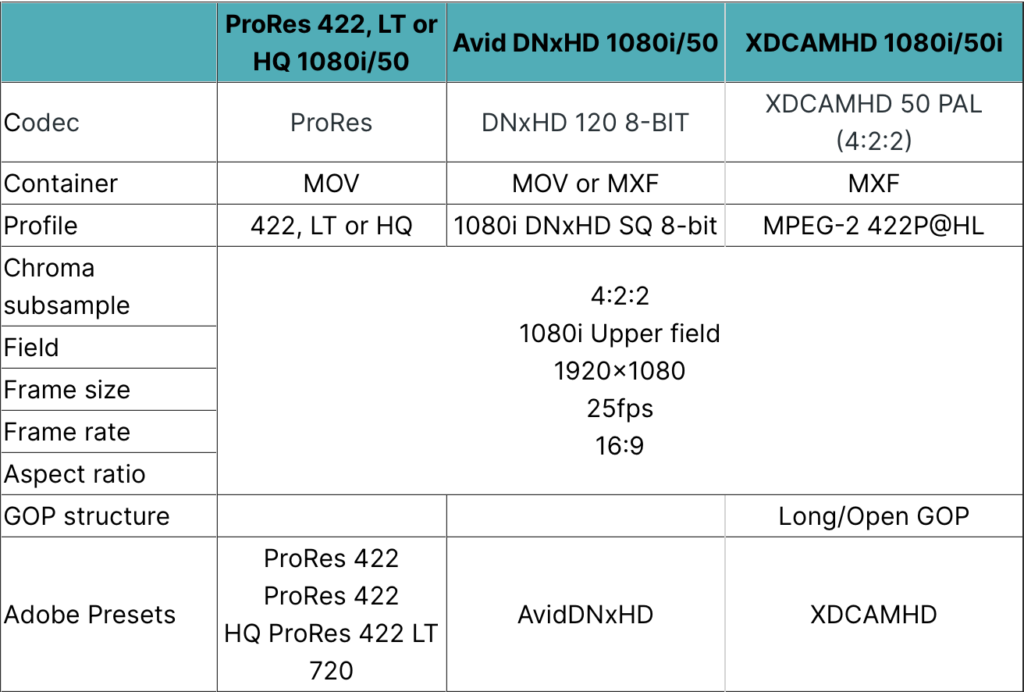

But if you need to send files to an unknown third-party on a random computer, or if some camera-native files are not easy to work with, converting at least those files into some kind of intermediate or mezzanine codec like ProRes (or DNxHD/DNxHR for Avid) will help keep workflows smooth. If you have space, use ProRes/DNxHD/DNxHR to replace camera-original files, or if there’s too much footage to allow that, use a low-quality proxy codec (potentially even H.264) as a smaller stand-in during editing. If you go with proxies, switch back to the original files for final output.

Test your workflows, and don’t assume that wisdom from five or ten years ago still applies. Converting everything to ProRes will work smoothly, but the file sizes are too much for some productions, and it might not be necessary.

I need to share files with transparency. Should I still use the Animation codec?

Nope, that won’t work in the Finder, Final Cut Pro, a random client PC or any mobile device. Instead, use ProRes 4444, which supports transparency and produces smaller files that play back faster on modern Macs. It will also work wherever ProRes playback is supported (ProRes decoding is publicly documented, so it should theoretically remain free forever). HEVC can also support transparency, but if you’re in production, you’ll probably want the higher quality of ProRes 4444 instead.
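For a scripted export, FFmpeg’s `prores_ks` encoder can write ProRes 4444 with an alpha channel when given a pixel format that includes alpha. A minimal Python sketch, assuming FFmpeg is installed and the source is a numbered PNG sequence with transparency; the helper names, frame pattern and frame rate are hypothetical.

```python
from __future__ import annotations

import subprocess


def prores4444_alpha_cmd(pattern: str, dst: str, fps: int = 24) -> list[str]:
    """Build an FFmpeg command that turns a PNG sequence into ProRes 4444 with alpha."""
    return [
        "ffmpeg",
        "-framerate", str(fps),        # image sequences have no inherent frame rate
        "-i", pattern,                 # e.g. "lower_third_%04d.png"
        "-c:v", "prores_ks",
        "-profile:v", "4444",
        "-pix_fmt", "yuva444p10le",    # the "a" carries the alpha channel
        dst,                           # a .mov file
    ]


def export_with_alpha(pattern: str, dst: str, fps: int = 24) -> None:
    subprocess.run(prores4444_alpha_cmd(pattern, dst, fps), check=True)
```

Setting the alpha-capable pixel format explicitly matters: without it, FFmpeg may pick a format with no alpha and the transparency is lost.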

OK, what about archiving?

Codec longevity is a concern for archivists, because many older codecs no longer function in the way they once did. You’ll usually be able to play those clips back somehow, but you might have to track down an older system or a specialist program to access them. For example, Apple deprecated their oldest codecs several years ago, meaning that files encoded with the once-popular Cinepak and Animation codecs don’t work on modern Apple software.

ProRes is a safe archiving choice for high quality media: ProRes decoding is documented by SMPTE (as RDD 36), so it should remain readable in the future. Creating additional copies in common, industry-supported standards like H.264 and HEVC, using a high data rate, is also a sensible idea.

What codecs should I use for deliveries to web platforms and social media?

H.264 or HEVC for SDR content, HEVC for HDR content, or ProRes for everything if you have the bandwidth. Modern Macs have hardware encoders to accelerate all those deliverables, and PCs with decent GPUs can accelerate exports to most modern compressed formats. Whichever codec you choose, keep the data rate high.

Every web platform will recompress whatever you send them to a much smaller file, so you just need to send them a file that looks great to you, in any codec they support. Platforms usually recommend specific codecs or data rates, but they’ll actually take just about anything. Sometimes official guidelines are far lower than I’d be comfortable delivering, though. For example, if I’m delivering a 24/25/30 fps 1080p SDR H.264 to YouTube, I’d want to use a data rate of 20Mbps, but YouTube asks for just 8Mbps, and will show users around 2.5Mbps. For comparison, a ProRes 422 file requires around 120-140Mbps.
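To see what those data rates mean for storage and upload times, multiply rate by running time and divide by 8 to turn bits into bytes. A quick Python sketch, assuming decimal units and a 10-minute video; the 130 Mbps ProRes figure is simply the midpoint of the range above, and `size_gb` is a hypothetical helper.

```python
def size_gb(mbps: float, minutes: float) -> float:
    """Approximate file size in decimal gigabytes for an average data rate."""
    megabits = mbps * minutes * 60
    return megabits / 8 / 1000   # bits -> bytes, then MB -> GB


rates = {
    "My YouTube upload (H.264)": 20,
    "YouTube's recommendation": 8,
    "What YouTube shows viewers": 2.5,
    "ProRes 422 (midpoint)": 130,
}

for label, mbps in rates.items():
    print(f"{label:28} {mbps:>6} Mbps -> {size_gb(mbps, 10):5.2f} GB per 10 min")
```

A 10-minute piece comes out around 1.5 GB at 20 Mbps, versus almost 10 GB as ProRes 422, which is why ProRes uploads take so much longer.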

You can send them ProRes if you want, and while some people do see a quality boost from doing so, these files can take a lot longer to upload.

What about professional deliverables?

Standards here will focus on quality, not file size, and can involve older codecs if the specs haven’t changed in a while. The process won’t necessarily be convenient or quick — set some time aside if you need to make a DCP or IMF, for example. It’s entirely possible you won’t be using any of the codecs we’ve mentioned; DCPs and Netflix IMFs both use JPEG2000 (a still image format) as the basis of their compression.

For specific production deliverables, read the spec sheet carefully, then look to an app like Compressor or Adobe Media Encoder to set the precise combination of codec, container and data rate required.

What if I need to make really small files?

Normally, the best way to share files with clients is to post to Vimeo, frame.io, Dropbox Replay or another video review site, then send them a link. But if you do need to make very small compressed files for direct local playback, look at HandBrake (free, easy) or FFmpeg (free, complex) and use H.264 or HEVC. Encoding to newer codecs is usually slow and produces files that are less compatible.
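If you go the FFmpeg route, a typical small-file recipe uses quality-based (CRF) encoding, where lower numbers mean higher quality and bigger files. A minimal Python sketch, assuming FFmpeg was built with the libx264 and libx265 encoders; the helper names and the "slow" preset are choices, not requirements, and the CRF values shown (23 for libx264, 28 for libx265) are those encoders’ own defaults, a starting point to adjust by eye.

```python
from __future__ import annotations

import subprocess

# codec -> (FFmpeg encoder name, that encoder's default CRF)
ENCODERS = {"h264": ("libx264", 23), "hevc": ("libx265", 28)}


def small_file_cmd(src: str, dst: str, codec: str = "h264",
                   crf: int | None = None) -> list[str]:
    """Build an FFmpeg command for a compact, widely playable MP4."""
    encoder, default_crf = ENCODERS[codec]
    cmd = [
        "ffmpeg", "-i", src,
        "-c:v", encoder, "-preset", "slow",
        "-crf", str(default_crf if crf is None else crf),
        "-pix_fmt", "yuv420p",          # 8-bit 4:2:0 plays back almost everywhere
    ]
    if codec == "hevc":
        cmd += ["-tag:v", "hvc1"]       # helps Apple players recognise HEVC in MP4
    cmd += ["-c:a", "aac", "-b:a", "128k",
            "-movflags", "+faststart",  # put the index up front for quicker start
            dst]
    return cmd


def make_small_file(src: str, dst: str, **options) -> None:
    subprocess.run(small_file_cmd(src, dst, **options), check=True)
```

For example, `make_small_file("final.mov", "final_small.mp4", codec="hevc", crf=26)` trades a little more size for quality compared with the HEVC default (file names are hypothetical).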

Can you give me a one-line summary?

No — codecs are complex. But:

- H.264 is still a decent choice for lots of video today.

- HEVC is fine for many purposes too.

- Intermediate codecs like ProRes still rule in production.

- Widespread hardware encode and decode is critical for widespread codec adoption.

- Eventually, many of us will probably move to a better open source codec like AV1 for many deliverables, but today is not that day.

Filmtools
