Today, here in Brisbane, Australia, I was one of the first regular humans to pick up an iPhone 16 Pro Max, so here’s a detailed early look at how the latest iPhone could impact the working lives of pro video people.

Not every job will end up being shot on a dedicated camera, and as our phones improve, it’s worth understanding how capable they can be. Today, you could use a phone for family snaps, for the occasional b-roll shot, or even as the main camera for a full interview, but if it’s going to work, you need to know its limits. That’s why I’ve been out shooting this morning, and why I have some sample footage and fresh opinions for you.

A quick recap of the new features

We covered the new features of the 16 Pro and Pro Max in an article shortly after launch, so I won’t spend too much time on them here. There’s a new Camera Control button that can be tapped to launch the Camera app or swiped to change camera settings, and it works with third-party apps too.

It’s placed to work in both landscape and portrait, and though it works well today, full support hasn’t arrived yet. In the future, you’ll be able to tap-and-hold this button to activate Apple Intelligence, telling you what you’re looking at, or adding a date from a poster you’re snapping to your calendar.

As an aside, while I am running the latest beta and do have access to Apple Intelligence, I can’t really review the experience — it’s still in beta. However, for me it’s been working very well on my 15 Pro Max over the last few weeks, and when its features become more widely available over the next few months (it’s arriving in stages) I think people are going to really like it.

Back to pixels, the ultra-wide 0.5x lens has gained a 48MP sensor, increasing the amount of detail available at that focal length to match the main 1x sensor. In theory, this could give enough pixels to enable Spatial Video in 4K, but Apple has only enabled Spatial Photos officially, and you’ll still need to use a third-party app to capture Spatial Video in 4K. Unfortunately, 4K Spatial Video quality hasn’t significantly changed from the 15 Pro Max, though the Camera app interface now combines spatial photos and videos into a new Spatial mode.

In all video modes, you can now pause and restart video recording. While I don’t think I’ll use this much myself, I’m sure some will find this handy. Another surprise addition is Spatial Audio Capture. This uses four microphones to create an immersive soundfield, then allows you to tweak the positioning of audio in the shot to your taste. More on all these Spatial improvements below.

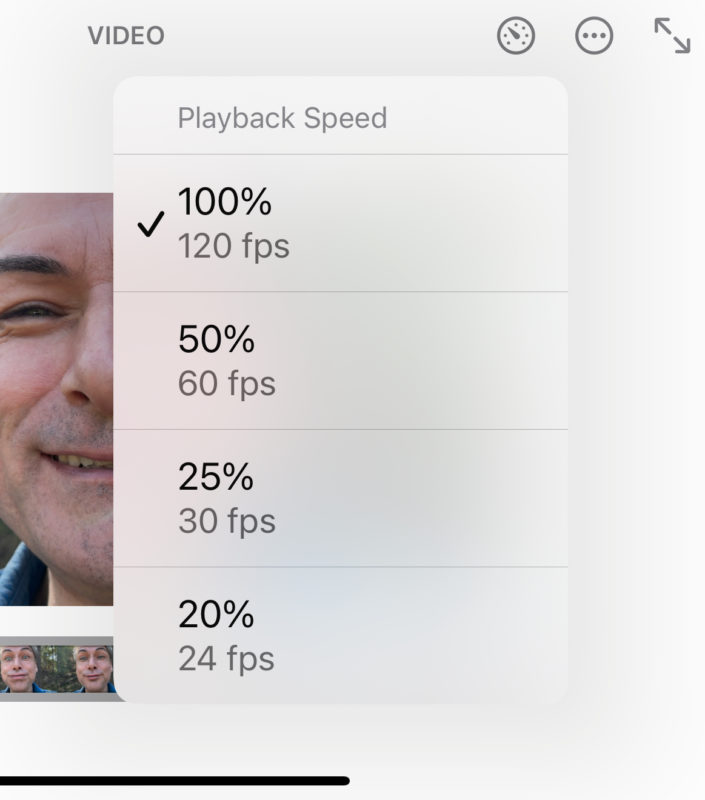

Video processing speed improvements mean that 120fps capture is now possible in 4K — though only on the main 1x lens — and playback speed can be set to 24, 30, 60, or 120fps. As usual, you’ll see the true frame rate when you bring it into an NLE, and of course you can still set the frame rate as you wish there, but it’s terrific to have the equivalent of Adobe’s Interpret Footage command right there in the field, ready for client review.
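As a rough guide to what those playback settings mean, here is a minimal sketch of the arithmetic, assuming the clip was captured at 120fps and that playback speed simply reinterprets the same frames at a lower rate. The function name is my own and purely illustrative.

```python
def slowdown_factor(capture_fps: float, playback_fps: float) -> float:
    """How many times longer the action lasts on screen than in real life."""
    if capture_fps <= 0 or playback_fps <= 0:
        raise ValueError("frame rates must be positive")
    return capture_fps / playback_fps


# The four playback speeds mentioned above, for a 120fps capture.
for playback in (24, 30, 60, 120):
    factor = slowdown_factor(120, playback)
    print(f"{playback}fps playback: 1s of action lasts {factor:g}s on screen")
```

So a 24fps playback setting gives 5x slow motion, 30fps gives 4x, 60fps gives 2x, and 120fps plays in real time.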

Lastly, benchmarks show the iPhone 16 Pro Max’s A18 Pro is about 15-20% faster than the A17 Pro, and that it’s faster than the M1 on single-core and multi-core benchmarks. While most people don’t really notice processing power in everyday tasks, this is (again) the fastest mobile CPU available, and if your current phone is two or more years old, it’s a solid bump. Both the regular and pro iPhone models received A18-family chips this time around to provide processing power and extra RAM to drive Apple Intelligence.

Oh, and it can charge much faster than older iPhones too, both wired and wirelessly. But that’s enough about the phone; we’re here to look at the images it can capture.

Log Video — still great

If your last experience with iPhone video was before the 15 Pro and Pro Max enabled Log recording, you’ve been missing out. When you shoot in Log, you’re not just getting a different gamma curve, but you’re deactivating a lot of the sharpening, tone mapping and other processing that’s normally applied to iPhone video. While you can only use Log with ProRes HQ if you use Apple’s Camera app, using a third-party app like Blackmagic Camera or Kino allows you to use codecs which don’t require as much space, like HEVC. You can bring the Log clips back into a “normal” color space automatically in Final Cut Pro, or in any other NLE with this free LUT. As usual with Log footage, add your corrections before the Log transform for maximum control.

Of course, a dedicated camera definitely brings flexibility that a phone doesn’t, and allows you to shoot in a wider variety of situations. But the phone’s getting really close. How about the newly upgraded ultra-wide angle? Here’s a matched, cropped still, taken from Log video, comparing the 16 Pro Max (on top) with the 15 Pro Max (below):

Has the iPhone 16 Pro Max changed the fundamental nature of Log over the 15 Pro Max? No. It’s very much the same, but the ultra-wide angle has improved, bringing good-looking wide-angle video to a wider audience. A bigger change for video is slow motion on the 1x lens — and that’s true for Log recording too.

Slo-mo video — much improved

Slow motion is one area where the iPhone was due for improvement. Though the iPhone has supported 120 or 240fps at 1080p for years now, quality definitely suffered compared to a dedicated action camera like the Insta360 Ace Pro, or the Lumix GH6.

Here’s the iPhone 16 Pro Max’s 120fps 4K, cropped next to the iPhone 15 Pro Max shooting 120fps HD:

In terms of dynamic range, the 15 Pro Max can’t shoot slow motion in HDR, while all the other cameras can. The 16 Pro Max can shoot Dolby Vision HDR at 120fps, but not Log — so you will see more sharpening in this mode. Still, the 16 Pro Max is the only device here that can record to ProRes in 120fps to an external SSD — required, as this mode can’t be recorded internally. Be ready for huge files if you really do need 4K ProRes Log at 120fps.

Increasing frame rate usually comes with compromises in terms of image quality. Some of these issues are down to light limitations (you can’t beat physics) but most high-frame-rate issues are down to how fast a sensor can be read out. This year’s focus on sensor speed means that 120fps footage looks much more like normal speed footage in terms of image quality. If you need to capture casual slow motion footage and an action camera doesn’t suit, this is a great option.

Spatial improvements

While I was hoping that the newly upgraded ultra-wide sensor would enable 4K Spatial Video recording, that hasn’t happened. Some developers of apps that do support 4K Spatial Video recording have updated for the 16 Pro models, but there seems to be very little difference in spatial video quality.

However, we do now have official Spatial Photo support, which is very welcome. Spatial now has its own tab in the Camera app (not just a toggle in the Video tab) from which you can shoot photos or videos, and you can view them on an Apple Vision Pro.

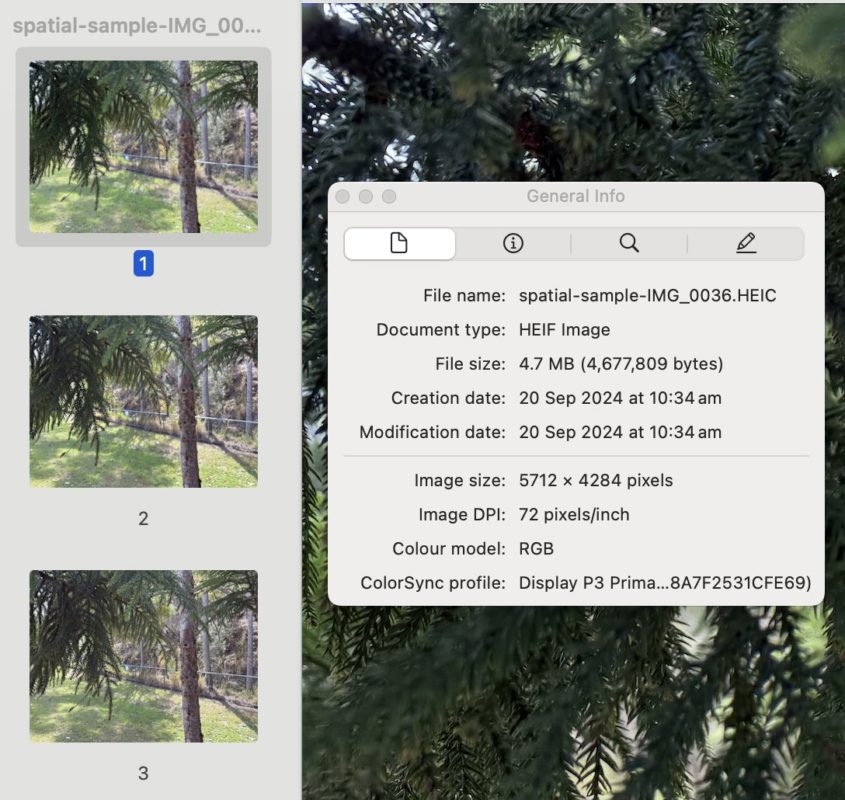

As an iPhone crops in on the ultra-wide 0.5x lens to capture an angle that matches the 1x lens, resolution can be a concern. The left and right angles in a spatial photo are 2688×2016 each, which look good, but are a step down (about 5.4MP) from a regular 12MP shot. However, a higher-resolution (5712×4284, 24MP) 2D image is also captured alongside the other two angles, which is great news, because you’re not compromising 2D when you capture in 3D.
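The pixel counts above are easy to sanity-check. This small sketch just multiplies out the dimensions quoted in this article; the helper name is hypothetical.

```python
def megapixels(width: int, height: int) -> float:
    """Pixel count in millions (1MP = 1,000,000 pixels)."""
    return width * height / 1_000_000


spatial_eye = megapixels(2688, 2016)   # each of the left and right views
companion_2d = megapixels(5712, 4284)  # the 2D image saved alongside them

print(f"Each spatial view: {spatial_eye:.1f}MP")   # 5.4MP
print(f"Companion 2D image: {companion_2d:.1f}MP") # 24.5MP
print(f"The 2D image has {companion_2d / spatial_eye:.1f}x the pixels of one view")
```

In other words, each eye gets a little under half the pixels of a regular 12MP shot, while the 2D image keeps the full default resolution.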

One feature of visionOS 2 is that it can create 3D Spatial photos from 2D photos, but this algorithm won’t get everything right; the real 3D image is far more convincing. (Here’s a sample, if you have a compatible device.) Interestingly, when you view these images on the Apple Vision Pro, in visionOS 2, it’s not possible to resize a spatial photo except by using the immersive view. However, you can disable the spatial view temporarily, resize the image, and then re-enable spatial display, and it’s whatever size you want.

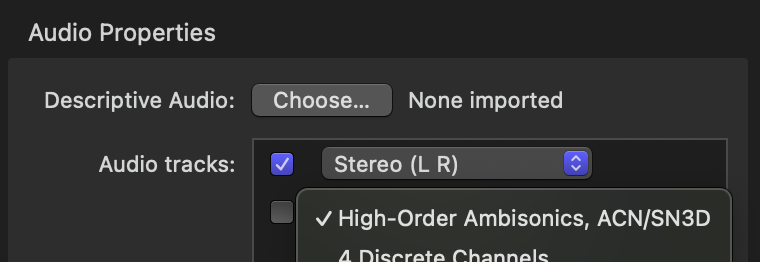

But there’s more Spatial tech, in audio form! The added microphones do allow spatial audio recording, and if you inspect the clip in Compressor, you’ll discover a new ambisonic track alongside the Stereo track, disabled by default. This is a welcome step towards being able to use surround sound in everyday videos, but as with HDR, it’s taking far too long to adopt this tech more widely, and it’s largely a distribution problem.

Today, it’s easy to enjoy both HDR and Atmos audio served to us by paid streaming providers, or on Apple Music, but we can’t deliver it through YouTube for smaller clients. While it’s possible to work in Surround formats in an NLE, Atmos is harder, and distribution is harder still. Since YouTube doesn’t even support 5.1 Surround playback on most iPhones, Androids, iPads, Macs or PCs — only on TVs and devices connected to them — it’s still tricky to tap into the Spatial Audio support now in almost all Apple devices and many Android devices.

Fingers crossed that Vimeo’s upcoming Spatial Video support includes Spatial Audio support too; we need some competition in this space.

Still image improvements

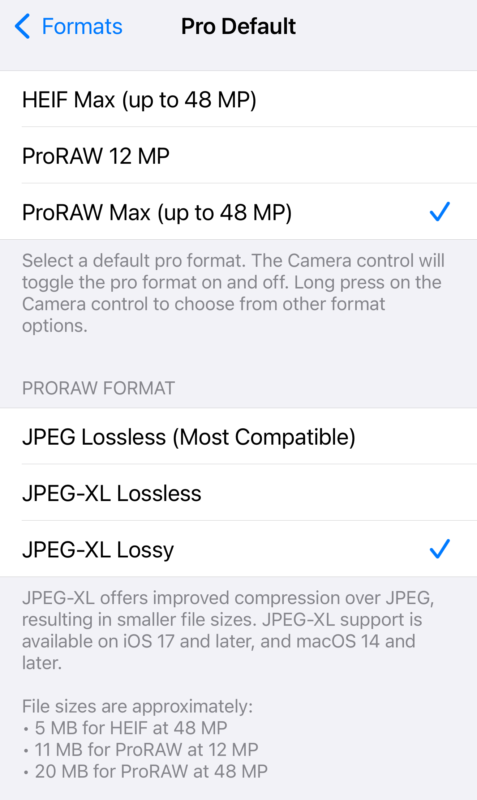

As video professionals are often tempted into still image shooting as well, photos are worth a quick look. The 48MP sensor on the 0.5x lens gives these stills a big quality boost, and since this lens also supports very close focusing, it enables high-quality macro images too. By default, the 48MP sensor produces 24MP images, but if you prefer, you can record the full 48MP as a ProRAW Max or a HEIF Max image instead. This year, ProRAW has some subtle changes, and you can use JPEG-XL Lossy compression if you’re willing to sacrifice a small amount of quality to save a lot of space. A 48MP ProRAW shot on the 15 Pro Max takes approximately 75MB, but JPEG-XL Lossy ProRAW on the 16 Pro Max takes just 20MB.

Note that this isn’t regular JPEG XL support, it’s just the type of compression used internally within ProRAW. As discussed in this article about WebP, there are good reasons to look beyond traditional formats if they can slot into our workflows, but for compatibility reasons, we may still need to ultimately share in older formats too. Today, JPEG XL is still not supported by Chrome (nor by many other apps) so we’re not there yet, but Apple’s (partial) support is certainly welcome.
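To put the ProRAW file sizes mentioned above in perspective, here is a back-of-envelope sketch. It uses the approximate 75MB and 20MB figures from this article, and assumes a hypothetical 100GB of free storage (counted as 100,000MB); real file sizes vary from shot to shot.

```python
OLD_PRORAW_MB = 75  # approx. 48MP ProRAW on the 15 Pro Max (figure from this article)
NEW_PRORAW_MB = 20  # approx. JPEG-XL Lossy ProRAW on the 16 Pro Max (figure from this article)
FREE_SPACE_MB = 100_000  # assumption: 100GB free, using 1GB = 1,000MB


def percent_saved(old_mb: float, new_mb: float) -> float:
    """Percentage reduction in file size going from old_mb to new_mb."""
    return (1 - new_mb / old_mb) * 100


def shots_that_fit(free_mb: int, shot_mb: int) -> int:
    """Whole number of shots that fit in the given free space."""
    return free_mb // shot_mb


print(f"Space saved per shot: {percent_saved(OLD_PRORAW_MB, NEW_PRORAW_MB):.0f}%")
print(f"Shots in 100GB at 75MB each: {shots_that_fit(FREE_SPACE_MB, OLD_PRORAW_MB)}")
print(f"Shots in 100GB at 20MB each: {shots_that_fit(FREE_SPACE_MB, NEW_PRORAW_MB)}")
```

On those numbers, each shot is roughly 73% smaller, so the same free space holds about 5,000 raw shots instead of about 1,300.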

Besides low-level changes, Apple have made their Filters preset system far more flexible, and given it a new name: Photo Styles. Underneath each image, while adjusting it, you’ll now see a control pad that you can drag to affect the tone and color of the image — much more flexible than a single “strength” slider. If you swipe to pick a preset photo style, you will also see a Palette slider underneath the pad, controlling the strength of the effect in addition to tone and color. Different parts of the images (such as faces) will receive unique treatment, so it’s more complex than it first appears.

These choices can be made while you take photos (in the Camera app) or afterwards (in the Photos app), and all choices made before shooting can be changed after shooting.

Photo Styles can produce extreme or subtle results — with two or three ways to tweak, depending on the style chosen

If you shoot in raw and plan to process your images on a desktop, keep working that way. But if you’re just casually snapping, and you don’t need the flexibility of raw on every shot, Photo Styles will help you get a lot closer to the image you wanted, without having to compromise nearly as much. So, if you’re unhappy with the default, somewhat flat look of standard iPhone photos, consider shooting mostly in HEIF, and just dial in the Tone to your taste.

Conclusion

The iPhone 16 Pro Max is a solid step forward for image makers. Camera Control is truly useful, and can be used with third-party apps as well as the native Camera app. The slow motion is more capable, and the device in our pockets is, like it or not, the only camera which many new video creators own. We are not yet at the stage where a phone can replace every professional camera, but every year, a few more features that were unique to dedicated cameras are added to the iPhone too. This year, the Camera Control button makes the iPhone 16 family feel more like a regular camera than any previous model, and Photo Styles should help a lot of people’s casual photos better suit their preferences while JPEG XL Lossy ProRAW will help fans of raw save a ton of space.

Finally, although I prefer the big Max-size screen for a better playback experience, and a bigger battery for longer recording times, there’s a smaller option that’s just as capable. This year, the iPhone 16 Pro has almost exactly the same feature set with a smaller battery and screen, so there’s little compromise if you prefer a smaller phone.

Recommendations? If you ever plan to use a phone to record video, and you’re fussy about images, you’ll notice the difference that Log brings. If you already have a 15 Pro or Pro Max, you’ll get Apple Intelligence when it’s ready, and you’ve got very similar Log and ProRes video today. Upgrade from a 15 Pro or Pro Max if you want 120 fps, Camera Control, a better ultra-wide lens, or Photo Styles.

It’s an easier recommendation if you’ve got an older iPhone or an Android, and you’re not happy with the quality of the photos or videos you’re able to capture. The bottom line: if you’d like to record high quality Log video on a phone, and you’d like access to Apple Intelligence over the next few months, the iPhone 16 Pro or Pro Max is a solid choice.

Do you want to know more?

Here are some worthwhile video reviews with plenty of detail and samples:
