One of the session tracks at NAB is the Global UHD Conference, devoted to a discussion of progress in higher-than-HD television. I attended two sessions on Saturday. In the first, Clyde Smith talked about adding High Dynamic Range (HDR) and Wide Color Gamut (WCG) into the mix; in the second, Renard Jenkins presented his findings on high frame rate (HFR) studies at PBS. I scribbled notes and grabbed screenshots…

The Current Stage: Adding HDR/WCG to 4K and 1080P

Clyde Smith, SVP Advanced Technology, Fox Engineering

While UHD is distance-dependent (you have to be close enough to the set to see it), HDR and WCG can be seen across the room. HDR and WCG produce an audience “WOW” factor. Bandwidth constraints may limit UHD distribution, especially with channel repacking and ATSC 1.0 / ATSC 3.0 sharing, so 1080p with HDR / WCG may be the way to go, especially since 1080p upconversion in most UHD sets is very good.

Example: BT Sport broadcast a UEFA Champions League game in HD (not UHD) HDR, mostly to provide extra value for mobile viewers, not TV watchers!

One size doesn’t fit all, and there are too many formats to even try. HDR10+ is coming (it adds scene-by-scene metadata to HDR10), which will help. (Have you ever lined up five HDR10 TVs side-by-side and tried to watch the same content on ‘em? It’s not pretty!) At Fox, if you license HDR content, you don’t get to convert to SDR yourself; Fox will provide the SDR downconverts.

You’ll have to provide multiple formats to cover differing production workflows, distribution channels, consumer sets, and other consumer devices (phones and tablets). With ATSC 3.0 we’ll be feeding those other devices directly. You’ll need to supply multiple formats (e.g., SDR, HDR10, HLG, DV) and multiple resolutions (1080, UHD, etc.). Nonetheless, for some time to come the majority of viewing will still be HD SDR, so you can’t leave that out.
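To get a feel for how quickly the deliverables multiply, here is a minimal sketch that simply pairs every resolution with every dynamic-range format. The two lists are only the examples named in the notes above (DV meaning Dolby Vision), and the function name `delivery_matrix` is my own illustration, not anything shown in the talk.

```python
from itertools import product

# Illustrative lists only: the examples mentioned in the notes, not a full inventory.
DYNAMIC_RANGE_FORMATS = ["SDR", "HDR10", "HLG", "DV"]  # DV = Dolby Vision
RESOLUTIONS = ["1080p", "UHD"]


def delivery_matrix(formats, resolutions):
    """Return a label for every (resolution, format) pairing."""
    return [f"{res} {fmt}" for res, fmt in product(resolutions, formats)]


variants = delivery_matrix(DYNAMIC_RANGE_FORMATS, RESOLUTIONS)
print(f"{len(variants)} variants to deliver:")
for label in variants:
    print("  " + label)
```

Even with just these two short lists you are already at eight variants, before frame rates or device-specific encodes enter the picture.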

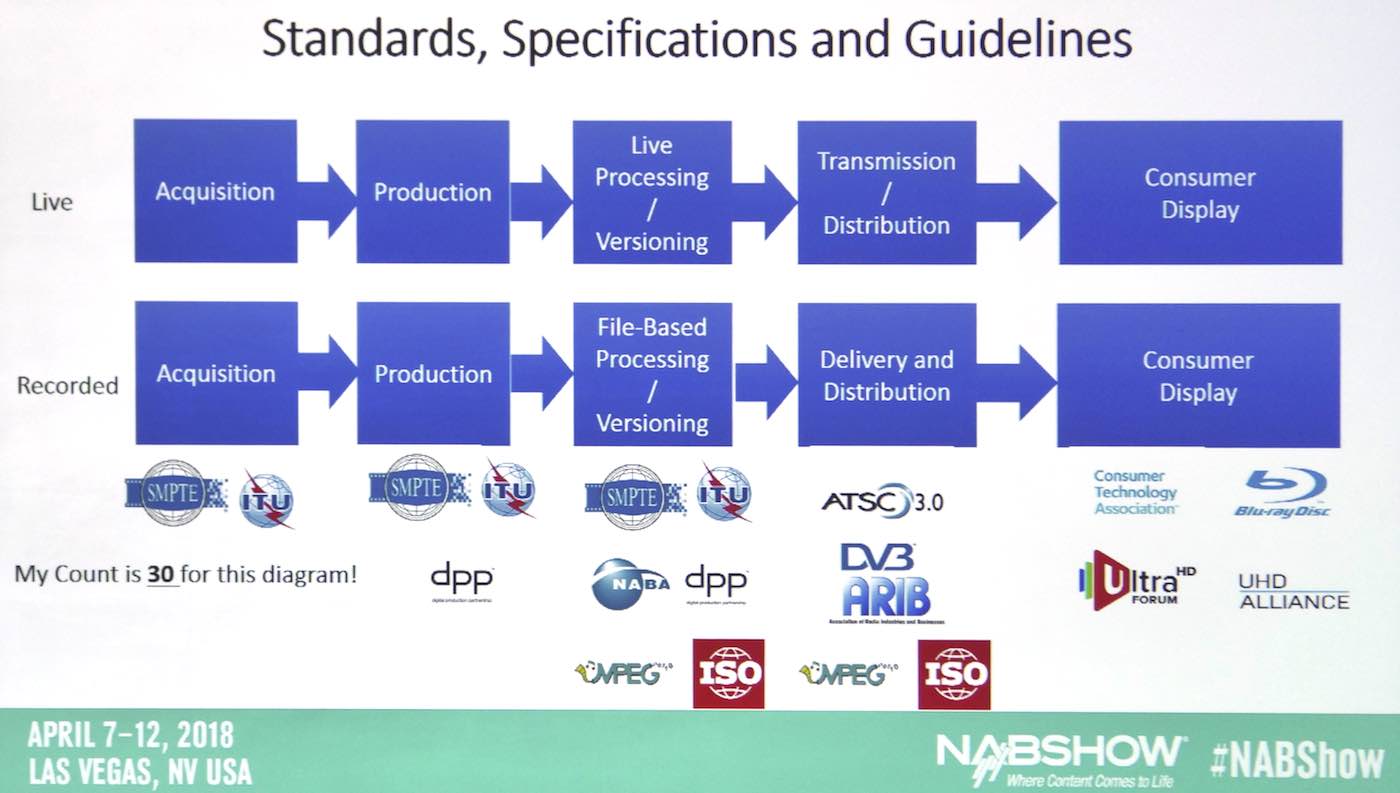

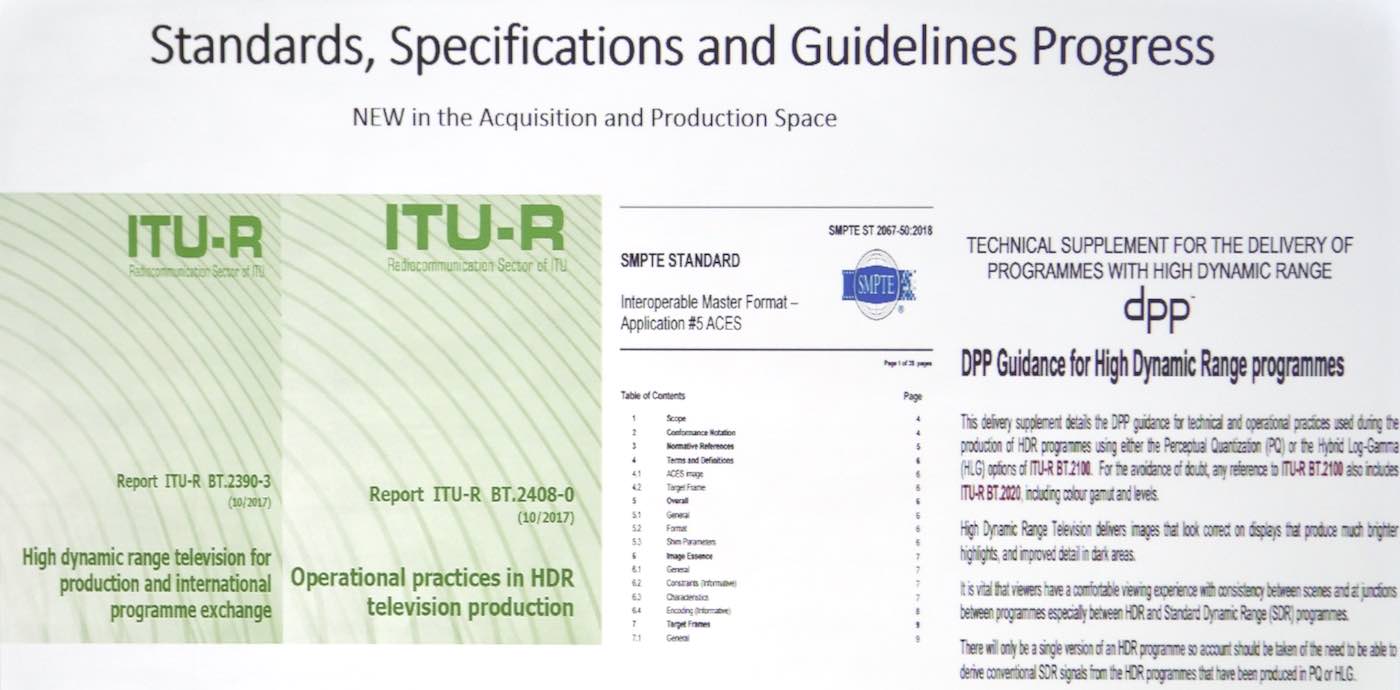

Look at all these standards and parameters…

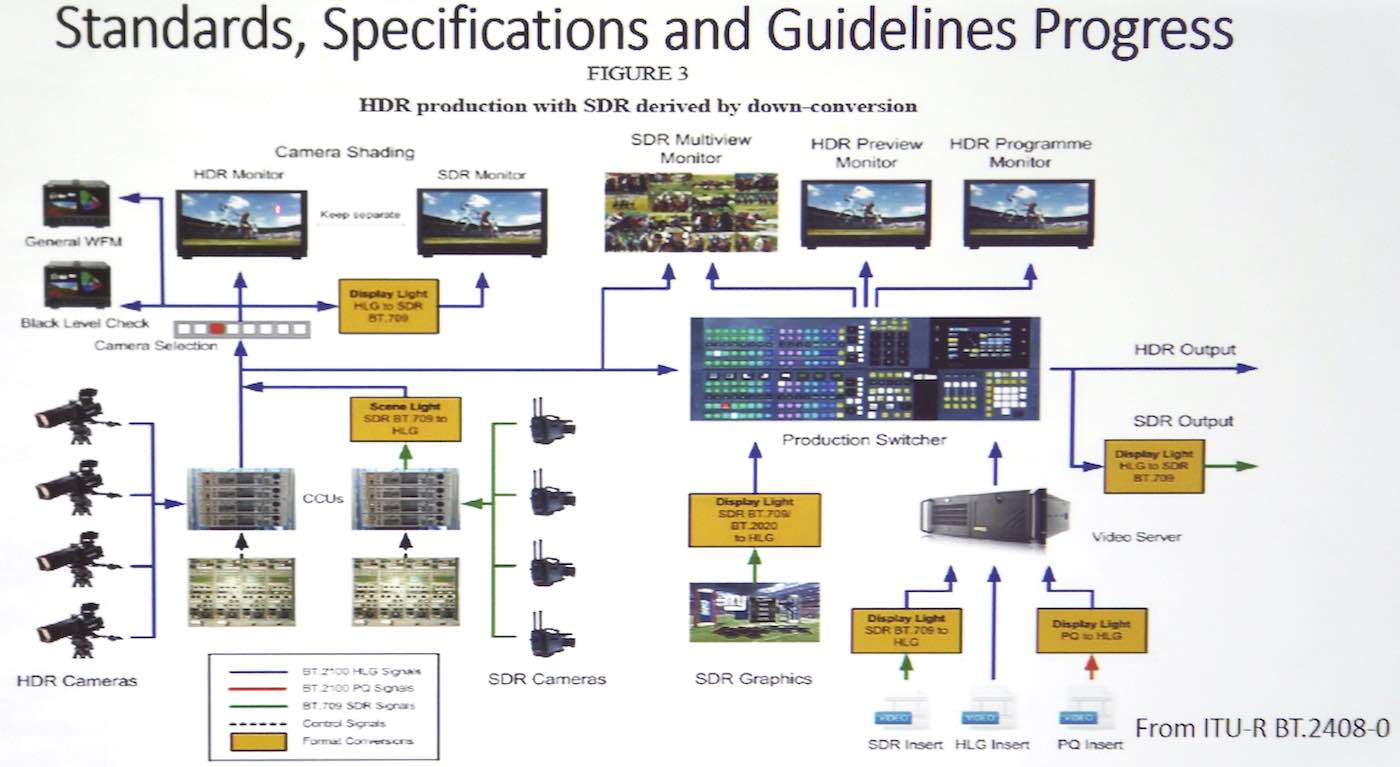

One thing to notice in this chart: keep SDR and HDR separate. If you grade in HDR, your eye will accommodate to HDR, and it takes time before you’ll be able to look at SDR properly.

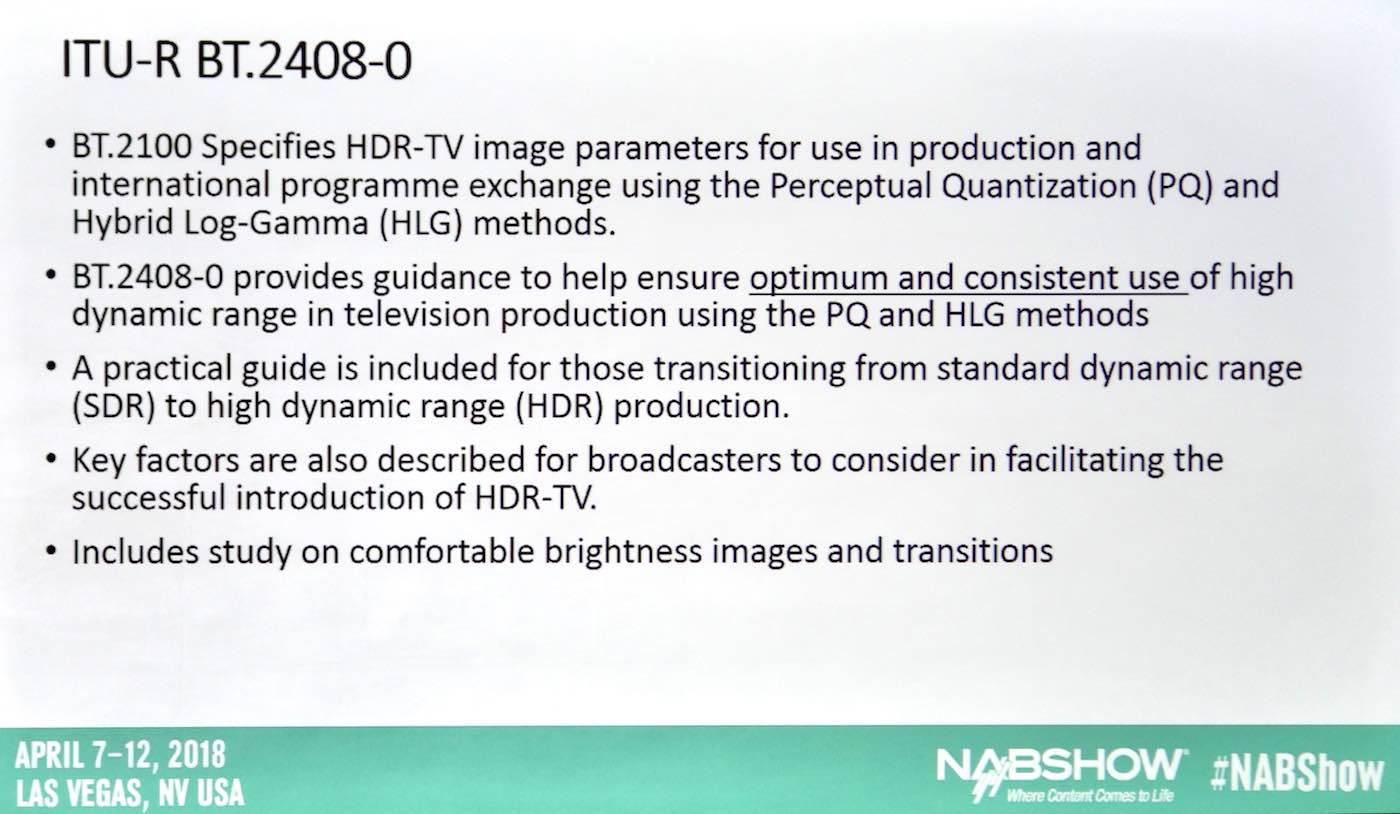

There are recommendations in ITU-R BT.2408 about what people can tolerate before they’ll complain!

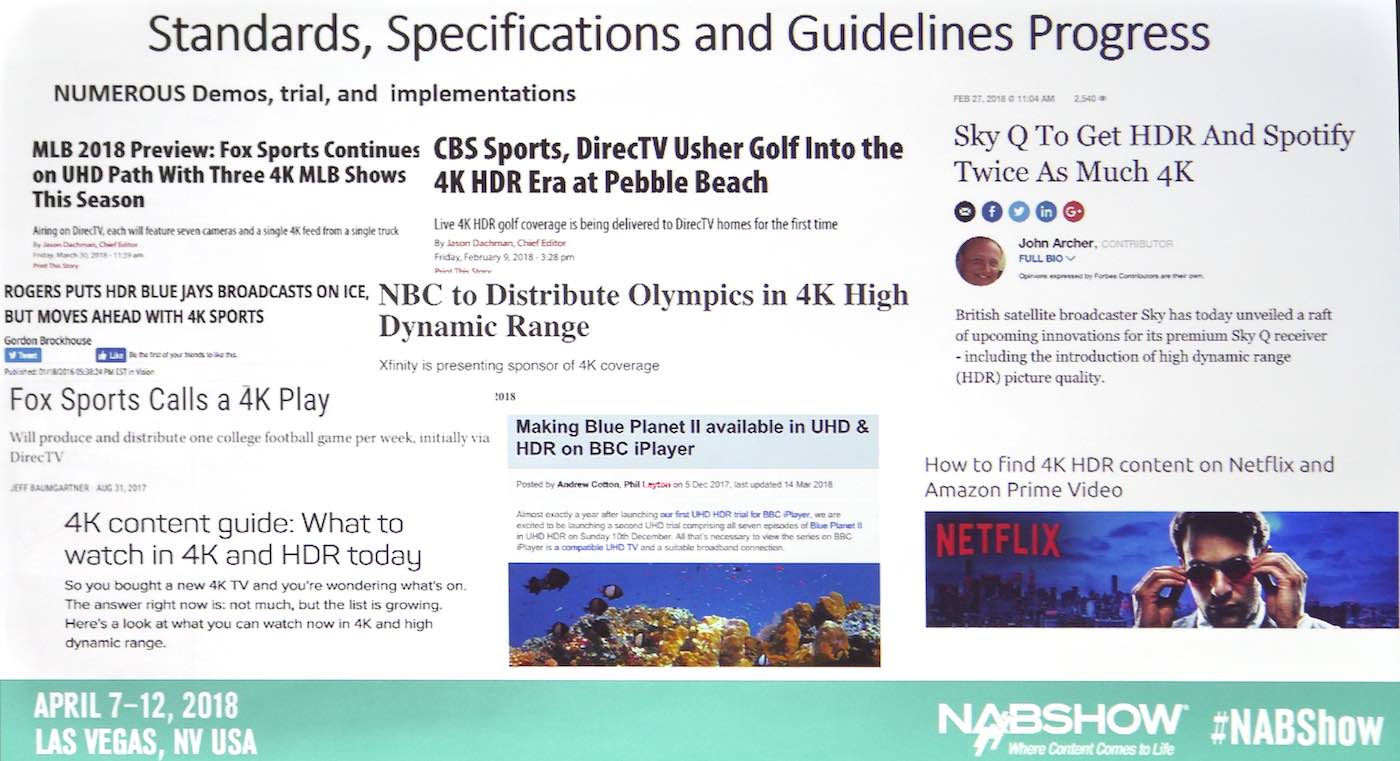

As a technologist I’m always trying out new things on my family. “Does this look better, or does this look better?” Showing the family Blue Planet II in UHD HLG was something they all agreed looked better.

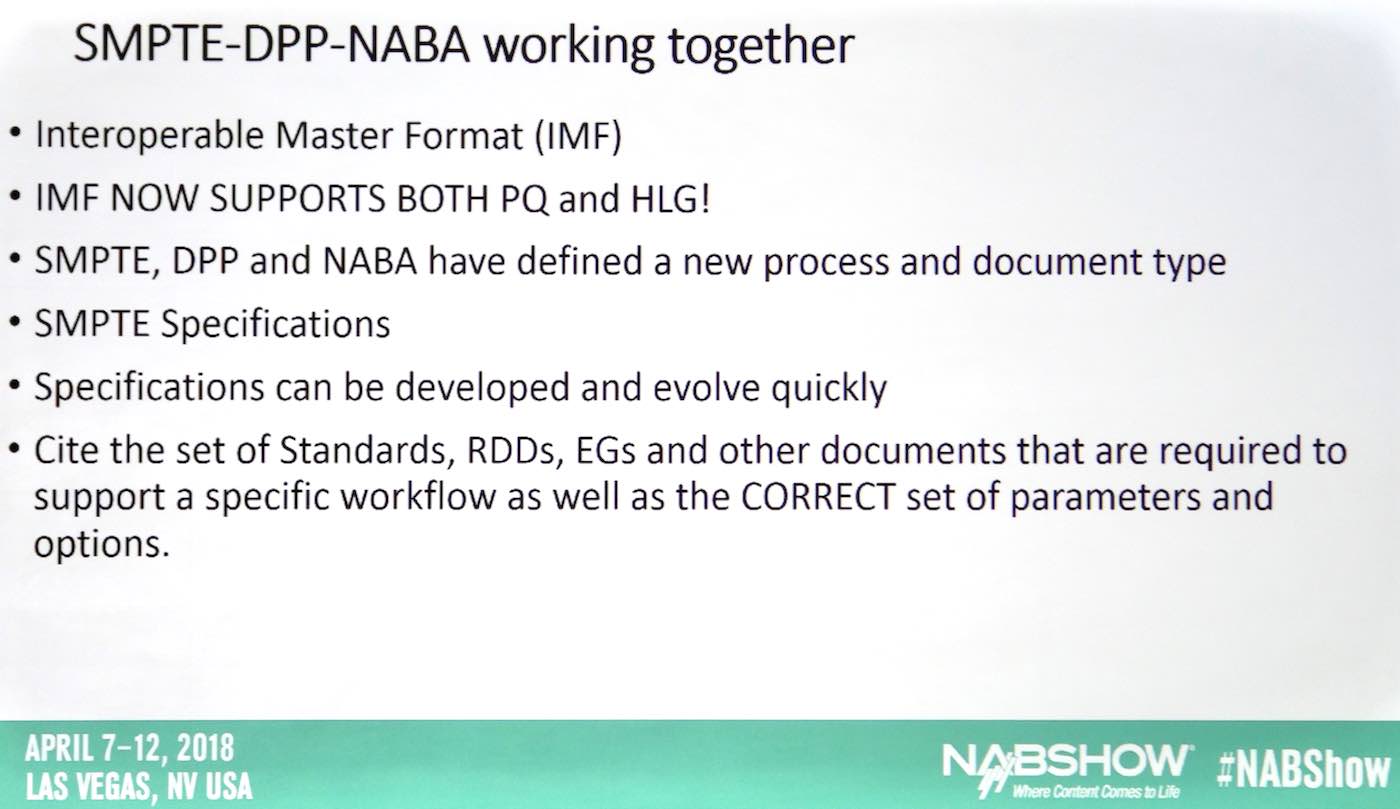

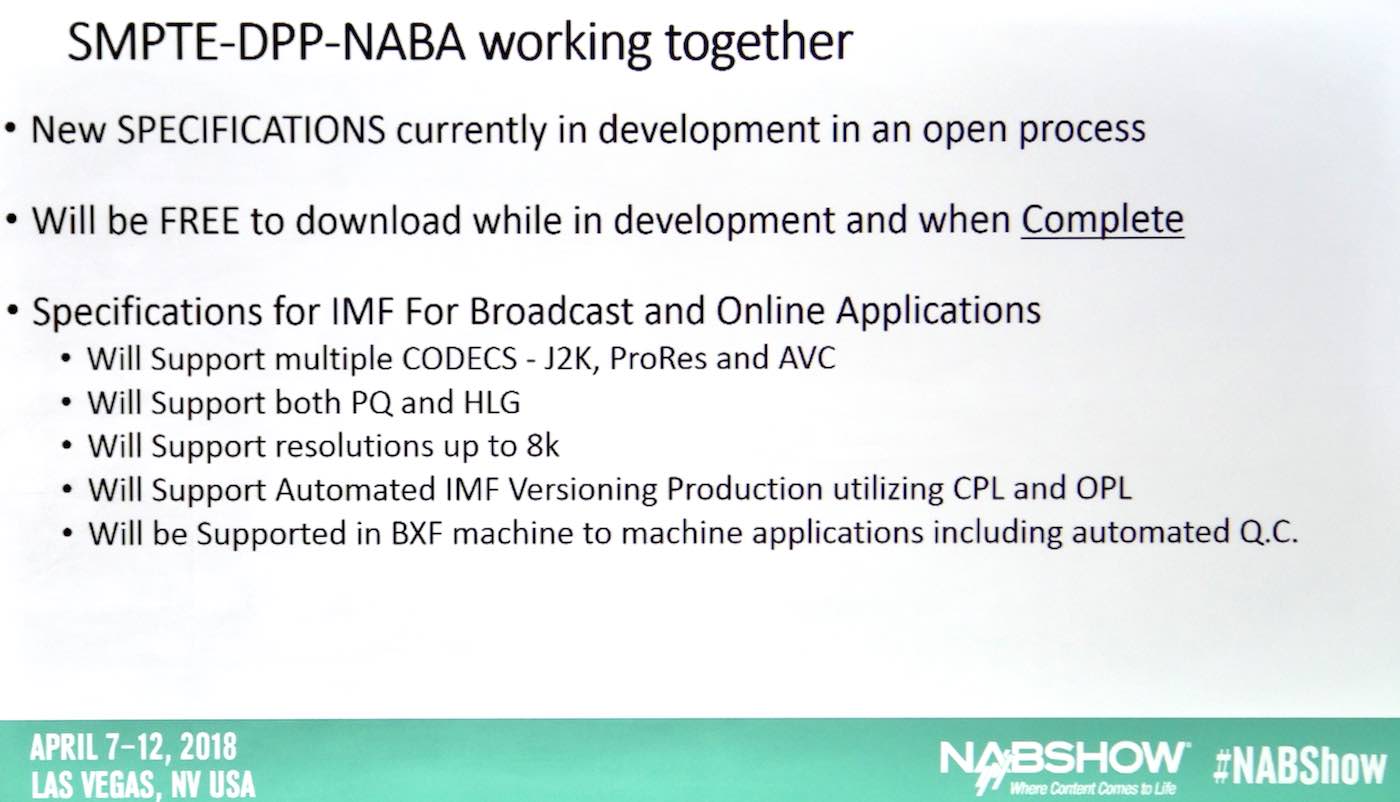

[SMPTE: Society of Motion Picture and Television Engineers. DPP: Digital Production Partnership. NABA: North American Broadcasters Association.]

For broadcasters, emit a format and stay with that format (that is, don’t mix frame rates and HDR standards). Format switching tends to glitch receivers and cause disruptions for viewers.

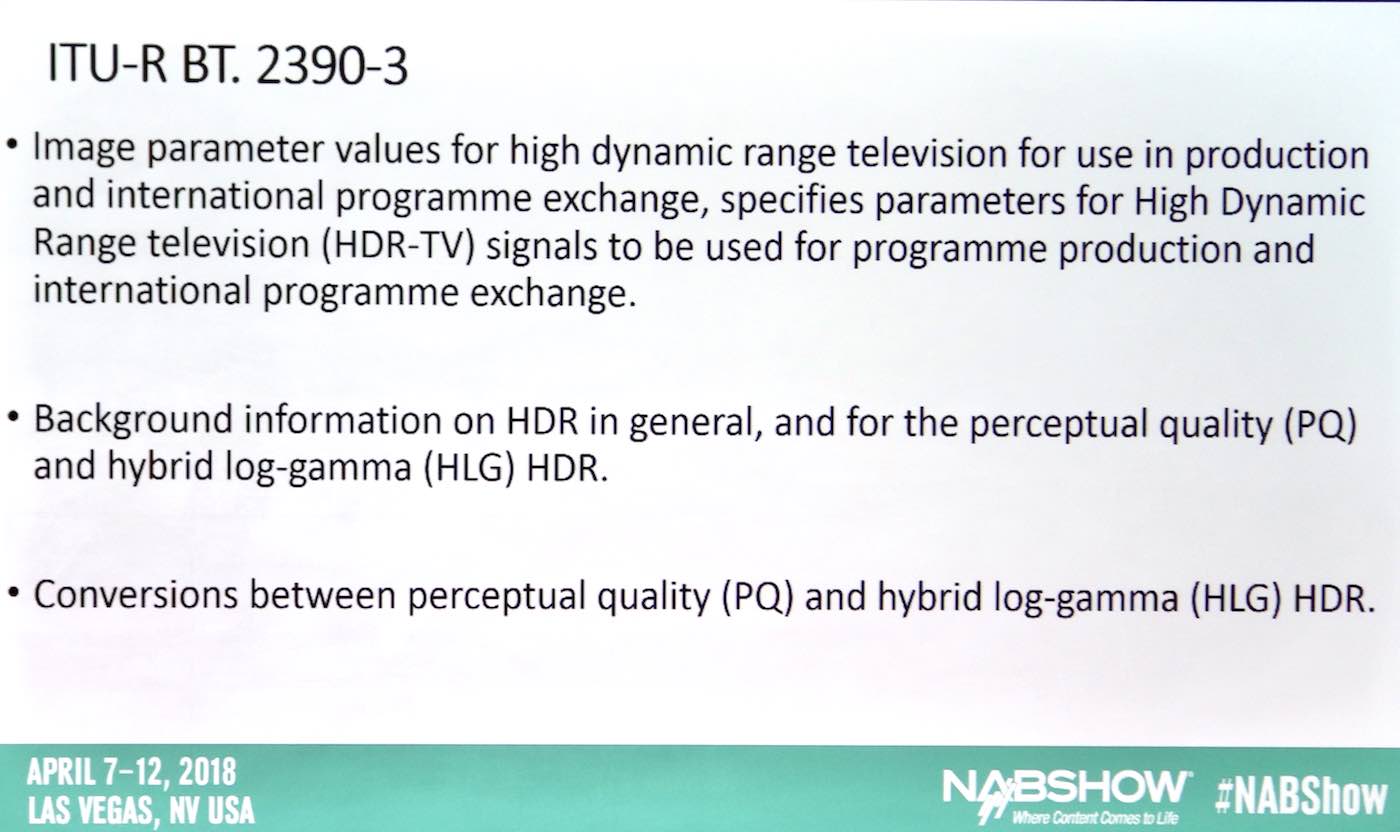

If you want to understand format conversions (HDR-to-HDR, HDR-to-SDR, etc.), there are good recommendations in BT.2408. These are mathematical conversions that until recently were not well understood. There has been tremendous confusion about scene-light and display-light conversions, which has hindered HDR tests in which conversions were done artistically as opposed to by formula, leading to inconsistent results.

Wide Color Gamut will, for the first time, permit broadcasters to show theatrical color: the same color space that people enjoy in the theater. HDR’s increased judder (due to higher contrast) can be solved by high frame rates.

High Frame Rate: One Person’s Perspective

Renard Jenkins, PBS

We at PBS have been working with high frame rates (HFR) for 2–3 years. It’s how we want to shoot nature and science productions. Question: is HFR primarily the domain of creatives or engineers?

Definitions of HFR: “Any frame rate that exceeds those typically used.” “It relates to any content filmed at a higher rate than the standard of 24fps.”

The human eye begins to fuse motion at 15–18fps. 24fps is the lowest at which you can sync audio [though Mark Schubin would disagree -AJW]. Early frame rates were all over the map: 16fps, 40fps, etc. In 1953 the NTSC II meeting standardized 59.94i for US TV.

HFR advantages: high motion content, smoother frame blends, cleaner still pulls (very important for PBS marketing; they don’t want to pay for a still photog on all these productions). In early days of UHD testing, an elephant eye at 29.97 had too much motion to pull a still:

It looked great as video, but motion blur killed resolution on a still frame.

Two and a half years later, we were in New Zealand shooting sharks with an experimental RED camera that shot 120fps raw. The 120fps came in handy as the still photog was busy getting out of the way of the shark. Even though the shark was moving, we were able to pull crisp, sharp stills out of the video stream.
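A back-of-the-envelope way to see why the 120fps stills hold up better: the blur streak in a single frame is roughly the subject’s on-sensor speed multiplied by the exposure time. The sketch below assumes a 180-degree shutter (exposure is half the frame interval) and a made-up subject speed of 960 pixels per second; neither number comes from the talk, and the function name `blur_pixels` is mine.

```python
def blur_pixels(speed_px_per_s, fps, shutter_angle_deg=180.0):
    """Approximate motion-blur streak length, in pixels, within one frame.

    Exposure time is the open fraction of the shutter (angle / 360)
    multiplied by the frame interval (1 / fps).
    """
    exposure_s = (shutter_angle_deg / 360.0) / fps
    return speed_px_per_s * exposure_s


# Hypothetical subject speed across the sensor; an assumption for illustration.
SUBJECT_SPEED_PX_PER_S = 960.0

for fps in (29.97, 59.94, 120.0):
    streak = blur_pixels(SUBJECT_SPEED_PX_PER_S, fps)
    print(f"{fps:6.2f} fps: about {streak:.1f} px of blur per frame")
```

With the shutter angle held constant, blur scales inversely with frame rate, so going from 29.97 to 120fps cuts the streak to about a quarter of its length. A shorter shutter angle would reduce blur at any frame rate, at the cost of light and of choppier-looking motion.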

What do we do with HFR content? There aren’t a lot of monitors, editors, etc. that handle it properly.

Some guidelines for shooting in HFR: Good glass, composition, and talent.

You have to have good glass. We have partnerships with Canon and Zeiss, and have been using some really sharp lenses.

Use the entire frame. Let the action evolve in the frame; don’t move the camera (to prevent adding motion blur). Keep the background from blurring out.

Keep the background in the foreground of your pre-production work. If you’re producing episodics (instead of docs, where you really don’t have that level of control), avoid excessively busy backgrounds, as pans get distracting.

As the frame rates increase, detail increases. One of our biggest gains was the move from 59.94i to 60p.

Plenty of challenges converting existing plants to support HFR (encoding/ingest, playback, monitoring). Do we put temporal low-pass filters in encoders? How to play back the actual frame rates? Some high-speed camera vendors have playback apps for field reviewing, but most NLEs will slow it down or drop frames. Monitoring: not a lot of 120fps displays (some in Korea, but not here yet). So you play back slowly, or skip frames and hope you’re not missing something.
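The two monitoring workarounds are easy to put numbers on. This is a minimal sketch assuming a 120fps source and a 60Hz display (my example figures, not ones quoted in the session); the function names are hypothetical. Playing every frame gives you slow motion, while playing in real time means some source frames never reach the screen.

```python
def slow_motion_factor(source_fps, display_fps):
    """How many times slower than real time the clip runs if every frame is shown."""
    return source_fps / display_fps


def frames_shown_realtime(source_fps, display_fps, n_source_frames):
    """Source-frame indices that reach the screen during real-time playback.

    For each display refresh k, show the source frame that is current at
    that instant: floor(k * source_fps / display_fps).
    """
    shown = []
    k = 0
    while True:
        index = int(k * source_fps / display_fps)
        if index >= n_source_frames:
            break
        shown.append(index)
        k += 1
    return shown


SOURCE_FPS = 120   # assumed camera rate
DISPLAY_FPS = 60   # assumed monitor refresh rate
N_FRAMES = 12      # a tenth of a second of source material

shown = frames_shown_realtime(SOURCE_FPS, DISPLAY_FPS, N_FRAMES)
skipped = sorted(set(range(N_FRAMES)) - set(shown))

print("Slow-motion factor if every frame is shown:",
      slow_motion_factor(SOURCE_FPS, DISPLAY_FPS))
print("Frames shown in real time:", shown)
print("Frames never seen:", skipped)
```

In this example half the frames are never looked at in real-time playback, which is exactly the “hope you’re not missing something” problem: a one-frame glitch on a skipped frame would only turn up in a slowed-down review pass.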

Conclusions / further thoughts: Investigating adoption of higher frame rates throughout. Improving coding efficiency. Decide whether pre-encoder filtering or encoder improvements are the way to go. Adaptive down-filtered sampling is a must.

It’s fun and it’s a lot of work, but that 120fps shark is a very compelling piece!

Any mistakes in transcription or paraphrasing are mine alone. Photos are shot off the projection screen, and the images remain the property of their owners.

Disclosure: I’m attending NAB on a press pass, but covering all my own costs.
