When Adobe introduced CS4, they also introduced a new feature that many thought would be the suite’s first real killer feature: Speech Transcription. I think the fantasy might have been this: gone were the days of paying a human to transcribe footage, because the machines could finally do it for us. The reality was different, as the results were often a garbled mess, and humans continued to do a better job. Adobe’s transcription accuracy has improved since CS4, and with CS5.5 it’s actually usable. I used it recently and I’m convinced it saved a lot of typing.

On a recent job the producer fell a bit behind on transcribing some of the talking head interviews we were using in the cut. There were four subjects, each interviewed several times in different locations. We only had two full days, plus one prep day, to cut this 5-minute piece, and he asked me if I could transcribe one of the four subjects. I was expecting to spend the prep day, well … prepping for the edit (organizing, logging, syncing), and I still needed to do just that, so I thought I’d give Adobe’s transcription another try and see how much time it could save.

Into Premiere Pro CS5.5

I was cutting this job in Final Cut Pro, so my first inclination was to open the audio files (this was a DSLR shoot with double system sound) in Audition, Adobe’s audio editing tool that is new to the Mac, and transcribe them there. But Audition doesn’t have transcription capabilities (the old Soundbooth did), so I opened the WAV files in Premiere Pro CS5.5.

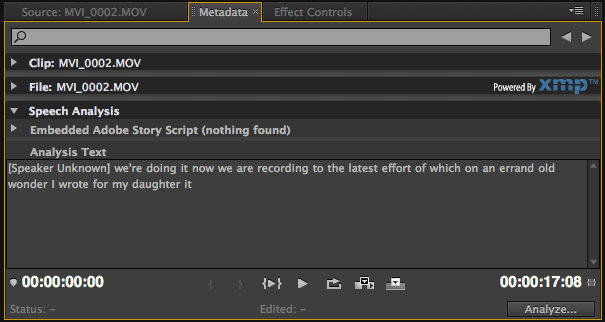

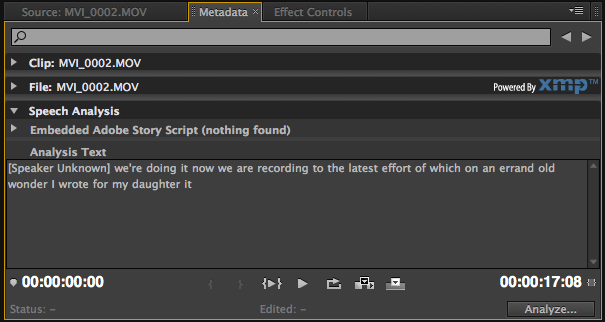

The actual act of transcribing is quite simple: load a clip into the Premiere Pro Source monitor, select the Metadata tab and, under the Speech Analysis heading near the bottom, click the Analyze button.

Speech analysis is performed under the Source clip’s Metadata tab.

That action opens the Analyze Content dialog, and the actual file analysis takes place in Adobe Media Encoder. Before it moves forward you’re presented with a quality option:

Choose the transcription quality. The higher the quality the longer it takes.

There’s an option to select a different language if you have additional languages installed. Those additional languages can be downloaded from Adobe’s website, apparently free of charge. But the most important choice for me was the Quality option. I chose High (slower), as my interviews weren’t terribly long (the longest being 1:06) and these transcripts were going back to the producer, so I wanted them to be as accurate as possible.

The Analyze Content dialog is also where you do things like attach a reference script if you have one (which will go a long way toward making it even more accurate) and analyze for face detection. Check the Identify Speakers box if you have different speakers in a single piece of footage; in my case I did not (except for the off camera interviewer, but I wasn’t worried about that).

When you’re done, click OK; Adobe Media Encoder launches and begins the transcription process in the background. When it’s complete you have brand new metadata attached to each file. The transcription itself is located at the bottom of the Metadata tab.

The video above shows Speech Analysis in action. Notice how each word is highlighted during playback. You can also mark In and Out points and perform edits from that window.

There are two big questions usually asked about this type of automated transcription: how long does it take and (most importantly) how accurate is it?

The actual analysis time is, I think, quite acceptable. My longest interview clip was 1:06, and using the High setting it took around 1:09 to analyze. That’s just about realtime. But I also grabbed another random 14-minute clip and that took just under four minutes, so I’m not sure exactly what the ratio of clip length to analysis time might be. You’re always going to trade speed for accuracy in situations like this, and I’d even be happy to see some type of super accurate option, even if it took twice as long as the High setting.

The accuracy was far from perfect but appeared to be noticeably better than the couple of times I tried to use transcription in CS4. Accuracy depends a lot on the quality of the interview audio itself. Noisy backgrounds, fast talkers and speakers who don’t enunciate will all reduce accuracy. My interviews contained a producer asking questions off camera, and that interviewer audio was, as expected, pretty much useless. The interview subjects were non-professional talent, but they were miked well and the accuracy was good enough that I used it as a starting point for my transcriptions.

Above is an example of uncorrected Speech Analysis of a speaker with very good enunciation.

The same text after it has been corrected.

As mentioned above, once the footage has been transcribed it appears as text in the Analysis Text window. One very handy thing is that, as you play the footage in PPro (and click back to the Metadata tab), each word is highlighted as it plays. At any point you can click a word in the transcription, hit play, and playback begins from the section that contains that word. When you hit play the Source monitor jumps back to the video, so I found it best to tear off the Metadata tab so I could see the video and the text simultaneously. It’s an incredibly easy way to navigate an interview, and it will impress a producer who has never seen it in action. The transcription is now attached to the file as metadata, so it will be there whenever you use the clip in PPro from that point forward.

One point Adobe makes about transcription accuracy is that it will be far more accurate if you attach a text-based script file before transcribing. This makes sense, and if you were working with talent using a teleprompter, a scripted series (write your script in Adobe Story and you’ve got an easy in!) or something similar, I can see where this would be possible. But far more of my talking head jobs lack scripts than have them, so I’m most interested in transcription from a raw file.

There are two big areas where I see room for improvement (besides the obvious one of better overall accuracy): editing the text once it’s transcribed and moving the transcription out of Premiere Pro.

Editing the Text Once it’s Transcribed.

Once you’ve transcribed footage and have an Analysis Text window full of words, that text is editable. You can dive in and manually change the text so it more accurately reflects what is being said. This is a tedious process in itself (and a perfect job for an intern!), and it’s made even more tedious by the limited text editing tools Adobe provides. You can click a word and type to change it, or use one of the contextual functions offered by right-clicking a word.

Limited text editing tools are offered once text has been transcribed.

Don’t get me wrong, these text editing options are better than nothing, but I found myself trying over and over again to do things I would do in a word processor. For example, I wanted to highlight a couple of lines of garbled text (the interviewer’s off camera questions) and delete them. You can’t highlight in that manner, so I was deleting a single word at a time. That was beyond tedious. And beware: if you delete something, you can’t undo to get it back. I first thought this might have something to do with Adobe needing some type of word associated with each sound in the file, but since you can delete single words this isn’t the case. You can highlight text by marking an In and Out, but you can’t seem to do anything with the marked text. It would also be nice to be able to select a range of words (such as the off camera questions in my case) and have PPro turn them into xxxxx’s or something similar to signal to the editor that there’s something there but it’s not relevant.

As far as editing the transcribed text goes, the process would be so much easier if the Analysis Text window had what amounts to a basic word processor built in. I don’t mean bold and italic, but selecting a range of words, copy/paste and spell checking would be nice; in short, an overall easier way to edit the text once it’s transcribed. I would have loved to stay within PPro to edit my transcript, but instead I moved it out of PPro and into a word processor.

Another example of Speech Analysis accuracy.

This speaker’s text was cleaned up in a word processor.

Moving Transcribed Text Out of Premiere Pro.

That brings me to the second place where Adobe’s transcription could use some help: moving transcription data out of Premiere Pro.

If you want to move the transcribed text out of PPro, there’s really only one option that I could find: right-click the text and choose Copy All.

Copy All is the only option when it comes to moving transcription out of Premiere Pro.

This will allow you to paste the entire transcript elsewhere, like into a word processor. One way Adobe is currently positioning Premiere Pro is as a complement to Final Cut Pro or Avid Media Composer. PPro’s ability to exchange XML and AAF with its competitors makes it kind of a Trojan horse that might bring in editors who are moving jobs to After Effects. If you want someone to try a new tool you have to get them in there, so this is a good way to do it.

Transcription would be another good way to get an editor into the application, and better options for moving the transcript out might bring them back again. An XML export to Final Cut Pro wouldn’t really matter, since FCP doesn’t have any type of text track for footage, but Avid has the optional ScriptSync, so that might be a possible option. At the very least, exporting a more accurate transcript would make ScriptSync more accurate when it does its thing. ScriptSync doesn’t have to work with just a traditional Hollywood-style script, so a transcript out of PPro could be a handy thing.

The most obvious export option would be the transcript with some kind of timecode stamp. The words are clearly associated with the timecode (or at the very least a timestamp) of the media, since they follow right along as you play the file. It seems reasonable to expect a way to export that relationship in some human-readable form.
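To show how little is needed once the word timing exists, here is a minimal sketch of a timecode-stamped transcript. It assumes a hypothetical list of words, each with a start time in seconds (the `Word` class, `to_timecode` and `timecoded_transcript` are made up for illustration and are not Premiere Pro’s actual metadata format or any Adobe API), and it assumes non-drop-frame timecode at a fixed frame rate. A new line, stamped with its first word’s timecode, starts roughly every ten seconds.

```python
from dataclasses import dataclass


@dataclass
class Word:
    """One transcribed word. Hypothetical structure, not Premiere Pro's format."""
    text: str
    start: float  # seconds from the head of the clip (assumed)


def to_timecode(seconds: float, fps: int = 30) -> str:
    """Format seconds as non-drop-frame HH:MM:SS:FF timecode."""
    total_frames = round(seconds * fps)
    total_secs, frames = divmod(total_frames, fps)
    total_minutes, secs = divmod(total_secs, 60)
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}:{frames:02d}"


def timecoded_transcript(words: list[Word], interval: float = 10.0, fps: int = 30) -> str:
    """Group words into lines, stamping each line with its first word's timecode.

    A new line starts once a word begins `interval` seconds or more
    after the current line's first word.
    """
    lines: list[str] = []
    current: list[str] = []
    line_start = 0.0
    for word in words:
        # Flush the current line when this word is far enough past its start.
        if current and word.start - line_start >= interval:
            lines.append(f"[{to_timecode(line_start, fps)}] {' '.join(current)}")
            current = []
        # The first word of a line sets that line's timecode stamp.
        if not current:
            line_start = word.start
        current.append(word.text)
    if current:
        lines.append(f"[{to_timecode(line_start, fps)}] {' '.join(current)}")
    return "\n".join(lines)
```

This sketch ignores drop-frame timecode (29.97 fps NTSC), which needs a different frame-count calculation; a real export would have to match the timecode of the source media.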

This might be a feature that is coming in the future, but we’ve already had a couple of big upgrades since transcription came along. Adobe might also want to keep editors inside PPro once they’ve come over for transcription, but I think without some usable timecode stamp the editor is less likely to come back.

In the end I copy/pasted the transcript into a word processor and made the corrections there as I played the footage in Final Cut Pro. It wasn’t ideal, but with a dual monitor setup it wasn’t too bad. Staying right in the Premiere Pro interface to clean up the transcribed text would have been preferable, but since I had to type in my own timecodes (and given PPro’s limited text editing capabilities) the word processor with FCP was much easier.

Overall Adobe’s Speech Analysis Saved Time.

None of this is to say I won’t be back in the future to use Premiere Pro’s transcription capabilities. It was accurate enough and worked well enough that I do feel it saved some valuable time when transcribing the interviews. It’s probably the only tool most of us already have installed on our desktops that can do such work without paying for it separately (as with MediaSilo and its transcription services) or setting up some kind of hack-around playback into standalone transcription software (maybe MacSpeech or your iPhone’s Dragon app).

The ability to really use transcriptions of your interviews in the editing process is something I really enjoy. A big documentary project with hours and hours of interview footage is a place where transcriptions are a valuable tool. I often find it helps in crafting the story the interviewees are telling if I can sit away from the editing screen and read their content.

With transcripts in word processor files, you can search pages and pages of information for specific words and ideas in just a few seconds. Add phonetic searching tools like Avid’s PhraseFind and Get for Final Cut Pro, and there’s yet another tool at the editor’s disposal to quickly find specific things and spend more time editing and less time searching.

Taking that idea one step further, there’s Intelligent Assistance’s prEdit (Paper cuts without the pain!). It uses the transcription of media to create what amounts to a non-linear editing tool that lets the user create a rough cut or radio edit by splicing together text instead of actual picture. Get your producer or director using that and you’ve gone one step beyond a paper edit.

Transcription is a wonderful thing for the post production process. It’s not always cheap and it’s not always easy, but Adobe’s transcription in Premiere Pro goes a long way toward making it accessible. Is it as good as a real transcriptionist doing it by hand? Definitely not. Would I take Premiere Pro’s transcription over nothing at all? Most likely yes. With a few more improvements to editing the text after Adobe has done its thing, I’ll be using it more and more.