Recently, the app Prima Immersive launched, featuring the first episode in the Sessions series. If you missed my article, you can read it here, and if you have access to an Apple Vision Pro, you really should download the app from here. The video and audio quality inside this app is unmatched, and it’s a must-watch for anyone in the immersive space.

Soon after that first article went live, I was able to catch up with some of the key people behind the shoot and the tech for a chat, and of course, we had to meet in Spatial FaceTime, each in our own personal Apple Vision Pro, but meeting in a virtual desert at night. The participants:

- Tom Rettig of The Spatialists, the creators of Sessions

- Andrew Gisch and Andrew Chang of Immersive Company, behind the camera and platform that made it possible.

This article is an edited transcript of our extended chat, and we cover technical details about lighting, frame rates and shutter speeds, camera tech and encoding, plus a custom-made in-headset NLE. But it’s not all technical — we also talked about what kinds of productions work best in immersive, and what the future holds. If you’re remotely interested in this space, find a comfortable seat and settle in for a long read.

Before we start, though, please note that this chat took place before the latest Apple Immersive video featuring Metallica had been released (just a few days ago) or even announced.

Although Sessions has, to me, better visual clarity, the Metallica video is at an entirely different scope, and a reminder of the emotional power of immersion. Featuring shots of a full stadium alongside intimate shots of the band and up-close moments with fans, it’s Apple’s first big-scale live multi-camera Immersive concert video, as well as a great example of a concert film done well. Had it been released when we had this talk, we would certainly have talked about it, and I’m sure we’d have had opinions! Please bear that in mind when reading the sections where we discuss how a large concert production could work in immersive.

On with the chat!

Iain Anderson, for ProVideo Coalition: Who are you guys? Why are you driven to do this amazing stuff?

Andrew Gisch, Immersive Company: Well, we’re a couple of entities here, but we’re definitely one team and certainly what we put out, we’re very proud of it.

Andrew Chang, Immersive Company: Both Andrew and I have done immersive media for quite some time. We’ve both been passionate about the space and we were excited when we saw Apple come in and really show the world how important quality is, both visual quality and content quality.

We, of course, had been thinking about this for quite some time and we didn’t want to say we’re better than Apple. They’ve set a high bar but we’re not trying to compete against them. We wanted to just see how far we could get and we think we got pretty far.

Tom Rettig, The Spatialists: I’ve been in tech for a long time. I started out in computer games, doing audio for computer games way, way back. For the last 15 years, I’ve been working in big data, entertainment data, at a company called Gracenote, which is part of Nielsen. I left Nielsen a couple of years ago and had been doing consulting when the Vision Pro came out.

I went out and saw the demo and was very impressed by the immersive video. When Alicia Keys came up, and she is singing to me from a few feet away, I was like, f***, that is crazy, and it really was emotional. And the same thing with the baby rhinos gathering around your legs. The sense of presence and intimacy was mind-blowing. But I had no intention to buy it.

I went home and thought about it for a few days.

My background has always had this connection with music. I’m a composer. I went to music school, and have been in bands and stuff. I just thought that sense of presence and intimacy in particular were the two kinds of feelings I was most impacted by in that demo. And I thought somebody should make some really cool, intimate music performances. And I really felt strongly that it was important that they be acoustic.

I wanted a sense that you’re there, with the person playing the instrument, all that stuff you were just experiencing, no translation through electric or electronic medium of any kind. I went out and bought an R5C and started shooting experiments in my living room and was super unhappy with the results. I was chatting about it on Reddit with other people who were experimenting, and then Andrew reached out. He was going to be in the Bay Area fairly soon.

We met up and he showed me some preliminary footage he had shot, and I was already going down the path of finding the artist. Once I saw what Andrew had been working on, I thought, this is really a lot closer to the quality that we’re trying to get at. And to be honest, I had no idea he was going to so greatly surpass that preliminary footage to achieve the level of quality that we did.

Talking about the camera(s)

Iain: So if I can ask, how does your camera work? What can you share? Is it two cameras internally that are tied together and genlocked?

Andrew C.: Well, I can say that we did not build it up completely from spare parts. So we didn’t start at the sensor level and then attach a brain to it and do it in that approach.

Everything that we’ve talked about publicly so far has been about Immersive Camera Two, right? That is our latest generation, like best in class. It goes up to 12K per eye, slow motion support, all the bells and whistles. You can do things even like spatial videos. So you don’t just have to be limited to VR180. It does things like, you can zoom in, because it’s not a fixed focal length camera, with interchangeable lenses and all that good stuff.

The interesting thing is that Sessions was filmed on something we call Immersive Camera One, which is our first generation camera, which is even more prototypy. It is internally, essentially two cameras, and we think synchronization is very important, and we have noticed issues with synchronization from other systems in the past. So our cameras are synchronized at a pixel level. Similar to what is coming out for the Blackmagic [URSA] Cine immersive, ours is also pixel synchronized as well. This is very important to us.

I actually came into this space pretty new and built everything from, like, first principles in a way. I didn’t have any preconceptions. For better or for worse, I didn’t know what was right or wrong. I ended up doing things that people in the industry who I had talked to, they kind of laughed, honestly. They’re like, why would you even do this, that’s silly. We ended up doing a lot of these things and it worked out, and the results obviously hopefully speak for themselves.

Immersive Camera Two, we think, is a much more flexible system overall. It comes with quality improvements over Immersive Camera One, which we are still finding the limits of today because we’re still putting it through its paces. But it is, of course, production-ready. It is ready for productions that are willing to work closely with us.

We have to do the entire post-processing workflow because we have some things which are non-standard. But that’s also one of the reasons we love the upcoming Blackmagic camera because of how many new standards they’re going to be introducing, which Apple is also going to be supporting.

I asked if they’d used the Blackmagic URSA Cine Immersive.

Andrew G.: We have not been working with Blackmagic Design directly, but we’re very excited about the upcoming camera and we’re hoping to get one of the early units. Everyone is hoping to get an early unit. So we’ll see how that works out.

A new encoder for immersive content

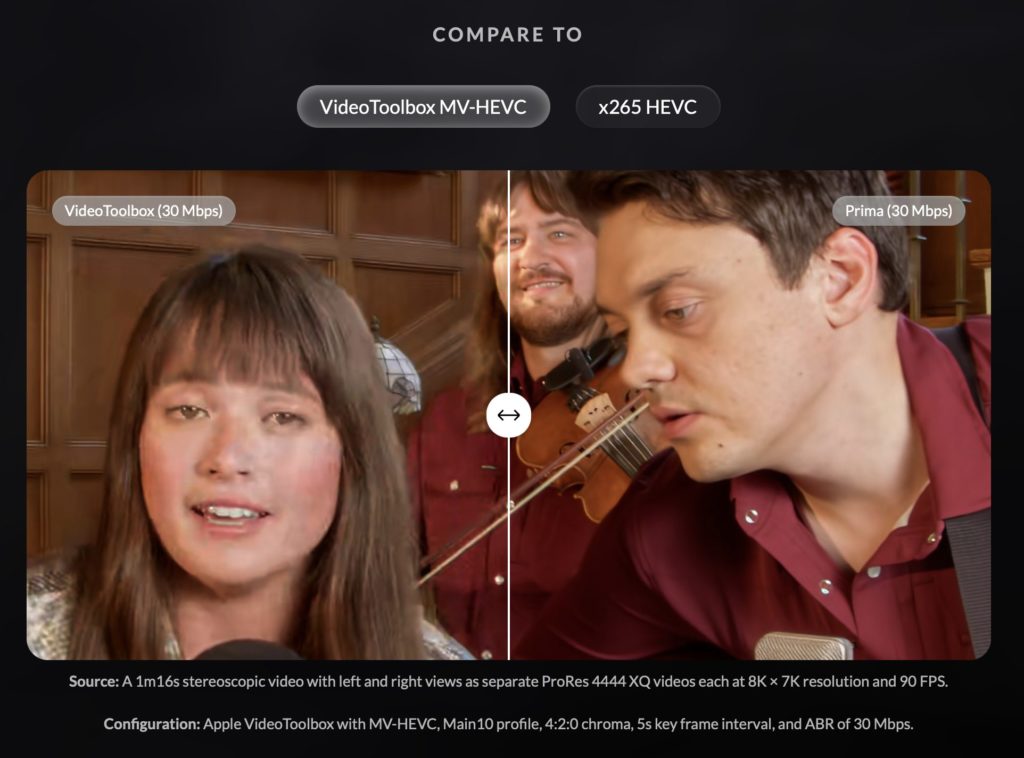

Iain: One of the most interesting things about the spec was the encoding system. So, 30Mbps HEVC for an 8K by 7K frame. That was just for one eye, I think, reading the spec. That sounds like an unbelievably low data rate. Can you share anything about the secret sauce for that?

Andrew G.: So it’s actually 30 Mbps for two eyes. It’s for the entire stream. Well, for Sessions, we go up to 50 Mbps for our highest rendition. But we can go down to 30 without artifacting.

I know Apple currently, they might change, but currently they have issues on their lower renditions where there’s tons of artifacts and tons of, like, weird things that happen. But we found a solution which really performs well even on low bit rate encodes. So that is kind of one of our big things, and [there’s] much more to come there as well.
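To put that data rate in perspective, here is a rough back-of-the-envelope sketch of how few encoded bits are available per pixel. The exact frame dimensions are an assumption: "8K by 7K" is read here as 8192 × 7168 per eye, and the 90 fps figure is taken from the shooting frame rate mentioned later in the chat. Neither value is a confirmed delivery spec, and the function name is purely illustrative.

```python
def bits_per_pixel(bitrate_mbps, width, height, eyes, fps):
    """Average encoded bits available per pixel, per frame."""
    pixels_per_second = width * height * eyes * fps
    return bitrate_mbps * 1_000_000 / pixels_per_second


# Assumed values: "8K by 7K" read as 8192 x 7168 per eye (not confirmed),
# two eyes in one stream, 90 frames per second.
WIDTH, HEIGHT, EYES, FPS = 8192, 7168, 2, 90

for rate in (30, 50):
    bpp = bits_per_pixel(rate, WIDTH, HEIGHT, EYES, FPS)
    print(f"{rate} Mbps -> {bpp:.4f} bits per pixel")
```

Under those assumptions, 30 Mbps works out to roughly 0.003 bits per pixel, which gives a sense of how hard the encoder has to work to avoid artifacts.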

Iain: MV-HEVC was originally supposed to allow for some good efficiencies between compressing the left and the right eye together. So you’re taking advantage of that, I’m guessing?

Andrew C.: No. We really wanted to be cross-platform. This release is actually using our cross-platform technology, which allows us to do hardware decodes on the Quest as well as upcoming Android XR. So we will take advantage of MV-HEVC very shortly, and we’ll have some announcements there, which will provide us with that additional 30% gain in bit rate efficiency.

Production issues on set

Iain: And what’s your lighting setup?

Tom: We had a full lighting truck. And as the crew said, we used literally every light on the truck. But everything sat behind the camera. We just essentially had a wall of light behind the camera, because at 90 frames per second you need a lot of light.

It had been cloudy all day and the diffuse light in the room was perfect. And then when the band arrived, the sun really came through the windows. It was a bit problematic at first, but then settled down.

But basically, we had this enormous amount of light. And, you know, we were monitoring things and, though the director was sometimes saying, “can we get it lighter than this?” I think in the end, it turned out that we had the right amount of light. I think everything looks really well lit. And it looks natural. It doesn’t look artificial. I mean, it may look like there is light in the room, but I think it’s a good character of light in that room.

Yeah, it took a lot, a lot of light.

Iain: With audio recording, is it just the mics that were visible in shot or even more?

Tom: So we took this three-mic approach. It’s a traditional bluegrass miking configuration that is an outgrowth of the single mic in radio that they used to use a long time ago. This has a history with bluegrass music, and so most people who are really entrenched in bluegrass know how to play to a mic configuration like that, whether it’s one or three mics.

I chose that because I wanted that choreography of them moving in and out, you know, if it’s spatial, to have some dynamic movement and so that forms the core of the recording. Also, those are great Neumann mics that we’re using, getting a good, honest acoustic sound and what it sounded like in the room, that takes us a long way there.

There were spot mics on the stand-up bass and the fiddle that attached to the bridge of those instruments, but they were microphones, they weren’t pickups. Again, to get a more authentic tone. You’ll notice every instrument has cables hanging off of it. All of them are used to playing with pickups. In my estimation, pickups just make the sound less authentic, and so those were really just there in case some disaster happened. We might use them occasionally to just spot-enhance something or support something. But the general tone was to capture that acoustic quality with the mics, not the pickups.

We wanted to take advantage of spatial. And so that three mic setup didn’t afford us a lot of opportunity in terms of what to do. Michael Romanowski, who did the Atmos mix, is a good friend of mine, so I went and saw him and said, hey, this is what we did. What could we do to help give a good sense of the space? And he took a crack at that. I think it’s quite a nice Atmos mix that he did.

He has now bought a Vision Pro because he wants to start doing more of this stuff. So he and Brian Walker, who is our recordist, the three of us are now talking about how we are going to capture a better spatial representation in the recordings going forward.

Post-production

Iain: OK, great. And just briefly, can you share anything about the software you’re using in post for mixing audio and editing video?

Tom: All of us used different things. Brian did the production recordings in Pro Tools. He and I both were doing preliminary stereo mixes. I work in Universal Audio’s Luna system. And Michael uses Nuendo from Steinberg. So in the end, I took Michael’s Atmos mixes, brought them into DaVinci Resolve because there’s things that crossfade in the video.

I could lay out the whole mix for the video in Resolve.

Iain: In terms of video, is it mostly Resolve?

Andrew C.: Exactly. Our intention was to keep as much of a traditional video post-processing workflow as possible. Tom actually did the entire edit and it was done using very traditional video editing style. Then he handed it to us and we just pretty much exported it. I built out an entire editor in headset to do mastering on the Vision Pro — well final coloring, not the actual grading.

So final coloring and convergence, the stereo stuff that you need to do was done inside the headset with our custom editor.

Iain: There have been a few pushes towards VR in the past. I guess we’re still at a stage where the audience is still small, but the quality is finally there.

Andrew G.: I think quality is absolutely a huge thing, certainly. I mean, for me, when I put on the Vision Pro for the first time, it was the first time ever being in a headset where I am not taken away from my existing environment. I think that’s so important because every other headset, there’s an occlusion, you’re immediately disconnected from your room and from your space.

What Apple has done is essentially redefine the entire UI of spatial computing. I mean, they’ve even put out new terms that are already dominating between immersive and spatial. It’s a credit to them from the design standpoint, the format that they’ve created for this.

Planning an immersive project

Iain: If someone was considering an immersive project, what would you recommend to them? What should they be filming? Music and like these live acoustic performances seem like a great strategy. What other things would work well for an immersive project, you think?

Andrew G.: I think in general, experiences and environments and moments to share that need to be captured in time so they’re not lost, or the idea of some special event or something that other people can’t physically travel to, or in a sports example or concert stadium, have a perspective and experience of the performance that you couldn’t replicate with the most expensive seat in the house.

So it’s not just even about being there, it’s about more than that. I think it’s important to always talk about “Why immersive?” or “Why VR?” and that it shouldn’t just be essentially the same experience in 2D.

The sense of having agency of where to look is fundamentally different. In a visual storytelling perspective of traditional filmmaking, where it’s a very confined frame and you’re locked, you don’t have that agency, and so you have to naturally motivate people in a different kind of visceral way. I think you’re engaged with other elements of the story or environment.

We talked about the existing Apple Immersive content.

Andrew G.: Man vs. Beast I thought was especially interesting for those kinds of shots compared to the other Apple immersive pieces because it seemed more vertically cropped at times. And I found that interesting.

I think my favorite part about Man vs. Beast is how it’s one of the first Apple Immersive pieces I’ve seen where they do a very good job of composing the scene with the natural element lighting and the environment and blocking the key characters. They’re actually doing a sort of spatial, cinematographic composition, in a way, they’re sort of using the environment.

Blocking is a part of the cinematography in immersive. Because it is spatial, it is, in part, about proximity, and positioning. It’s debated how well the 3D rectilinear type shots are working there and everyone has strong opinions about that, but I do think from a blocking and compositional perspective, some nice creative choices were made.

Iain: Could you, and you may not be able to, could you give a rough idea of cost? What kind of budget do people need to be considering?

Andrew G.: So with the caveat, of course, that we did launch Prima as a platform and so we are looking to work with the most elite types of productions and creative. It doesn’t always need to be money, but generally that helps, certainly from production dollars.

It all depends on the scope, of course, but assuming just the cost for the camera, the techs that come along with it, and some assumption of travel probably, although that’s variable. You’re looking at… It’s in the five figures, just for that small single camera team. And then, in addition, you have to fully manage a shoot.

Tom: Yes, it is generally the cost of a typical shoot, just replacing the camera and camera support costs with what Immersive Company provides.

Looking forward

We talked about the potential audience.

Tom: I mean, half a million is a lot of people, but, it is not like cell phone or PC numbers. I did think very much about doing a scaled down production so that we have something sustainable that we could do as the platform is growing. And so if people want to keep doing this, which I do, I’m definitely planning for shoots that are modest, for now. For music, I feel what we’re doing with Sessions, is a completely new way for people to experience music. If I was not doing it, I would want somebody else to be doing it, because I think it’s a great way to experience music.

Iain: What do you see of the future for immersive and the Vision Pro?

Tom: It’s kind of obvious there will be another Vision Pro that is at a more aggressive price point, which the market really needs. I would assume that of the features that get adjusted to reach that price point, that our immersive videos would still look fantastic.

Then there’s also non-Apple stuff being discussed. Google made their announcement. There’s the Samsung. So, you know, I think that Apple has set this bar by which immersive video is going to be an experience that we will all be having in the future.

And the question is, is it a sort of longer growth over the course of two or three years or is there a triggering event, like perhaps a weekly immersive sports event, where all of a sudden the sales just like skyrocketed because everybody had to have whatever that thing was. And so those kind of things will happen. But while we are in this ramp, this slow and steady ramp, the new cameras come out. From what I’ve heard, there’s going to be like a hundred of them. That’s a lot of opportunity for people to be making immersive video.

I suspect that, particularly the second half of 2025, we will start to see a lot more content, which is exciting for Vision Pro owners because I think that’s been one of the big complaints in the past year. And I think people will experiment. They’ll do stuff that none of us have thought about.

I do believe there is going to be a catch point with immersive video where all of a sudden it becomes feasible for people to do larger scale productions.

Andrew C.: Well, we’re in it for the long game. That’s for sure. We don’t expect to be millionaires overnight. We are investing in the future and we have a very compelling roadmap for the next year that we’re excited to start sharing with folks.

I suggested getting a big star like Billie Eilish or Taylor Swift to do a session.

Tom: I don’t imagine only doing Sessions videos in the future. So I think there is room to do other stuff. But I agree with you, there will be immersive videos with big name artists.

It’s interesting you mentioned Billie Eilish because I think she is someone who could envision something in a space like this. But some people are really about the extravaganza. And I think it is a very different medium and there has to be some sensitivity to what works and what doesn’t in this new environment.

I think it’s one of the reasons that the three of us were able to strike a chord with Sessions. We thought a lot about what would actually make sense in immersive. We didn’t just go find some people who happen to be shooting some stuff and say, “Hey, use this camera instead.”

We have to find those artists that have a sensitivity [to the medium]. And I think some of that is you have to actually wear the Vision Pro for a while and see what the state of the art is and kind of imagine what does make sense and what doesn’t.

Iain: I agree that it doesn’t have to be acoustic, but I think maybe it does have to be intimate.

Tom: I do think that intimacy is a big part of it. So like, you know, Taylor Swift’s “Eras Tour”, that is an extravaganza. Like I don’t imagine you putting an immersive camera out there and squeezing all of that spectacle down to this space… I don’t yet know how that would work.

The other thing that’s tricky with live music is the visceral impact of the power of the sound in a live space. You can’t move enough low frequency sound out of the little speaker that’s on the headset. The AirPods help, but not everybody’s going to use those. Currently, the visceral experience of a full volume live show is difficult to recreate in VR.

And so again, the way in which you understand immersive, you have to think about things like the difference between the effect of live audio versus a more intimate setting.

The importance of eye contact and visual clarity

Tom: Well, it’s interesting, when we were shooting AJ Lee and Blue Summit, we did mention to the band that these lenses are the eyes of the people who are watching you. And I didn’t know that AJ would take that as “I’m going to sing every song right to you.” We didn’t ask her to do that, but that was a choice she made as a performer.

There was some concern that maybe it might be too much, but I feel like the consensus has been that it’s a really engaging quality, and in fact, that was the thing with Alicia Keys in that first moment from the demo. She was singing to me and it blew my mind.

Iain: I love it. I think most people love it, but my wife couldn’t look at her, it was too much. But there’s other stuff to look at. And it’s all so crispy, crisp and clear and sharp. And I’m guessing that’s just down to the camera?

Andrew G.: There’s no special trick to keeping everything in focus. Just, you know, tight aperture. Good camera practices. Well, you’re definitely shooting at a hyper-focal [aperture].

But you want to shoot at a 360 degree shutter and that helps. It’s a hyper realism. It also helps with light. But, you know, there’s a few things that are counterintuitive or seem a little bit different in VR or immersive from traditional.

It has to do with the relative motion blur per frame as well, because the amount of motion blur per frame feels essentially more similar to a 180° shutter at the lower frame rate. When we’re at 60 frames [or] 90 frames, and that’s your playback speed as well, you capture and play back with a 360 degree shutter.

I’ll throw another thing at you. At least for an American standard of 60 hertz with our electrical grid, now that we’re bumping to 90 frames, you really have to be careful about the lighting.

Some people say, oh, you’re at 90 frames, you’re cutting out even more light. You got to pump all this light in. From 30 to 60 fps is, you know, one stop. But from 60 to 90 is, like, a half stop, right? You’re not losing that much more light, and you gain it back with a 360 shutter. But watch out for flickering.
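The arithmetic behind those stops can be sketched in a few lines. This uses the standard relationship between shutter angle, frame rate and exposure time (exposure = angle / 360 / fps) and treats one stop as a doubling or halving of exposure time. The function names are illustrative, not from any camera SDK.

```python
import math
from fractions import Fraction


def exposure_time(shutter_angle, fps):
    """Exposure time per frame in seconds for a given shutter angle and frame rate."""
    return Fraction(shutter_angle, 360) / fps


def stops_between(old_exposure, new_exposure):
    """Change in light per frame, in stops (negative means less light)."""
    return math.log2(float(new_exposure / old_exposure))


# Same 180 degree shutter, rising frame rate.
print(stops_between(exposure_time(180, 30), exposure_time(180, 60)))  # 30 -> 60 fps
print(stops_between(exposure_time(180, 60), exposure_time(180, 90)))  # 60 -> 90 fps

# Opening the shutter to 360 degrees at 90 fps, compared with 180 degrees at 60 fps.
print(stops_between(exposure_time(180, 60), exposure_time(360, 90)))

# A 360 degree shutter at 60 fps exposes for as long as 180 degrees at 30 fps.
print(exposure_time(360, 60) == exposure_time(180, 30))
```

Going from 60 to 90 fps at the same shutter angle costs a little under 0.6 of a stop, and doubling the shutter angle gives back a full stop, so 90 fps at 360° actually gathers slightly more light per frame than 60 fps at 180°.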

All the flickering issues are going to be introduced in this switch from 60 to 90. So really be careful with your lighting.
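One way to see why 90 frames is riskier is a simplified model, not something described in the chat: lights on a 60 Hz grid pulse at twice the mains frequency (120 Hz), and a frame averages out that pulsing only when its exposure spans a whole number of pulses. The check below is a sketch under that assumption. Real fixtures vary, and many modern LED units are designed to be flicker-free regardless.

```python
from fractions import Fraction


def flicker_cycles_per_exposure(fps, shutter_angle, mains_hz=60):
    """Number of light pulses captured in one exposure (simplified model)."""
    flicker_hz = 2 * mains_hz  # light output peaks twice per mains cycle
    exposure = Fraction(shutter_angle, 360) / fps
    return exposure * flicker_hz


def is_flicker_safe(fps, shutter_angle, mains_hz=60):
    """True when each exposure covers a whole number of light pulses."""
    return flicker_cycles_per_exposure(fps, shutter_angle, mains_hz).denominator == 1


for fps, angle in [(30, 180), (60, 360), (90, 360), (90, 270)]:
    cycles = flicker_cycles_per_exposure(fps, angle)
    print(f"{fps} fps at {angle} degrees: {cycles} pulses per frame, "
          f"safe={is_flicker_safe(fps, angle)}")
```

In this model, 60 fps with a 360° shutter captures exactly two pulses per frame, while 90 fps with a 360° shutter captures one and a third, so brightness can drift from frame to frame unless the lighting itself is flicker-free.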

Conclusion

To close out the chat, we talked about 3D lenses and shared some 3D photos, which looked terrific. As an aside, if you ever get a chance to join a Spatial FaceTime, do it. Though it’s not common yet, it’s easily the best way to talk to a group of people short of actually being there. And be sure to high-five whomever you’re talking to.

Keep a keen eye on Immersive Company, on The Spatialists, and on Prima Immersive to see what comes next. This won’t be the last you see or hear of them, and I can’t wait for clips with more bands, more styles of music, and more cameras. The future of immersive is bright.
