For many users, the topic of performance comes down to the question of “which component should I buy”, or “I have $xxx, how should I spend it?” While it’s reasonable to assume that the more money you spend, the higher the performance of whatever it is you buy, that’s not always the case. It’s why I’ve repeatedly used the word “complicated” whenever it comes to performance.

In parts 10 and 11, we looked at the meteoric rise of the GPU as the main focus of current graphics performance. But while it was a lot of fun to look back at the early days of computer graphics and see where the GPU came from, simply reading a good story doesn’t exactly help us when it comes to shopping.

One of the motivations behind writing this series was seeing people make what I considered to be poor purchasing decisions. Way back in part one I suggested that in 2012 an iMac and 3 Mac Minis would be a better investment than a single, fully specced Mac Pro. While I may not be right, working towards an explanation of why I think that has taken us through an additional eleven articles and close to 100,000 words.

I hadn’t originally planned this particular article, but I can’t ignore how I keep using the word “complicated” without elaborating. Additionally, I hadn’t planned on paying too much attention to GPUs simply because After Effects didn’t really utilize them. However, because of the costs involved and their dominance of recent headlines, I now realize that this is a very significant point that deserves to be examined – because GPUs are one of the easiest avenues for overspending.

So before we draw a line under the history of the GPU and return our focus to After Effects, there’s one more thing to consider – the Quadro conundrum.

NB. While Ati / AMD have their “FirePro” range, equivalent to nVidia’s Quadro, the reason I’m specifically mentioning the Quadro line is because of the unusual situation it had with Apple computers in the 2000s (see down below). However most of what follows also applies to AMD cards. It just gets really repetitive to keep typing GeForce / Radeon and Quadro / FirePro.

In a general sense, the overall gist of the article applies equally to AMD as well as nVidia, and none of this is directly relevant to After Effects!

Split personalities

Part 10 ended with the launch of the GeForce 256 in 1999, and in Part 11 we looked at how it was the first step towards the monster GPUs that we have today.

The GeForce 256 was a significant card for several reasons that were mentioned in the previous articles: it was the first card to be marketed as a “Graphics Processing Unit”, and it was the first consumer video card to feature hardware transform and lighting.

But there’s yet another significant claim to fame for the GeForce 256 that still echoes today: it was the first card bearing the “GeForce” brand, which is still used by nVidia for its line of gaming products. But in 1999 nVidia didn’t just release the GeForce 256 for gamers – they also launched a separate product range aimed at graphics professionals, which they named “Quadro”.

Quadro confusion

More than twenty years since the first Quadro card was launched, the exact differences between a GeForce and a Quadro video card are still being Googled, debated and discussed on forums today. Informally, no-one I asked could really offer any authoritative information on what the difference is, and Google searches just revealed twenty years of the same questions and the same vague answers. It also appears that the differences between the two product lines have evolved, along with the software they’re aimed at. So advice that may have been correct in 2005 or 2010 no longer holds true in 2020, or was rendered obsolete by various software developments.

The whole GeForce vs Quadro / Radeon vs FirePro thing is not something I have researched extensively, however there is one thing I can say for sure:

Anyone and everyone who’s purchased a Quadro card specifically to make After Effects faster has wasted their money.

And since, ultimately, the question of performance comes down to value for money, I guess we’d better scratch the surface of this slightly annoying topic.

Playing hardball with softimage

If you begin with a simple Google search for the difference between a GeForce and a Quadro GPU, you’ll quickly see the same vague answers pop up: GeForce cards are for gamers, Quadro cards are for professionals. But what does that actually mean? If you’re reading this then you’re almost certainly a graphics professional, so should you be buying a Quadro card? Well – skip to the end – No. You can stop reading here if you want to, I won’t be offended.

But let’s look back at how the split in product lines happened.

In Part 10 I outlined that a lot of pioneering 3D work was done in the field of CAD and engineering. Early 3D graphics were being created on high-end, expensive computers made by companies such as Silicon Graphics, and even Cray – the most powerful supercomputers in the world at the time. Part 10 was the story of a few SGI employees who left their exclusive world to bring 3D graphics to cheap, desktop PCs. If you missed it, it’s a great read, but the takeaway point for this article is the cost of those early 3D workstations. If you worked in CAD or similar engineering fields, then suitable 3D workstations started at over $100,000 – and if you wanted to spend half a million dollars on a single computer then Silicon Graphics would happily take your money. There’s not a lot of detailed information about the prices of these high-end 3D machines, but one WIRED article on ILM from 1994 mentions that 100 workstations cost $15 million, and that averages $150,000 per computer.

As the end of the 1990s approached, the desktop video revolution was in full swing, and it was pretty hard to ignore the rapid development of 3D graphics on desktop PCs. While this was initially being driven by games, it was also clear that it wouldn’t be long before other, more professional applications would make the transition from expensive workstations to cheap PCs. And one significant player already had, signaling a groundshift in the visual FX industry.

In 1994, two years before Quake appeared and prompted the first 3D cards for PCs, Microsoft quietly purchased a software company called SoftImage. SoftImage had authored a pioneering 3D animation app that only ran on Silicon Graphics workstations, and it retailed for $30,000. It was the 3D package used for visual effects on Jurassic Park. Next time you complain about the cost of the Adobe Creative Cloud subscription, spare a thought for the time when working with 3D graphics meant you had to pay $30,000 for software to run on a $150,000 machine.

I don’t know whether Microsoft’s purchase made many headlines in 1994, or what people in the industry thought of the move. At that time, 3D animation was still a very niche industry – it would still be roughly two years before Toy Story was released. Terminator 2 and Jurassic Park had ignited interest in using computers for visual effects, but despite the impact these films had, there was a surprisingly small amount of 3D animation in them. The total duration of all the 3D animated dinosaurs in Jurassic Park was approximately 4 minutes, while Terminator 2 had roughly 3 ½ minutes of CGI. Pixar had produced some groundbreaking, Oscar-winning short films that were entirely computer animated but they were, well, very short.

Toy Story was released in December 1995 and suddenly 3D animation was a hot topic. A number of tech articles appeared that noted how demanding 3D rendering was, and detailed the long render times involved even on expensive hardware. Toy Story was animated on Silicon Graphics workstations, but the render farm was comprised of 117 Sun computers, which used Sun’s own “Sparc” CPUs.

So in January 1996, riding high on the success of Toy Story, 3D animation looked like becoming a boom industry – but for everyone involved, it was a very expensive industry.

Only one month after Toy Story made history as the first feature-length computer animated film, and smashed the box office in the process, Microsoft rocked the nascent world of 3D animation. SoftImage, which had previously only run on Silicon Graphics machines – and even then, only if you could afford its $30,000 price tag – was released for Windows NT. If that didn’t grab your attention, then the price drop would: Microsoft slashed the price down to about $8,000.

Professional visual fx software was now available for everyday, desktop computers. The barrier to entry for professional visual fx hadn’t just been lowered, it had been demolished. With the release of SoftImage on Windows NT, along with the unprecedented price drop, Microsoft had sounded the death knell on the high end workstation market.

Market Segmentation

Actually that’s a slight exaggeration. Just releasing a 3D app for cheap desktop computers didn’t change the industry overnight (it would happen slowly over the next ten years). Dropping the price from $30,000 to $8,000 certainly raised eyebrows, and pissed off everyone who’d already bought SoftImage, but there was still one thing to remember – in 1996 the average Windows computer was still pretty crap. Those expensive Sun and SGI workstations didn’t cost 30x more than a typical PC for nothing. Performance-wise, computers from Silicon Graphics, Sun, DEC and everyone else in that market could run rings around the average Pentium desktop running Windows. In 1996, the average Windows machine would have 4 or 8 megabytes of RAM, while the SGIs and Suns had between 192 and 384 megabytes.

But it wasn’t difficult to look to the future and see how quickly that would change, especially with the rapid development of 3D games, and 3D graphics acceleration. In only a few short years, 3D graphics and 3D games on home computers had evolved from being virtually non-existent to becoming a new boom industry. While there were plenty of people who didn’t see the desktop PC as a threat to high-end workstations for visual FX, there were just as many who could see how quickly desktop PCs were developing, and that the value proposition was rapidly changing.

It wasn’t just Microsoft and SoftImage. Autodesk released 3D Studio Max version 1 in 1996, and Maya version 1 was released in 1998 – both for Windows NT. While 3D Studio Max had an existing low-budget heritage from older DOS computers, Maya was the brand new product from a company called Alias, who were a subsidiary of SGI. Their existing software products included 3D animation packages that only ran on Silicon Graphics workstations, and they’d been used to create the visual fx in a number of Hollywood blockbusters including Terminator 2. The development of Maya by Alias was a direct response to Microsoft’s purchase of SoftImage, and it was hugely significant that a company which had previously written software that only ran on SGI workstations had now developed a brand new 3D package for Windows.

OpenGLing the doors

In Part 10 we looked at the importance of the OpenGL language to 3D graphics, and saw that the purpose of 3D graphics cards was to take well known, existing algorithms – including OpenGL commands – and process them as fast as they can. When John Carmack ported Quake to OpenGL, he was using the same graphics language that had been developed for CAD and other high end 3D applications. When Quake GL was released, there wasn’t a single graphics card for Windows machines that could accelerate OpenGL, although 3dfx came up with a GL patch for their Voodoo cards within a month.

In 1996, Voodoo cards were flying off the shelves so gamers could play Quake, but there was clearly the potential for software developers to write 3D software for CAD, animation and visual fx that also utilized the power of these new 3D accelerators, running on everyday Windows machines.

But although games like Quake and apps like SoftImage and Maya shared common ground through their use of 3D graphics, they were still very different industries with different priorities.

Splitting the difference

It’s one thing to use terms like “image quality” and “high end”, but another to strictly define what they mean. However when it comes to the differences between rendering 3D for CAD / engineering and rendering 3D for games, there are a number of technical distinctions that can be made. Drawing a simple line is one example – 3D gaming engines aim to draw that line as fast as possible, and that means no anti-aliasing. Lines can look jaggy as long as they’re drawn super quickly. But CAD users require nice, smooth, anti-aliased lines. They don’t need to be drawn 30 times a second, but they need to look good.
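To make the jaggy-versus-smooth distinction a bit more concrete, here is a minimal Python sketch. It is only an illustration and not how any particular GPU or driver draws lines: the function names and the tiny grid are made up for this example, and it assumes a line starting at the origin with a slope between 0 and 1. The anti-aliased version borrows the basic idea behind Xiaolin Wu’s line algorithm, splitting each column’s brightness between the two pixels the line passes between.

```python
import math


def draw_aliased(width, height, slope):
    """One fully lit pixel per column: cheap, but the line looks jaggy."""
    grid = [[0.0] * width for _ in range(height)]
    for x in range(width):
        y = int(slope * x + 0.5)  # snap to the nearest row
        if 0 <= y < height:
            grid[y][x] = 1.0
    return grid


def draw_antialiased(width, height, slope):
    """Share each column's brightness between the two rows the line straddles."""
    grid = [[0.0] * width for _ in range(height)]
    for x in range(width):
        y = slope * x
        lower = math.floor(y)
        frac = y - lower  # how far the line sits above the lower row
        for row, weight in ((lower, 1.0 - frac), (lower + 1, frac)):
            if 0 <= row < height and weight > 0.0:
                grid[row][x] = weight
    return grid


if __name__ == "__main__":
    for name, fn in (("aliased", draw_aliased), ("anti-aliased", draw_antialiased)):
        print(name)
        for row in fn(8, 5, 0.5):
            print(" ".join(f"{value:.1f}" for value in row))
```

The aliased version writes one pixel per column and moves on. The anti-aliased version touches up to two pixels per column and has to work out a brightness for each, which is the kind of extra work a gaming engine would rather skip and a CAD user would rather have.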

On a more technical level, CAD modeling requires support for “double precision floating point” values, while gaming engines typically don’t use double precision values unless they need to. This might sound overly technical, but the way gaming engines calculate depth means that the further away objects are from the camera, the less accurate their position is. This is fine for games, where the things closest to you are the most important, but not so good for an engineer designing complex machinery.
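A small Python sketch can show why precision matters as numbers get bigger. This is a general illustration of floating point behaviour, not a model of any specific game engine or depth buffer (real depth buffers have their own, non-linear precision quirks), and the helper names are invented for the example. It measures the gap between one representable number and the next, for 32-bit “single precision” and 64-bit “double precision” values.

```python
import struct


def to_float32(x):
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def float32_spacing(x):
    """Gap between positive x (as a 32-bit float) and the next larger 32-bit float."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    next_up = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return next_up - to_float32(x)


def float64_spacing(x):
    """Gap between positive x (as a 64-bit float) and the next larger 64-bit float."""
    bits = struct.unpack("<Q", struct.pack("<d", x))[0]
    next_up = struct.unpack("<d", struct.pack("<Q", bits + 1))[0]
    return next_up - x


if __name__ == "__main__":
    for distance in (1.0, 1000.0, 1_000_000.0):
        print(distance, float32_spacing(distance), float64_spacing(distance))
```

At a value of 1.0 the single precision gap is tiny, but at one million it has grown to 0.0625, so if the units were millimetres, anything smaller than a sixteenth of a millimetre would simply be rounded away. The double precision gap at the same value is still around a ten-billionth. That loss of resolution at larger values is harmless for a distant mountain in a game, and a real problem for an engineer.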

Gaming engines prioritise speed over all else, needing to render an image as quickly as possible – even if the image ends up with artifacts because some objects couldn’t be rendered in time. In general, CAD, engineering and non-realtime 3D renderers don’t have a time limit, and every stage of the rendering process can wait until the previous steps have been completed.

In addition to technical details such as “double precision floating point” support, a more loosely defined difference is the requirement for stability and consistency. If someone is playing a modern 3D game and the frame rate is around 60 frames per second, then any glitches or rendering errors within a single frame probably won’t even be seen. But professionals require consistency across renders, and have a lower tolerance for glitches, bugs and crashes.

nVidia saw the potential to cater to these two separate markets with the same hardware, i.e. the same chips. By using different firmware and software drivers, they could manufacture one set of graphics chips but produce two different products – one aimed at realtime 3D for gamers, and the other aimed at non-realtime 3D for professionals.

nVidia named their gaming cards “GeForce” and the professional cards “Quadro”, and launched them together in 1999.

Quadro yo money…

Ever since the two product lines were launched in 1999, there’s been a lot of speculation, misunderstanding and general confusion about the difference between them – mostly due to the large difference in price – and people have been searching for answers and wondering which one to buy.

nVidia’s pricing reflected the differences between these two markets. The competition amongst 3D cards for gamers kept prices in the hundreds of dollars. Companies like nVidia, Ati, S3 and 3dfx weren’t just competing against each other, they were also competing against low cost games consoles like the Playstation and the Nintendo 64.

But the competition amongst professional software was very different. The market leaders didn’t cost hundreds of dollars, they cost hundreds of thousands of dollars. If nVidia were going to release 3D cards that were competing against Silicon Graphics and Sun workstations, their target market could afford to pay more and still feel like they were getting a bargain.

Consequently, the GeForce range of gaming cards has always been significantly cheaper than the Quadro range of “professional” cards. The same is true for Ati / AMD’s Radeon cards compared to their “FirePro” range.

Getting what you paid for

Much of the confusion surrounding the differences between the two product ranges comes down to the large price disparity, especially because of persistent rumors that the actual hardware is the same.

The gaming market, understandably, equates a higher price tag to higher frame rates. From the moment that Quake GL was launched, the purpose of 3D graphics acceleration was to improve frame rates – faster video cards meant faster renders and higher frame rates.

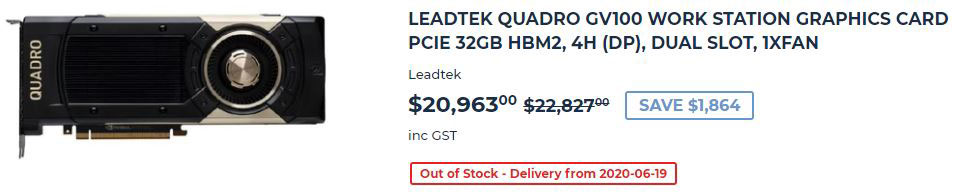

But the Quadro range of GPUs, even though they cost more – a LOT more – were usually slower than their GeForce equivalents. Any gamer (and there have been many) who spent five times more money for a Quadro card expecting a huge leap in performance would only be disappointed.

Very disappointed.

Spot the difference

So what is actually the difference, aside from a vague marketing reference to “professionals”? If you Google the question, you’ll disappear down a rabbit hole made from 20 years of internet debate, and we know how that can go… There’s been so much written about this that it almost feels like a waste of time going over it – except I keep thinking of people who’ve spent thousands of dollars expecting After Effects to suddenly be magically fast.

We can separate a graphics card into three main areas: the processor on the board that is doing all the work, the other hardware components on the board, and the firmware / software drivers.

In the same way that Intel regularly release new models of their CPUs, nVidia and AMD regularly release new families of GPU processors. While the retail products are marketed with the names GeForce and Quadro (AMD use Radeon and FirePro), internally the companies have different codenames for each model of chip. Each new chip design can be referred to as a “microarchitecture”, and they’re usually updated every few years. nVidia have so far named theirs after famous scientists. In 2006 they named their design “Tesla”, followed by “Fermi”, “Kepler”, “Maxwell”, “Pascal” and “Volta”, before their latest range was named “Turing”.

In Part 5, we saw how confusing Intel’s range of CPUs is, and that there are over 200 options and models available. nVidia and AMD don’t have 200 options for each of their chips, but they do make them with a few variations. This means that the cheapest, entry level graphics cards will not have exactly the same chip on them as the most expensive. They will, however, belong to the same family. For every Quadro video card, there will be an equivalent GeForce card that uses the same chip. The Quadro card will cost more. This is the same for AMD and their Radeon / FirePro line.

This is possibly where some confusion around the product differences comes from. In Part 5, we saw that the main processor in a computer – the CPU – is often the most expensive part of the whole machine. To many people, the CPU IS the computer, and many computers have been named after the CPU. So the idea that nVidia could release two products based on the same GPU chip, but at wildly different prices, seems counter-intuitive. What makes it seem even more absurd – to gamers – is that the more expensive Quadro cards are slower.

Because the whole point of a gaming card is to render as fast as possible, the GPU chips on GeForce cards are clocked at the highest possible speed (we looked at megahertz / gigahertz in part 5). But – just as was the case with the Pentium 4 – the higher the clock rate, the more heat is produced. Heat can lead to instability and can also reduce the lifespan of components, so it’s not an insignificant issue.

The Quadro line of cards, while being significantly more expensive than their GeForce equivalents, actually run at lower clockspeeds to reduce heat production, and increase reliability and longevity. So when it comes to 3D gaming, Quadro cards aren’t faster even though they can cost substantially more. They’re just not designed for speed. In theory they should last longer, and although I haven’t seen anyone specifically test this, the warranty on Quadro cards is longer than for GeForce cards.

Looking beyond the main GPU chip, probably the most visible difference between GeForce and Quadro cards is in the memory. Generally, Quadro cards offer the option of WAY more RAM than GeForce cards, and they use more expensive ECC (error-correcting) memory chips. As a rough indication, the latest GeForce gaming cards from nVidia can be bought with up to 8 GB of video RAM, while Quadro cards have options up to 48 GB. Looking back at older product lineups and comparisons, the Quadro range has always offered models with MUCH larger amounts of RAM than the GeForce range.

When I asked a number of co-workers and industry colleagues about the differences between the two product ranges, the amount of memory on the boards was the only one that they were immediately aware of. If you absolutely need a large amount of video memory, then the Quadro is for you.

Soft options

While clockspeeds and ECC memory are tangible differences between the two ranges, the real difference is in the software. The drivers for the card provide a bridge, or translation, between the applications running on the computer and the chips on the card that do the hard work. Most of the differences between Quadro and GeForce cards come down to the drivers.

The drivers for GeForce cards and Quadro cards are different. It’s not as though the GeForce drivers are just cut-down, or intentionally crippled versions of the Quadro drivers. It’s not like some After Effects plugins, where cheaper versions only work up to HD resolution and you need to pay more if you want to work at higher resolutions.

The drivers for the GeForce and Quadro ranges are developed by two different and separate teams, each with their own priorities. The GeForce team write drivers to get the highest possible frame rates in games, while the Quadro team write drivers to cater for their “professional” market. In the same way that software prices vary for all sorts of apps, nVidia evidently value the software they create for Quadro cards much more highly than the GeForce range, with AMD feeling the same way about their FirePro products.

nVidia have a certification program for their Quadro drivers to help software developers with stability, bug fixes and updates. While I haven’t looked into it, there are claims that some 3D software developers ignore bug reports and requests for technical support if the user doesn’t have a Quadro card.

One further point which may be significant is that the Quadro range of cards is only manufactured by nVidia themselves. While any 3rd party manufacturer can buy chips and components from nVidia and release their own range of GeForce cards, the Quadro range is strictly in-house.

Many of these areas have been the subject of much internet debate, some of it valid and some of it simply wrong. It’s common to find claims that Quadro cards and GeForce cards have identical hardware, and that some secret hidden file somewhere disables various features of the card, depending on which model you’ve bought. That’s just wrong. If you dig around long enough, you’ll find people who’ve hacked cheaper GeForce cards to run Quadro drivers, so 3D software acts as though it’s running on a card that costs 10x more, but that’s not really different to people running Hackintoshes.

I have no special insight into how nVidia work internally, and all of this information is just a collation of various Google searches. One article I read (from a reputable engineer) suggested that drivers for Quadro cards have roughly two years more development and testing invested in them before they are released, to help guarantee stability and reduce the number of bugs. GeForce drivers, on the other hand, tend to be updated much more frequently and with far less testing to cater for the gaming market’s never-ending desire for more performance.

But if you read user forums you’ll find just as many people complaining about bugs and glitches with their Quadro cards as you do with GeForce cards, so honestly – who really knows. I’m not defending the high price of Quadro cards, nor am I suggesting they offer value for money. But I do think it’s silly for people to claim that GeForce and Quadro cards are identical except for some “secret” setting that disables certain features.

Right up to the end of the viewport

For all of the differences between GeForce and Quadro cards, there’s one that is actually rarely mentioned – and that’s what they’re actually rendering, or more precisely, what they’re accelerating. From my perspective, as a non-3D artist, this feels like a very important distinction and yet I haven’t come across anyone else who’s emphasized this before.

This is something that has changed over time, which brings us back to the “complicated” tag. For the first (roughly) ten years of Quadro cards, it was relatively simple to understand but then – thanks to the developments outlined in Part 11 – it got messy.

Part 1: The first 10 years

In Part 11 I emphasized that there’s no one, single “best” way to do 3D. The way that 3D games are rendered is totally different to how 3D for visual fx are rendered.

When GeForce cards are rendering a game, the card is rendering the “final” output, in real-time. The monitor is plugged into the graphics card, what the graphics card renders is what the player sees on the monitor. That’s what they’re designed to do. Simple!

A Quadro card, by comparison, is designed to accelerate the viewport of the 3D software app. It’s speeding up the user interface. The actual “final” render is something separate. If you’re a Max, Maya or Cinema 4D user and you produce your final outputs using a commercial renderer such as Vray, then it doesn’t matter what GPU you have at all. Quadro or GeForce, Radeon or FirePro – the “final” rendering is purely CPU based.

This distinction is the same as the distinction between real-time gaming engines such as Unreal and Unity, and non-realtime renderers like Vray, Arnold, Renderman and Corona. If you’re working and animating in Maya, Max or Cinema 4D then you spend most of your time looking at the viewport – the user interface. This is what the Quadro range of cards was designed to accelerate. This is why they support such large amounts of RAM, so that large objects and scene files can be loaded onto the graphics card and then be processed directly by the GPU.

When nVidia refer to “professional” apps in their marketing, they’re referring to a range of 3D applications that are certified to work with Quadro cards. In some cases, this includes specific drivers for each 3D app that can be installed if you have a Quadro card, and that accelerate viewport performance – but only viewport performance. The “final” render is still processed by the CPU.

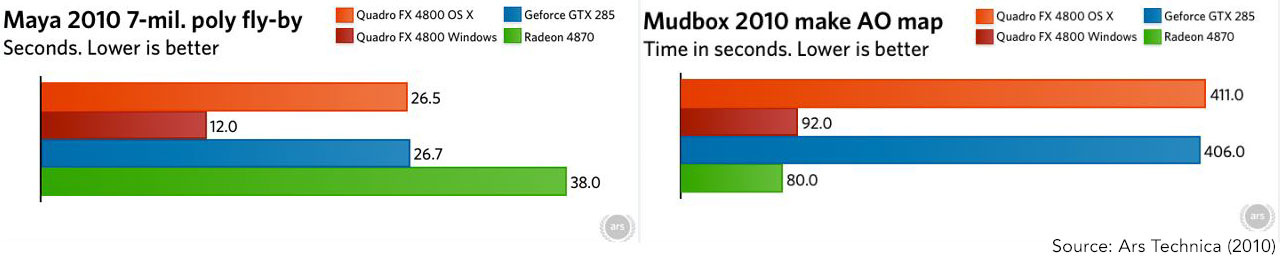

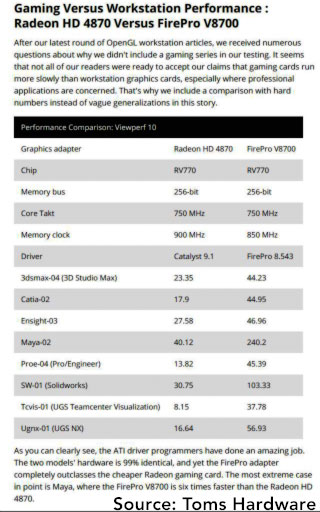

In 2009, Tom’s Hardware tested an AMD FirePro against the equivalent Radeon, and found that the FirePro was up to 6 times faster in Maya, even though the clock speed was 50 MHz slower. A different article references a 30x speed up when drawing anti-aliased lines. Rendering something 30x faster is the sort of performance gain that people pay for, even if it’s just a line.

Part 2: Viewport 2.0

So far, this isn’t too difficult to follow, and the concept is straightforward: GeForce cards are designed to render 3D games as fast as they can. Quadro cards are designed to accelerate the viewport of 3D apps. Game developers write code specifically to take advantage of GeForce cards, while app-specific Quadro drivers take advantage of Quadro cards.

But it didn’t stay that simple for long.

In Part 11, we saw how the GeForce 8 and CUDA heralded the age of the GPGPU. The phenomenal boosts in speed that GPUs have brought to software over the past 10 years were made possible by the CUDA and OpenCL languages, which enable “normal” software developers to write code that runs on a GPU. This is different from using a GPU to render a 3D object: the GPU is now “general purpose” and can be used for all sorts of other applications.

The Quadro cards specifically accelerated OpenGL commands, so as long as the 3D apps were processing all of the 3D graphics using OpenGL, a Quadro GPU would make things faster. But – and here’s the complicated bit – the advent of CUDA and OpenCL now gave software developers an alternative way to render 3D graphics on a GPU. They no longer had to use OpenGL (or DirectX on Windows); they could write their own rendering algorithms using CUDA or OpenCL.

This wasn’t restricted to the viewport, either. The non-realtime, photorealistic 3D rendering algorithms which had previously been processed by the CPU could now be re-written with CUDA and OpenCL to utilize the power of the GPU. In 2012, Octane launched as the first fully GPU based renderer, followed by Redshift. Since then, existing 3D renderers have incorporated GPU acceleration into later releases.

Having developers write their own software using CUDA and OpenCL shifted the emphasis away from the customized OpenGL drivers that Quadro cards used, and towards the CUDA / OpenCL processing power of the GPU. In many cases, the GeForce range of cards had more capacity for CUDA processing than Quadro cards.

This presents us with the messy and confusing landscape we have now, because performance isn’t just the GPU you have: it’s also a combination of settings in your 3D app. As 3D companies updated their software, the viewport could now use CUDA and OpenCL as well as OpenGL. I think that in 3DS Max, the newer accelerated viewport was called “Nitrous” while Maya had the more restrained name “Viewport 2.0”. Honestly, I don’t care. The whole thing is a pain in the butt and you still end up with that horrible sinking feeling when some accountant decides to pay lots of money for something that doesn’t help you at all.

By choosing how the viewport was being rendered, users were also choosing which parts of their GPU were being used. Because the primary focus of Quadro cards had been accelerating OpenGL, if the user chose to use CUDA or OpenCL then the advantages of a Quadro card were negated. One article looking at this issue noted that when using double-sided materials with OpenGL, a Quadro card could render certain things 33x faster than the equivalent GeForce card. But if you switch the viewport to render with OpenCL, then there’s no difference at all.

I’m just glad I don’t deal with this stuff every day.

Apple in the ointment

If the advent of CUDA and OpenCL didn’t make things complex enough, then up until 2009 Apple made the situation even murkier.

As detailed above, the GeForce and Quadro cards used the same family of GPU chips, but ran different software drivers. However, up until 2009, Apple wrote the drivers that came with OS X, not nVidia. This means that the difference between the GeForce and Quadro lines on Windows computers was not the same as on a Mac. If we think of a driver as a piece of software – and it is – then the situation was the same as if Apple decided they wanted to write Microsoft Office for Macs, and not Microsoft themselves. The end product might appear similar, but it’s not the same as if Microsoft had ported their own software to the Mac.

The problem was that Apple’s Quadro drivers weren’t anywhere near as optimized as nVidia’s drivers for Windows, and so a video card that was identically labeled had dramatically different performance between the two platforms. In some benchmarks, the Quadro card on Windows was twice as fast as the same card on OS X, with the OS X card performing at roughly the same level as an Ati card that cost $2,300 less. In one benchmark using the Mudbox 3D modeling app, the Quadro card on the Mac was about 1/3 the speed of the MUCH cheaper Ati Radeon.

There are plenty of people who think that Quadro cards are a waste of money, but at least there are clear differences between them when running certain applications on Windows. But if you had paid thousands of dollars more for a Quadro card on a Mac in 2009, then you certainly did waste your money. In fact, if you were using Mudbox then it appears that you paid thousands of dollars extra for your software to run three times slower.

Three months after the Mac Pro launched in July, nVidia took over control of the OS X drivers, but there weren’t any instant improvements.

More recently, relations between Apple and nVidia have deteriorated. Initially, Apple switched from nVidia hardware to rival AMD, before dropping support for nVidia products entirely. AFAIK the latest Mac Pro – the one with $400 wheels – has no option for nVidia cards at all. nVidia has since confirmed that they are officially discontinuing support for CUDA on OS X.

GeForce and the masters of the pixelverse

Judging from 20 years of Google searches, articles, internet forum questions and general rumors, there’s been no shortage of confusion about the difference between the two product lines. While the original explanation above is fairly simple – GeForce cards are for gamers, Quadro cards are for professionals – the huge price difference between them has fuelled speculation and debate around their respective value for money. Some of it is valid, and some of it is just plain wrong.

For certain markets at certain times the Quadro and FirePro cards offered a clear value proposition. If you needed anti-aliased lines, double-precision float calculations, two-sided lighting, multiple-monitor support and so on then you were in the market for a Quadro or a FirePro. These features came at a price – and often a very steep price. Even today, a quick Google search easily finds a bunch of Quadro cards that cost more than $10,000 – while I can also find a wide range of budget GeForce cards for less than $100. While I haven’t specifically searched, I imagine the same is true for AMD.

What’s it all about?

In the context of this series, the question would be – what’s the difference between a GeForce and a Quadro card when it comes to After Effects? The answer is: nothing.

The one thing that links Quadro cards (and AMD FirePro cards) to After Effects is that they’re the cause of poor purchasing decisions.

This series has covered a lot of ground, some of it roughly chronological. But we can summarise a lot of points here that have been dealt with in previous articles:

- After Effects is primarily a 2D application

- After Effects shuffles bitmap images around, it doesn’t render 3D geometry

- GPUs are designed to accelerate 3D rendering

- After Effects is not a 3D application

- Until very recently, After Effects has not made any significant use of a GPU for accelerated rendering

- After Effects definitely does not have special drivers optimised for Quadro / FirePro cards

There are some minor exceptions, mainly where 3rd party plugins for After Effects utilize a GPU.

But to generalise the first 25 years of After Effects’ life: the most expensive GPU will not make After Effects render any faster than a cheap GPU.

The distinction between Quadro and GeForce cards is contentious even amongst 3D artists – where the difference is supposed to be noticeable. Among my friends and colleagues, no-one is 100% certain of what the Quadro range offers, and none of them recommend a Quadro card over a cheaper GeForce alternative. While there may have been more visible differences about ten years ago, before CUDA and OpenCL acceleration really took off, I couldn’t find any reliable indication that a current Quadro card offers any benefit to Max, Maya and Cinema 4D users. The only thing everyone agreed on was that you could buy Quadro cards with a lot more memory than you could get with GeForce cards.

Because I’m primarily an After Effects user, it’s never been a concern for me. As I stated at the start, this particular topic wasn’t something I’d ever planned to write about. But I’ve worked for people who’ve spent thousands of dollars on Quadro cards expecting After Effects to render faster, and my heart still sinks at the thought.

Of all the random articles I found through Google on the topic of Quadro vs GeForce, I found this one the most informative and insightful. Hats off to Doc-Ok.

This is part 15 in a long-running series on After Effects and Performance. Have you read the others? They’re really good and really long too:

Part 1: In search of perfection

Part 2: What After Effects actually does

Part 3: It’s numbers, all the way down

Part 4: Bottlenecks & Busses

Part 5: Introducing the CPU

Part 6: Begun, the core wars have…

Part 7: Introducing AErender

Part 8: Multiprocessing (kinda, sorta)

Part 9: Cold hard cache

Part 10: The birth of the GPU

Part 11: The rise of the GPGPU

Part 12: The Quadro conundrum

Part 13: The wilderness years

Part 14: Make it faster, for free

Part 15: 3rd Party opinions

And of course, if you liked this series then I have over ten years’ worth of After Effects articles to go through. Some of them are really long too!
