Plenty of people have said that money can’t buy you happiness, and anyone who says it can hasn’t used After Effects. In Part 1 of this series, I said that there’s a point where spending more money won’t make After Effects render any faster, and while that’s generally true for multi-core CPUs and GPUs, there are other avenues for performance gains that don’t involve money.

In this article I’m going to look at how changing your workflow and project settings can significantly improve the performance of After Effects, in most cases without spending anything at all.

Work smarter, not expensively

Spending money is easy. Convincing people to spend money so we don’t have to work as hard keeps the advertising industry busy. Initially they sold us vacuum cleaners so we didn’t need to physically beat carpets and rugs. Now they’re selling us robot vacuum cleaners so we don’t even need to do the vacuuming. If we don’t feel like cooking, we can eat out and pay someone else to cook for us, and if we do cook at home then we can buy a dishwasher to do the dishes. It’s just throwing money at the problem, which is easy if you have the money.

If we compare cheaper computers to more expensive ones then we expect to see faster performance for our money. It’s a fair assumption that if slow performance is the problem, then spending more money can solve it. For some applications this holds true. If we look at CPU-based 3D rendering, then spending more money for more CPU cores definitely makes the rendering process faster. For real-time 3D games, a more expensive GPU renders a higher number of frames-per-second (unless you’re talking Quadros). All we have to do is spend the money.

But when we get to After Effects it’s a different story – at least it is at the moment, in 2020. We can buy the most expensive CPU and GPU on the market and it won’t make our renders noticeably faster than a much cheaper computer. In fact – without 3rd party rendering software – it’s possible that a more expensive CPU will render After Effects compositions (slightly) slower than a cheaper model. This is what we covered in part 5 & part 6: After Effects is predominately single-threaded, and so its performance is governed mostly by CPU clock speed.

But modern CPUs have focused on more CPU cores, not faster ones. The most expensive CPUs have the most cores but they run at slower clock speeds, while cheaper CPUs with fewer cores often run at higher clock speeds. As After Effects performs better with higher clock speeds than it does with a higher core-count, a cheaper CPU with a higher clock speed will provide better performance for a lower cost.

A quick look on Google suggests that AMD’s most expensive processor is the 3990X, offering 64 cores for about $4,000. However, the base clock speed is 2.9 GHz, and After Effects simply won’t use most of those cores. A few months ago, Intel announced a new “Core i9 10900K”, and although it only has 10 cores as opposed to AMD’s 64, it offers a boosted clock speed up to 5.3 GHz. More importantly, it’s only $1,000 – 1/4 the price of AMD’s flagship. If you’re building a rig for After Effects and After Effects only, then the Intel i9 10900K is going to provide faster After Effects rendering for a much lower cost than an AMD 3990X. This is only true for After Effects – I’m not claiming that the Intel CPU is better or faster than the AMD one overall. If we were comparing VRay renders then it would be a completely different story. The issue isn’t Intel vs AMD, it’s After Effects vs multi-threading.

It’s where this series began: the observation that a Mac Pro doesn’t render After Effects projects faster than an iMac that costs 1/3 the price. You can spend lots of money on a “God box” but After Effects simply doesn’t utilize all of the power available.

If happiness is faster After Effects renders then sorry, money will only get you so far.

Spending money is easy if you have it, but it’s not the only avenue to better performance. There are plenty of other ways to make After Effects work faster – but it involves extra work for the user. In some cases, a few simple steps can result in dramatic speed improvements but it requires re-thinking the way you use After Effects. The key is to work smarter, even if it involves jumping through hoops.

After Effects is not perfect. It’s old, and currently it’s relatively slow and sluggish. But that’s also the case for some After Effects users. Spending money is easy, changing the way you’ve worked for years is not. But the point of this article is that if you’re prepared to change the way you work, you can get more performance improvements than if you simply bought a more expensive CPU.

Recap: Part 3 and all those numbers

Many of the problems facing After Effects are outlined in parts 2 and 3. In part 2, I looked at exactly what After Effects does: it’s a tool that processes bitmap images. Most of what After Effects does involves loading up a bitmap image and performing calculations on each and every pixel. Then doing it all over again.

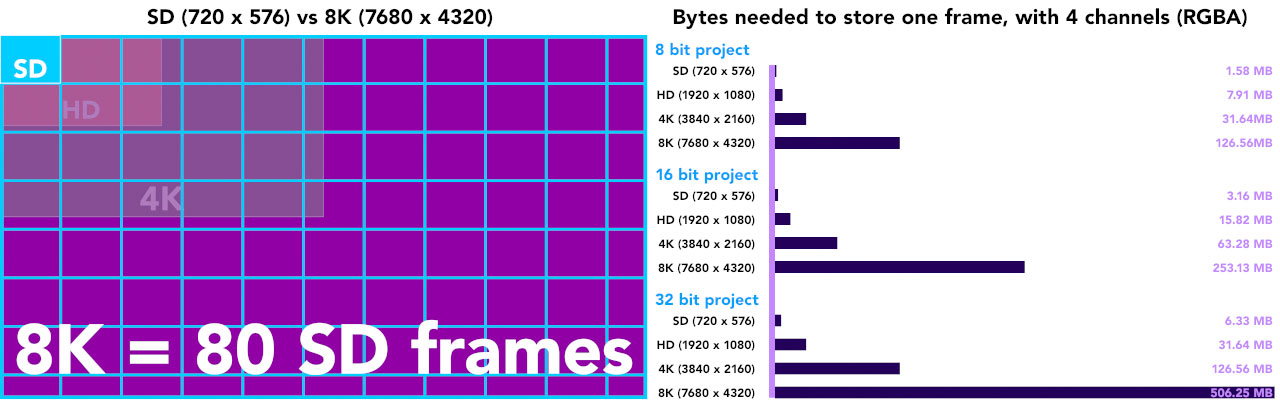

In Part 3 I looked at how quickly the number of pixels can stack up with larger image resolutions, and how the technical challenges for After Effects have changed over the years. When I started work in 1997, I worked with 8 bit projects at standard definition – 720 x 576. A single frame would take up roughly 1 megabyte (1.6 MB with an alpha channel). Now, the industry is pushing for 8K HDR images, and a single frame takes up about 506 megabytes – that’s half a gigabyte for one frame!

As noted in Part 3, a single 8K frame has the same number of pixels as 80 standard definition frames – that’s 80 times the number of pixels that need to be processed. And changing from an 8 bit project to a 32 bit project, necessary for HDR, means we have 4 times as much data for each pixel.

So to exaggerate the difference by comparing these two extreme situations: a single HDR 8K frame has 320 times as much data to be processed as a single 8 bit SD frame.

If we have a project that is 8 bit, SD and it takes 1 second a frame to render, then it’s reasonable to assume that it will take 320 seconds a frame to render if we change it to 8K, HDR. But 320 seconds is more than 5 minutes, and for most After Effects users that’s just not acceptable for rendering a single frame.
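
To make that arithmetic concrete, here is a minimal Python sketch of the frame sizes quoted above. It assumes uncompressed frames with four channels (RGB plus alpha), an 8K frame of 7680 x 4320, and 1 MB counted as 1024 x 1024 bytes. The function name is only there for illustration.

```python
# Uncompressed size of one frame: width x height x channels x bytes per channel.
# Assumption: four channels (RGB plus alpha) and no compression.
def frame_bytes(width, height, bits_per_channel, channels=4):
    return width * height * channels * (bits_per_channel // 8)

MB = 1024 * 1024

sd_8bit = frame_bytes(720, 576, 8)           # standard definition, 8 bit
uhd8k_32bit = frame_bytes(7680, 4320, 32)    # 8K, 32 bit (assumed 7680 x 4320)

print(f"SD, 8 bit:  {sd_8bit / MB:.1f} MB per frame")
print(f"8K, 32 bit: {uhd8k_32bit / MB:.0f} MB per frame")
print(f"Pixel ratio: {(7680 * 4320) // (720 * 576)}x")
print(f"Data ratio:  {uhd8k_32bit // sd_8bit}x")
```

The two ratios are the 80x and 320x figures from the text: 80 times the pixels, multiplied by 4 times the data per pixel.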

In reality, the render time difference won’t be anywhere near that large, but the point is that we can easily overlook how much work we’re asking After Effects to do.

Every time we double the resolution, there are four times as many pixels to be processed.

The timelines they are a changing

After Effects in 1997 was understandably different to the current version of AE we have in 2020, but there’s more to the differences than just a list of new features. Some of the changes reflect developments in the industry itself, and how the technical expectations for video and vfx have evolved.

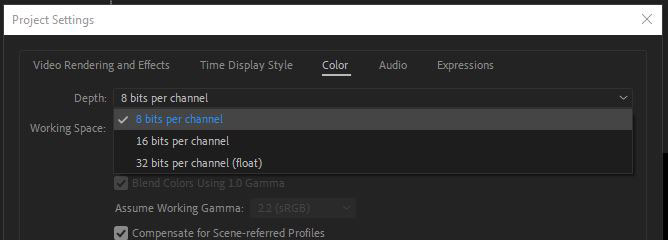

After Effects in 1997 only processed 8 bit images, without colour management. After Effects in 2020 can work in either 8, 16 or 32 bit mode (HDR), with colour management options. These aren’t just new features, they’re now fundamental industry requirements.

But these options also have significant performance implications because they directly affect how much processing After Effects has to do to each pixel. And the amount of processing that each pixel requires is directly related to overall performance.

It’s easy to have a default After Effects setup that you rarely change, or don’t think about too much. For many After Effects users, the project will default to 16 bit colour, sRGB or Rec 709 colour, and HD resolution. Some may be working in 8 bit, others in 32 bit mode. There are still AE users working in SD (!) while others are routinely working with 4K and 8K footage. But if you’re usually working with the same types of projects day in and day out, it’s easy to forget that you can change fundamental project settings with a mouse-click.

Laying out the problem…

Having re-capped a lot of the stuff we went through in earlier articles, we can summarise the basic issues facing After Effects & performance:

• After Effects is primarily an app that processes bitmap images

• The more pixels in a bitmap image, the more processing AE has to do

• The number of pixels in an image increases with the square of the resolution – double the width and height and you have four times as many pixels

• In addition to the number of pixels, After Effects also has settings for the number of bits per pixel. A 16 bit image takes up twice as much memory as an 8 bit image. A 32 bit image takes four times the memory of an 8 bit image.

• Colour management, a modern requirement for professional graphics, presents additional processing that needs to be done on each pixel.

Toggle on, Toggle off

Looking at it this way, we can see two approaches to improving the performance of After Effects without considering different computer hardware:

1) Reduce the number of pixels that After Effects has to process

2) Reduce the number of calculations that After Effects has to do to each pixel

At first glance this might not seem feasible – if your project requires a HD or 4K delivery then surely you can’t just change the composition to be smaller!

But while your final delivery may be a set resolution, eg. 1920 x 1080, you can certainly toggle a few settings while you work to help improve your productivity. This might be something you already do, or it might involve learning some new workflow practices. If you’re changing the preview resolution from “Full” to “Half” or “Quarter” then you’re already on the right track. There are plenty of settings we can toggle to change the number of pixels that After Effects is processing, and the clever use of pre-compositions and pre-renders can shift the burden of rendering away from the “final” render. In some cases, After Effects might be doing a lot more processing than you realise – some file formats are much more demanding than others.

Something as simple as rendering to a different format, or pre-rendering one format to another, can make a huge difference – for free.

Example: Looks fast or looks funny

One example is colour management vs RAM preview. As detailed in part 13, for the past few years After Effects hasn’t always been able to play back a RAM preview in real-time, or in sync with audio. Even the very latest versions of CC 2020 still have trouble with specific nVidia drivers, and 32 bit linear projects. If you’re focusing solely on designing motion then this is a big problem – designing motion means you really need to see your animation playing back reliably in real-time. But while you’re working, if you’re concentrating on animation – adjusting keyframes and movement – then colour accuracy might not be so critical. You might find that turning off “Display colour management” makes a noticeable difference to performance – your colours might shift a bit, but you get real-time RAM preview back. When you’re happy with the motion elements of your design, turn colour management back on for when it’s time to focus on colour – colour grading doesn’t always require perfect playback speeds.

So you can think of Colour Management as a sort of performance toggle – turning it on and off allows you to focus on either motion or colour accuracy. And while you probably need both for the final render, when you’re working away you can focus on one thing at a time.

What’s this button do…

Compared to 1997, there are now a bunch of switches and settings in After Effects that we can toggle, which can all have a major influence on the overall speed that After Effects works at. Here’s a list:

• Project bit depth

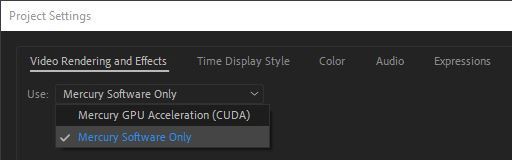

• Project rendering – software or GPU

• Composition resolution

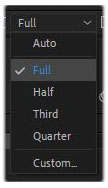

• Composition preview resolution

• Composition aspect ratio conversion

• Composition Region Of Interest

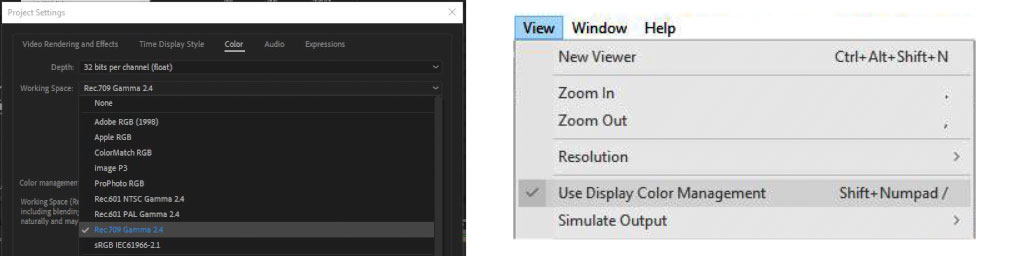

• Project colour management

• Display colour management

• Footage colour management

Every one of these settings can have a significant impact on the amount of processing that After Effects has to do, and as long as we’re mindful of the settings needed for the “final” output, we can toggle these as we work to balance performance.

Depending on exactly what type of work you’re doing, and what assets you’re using, some of these settings may have more or less impact than others. For example, if all your assets are square pixel then “aspect ratio conversion” won’t make any difference. If you mainly use plugins that haven’t been GPU accelerated then switching between Software and GPU rendering may not make a noticeable difference either.

If you’re working on the same types of projects for the same clients, then it’s easy to have a default project / composition setting that you barely think about. But overall there are a number of settings that can collectively make a bigger difference to your rendering and preview speeds than a hardware upgrade.

It’s difficult to give consistent examples or provide hard figures because After Effects is used by so many different people in so many situations. Again this was introduced in part 1 – there’s no such thing as a typical After Effects user. Depending on the type of work you do and the technical requirements of your projects, your mileage may vary.

Let’s start with some of the simple stuff.

Composition Preview Resolution & Zoom

When you set up a new After Effects composition, choosing the resolution and frame rate is the most important part. Most briefs start with a technical guide for deliveries – usually 1920 x 1080 or perhaps 4K.

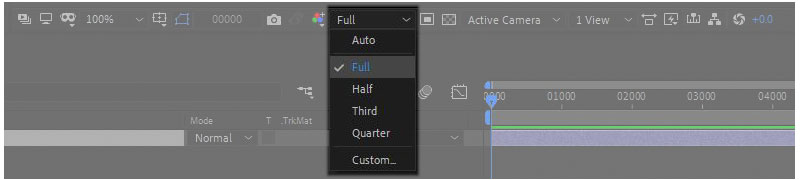

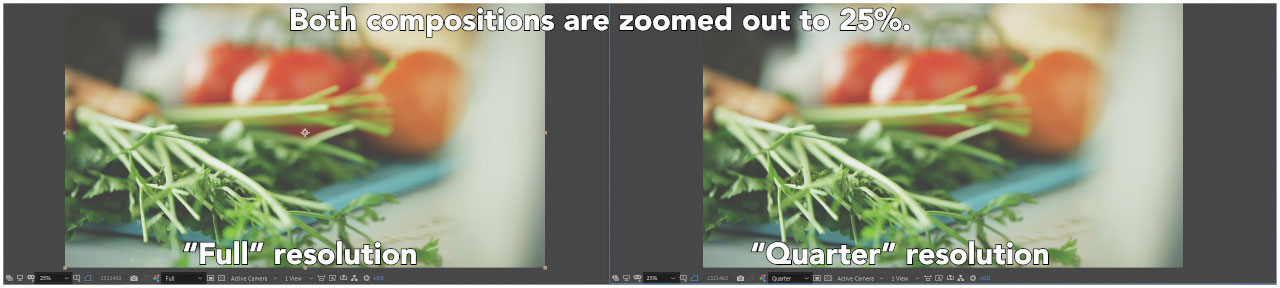

But once a composition has been created, you can preview and work with it at a lower resolution – there’s a simple drop down menu at the bottom of the Composition panel. This is the composition preview resolution. It’s a basic feature of After Effects that’s been there for as long as I can remember, and yet I often see it being overlooked as a tool for toggling performance. This is the “full” “half” “third” “quarter” res drop down menu. These settings determine the resolution that the composition window is rendered at. If your composition is 1920 x 1080 but the resolution is set to “half”, then After Effects treats the composition as if it’s 960 x 540. As we covered in Part 3, this is ¼ the number of pixels, so rendering times can be up to 4 times faster. If you set the composition to Quarter resolution, you have 1/16 the number of pixels, so the composition can preview up to 16 times faster.
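
As a rough illustration of those numbers, the sketch below lists the preview size and pixel count for each setting on a 1920 x 1080 composition. It assumes each setting simply divides both dimensions by a whole number. How After Effects rounds sizes that don’t divide evenly is not covered here, and the names are only for illustration.

```python
COMP_WIDTH, COMP_HEIGHT = 1920, 1080

# Assumption: each preview setting divides both dimensions by this number.
PREVIEW_DIVISORS = {"Full": 1, "Half": 2, "Third": 3, "Quarter": 4}

def preview_size(width, height, divisor):
    """Size of the rendered preview for a given divisor (integer division)."""
    return width // divisor, height // divisor

full_pixels = COMP_WIDTH * COMP_HEIGHT
for name, divisor in PREVIEW_DIVISORS.items():
    w, h = preview_size(COMP_WIDTH, COMP_HEIGHT, divisor)
    fraction = full_pixels // (w * h)
    print(f"{name:8} {w} x {h} = {w * h:>9,} pixels (1/{fraction} of full)")
```

Fewer pixels is an upper bound on the speed-up, not a guarantee: anything that doesn’t scale with pixel count, such as loading footage from disk, takes just as long.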

As higher resolutions become more common, it’s not unusual for After Effects compositions to be larger than the monitor they’re being displayed on. Not every After Effects user is sitting in front of a 4K monitor. Even a regular 30” monitor with a resolution of 2560 x 1440 isn’t that much larger than HD. If your monitor has a resolution of 2560 x 1440, by the time you arrange your workspace with panels and the timeline, you might find that the main composition window isn’t large enough to show a 1920 x 1080 image at 100% size.

So you do what every AE artist does all the time – you zoom out. Tapping the “,” and “.” keys – even if you think of them as the “<” and “>” keys – zooms you in and out. It’s something you do constantly without thinking, a key part of AE that’s ingrained into muscle memory. At the bottom of the composition panel the “zoom” now shows 50%. Perhaps you’ve set it to dynamically scale the composition window, so it might show something else.

But if the zoom doesn’t match the preview, then After Effects is also scaling up the preview to display it in the composition window – and this takes time too. It might not be much, but it adds a little bit to every frame. The key here is to match the zoom amount to the preview resolution. Matching the composition preview resolution to the zoom will mean you’re not wasting time by rendering stuff at one resolution and then scaling it up or down. After Effects does have the “Auto” setting, which toggles this for you as you zoom in and out, but this can sometimes play havoc with your cached frames and so I usually have it turned off.

The larger your composition and source footage, the more significant the effect of composition resolution and zoom can be. With 4K and higher resolution footage becoming increasingly common, it’s more likely to find AE artists working with their composition zoomed out to 50%. Yet if the preview resolution is set to “full”, then this means AE is rendering 4x more pixels than the user is looking at, AND scaling them down, all of which slows everything down.

There will always be a time when you need to work at 100% zoom, and at full resolution, and in this case your rendering and preview times will be what they are. But working at half resolution means you’re rendering 4x faster – and if you’re zoomed out to 50% then you might not see much difference, if any.
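
The idea of matching preview resolution to zoom can be written down as a simple rule of thumb. The sketch below is my own illustration of that rule, not a description of how the “Auto” setting in After Effects works: it picks the coarsest preview setting that still provides at least as many pixels as the zoom level displays.

```python
# Assumption: each preview setting divides both dimensions by this number.
PREVIEW_DIVISORS = {"Full": 1, "Half": 2, "Third": 3, "Quarter": 4}

def suggested_preview(zoom_percent):
    """Coarsest preview setting that still covers the given zoom level."""
    best = "Full"  # zoomed in past 100%: nothing better than Full is available
    for name, divisor in PREVIEW_DIVISORS.items():
        # A divisor of 2 renders 50% of the width, a divisor of 4 renders 25%.
        if 100 / divisor >= zoom_percent:
            best = name
    return best

for zoom in (200, 100, 50, 33, 25):
    print(f"Zoom {zoom:>3}% -> preview at {suggested_preview(zoom)}")
```

In-between zoom levels fall back to the next finer setting, so a comp viewed at 40% would still be previewed at Half.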

Region of Interest – ROI

There will be times when you need to work with your project at full resolution, 100% zoom, and with colour management on. If you’re not working on the entire image, then the Region Of Interest can improve performance by only rendering the area that you’re looking at. This can be useful if you’re doing masking or roto work to a small area of a large plate. It’s a feature that’s been in AE for years, but I rarely see people use it. Which is a shame, because it’s a pretty powerful performance tool.

The region of interest is also useful for cropping compositions. You can define the area you want to crop down to, then use the “Crop Composition to Region of Interest” function in the Composition menu.

Additionally, when you’re only working on a small area of a large composition, you can RAM preview a much longer duration than if you’re looking at the full screen, because smaller regions of interest take up less memory than the whole comp.
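
To get a feel for how much longer a RAM preview can be, here is a back-of-the-envelope sketch. It assumes cached frames are stored uncompressed with four channels and that only the pixels inside the region of interest are cached. The 8 GB cache size, the 25 fps frame rate and the comp sizes are hypothetical numbers picked for the example.

```python
def frames_in_cache(cache_bytes, width, height, bits_per_channel=8, channels=4):
    """How many uncompressed frames of this size fit in the cache."""
    frame_bytes = width * height * channels * (bits_per_channel // 8)
    return cache_bytes // frame_bytes

CACHE_BYTES = 8 * 1024**3  # hypothetical: 8 GB of RAM available for previews
FPS = 25                   # hypothetical frame rate

full_frame = frames_in_cache(CACHE_BYTES, 3840, 2160)  # whole 4K comp, 8 bit
roi_only = frames_in_cache(CACHE_BYTES, 960, 540)      # small region of interest

print(f"Full 4K frame: {full_frame} frames, about {full_frame / FPS:.0f} seconds")
print(f"960 x 540 ROI: {roi_only} frames, about {roi_only / FPS:.0f} seconds")
```

The region in this example covers 1/16 of the comp, so roughly 16 times as many frames fit in the same amount of memory.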

Project Bit Depth

The Project bit depth toggles between 8, 16 and 32 bit projects. Exactly why you’d choose one setting over another is outside the scope of this article, but the main point is that a 32 bit project will take up more memory and require more processing than an 8 bit project. This affects your entire system – your CPU has more processing to do, your memory is storing larger files, and your system bus is transporting more information around the various parts of your computer. Storage and networks speeds will become noticeable bottlenecks if they have to store and transfer larger and larger files, and your hard drive will fill up faster.

Despite my “worst case” exaggerated example earlier, rendering in 32 bit mode isn’t 4x slower than rendering in 8 bit mode – just as rendering in 16 bit mode isn’t 2x slower. In fact the difference is much less than you might think, although my attempts to come up with a reliable benchmark produced a range of erratic results.

In one test composition that only used solids, masks and strokes, an 8 bit render took 10 mins 42 secs, while switching to 16 bit mode took 15 mins 5 secs. Depending on how you word it, you could say that the 16 bit render took about 40% longer than the 8 bit render.

But in a different test project, an 8 bit render took 3 mins 34 secs while a 16 bit render took 4 mins 20, a difference of about 20%.

In yet another test – a “real life” TVC I recently worked on – an 8 bit render took 9 mins 50 secs while a 32 bit render took 11 mins 6 secs. Jumping from 8 bit to 32 bit only increased the rendering time by about 13%.

Yes, those extra bits do take longer, but exactly how much longer seems to vary from project to project, and depends heavily on what type of assets you’re using.
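
For anyone who wants to check the percentages, this small sketch works them out from the render times quoted above, always measured against the 8 bit render as the baseline. The labels and helper names are mine.

```python
def seconds(minutes, secs):
    return minutes * 60 + secs

def percent_longer(baseline, other):
    """How much longer `other` took, as a percentage of `baseline`."""
    return (other - baseline) / baseline * 100

# (8 bit render time, higher bit depth render time), taken from the tests above
tests = {
    "Solids, masks and strokes, 8 vs 16 bit": (seconds(10, 42), seconds(15, 5)),
    "Second test project, 8 vs 16 bit": (seconds(3, 34), seconds(4, 20)),
    "Real life TVC, 8 vs 32 bit": (seconds(9, 50), seconds(11, 6)),
}

for name, (base, other) in tests.items():
    print(f"{name}: {percent_longer(base, other):.0f}% longer")
```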

If you’re doing 32 bit HDR compositing, especially in linear space, then it’s difficult to switch to 8 or 16 bit mode to speed things up, as the rendering differences can be too dramatic. But if you’re doing motion graphics that don’t require floating point calculations, then the visual difference between 8 and 16 bit colour might not be noticeable, while offering a modest speed boost.

If you’re colour grading footage or working with photographic images then 16 bit mode will provide you with more precision when adjusting colours, and also when stacking up lots of effects. But for more graphic approaches with flat colours then 8 bit mode will be fine, and in addition to a small speed boost you also have more memory available to cache previews.

For motion graphics work, you can always work in 8 bit mode and then switch to 16 bit mode when rendering the final output. Toggling between 8 and 16 bit modes will not change the colours in your project – they are just calculated with more precision. It’s like measuring something in mm instead of cm – the measurement is more precise, but the size of the object doesn’t change. Toggling between 32 and 8/16 bit mode can change colours, and so if your final render is going to be rendered in 32 bit mode then you’re best off using 32 bit mode throughout.
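
The mm versus cm analogy can be shown with a few lines of Python. This is a generic sketch of integer quantisation and assumes each bit depth uses its full unsigned range. The way After Effects actually stores 16 bit values internally may differ, and the colour value is an arbitrary example.

```python
def quantize(value, bits):
    """Round a 0.0-1.0 value to the nearest level that `bits` bits can store."""
    levels = (1 << bits) - 1          # 255 for 8 bit, 65535 for 16 bit
    return round(value * levels) / levels

colour = 0.1234                        # arbitrary example channel value

as_8bit = quantize(colour, 8)
as_16bit = quantize(colour, 16)

print(f"8 bit:  {as_8bit:.6f} (error {abs(as_8bit - colour):.6f})")
print(f"16 bit: {as_16bit:.6f} (error {abs(as_16bit - colour):.6f})")
```

Both results describe the same colour. The 16 bit version just lands much closer to the intended value, which matters most when many effects are stacked and the rounding errors accumulate.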

Colour Management

Earlier I used the “Display colour management” setting as an example where we can toggle After Effects settings while we work, to improve performance when we need it. Personally, I’ve found that display colour management is the one factor that determines whether or not a RAM preview plays back reliably at 100% speed.

The “display colour management” option determines whether an individual composition is displayed with a colour managed preview. But colour management extends beyond this setting and includes every footage item, and these days it’s increasingly likely that you’ll encounter footage that’s been shot in colour formats other than the Rec 709 standard for HD. Overall project colour management and individual footage items can be easily overlooked when it comes to performance, and yet project and footage colour management can have a significant impact on rendering speeds.

When colour management is turned on – your “working space” is set to something other than “none” – After Effects automatically converts any footage that’s tagged as a different colourspace to match the project settings. However, this can involve a lot of additional processing behind the scenes, making previews and renders slower. Colour management with lots of source footage that doesn’t match your project adds several additional steps of processing to your workflow – every single pixel of every single footage item will go through extra processing, including all RAM previews as well as output renders. All of this can add up, very quickly. This is especially true for larger resolution files – never forget that the number of pixels in an image increases with the square of the resolution.

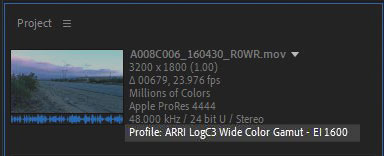

Several major camera manufacturers, including Arri, Canon and Sony, all make cameras that record footage in a Log format. Arri have LogC, Sony and Canon have SLog and CLog respectively. These colour formats need to be converted to the same colourspace as the project, usually sRGB or Rec 709. Working with Log files in a regular HD project is a lot slower than working with regular Rec 709 ProRes files.

How much of an impact this has on your project will depend on the differences between your source footage and your project. If you have a bunch of ProRes files that are Rec 709, and your project is set to Rec 709, then no colour conversion needs to take place – everything matches. But if your footage is in a different colourspace, eg LogC, CLog, SLog or something else, then every pixel of every frame will need an additional colour management process applied to it. This can slow things down a lot more than you might think.

In a quick test, I imported a 3K ProRes file from an Arri Alexa that had been recorded in LogC colourspace. The duration was just under 30 seconds. I set up a simple composition that scaled the clip down to 1/3 size – 1066 x 600 pixels – and rendered it out as a regular ProRes 422 clip.

With colour management turned off, the clip rendered out in just over 1 minute – 72 seconds. With colour management turned on, rendering exactly the same thing took over 5 minutes – 305 seconds. Rendering the same thing but to a LogC ProRes (not the default Rec 709) added another 25 seconds to the render time again, for a total of 330 seconds.

Source footage: 30 second LogC ProRes file, 3K resolution

Colour management off, render to ProRes: 72 seconds

Colour management on, render to Rec 709 ProRes: 305 seconds

Colour management on, render to LogC ProRes: 330 seconds

So in this very simple example with one layer, using footage in a Log format took over four times longer to render.

In another test, I imported 5 ProRes files and layered them up in a 10 second composition. I changed the interpretation of all ProRes files and timed the renders:

Source footage: 5 ProRes files layered up in a 10 second composition

Colour management off: 28 seconds

ProRes as rec 709: 28 seconds

ProRes as LogC: 215 seconds

ProRes as CLog and SLog: 207 seconds

ProRes as rec 709, render to sRGB: 32 seconds

In this case, with 5 layers of ProRes files instead of just one, the render times are over 7 times longer when using Log footage. But what’s also worth knowing is that if your project is set to sRGB and your footage is Rec 709, which would be a default for many users, then there’s still a colour management overhead in the conversion from Rec 709 to sRGB. It’s not as bad – 32 seconds as opposed to 28 – but it’s still there.
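
The slowdown factors for both tests can be worked out directly from the timings listed above. This sketch simply divides each render time by the “colour management off” time for the same test. The labels are shortened versions of the ones in the lists.

```python
def slowdown(baseline_seconds, render_seconds):
    """How many times longer a render took compared to the baseline."""
    return render_seconds / baseline_seconds

# Render times in seconds, copied from the two tests above.
single_layer = {
    "baseline": 72,   # colour management off
    "LogC source, Rec 709 output": 305,
    "LogC source, LogC output": 330,
}
five_layers = {
    "baseline": 28,   # colour management off
    "ProRes as Rec 709": 28,
    "ProRes as LogC": 215,
    "ProRes as CLog and SLog": 207,
    "ProRes as Rec 709, render to sRGB": 32,
}

for title, results in (("One layer", single_layer), ("Five layers", five_layers)):
    print(title)
    for label, secs in results.items():
        if label != "baseline":
            print(f"  {label}: {slowdown(results['baseline'], secs):.1f}x")
```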

Colour management doesn’t make as much of an impact on items that are generated by After Effects itself – strokes, text layers, shape layers and so on. But if you’re importing lots of footage, and especially footage that’s a different colourspace to the project, then toggling colour management on and off can make a big difference. The problem with this approach is that turning off colour management can make footage look very different – the solution is to pre-render to a faster format. Which brings us to our next topic…

Mind your Pre’s and Queues

A while ago I joked on Twitter that you could tell if an After Effects user came from a design or vfx background by their use of pre-comps. In my experience, designers seem to hate pre-comps and avoid them where they can. VFX artists are much more likely to pre-compose.

The use of pre-comps and pre-renders can dramatically affect the rendering time of After Effects projects. Embracing the use of pre-comps, and pre-rendering when you can, is mostly what I meant earlier by changing your workflow.

Render early, render often.

Pre-composing and pre-rendering is probably the most effective means of improving performance in After Effects.

In several previous articles I’ve written that the “final” render of an After Effects composition can take a surprisingly small percentage of the overall time spent working on the project. If you have days, weeks or maybe even months to work on the same After Effects project, then most of that time After Effects isn’t rendering the “final” output. In fact, most of the time that you spend working on a project, After Effects isn’t rendering anything. It’s perfectly normal to only queue up a render when you actually have something to render.

We can take advantage of this time by using it to pre-render layers and elements that take a long time to render. One advantage of After Effects’ under-utilisation of processor cores is that you can render things in the background without noticeably slowing down the main After Effects app.

While this does involve 3rd party rendering software, the cost of buying a script isn’t nearly as significant as a major hardware upgrade.

Pre-rendering regularly is something of a mind-set. For many users – and in my opinion, mostly those from a design background – pre-rendering is often avoided to retain maximum flexibility in a single, large composition. But when used appropriately, pre-rendering saves huge amounts of time by shifting rendering time away from the final deadline. By using the CPU to render in the background, and then using pre-renders in your compositions, the performance burden is shifted off the CPU and onto your storage (and possibly network).

It’s much faster to load a frame from a drive than it is to render it from scratch, especially with the latest SSDs.

A good example is keying. If you’re compositing a performer in front of a background – visual FX 101 – then keying your footage can take some time and care to do properly. It’s a topic I covered extensively in my 5-part masterclass on chromakey in After Effects. If you follow every step, then keying will include degraining the source footage before you apply the key, possibly combining several layers together with different keying settings, and then cleaning up areas of the keyed footage afterwards.

All of these steps combined can result in a heavy “final” composition that’s slow to render, and that means it’s tedious to work with and preview. Pre-rendering in the background results in a keyed file that’s fast to work with, and will generally preview in real-time.

You can see a lot of productivity improvements by pre-rendering stages of your composition in the background. For example, if you’re starting a project that involves a lot of keying, then you might begin by setting up and pre-rendering all your footage with a denoise filter. Once your footage has been keyed, you might pre-render it again before you do any frame-by-frame cleanup. Painting frames individually is certainly much easier if you’re working directly with a pre-rendered file, and not a heavy composition. Any time spent waiting for pre-renders can be made up many times over.

As I said before, this takes the burden off your CPU and moves it onto your hard drives. If you start to pre-render a lot then you’ll fill up your drives sooner, and it helps if you’re organised and clean up unwanted files regularly. But overall, investing in fast SSD storage for After Effects is a better use of money than investing in an expensive multi-core CPU that won’t get used.

P is for Proxy

The Proxy function in After Effects lets you pre-render compositions, and then toggle between the pre-render and the actual composition. This can be useful if you want to keep track of where your pre-renders came from, and also if you need to go back and revise the original comp.

You can pre-render at lower resolutions than your actual composition, which can speed up the process of working on parts of a project before you turn off the proxy to render the “final” version at full resolution.

There are plenty of tutorials and articles around that introduce the use of Proxies, and demonstrate how they can dramatically improve your productivity and rendering speeds.

File Formats

After Effects can work with a wide range of file formats. Every day we deal with JPGs, TIFFs, PNGs, AI and EPS files as well as footage formats such as ProRes. But not all formats are created equal. So here’s one big tip for you when it comes to performance:

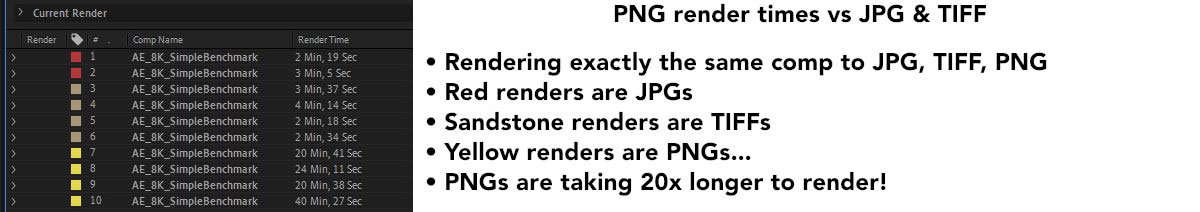

Don’t use PNGs.

Yes, PNGs are popular. They’re everywhere. Many people recommend you use PNGs instead of TIFF files, but don’t. They’re slow. I wasn’t aware that PNG files were so much slower than other file formats until I started rendering some benchmarks.

PNG files are so slow that I even checked in with Adobe to see if it’s a bug. It’s probably not. It’s just how they are, and it’s caused by the compression algorithm they use.

The larger your resolution, the slower they get.

By the time we get to 8K resolution, a PNG file takes about 20x longer to save than a JPG or TIFF file. In the project I was using to test the rendering speeds of different file formats, rendering to a JPG sequence took AE about 2 mins 20 secs (140 seconds). Rendering exactly the same thing to a PNG sequence took over 40 minutes.

Yes that’s right – just rendering to a PNG sequence instead of a JPG sequence increased the rendering time from 2 minutes to 40 minutes.
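To put a number on that, here’s a quick Python sketch that works out the ratio from the two timings above. The function name is my own, and because the PNG render took “over” 40 minutes, the result is a lower bound rather than an exact figure.

```python
def slowdown(slow_seconds: float, fast_seconds: float) -> float:
    """Return how many times longer the slow render took."""
    return slow_seconds / fast_seconds


jpg_seconds = 2 * 60 + 20  # 2 mins 20 secs, from the test above
png_seconds = 40 * 60      # "over 40 minutes", so treat this as a minimum

ratio = slowdown(png_seconds, jpg_seconds)
print(f"PNG took at least {ratio:.1f}x longer than JPG")
```

For this particular project that comes out at roughly 17x, which is in the same ballpark as the “about 20x” figure mentioned above.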

Just use TIFFs.

Captain’s Log

Earlier I mentioned that Colour Management affects performance, and if your imported footage is a different colourspace to your After Effects project then every pixel of every frame needs additional processing. I’ve noticed this with Arri LogC footage because that’s the format I seem to be given the most, but the same is true for Sony and Canon Log formats as well. Log formats are popular because they record a wider range of brightness values than regular rec 709. The increased latitude that you get with high-end cameras is one of the main reasons you’d choose to use one, and the Log format is key to storing the full dynamic range of the image. But as we saw earlier, processing Log footage can introduce a very noticeable overhead to your projects.

The solution is to pre-render to another format that’s faster to load, and the obvious candidate here is an EXR sequence, although you could choose a regular rec 709 ProRes file too. The whole point of recording footage in Log colourspace is to maintain a large dynamic range and wide colour gamut, and so if we’re looking to transcode something like LogC to another format, we need to choose a format with a similarly wide range. That’s exactly what EXR files are. However, the various compression algorithms used by EXRs are much faster for After Effects to process than the colour conversion needed for LogC, C-Log and S-Log.

If you’re working in 32 bit linear mode, then pre-rendering any Log footage to a linear EXR sequence means the colour processing is only done once – when you pre-render. Once you import the EXR sequence, it will be in the same colourspace as your AE project – 32 bit linear – and so the footage won’t require any additional colour management processing as you work. This can result in major speed improvements to your workflow.

As an example, I used the same 3K LogC clip that I used earlier, and built a simple AE comp with it. Rendering it out using the original LogC ProRes files took just over 5 minutes – 303 seconds. But after replacing the LogC ProRes files with a pre-rendered EXR sequence, the render time dropped to 1 minute 41 – 101 seconds. In other words, by pre-rendering out the Log C files and converting them to EXRs, the final render was 3x faster. This is for a simple comp with only 1 layer – you’ll see a bigger difference and greater benefits when working with multiple layers.

Source footage: 3K Arri Log C ProRes

Rendering out 30 second clip using LogC as source: 303 seconds

Rendering out 30 second clip after converting to EXR: 101 seconds
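If you’re wondering whether the pre-render is worth the wait, a rough way to think about it is to count how many renders or previews it takes to win the time back. The sketch below is Python, the function names are my own, and the 303 second pre-render time is purely an assumption for illustration, since the time for the conversion itself isn’t one of the figures listed above.

```python
import math


def speedup(before_s: float, after_s: float) -> float:
    """How many times faster the render is after converting the footage."""
    return before_s / after_s


def break_even_renders(prerender_s: float, before_s: float, after_s: float) -> int:
    """Number of renders needed before the one-off pre-render has paid for itself."""
    saved_per_render = before_s - after_s
    if saved_per_render <= 0:
        raise ValueError("pre-rendering only pays off if the new format is faster")
    return math.ceil(prerender_s / saved_per_render)


logc_s = 303               # render time using the LogC ProRes source
exr_s = 101                # render time using the pre-rendered EXR sequence
assumed_prerender_s = 303  # ASSUMPTION: the conversion costs about one LogC render

print(f"Speedup: {speedup(logc_s, exr_s):.1f}x")
print(f"Break-even after {break_even_renders(assumed_prerender_s, logc_s, exr_s)} renders")
```

Under that assumption the pre-render has paid for itself by the second render, and every preview or render after that is time saved.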

This approach isn’t just for people working with 32 bit comps. Log footage is increasingly common. If you’re working in a standard HD project with rec 709 colour, just pre-rendering any Log footage to Rec 709 will also provide you with a significant performance boost.

Feeling Raw

While After Effects users are probably used to dealing with ProRes Quicktimes and MP4s, the last decade has seen significant developments in digital cameras, many of which record footage in their own proprietary format.

While the Arri Alexa records a standard ProRes file that can immediately be opened in any app that reads Quicktime files, other camera manufacturers record in a RAW format that needs to be processed before you can see it. RED, who pioneered the use of “Raw” footage, have their own proprietary R3D format. Blackmagic have followed their lead, with some of their cameras offering the option of a “BRAW” format.

In these cases, the cameras aren’t recording a regular image that can be immediately viewed on screen. Instead, they’re recording the “raw” data from the camera’s image sensor, and it needs to be converted into an image before it can be used. This process is called “de-bayering” and it’s very processor intensive.

Currently, After Effects is able to import R3D files natively, and support for BRAW files is provided through a plugin by Autokroma, perhaps better known for their “After Codecs” product. Working directly with raw footage means you have access to all available image data – but the price is performance.

Again, pre-rendering to a different, faster format will result in a faster project, and once more I recommend an EXR sequence. The initial render may take a long time, but once the footage has been converted to something that is faster to process then the overall project will be significantly faster to work with.

As a quick test, I just opened up an R3D file and RAM previewed 60 frames at full resolution. It took 96 seconds. Then I pre-rendered it out as an EXR sequence, imported that and RAM previewed it at full res. It took 31 seconds, about 3x faster.

Source footage: 8K R3D file, 60 frames duration

RAM Preview, R3D file: 96 seconds

RAM Preview, EXR sequence: 31 seconds
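It can be easier to feel the difference when the totals are broken down per frame. Here’s a small Python sketch using the figures above; the function name is just something I’ve made up for the example.

```python
def seconds_per_frame(total_seconds: float, frame_count: int) -> float:
    """Average time After Effects spent on each frame of the preview."""
    return total_seconds / frame_count


frames = 60
r3d = seconds_per_frame(96, frames)  # RAM preview straight from the R3D file
exr = seconds_per_frame(31, frames)  # RAM preview from the EXR sequence

print(f"R3D: {r3d:.2f} s/frame")
print(f"EXR: {exr:.2f} s/frame")
print(f"Ratio: {r3d / exr:.1f}x")
```

That’s about 1.6 seconds per frame for the R3D file against roughly half a second per frame for the EXR sequence.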

Waiting for guff

Changing the composition preview and zoom settings affects how many pixels are being processed in your current composition, and using the Region Of Interest can temporarily reduce the number of pixels After Effects is working on to a specific area.

But even more significant, long-term improvements can be found by limiting the amount of time After Effects spends processing pixels that are never used – you can do this with pre-composing, pre-rendering and cropping.

I’ve previously written about some extreme examples of this – an early article of mine detailed how I was able to drop render times from over 5 minutes per frame down to a few seconds per frame by cropping layers to the size they were needed.

Exactly the same principle was behind the tale of the “worst After Effects project” I’d ever seen, where a single 14K Photoshop file was duplicated hundreds of times in one composition, with each layer masked to the area needed, resulting in render times that were over 45 minutes per frame. By pre-composing each layer and cropping it down to just the size that was needed, the “final” render times were reduced to less than 10 seconds a frame.

A similar situation can easily occur when scaling down high-resolution footage. We’re working at a time where even low-cost cameras can shoot 6K or even higher resolutions, and yet there are still AE artists in broadcast environments who are delivering standard definition content. If you do have footage elements that are significantly larger than your delivery size, then pre-rendering the footage scaled down to the resolution you need it is another way of shifting the rendering time away from the final deadline.

Recently I worked on a TVC and the background plate had been shot with a Blackmagic Pocket camera, at 6K resolution. The TVC itself was composited and delivered at HD. Pre-rendering the background plate at HD resolution significantly improved the final rendering time, as not only was the image already scaled down from 6K to HD, but by rendering to an EXR sequence the footage didn’t need to be processed from the Blackmagic RAW format every time I previewed it.
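To get a feel for how many pixels are involved, here’s a quick Python sketch comparing a 6K frame with an HD frame. I’m assuming a 6K frame size of 6144 x 3456 for the example; the exact dimensions depend on the camera’s recording mode, so treat the result as indicative only.

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in a single frame."""
    return width * height


source_pixels = pixel_count(6144, 3456)    # ASSUMPTION: 6K frame size
delivery_pixels = pixel_count(1920, 1080)  # HD delivery

ratio = source_pixels / delivery_pixels
print(f"6K source:  {source_pixels:,} pixels")
print(f"HD version: {delivery_pixels:,} pixels")
print(f"The 6K frame has about {ratio:.1f}x as many pixels")
```

With those dimensions, every frame read from the 6K source carries roughly ten times as many pixels as the HD pre-render, before you even count the cost of decoding the raw format.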

Reducing the amount of guff that After Effects has to process is so critical when it comes to rendering performance that I’ve written two After Effects scripts to help automate the process. The first script is the one I mentioned in an older article, and it’s designed for vector artwork. It takes a single layer with many masks on it, and it copies each mask to an individual solid – while cropping each new solid to the size of the mask. By reducing the number of pixels in each layer that are never used, rendering times can be significantly reduced.

A similar script takes a footage layer with many masks, and it pre-composes the footage layer, once for each mask, and crops each individual pre-comp to the size of each mask. This makes it easy to create pre-comps that can be pre-rendered out, ultimately saving After Effects from processing pixels that aren’t needed.

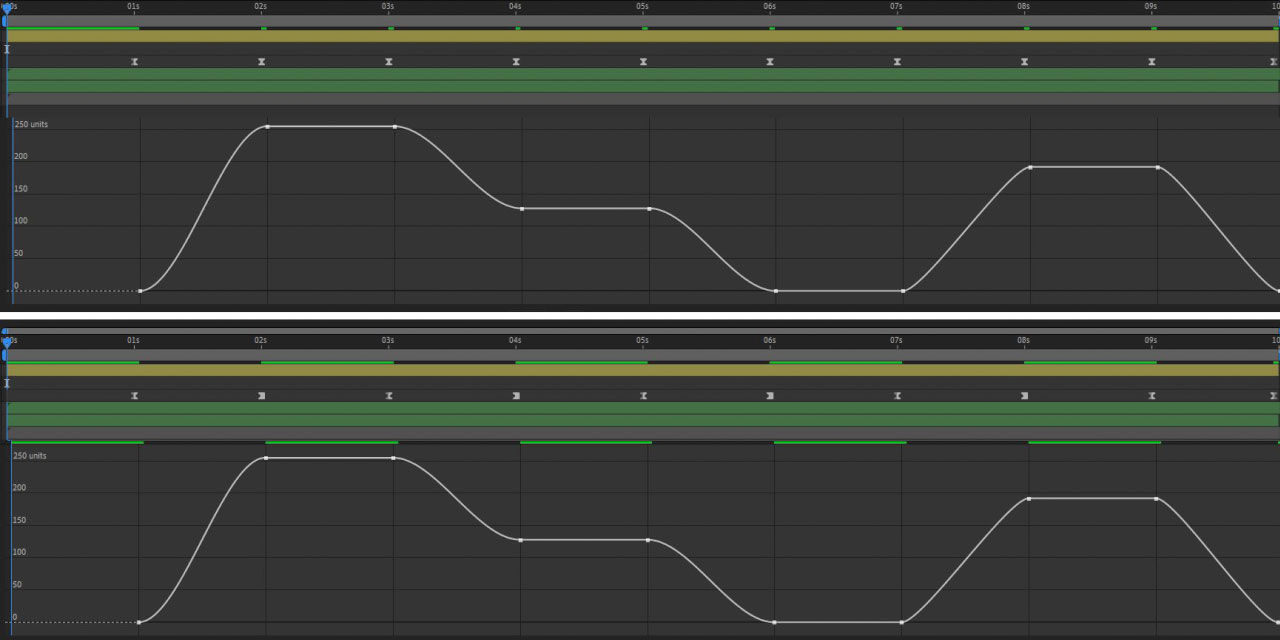
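The arithmetic behind both scripts is simple enough to sketch. This isn’t the scripts themselves (those run inside After Effects), just a Python illustration of the idea: find the bounding box of a mask and compare its area to the full layer. The function names, the 4000 x 3000 layer and the mask vertices are all hypothetical, and I’m assuming a straight-edged mask, since curved mask segments can bulge outside their vertices.

```python
import math


def mask_bounds(vertices):
    """Return (left, top, width, height) of the smallest whole-pixel box around a mask."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    left, top = math.floor(min(xs)), math.floor(min(ys))
    right, bottom = math.ceil(max(xs)), math.ceil(max(ys))
    return left, top, right - left, bottom - top


def cropped_fraction(layer_width, layer_height, vertices):
    """Fraction of the original layer's pixels that remain after cropping to the mask."""
    _, _, width, height = mask_bounds(vertices)
    return (width * height) / (layer_width * layer_height)


# Hypothetical mask on a hypothetical 4000 x 3000 layer
vertices = [(100.5, 200.2), (400.0, 210.0), (390.7, 520.9), (110.0, 500.0)]

print(mask_bounds(vertices))
print(f"{cropped_fraction(4000, 3000, vertices):.2%} of the layer is actually needed")
```

In this made-up case less than 1% of the layer’s pixels are needed, which is exactly the sort of guff that cropping gets rid of.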

Hold it right there

After Effects has improved dramatically over the years when it comes to memory management and caching, but there are still cases where it can do with a helping hand. Sometimes After Effects will render frames that it doesn’t need to, but you can manually fix this by using hold keyframes.

In fairness this seems to be a fairly niche issue, and it might be restricted to adjustment layers, but I discovered this a few months ago and it made a huge difference to my project at the time, so it’s worth sharing here.

In my case, I was using an adjustment layer to animate a depth-of-field effect. By using a Z-depth map and a camera lens blur effect, I was pulling focus from the background to the foreground, then back to the background. I noticed that After Effects was rendering every single frame, even though my source footage was all still images. Because nothing was moving, there weren’t any changes from one frame to the next and After Effects didn’t need to re-render every frame – it should have cached the last frame and re-used that. The keyframe values for the camera lens blur effect didn’t change, but After Effects rendered each frame anyway.

By manually changing the keyframes to Hold, After Effects realized that nothing was changing and it used cached frames to significantly speed up rendering.
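One way to picture what’s going on is with a toy model. To be clear, this is NOT how After Effects’ caching actually works internally (I don’t know the details); it’s just a Python sketch of the behaviour I saw, where any stretch between non-hold keyframes gets rendered frame by frame, while a hold stretch is rendered once and re-used. The function name and the frame numbers are made up for the example.

```python
def frames_rendered(segments):
    """Toy model: count renders for a list of (start_frame, end_frame, interpolation).

    ASSUMPTION: a "hold" segment is rendered once and then served from cache,
    while any other segment is rendered on every frame, even if the keyframe
    values at both ends happen to be identical.
    """
    rendered = 0
    for start, end, interpolation in segments:
        span = end - start
        rendered += 1 if interpolation == "hold" else span
    return rendered


# Hypothetical focus pull: static, pull, static, pull back (150 frames total)
all_linear = [(0, 50, "linear"), (50, 75, "linear"), (75, 125, "linear"), (125, 150, "linear")]
with_holds = [(0, 50, "hold"), (50, 75, "linear"), (75, 125, "hold"), (125, 150, "linear")]

print(frames_rendered(all_linear))
print(frames_rendered(with_holds))
```

In this toy example, switching the two static stretches to hold keyframes cuts the number of rendered frames from 150 to 52.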

What’s in the oven

In more niche areas, expressions can sometimes cause noticeable slowdowns, and this seems to be especially true when expressions are applied to lights, or when expressions reference other expressions.

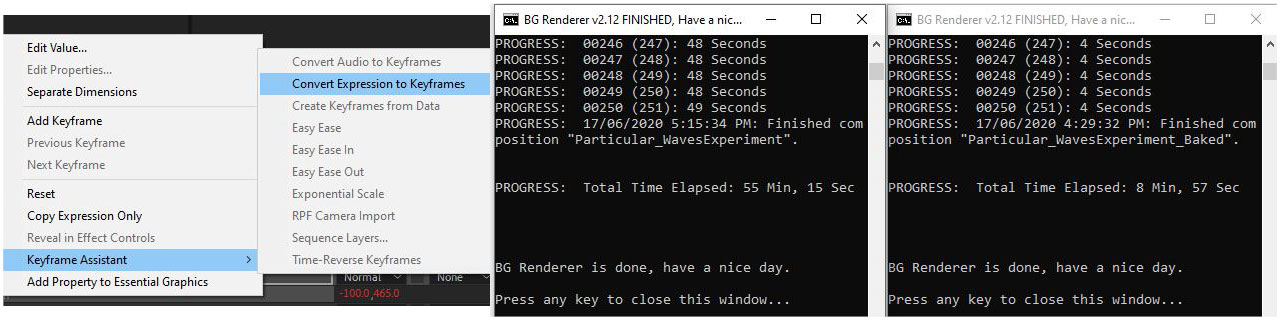

After Effects has a keyframe assistant that converts expressions to keyframes – generally referred to as “baking” keyframes – which calculates all of the expressions and converts them into keyframes. This means the expressions aren’t calculated during the rendering process. Depending on exactly what you’re doing, this can result in massive performance improvements – it’s not unlike pre-rendering, where you’re shifting the processing time away from the “final” render.

In this simple example, lights are being used as emitters for Particular. Each light has an expression applied to oscillate it with a sine function. With the expressions turned on, the overall rendering time is about 55 minutes. But after converting the expressions to keyframes, the render time drops to about 9 minutes.
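The idea behind baking is easy to sketch outside of After Effects. The Python below is only an illustration of the principle, not the expression from my project: the function names, amplitude and frequency are all made up. It evaluates a sine oscillation once per frame up front and stores the results, so that “rendering” only has to look up a stored value.

```python
import math


def oscillate(time_s: float, amplitude: float = 100.0, frequency_hz: float = 0.5) -> float:
    """A stand-in for an expression: a simple sine oscillation over time."""
    return amplitude * math.sin(2 * math.pi * frequency_hz * time_s)


def bake(expression, duration_s: float, fps: int):
    """Evaluate the expression once per frame and return the values as 'keyframes'."""
    frame_count = round(duration_s * fps)
    return [expression(frame / fps) for frame in range(frame_count)]


# Bake two seconds at 24 fps (hypothetical values)
keyframes = bake(oscillate, duration_s=2.0, fps=24)

# At render time the value is a cheap list lookup, not a fresh calculation
print(len(keyframes))
print(round(keyframes[12], 3))
```

The saving in my example above, from about 55 minutes down to about 9, works out to roughly a 6x improvement.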

Making Adjustments

Adjustment layers are wonderful things, and in some cases they can also be used to improve rendering performance. If there are any effects or styles that you’re applying to every layer in your composition, then using a single adjustment layer will save After Effects from doing the same thing again and again. Blurs, glows, focus-pulls, noise and grain are all effects that are commonly added to layers to help them look great, and over time it’s easy for the same effects with the same settings to be added to every layer in the comp. Even something as simple as making footage black & white can be done with a single adjustment layer, instead of each individual layer.
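As a back-of-the-envelope illustration, here’s a Python sketch that counts how many times effects get applied per frame. The function name and the layer counts are hypothetical, and it assumes every effect pass costs about the same, which won’t be exactly true in practice.

```python
def effect_passes_per_frame(layer_count: int, effect_count: int, use_adjustment_layer: bool) -> int:
    """Count effect passes per frame.

    ASSUMPTION: the same stack of effects is either copied onto every layer,
    or applied once on a single adjustment layer above them.
    """
    if use_adjustment_layer:
        return effect_count
    return layer_count * effect_count


# Hypothetical comp: 20 layers, each with the same 3 effects
print(effect_passes_per_frame(20, 3, use_adjustment_layer=False))
print(effect_passes_per_frame(20, 3, use_adjustment_layer=True))
```

Keep in mind that an adjustment layer affects the combined result of the layers beneath it, so some effects (blurs, for example) can look slightly different to applying them layer by layer.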

Mercury in ascension

Over the past few years, Adobe have been steadily updating their native plugins to utilize the power of GPUs. Each new release of After Effects usually sees a couple of additional plugins added to the list of those which benefit from GPU acceleration.

In order to utilize the GPU – if possible – the project needs to be set accordingly. If your project doesn’t utilize any effects that have been GPU accelerated, then you won’t see any difference. But as the list of updated plugins continues to grow, there’s more and more potential for After Effects to render faster thanks to GPU processing. For more information on which plugins have been updated, check the Adobe website.

The VR plugins that were recently bundled with After Effects are unique in that they ONLY work with GPU acceleration enabled.

I’ve tried benchmarking a few projects to see what sort of difference there is between Software and GPU rendering, but so far I haven’t found any hugely significant changes. Many projects I tried gave almost identical render times with each option. The biggest difference I found was with a fairly complex composite, where the render time for Mercury GPU acceleration was 26 minutes exactly, while Software Only took 28 mins 57. Presumably the performance difference between these two options will continue to grow with future releases.
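For the record, here’s that biggest difference worked out as a percentage, as a small Python sketch. The helper name is my own.

```python
def to_seconds(minutes: int, seconds: int = 0) -> int:
    """Convert a minutes-and-seconds render time to seconds."""
    return minutes * 60 + seconds


gpu_s = to_seconds(26)           # Mercury GPU acceleration: 26 minutes exactly
software_s = to_seconds(28, 57)  # Software Only: 28 mins 57

saved_s = software_s - gpu_s
percent_saved = 100 * saved_s / software_s

print(f"GPU saved {saved_s} seconds, about {percent_saved:.1f}% of the render time")
```

So even in the best case I found, GPU acceleration trimmed the render by roughly 10%.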

Hitting the hip pocket

Many of the tips outlined here don’t involve spending any money. Although buying the latest multi-core CPU won’t necessarily make your renders any faster, there are other avenues where spending money can improve performance. Choosing to render a TIFF sequence instead of a PNG sequence doesn’t cost you anything but it can make your renders 20x faster, which is nice. Pre-rendering a bunch of LogC quicktimes to EXR sequences can result in similar performance gains. And pre-composing elements to crop out any unused areas of the image will also dramatically reduce the amount of unnecessary processing that After Effects has to do.

One of After Effects’ greatest strengths is the level of support from 3rd party developers, and there’s a huge variety of plugins available to purchase. Several sites have tried to keep track of every single After Effects plugin but I don’t think anyone has been 100% successful. There are just so many.

If you’re prepared to pay, then in many cases 3rd party plugins can provide faster and better results than native After Effects plugins. The two most obvious examples that I can think of are Neat Video’s denoise plugin, and Frischluft’s Lenscare package. Both of these products duplicate functionality that After Effects has built in: After Effects has the “remove grain” plugin and also the “Camera Lens Blur” plugin, but Neat’s “Denoise” and Frischluft’s “Depth of field” plugins are significantly faster, as well as looking MUCH better.

Instead of paying for hardware that runs faster, you can pay for 3rd party software that runs faster.

Scratching the surface

After Effects is such a complex piece of software, used by such a diverse user base, that it’s difficult to even try and explain all the different software settings that affect overall performance. While some people disagree with me – which is fine – I also feel as though there are different working cultures between motion designers and vfx artists, which can also change the way users approach working with After Effects and shape their experiences.

Personally, I pre-compose and pre-render whenever I can, to shift the burden of performance away from the “final” render.

Unfortunately, this article is really only scratching the surface of how various workflows and approaches to working in After Effects can affect performance and rendering times. But there are a few takeaway tips that will hopefully help you out in the future.

The underlying fact is that performance in After Effects comes down to the number of pixels that are being processed, and the number of calculations that are being done to each pixel.

Unfortunately, there is currently a limit to increasing the speed at which those calculations are done by the computer’s hardware – so spending lots of money on a monster multi-core CPU isn’t going to guarantee faster renders. However by intelligently toggling the various settings in After Effects as we work, we can control both the number of pixels being processed, and the number of calculations being done on each one. And in some cases this can result in massive speed differences, all without spending any money at all.
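That idea, pixels multiplied by calculations per pixel, can be written down as a very rough cost model. The Python below is only a sketch: the function names are mine, “operations per pixel” is a made-up unit rather than anything After Effects reports, and the UHD frame size is just an example.

```python
def pixels_processed(width: int, height: int, resolution_divisor: int = 1) -> int:
    """Pixels per frame at Full (1), Half (2) or Quarter (4) preview resolution."""
    return (width // resolution_divisor) * (height // resolution_divisor)


def relative_cost(width: int, height: int, ops_per_pixel: int, resolution_divisor: int = 1) -> int:
    """Rough cost of a frame: pixels multiplied by (hypothetical) operations per pixel."""
    return pixels_processed(width, height, resolution_divisor) * ops_per_pixel


full = relative_cost(3840, 2160, ops_per_pixel=10)
half = relative_cost(3840, 2160, ops_per_pixel=10, resolution_divisor=2)
quarter = relative_cost(3840, 2160, ops_per_pixel=10, resolution_divisor=4)

print(full // half)     # Half resolution: a quarter of the pixels
print(full // quarter)  # Quarter resolution: a sixteenth of the pixels
```

Halving the resolution halves both the width and the height, which is why the pixel count (and the work) drops by a factor of 4 rather than 2. Reducing the calculations per pixel, by baking, pre-rendering or removing effects, scales the cost in the same way.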

Here’s a summary of some of the examples I’ve covered here:

- Don’t use PNGs. They’re incredibly slow. Just use TIFFs.

- Toggle “Display Colour Management” on and off if you find it’s affecting RAM previews.

- Only turn on colour management when you need it, otherwise leave it off.

- Choose your bit depth wisely. Although I’m always advocating that After Effects users work in 16 bit mode minimum, simple flat graphic styles will be fine in 8 bit mode. Although rendering isn’t massively faster in 8-bit mode, RAM previews and caching will benefit from having more free RAM and this can improve your productivity.

- Pre-Render slow file formats such as R3Ds, BRAWs, Arri LogC to something faster, for example EXR sequences.

- Pre-Compose and Pre-Render any layers that are being scaled down significantly. If you have 4K, 6K or even 8K source footage but you’re only working in HD, then pre-render at the resolution you need.

- Pre-compose and crop any unused pixels. Perhaps you’ve got a performer who’s been shot against greenscreen… by the time you’ve keyed them out they may only be taking up a small amount of the screen. Crop the area you need, Pre-Render it and then After Effects is only processing the pixels it needs to.

- Using complex expressions? Try baking them – especially if you have expressions that reference other expressions.

- If you find yourself applying the same effect to every layer, e.g. colour corrections or LUTs, then try using a single adjustment layer instead.

- If you’re working with a large composition but it’s zoomed out to 50% or less, you may as well set the preview resolution to half or ¼. While some plugins will look different when rendering at a lower resolution, the rendering times are much faster. Half resolution renders 4x faster, Quarter resolution renders 16x faster.

- If you do need to work at 100% size and Full resolution, then use the Region of Interest to isolate specific areas of your composition that you’re focusing on. This saves After Effects from rendering the entire image, speeding up previews while taking up less memory.
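To illustrate the bit depth tip, here’s a Python sketch of how much memory a single uncompressed HD frame needs at each bit depth, and how many of those frames would fit in a given amount of RAM. The function names are my own, the 16 GiB figure is a hypothetical amount of RAM set aside for previews, and I’m assuming four channels (RGBA) with no compression, so real-world numbers will differ.

```python
def frame_bytes(width: int, height: int, bits_per_channel: int, channels: int = 4) -> int:
    """Bytes needed for one uncompressed frame (ASSUMPTION: RGBA, no compression)."""
    return width * height * channels * bits_per_channel // 8


def frames_in_cache(cache_bytes: int, width: int, height: int, bits_per_channel: int) -> int:
    """How many whole frames fit in the given amount of memory."""
    return cache_bytes // frame_bytes(width, height, bits_per_channel)


cache = 16 * 1024**3  # hypothetical 16 GiB available for RAM previews

for bpc in (8, 16, 32):
    size_mib = frame_bytes(1920, 1080, bpc) / 1024**2
    count = frames_in_cache(cache, 1920, 1080, bpc)
    print(f"{bpc:>2} bpc: {size_mib:5.1f} MiB per frame, {count} frames fit")
```

Each step up in bit depth doubles the memory per frame, so the same amount of RAM holds half as many frames.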

One final thing to mention is something I covered in Part 4 – system bottlenecks, and it’s the different bandwidth speeds of various devices. Many workflows see footage and other assets delivered on external hard drives, and it’s not uncommon to see motion designers and vfx artists importing footage directly from external drives. The problem here is that they’re much slower than internal hard drives, and MUCH slower than SSD drives. Footage files can be very large, and reading files from small, slow external hard drives is a huge performance bottleneck. Always transfer footage files to the fastest storage that you have available.

There are other things I haven’t covered – aspect ratio conversion, fast draft previews, layer quality & scaling algorithms – but this is a decent starting point. As Adobe continue to update After Effects and improve GPU support and multi-threading, we can look forward to a time where we don’t have to jump through hoops just to make After Effects faster. Until then – pre-compose, pre-render, and forget about PNGs!

This is part 15 in a long-running series on After Effects and Performance. Have you read the others? They’re really good and really long too:

Part 1: In search of perfection

Part 2: What After Effects actually does

Part 3: It’s numbers, all the way down

Part 4: Bottlenecks & Busses

Part 5: Introducing the CPU

Part 6: Begun, the core wars have…

Part 7: Introducing AErender

Part 8: Multiprocessing (kinda, sorta)

Part 9: Cold hard cache

Part 10: The birth of the GPU

Part 11: The rise of the GPGPU

Part 12: The Quadro conundrum

Part 13: The wilderness years

Part 14: Make it faster, for free

Part 15: 3rd Party opinions

And of course, if you liked this series then I have over ten years worth of After Effects articles to go through. Some of them are really long too!
