The most fitting end possible for this series on “After Effects and Performance” is an interview with Sean Jenkin, who heads up the “Performance” team for After Effects, at Adobe’s HQ in Seattle. Sean, a Senior Engineering Manager for Adobe, sat down for a comprehensive interview about all things “performance”, and it was so comprehensive I had to split it across two articles. (this is part 2!)

If you missed part 1, then it makes sense to take a few minutes and catch up. We began our chat by looking at the topic of “Performance” in general, and what Adobe’s been doing to bring After Effects up to speed.

But now it’s time to get serious. A few months ago, Adobe announced something that After Effects users have been waiting many years for: the ability for After Effects to more effectively utilize multi-core CPUs. While the official announcement was specifically about Beta-testing “Multi-Frame Rendering”, there’s a lot more to it than just faster renders.

Multi-Frame Rendering, by itself, is a new feature that allows After Effects to utilize multiple CPU cores to render more than one frame, simultaneously. At first, this might sound very similar to the old (and deprecated) feature that was known as “Render Multiple Frames Simultaneously”. The older RMFS feature was analysed in-depth in part 8 of this series, but the short version is that it was something of a clever hack. RMFS was basically running several copies of After Effects on the same machine, with each background version rendering individual frames.

For some projects, on suitable hardware, this could be very effective – but the technical implementation was relatively crude. Each version of After Effects running in the background needed to load up its own copy of the project. If the project was large this could take a long time, and having multiple copies of the After Effects application in memory was a waste of RAM which could have been used for rendering. In practice RMFS was unpredictable, unstable, and could easily result in renders that were slower than if it was turned off. As I detailed in Part 8, RMFS never worked properly for me, and I was glad when Adobe removed the feature from version 13.5.

While “Multi-Frame Rendering” may sound like it’s the same thing, the underlying technical implementation is totally new. But what’s even more significant is that the work done to enable “Multi-Frame Rendering” has laid the foundation for all sorts of future performance enhancements. What this means for After Effects users is that “Multi-Frame Rendering” may be the newest feature to grab headlines, but it’s only the first in a whole range of new features that are all aimed at improving performance.

There’s no-one better to take us through “Multi-Frame Rendering” than Sean Jenkin, head of the “Performance” team at Adobe.

So let’s pick up our interview where we left off…

————————————————–

CHRIS: So probably the question you’ve been waiting to be asked. What can you tell us about the new multi-frame rendering feature?

SEAN: A couple of years ago we took a step back and said, OK, if we’re going to think about rendering performance, then what do we focus on? Do we invest many, many man years to get After Effects working fully on the GPU? And if we did, what are the negative consequences of doing that? You know, what happens to text? What happens to the scale of compositions? Because there’s only so much VRAM going around. Is there a solution for a 30,000 by 30,000 pixel composition on a GPU? What would it take? Do we have the resources?

There was this whole discussion on that side and then there was a whole discussion about OK, well, we don’t use a lot of CPU threads, and that’s probably leaving a significant amount of performance on the table. So could we look at both of those scenarios?

And so we started heading down the GPU route and we started porting effects and transforms and blending modes, and we also started looking at what it would look like if we could get multiple frames rendering concurrently.

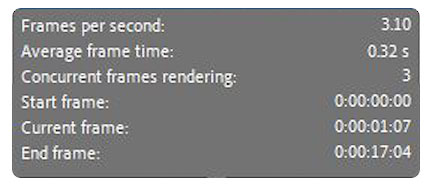

What we found in our testing, and what’s out there now with real world Beta testing, and internally on high-end machines, is that we’re looking at 3x, maybe 4x faster renders on complicated projects. It’s going to render three or four times faster. On high-end machines we think there’s even a bit more we can get out of it.

The fundamental work, which we’ve talked about a bunch of times so far (see part 17), was getting the core rendering pipeline thread safe. This involved a few million lines of code, 35 different projects, all working in such a way that they are thread safe. So now we can have multiple frames rendering at the same time without one frame negatively affecting another frame.

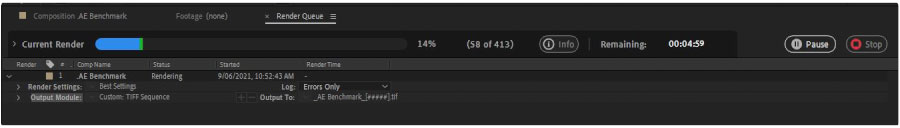

We have it working in the After Effects Beta release, and so you can see the effect of multi-frame rendering in the render queue. Within the next couple of weeks to months, that same functionality will start working in RAM previews, so when you hit the spacebar it will start rendering multiple frames. The goal there is to get closer to real time.

If your comp is currently rendering a preview at 1 frame per second, right now with the Beta we’re probably only going to get to 3 or 4 frames, but you know, it’s certainly better than one frame.

But if you have a composition that’s rendering a preview at six or seven frames a second, you might be getting pretty close to real time there, and that makes a huge difference in terms of understanding your animations, as well as the final output of whatever you’re trying to build.

Then there’s a bunch of things that we are going to be able to enable in the future, such as support from Adobe Media Encoder. When Media Encoder goes to render an After Effects project we’ll be able to support multiple frames rendering concurrently, which will speed up the output of your composition.

That will also work with Premiere Pro, so when you take a motion graphic template built in After Effects and bring it into Premiere Pro, Premiere Pro will actually talk back to After Effects and render multiple frames simultaneously, which will speed up those motion graphic templates.

Another thing we’ve sort of hinted at, is what can we do when After Effects is sitting idle? Can we do other things now to help prepare comps so they are rendered, or at least ready to render faster? For example, while we’re having this conversation, I could have pre-rendered a composition and then be ready to actually work a lot faster when I get back to After Effects.

Another thing which is super exciting is Render Queue Notifications. Using Creative Cloud Desktop and Mobile apps, you can receive notifications when your render queue jobs are complete. It even works to your associated watch. You will know that the render is done, or if there’s an error that’s occurred.

The render queue UI is also being updated. We’ve done one part of that, the top information section, but we’re working on more of that right now to make that more efficient and simpler for people to actually understand and use.

There are other things that we’re looking at, around profiling, and we haven’t talked too much about this but I’ve been a user of After Effects long before I was an engineering manager for After Effects. And I’d often end up, as a lot of designers do, getting handed a project, opening it up, having no idea what’s going on and then trying to figure out why it’s so slow. We all go through clicking eyeballs, showing layers and trying to turn things on and off, right?

That is a ridiculous waste of all of our time, and so we should be able to pop up a column that shows you: these are the layers that are slow and this is why they’re slow, and here’s the things that you could do to make this better. And then you can make a choice, or we can say hey, why don’t you proxy this? Or why don’t you pre-comp this and then pre-render that off?

So now that we’ve broken up and made our rendering pipeline thread safe, let’s see if we can actually make things like a composition profiler. And then, how do you take all of that information and give users some action items that they can actually take…

So that’s where multi-frame rendering is sort of the all-encompassing word, but what we’re really talking about is what can we do now that After Effects is a lot more thread safe? And how do we take advantage of machines that have 16, 32, 64 cores… and there’s the AMD 3990X with 128 threads?

If we can access all of those cores, not only can we make your renders super-fast, but we can do a whole bunch more at the same time. That’s really what we’ve been trying to achieve here. It’s a massive project, not only for us internally, but for those third-party developers who are making plugins. They’ve had a software model that they’ve been working with for 15, 20 years. If we are making them rethink their entire model, we still need to keep After Effects working with the old versions of the plugins to some degree.

But as they migrate and make their plugins support multi-frame rendering, it’s unlocking the performance that maybe has been stuck, because it’s been After Effects that’s the cause of it being stuck. For example, if we think of an effect where the developer has done a really good job of porting it to run on the GPU. Those effects are still using the GPU really well, but while the GPU is busy, we could be doing a whole lot of other render steps on the CPUs, and rendering other frames rather than it all being stuck, one thing behind each other.

That will take us to a stage where we’re like, oh the GPU is going great, the CPU is doing this thing, and we’re writing to disk with this frame, and all of those things are happening concurrently.

Suddenly it makes you feel really good that you spent a whole bunch of money on your machine but also, you’re actually getting results a lot faster.

CHRIS: Can you detail how much more sophisticated the new multi-frame rendering feature is than the older render multiple frames simultaneously feature?

SEAN: I can do my best to try and explain that. So, in the old world, you could pick a number of background instances of After Effects that would start up. And then After Effects would try and split the composition into a certain set of frames and then it would pass those off to render on those background instances.

That certainly had a lot of benefits and things like Render Garden today is doing something similar to that.

The problem that you run into is that one instance of After Effects requires a certain amount of RAM to start up and has certain expectations that it’s the only application of After Effects running. If you start two or three or four or ten or more of those, you’re now having to use RAM that should be used for rendering, just to host the After Effects instances.

Each instance of After Effects doesn’t know about the other instances of After Effects, and so if an effect was using memory in a strange or unique way, or writing to files to disk, the effect may have no idea that it’s overwriting another instance of itself.

The old RMFS worked well for outputting file sequences, but the overhead of trying to take a QuickTime file, distributing frames across multiple background instances, and then merging all those back together was complicated at the very least.

So with the old system, the Render Multiple Frames Simultaneously feature, there’s all these sort of weird architectural things going on so you’re not getting the most out of your system.

My understanding from talking to the team over the last few years, is that a lot of people oversubscribed their system. They would find the setting for the number of background instances and they would set it to 16, but the machine could never handle 16, and so they’re actually getting worse rendering performance than normal rendering. If they took the time to figure it out then maybe they could get better performance, but the vast majority of people either didn’t know how to, or they just didn’t use it. (this problem was discussed at length in part 8 – Chris)

So as we went to build the new multi-frame rendering, we wanted to make sure that only a single instance of After Effects was running. Any plugin thinks there’s just one After Effects, and there’s only one instance of me running. And we’re doing all of the work to make sure that the same instance of an effect can be run concurrently over lots of different frames. The developers have to do some work to make it thread safe, but effectively MFR is transparent to the effects.

The big thing from an After Effects perspective is that now we can see all of those concurrent renders happening, and so we can actually start to do more intelligent things like – oh, you know what – when we started to do 4 frames at the same time instead of three, the GPU started to run out of VRAM and we’re actually running slower than we were. So, we can scale back to three concurrent frames. Or there are spare CPU cores, so we could actually probably go to 9, 10, 11, or 12 frames concurrently. We can actually make those decisions dynamically and our goal, by the time we ship MFR, is that every single machine in the world will get the best performance for their After Effects render. We’ll be paying attention to their hardware, and their compositions, and dynamically adjusting what we’re actually doing.

There’s a lot more that we think we can do there. As we finish out this work, just to make that entire experience better, we want to add options so users can tell After Effects: I want to be able to do email or I want to be able to work in C4D while I’m rendering in the background, and then we can make intelligent decisions about rendering performance rather than taking over your entire machine. Fundamentally, we now just have a much better idea of what’s happening and After Effects can optimize for the hardware and the composition as opposed to you as a user having to try and figure it out every single time you go to render.
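
(NB. To make the idea of dynamic adjustment concrete, here is a minimal Python sketch of one way a renderer could pick a concurrent-frame count: keep adding frames while measured throughput improves, and stop as soon as it drops. This is only an illustration of the principle Sean describes, not Adobe’s implementation. The function name `tune_concurrency`, the `measure_fps` callback and the simulated numbers are all hypothetical.)

```python
def tune_concurrency(measure_fps, max_frames):
    """Raise the concurrent-frame count until throughput stops improving.

    measure_fps is a hypothetical callback: given a number of concurrent
    frames, it returns the measured render throughput in frames per second.
    """
    best_n, best_fps = 1, measure_fps(1)
    for n in range(2, max_frames + 1):
        fps = measure_fps(n)
        if fps <= best_fps:
            break  # adding a frame made things slower, e.g. VRAM ran out
        best_n, best_fps = n, fps
    return best_n


# Simulated machine (made-up numbers): throughput peaks at 3 concurrent frames.
simulated = {1: 1.0, 2: 1.9, 3: 2.7, 4: 2.2, 5: 2.0}
print(tune_concurrency(simulated.get, 5))  # 3
```

A real system would presumably keep re-measuring during the render, since the cost of each frame changes as the composition changes.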

CHRIS: All of it makes perfect sense, especially the bit about needing backwards compatibility with plugins and what it means for developers. When I interviewed the software developers a couple of articles ago, that was a recurring theme – Adobe just can’t change the API with every release because they rely on that third party market.

So if you are going to make a big sweeping change to the API, one limitation that’s been in After Effects since day one is a 2 gigabyte frame size limit. That’s something that I’m starting to run into now I’m doing all my work in 32 bit, and I’m working with larger and larger composition sizes. If you’re going to overhaul or introduce a new API, will we finally see the two GB frame limit lifted? Is there a reason that it’s still there?

SEAN: Fundamentally, it’s because it’s a 32-bit number that’s storing some of those things. You’ll find other limitations like that, such as a UI panel can’t be more than 30,000 pixels high or wide. There’s a number of those fundamental things that have existed and haven’t been fixed because they impact so few people. Up until now, it hasn’t been an issue.

But as compositions get more complex as we move to 4K, 8K and beyond, those are things that we do have to think about. Is there an immediate plan to change that 2 gig limit? Not immediately, but it’s on us to be listening to people’s needs for that. So, if there’s you and other people that are affected then let us know – I’m hitting this limit – then we’ll have to figure that out. It might mean that a bunch of effects need to be updated to support a change there.
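
(NB. The arithmetic behind a limit like this is easy to check. The sketch below assumes the limit comes from storing a frame’s size in bytes in a signed 32-bit integer, and that a frame is four channels (RGBA) of uncompressed pixels. Both are assumptions made for illustration; Sean only says that “a 32-bit number” is involved. The function names are hypothetical.)

```python
import math

INT32_MAX = 2**31 - 1  # largest value a signed 32-bit integer can hold


def frame_bytes(width, height, bits_per_channel, channels=4):
    """Uncompressed size of one frame in bytes (RGBA by default)."""
    return width * height * channels * (bits_per_channel // 8)


def fits_in_int32(width, height, bits_per_channel):
    """True if the frame's byte count can be stored in a signed 32-bit integer."""
    return frame_bytes(width, height, bits_per_channel) <= INT32_MAX


def largest_square(bits_per_channel, channels=4):
    """Side length of the largest square frame that stays under the limit."""
    bytes_per_pixel = channels * (bits_per_channel // 8)
    return math.isqrt(INT32_MAX // bytes_per_pixel)


print(fits_in_int32(8192, 4320, 32))    # True  (about 0.57 GB)
print(fits_in_int32(16000, 16000, 32))  # False (about 4.1 GB)
print(largest_square(32))               # 11585
```

Under those assumptions, a 32-bit-per-channel square composition tops out at roughly 11,585 pixels per side, which is why larger float compositions are the first to run into the ceiling.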

CHRIS: Yes, I’d assumed something like that.

SEAN: We do have the ability to version certain API’s so we can say OK, look if you’ve got an effect and it’s going to do this, that’s version one of the API. But you need to use version two to support a different sized composition limit, or something like that.

CHRIS: With multi-frame rendering there’s an underlying philosophical approach of farming out separate frames to different CPU cores. Have you considered, or is there a team looking at, say, a tile-based renderer where instead of multiple CPU cores rendering different frames you’ve got multiple CPU cores rendering different parts of the same frame?

SEAN: Yeah, so there’s actually two different investigations going along. There is an early investigation in doing tile rendering, to see how much further we can push the GPU to larger composition sizes, but that’s still pretty early on.

The other piece is: How do we take an individual frame and render it across multiple threads? Right now, we’re rendering multiple frames, and each thread has one frame to render. That’s the core philosophy of multi-frame rendering, it’s lots of threads, but each one of those threads only has one frame.
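
(NB. The “one frame per thread” model can be sketched in a few lines of Python. This is a hedged illustration of the scheduling idea only: `render_frame` is a hypothetical stand-in for real rendering work, and Python threads will not show the speed-up that native code gets. The point is that each worker takes a whole frame, and that this is only safe if rendering one frame never modifies state shared with another.)

```python
from concurrent.futures import ThreadPoolExecutor


def render_frame(frame_number):
    """Hypothetical stand-in for rendering one frame.

    It reads its input and returns a result without touching any shared
    mutable state, which is what makes it safe to run on several threads.
    """
    return frame_number, f"pixels for frame {frame_number}"


def render_range(first, last, workers):
    """Render frames first..last with one frame per worker thread at a time."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() hands out one frame per task and returns results in frame order.
        return list(pool.map(render_frame, range(first, last + 1)))


frames = render_range(1, 8, workers=4)
print([number for number, _ in frames])  # [1, 2, 3, 4, 5, 6, 7, 8]
```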

Then there is the sort of inverse version of that which is one frame has multiple threads. And that is something that we have spent a lot of time internally thinking about. We couldn’t work on it until this first version of Multi-Frame rendering was complete. It’s taken all this time to get the code thread safe.

The next step is, when you go to render an After Effects composition, we build what we call a render tree in the background. This process takes all the layers and all of the effects and all the transforms, all the masks, everything that makes up the After Effects composition and actually breaks it up into a tree, or something that looks like a node-based system, in the back end. And then we traverse that tree to be able to say OK, well we need a square and then we need to transform that and then we need to apply an effect to that, whatever it takes to output that final composition frame.

How can we break that render tree up so that we can say OK, this portion can be safely done on one thread and this portion will be done on another thread, and then how do we merge all of those pieces back together? Our early estimates show that we might be able to improve performance 50% if we can make that system work. I wouldn’t quote me on that, because it’s very, very early on.

We don’t know if we’re actually going to end up prioritizing that as work, but that’s the sort of two angles for tile-based rendering, and then also breaking up a single frame into its renderable nodes.
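
(NB. As a rough illustration of the render tree idea, the Python sketch below builds a tiny tree and evaluates its independent top-level branches on separate threads before merging them at the root. The `Node` class, the node names and the string “rendering” are all hypothetical and chosen only to show the shape of the problem. The real After Effects render tree is not public, and this is not how Adobe implements it.)

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class Node:
    """One step in a hypothetical render tree, e.g. a layer, effect or transform."""
    name: str
    inputs: list = field(default_factory=list)


def evaluate(node):
    """Depth-first, single-threaded evaluation of one subtree."""
    rendered = [evaluate(child) for child in node.inputs]
    return f"{node.name}({', '.join(rendered)})" if rendered else node.name


def evaluate_parallel(root):
    """Evaluate each top-level branch on its own thread, then merge at the root."""
    if not root.inputs:
        return root.name
    with ThreadPoolExecutor(max_workers=len(root.inputs)) as pool:
        branches = list(pool.map(evaluate, root.inputs))
    return f"{root.name}({', '.join(branches)})"


comp = Node("blend", [
    Node("blur", [Node("transform", [Node("square")])]),
    Node("glow", [Node("text")]),
])
print(evaluate_parallel(comp))  # blend(blur(transform(square)), glow(text))
```

Only the root’s branches are split here, which keeps the sketch free of nested-pool deadlocks. Deciding which portions of a real tree are safe to separate is exactly the hard part Sean describes.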

CHRIS: One recurring theme in my article series is bottlenecks, when you have system bottlenecks causing slowness that’s not After Effects’ fault. I’ve personally sat next to designers using a $12,000 machine and they’re reading ProRes files off a USB 2 hard drive and things like that. Is there a way that After Effects can tell that? I’ve freelanced at studios with multiple employees and they talk about “the server”, and “the server” is a consumer grade Synology which is battling away trying to keep up with a room full of artists.

Is there a way that After Effects can say – Hey, it’s not my fault, you know, the USB drive or the network server is the problem?

SEAN: So today – no. By the time we’re finished with multi-frame rendering, then quite possibly. That has been one of the core things that we need to be able to analyze – compositions and hardware resources, and then optimize for that. We need to be watching CPU, RAM, VRAM, disk throughput, all of those sorts of things and so our goal here is to figure out a way to take that information and surface it to you so that AE could tell you that it’s using 100% CPU and maybe you should get a faster CPU. Or AE is running out of RAM about 3/4 of the way through a render. Maybe you should get more RAM, and then that will let the render complete, and also be faster.

So, we’re going to have all of these key points of data, and what we’re now trying to figure out is how do we present that in a way that a user can take action on that. System throughput, network speed, disk speed, all of those things we can get access to.

But then you know there’s that additional step of ‘How do we tell users what’s happening?’ so they can make a good choice.
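
(NB. A very simple version of “tell the user what the bottleneck is” could look like the Python sketch below. It takes utilization samples per resource, each between 0 and 1, and reports the busiest resource if it is close to saturated. The resource names, the sample values and the 90% threshold are all made up for illustration. This is an assumption about how such a report might work, not a description of anything in After Effects.)

```python
def find_bottleneck(samples, threshold=0.9):
    """Return the most heavily used resource if it is near saturation, else None.

    samples maps a resource name to a list of utilization readings (0.0 to 1.0).
    """
    averages = {name: sum(values) / len(values) for name, values in samples.items()}
    busiest = max(averages, key=averages.get)
    return busiest if averages[busiest] >= threshold else None


# Hypothetical readings: fast CPU, footage on a slow external drive.
readings = {
    "cpu": [0.35, 0.40, 0.38],
    "ram": [0.60, 0.62, 0.61],
    "disk_read": [0.97, 0.99, 0.98],
}
print(find_bottleneck(readings))  # disk_read
```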

CHRIS: You’ve mentioned a few times about the potential for After Effects to do stuff in the background. In terms of my personal workflows, I’m a huge fan of pre-rendering, I pre-render a lot, whenever I can. I use BG renderer and I tend to do it manually. I do sometimes use proxies, but I often find myself doing it manually because I have to say I don’t find the proxy system always intuitive, and it’s a bit limited. So this is an example of an area where performance isn’t a specific hardware or engineering challenge, it’s more of a workflow tied to the user interface.

From Adobe’s perspective, how do you separate performance feature requests that need engineering solutions to those that are actually a user interface issue, trying to make something easier for the user?

SEAN: It is the crux of many conversations every week, which is what are we prioritizing, how do we prioritize it, who are we prioritizing for? Some of it’s just fundamental architecture and engineering.

For example, if we know that that pre-comp is slow and you’re working on something else in AE, and that pre-comp is not being touched, we could go and render that in the background. At least get it to the disk cache for you, and so the next time you go to playback, that’s already done. You don’t have to think about proxying it or pre-rendering; it’s already down on disk and it’s usable.

That’s one example of the types of things we’re trying to now think about, about how we can do that, something we haven’t been able to do in the past.

Then there’s the workflow situation where AE detects that this comp is complex, but sees that layers 11 through 50 could probably be made faster. Do you want us to do that for you? That could be something else that we start to think about. It’s going to take time to do that render, but maybe you’re OK with that and AE spins that off in the background. You continue to work and it’s just ready when it’s ready.

So yeah, those are things we definitely want to start to think about taking advantage of.

CHRIS: One thing I haven’t mentioned is the disk cache. I’ve got a high speed NVMe disk cache, but is using a disk cache a bit like using the older Render Multiple Frames Simultaneously feature, where if it’s too big or too small then rendering ends up slower than it would be without it? Or is it always a good thing?

SEAN: At this point it’s always a good thing. If we do have to start clearing out the disk cache, that’s going to slow things down to some degree. But if it’s on a fast drive and it’s large, that’s great, yeah.

Honestly, in a lot of our internal testing that we do right now, we turn the disk cache off so that we’re not having to purge the disk all the time for our tests. It’s funny when I turn it back on I’m like, oh, this is even faster! It’s one of those things where you stop using the disk cache and you just get used to it, and then you turn it on and it’s like, oh it’s actually better.

One of the things we’re trying to talk through internally, just hypothetically, is what would happen if we didn’t have a RAM cache? If we saw that you had an NVMe drive and it was always fast enough to get frames from the disk up into the display pipeline, do we need a RAM cache? Because the RAM cache has that sort of issue where, if you run out of RAM cache, you can’t cache the entire composition.

But if we can fit everything on the disk, and if we can get it off the disk fast enough, then do we need it in RAM? That could be a user preference one day or something like that, where we’re always just writing directly to the disk cache and reading from it. The disk cache is an interesting thing, and it’s probably got more potential value than we’re using it for right now, it’s probably one of the issues that we need to deal with.
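
(NB. Whether a disk is “fast enough” for real-time playback is a matter of simple arithmetic: bytes per frame multiplied by frames per second, compared with the drive’s sustained read speed. The Python sketch below assumes uncompressed RGBA frames and a hypothetical NVMe drive that sustains 3 GB per second. Both figures are assumptions for illustration, and the actual on-disk format of the After Effects disk cache may well be different.)

```python
def required_bytes_per_second(width, height, fps, bits_per_channel=32, channels=4):
    """Read bandwidth needed to stream uncompressed frames at the given frame rate."""
    return width * height * channels * (bits_per_channel // 8) * fps


def disk_keeps_up(width, height, fps, disk_bytes_per_second, **frame_options):
    """True if the disk's sustained read speed covers real-time playback."""
    needed = required_bytes_per_second(width, height, fps, **frame_options)
    return needed <= disk_bytes_per_second


NVME_READ = 3_000_000_000  # hypothetical drive: 3 GB/s sustained reads

print(required_bytes_per_second(1920, 1080, 30))  # 995328000, about 1 GB/s
print(disk_keeps_up(1920, 1080, 30, NVME_READ))   # True
print(disk_keeps_up(3840, 2160, 30, NVME_READ))   # False, needs about 4 GB/s
```

Under these assumptions, HD at 32 bits per channel streams comfortably from the drive, while uncompressed 4K at the same bit depth does not, so any “disk only” cache would depend heavily on composition size and bit depth.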

When you think about what’s going on in the gaming world, what Xbox and PlayStation have been able to do in terms of streaming frames, or loading content off the SSD fast enough to get it into memory and rendered, then we should be able to start thinking about taking advantage of those techniques too.

CHRIS: I know that when people start talking about performance, for some of them it just means a hardware recommendation. I assume Adobe is hardware agnostic, but are there technical differences between, say Intel and AMD which the end user may or may not be aware of?

SEAN: We’ve tried to be hardware agnostic, and often will defer optimizing some bit of code because we don’t want to be faster on only a particular piece of hardware. The Apple Silicon hardware is something we’re going to spend more time on, as we make After Effects work on it, and making it work with the systems that are on that chip – whether it’s machine learning or the neural network or video encoding or anything like that, we want to take advantage of that hardware when it’s there.

It’s been more of – After Effects is a generic solution that needs to work on Mac and Windows, all the sorts of flavors on each OS, and we want it to be consistent. So, we haven’t spent the time to do that – to optimize for specific hardware. That said, we are starting to shift. I mentioned this on the Puget Systems interview, but the AMD Threadripper CPUs have a different caching structure to Intels. Every four or eight cores has a different cache, whereas on the Intel side it’s one cache shared across all of those CPUs. Both of the architectures have benefits and drawbacks. But if we can optimize when we’re on an AMD Threadripper and we’re working on frames 1 through 8, and that’s all going to be on one CPU block, cores 1 through 8, we can put all of the data into that cache and not spread it around.

It’s probably a mental and paradigm shift that we’re starting to work through to be like, OK, you know an Afterburner card in a Mac – how do we take advantage of that so that we get ProRes decodes and encodes faster? These sorts of things we’re now starting to think more and more about.

CHRIS: It’s obviously been a huge undertaking just to get the multi-frame rendering implemented. Have you looked beyond that to things like overhauling watch folders and implementing a built-in Adobe render farm?

SEAN: I haven’t, there might be other people who are thinking about it, but I certainly haven’t at this point now.

CHRIS: Because there’s a point at which a render farm is a next logical step. You can give people hardware advice for a specific system, and you can talk about GPUs and so on, but there’s a point at which you’ve spent as much money as you can to get performance out of one box. The next step is to just build a render farm, and obviously there are people who cater for that, like Thinkbox with Deadline and so on. But I also do a lot of regular work for a studio that still relies on the old After Effects watch folders and that is a bit painful. I don’t think watch folders have been touched for 25 years.

SEAN: Sounds about right. I’m not going to say people aren’t thinking about it, whether it’s Adobe Media Encoder and thinking about how that extends.

An obvious extension is rendering in an AWS or an Azure cluster or something like that. Imagine if you could host licenses of After Effects up there, and you could split your project and then have multi-frame rendering on top of that. Could you take a 12 minute single frame render, throw it to the cloud and have that render in 20 seconds? I think that’s a bit of a dream for me to see that happen one day. But I can’t confirm anything is happening right now.

Right now, we’re going to finish what we’ve started with Multi-Frame Rendering.

CHRIS: What actually is the difference between AE, AE without a GUI, and aerender?

(NB. This might seem like an unusual question. But for 3rd party developers who’ve written tools to assist with After Effects rendering, including BG Renderer, Render Garden and Render Boss, there are different technical options for background rendering.)

SEAN: There’s actually 3, maybe 4 different versions of the After Effects renderer. There’s After Effects that you run that has a UI. There is a way to run that version of After Effects where the UI doesn’t appear. Then there’s another thing called aerender, which is what people generally use to render from the command line. Internally we have another thing called AE Command, which may one day replace aerender but we haven’t had time to finish it. Then there is something we call AElib, which is basically the communication between Premiere Pro and After Effects when you’re doing motion graphics template sharing.

All of those run in various ways, they all end up calling the same code, but there are various entry points.

CHRIS: I think one of the big advantages of aerender is that it very explicitly says on the Adobe website that it doesn’t require an additional license. Once you’ve got your CC subscription for After Effects, it’s very generous, you can run as many render farm nodes as you want, but I was wondering whether running the GUI-less version of AE has that same freedom or whether you’re using up one of your two licenses.

SEAN: You’ll still be using your licenses there; it’s just not presenting the UI.

(NB. Again, this question relates to 3rd party background rendering utilities.)

CHRIS: Just looking back over my notes, one of the big things in my series was talking about bottlenecks and throughput and bandwidth. Probably bandwidth because that’s what After Effects does. It’s streaming, you know “all of the pixels all the time…”

SEAN: All the pixels all the time, yeah.

CHRIS: Does that reliance on bandwidth translate to some hardware you can add to your system? Like if you’re planning on building an Intel system, should you wait a month for a PCI 4 motherboard? Is that gonna help? Personally, I always advocate for SSD’s and fast NVMe drives. Do you have anything to add just on the topic of bandwidth and After Effects?

(NB. At the time I interviewed Sean, Intel motherboards with PCIe v4 hadn’t been released yet. It’s possible that by the time you’re reading this, Intel PCIe v4 motherboards have become available – Chris)

SEAN: Yes, there are use cases right now where it is possible to saturate the PCI bus, that’s PCI version 3. PCI 4 is a little bit harder to saturate, but as we add more and more threads, and if that involves transferring data to the GPU to run certain effects processing, that bus is certainly going to be more and more busy. I don’t think we’ve got a situation where we’ve been able to saturate a PCI 4 bus at this point.

So that would be one piece of advice: if you are looking to buy a system in the next six months, I would say hold on for a couple more months. If you can wait for the point where you can test the shipping version of multi-frame rendering, you can see exactly what its demands are.

We’re working with Puget Systems, and the Dells and HP’s and other OEMs to update their configurations and recommended specs. In the past it has been an Intel CPU with 8 gig of RAM, maybe 2 gig of GPU VRAM. That’s no longer going to be enough to really take advantage of multi-frame rendering to the extent you want to. Soon you’re going to be looking at 16 to 32 cores, and you’re going to be looking at 64, 128 gig of RAM, and you’re going to want to get as much video RAM as you possibly can.

We’re going to come out with some guidelines, such as for this spec machine, here’s the performance improvement we saw. Then you can make a choice as a consumer to say, OK, well, I’ve got $3000 to spend. This is going to be the best machine that we can come up with. Or if I’ve got $10,000, here’s what we can go with.

The CPU clock speed is still going to be super important. A little less important than it has been in the past, because we can have more cores going, but at the same time there is going to have to be some sort of balance there.
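To put rough numbers on the bus saturation Sean describes, here is a minimal back-of-envelope sketch in Python. It is not from the interview and says nothing about how After Effects actually moves data. The frame format (UHD, four channels, 32-bit float) and the x16 link width are assumptions chosen for illustration, and the per-lane rates are the theoretical PCIe 3.0 and 4.0 figures, which real systems will not fully reach.

```python
# Back-of-envelope estimate: how many uncompressed frames per second could
# cross a PCIe link in one direction? All inputs are illustrative assumptions.

# Theoretical one-way bandwidth per lane in bytes/second.
# PCIe 3.0 runs at 8 GT/s and PCIe 4.0 at 16 GT/s, both with 128b/130b encoding.
PCIE_LANE_BYTES_PER_SEC = {
    3: 8e9 * (128 / 130) / 8,
    4: 16e9 * (128 / 130) / 8,
}


def frame_bytes(width, height, channels=4, bytes_per_channel=4):
    """Size of one uncompressed frame (default: RGBA at 32-bit float)."""
    return width * height * channels * bytes_per_channel


def max_frames_per_second(pcie_version, lanes, width, height):
    """Upper bound on frames/s that fit through the link in one direction."""
    link_bytes_per_sec = PCIE_LANE_BYTES_PER_SEC[pcie_version] * lanes
    return link_bytes_per_sec / frame_bytes(width, height)


if __name__ == "__main__":
    for version in (3, 4):
        fps = max_frames_per_second(version, 16, 3840, 2160)
        print(f"PCIe {version}.0 x16: about {fps:.0f} UHD frames/s, one way")
```

Under these assumptions a PCIe 3.0 x16 link tops out at a little under 120 such frames per second in one direction, and PCIe 4.0 at twice that. If several frames render at once and each one crosses the bus more than once for GPU effects, that budget shrinks quickly, which fits Sean’s point that version 3 can already be saturated while version 4 is harder to fill.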

CHRIS: When I was interviewing the third-party software developers, a few of them had some great quotes. One of them was talking about how sometimes software development just takes as long as it takes. He mentioned a quote “It takes nine months to have a baby, no matter how many women you’ve assigned.”

SEAN: The Mythical Man-Month!

CHRIS: Yes! What parts of After Effects, specifically talking from your experience at Adobe, just take as long as they take? i.e. What can’t be sped up by just hiring a bunch of programmers?

SEAN: I think one of the things that people lose sight of is After Effects being part of Adobe, and being part of the Creative Cloud Suite. And so there are a lot of things that a lot of customers probably don’t care about that we still have to do. You know the home screen? That, for instance, is a thing that every Creative Cloud product has. Would we, if we were After Effects and only After Effects, have spent time building a home screen? I don’t know and it’s hard to say, but that’s an initiative that is part of the Creative Cloud suite. There are things as you plug into Creative Cloud, whether it’s language support or it’s installation or versioning or synchronizing preferences or talking to back end systems. And there’s a lot of stuff that no-one has any clue that we have to do, and we have to spend a lot of time doing that.

So that’s one thing that sort of waylays us from fancy features that really make a difference.

Another piece is – yeah, we’re working in an organization with 400 other engineers, and so when you make a change, if it’s in a shared piece of code, you might be affecting Premiere Pro and Audition and Prelude and whatever other products are in the codebase. So you, as the developer, using our entire infrastructure, have to spin up builds of all of those products and make sure that everything is still working with your changes. Even changing one line of code could cause you four or five hours of building different products, and then testing those products.

We have moved to a model where we don’t check in code until our testers have run that code and tested the product. So, it might take a day for someone to implement something, and then we might spend a week testing it and then it gets checked in. Then it goes into the beta process and then we have days to months where it’s in our community and we’re getting feedback on it. We might have to iterate multiple times.

So, it’s just being part of a larger organization, and one with a 27-year-old code base. Now you know there is legacy code in there. When people first come to work on After Effects, it may be code that no one’s ever seen in their entire coding career because we’re going back that far. And I have had the privilege of touching code that was written in 1995 and being like – oops, I don’t want to break this. I know that this code is used throughout the app, and you’ve just got to be very careful as you touch it.

CHRIS: OK, so when I wrote up a list of notes for our interview, I had two questions which I thought you probably get asked a lot. The first one, which I know many users have asked, is ‘why can’t After Effects use the Premiere engine?’, and you’ve answered that in great detail, thanks. (see part 17 – Chris)

So I think it’s time to finish off with the second one. And while this might not be as common as the Premiere question, I’ll admit I’ve spent a very long time pondering this, and I know I’m not the only After Effects user to do so.

So here we go…

Has it ever been discussed to just draw a line under After Effects as it is – freezing it and calling it something like After Effects Classic? Then starting again from scratch, rewriting it from the ground up and calling it After Effects Pro?

SEAN: I mean, has there been a conversation like that? Yeah, absolutely. I believe you’ve asked other people how long would it take to rewrite After Effects if we were going to start again. We internally refer to that as “What is After Effects.Next?”

But we also ask ourselves: if we decided to do that, then what would be the first three things we would go on to build, and would they look any different than they look today?

And that’s a question that we haven’t found a strong enough answer to go off and do it yet.

We’ve talked about things like – What would it look like in the mobile space? Is there a reason to have After Effects running on a mobile device? It’s hard to know. That’s a topic that we want to talk about, but we’re not sure that people want to make motion graphics at pro quality levels sitting on an iPad. Maybe they do and we just don’t know it yet.

Or is there a simplified version of After Effects? I’m not going to say “After Effects Express” or something like that, but is there a simplified version to make that easier? Maybe, but should we just simplify the UI and keep the current version of After Effects and just make it easier to start with for a user? Maybe that’s the right approach to that option.

So yes, conversations like that happen every time we have a manager offsite for After Effects, but we don’t have a compelling reason to do it right now.

One recent reason for asking this question though occurred as we have thought about Apple Silicon. Do we just port After Effects as it runs on the Mac today to work on M1/Apple Silicon natively? Or are there things that we’ve held onto from a legacy standpoint that we should end of life? Then we could make the Apple Silicon version a little bit more streamlined… but the answer is pretty much no. We’re going to port it, that’s what people expect After Effects to do, and as much as we can make work, we will make work.

CHRIS: I’ve spent a lot of time thinking about this sort of stuff, you know, what happens after After Effects?

First of all, I see After Effects being defined by the fact that it’s timeline based and layer based, so for me that’s what After Effects is. It’s never going to be a node based compositor. Anyone who asks for nodes, you just say go use Nuke, or Fusion. There’s no reason to make After Effects node based.

Then you ask something like – OK, so we have a timeline, because After Effects is timeline based, and we have layers, because After Effects uses layers. After Effects IS layers in a timeline. But how do you mix 2D and 3D layers in a timeline? Because at the moment I hate binning. I think that it’s quirky. It takes years to understand. So mixing 2D and 3D layers in a timeline is a fundamental UI problem to be solved.

(NB. If you haven’t heard the term before, “binning” refers to the way groups of 3D layers are rendered separately and combined with 2D layers – Chris)

And then you’ve got annoying inconsistencies like the coordinate systems. This is a real pain, especially when you get into script writing. Layers are anchored from their top left, with Y inverted. But then you have shape layers, which are centered around 0. But then you also have 3D layers, which default to being centered around composition space. So you have three different coordinate systems in the one app.

So I just sit there and think – a lot of the big problems with After Effects are actually to do with the user interface. I think if anyone was going to rewrite After Effects, the difficult issue isn’t GPU rendering, or tile-based renderers, it’s not talking about multi-core support, or OpenCL versus CUDA or something like that. Those problems have logical solutions.

The hard part is what do you actually want the interface to look like? Like how do you mix 2D and 3D layers, while retaining the core identity of what After Effects is? Because if you make everything 3D then the timeline/layer system breaks, and all of a sudden After Effects is just another 3D app. So then the problem becomes – how do you make After Effects “After Effects”, without it becoming a Cinema 4D clone?

But then if you don’t make everything 3D, and you keep the distinction between 2D and 3D layers, then how do you work with them, differentiate them, layer them with blending modes? And I don’t have answers for that. I guess if I did I’d be a UI designer, and I’d be a software designer.

It’s the same problem with expressions. How do you make expressions easy for a user? Because even now something as simple as keyframing the amount of wiggle, well you still need to know what you’re doing. It shouldn’t be as hard as it is.

So I just think that the biggest problems and the hardest part to change is the user interface. I think if anyone ever wants to replace After Effects or rewrite After Effects from scratch, you don’t worry about the technical side of it, you’ve got to solve interface problems. No one has done it yet, I mean.

I’m just commenting on this because there are so many users out there who just want GPU rendering, or are saying hey, why don’t Adobe rewrite it from scratch. But my opinion is that I don’t think that GPU rendering is the hard part. There’s other stuff to fix first. Stuff like rigging and physics. How do you mix forward kinematics with inverse kinematics – you want a character to pick up a piece of text and hand it off to another character? Or mixing physics with keyframes. Or how do you toggle parenting on and off? How do you mix expressions and keyframes – how do you make it easy, you know?

SEAN: Yeah, After Effects for a long time has been built by engineers and not by designers. But over the last couple of years, we have grown our design team from basically half a person to 3 ½ people.

The problem space of how you mix 2D and 3D is something that’s actually being deeply looked at right now, and that may fundamentally change some of the way we think about binning, or not binning things, and those sorts of questions I think are important for you to keep raising because we’re listening to that. There is stuff happening, it’s early. It’s hard to talk about too much right now because we’re still trying to figure out what that all means.

But you know, the UI is certainly something… we’re conscious of it being too hard for beginners. It’s still too hard for a lot of people who know what they’re doing. There’s a lot of stuff that’s just sort of hidden away, and we need to stop thinking like engineers and start thinking like designers and users of the product to make this the best possible thing we can.

My response to all this is that you’re not wrong on any of it, and these are areas that we want to address.

CHRIS: Brilliant. Thanks so much for your time and willingness to talk about everything.

—————————————————

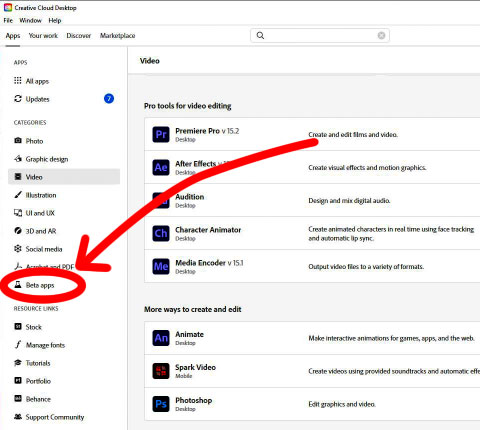

Creative Cloud subscribers can download and test out multi-frame rendering RIGHT NOW!

Something I think about a lot is what users expect when they buy new hardware. If you spend $1,000 to upgrade your GPU, then what do you expect from render times? Rendering twice as fast, 25% faster, more or less? Common benchmarks for 3D games can often show relatively modest frame rate increases between old and new GPUs, and the gains between CPUs can be even smaller. From this perspective, the ability to have After Effects render a composition twice as fast just by downloading a Beta version – for free – is incredibly exciting. And as we’ve just heard, this is only the beginning. As the After Effects Performance Team continue their work, the improvements in performance that we’ll see from software updates could well outpace any improvements that rely on expensive hardware.

I hope that readers who’ve made it this far through the series are grateful to Sean, and the After Effects team, for their help in this series on “After Effects and Performance”.

Overall, it’s clear that After Effects is in good hands, and judging from the huge progress that the Performance team have made in only 18 months, we have a lot to look forward to in the future.

This is part 18 in a long-running series on After Effects and Performance. Have you read the others? They’re really good and really long too:

Part 1: In search of perfection

Part 2: What After Effects actually does

Part 3: It’s numbers, all the way down

Part 4: Bottlenecks & Busses

Part 5: Introducing the CPU

Part 6: Begun, the core wars have…

Part 7: Introducing AErender

Part 8: Multiprocessing (kinda, sorta)

Part 9: Cold hard cache

Part 10: The birth of the GPU

Part 11: The rise of the GPGPU

Part 12: The Quadro conundrum

Part 13: The wilderness years

Part 14: Make it faster, for free

Part 15: 3rd Party opinions

Part 16: Bits & Bobs

Part 17: Interview with Sean Jenkin, Part 1

And of course, if you liked this series then I have over ten years’ worth of After Effects articles to go through. Some of them are really long too!