Have you ever wished that After Effects was a bit faster? I’m guessing that every After Effects user has stared at the blue rendering bar hoping for a miracle. Let’s assume you’ve got a modest budget to spend on either a new computer system, or upgrading the one you’ve got. How best to spend the money?

If you’re trying to make After Effects run faster, how do you actually do it?

In Part 4, we’re going to look at this problem from a slightly backwards perspective. Instead of asking what we can do to speed up After Effects, we’re going to look at all the things that slow it down. In Part 1, I said that it’s possible to spend a lot of money upgrading your computer without making After Effects any faster – and here we’re going to look at some reasons why.

There’s an old saying, “a chain is only as strong as its weakest link”, and I’m going to paraphrase it for rendering: After Effects is only as fast as the slowest component in your computer. Your computer is a collection of hardware and software that generally cooperate to get things done, but there are many individual parts doing specific jobs. Sometimes, depending on exactly what you’re doing, the workload is spread evenly among all of these parts. However, it’s more likely that at any given moment one part is doing significantly more work than the others, or is being held up waiting for something else to finish. This happens all the time, and in everyday use you don’t really notice it, especially when you’re using your computer for a diverse range of tasks.

When we look at a very specific case, such as working or rendering in After Effects, we can examine what load is being placed on which components, and whether any part is consistently slowing the overall process down. That scenario is a classic example of a “bottleneck”. Fixing a bottleneck that’s holding everything up can make a bigger difference to performance than upgrading a component that isn’t being stressed to capacity. In this article we’ll look at some hardware components. Future articles will be dedicated to specific components, including CPUs and GPUs, and then there’s always software. But let’s begin with a few bits that are often overlooked.

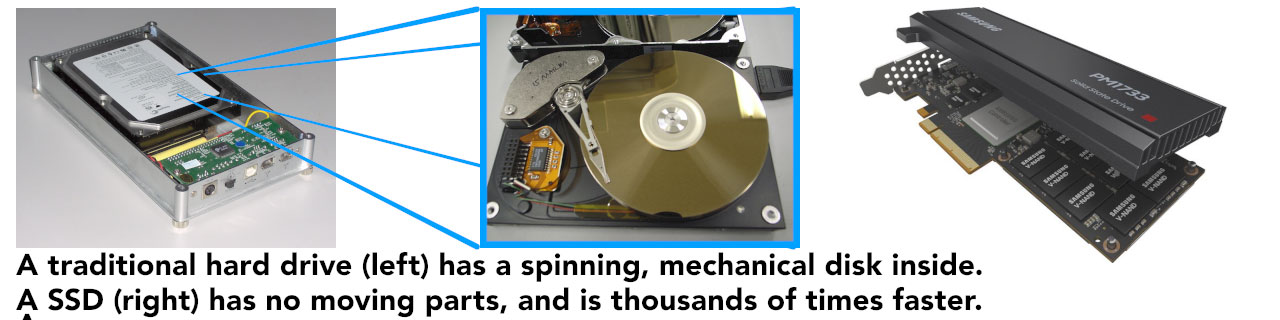

One very relevant example is upgrading an older spinning hard drive to an SSD. Just a quick recap: traditional hard drives have disks inside that spin around – they’re a mechanical device. SSDs are just a collection of chips, like a gigantic USB stick. With no moving parts to wait for, SSDs can be hundreds or even thousands of times faster than spinning drives at jumping between lots of small pieces of data, and several times faster at reading long files. They’re more expensive per gigabyte than spinning drives and typically can’t store as much, but they are massively faster.

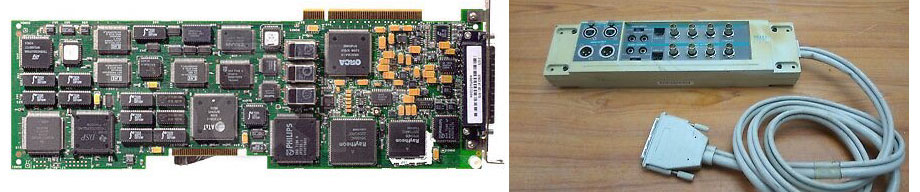

Consider a hypothetical example where we are buying a new laptop, and we have a limited range of configuration options. The default model comes with a spinning hard drive and a mid-range CPU.

For the same amount of money, let’s say $250, we can either upgrade to an SSD or get a faster CPU. You might expect the CPU upgrade to increase the computer’s performance, because the CPU is faster. But in many cases the slow spinning HDD is holding everything else up: it’s a bottleneck. Even though it’s logical to assume that a faster CPU will make the computer faster, in reality you’ll get more performance out of the SSD, because the CPU isn’t working at 100% capacity – it’s mostly just waiting for the slow spinning HDD. Depending on the type of work you’re doing, you could spend your $250 on the CPU upgrade and not notice any increase in speed at all.
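To make the weakest-link idea concrete, here is a minimal Python sketch of a two-stage pipeline in which the drive delivers footage and the CPU processes it. The timings and upgrade factors are invented for illustration, not measured, and the model assumes the two stages overlap, so the slower stage sets the pace for every frame.

```python
def frame_time(disk_seconds: float, cpu_seconds: float, overlapped: bool = True) -> float:
    """Seconds per frame for a simple two-stage pipeline.

    If the stages overlap (the drive reads the next frame while the CPU
    works on the current one), the slower stage sets the pace.
    If they run one after the other, their times add up.
    """
    if overlapped:
        return max(disk_seconds, cpu_seconds)
    return disk_seconds + cpu_seconds


# Hypothetical baseline: the spinning drive takes 0.8 s to deliver the footage
# for one frame, and the mid-range CPU takes 0.3 s to process it.
baseline = frame_time(0.8, 0.3)
faster_cpu = frame_time(0.8, 0.3 / 1.5)  # assumed CPU upgrade: 1.5x faster
ssd = frame_time(0.8 / 10, 0.3)          # assumed SSD upgrade: 10x faster reads

print(f"baseline:   {baseline:.2f} s/frame")
print(f"faster CPU: {faster_cpu:.2f} s/frame")
print(f"SSD:        {ssd:.2f} s/frame")
```

In this toy model the CPU upgrade changes nothing, because the CPU was already idle while it waited for the drive, whereas the SSD cuts each frame from 0.8 s to 0.3 s. At that point the CPU becomes the slowest link, and only then would a CPU upgrade start to pay off.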

What’s in the box

Even a luddite will recognize the most basic components in a computer. Especially when it comes to laptops, hardware components have become pawns in marketing spiels: something that was simply a processor now has a fancy name and slogan. The terminology used by competing manufacturers is not always standardized or interchangeable, and is sometimes downright confusing. But beyond the marketing jargon, when buying a consumer-orientated computer there are four components that are used to differentiate models and prices:

- CPU

- Graphics card (GPU)

- RAM

- Hard Drive

There’s more in the box than just those four things, but they’re the high-profile parts that have the greatest impact on price.

Windows users, especially those who build their own desktop machines, may also pay attention to the chipset on the motherboard. Among other things, this will affect the RAM speed and overall bandwidth of the system. Mac users don’t have to choose between different motherboards (Apple calls them “logic boards”) but they are still a critical component of every Mac computer. Occasionally in the past Apple have touted memory speeds or other motherboard-related specs, but generally if you’re buying an Apple you just get what you’re given.

Another factor that can affect After Effects users is network adapter speed – generally a choice between 1 gig Ethernet and 10 gig Ethernet. Apart from my home computer, I can’t remember the last time I worked in an office that didn’t have some sort of network, or networked storage. The network is easy to blame when things are slow: you just say “the network is a bit slow today” and everyone grunts in agreement, except Keith, who’s hoping that no one will notice he’s using BitTorrent to download every episode of Game of Thrones in 4K.

Because of the type of work that After Effects users do, it’s common to work off external drives. In Part 3 we looked at how large video files can become, and if you’re dealing with footage from the latest 4K, 6K or 8K cameras then you’re going to need a lot of external storage. This might be as simple as a small portable hard drive, a more industrial RAID unit, or an expandable solution from vendors like Synology, QNAP or Drobo.

All together, this gives us a list of components that ultimately determine how a computer performs, and it’s reasonable to expect that higher-performance parts will cost more.

- CPU

- GPU

- RAM

- Hard Drive

- Motherboard (System bandwidth / memory speed)

- Network adapter

- External storage

In addition, in a typical studio environment we must also consider:

- office network speed

- switch / router speed and bandwidth

- server and SAN (storage area network) speed

It’s natural to assume that spending more for a “faster” version of something will make your computer faster. It certainly can, and if this series were about 3D rendering it would be a different story. But as with our SSD example above, this isn’t always the case. After Effects – like non-linear editing – is a specific case, and it’s not so easy to generalise.

In Part 2 of this series, I explained exactly what After Effects does: it combines bitmap images together. In Part 3, I looked at how large bitmap images can be, and how much information is involved in processing every pixel of an image. Unlike 3D rendering, it’s not unusual for After Effects to be working with a lot of huge files – non-linear editing is similar. After Effects performance isn’t just about the raw processing speed of a CPU; it’s about the speed of shuffling around large amounts of data.

Future articles in this series will focus on the CPU and the GPU, but we need to look beyond the most obvious parts of a computer when it comes to After Effects. As this series is about the history of After Effects and performance, I’m going to use a real-life example from my early days – now just a piece of history.

It’s the story… of a lovely codec

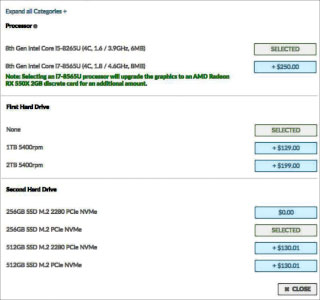

So it’s the late 1990s and you’re sitting in front of a Media 100 edit suite. The brief is to re-create the opening titles to “The Brady Bunch”. Someone’s gone out and filmed 9 people smiling at the camera, and you’ve got to assemble the clips together. Simples!

In Part 3 I outlined how desktop computers in the 1990s weren’t powerful enough to play animation. Premiere version 1 had a maximum resolution of 160 x 120. After Effects didn’t have a RAM preview until version 4 because, well, the average computer didn’t have enough RAM to store more than a few frames of video and even if it did, it wasn’t fast enough to play them back smoothly. If you wanted to work with video and animation, you needed a specialized video card.

(This era is something I’ve written extensively about in other articles, including a series on the desktop video revolution, and another on how non-linear systems were designed.)

Typical CPUs in the mid-1990s had clock speeds of around 50 MHz (today it’s more like 3,000 MHz), and even a single JPEG file could take many seconds to open. Companies including C-Cube, Truevision and Media 100 developed specialized chips for processing video, which formed the basis of early video editing cards.

A 1990s video editing card was basically an analogue-to-digital converter: it took analogue video from a tape deck and saved it as a digital file on a disk. Different brands of video card saved the file using their own format, or codec. This was referred to as a “hardware codec”, because the custom hardware on the video card compressed and decompressed the images, not the computer’s CPU.

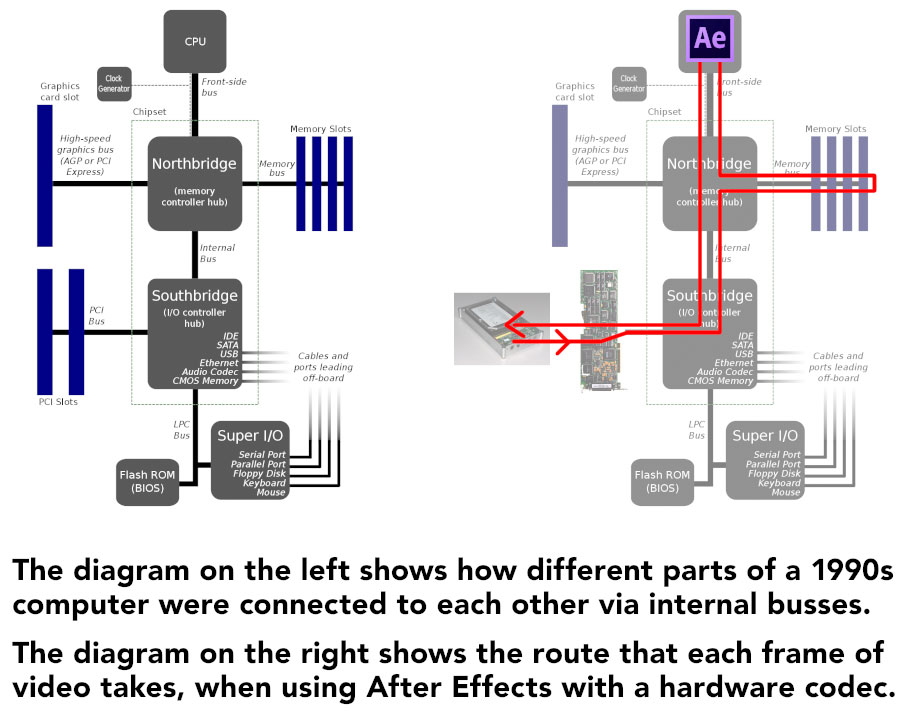

Real-time playback was handled by the video card, but it relied on high-speed disk arrays to read the files fast enough, and on high-speed SCSI cards to deliver the data from the hard drives to the video card. Shuffling all of this data around takes place on the computer’s “bus”. You can think of the different parts of a computer as being linked together by a road, with the data travelling along it like a bus, hopping on and off at the different parts of the computer as required.

As applications like After Effects emerged – version 3.0 came out around 1995, with version 3.1 following in 1996 – it became feasible to open video files and do basic effects and compositing with a desktop computer. As mentioned above, you couldn’t preview what you were doing because there was no RAM preview, but you could set up compositions and render them out so they could be played in non-linear editing apps.

Because each video card company had created its own custom “codecs” to store the video files, using video in After Effects required the custom chips on the video card to decompress the image first, then transfer the uncompressed data to the computer’s RAM. If you were creating an animation to be played back in the editing suite, then once again it needed to be rendered using the video card’s codec – meaning the image was sent back from the computer’s RAM over the bus to the video card, where it could be converted and saved to disk. This process was completely transparent to the user: you imported files into After Effects exactly the same way you do today, and rendering wasn’t any different either. Behind the scenes, though, a lot was happening.

In technical terms, this involved a lot of data being transferred across the computer’s bus. A file stored on the hard drive needed to be read and transferred to the video card to be decompressed; the card would then send it to the computer’s RAM to be accessed by After Effects, or whatever software was using it. Once After Effects had rendered a frame, the process had to be repeated in reverse: the image had to travel over the bus to the video card, get converted into the right codec, then get saved to disk. Transferring all of this data around the bus took time, and it was easy for the transfers to take longer than the actual rendering.

The latency bunch… the latency bunch…

Now that we’ve set the technical scene, let’s look at what this means for our Brady Bunch title sequence. We’ve digitised our 9 smiling faces, trimmed them all to be the same duration, and imported the clips into After Effects. We’ve scaled and positioned everyone into the iconic 3 x 3 grid and we’re ready to hit “render”.

But it’s a slow render. What’s going on?

Each frame is made up of 9 individual video files. The time needed for the video card to decompress 9 frames and send them to After Effects, and then for After Effects to send the rendered frame back to the video card to be compressed and saved to disk, is longer than the time needed to actually process the images in After Effects. The video card, and the bus connecting it to the rest of the computer, is a bottleneck.

The CPU isn’t working at capacity – most of the time it’s waiting for data to be delivered from the video card into the computer’s main memory. We could buy a faster CPU but it wouldn’t make After Effects render any faster; progress is dictated by the video card. The problem is the time taken to shuffle all of the information around on the bus.
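The Brady Bunch render can be sketched the same way. This hypothetical Python model assumes the card and bus handle one frame at a time with nothing overlapping, and ignores the time taken to write to disk; every constant is an invented illustration rather than a measurement of a real card. The point is that the card and bus cost per frame is fixed, so a faster CPU only shrinks the small slice of time the CPU actually spends rendering.

```python
# Hypothetical timings for one output frame of the 3 x 3 Brady Bunch grid,
# rendered through a 1990s hardware codec. Illustrative guesses only.
LAYERS = 9             # one clip per smiling face
CARD_CODEC_S = 0.2     # card decompresses (or compresses) one frame
BUS_TRANSFER_S = 0.5   # one uncompressed frame crosses the bus


def hardware_codec_frame_time(cpu_render_s: float) -> float:
    """Seconds per output frame when all footage goes through the video card.

    Assumes nothing overlaps (the CPU waits while the card and bus work)
    and ignores the time taken to write the finished frame to disk.
    """
    inbound = LAYERS * (CARD_CODEC_S + BUS_TRANSFER_S)  # 9 source frames: card -> RAM
    outbound = BUS_TRANSFER_S + CARD_CODEC_S            # 1 rendered frame: RAM -> card
    return inbound + cpu_render_s + outbound


for cpu_s in (2.0, 1.0):  # current CPU vs. a hypothetical CPU twice as fast
    total = hardware_codec_frame_time(cpu_s)
    print(f"CPU render {cpu_s:.1f} s -> {total:.1f} s per frame, "
          f"CPU busy {cpu_s / total:.0%} of the time")
```

With these made-up numbers, a CPU twice as fast takes each frame from roughly 9 s to roughly 8 s, and the CPU sits idle for most of every frame either way.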

As computers became more powerful, and After Effects became more capable, this “bus problem” became more obvious.

Video cards like this – specifically non-linear editing video cards – existed because computers weren’t powerful enough to play back video by themselves. Video editing cards worked because they had custom chips on them specifically designed to process video much faster than a CPU could. But computers are always getting faster and more powerful, and by the end of the 1990s the CPUs in the latest computers had caught up.

Video cards were still needed for digitizing video and playing it back, and they still used their own custom codecs to store the footage. But once the average computer was fast enough, “software codecs” became a thing. Video card and non-linear editing developers released software that could read the custom video files and decompress them using the computer’s CPU, not the specialized chips on the video card. With the CPU decoding the video files, data didn’t need to be shuffled back and forth between the card and RAM across the bus, so everything was much faster. The faster your CPU, the faster it could decode video. The video card was no longer the bottleneck.

The problem with software codecs was that using them effectively turned the video card off. The system would see either the software codec or the hardware codec, and you couldn’t use both at the same time. A software codec was great for applications like After Effects, but you still needed the video card when actually editing video or mastering back to tape. Working efficiently meant a juggling act of switching between the hardware and software codecs, which effectively meant turning the video card on and off. And with the operating systems of the 1990s, switching required a restart.

That’s the way we all became accustomed to rebooting a whole bunch…

So After Effects is rendering away but it’s taking too long. We want to make the render faster. By using the software codec instead of the hardware codec, the video card isn’t holding everything up and rendering is faster. But we still need to use the hardware codec when digitizing the footage, and when playing the finished animation back to tape.

In order to work the fastest, and not be held up by our “bus problem”, this is what we need to do:

- We have to boot up with the hardware codec so we can use the editing software, digitize all of the video files and export them for After Effects

- Then re-boot the computer with the software codec to work in After Effects. Rendering is now much, much faster because the CPU is able to process the images as fast as it can, without being held up by the video card.

- Once the file has rendered out, re-boot again with the hardware codec so we can run our editing software and play it out to tape.

This juggling act and frequent rebooting might seem complicated now, but at the time it was worth it because the difference in rendering time was so dramatic. It seemed normal at the time – almost a secret trick to work faster. In the 1990s everyone was just grateful if they weren’t still editing using tape.

Recreating the Brady Bunch titles is a great example of how a bottleneck can slow down a system, and it also demonstrates a situation where a faster CPU won’t make rendering any faster. However the bus problem wasn’t confined to people using non-linear edit suites, and it’s around the same time that another interesting part of After Effects history briefly took centre stage.

ICE ICE Baby

By the late 1990s, non-linear video cards and After Effects had made it feasible to work on motion graphics and visual effects with desktop computers. Even though this period can be called the “desktop video revolution”, it wasn’t exactly an overnight coup. Desktop computers from that time were still very slow. The first graphics work I was ever paid for (as a student) was made on a computer with a 30 MHz CPU and 16 megabytes of RAM. The first computer I used as a professional had a 120 MHz processor and 128 megabytes of RAM.

These computers made it feasible to do simple graphics and compositing in After Effects, but rendering was still very slow. At this time, you were considered pretty cool if you knew how to blur text at the same time as it faded up and down. Blurry text was all the rage, but it took a long time to render. A Gaussian blur effect applied to an After Effects layer could easily blow rendering times out to minutes per frame, even with a relatively small blur radius.

One solution aimed specifically at After Effects users was the “ICE” card. While they’re little more than a hazy memory now, there was initially the “Green ICE” card and then the more powerful “Blue ICE”. These expensive expansion cards contained dedicated processors that could render some things much faster than the computer’s CPU. However, there were a few catches. Firstly, the chips used on the ICE cards – “SHARC” processors – were totally different to the PowerPC and Intel processors used in Mac and Windows machines, respectively. They had their own unique instruction sets and worked in a different way, which meant they needed software written just for them. It wasn’t a case of plugging in an ICE card and having After Effects render faster: instead, the ICE cards came with their own set of After Effects plugins, and only those plugins benefited from the faster rendering on the ICE card.

Because the ICE cards plugged into the computer’s bus, like video editing cards, they suffered from the same bus problem. Before an effect could be rendered on the ICE card, the image had to be transferred from main memory over the bus to the card. The ICE card would render the effect, then send the result back again. If lots of ICE effects were applied to a layer at the same time, or if one of those effects was especially fast on the ICE card, the speed benefits could be very significant – that’s why people bought them. But if only one ICE effect was applied, or if ICE effects were mixed with regular After Effects plugins, the bus problem could make renders slower overall, because transferring the data back and forth between main memory and the SHARC processors took longer than the time saved rendering.
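The trade-off comes down to arithmetic. In this hypothetical Python sketch, each effect in a layer’s stack is tagged as running either on the ICE card or on the CPU, and every unbroken run of ICE effects costs one round trip across the bus. The timings and the transfer cost are invented for illustration, and real ICE cards may well have scheduled work differently.

```python
from itertools import groupby

# Each effect is (runs_on_ice_card, seconds_to_render_this_layer).
# All timings are hypothetical illustrations.


def chain_time(effects: list[tuple[bool, float]], transfer_s: float) -> float:
    """Render time for one layer's effect stack, applied in order.

    Every contiguous run of ICE effects costs one round trip over the bus:
    the layer is sent to the card, processed, and sent back to main memory.
    transfer_s is the assumed one-way transfer time.
    """
    total = 0.0
    for on_ice, run in groupby(effects, key=lambda effect: effect[0]):
        total += sum(seconds for _, seconds in run)
        if on_ice:
            total += 2 * transfer_s  # there and back again
    return total


TRANSFER_S = 1.0
print("Blur on CPU:           ", chain_time([(False, 60.0)], TRANSFER_S))
print("Blur on ICE:           ", chain_time([(True, 5.0)], TRANSFER_S))
print("Light effect on CPU:   ", chain_time([(False, 1.5)], TRANSFER_S))
print("Light effect on ICE:   ", chain_time([(True, 0.5)], TRANSFER_S))
print("ICE, plugin, ICE again:", chain_time([(True, 0.5), (False, 1.0), (True, 0.5)], TRANSFER_S))
```

The expensive blur drops from 60 s to 7 s, but the light effect goes from 1.5 s to 2.5 s, and interleaving a regular plugin between two ICE effects pays for the round trip twice. Grouping the ICE effects together would save a trip, although reordering effects also changes the image, so it isn’t always an option.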

Like rendering our “Brady Bunch” titles with a software codec, taking full advantage of an ICE card required an understanding of the bus problem, and an understanding of how to use an ICE card effectively. Otherwise it was possible to pay lots of money for an ICE card but end up with compositions that rendered slower than they would without one!

As the CPUs in regular desktop computers got faster and faster, the benefits of using an ICE card shrank, and the cards were eventually relegated to a distant part of After Effects history. It’s difficult to find information about ICE cards today; probably the best resource still available is the archive of posts on the Creative Cow, dating back to when it started in the early 2000s. Judging from those posts, ICE cards were more or less obsolete by 2002.

Whose bottle is it anyway?

The examples so far have been demonstrations of obvious bottlenecks. An SSD is vastly faster than a spinning hard drive, and both the video cards with their software codecs and the ICE cards with their custom ICE effects clearly demonstrated how long it took to shuffle data around the computer’s bus.

For everyday After Effects users, it might not be obvious if there’s any bottleneck at all in the system, and if there is, then where it might be. As outlined in Part 1, there’s no such thing as an “average” After Effects user, and five different AE users might be placing five very different sets of demands on their computer hardware.

After Effects doesn’t need imported video footage to create animations. Text layers, shape layers, solids and plugins can all be used to generate animations within After Effects. Still images, including Photoshop and Illustrator files, don’t place the same demands on the computer’s bus as video files. This means that motion graphics designers who predominantly use text layers, shape layers, solids and plugins are not going to be affected by the bus problem. The main hardware component being used by After Effects is the CPU. For users who aren’t shuffling large amounts of data around, and aren’t working with 3D graphics or GPU-accelerated plugins, a faster CPU will result in faster performance.

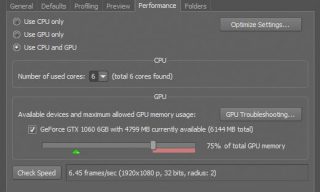

From the initial release of After Effects up until After Effects CC 2014, the GPU wasn’t used to speed up rendering at all. You could buy the cheapest graphics card or the most expensive, and it wouldn’t make any difference to After Effects. Beginning with CC 2015, a small number of effects slowly gained the ability to use the GPU for rendering, but generally After Effects wasn’t utilising GPUs for rendering to any significant extent. Right up to After Effects CC 2019 someone animating only text layers, shape layers and solids wouldn’t see any performance difference between a basic graphics card and an expensive GPU.

While I’m not sure how many After Effects users are out there using only text layers, shape layers, solids and native After Effects plugins, those that are would benefit from a faster CPU.

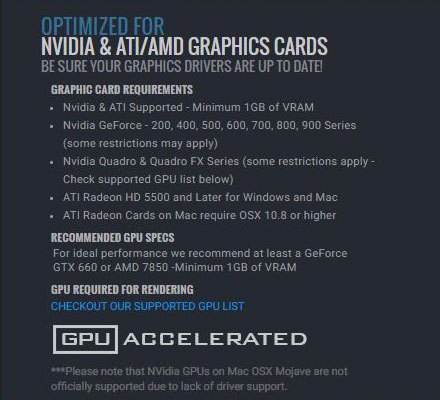

Over the last few years, 3D graphics have played an increasingly significant role in the design of motion graphics. After Effects comes bundled with a lite version of Cinema 4D, and – anecdotally – I’ve seen many motion graphics designers prefer to design in C4D and use After Effects more as a compositing and finishing tool. Similarly, there are a number of 3rd party plugins that provide basic 3D functionality within After Effects, including Video CoPilot’s Element, Superluminal’s Stardust, and various members of the Trapcode Suite. All of these applications utilize the power of the GPU – the graphics processing unit. While a future article will be dedicated entirely to GPUs, the important thing to note is that these applications use the GPU because they were specifically designed to.

Cinema 4D users also have the option to use GPU-specific rendering engines such as Redshift and Octane, and the performance of these software components will almost entirely depend on the GPU they are running on. They’re also specifically designed to utilize multiple GPUs.

After Effects users who also work with 3D graphics, or regularly use 3rd party plugins that are optimized to use a GPU, face the difficult task of choosing the combination of CPU and GPU that provides the best value for money. There is no simple answer to this problem, because of the diverse range of After Effects users, hardware and budgets.

For designers who are working predominantly in Cinema 4D or other 3D applications, the GPU will be the primary factor in the system’s performance. Any system that is using a GPU-based renderer such as Redshift will obviously benefit from a faster and more powerful GPU, or even multiple GPUs. It’s reasonable to expect that dedicated 3D software will be able to take advantage of all the features that the latest GPUs offer. For a designer who is working almost entirely within a 3D app, and rendering on their local machine, multiple GPUs will be a better option than the fastest CPUs.

Choosing a GPU specifically for After Effects plugins such as Element isn’t as straightforward, as plugins may benefit from a GPU without utilizing every expensive bell and whistle. Of the few After Effects plugins that can use a GPU, many may only be able to use one, even if the computer has multiple GPUs installed. The additional features that differentiate a premium, top-of-the-line 3D card from an entry-level model are usually associated with advanced 3D rendering, and may not be relevant to After Effects plugins. As I write this, the most expensive GPUs from nVidia include their “RTX” technology, geared towards the goal of real-time ray tracing. However, unless software is specifically written to take advantage of the RTX features, they won’t be used. If you’re using 3D software that can take advantage of multiple GPUs, but hasn’t been optimized for nVidia’s RTX cards, then you’ll get better value for money out of two cheaper GPUs (cheaper because they don’t have RTX support) than one top-of-the-range model. It’s always best to check with the vendor before making an expensive hardware purchase.

Some After Effects plugins can benefit from a GPU even if they’re not rendering 3D graphics. Neat Video, the best de-noising plugin available for After Effects, even has its own benchmarking tool built in to work out how much power your system has, and whether it’s faster to rely on the computer’s CPU, GPU or a combination of both. The additional 3D-specific features that differentiate a top-end GPU from a basic GPU may be great for games, but not so useful for non-3D After Effects plugins. So again, do some research before making a purchase.

Waiting outside the box

As I noted at the beginning of the article, the CPU and the GPU are two of the most obvious components in a computer, and the first two that people think of in terms of “performance”. For anyone buying or building a system, choosing the CPU and GPU combination will seem like the most significant decision, and it will probably be the most expensive.

From my personal experience, however, After Effects users in an office / studio environment will often find that the rendering speed of their machine is influenced more by the office network, including the speed of the network server and storage. I’ve seen many “God Box” workstations with high-end, super expensive CPUs attached to the office network through 1-gigabit Ethernet, and by video standards 1-gigabit Ethernet is slow. For any After Effects users who work in a studio with a network, and access video footage or other large files from a network drive, the Ethernet connection is almost certainly the slowest component in the system. This is a comparable situation to the bus problem we looked at earlier. We could use the same “Brady Bunch” example, but this time compare footage stored locally with footage stored on the network drive, and we would see a dramatic difference in rendering times.

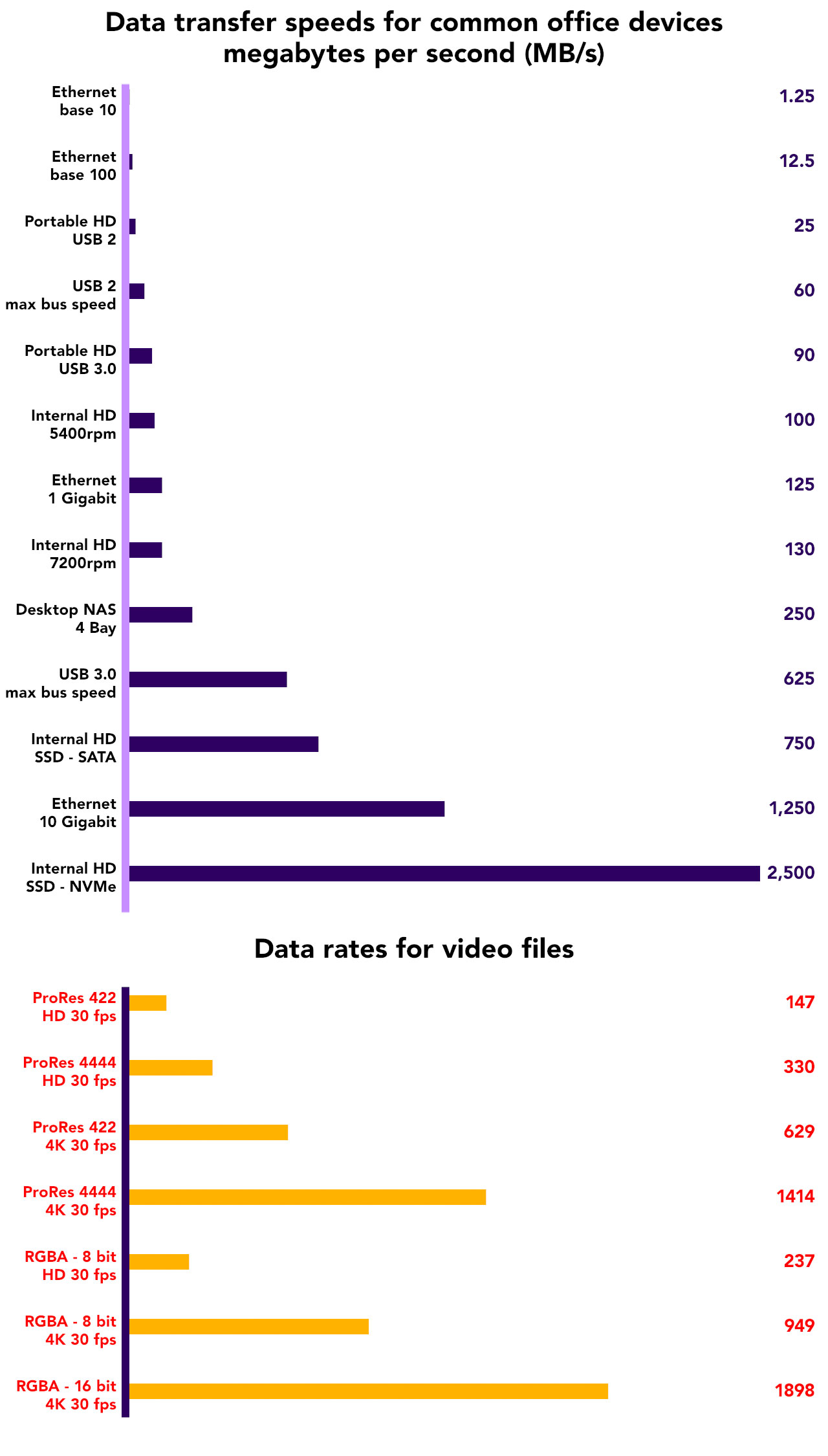

Unlike CPUs and GPUs, where newer and faster models are released several times a year, average office network speeds have increased on a much less frequent basis. While Wikipedia has an extensive table that lists the speed of every interface adapter you can think of, let’s quickly look at some common scenarios that After Effects users will have encountered.

During the early 1990s, when office networks could still be considered an emerging trend, the average office network ran 10-megabit Ethernet, often labelled “10BASE-T”. The 10 stands for 10 megabits per second, and although that might sound OK, it works out at about 1.25 megabytes per second. That’s really pretty awful. At the start of the 1990s this would have been fine for Word and Excel files, office mail and other small documents, but noticeably slow for larger files. It was so slow that the next version, 100-megabit Ethernet, was called “Fast Ethernet”. Fast Ethernet is 10 times faster, with a maximum theoretical transfer rate of 12.5 megabytes per second. The G3 range of Macs were the first to have 100-megabit Ethernet included as standard.

Jumping from 10 megabits to 100 megabits was pretty cool, and who’s going to complain about something becoming 10 times faster? People who have to work with large video files, that’s who. The next step was to introduce 1000 megabit ethernet, 10 times faster again. This is referred to as gigabit ethernet, because 1000 megabits is the same as a gigabit – and there’s a pretty good chance that the computer you’re in front of has a gigabit ethernet connection.

Gigabit Ethernet has been around for a while: the first computers to come with gigabit Ethernet built in as standard were Apple’s G4 range, released way back in 2000. Again, this was a 10x improvement, and it’s still the most common speed today. Gigabit Ethernet has a theoretical transfer speed of about 125 megabytes per second, and judging from market forces, people seem pretty happy with that, even though it’s almost 20 years old. It’s faster than most external portable hard drives, and about on par with an average spinning hard drive.

In Part 3 we looked at how massive video files can easily become, especially when working with larger resolutions. When George Lucas made history by filming “Attack of the Clones” with a digital camera, he needed custom-made cameras, supported by a truckload of equipment, to record images that were roughly HD resolution. Today, anyone can buy a Blackmagic camera that records 6K video for less than $10,000. Larger resolutions and larger file sizes are no longer uncommon, or the sole domain of Hollywood feature films. But although time has been kinder to gigabit Ethernet than to “Attack of the Clones”, it’s easy to overlook that they’re both older than you might think.

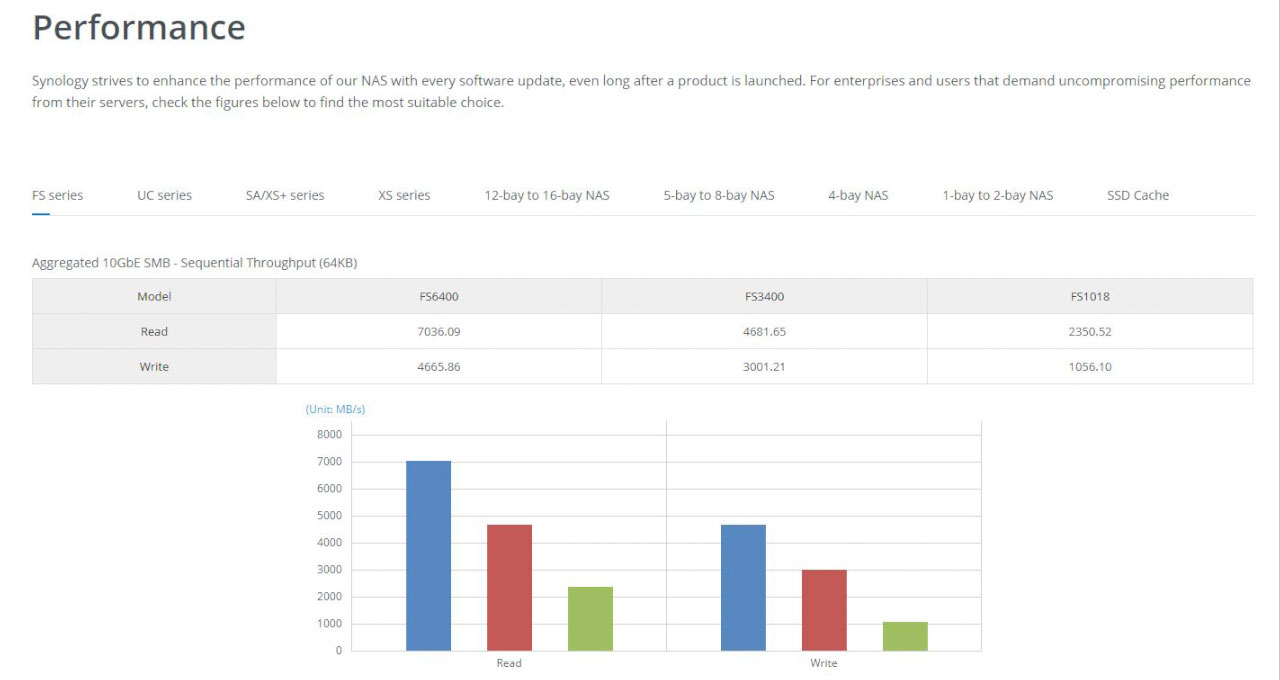

The general public seems pretty happy with gigabit Ethernet: it’s cheap, and it’s everywhere. But video professionals will still find it limiting when dealing with large files. 125 megabytes per second sounds like a lot, until you’re working with video files, and then suddenly it isn’t. Non-linear editors will quickly find the limits if they try to play back multiple streams of video over a simple network connection. The next step up is 10 gigabit Ethernet, which again is 10 times faster. The theoretical transfer speed is over 1 gigabyte per second – 1.25 GB/s to be exact. This is really pretty fast, even by modern video standards, but although the technology has been around for nearly 10 years, there’s still a noticeable premium in price.
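The Ethernet figures above all follow from one division: network speeds are quoted in bits per second, file sizes in bytes, and there are 8 bits in a byte. A minimal Python sketch, using decimal megabytes (1 MB = 1,000,000 bytes) and theoretical line rates; real-world throughput will be lower once protocol overhead and other traffic are accounted for.

```python
BITS_PER_BYTE = 8


def megabytes_per_second(megabits_per_second: float) -> float:
    """Convert a theoretical line rate in Mbit/s to MB/s (decimal megabytes)."""
    return megabits_per_second / BITS_PER_BYTE


ETHERNET_MBIT_S = {
    "10-megabit Ethernet": 10,
    "Fast Ethernet": 100,
    "Gigabit Ethernet": 1_000,
    "10 Gigabit Ethernet": 10_000,
}

for name, mbit in ETHERNET_MBIT_S.items():
    print(f"{name:20} {mbit:>6} Mbit/s = {megabytes_per_second(mbit):8.2f} MB/s")
```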

It’s quite probable that the average After Effects user doesn’t know the speed of their office network. Small studios and agencies may have never given it much thought, or perhaps they never expected to find themselves working with such large files. There’s a good chance that lots of After Effects users are sitting in front of computers that have 1 gigabit ethernet connections, connected to 1 gigabit switches, routers and storage. And while 1 gigabit ethernet isn’t exactly slow (125 MB/second) it’s not exactly fast, either. Anyone working over 1 gigabit ethernet in After Effects will almost certainly find that the network is a bottleneck, even if they haven’t realised it.

After Effects is not just a motion graphics tool, it’s a compositing application that’s designed to work with multiple layers of video, and average video files keep getting larger and larger. After Effects doesn’t just face “the bus problem” internally, as video frames are transferred between computer components, it’s also facing network problems, as it waits for data to be transferred over the network.

For an After Effects composition made up of several layers of video – for example our Brady Bunch title sequence – the speed of the network can make a huge difference in the speed of rendering. On a computer with the same CPU and GPU, rendering times will be significantly different depending on whether the footage is stored on a local spinning drive, a local SSD, a network drive with 1 gig Ethernet, or a network drive with 10 gig Ethernet.

The same is true for external USB drives. Many cheap, portable USB drives are only USB 2, with average file transfer speeds of about 25 megabytes a second. USB 3 is faster (average portable hard drive speeds are roughly 90 MB/second), and high-end portable drives can offer Thunderbolt (also in various flavours) with speeds well into the gigabytes per second range. Again, I’ve seen After Effects users with expensive “god boxes” importing footage from external USB drives, and then wondering why it’s so slow. The problem isn’t After Effects, or the speed of the CPU. It’s the fact that the USB drive can’t transfer more than 100 megabytes a second.
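Because the CPU can only work on frames once they’ve arrived, it’s worth putting these transfer rates side by side. The sketch below uses the approximate figures from this article (about 25 MB/s for USB 2, about 90 MB/s for a USB 3 portable hard drive, and the theoretical 125 MB/s and 1.25 GB/s for 1 and 10 gigabit Ethernet) to estimate how long it takes simply to read a hypothetical 10 GB of footage, before any rendering happens.

```python
# Approximate throughputs in MB/s, taken from the figures quoted in this article.
# These are ballpark numbers; real drives and networks vary widely.
THROUGHPUT_MB_PER_S = {
    "USB 2 portable drive": 25.0,
    "USB 3 portable drive": 90.0,
    "1 gig Ethernet": 125.0,
    "10 gig Ethernet": 1250.0,
}

def read_seconds(footage_gb: float, mb_per_s: float) -> float:
    """Time to read footage_gb gigabytes (1 GB = 1000 MB) at a sustained rate."""
    return footage_gb * 1000 / mb_per_s

FOOTAGE_GB = 10.0  # hypothetical amount of footage referenced by a comp

for device, rate in THROUGHPUT_MB_PER_S.items():
    print(f"{device:>22}: {read_seconds(FOOTAGE_GB, rate):7.1f} s")
```

The same 10 GB takes around 400 seconds off a USB 2 drive and about 8 seconds over 10 gig Ethernet, no matter how fast the CPU is.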

All told, a range of components has a significant influence on rendering times, not just the CPU or GPU. In many cases, rendering times are governed by the speed at which the computer can access the video files. Any user working over a slower network will see little, if any, performance gain from upgrading to a more expensive, faster CPU (or GPU), as it will just be waiting for footage to be transferred. The same is true for anyone working off a slow external hard drive.
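The “weakest link” idea can be expressed as a tiny model. In the hypothetical sketch below, each component is described by how many frames per second it could handle on its own. If the stages overlap, the overall rate is simply the slowest stage; if they run strictly one after another, the per-frame times add up instead. This is a deliberate simplification that ignores caching, multiprocessing and everything else that makes real rendering messy, and the numbers are made up purely for illustration.

```python
def pipelined_fps(stage_fps: dict[str, float]) -> float:
    """If all stages overlap, the slowest stage sets the overall frame rate."""
    return min(stage_fps.values())

def serial_fps(stage_fps: dict[str, float]) -> float:
    """If stages run one after another, their per-frame times add up."""
    return 1.0 / sum(1.0 / fps for fps in stage_fps.values())

def bottleneck(stage_fps: dict[str, float]) -> str:
    """Name of the slowest stage."""
    return min(stage_fps, key=stage_fps.get)

# Hypothetical frames per second each component could sustain on its own.
system = {"footage I/O": 2.0, "CPU": 4.0, "GPU": 10.0}
faster_cpu = {**system, "CPU": 8.0}
faster_io = {**system, "footage I/O": 20.0}

for label, s in (("current", system), ("2x CPU", faster_cpu), ("10x I/O", faster_io)):
    print(f"{label:>8}: {pipelined_fps(s):.1f} fps (bottleneck: {bottleneck(s)})")
```

Doubling the CPU’s speed leaves this hypothetical system stuck at 2 frames per second, because it’s still waiting on footage. Speeding up footage access lets it reach 4 frames per second, at which point the CPU becomes the new bottleneck. If the stages can’t overlap at all, `serial_fps(system)` drops to about 1.18 frames per second.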

The other end of the Ethernet cable

A fast network connection isn’t the end of the story, either. Your Ethernet connection doesn’t end with the plug in the wall. Somewhere down the line is a server and a bunch of network storage, possibly with a switch or router in between. Any one of these could be struggling to keep up with the demands of video production. As with every other component in a system, there’s a huge range of prices and options available. There’s a big jump in price and performance from desktop NAS (network attached storage) systems aimed at small offices to large SANs – storage area networks – that can handle high data rates with large numbers of users.

It’s possible to buy a cheap portable drive that can store several terabytes of data for less than $100, and companies including Synology, QNAP and Drobo have made redundant network storage affordable for home users. A consumer-level NAS might be fine for a small group of users, but even a small animation studio can bring one to its knees. And once you start thinking about render farms, you might be sharing the network, server and storage with dozens of other machines. Your computer might have a 10 gigabit network connection, but the server might not be able to supply data at that rate. This is why high-end, high-speed network storage is so expensive. Serious SANs cost hundreds of thousands of dollars, and you could easily spend millions if you wanted to. While I can buy a portable two terabyte drive at my local mall for about $100, two terabytes of high-speed storage for an enterprise-level SAN may cost ten times that.
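The server side can be sketched the same way. Assuming, hypothetically, that every render node reads footage at the same time and the server shares its total output evenly, each machine gets the smaller of its own link speed and its share of the server’s throughput. The 1250 MB/s server figure is an assumption for illustration; real storage rarely divides this neatly.

```python
def per_node_mb_per_s(node_link_mb_per_s: float, server_mb_per_s: float, nodes: int) -> float:
    """Best-case throughput for each machine when all nodes read from one server at once.

    Each node is limited by its own link and by an equal share of the server's output.
    """
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    return min(node_link_mb_per_s, server_mb_per_s / nodes)

# Hypothetical server that can sustain 1250 MB/s in total.
SERVER_MB_PER_S = 1250.0

for nodes in (1, 5, 20):
    share = per_node_mb_per_s(1250.0, SERVER_MB_PER_S, nodes)
    print(f"{nodes:>2} render nodes on 10 gig Ethernet: {share:.1f} MB/s each")
```

With twenty nodes, each machine’s 10 gigabit connection is effectively reduced to about 62.5 MB/s, which is half of what a single 1 gigabit link could theoretically deliver.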

Of course, every After Effects user is different, and not everyone uses large video files or huge 3D renders. However, I think it’s safe to assume that when the average After Effects user is thinking of ways to improve performance, or of how to spend a set budget on newer components, the network is often overlooked – especially if they’re only using a 1 gigabit Ethernet connection.

Stay tuned

While it’s important to acknowledge the “bus problem”, and to think about how your own particular After Effects usage may be affected by a bottleneck somewhere in your system, there’s no escaping the fact that the CPU is the primary component in a computer. If you thought this article was long, wait until you see the next one, when we take a brief look at the history of the CPU and try to understand what all the fuss is about.

But until then – if your computer still has a spinning hard drive in it, replace it with an SSD. It will be the single most effective and dramatic value-for-money investment you can make. If you’re not booting from an SSD, do yourself a favour: google for one now, and buy one before the end of the day.

This is part 4 in a long-running series on After Effects and Performance. Have you read the others? They’re really good and really long too:

Part 1: In search of perfection

Part 2: What After Effects actually does

Part 3: It’s numbers, all the way down

Part 4: Bottlenecks & Busses

Part 5: Introducing the CPU

Part 6: Begun, the core wars have…

Part 7: Introducing AErender

Part 8: Multiprocessing (kinda, sorta)

Part 9: Cold hard cache

Part 10: The birth of the GPU

Part 11: The rise of the GPGPU

Part 12: The Quadro conundrum

Part 13: The wilderness years

Part 14: Make it faster, for free

Part 15: 3rd Party opinions

And of course, if you liked this series, then I have over ten years’ worth of After Effects articles to go through. Some of them are really long too!
