When we look back over the last 25 years of After Effects’ history, and how its performance has evolved, we can see a few key milestones. The years 2006, 2010 and 2015 proved to be hugely significant. In this article, we’re going to look at what happened in 2006 to make it such a landmark year. It’s all to do with CPUs – the heart of a computer. There were two big announcements about CPUs in 2006. One was a complete surprise, but both changed the desktop computing landscape forever.

In Part 5, we were introduced to the CPU, usually considered the heart of a computer. In Part 6 we pick up where we left off, so if you missed Part 5 I suggest you catch up here first…

Same problems, different solutions

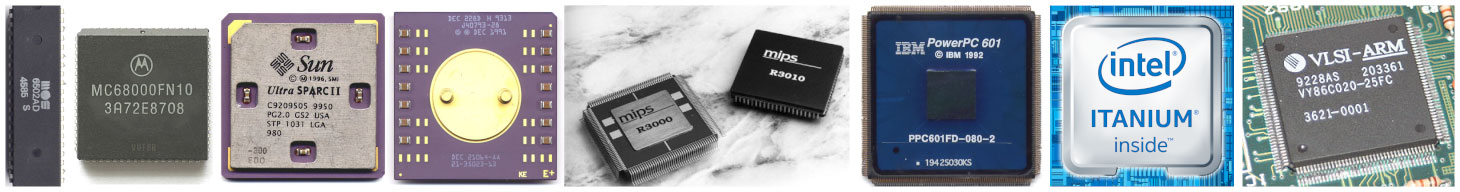

Although Intel’s 4004 is recognized as the first microprocessor, many other companies were competing with their own CPUs based on different designs. For the next 20 to 30 years after the 4004, Silicon Graphics developed their own “MIPS” processors, Sun used their own “SPARC” processors, DEC used their own “Alpha” processors, Motorola had their “68k” processors and various partnerships led to the “PowerPC” and then “Itanium” designs. The ARM CPUs that are used in all modern smartphones can trace their heritage back to a small British company called Acorn Computers. These weren’t just competing products, but competing design philosophies. They haven’t all survived.

During the 1970s and 1980s Intel’s main competitor was probably Motorola, although the most recognizable home computers from that period – the Commodore 64, the Apple II and the TRS-80 – used cheaper chips made by MOS Technology.

Even at this early time, comparing different chips made by different companies wasn’t easy, because their underlying architecture could give radically different results for different tasks. As we covered in Part 5 of this series, different companies used different instruction sets, so one chip might be able to do a certain type of calculation with only one instruction, while a different chip might need to use several instructions to do the same thing. On a broader level, the way different chips interfaced with memory and other parts of the computer also influenced how fast a computer would work as a whole. For example, Intel’s flagship CPU was called the 8086 – it’s the great-grandfather of every CPU in every desktop computer today. However – as was common practice at the time – Intel also made a cheaper variant with slightly lower specs. The 8088 was basically the same chip as the 8086, but it moved data to and from memory 8 bits at a time instead of 16. Because of the way the chip was designed, this had the unfortunate side effect of making the 8088 take twice as long to perform some operations, in some circumstances. If two closely related chips from the same company could differ this much, accurately comparing computers that used CPUs made by different companies was harder still.

As early as 1984, Apple coined the term “Megahertz myth” in response to people comparing CPUs using the clock speed alone. In 1984, the Apple II had a clock speed of roughly 1 MHz, while the IBM PC used a clock speed of roughly 4.7 MHz. You don’t need to be Einstein to see that 4.7 is a larger number than 1, and you don’t need to read “Dilbert” to know that customers will think 4.7 MHz sounds faster than 1 MHz. So the initial impression to customers was that the IBM PC was 4.7 times faster than the Apple II. However, the Apple II used a completely different chip – a 6502 made by MOS – from the Intel 8088 that was inside the IBM PC. The 6502 and the 8088 were so different in design that comparing clock speed alone was not accurate. While the number 4.7 is obviously 4.7 times larger than 1, a computer with a 4.7 MHz 8088 was not 4.7 times faster than one with a 1 MHz 6502.
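To see why clock speed alone misleads, a rough model helps: the time a chip takes to finish a task is the number of instructions it has to execute, multiplied by the average number of clock cycles each instruction needs, divided by the clock rate. The Python sketch below uses invented figures for two hypothetical chips. They are not measurements of the 6502 or the 8088, and are there purely to show how a chip with a clock 4.7 times faster can fall well short of being 4.7 times faster.

```python
def run_time_seconds(instructions, cycles_per_instruction, clock_hz):
    """Time to finish a task: total clock cycles divided by cycles per second."""
    return instructions * cycles_per_instruction / clock_hz

# Hypothetical chips. All figures are illustrative assumptions, not benchmarks.
# Chip A: slow clock, but each instruction finishes in only a few cycles.
chip_a = run_time_seconds(instructions=1_000_000, cycles_per_instruction=3, clock_hz=1_000_000)
# Chip B: 4.7x the clock, but each instruction needs many more cycles.
chip_b = run_time_seconds(instructions=1_000_000, cycles_per_instruction=9, clock_hz=4_700_000)

clock_ratio = 4_700_000 / 1_000_000
real_speedup = chip_a / chip_b

print(f"Clock ratio:  {clock_ratio:.1f}x")
print(f"Real speedup: {real_speedup:.2f}x")
```

With these made-up numbers, chip B finishes the job roughly 1.6 times faster than chip A, not 4.7 times faster. Change the cycles-per-instruction values and the gap between the clock ratio and the real result changes with them, which is the whole point of the “Megahertz myth”.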

Apple were frustrated that consumers assumed the 4.7 MHz PC was so much faster than the 1 MHz Apple II, and launched the “Megahertz myth” campaign in 1984.

History and sales figures suggest that it didn’t work.

Throughout the 80s, 90s and early 2000s this pattern continued. Computers were primarily compared by the clock speed of their processor, because it’s easy to compare one number to another. The reality is that different chips would perform differently depending on what they were doing, but that’s a tough sell to consumers.

But while the “Megahertz myth” was alive and well – and evolved to become the “Gigahertz myth” – the CPU was still the single most significant part of a computer.

By the late 90s the desktop computer market had settled down to two basic systems: Apple Macs which used PowerPC chips, and Windows machines that used Intel x86 chips. With the “Megahertz myth” having become the “Gigahertz myth”, comparing the two different processors was still not something that could be accurately done based on clock speed alone.

Choose your strategy

Every year, CPU makers release new processors that are faster than the ones from the year before. This has been going on since the 1970s, and up until the year 2006 this was only really evident to consumers via clock speeds. It took almost 20 years for average clock speeds to climb from 1 MHz to 50 MHz, before they leapt into the hundreds of MHz and quickly headed towards the 1 GHz mark.

But increasing clock speed is only one way to make a processor faster. In Part 5, I referred to a couple of books that provide more insight into CPU design. “Inside the machine”, by Jon Stokes, details all of the improvements made to popular and recognizable processors over the last 30 years. Concepts such as superscalar design, pipelining, instruction set extensions and branch prediction are all explained in-depth. These are all aspects of CPU design that have been developed over the past few decades, and they all contribute to the overall power and speed of a processor. More recent developments include “hyperthreading” and speculative execution.

It’s not 100% relevant to After Effects to list every single way that a CPU can be made faster, but it is important to know that increasing the CPU clock speed is only one avenue.

Like any competitive market, PowerPC and Intel were constantly improving their designs, and releasing newer, better and faster products. In 2000, Intel released the Pentium 4, the latest version of their x86 chips that date back to the original 8086 in 1978. The Pentium 4 was built using a new design that Intel had named “Netburst”. The underlying philosophy behind the Netburst design would be the foundation for all future Intel Pentium products.

Netburst vs PowerPC

Of all of the features which determine how fast and powerful a CPU is, the Netburst design focused on one element more than any other: clock speed. For the next six years, Intel bumped up the clock speed of their Pentium 4s and the performance of their processors increased accordingly. Because Intel had designed the Pentium 4 specifically around clock speed, the average clock speed jumped up very quickly – from about 1 GHz to 4 GHz in just a few years. Considering that it took about 20 years for the industry to go from 1 MHz CPUs to 50 MHz, the sudden increase from 1000 to 4000 MHz was remarkable.

Intel aggressively marketed the advantage they had. The clock speed of the Pentium 4 approached 4 GHz while the G5 chips in Apple Macs never even made it to 3. While Apple actively pushed back against the “Gigahertz myth”, it was always facing an uphill battle to convince the average user that a chip running at 4 GHz wasn’t really faster than one running at 2 GHz.

Increasing clock speeds suited software developers just fine. As long as the performance of a processor came primarily from its clock speed, then programmers didn’t have to do anything special to benefit from faster processors. Some other methods of improving processor performance, such as adding new instructions, would only benefit software that used those new features – this meant additional work for programmers. But a faster clock speed made everything faster, and software developers didn’t need to do anything about it at all.

The Pentium 4 presented a pretty simple proposition: If you bought a faster computer, your software would run faster.

Intel’s aggressive marketing of clock speed as performance changed the desktop computer landscape forever, when Apple announced they were switching from PowerPC chips to Intel in 2006. Intel had won the Gigahertz war. From that point on, all desktop computers have used CPUs with the same instruction set: Intel’s x86.

String theories

In After Effects terms, CPUs had always been easy to understand: buy a faster computer, it renders faster. The bigger the number on the box, the faster it is.

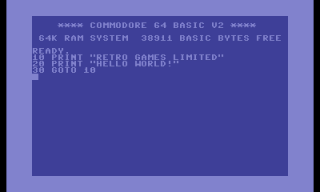

Just about all software at this time was “single-threaded”. The terms “single-threaded” and “multi-threaded” will be the topic of a future article, but in simple terms a single-threaded application does things one step at a time. This can be considered the “normal” way in which software works. When you write a program, you are writing a set of instructions for the computer to follow. The computer starts at the beginning and works through the commands one at a time. Some programming languages, such as BASIC, even use line numbers to list the commands of the program in order.

This is how computers have worked since they were invented. Applications were largely self-contained, and no matter how large or complex a program might appear to the user, at a very low level the computer was doing things one step at a time. A faster processor enabled the computer to make those steps faster, but no matter how fast the processor was, there was really only 1 thing happening at once.

This wasn’t due to laziness or poor design from programmers. It was how computers had always worked, right back to the original – purely theoretical – concept of a “Turing machine”. From the very first computer that was built, they worked through programs one step at a time.

Netburst unravels

However just as Apple rocked the computing world by announcing their switch to Intel processors, Intel’s newest chip changed everything – a change that is still being felt today. Earlier, I noted that there are a few key years which can be seen as significant milestones in the history of After Effects and performance. 2006 was one of those years, but not because Apple switched to Intel CPUs.

After about six years of pushing the Pentium 4 to faster and faster clock speeds, Intel discovered that there was an upper limit to the underlying design. Because of the way microprocessors work, all governed by the laws of physics, there’s a link between the clock speed of a processor and the amount of power it needs to run. The same laws of physics provide a link between how much power is needed and how much heat is produced.
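That link is often approximated with a standard rule of thumb for the switching power of a chip: power is roughly proportional to capacitance, multiplied by voltage squared, multiplied by clock frequency. This rule is general electronics rather than anything taken from Intel’s material on the Pentium 4, and every figure in the Python sketch below is invented. It simply shows why chasing clock speed gets expensive: running a chip faster usually means raising the voltage as well, so power (and therefore heat) climbs faster than speed does.

```python
def dynamic_power(capacitance, voltage, frequency_hz):
    """Approximate switching power of a chip: P = C * V^2 * f."""
    return capacitance * voltage ** 2 * frequency_hz

# Illustrative assumptions only: these are not real Pentium 4 figures.
CAPACITANCE = 1e-8  # effective switched capacitance, in farads

baseline = dynamic_power(CAPACITANCE, voltage=1.2, frequency_hz=2.0e9)
# Double the clock, assuming the voltage also has to rise to keep the chip stable.
doubled = dynamic_power(CAPACITANCE, voltage=1.5, frequency_hz=4.0e9)

power_ratio = doubled / baseline
print(f"Clock: 2.0x faster, power: {power_ratio:.2f}x higher")
```

In this hypothetical case, doubling the clock speed costs a little over three times the power. All of that extra power ends up as heat that has to be removed from the chip.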

Because the Pentium 4 prioritized clock speed over other aspects of processor design, they were relatively power hungry and they generated enormous amounts of heat. By 2005 the Pentium 4 was as fast as it could ever get without melting itself. The “Netburst” philosophy had been pushed to the extreme, and there was no room for improvement. As Intel looked for ways to make their future processors faster, they faced a dilemma: they could either break the fundamental laws of physics, or begin again with a completely new design. Because it’s not possible to break the laws of physics, Intel went with a new design.

As the Pentium 4 reached the end of its development, so did Intel’s focus on clock speed. Intel acknowledged that in order for computers to become faster and faster, you couldn’t just take a processor and make the clock speed faster and faster forever. The Pentium 4 had taken that concept as far as possible. After six years of improving the Pentium’s performance by increasing the clock speed, Intel would now prioritise other aspects of CPU design to make their future processors better.

With the Pentium 4 officially at the end of its development, Intel’s next generation of desktop CPU was named “Core”. The first version released in 2006 was the “Core Duo”. It was based on a radically different philosophy that marked a profound change from the previous six years of Pentium releases:

If you wanted a more powerful computer, you would need more processors – not faster ones.

The Core Duo looked like a single chip. Its appearance wasn’t that different to any previous Intel CPU. But looking inside revealed the key difference to the Pentium 4: The Core Duo was actually two completely separate CPUs on the same chip. The Core Duo had a slower clock speed than the Pentium 4, but it had two CPUs – so in theory the overall available computing power made up for it.

The Intel Core Duo had ushered in the age of multiprocessor computing.

While increasing clock speeds had given programmers a free ride in terms of improving software performance, Intel’s new approach would shift the focus back onto software developers. Unlike increasing clock speeds, software didn’t automatically take advantage of more than one CPU. In fact, utilizing multiple processors effectively has always been considered a pretty tricky thing to do. But Intel had made the future of desktop computing very clear, and it revolved around multiple processors. If software developers wanted to be a part of it, they had to get on board.

The repercussions of that shift are still being felt today.

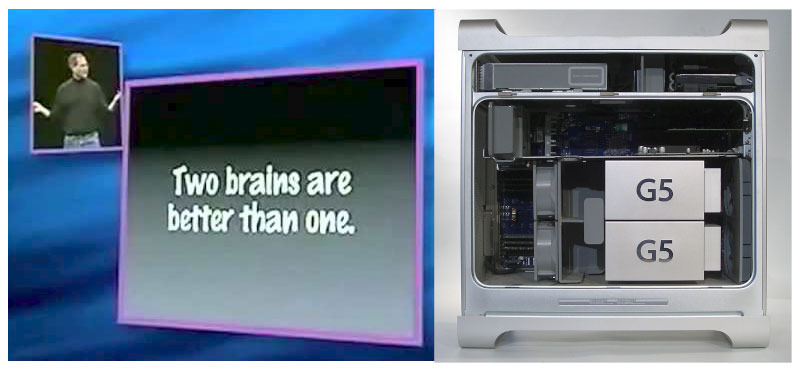

Begun, the core wars have

Having more than one processor in a computer was nothing new, but generally only in the more expensive “minicomputer” and “supercomputer” markets. The Silicon Graphics machines that ran Flame suites could have anything from 4 to 16 processors in them – the concept itself had been around for decades. Apple had occasionally released PowerMacs with two processors in them, but rumors of instability (for their OS 9 models in the 1990s) and a lack of clear benefits had made them more curiosities than revolutionaries. They certainly weren’t twice as fast as regular PowerMacs, and they certainly didn’t change the way software was developed.

What Intel were doing with the Core Duo was more significant than a computer manufacturer building a computer with more than one CPU. It wasn’t the same as Apple buying two CPUs and sticking them on the same motherboard. Instead, the CPU itself was made up of multiple CPUs. This was a fundamental change at the chip level. If you bought a new Core Duo CPU, you were getting a multiprocessor computer, even though both CPUs were on one chip. The name “Core” acknowledged the difference between the physical chip and the internal processor cores themselves.

The Core Duo chips that launched at the start of 2006 contained two processor cores – hence the name “Duo” – and the radical difference in design to the older Pentium 4 heralded a new direction for desktop computers. The clock speeds were much lower than the last generation of Pentium 4s, and this alone took some getting used to after years of focus on GHz. The gaming market, who are especially tuned to benchmarks and frame rates, were the first to realize that two slower CPUs did not always add up to one fast one.

(Quick note: Intel released the “Core Duo” in January 2006, but it was intended for mobile devices and laptops. The desktop version was called the “Core 2 Duo” and was released six months later.)

Speed Bumps

The first Core Duo chips launched in 2006 weren’t spectacular. The only people who’d describe them as “amazing” were those who were amazed that their new computer was slower than their old one. The significance lay in how the underlying design of the Core Duo heralded a completely new direction for the future development of both hardware and software – and this posed a few problems.

The first was that high-end computers that already used multiple processors were designed that way from the beginning. The “minicomputers” and “supercomputers” that used multiple processors – SGIs, Sun SPARCs, DEC Alphas and so on – all ran variations of Unix, an operating system that was designed from the ground up for multiple users and multi-tasking. Although Apple had recently launched their own take on Unix with OS X, Windows hadn’t been designed this way.

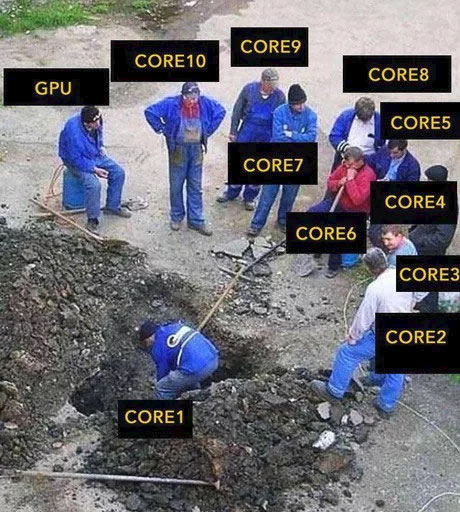

But secondly – writing software for multiple processors is hard. Really hard. To efficiently use multiple processors, programmers have to completely change the way they design software. It’s not as simple as just pressing a button. Unlike the Netburst days, where you could buy a faster processor and everything just ran faster, in many cases you could replace an older Pentium 4 with a newer “Core Duo” processor and things would run slower!

Programs that are designed to use multiple processors are called “multi-threaded”. Instead of running as one single “block” of code that runs on one CPU, it needs to be split up into smaller parts that can all run independently, each part on their own CPU, but synchronised with each other. The smaller independent sections of programs are called “threads”, hence the term “multi-threading”. If a program isn’t broken down into multiple threads, then it will only use one of the CPU cores – no matter how many might be available.
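As a rough illustration of that idea, here is a minimal Python sketch that splits one job (adding up a list of numbers) into independent chunks, hands each chunk to its own thread, and then combines the partial results. The function names and the chunking scheme are my own inventions for this example and have nothing to do with how After Effects is written. One caveat: in standard Python, threads doing pure number-crunching don’t actually run on several cores at once, so treat this as a picture of how multi-threaded code is structured rather than a demonstration of a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor

def sum_chunk(chunk):
    """The work done by one thread: independent of every other chunk."""
    return sum(chunk)

def threaded_sum(numbers, thread_count=4):
    """Split the job into chunks, run each on its own thread, then combine."""
    chunk_size = max(1, -(-len(numbers) // thread_count))  # ceiling division
    chunks = [numbers[i:i + chunk_size] for i in range(0, len(numbers), chunk_size)]
    with ThreadPoolExecutor(max_workers=thread_count) as pool:
        # Collecting the results blocks until every chunk has finished:
        # this is the synchronisation step.
        partial_sums = list(pool.map(sum_chunk, chunks))
    return sum(partial_sums)

numbers = list(range(1, 1001))
single_threaded = sum(numbers)          # one step after another
multi_threaded = threaded_sum(numbers)  # same answer, work split four ways
print(single_threaded, multi_threaded)
```

Even in a toy like this, the extra work is visible: the job has to be divided up, the pieces have to be genuinely independent, and the results have to be gathered and combined at the end. Adding up numbers splits cleanly. Most real software doesn’t.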

While this is difficult enough to do when starting from scratch, or completing an academic assignment in computer studies, it’s massively more difficult to take an existing software program that’s been around for over a decade, and re-write it to be multi-threaded without any obvious changes to the user.

This wasn’t just a problem for existing programs from established companies like Adobe and Microsoft. It was also a major issue for operating systems.

Software developers for Mac and Windows weren’t the only ones coming to grips with multiple processors. Roughly a year before Intel released the Core Duo, Sony and Microsoft had announced their latest gaming consoles: the Playstation 3 and the Xbox 360. Both of these consoles had multi-core CPUs: the Xbox 360 used a chip with 3 CPU cores, while the Playstation 3 used an unconventional design that had 8. Both systems presented challenges for gaming developers, who had to come up with completely new strategies for utilizing the theoretical power of these consoles. Press releases and articles from the time give some indication of how challenging this was, with Ars Technica’s Jon Stokes summing it up nicely: “…the PlayStation 3 was all about more: more hype, and more programming headaches.”

However, a better indication of how difficult it can be to utilize multiple processors comes from more recent articles looking back at the Playstation 3. The unusual “Cell” processor in the PS3 was very different to Intel’s Core Duo, but there are still parallels that can be drawn between the challenges facing software developers. Now that about 15 years have passed, developers are more open to discussing the problems they faced trying to utilize the Cell’s 8 cores. Most prominently, the developers of Gran Turismo called the Playstation 3 a “nightmare”, saying “…the PlayStation 3 hardware was a very difficult piece of hardware to develop for, and it caused our development team a lot of stress”. An article from GamingBolt, referring to the Cell processor as a “beast”, blames the difficulties of developing for the Playstation 3 on the multiple core processor: “there was an emphasis on writing code optimized for high single-threaded performance… The Cell’s wide architecture played a big role in the deficit that was often seen between the PS3 and Xbox 360 in 7th-gen multiplats.”

The 3-core CPU in the Xbox 360 didn’t provide the same headaches or years-long delays that the 8-core Cell processor did, but it was still new ground for games developers. An Anandtech article on the Xbox 360 put it this way:

“The problem is that today, all games are single threaded, meaning that in the case of the Xbox 360, only one out of its three cores would be utilized when running present day game engines… The majority of developers are doing things no differently than they have been on the PC. A single thread is used for all game code, physics and AI and in some cases, developers have split out physics into a separate thread, but for the most part you can expect all first generation and even some second generation titles to debut as basically single threaded games.” – Anandtech

The Core Duo may have represented a new direction for desktop computers, but the software that everyone was using in 2006 just wasn’t ready to take full advantage of multiple CPUs.

Future Fantastic

The first Core Duo chip contained two CPUs, which Intel were now calling “processor cores”. Initially, each individual core wasn’t as fast as a single Pentium 4, but you had two so in theory the overall processing power was greater. Because everyday software in 2006 wasn’t designed for multiple CPUs, only one of the processor cores was really being used – and because the clock speed of the processor was slower than a Pentium 4, the individual processor core was slower. The theory that two slower CPUs were more powerful than one fast one didn’t really pan out for everyone in 2006 – the real-world performance of the first Core Duo was usually less than impressive.
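That trade-off can be put into numbers with Amdahl’s law, a standard rule of thumb (not something from Intel) for how much extra cores help when only part of a program can be split across them. The Python sketch below combines it with a per-core speed penalty. The per-core speed of 0.8 and the three “how multi-threaded is the software” fractions are invented for illustration.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Amdahl's law: speed-up from spreading part of a program across cores."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)

def relative_performance(per_core_speed, parallel_fraction, cores):
    """Performance compared with an old single-core chip whose speed is 1.0."""
    return per_core_speed * amdahl_speedup(parallel_fraction, cores)

# Hypothetical dual-core chip whose cores each run at 80% of the old chip's speed.
PER_CORE_SPEED = 0.8

for parallel_fraction in (0.0, 0.5, 1.0):
    result = relative_performance(PER_CORE_SPEED, parallel_fraction, cores=2)
    print(f"{parallel_fraction:.0%} multi-threaded: {result:.2f}x the old chip")
```

With these figures, purely single-threaded software runs at 0.8 times the speed of the old chip, which matches the 2006 experience described above. Software that is half multi-threaded only just breaks even, and only fully multi-threaded software reaches 1.6 times the old chip’s speed.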

But just as some wise person said “a journey of 1000 miles begins with a single step”, you could apply the same logic to microprocessors. If we look at the Core Duo as the starting point on a new path for Intel, then the journey to 1024 cores began with the Core Duo.

Since 2006, every time Intel has released newer models and upgraded CPUs, the number of cores on a single chip has increased. As I write this, Intel’s most powerful CPU has 28 cores and AMD’s has 32.

More recently AMD have leapfrogged Intel in spectacular style with their range of Threadripper CPUs. As evidence that competition is good for innovation, the next Threadripper CPUs offer 64 cores on a single chip. As I wrote in Part 5 – every time AMD release a new Threadripper CPU, they’re giving Intel a gentle kick up the rear. The competition can only benefit consumers.

Unfortunately, even after more than ten years, software developers are still struggling to adapt and catch up and effectively harness the power of multiple core CPUs. This is not just a problem for Adobe, and not just a problem for After Effects. This is an industry-wide, generational change that will be felt for decades.

Developing effective multi-threaded software isn’t just a difficult problem that one person has to solve. It’s a difficult problem that an entire generation of software developers needs to solve.

I’ve previously recommended the book “Inside the machine”, by Jon Stokes, to anyone interested in CPU design. The last CPU analysed in the book is the Core 2 Duo, and the book concludes with the following sentence:

Intel’s turn from the hyperpipelined Netburst microarchitecture to the power-efficient, multi-core friendly Core microarchitecture marks an important shift not just for one company, but for the computing industry as a whole. – Jon Stokes, “Inside the machine”

Progress is slow.

This is part 6 in a long-running series on After Effects and Performance. Have you read the others? They’re really good and really long too:

Part 1: In search of perfection

Part 2: What After Effects actually does

Part 3: It’s numbers, all the way down

Part 4: Bottlenecks & Busses

Part 5: Introducing the CPU

Part 6: Begun, the core wars have…

Part 7: Introducing AErender

Part 8: Multiprocessing (kinda, sorta)

Part 9: Cold hard cache

Part 10: The birth of the GPU

Part 11: The rise of the GPGPU

Part 12: The Quadro conundrum

Part 13: The wilderness years

Part 14: Make it faster, for free

Part 15: 3rd Party opinions

And of course, if you liked this series then I have over ten years’ worth of After Effects articles to go through. Some of them are really long too!
