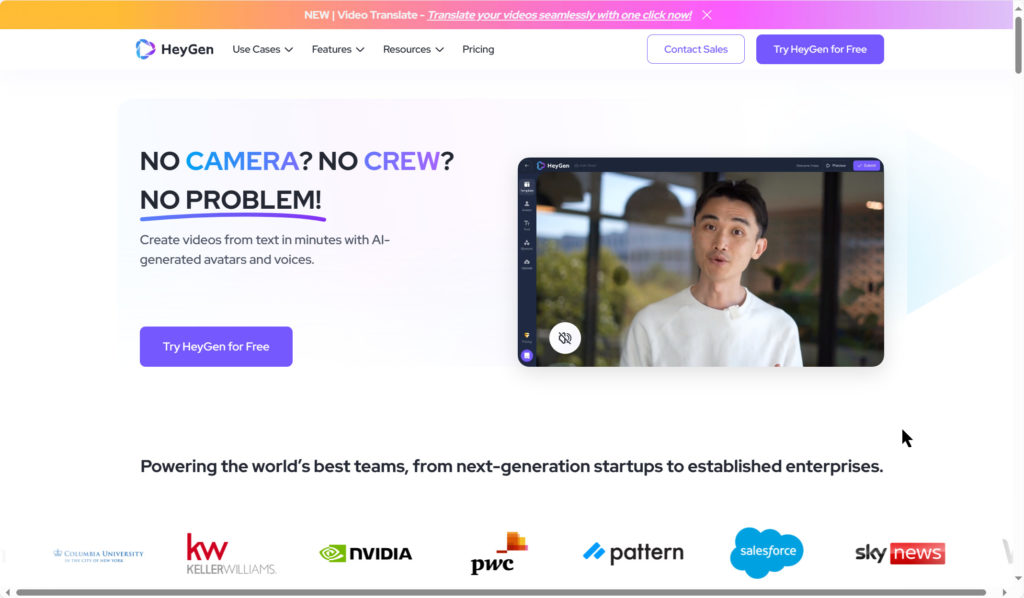

HeyGen is ground-breaking AI software that brings video avatars and translation/dubbing to the average prosumer production. If you need a talking-head video for marketing, training or how-to content, then this is the place to start! Beyond all the other features, options and templates for generating content from pre-made avatars and images, the real juice in this software is the ability to clone yourself from a video and audio clip, which is truly incredible.

As I’ve been saying in all my AI Tools updates: AI tool technology is advancing at such a rate that we’re measuring it in days now, not weeks, months or years. The developers at HeyGen are a perfect example of such rapid development. I’ve had to revise this product review several times in the past month because their technology, their approach to developing these avatars, and their pricing structure have all changed almost daily.

Things really took off when I saw a video posted on LinkedIn by the CEO of HeyGen teasing the capabilities of their new “Avatar Lite” beta. I promptly applied to get on board and start testing, and I got this response by email the same day. The rest is a very, very short history!

While I’ll outline several features of this AI tool, my biggest focus is on the Video Avatars, which have been evolving rapidly as I mentioned above. For instance, I made this video about a week ago and it’s already outdated in the features, quality and naming of the various tools. “Avatar Lite” used to take 3-5 business days to generate a usable video avatar as shown (mostly with hands-on techs refining the process), but the process is now automated to generate an “Instant Avatar” in mere minutes! You can also now “Finetune” your Instant Avatar (for an additional $59/mo, which I’ll discuss later in this article), and the finetuned version comes back to you in under 24 hours.

Here’s a completely AI-generated video, made only a couple of weeks ago, showing the process, including the voice translation feature covered below:

So what all does HeyGen do?

Video Productions from Templates

Depending on your skill level and requirements, there are many ways to start generating content in HeyGen’s studio. You can select one of dozens of starter templates, modify it directly in the portal, and even swap in different avatars from their library. The user interface is straightforward, making it easy to navigate and make adjustments.

There are dozens of pre-loaded avatars and voices available to choose from.

You can also just make a video with an avatar on a green background and composite it directly in your NLE of choice. This example uses a generic avatar and voice generated in HeyGen, then composited in After Effects as a sample social media short.

Animated Faces from Photos & Images

This was my first step in discovering how much fun this software could be. I found it a few months ago and played around with various photos and images rendered in Midjourney. The process has changed a little since then, but the quality has improved a great deal.

They also have an option to generate AI characters from a text description directly inside HeyGen’s interface. It came up with some interesting results, but note that only the faces/heads get animated, not the entire torso, when you generate avatars this way.

It’s a quick process: upload your photo or rendered image (making sure the face is fully visible and centered in the frame), apply an AI voice (or a cloned voice through your ElevenLabs API connection), and then create a video from your text input.

Here are a few examples from my headshot photo and a couple of Midjourney images:

Check out the example below where I’m setting up the green screen studio and our studio mannequin “Leana” complains. That was done from an iPhone photo in HeyGen using this same process.

Video Avatars & Voice Cloning

This is where HeyGen separates itself from the rest of the pack, and it’s what got me excited about using it for regular marketing and instructional content at my day gig at a biotech company. It has generated a lot of interest with our product marketing folks.

The first step is to make sure you have a good video and audio recording to work from. You can just put up a tripod and shoot yourself or your subject in a casual or business environment with a steady background and clean audio for your submission. Avoid moving around or making sudden gestures or facial expressions, and let the video run for a full, uninterrupted 2-5 minutes for the best cloning results.

When you create your avatar, you have to submit a video authorization (from the subject directly) for security purposes. This helps keep the site safe from nefarious uses, such as cloning someone without their consent.

This first video, which I generated in my home studio office, served as a baseline to build my other experiments on:

For more flexibility with my avatars, I set up the green screen studio to shoot more tests of myself, reading the same 2-1/2-minute script from a teleprompter for my comparisons. Setting up the green screen for the first time in the few years since the first Covid shutdown took a while to dial in, so our mannequin “Leana” got a bit impatient standing there all day (also animated with HeyGen). 😉

The process is really simple, so I won’t outline every step here: the instructions on their website are easy to follow, and they’ve created multiple video tutorials on the site. You can use either a prerecorded voice audio file or TTS, with either a built-in voice or a voice clone you’ve generated. I downloaded several voiceovers from ElevenLabs to generate many of my test videos, but I now prefer the built-in third-party API integration, which generates the audio directly inside HeyGen and gives me access to whatever ElevenLabs voices I have in my account through the voice manager.

For this example, I applied one AI-generated VO audio file from ElevenLabs.com to create three different versions of the same script and see how they compared (or differed). Keep in mind that these three avatars aren’t just dressed differently; they were sourced from three separate videos that I shot on the green screen at separate times. Applying the same prerecorded AI voice file from ElevenLabs ensured the avatars would sync identically. I could not have gotten this result by running the ElevenLabs API to generate the VO on the fly for each avatar, since each generation would introduce variations in the voice.

In this example, I used the same script and composite in Premiere, just swapping in each green screen composite from After Effects within the same sequence.

Instant Avatar vs “Finetuned”

The Instant Avatars you get with your plan are adequate for most purposes (you can purchase more if needed), but the Finetuned Avatars do have better mouth and lip sync performance, as seen in my testing.

In this example video, I used ElevenLabs to produce the audio track, which I uploaded to HeyGen when I created both video avatars, so they share the exact same audio track for a true side-by-side comparison. Notice the improved lip sync accuracy on the Finetuned version on the right.

Better yet, I’ve found that using the ElevenLabs API integration directly inside HeyGen gives me much better lip syncing and mouth movements on BOTH the Instant and Finetuned avatars.

This is only the beginning… watch this tech closely in the coming months!

Translations & Dubbing

There are two ways of generating translations in HeyGen. One is to input translated text into the video avatar producer and select a multilingual voice from your ElevenLabs API and create a clean video avatar from there.

The other method lets you upload any video clip, at least 30 seconds long, of a subject facing the camera, and it automatically generates a new video in a few minutes with a clone of the actor’s voice and matching lip sync. Here’s their rundown of the process in video form from the HeyGen website:

I’ve tested several video clips and the results have been amazing! Check out the intro video at the top of the article to see more examples I’ve created.

Here’s an example clip I created from a Pulp Fiction scene with Christopher Walken, translated into Spanish and French. You can see how this could be really helpful for video dubbing and regional localization in the future.

Pros & Cons

While I have been a major fanboy over these new features and capabilities for the past month or so, I’d be remiss not to point out some things that I hope get resolved or updated in future versions of the HeyGen software tools and pricing structures.

The tools are evolving so quickly that I think most of this review will be obsolete by year-end. With that pace, the company may well be positioned to be bought up by a bigger brand, or another round of financing could encourage the developers to make a leap toward world domination (only slightly kidding). 😉

I would like to see more control over the Instant Avatars’ gestures, facial expressions, etc. When the voices get more energetic, the faces should reflect that as well. Maybe just an “exaggeration/enhancement” slider or something.

The talking photos could use more control as well – like the way the puppet tool works in After Effects, where you can define the points that move or at least define the boundaries of the head/hair so the whole head moves – not just the face.

And pricing seems to be all over the place currently, but that might be due to the changes in product offerings as they develop. For instance, there’s a $99/yr fee for a voice clone that I feel is sub-par compared to what you can generate in ElevenLabs (which is why I’m really thankful for the ElevenLabs API integration, which gives you the best of both worlds in one easy step). The monthly fee for the base service is fair, especially with 3 Instant Avatars included in the $59 Creator package. The “Finetuned” option, however, is an additional $59/mo for EACH AVATAR you upgrade. That means if you upgrade all three of the Instant Avatars you create, that’s an additional $177/mo (3 × $59) just to keep using them. I guess if you don’t need them any more, you can just cancel the upgrade for each one, but I’m not really seeing much value in the small improvement that “Finetuning” provides at this point for most customers, though professionals will justify the additional cost to get a higher-quality result.
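If you want to run the numbers for your own setup, here’s a tiny Python sketch of the monthly cost. It assumes only the prices quoted in this review (a $59/mo Creator plan with 3 Instant Avatars, plus $59/mo per Finetuned avatar) and leaves out the optional $99/yr voice clone; HeyGen’s pricing has changed often, so treat these constants as placeholders and check their current pricing page.

```python
# Rough monthly cost estimate, assuming the prices quoted in this review.
# These are placeholders: HeyGen's pricing has been changing frequently.
CREATOR_PLAN_MONTHLY = 59          # base Creator package (includes 3 Instant Avatars)
FINETUNE_PER_AVATAR_MONTHLY = 59   # "Finetuned" upgrade, billed per avatar


def monthly_cost(finetuned_avatars: int) -> int:
    """Return the estimated monthly total in USD for a given number of Finetuned avatars."""
    if finetuned_avatars < 0:
        raise ValueError("finetuned_avatars must be non-negative")
    return CREATOR_PLAN_MONTHLY + FINETUNE_PER_AVATAR_MONTHLY * finetuned_avatars


if __name__ == "__main__":
    # Print the total for 0 through 3 Finetuned avatars.
    for n in range(4):
        print(f"{n} Finetuned avatar(s): ${monthly_cost(n)}/mo")
```

Upgrading all three avatars adds 3 × $59 on top of the base plan, so the Finetuned upgrades alone cost about three times as much as the Creator package itself.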