On Thursday this week (Feb 15), Sam Altman, CEO of OpenAI (ChatGPT, DALL-E), offered a sneak peek into our not-too-distant future of realistic AI-generated text-to-video content, announcing the company’s new model, Sora, in a “Xitter” post:

here is sora, our video generation model: https://t.co/CDr4DdCrh1

today we are starting red-teaming and offering access to a limited number of creators. @_tim_brooks @billpeeb @model_mechanic are really incredible; amazing work by them and the team.

remarkable moment.

— Sam Altman (@sama) February 15, 2024

What is Sora?

From the OpenAI website –

Creating video from text

Sora is an AI model that can create realistic and imaginative scenes from text instructions.

We’re teaching AI to understand and simulate the physical world in motion, with the goal of training models that help people solve problems that require real-world interaction.

Introducing Sora, our text-to-video model. Sora can generate videos up to a minute long while maintaining visual quality and adherence to the user’s prompt.

Today, Sora is becoming available to red teamers to assess critical areas for harms or risks. We are also granting access to a number of visual artists, designers, and filmmakers to gain feedback on how to advance the model to be most helpful for creative professionals.

We’re sharing our research progress early to start working with and getting feedback from people outside of OpenAI and to give the public a sense of what AI capabilities are on the horizon.

Sora is able to generate complex scenes with multiple characters, specific types of motion, and accurate details of the subject and background. The model understands not only what the user has asked for in the prompt, but also how those things exist in the physical world.

The model has a deep understanding of language, enabling it to accurately interpret prompts and generate compelling characters that express vibrant emotions. Sora can also create multiple shots within a single generated video that accurately persist characters and visual style.

The current model has weaknesses. It may struggle with accurately simulating the physics of a complex scene, and may not understand specific instances of cause and effect. For example, a person might take a bite out of a cookie, but afterward, the cookie may not have a bite mark.

The model may also confuse spatial details of a prompt, for example, mixing up left and right, and may struggle with precise descriptions of events that take place over time, like following a specific camera trajectory.

Altman then teased the public by taking live requests, generating AI videos up to a minute long from prompts supplied by the audience:

we'd like to show you what sora can do, please reply with captions for videos you'd like to see and we'll start making some!

— Sam Altman (@sama) February 15, 2024

And some of the results were pretty amazing!

https://t.co/qbj02M4ng8 pic.twitter.com/EvngqF2ZIX

— Sam Altman (@sama) February 15, 2024

https://t.co/uCuhUPv51N pic.twitter.com/nej4TIwgaP

— Sam Altman (@sama) February 15, 2024

here is a better one: https://t.co/WJQCMEH9QG pic.twitter.com/oymtmHVmZN

— Sam Altman (@sama) February 15, 2024

https://t.co/rPqToLo6J3 pic.twitter.com/nPPH2bP6IZ

— Sam Altman (@sama) February 15, 2024

https://t.co/rmk9zI0oqO pic.twitter.com/WanFKOzdIw

— Sam Altman (@sama) February 15, 2024

Pretty impressive – and up to a minute in length!

How does Sora work?

From the Sora website – BE SURE TO CLICK THE TECHNICAL REPORT LINK!

Research techniques

Sora is a diffusion model, which generates a video by starting off with one that looks like static noise and gradually transforms it by removing the noise over many steps.

Sora is capable of generating entire videos all at once or extending generated videos to make them longer. By giving the model foresight of many frames at a time, we’ve solved a challenging problem of making sure a subject stays the same even when it goes out of view temporarily.
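To make the "start with static, remove noise over many steps" idea concrete, here is a toy Python sketch of an iterative denoising loop. It is not OpenAI's code and not a real diffusion sampler: the `toy_denoiser` function is a hypothetical stand-in that cheats by already knowing the clean signal, the update rule is a simplified interpolation chosen for clarity, and the "video" is just a short list of numbers. In a real model, the denoiser would be a trained network guided by the text prompt.

```python
import random


def toy_denoiser(noisy, target):
    """Hypothetical stand-in for a trained network.

    A real diffusion model learns to estimate the clean signal from the
    noisy one (guided by the prompt). Here we cheat and return the target.
    """
    return list(target)


def generate(target, steps=50, seed=0):
    """Start from pure noise and refine it step by step toward the estimate."""
    rng = random.Random(seed)
    # Step 0: a "video" of pure static (Gaussian noise), same size as the target.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    errors = []
    for t in range(steps, 0, -1):
        estimate = toy_denoiser(x, target)
        # Move a fraction 1/t of the way toward the estimate; on the final
        # step (t == 1) the sample lands on the estimate itself.
        x = [xi + (ei - xi) / t for xi, ei in zip(x, estimate)]
        errors.append(max(abs(xi - ti) for xi, ti in zip(x, target)))
    return x, errors


if __name__ == "__main__":
    clean = [0.0, 0.25, 0.5, 0.75, 1.0]
    sample, errors = generate(clean, steps=20)
    print("error after first step:", errors[0])
    print("error after last step: ", errors[-1])
```

The point to take away is the shape of the loop: many small refinement passes over the whole clip at once, rather than drawing one frame after another.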

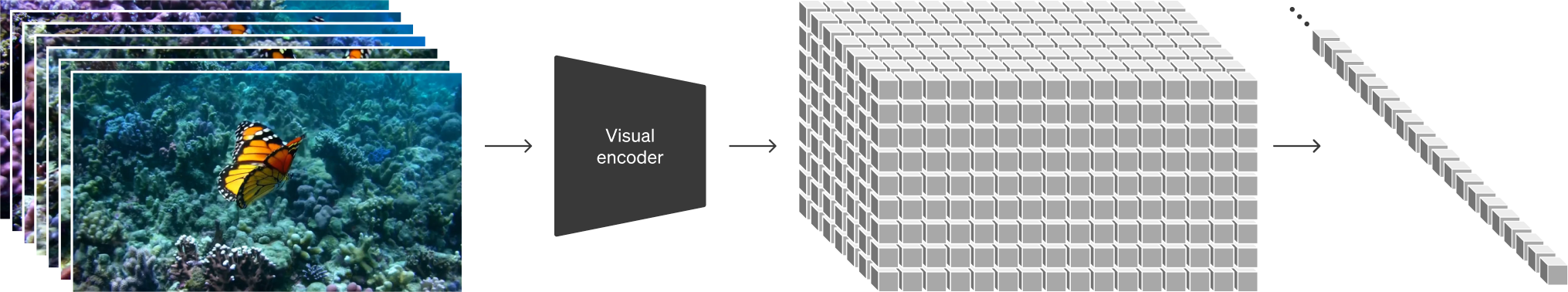

Similar to GPT models, Sora uses a transformer architecture, unlocking superior scaling performance.

We represent videos and images as collections of smaller units of data called patches, each of which is akin to a token in GPT. By unifying how we represent data, we can train diffusion transformers on a wider range of visual data than was possible before, spanning different durations, resolutions and aspect ratios.
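As a rough illustration of what "patches" could look like, the Python sketch below chops a tiny video (frames × height × width) into small blocks spanning space and time and flattens each block into a token-like list. The function names, the patch sizes, and the choice to work on raw pixel values are my assumptions for the example; the excerpt above does not say how Sora actually sizes or encodes its patches.

```python
def make_video(frames, height, width):
    """Build a dummy video as nested lists; each pixel holds a unique integer."""
    return [
        [[t * height * width + y * width + x for x in range(width)]
         for y in range(height)]
        for t in range(frames)
    ]


def patchify(video, pt, ph, pw):
    """Split a video into flattened patches of pt frames x ph rows x pw columns."""
    frames, height, width = len(video), len(video[0]), len(video[0][0])
    if frames % pt or height % ph or width % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    patches = []
    for t0 in range(0, frames, pt):
        for y0 in range(0, height, ph):
            for x0 in range(0, width, pw):
                # Flatten one block into a single list, the "token".
                patches.append([
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ])
    return patches


if __name__ == "__main__":
    # Two clips with different durations and aspect ratios...
    short_wide = patchify(make_video(2, 2, 9), pt=2, ph=2, pw=3)
    longer = patchify(make_video(4, 4, 6), pt=2, ph=2, pw=3)
    # ...become sequences of different lengths made of same-sized patches.
    print(len(short_wide), len(longer))
```

Because every patch has the same size no matter the clip's shape, clips of different lengths, resolutions and aspect ratios simply turn into shorter or longer sequences of patches, which is the "unifying" idea the excerpt describes.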

Sora builds on past research in DALL·E and GPT models. It uses the recaptioning technique from DALL·E 3, which involves generating highly descriptive captions for the visual training data. As a result, the model is able to follow the user’s text instructions in the generated video more faithfully.

In addition to being able to generate a video solely from text instructions, the model is able to take an existing still image and generate a video from it, animating the image’s contents with accuracy and attention to small detail. The model can also take an existing video and extend it or fill in missing frames. Learn more in our technical report.

Sora serves as a foundation for models that can understand and simulate the real world, a capability we believe will be an important milestone for achieving AGI.

Initial Opinions and Overviews

Since this is making quite a buzz around social media already, and none of us mere citizens have access to the tech yet, there’s no sense in reinventing the wheel with a video overview and rundown when there are already some good tech vloggers out there on top of it!

Is it too good? Should we be concerned?

As others mentioned in the above overview videos, the first markets to be directly affected by the latest generative AI models such as Sora will be stock photography and the stock video used for short B-roll clips in general video productions. We’re already seeing AI-generated video and animation clips used in marketing, but the real fear is AI-generated content being passed off as “real” in journalism, campaign ads and the like. Hence the safety warnings and the processes intended to guard against that.

Of course, tech writers are already discussing and addressing public concerns before this model is released for the public to play with. OpenAI appears to be consciously trying to get ahead of the issues and potential problems the technology could create:

From the OpenAI website –

Safety

We’ll be taking several important safety steps ahead of making Sora available in OpenAI’s products. We are working with red teamers — domain experts in areas like misinformation, hateful content, and bias — who will be adversarially testing the model.

We’re also building tools to help detect misleading content such as a detection classifier that can tell when a video was generated by Sora. We plan to include C2PA metadata in the future if we deploy the model in an OpenAI product.

In addition to us developing new techniques to prepare for deployment, we’re leveraging the existing safety methods that we built for our products that use DALL·E 3, which are applicable to Sora as well.

For example, once in an OpenAI product, our text classifier will check and reject text input prompts that are in violation of our usage policies, like those that request extreme violence, sexual content, hateful imagery, celebrity likeness, or the IP of others. We’ve also developed robust image classifiers that are used to review the frames of every video generated to help ensure that it adheres to our usage policies, before it’s shown to the user.

We’ll be engaging policymakers, educators and artists around the world to understand their concerns and to identify positive use cases for this new technology. Despite extensive research and testing, we cannot predict all of the beneficial ways people will use our technology, nor all the ways people will abuse it. That’s why we believe that learning from real-world use is a critical component of creating and releasing increasingly safe AI systems over time.

Some comparisons with other Generative AI tools…

Is it all in the prompts? Are there shared LLM models somewhere on the backend?

For kicks, I tried a few myself with Runway AI, using the exact same prompts, in this short video test. (It fails miserably on all counts!)

Now that Runway has been called out, we’ll see how they end up rising to the challenge!

Nick St. Pierre on X found some strange similarities in Midjourney’s results for the same text prompts. Click through to see his results:

I ran all of the Sora prompts through Midjourney

Interesting how similar some are

side-by-sides against vids:

— Nick St. Pierre (@nickfloats) February 16, 2024

Some resulting renders were eerily similar – such as the woman’s dress below.

A grandmother with neatly combed grey hair stands behind a colorful birthday cake with numerous candles at a wood dining room table, expression is one of pure joy and happiness, with a happy glow in her eye. She leans forward and blows out the candles with a gentle puff, the… pic.twitter.com/MBxlJdTRCG

— Nick St. Pierre (@nickfloats) February 16, 2024

What’s next?

Obviously, 2024 is off to an amazing start with generative AI tools, and of course we’ll be keeping a close watch on Sora and all the competition rising to the challenge. We are literally just two days from the announcement and there’s still so much to learn and test, but we all know how this industry is changing by the minute.

Excerpted from Leslie Katz’s article on Forbes yesterday:

Generative AI tools are, of course, generating a range of responses, from excitement about creative possibilities to anger about possibly copyright infringement and fear about the impact on the livelihood of those in creative industries—and on creativity itself. Sora is no different.

“Hollywood is about to implode and go thermonuclear,” one X user wrote in response to Sora’s arrival.

OpenAI said it needs to complete safety checks before making Sora publicly available. Experts in areas like misinformation, hateful content and bias will be “adversarially” testing the model, the company said in a blog post.

“Despite extensive research and testing, we cannot predict all of the beneficial ways people will use our technology, nor all the ways people will abuse it,” OpenAI said. “That’s why we believe that learning from real-world use is a critical component of creating and releasing increasingly safe AI systems over time.”

In the meantime, we’re waiting to see the benchmarks raised:

Will smith eating spaghetti.

This is the video to beat, let's see what sora can do. pic.twitter.com/tJgynMKRmY

— Jeff Kirdeikis (@JeffKirdeikis) February 15, 2024
