AI Tools? Aren’t you already exhausted from hearing “AI-this” and “AI-that” everywhere? We’re all constantly blasted with crazy AI-generated fantasy images in our social media feeds these days (yes, I’m personally responsible for some of that… sorry!), but where is all this rapidly growing AI technology really going? Is it just another fad? What is there besides crazy fantasy images and people with too many fingers?

Read on…

AI has been evolving for years already, but haven’t we all seen a major growth spurt recently? We now have access to AI image generation, text and content generation, AI voice generation and image/video enhancement. Where will it go, and how will it affect our viability as content creators? Where do the machine learning models pull their source material from? Can the resulting works be copyrighted or used commercially? Why is AI viewed as such a threat by some artists and creative writers? What is the potential for ethics and IP infringement cases?

It’s a heated topic full of questions and speculation at the moment.

I was originally going to write only about AI image generation as a follow-up to my AI Photo Enhancement article last year, but with new AI technologies emerging almost monthly, I decided to start with an overview today and follow up with deep dives into the various AI tools as they come out. As I publish subsequent articles on each particular AI category, I’ll update this article so it can serve as an ongoing reference portal of sorts. This series will help us get under the hood to better understand what AI is, where the positives are in this rapidly evolving technology, and how we might use it to our benefit as content creators, editors, animators and producers.

AI descriptive image generation is still pretty far from accurate in its depiction of certain details, although people and animals have started looking much better overall in the past year. We’ve seen a vast improvement in how AI depicts humans compared to only 6 months ago, but still, TOO DAMN MANY FINGERS!!

Voice AI is improving by leaps and bounds, and text generation has become genuinely impressive lately. Everything right now is still in dev/beta, so we’re really just getting a tiny peek behind the curtain of what is possible.

You’re going to read arguments online that AI is taking away our jobs in one way or another, and that may eventually be partially true. But not anytime soon. It’s another tool, like photography was in the beginning, and like computer graphics and desktop publishing were to traditional layout, NLEs and DAWs were to the video, film and audio industries, and 2D/3D animation has been to traditional cel animation. Technology evolves, and so must the artist.

But what exactly are the REAL concerns creative people have about the future of AI? Is the way it sources its training material legal and ethical? Is it going to replace our jobs as creatives?

- AI will replace photographers

- AI will replace image retouchers

- AI will replace illustrators and graphic designers

- AI will replace fine artists

- AI will replace creative writers

- AI will replace animators and VFX artists

- AI will replace Voice Over artists

- AI will replace music composers

But will it really? Or will AI simply make these roles better and more efficient?

As Kevin Kelly, Senior Editor at Wired, recently wrote in his article “Picture Limitless Creativity at Your Fingertips”:

“AT ITS BIRTH, every new technology ignites a Tech Panic Cycle. There are seven phases:

- Don’t bother me with this nonsense. It will never work.

- OK, it is happening, but it’s dangerous, ’cause it doesn’t work well.

- Wait, it works too well. We need to hobble it. Do something!

- This stuff is so powerful that it’s not fair to those without access to it.

- Now it’s everywhere, and there is no way to escape it. Not fair.

- I am going to give it up. For a month.

- Let’s focus on the real problem—which is the next current thing.

Today, in the case of AI image generators, an emerging band of very tech-savvy artists and photographers are working out of a Level 3 panic. In a reactive, third-person, hypothetical way, they fear other people (but never themselves) might lose their jobs. Getty Images, the premier agency selling stock photos and illustrations for design and editorial use, has already banned AI-generated images; certain artists who post their work on DeviantArt have demanded a similar ban. There are well-intentioned demands to identify AI art with a label and to segregate it from “real” art.”

While those fears may be valid for some industries, it’s time to take a closer look at how AI can empower us in our work instead of replacing us entirely. In this article we’ll explore some of the ways AI is changing the game for professionals like ourselves by becoming an indispensable tool and an invaluable partner on complex projects. By understanding what AI can do for us now and in the future, we can capitalize on its advantages while still preserving our singularly human skills and creativity.

So let’s look at what all this AI technology really means to existing artists, photographers, video/film productions, and audio producers and actors before throwing stones. (I won’t get into a huge legal/ethical discussion here; that’s a completely different topic.) I’m only going to share what I’ve discovered in the AI communities and from my own practical use of some of these tools so far. I’m also going to miss a LOT of tools, so this is NOT an exhaustive list of everything currently out there!

It’s only just the beginning with these tools – and they’re only that. TOOLS.

They’re not going away and yes, YOU WILL BE ASSIMILATED!

So, What is AI?

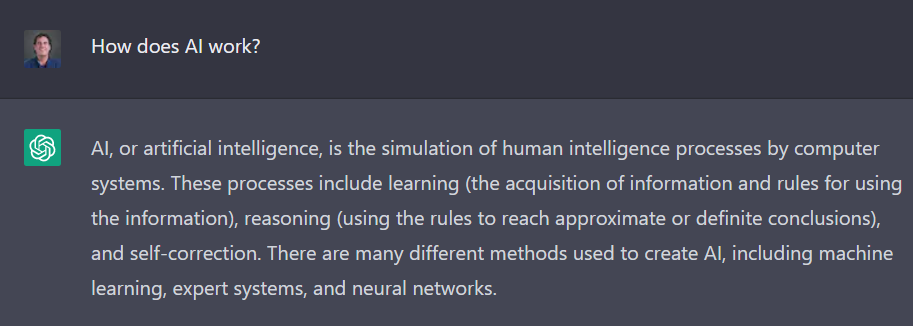

Well, let’s ask it and see if it can tell us!

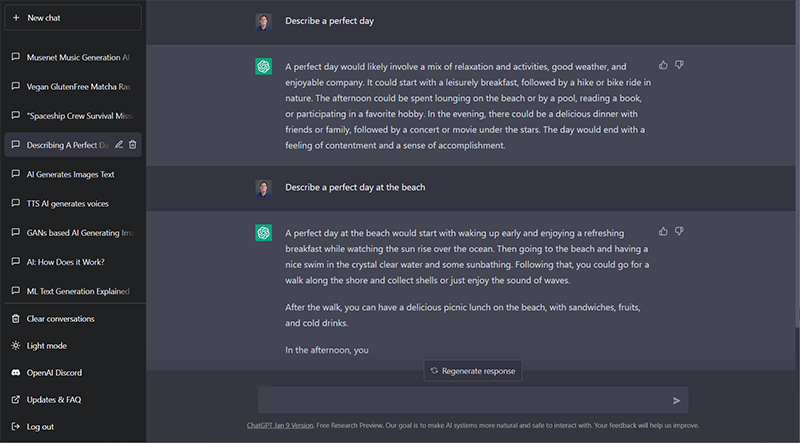

I used two different text generators to answer these questions: ChatGPT and Jasper. I’ll discuss these AI tools in more detail later in this overview article.

I first asked ChatGPT “How does AI work?” The response was:

That’s a great formal response for AI technology in general, but what about Image generation models specifically?

I queried ChatGPT again and asked, “How do image generating AI tools like midjourney and dall-e work?”

“Both Midjourney and DALL-E use Generative Adversarial Networks (GANs) to generate images. GANs consist of two neural networks: a generator and a discriminator. The generator produces new images, while the discriminator attempts to distinguish the generated images from real images. The generator and discriminator are trained together, with the generator trying to create images that can fool the discriminator, and the discriminator trying to correctly identify real and generated images.

Midjourney uses GANs to generate images of specific objects or scenes, while DALL-E generates images based on textual descriptions. DALL-E uses a transformer-based neural network architecture, which allows it to understand the meaning of text and generate images that match the description.

In summary, both Midjourney and DALL-E use GANs to generate images, but Midjourney uses GANs to generate specific images, and DALL-E uses a transformer-based neural network to generate images based on textual descriptions.”

And again, for audio, specifically voice-over generated from text (TTS), I asked: “How do TTS AI tools generate different voices from text in different languages?”

“Text-to-speech (TTS) AI tools use a combination of machine learning algorithms and recorded speech samples to generate speech from text. For different languages, the TTS model is trained on a dataset of speech samples in that language. This dataset is used to learn the nuances and characteristics of how words and sounds are pronounced in that language. Additionally, the TTS model also takes into account the grammatical and syntactical rules of the language to ensure that the generated speech is grammatically correct. Once the TTS model is trained, it can be used to generate speech in the target language by synthesizing the sounds and words based on the rules and patterns learned from the training dataset.”

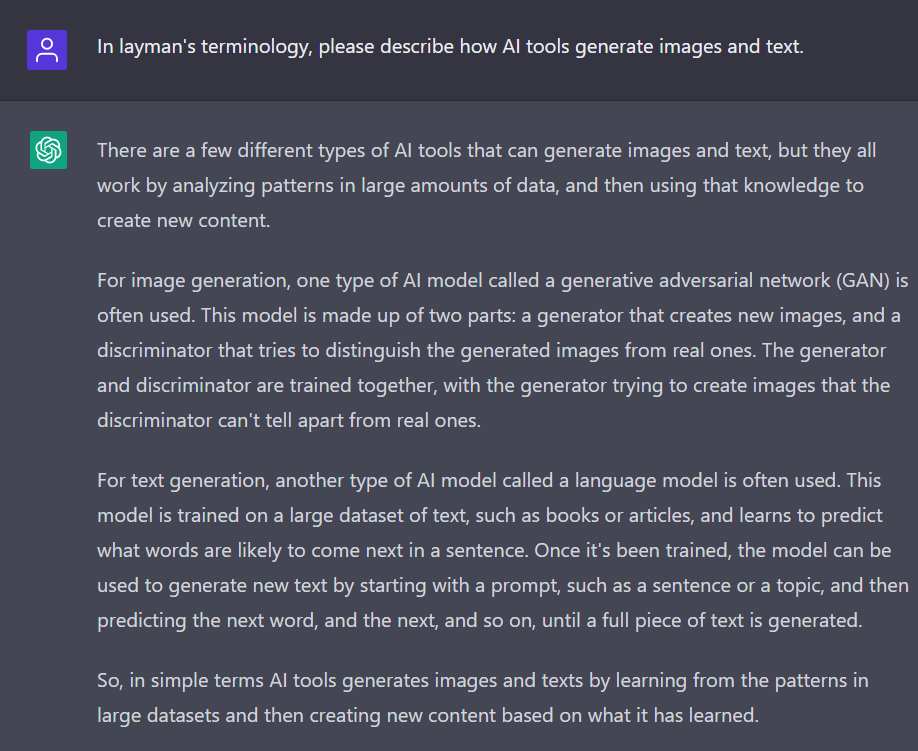

That’s all good technically, but what if we ask AI to break it down into layman’s terms? My prompt: “In layman’s terminology, please describe how AI tools generate images and text.”

ChatGPT replied:

Jasper’s response to the same prompt:

#1 response [SIC]:

“AI text and AI imaging technologies have enabled machines to create content without the need for human creativity. AI tools are able to generate images and text based on collected data and pre-programmed artistic information. The AI tool assesses the data and visuals in its own database, recognising certain colour palettes or visual elements, before crafting an image or piece of text that best satisfies the criteria identified as part of its programming. AI technology can also be used to make predictions about what type of material could be created by a person in a particular context, using algorithms that analyse huge amounts of data. This AI-generated material can then be customised by humans to suit their specific needs.”

#2 response:

“AI tools have revolutionized the ability to generate images and text with relative ease. AI text generation technology combines a cutting-edge neural network architecture with natural language processing algorithms to enable AI to generate text that mimics human writing. AI imaging capabilities enable AI tools to generate realistic and high-quality pictures from photos, drawings, sketches and other visual artistry. AI is also able to guess what it sees and understand context, allowing AI models to construct new images that are based on old ones. This AI-generated creative content has opened up whole new avenues in terms of possibilities in both design and communication.”

Note that these were just really simple one-line questions with no keyword prompting or details, and no post-editing of the raw text output. I’ll share more in the AI Text Generation section below.

I’d like to dig a bit deeper in my upcoming research to better explain HOW these tools actually gather their source material and turn it into images, text and audio files. We’ll get into that in subsequent articles over the coming months, but for now, let’s take a look at a few examples of commercially available tools and others that are still in beta today.

—– —– —– —– —– —– —–

Examples of various emerging AI Technologies for the creative industry

AI is already being used under the hood in almost every digital aspect of our daily lives, from voice recognition to facial recognition to push-marketing algorithms, location identification and much more. So what are some (but obviously, NOT ALL) examples of how it’s being developed for creative producers? How can we eventually leverage AI technology to our advantage and produce better-quality content in less time? We’ll start by taking a look at AI enhancement tools for images, audio and video, then move on to the AI image, music and text generation technologies.

UPDATED: Here’s a more complete list of AI Tools that’s updated regularly.

AI Image Enhancement and Scaling Tools

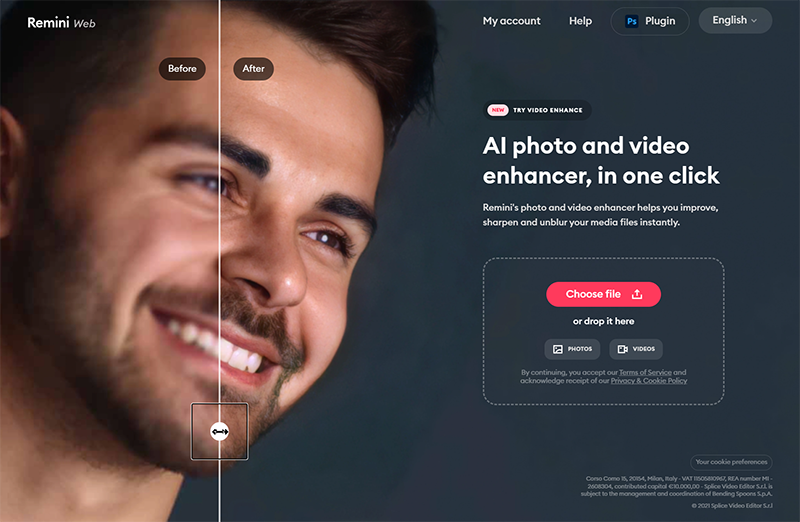

With Adobe building more AI into its apps and Neural Filters these days, I’m led to think AI is more of an efficiency tool for producing better results in less time. Other apps, such as Remini Web, which I covered in my AI Photo Enhancement article last fall, keep improving with amazing results. I’ve personally benefited from using this software on a feature doc with over 1,000 images, where it helped restore clarity to poor-quality prints and scans. Adobe is quickly adding retouching and facial enhancement tools to its Neural Filters, and they’re actually impressive. I imagine they will only continue to get better.

Topaz AI

Topaz Labs AI has featured several photography enhancement products in their lineup, including DeNoise AI, Sharpen AI and Gigapixel AI. They’ve also just released an all-inclusive single product called Photo AI, which seems to have replaced Topaz Studio. I haven’t yet tried it out, but it looks pretty straightforward and includes all the features of the other standalone products, including Mask AI which is no longer listed on their website.

My go-to has been Gigapixel AI because most of the work I need it for is in upscaling and noise removal/sharpening and sometimes, facial enhancement if needed on older, low-res photos. I must use it at least once a week with some of the images that come to me for retouching or compositing.

Their Face Recovery keeps getting better, presumably because the underlying AI models keep being refined. I’ll try some older images in my next article to compare against last year’s results.

I’ve yet to find other software that handles the complexity of image elements such as hair, clothing, feathers, and natural and artificial surfaces and textures while upscaling 400% or more, removing JPEG compression artifacts and sharpening at the same time. It’s really quite remarkable, and because of machine learning, it’s only getting better over time.
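Upscaling percentages apply to each side of the image, so a 400% upscale quadruples both the width and the height and multiplies the pixel count by 16. Here is a quick sketch of that arithmetic in Python (the helper name `upscaled_size` is just for illustration), using two of the image sizes from this article:

```python
def upscaled_size(width: int, height: int, percent: float) -> tuple[int, int]:
    """Return output dimensions for a percentage upscale (400% = 4x per side)."""
    factor = percent / 100
    return round(width * factor), round(height * factor)


for w, h in [(332, 272), (293, 420)]:
    new_w, new_h = upscaled_size(w, h, 400)
    pixel_ratio = (new_w * new_h) / (w * h)  # growth in total pixel count
    print(f"{w}x{h} px -> {new_w}x{new_h} px ({pixel_ratio:.0f}x the pixels)")
```

This prints 1328x1088 and 1172x1680, both with 16x the pixels. In other words, the software has to fill in 15 new pixels for every original one, which is where AI upscalers earn their keep.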

This example of singer/actress Joyce Bryant is a worst-case scenario just for testing purposes. She was a gorgeous woman and I wanted to see just how well the software would hold up, starting with an image this low-res at 293×420 px. This was featured in my article last year on photo enhancement software.

Really though, the best examples are on their website with interactive sliders on the before/after images.

As with many other tools in this article, I will dig deeper into Photo AI and provide more detailed analysis of the software in a future article.

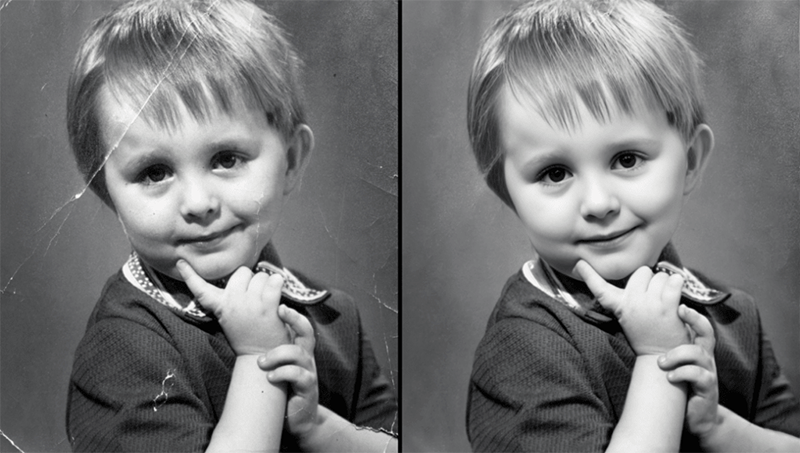

Remini Web

Remini Web was another tool that I featured in my article last year on photo enhancement software for documentaries. I go into a lot more detail there, but so far I’ve processed over 1,100 old photos for a feature doc we’ve been working on for the past couple of years, and this tool has brought so many images back to life!

Here’s an example of an image that was originally only 332×272 px and was upscaled 400% in Gigapixel (without face recovery) to a more usable 1328×1088 px. Then I ran that image through Remini Web, and the results were astonishing.

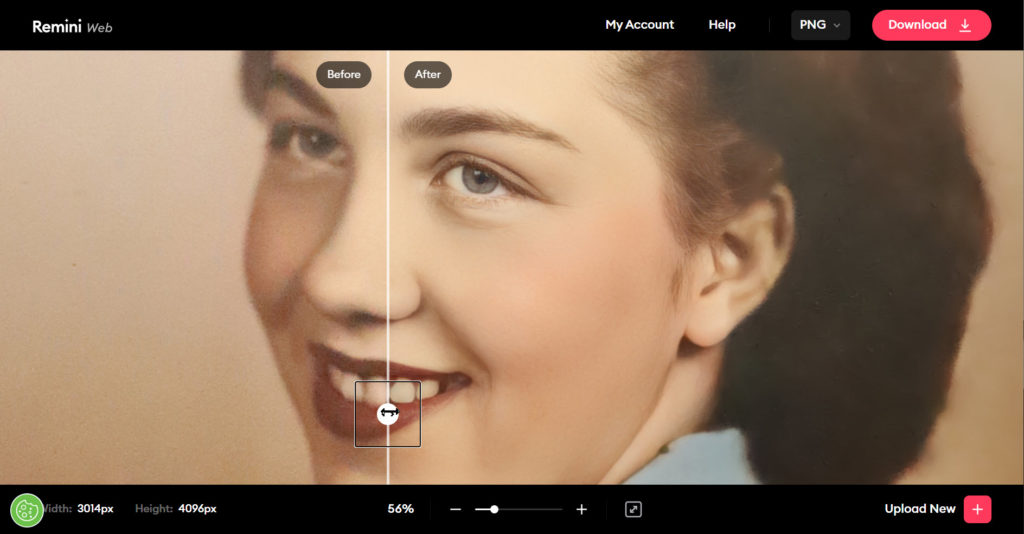

As was this photo of an old print of my Mom’s Senior Photo from the early 1950s:

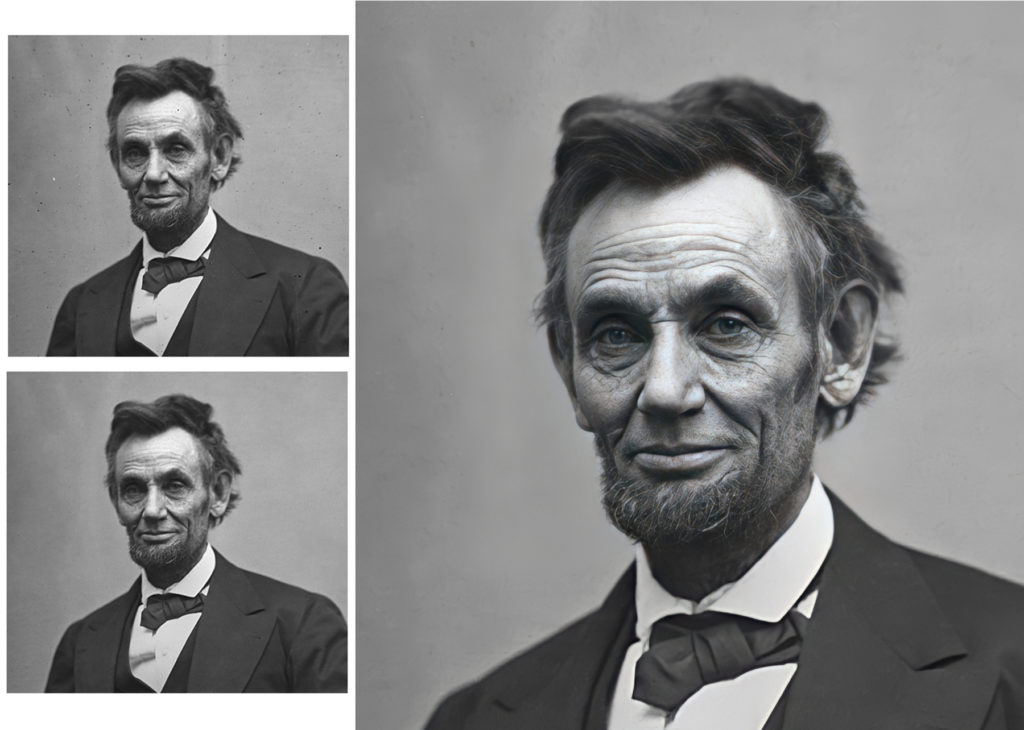

And of course, here’s a public-domain historical photo of Abraham Lincoln, retouched and run through Remini Web:

Remini Web is still my favorite AI facial enhancement tool to date, but the list of options is growing rapidly!

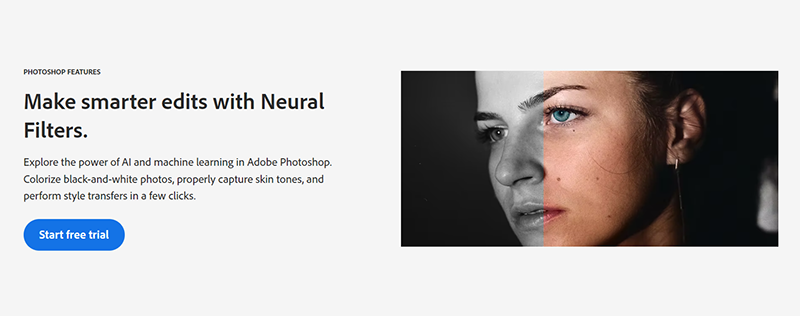

Adobe Neural Filters

Located under the Filter menu in Adobe Photoshop, Neural Filters are a fun and easy way to create compelling adjustments and speed up your image editing workflows. Powered by Adobe Sensei, Adobe’s artificial intelligence and machine learning engine, Neural Filters use algorithms to generate new pixels in your photos. This allows you to make nondestructive edits and explore new creative ideas quickly, all while keeping the original image intact.

The different types of Neural Filters (Featured and Beta)

Once you open your image in Photoshop, there are several featured filters ready for you to use. Choose one to enhance your shot or try them all and see what works best for you.

Smart Portrait

Smooth it over with Skin Smoothing.

Brush and touch up your subjects’ skin effortlessly with the Skin Smoothing filter. Simple sliders for Smoothness and Blur allow you to remove tattoos, freckles, scars, and other elements on faces and skin in an instant.

Excavate unwanted items with JPEG Artifacts Removal.

The more times you save a JPEG file, the more likely your image will look fuzzy or pixelated. You may see artifacting (obvious visual anomalies) due to the compression algorithms used to reduce the file size. Reverse the process with this filter and fine-tune it by adjusting the edge of your image with either a high, medium, or low level of blur.

Switch it up with Style Transfer.

Just like it sounds, this filter allows you to take the look of one image — the color, hue, or saturation — and put it on another. Sliders for Style Strength, Brush Size, and Blur Background as well as checkboxes like Preserve Color and Focus Subject let you customize how much of the look your picture ends up with.

Super Zoom

Zoom in closely on a subject while keeping details sharp. Enhance facial features, reduce noise, and remove compressed artifacts to let your subject — whatever it is — shine through in extreme close-up.

Colorize

Quickly convert black-and-white photos to eye-popping color in a flash. Designate which colors you want to appear in your capture, and Adobe Sensei will automatically fill the image. Focus points let you add more color to specific areas to fine-tune the filter.

There are several other beta filters if you want to experiment with them as well. I haven’t had a chance to demo them as of this article, but I will in an upcoming article on this category.

Makeup Transfer

Apply the same makeup settings to various faces with this useful tool. Add new makeup to a photo or completely change your model’s current makeup in post-production to get exactly the right look for your shot.

Harmonization

Match the look of one Photoshop layer to another for natural-looking photo compositing. The Harmonization filter looks at your reference image and applies its color and tone to any layer. Adjust the sliders, add a mask, and enjoy the color harmony.
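Adobe doesn’t publish the details of how Harmonization works, and it is presumably a trained neural network. A much simpler, classical way to “apply the color and tone of a reference” is to match each color channel’s mean and standard deviation to the reference’s. The sketch below does that on plain lists of RGB tuples; it illustrates the idea only and is not Adobe’s algorithm (statistical color transfer is usually done in a perceptual color space such as Lab rather than straight RGB):

```python
from statistics import mean, pstdev

Pixel = tuple[float, float, float]  # (R, G, B) values in the 0-255 range


def match_color_stats(layer: list[Pixel], reference: list[Pixel]) -> list[Pixel]:
    """Shift and scale each RGB channel of `layer` so its mean and standard
    deviation match the corresponding channel of `reference`."""
    channels = []
    for c in range(3):
        src = [p[c] for p in layer]
        ref = [p[c] for p in reference]
        src_mean, src_std = mean(src), pstdev(src)
        ref_mean, ref_std = mean(ref), pstdev(ref)
        # A flat channel has no spread to rescale, so it is only shifted
        scale = ref_std / src_std if src_std > 0 else 1.0
        # Remove the layer's statistics, apply the reference's, clamp to 0-255
        channels.append(
            [min(255.0, max(0.0, (v - src_mean) * scale + ref_mean)) for v in src]
        )
    # Reassemble per-pixel (R, G, B) tuples from the three adjusted channels
    return [(r, g, b) for r, g, b in zip(*channels)]


# A cool, bluish layer harmonized to a warm reference
layer = [(40, 60, 120), (60, 80, 160)]
reference = [(200, 120, 60), (220, 140, 80)]
print(match_color_stats(layer, reference))  # [(200.0, 120.0, 60.0), (220.0, 140.0, 80.0)]
```

Because it only looks at global averages, this approach can’t tell a face from a background, which is exactly the kind of context an AI-driven filter can take into account.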

Photo Restoration

Need to restore old photos? Try the new Photo Restoration Neural filter, powered by Artificial Intelligence, to fix your old family photos or restore prints in Photoshop.

NOTE:

Even though I’ve already dedicated a previous article to this AI tech, I’ll be expanding on my findings with a new, updated article in the coming months. Stay tuned.

—– —– —– —– —– —– —–

AI Video & Audio Enhancement Tools

Video and audio AI enhancement tools can help video producers and editors in several ways. AI video stabilization can smooth out shaky footage, while AI-powered noise reduction can remove background noise from audio tracks. AI-powered color correction can automatically adjust the color balance and brightness of a video, and AI-powered object tracking can automatically follow a moving object in a video. Additionally, AI-powered video compression can reduce the file size of a video while maintaining its quality. These tools can save time and improve the overall quality of the final product.

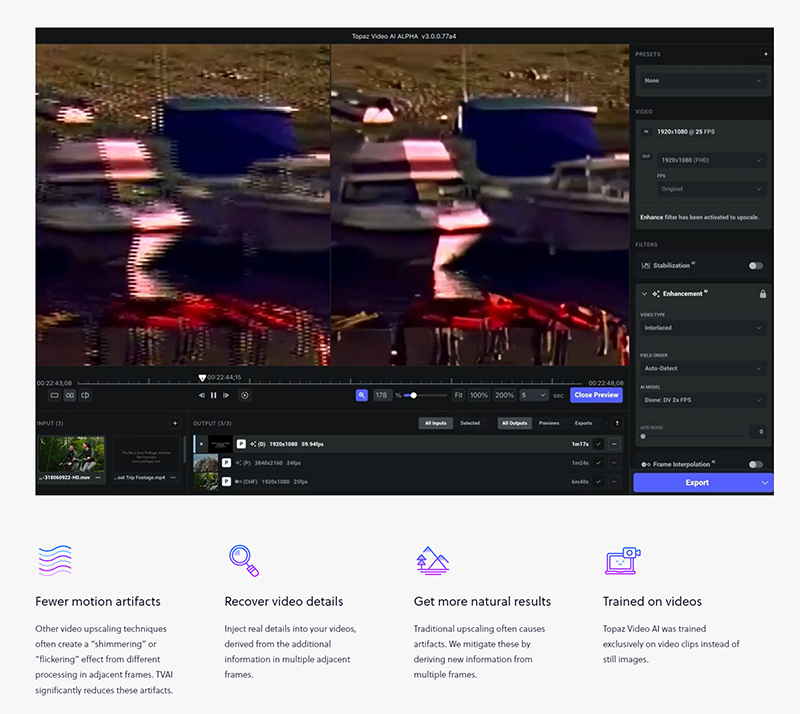

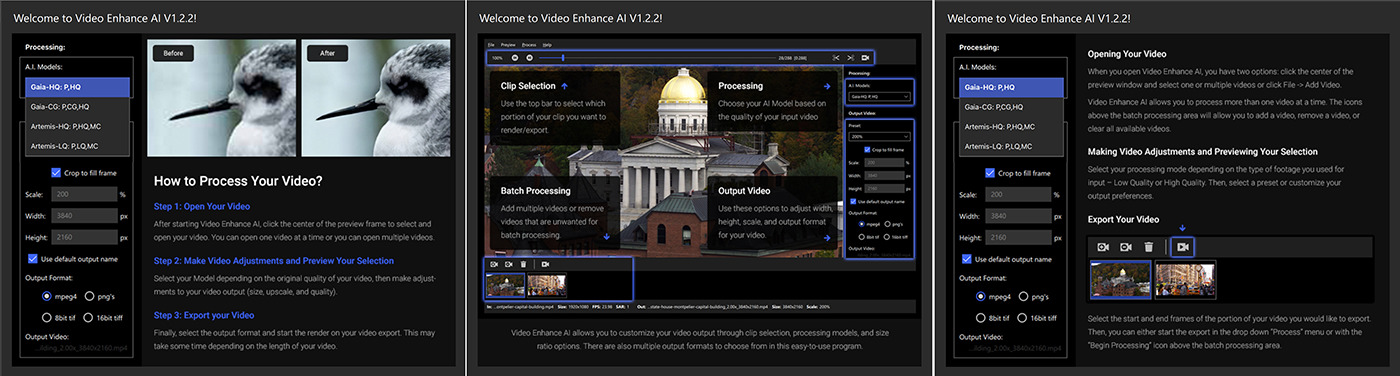

Topaz Labs Video AI

Topaz Labs Video AI uses a combination of AI-based techniques and algorithms to improve the quality of videos. The software applies techniques such as deinterlacing, upscaling, and motion interpolation to the footage.

Deinterlacing is used to remove interlacing artifacts from videos that have been recorded in interlaced format. It does this by analyzing the video frame by frame and creating new frames by merging the information from the interlaced fields.
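To make “fields” concrete: an interlaced frame stores two half-height fields on alternating lines. The simplest non-AI deinterlacer, often called “bob”, splits the frame into its even and odd fields and rebuilds each one as a full-height frame by interpolating the missing lines. Topaz’s models are far more sophisticated; this sketch only shows the basic field structure they work from. It assumes a grayscale frame stored as a list of rows (a hypothetical representation chosen for readability) with an even number of lines, as in standard video:

```python
Frame = list[list[float]]  # grayscale frame: a list of rows of pixel values


def split_fields(frame: Frame) -> tuple[Frame, Frame]:
    """Split an interlaced frame into its even-line (top) and odd-line (bottom) fields."""
    return frame[0::2], frame[1::2]


def bob_field(field: Frame, top: bool) -> Frame:
    """Rebuild a full-height frame from one field by interpolating the missing lines."""
    offset = 0 if top else 1
    height = len(field) * 2
    # Put the field's real lines back on the rows they came from
    real = {2 * i + offset: list(row) for i, row in enumerate(field)}
    out: Frame = []
    for y in range(height):
        if y in real:
            out.append(real[y])
        else:
            # Average the real lines directly above and below (just one at an edge)
            neighbors = [real[n] for n in (y - 1, y + 1) if n in real]
            out.append([sum(vals) / len(vals) for vals in zip(*neighbors)])
    return out


# Each interlaced frame yields two progressive frames, one per field
frame = [[10, 10], [100, 100], [20, 20], [110, 110]]
top_field, bottom_field = split_fields(frame)
print(bob_field(top_field, top=True))      # [[10, 10], [15.0, 15.0], [20, 20], [20.0, 20.0]]
print(bob_field(bottom_field, top=False))  # [[100.0, 100.0], [100, 100], [105.0, 105.0], [110, 110]]
```

Since the two fields were captured at slightly different moments, bobbing each one separately doubles the frame rate, but the interpolated lines carry only half the vertical detail. Smarter deinterlacers try to recover that detail instead of just averaging.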

Upscaling is used to increase the resolution of a video. This is done by using AI algorithms to analyze the video and add more pixels to the image, while maintaining the integrity of the original image.
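For contrast, here is what classical (non-AI) upscaling does. Bilinear interpolation computes each new pixel as a weighted average of the four nearest original pixels, so it can only blend existing values, which is why it looks soft. AI upscalers instead predict plausible detail learned from training data. This is a minimal grayscale sketch that assumes the “align corners” convention (corner pixels map exactly onto each other); real applications use a variety of conventions and filters:

```python
Image = list[list[float]]  # grayscale image: a list of rows of pixel values


def bilinear_upscale(img: Image, factor: int) -> Image:
    """Upscale a grayscale image by an integer factor with bilinear interpolation."""
    h, w = len(img), len(img[0])
    new_h, new_w = h * factor, w * factor
    out: Image = []
    for y in range(new_h):
        # Map the output row back to a fractional position in the source image
        sy = y * (h - 1) / (new_h - 1) if new_h > 1 else 0.0
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        row = []
        for x in range(new_w):
            sx = x * (w - 1) / (new_w - 1) if new_w > 1 else 0.0
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            # Blend horizontally on the two nearest source rows, then vertically
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# A 2x2 image upscaled 200% to 4x4: every new value is a blend of the originals
for row in bilinear_upscale([[0, 100], [100, 200]], 2):
    print([round(v) for v in row])
```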

Motion interpolation is used to add more frames to a video. This is done by analyzing the motion in the video and creating new frames that are in between the existing frames. This results in a smoother and more fluid video.
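The crudest way to “create new frames in between” is to simply cross-fade two neighboring frames. The sketch below does just that, as a baseline for comparison, not as a description of how Video AI works. It produces ghosting on anything that moves, which is exactly why real motion interpolation estimates where objects travel and moves pixels along that path instead of blending in place. Frames are again a hypothetical list-of-rows grayscale representation:

```python
Frame = list[list[float]]  # grayscale frame: a list of rows of pixel values


def blend_frames(a: Frame, b: Frame, t: float) -> Frame:
    """Synthesize a frame at time t between a (t=0) and b (t=1) by linear cross-fade."""
    return [
        [(1 - t) * pa + t * pb for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]


def double_frame_rate(frames: list[Frame]) -> list[Frame]:
    """Insert one blended frame between each pair of neighbors (n frames -> 2n - 1).
    Expects at least one frame."""
    out: list[Frame] = []
    for a, b in zip(frames, frames[1:]):
        out.extend([a, blend_frames(a, b, 0.5)])
    out.append(frames[-1])
    return out


# A bright dot moving two pixels to the right between frames
a = [[255, 0, 0]]
b = [[0, 0, 255]]
print(blend_frames(a, b, 0.5))  # [[127.5, 0.0, 127.5]]: two half-bright ghosts, not one dot in the middle
```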

The software also utilizes the latest hardware acceleration technologies to speed up the processing times, which allows you to enhance videos with minimal wait time.

Overall, Topaz Labs Video AI uses advanced AI algorithms to improve the quality of videos, by removing artifacts, increasing resolution, and adding more frames for a smoother and more natural video.

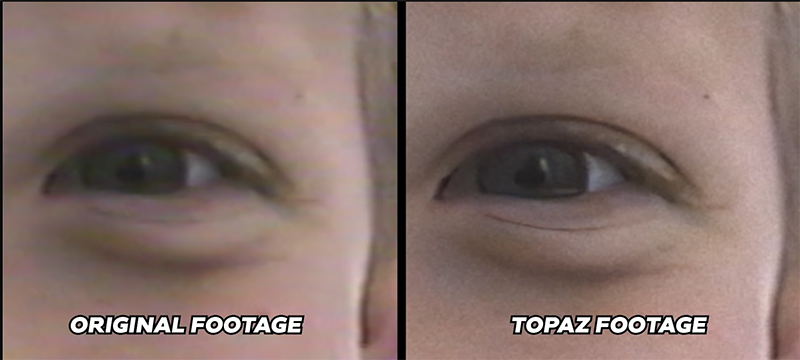

This example is a before/after screenshot from the video posted below. The original was interlaced standard-definition footage from a compact VHS camcorder, which was upscaled to HD, deinterlaced and AI-enhanced. The results are pretty remarkable.

This is a great demo video from Denver Riddle at Color Grading Central on YouTube that shows various applications with older video footage. If you’re working on documentaries and need to incorporate old archival video footage, this is an invaluable tool that you MUST have in your toolbox! We’ve been using it for a couple of years now on a 4K feature doc and have been very impressed with what it’s done for old video and scanned film footage.

Adobe Podcast (web beta)

Adobe currently has an online tool in beta to quickly re-master your voice recordings from any source to sound better. Previously called Project Shasta, the new Adobe Podcast speech enhancement makes voice recordings sound as if they were recorded in a professional studio.

I’ve actually tried it on some VO recordings we received that were professionally done but still had some boomy artifacts from the small booth they were recorded in at a home studio. I ran them through Adobe Podcast (just drag and drop), and it spit out clean versions I could put into my animation project in Premiere and then apply some mastering filters to really bring them to life without any artifacts.

To give a better idea of the effectiveness of this tool, I’m going to share with you a pretty thorough comparison video from PiXimperfect on YouTube (Subscribe to his channel for tons of great tips in all things Adobe too).

NOTE:

As with all of these tools, I’ll be exploring more and digging deeper in subsequent articles, but I really think Adobe (and others) will be integrating these technologies into their desktop and mobile apps before long!

—– —– —– —– —– —– —–

AI Image Generation Tools

Ok – so currently, the big controversial hubbub is this category right here: AI image generation, creating “art” from text prompts. As I stated at the top of the article, I’m not going to debate in this segment whether or not it’s art, whether it’s ethical or stealing other people’s IP, or whether I think it’s going to put people out of work. The jury is still out on all that anyway, and as far as I can see, the results are still quite mixed in their ultimate usefulness, so for me, it’s just a visual playground to date. I can run most of mine from my iPhone while relaxing or waiting for video renders and uploads, and then post my unedited crazy results on social media to stir up shit… and I do that a lot.

I’m only featuring a few of the most popular AI generators out there in this article, as I’ll most likely expand on this segment in greater detail in next month’s article, where we’ll dig deep into how each one works and the many ancillary apps that access this technology.

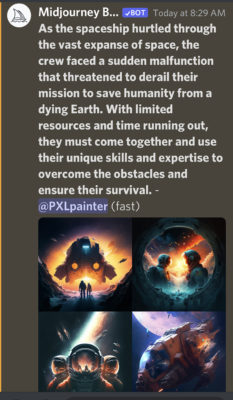

Midjourney

Midjourney is an independent research lab that produces a proprietary artificial intelligence program that creates images from textual descriptions. The company was founded by David Holz, who is also a co-founder of Leap Motion. The AI program, also called Midjourney, is designed to create images based on textual input, similar to OpenAI’s DALL-E and Stability AI’s Stable Diffusion. The tool is currently in open beta, which it entered on July 12, 2022. The company is already profitable, according to Holz, who announced this in an interview with The Register in August 2022. Users can create artwork with Midjourney by using Discord bot commands.

Here’s a link to the Wikipedia page with more info about the development of this AI tool that’s gone from beta to an incredible AI image generation tool in 6 months!

You’ll notice that there isn’t really a lot of commercial visibility like a fancy home page or even an app. The only way to use Midjourney technology is through Discord (online or mobile app) and you’ll need some kind of paid account after your trial period is over. But you can sign up for the beta program through their main web portal.

The Midjourney beta produced fairly good images of people overall, but it tended to get the eyes weirdly off, which required further editing to resolve. I’ve found that if I take the image into Photoshop, blur the facial details a bit and run it back through Remini Web, it can regenerate a usable portrait if needed.

Here’s an update of what this same prompt generated now in Midjourney v4. It’s amazing what only 6 months has done in the development of this technology so far!

This prompt was for “beautiful happy women of various races ethnicity in a group”, comparing the MJ beta from August 2022 with the MJ v4 image generated today:

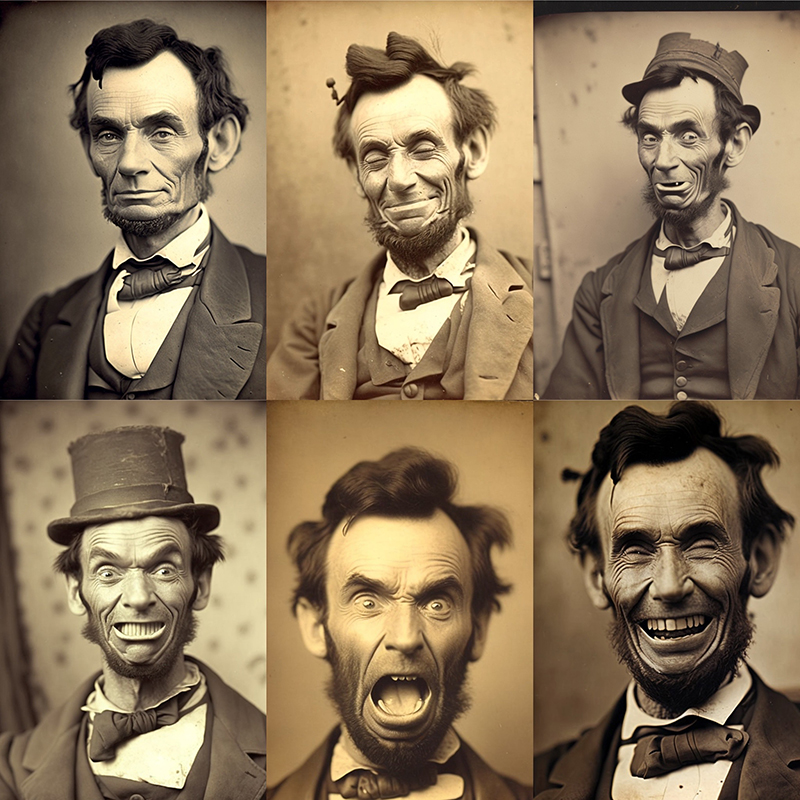

Also recently, I prompted for several expressions of Abraham Lincoln, based on his original portrait image that I retouched above with Remini Web. These were straight out of Midjourney v4. A few of them look like a crazy hobo.

But I’ve found that with Midjourney v4, sometimes the simplest prompts deliver the most rewarding and spectacular results!

This is what “Star Wars as directed by Wes Anderson cinematic film --v 4” produced:

And these are based on the prompt “portrait of the most interesting man in the world --v 4”, replacing the word “man” with various animals. Nothing further was done to any of these images individually:

And we couldn’t do without “Walter White cooking in the kitchen on set of Baking Bad tv show --v 4” LET’S COOK!

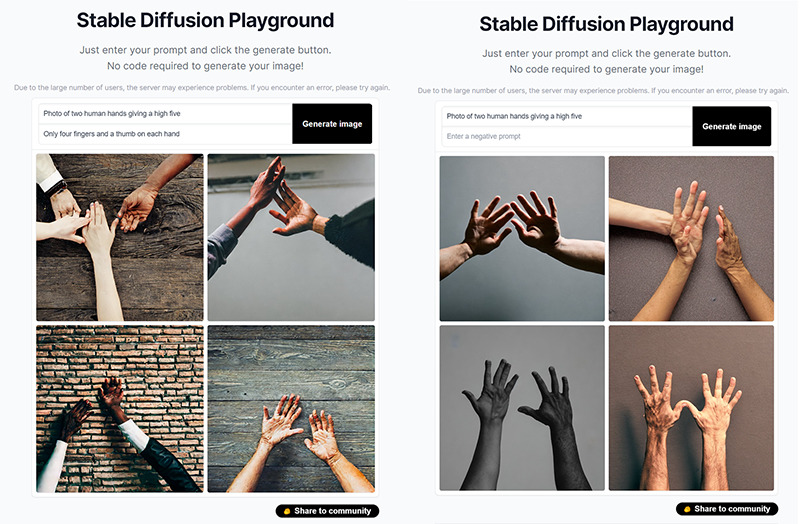

The one thing even Midjourney v4 still can’t replicate well is HANDS! The prompt for this was “two human hands giving a high five photo --v 4”

There’s really so much more to go over in depth with Midjourney, and in the coming months, it may be even many more light years ahead.

(We’re still waiting on normal hands!!)

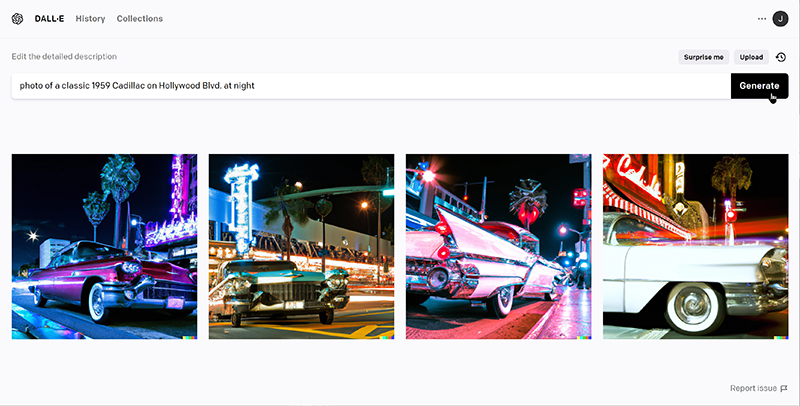

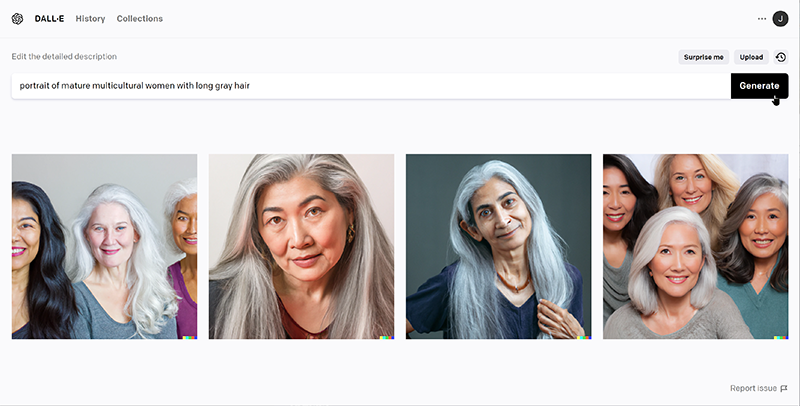

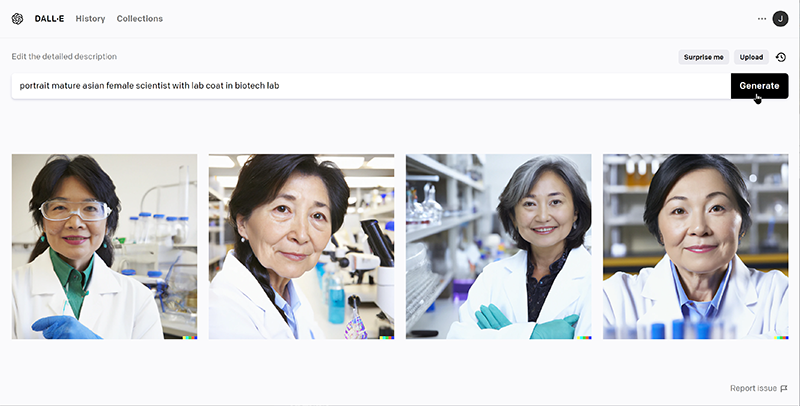

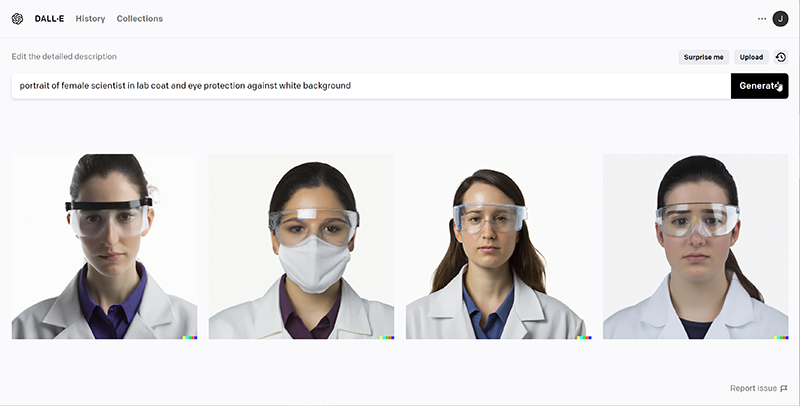

DALL-E 2

DALL-E 2 is the successor to the original DALL-E model from OpenAI (who also created ChatGPT). Where the original DALL-E used a transformer architecture to generate images from text descriptions, DALL-E 2 combines OpenAI’s CLIP model, which links text and images, with a diffusion model that generates the image. It is trained on a large dataset of images and their associated captions, and it generates new images by combining the concepts, attributes and styles described in a text prompt. The model can generate a wide variety of images, from photorealistic to highly stylized, depending on the prompt provided. It can also create variations of an existing image and edit images through inpainting and outpainting. Even very simple text prompts can deliver good results.

Most portrait shots of people come out fairly well at first glance in DALL-E 2. As with all AI-generated imagery, though, further retouching is almost always necessary to produce anything usable.

I have found that DALL-E 2 can produce some decent portrait shots and other kinds of basic artwork designs, but it doesn’t have the richness and full environments that Midjourney outputs. For portraits, it does a fairly good job, but it seems to fail on facial details, primarily the eyes. Just like with my earlier Midjourney examples, I’ve found that if I take the image into Photoshop, blur the facial details a bit and run it back through Remini Web, it can regenerate a usable portrait if needed.

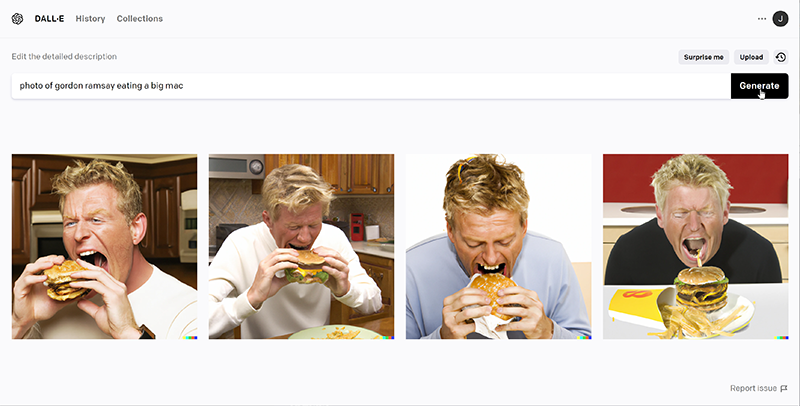

It also doesn’t seem to replicate famous celebrities well; I can never get it to produce a recognizable likeness. It looks like it could be SOMEBODY, just not who you prompted for!

It’s still fun to see what DALL-E 2 can create, and of course when you discover advanced prompting, you can fine-tune your results even further.

Stable Diffusion

Stable Diffusion is a deep learning, text-to-image model released in 2022. It is primarily used to generate detailed images conditioned on text descriptions, though it can also be applied to other tasks such as inpainting, outpainting, and generating image-to-image translations guided by a text prompt.

Under the hood, Stable Diffusion is a latent diffusion model. During training, it learns to remove noise that has been progressively added to images. To generate a new image, it starts from random noise in a compressed “latent” representation and removes that noise step by step, guided by a text encoder that turns your prompt into numbers the model can follow. A decoder then converts the finished latent back into a full-resolution image. Working in this compressed space makes it far less demanding than generating every pixel directly.

Because its model weights were publicly released, Stable Diffusion can be run on your own hardware or fine-tuned on a smaller, task-specific dataset. The models are hosted on Hugging Face, and you can access them there for your own projects.
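Stable Diffusion belongs to the family of diffusion models, and the two equations at the heart of that family can be sketched in a few lines. This is a simplified illustration under stated assumptions, not Stable Diffusion’s actual code: the real model works on latent tensors, uses its own noise schedule, and relies on a trained U-Net to predict the noise, which this sketch replaces with the true noise. Noising a clean signal x0 to step t follows x_t = sqrt(alpha_bar_t) · x0 + sqrt(1 − alpha_bar_t) · noise, and a correct noise prediction lets you solve that equation back for x0. The schedule values below are illustrative:

```python
import math
import random


def linear_alpha_bars(steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Cumulative signal fraction alpha_bar_t for an illustrative linear noise schedule."""
    alpha_bars, running = [], 1.0
    for i in range(steps):
        beta = beta_start + (beta_end - beta_start) * i / (steps - 1)  # noise added at step i
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0: list[float], noise: list[float], alpha_bar: float) -> list[float]:
    """Forward process: jump straight to step t by mixing the clean signal with noise."""
    return [math.sqrt(alpha_bar) * x + math.sqrt(1 - alpha_bar) * e for x, e in zip(x0, noise)]


def estimate_x0(xt: list[float], predicted_noise: list[float], alpha_bar: float) -> list[float]:
    """Solve the forward equation for x0, given a prediction of the noise."""
    return [(x - math.sqrt(1 - alpha_bar) * e) / math.sqrt(alpha_bar) for x, e in zip(xt, predicted_noise)]


random.seed(0)
alpha_bars = linear_alpha_bars(1000)
x0 = [0.5, -0.2, 0.9]                          # stand-in for a tiny "latent image"
noise = [random.gauss(0.0, 1.0) for _ in x0]   # Gaussian noise, one value per element
t = 500
xt = add_noise(x0, noise, alpha_bars[t])       # by step 500, noise dominates the mix
recovered = estimate_x0(xt, noise, alpha_bars[t])  # a perfect noise predictor recovers x0
print(round(alpha_bars[t], 3), [round(v, 6) for v in recovered])
```

Actual generation runs this in reverse over many steps, starting from pure noise, and the model’s noise prediction is never perfect, which is where odd artifacts (and extra fingers) can creep in.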

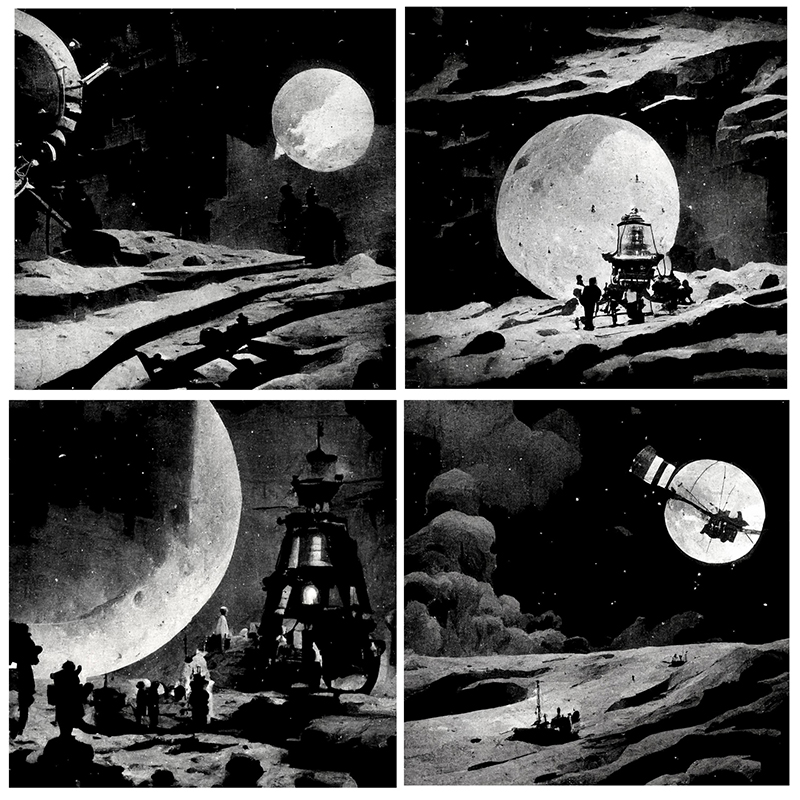

I only spent a little time with Stable Diffusion when it was first in beta on Discord, but there are now several mobile apps, and other services running it on their own servers as well. The prompt for this image was “Georges Méliès Trip to the Moon with Apollo 13”:

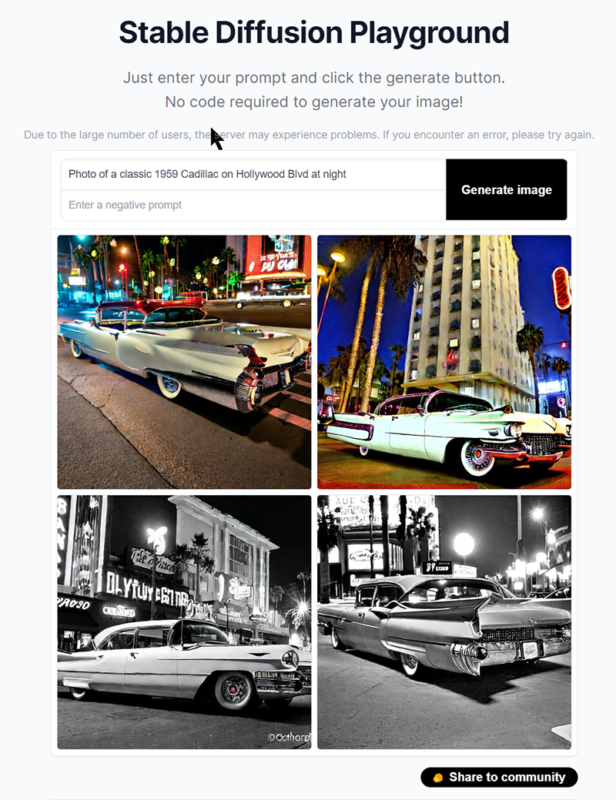

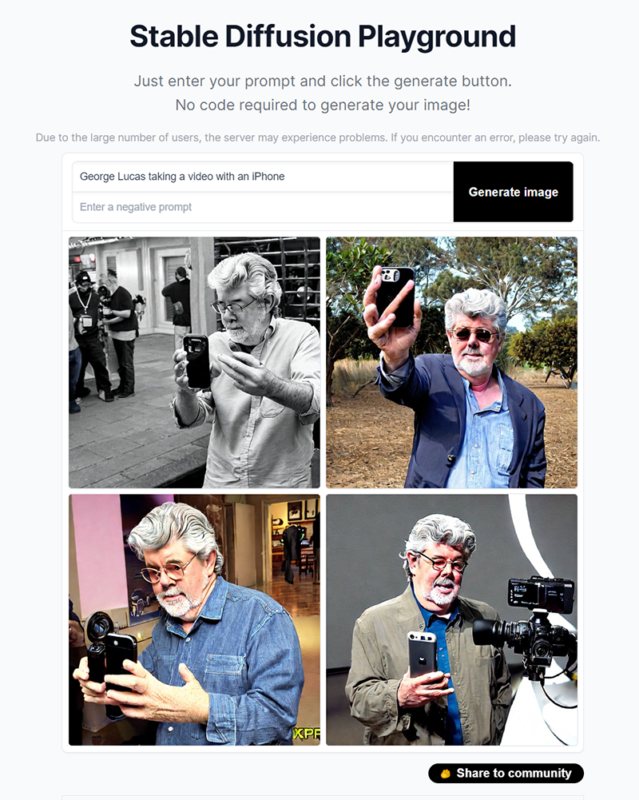

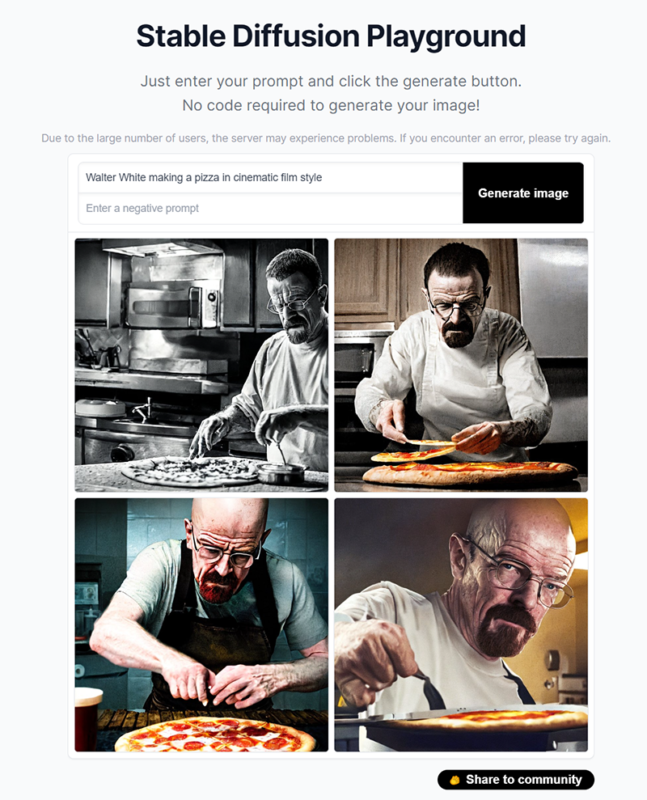

More recent tests in the Stable Diffusion Playground, using prompts I’ve used in other AI generators like DALL-E 2 and Midjourney, have produced better results than previous versions did:

It certainly does a better job with celebrity faces than DALL-E 2, and with some proper negative prompting, they could be refined much further!

But like all the AI generators out there, hands still have major problems! Even with some specific negative prompts, it’s a big fail.

What is a Negative Prompt in Stable Diffusion?

A negative prompt is an argument that instructs the Stable Diffusion model not to include certain things in the generated image. This powerful feature lets users steer objects, styles or abnormalities out of the generated image. Although Stable Diffusion takes its input as prompts written in human language, it has difficulty understanding negative words such as “no”, “not”, “except” and “without”. Hence, you need negative prompting to gain more control over your results.
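In common implementations (Hugging Face’s diffusers pipelines, for example), a negative prompt works through classifier-free guidance. At each denoising step, the model predicts the noise once with your prompt and once with the negative prompt (which takes the place of an empty prompt), and the final prediction is pushed away from the negative one: guided = negative + scale × (positive − negative). The sketch below shows only that arithmetic; the numbers are made-up predictions, and 7.5 is just a commonly used guidance scale:

```python
def guided_noise(pred_positive: list[float], pred_negative: list[float], scale: float = 7.5) -> list[float]:
    """Classifier-free guidance: move the noise prediction away from the negative
    prompt's prediction and toward the positive prompt's, amplified by `scale`."""
    return [neg + scale * (pos - neg) for pos, neg in zip(pred_positive, pred_negative)]


# Hypothetical per-element noise predictions at one denoising step
pos = [0.10, 0.40, -0.20]  # conditioned on the prompt
neg = [0.10, 0.00, -0.30]  # conditioned on the negative prompt
print(guided_noise(pos, neg))
```

Where the two predictions agree (the first value), guidance changes nothing; where they differ, the difference is amplified. That is how the image gets steered away from whatever the negative prompt describes, and also why it can’t fix something the model never learned to draw properly in the first place, like hands.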

This article and video from Samson Vowles of Delightful Design explains how negative prompts work to eliminate unwanted results in Stable Diffusion images. Sometimes they even work, but not on hands, obviously.

Another good resource for Stable Diffusion negative prompting is this post on Reddit. The pinned guide walks you through how Stable Diffusion works, how to install it on your own server and of course Negative Prompting.

You can test out Stable Diffusion in their Online Playground and discover what it might generate for you!

NOTE:

I will be digging much deeper and exploring more with all of these AI image generation tools in my next article – AI Tools Part 2.

—– —– —– —– —– —– —–

AI TTS Voice Over Generation Tools

Ever since I got a new Macintosh in the mid-’90s that had Text to Speech (TTS) and Voice Command capabilities, I’ve been enamored with synthesized speech and its development. We used to geek out in the AOL chat rooms about how to craft phonetic inflections that gave the voice more character, and even make it sing!

But really, very little development happened for at least a decade, and much of the technology’s functionality was removed in later versions of Mac OS. Yes, other synthesized voice apps and technologies have been developed over the years for commercial applications (Siri, Alexa, automotive navigation, etc.), but in general, synthesized voices from user-input text have always sounded robotic and unnatural. Pretty much the way image-generating AI can’t quite make “real” humans yet. (The hands, Chico, THE HANDS!!!)

There are still a lot of totally robotic-sounding AI TTS tools out there, and don’t get me started on those shitty TikTok videos with lame TTS! ACK!!

But there’s been much more excitement in this industry recently, and we are truly getting closer to some remarkable (and yet scary) realism in the results – much of which you’ve most likely seen and heard in various deepfake videos.

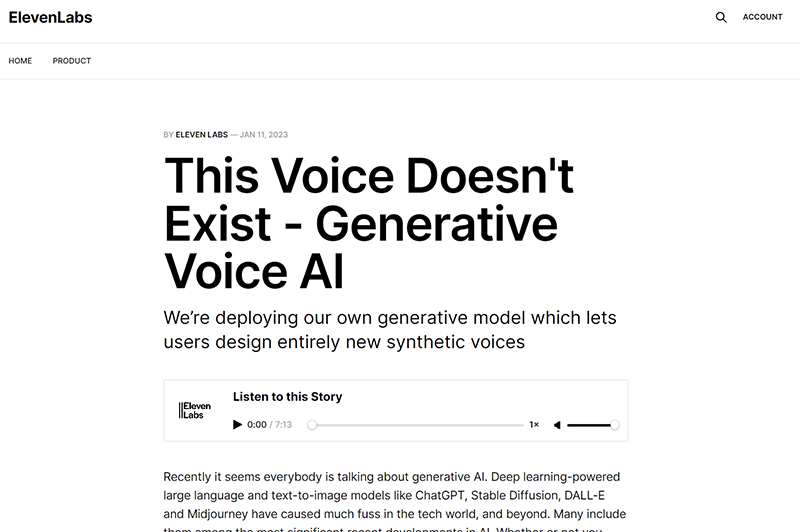

Eleven Labs

Probably the most impressive examples I’ve seen/heard yet are coming from a startup called Eleven Labs (https://blog.elevenlabs.io).

Not only do they generate an amazingly believable voiced reading of your input text in various styles and accents, but their technology is also expanding into dubbing and voice conversion.

Imagine being able to let software do the dubbing of your video or film to another language, retaining the emotions and inflections of the original voice actor. Or totally change the voice of someone onscreen to sound like someone else. Or even utilize your own voice to read copy without having to sit in the booth to record over and over and over.

I’ve requested beta access, so stay tuned for a subsequent article on this topic once I’ve had a chance to get under the hood.

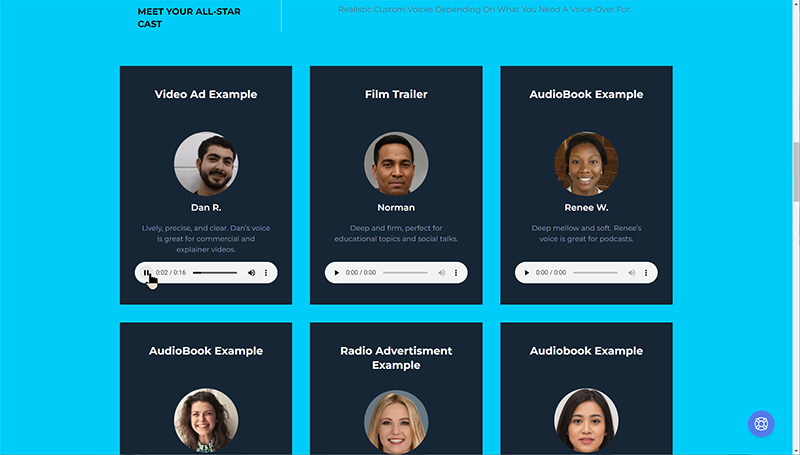

Synthesys.io

Synthesys is a pretty impressive TTS tool with realistic and convincing voices from text input, based on the examples on their website. They’re not cheap, though, and there don’t appear to be any trial versions to test, so I’m not able to share any detailed feedback. Check out this link and review the examples to see if it’s the right tool for you.

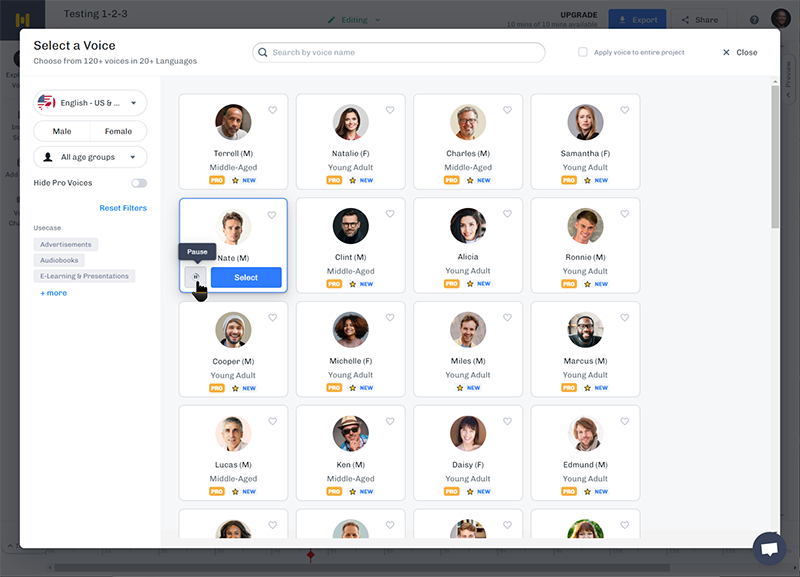

Murf.ai

Murf.ai is an online AI TTS tool that can take written text and turn it into human-sounding recordings. You can try it out for free.

There are many AI voice models to choose from, but like most TTS tools I’ve seen to date, some of the pronunciation and phrasing still sounds artificial. But it’s getting closer, and I’m sure it will only improve over time.

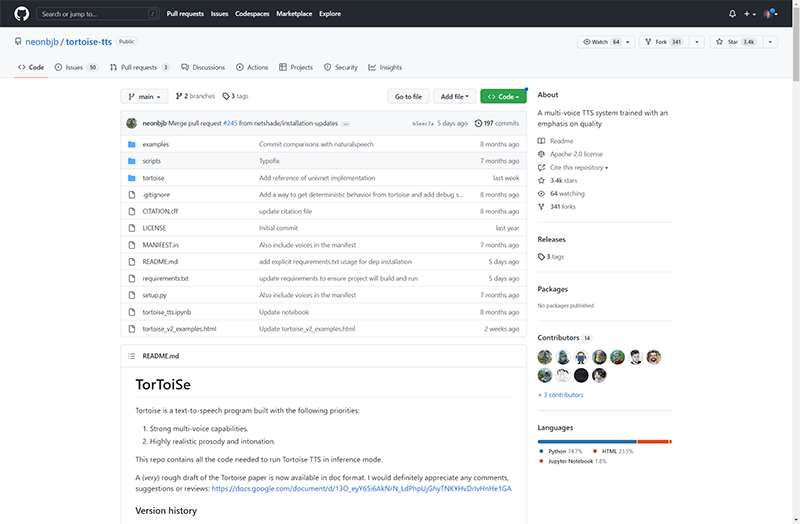

Tortoise-TTS

There are other tools in development, and if you’re a heavy-duty programmer/developer who wants to play with code and hardware, check out Tortoise-TTS. You can review some pretty impressive examples of cloning one’s own voice (or the voices of others, celebrities, etc.) on this examples page. Keep in mind, too, that this is still open-source technology.

Here’s a video tutorial that provides some insight as to what it takes, plus some examples. It’s chapter-driven, so open it up in YouTube to see the chapter markers to jump ahead.

NOTE:

This amazing leap in technology raises some hard questions. What are the ethical repercussions of using an A-list actor’s voice to speak your words? What will IP law look like once this rolls out commercially? We’ll explore this more deeply in a future article.

—— —– —– —– —– —– —–

AI Music Generation Tools

There appear to be myriad AI music apps and online tools available, so finding one that’s right for you may take a bit of exploring. I’m only sharing the few I’ve found most worth considering to date. My list may change as I dig deeper into the technology and its evolution.

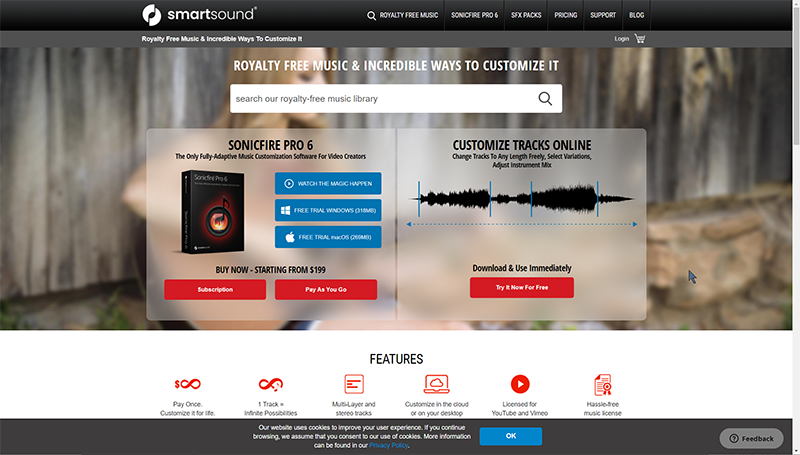

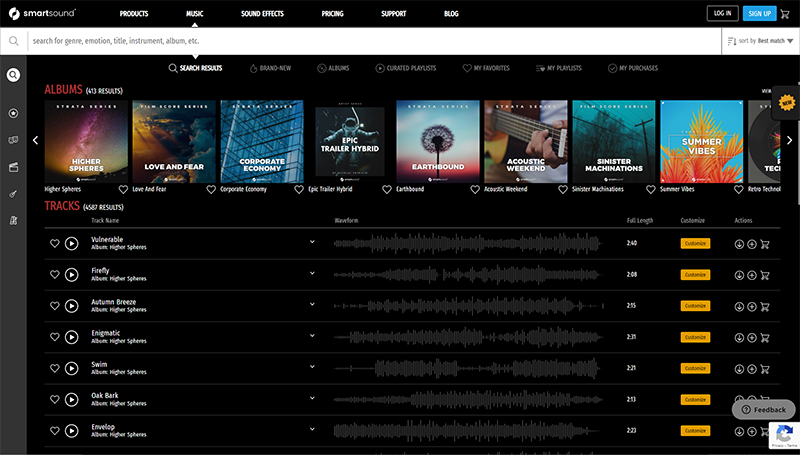

SmartSound

One of the oldest and most reliable custom music production tools, and one I’ve used for a couple of decades, is SmartSound. In the late ’90s they developed their desktop app, Sonicfire Pro, which uses prerecorded sub-tracks and real instruments to build amazing soundtrack compositions for your productions. While it’s technically not AI per se, the customizability and depth of what you can produce within their recorded libraries keep growing, and it’s a solid addition to your video production workflow. Many online AI music generation tools also seem to copy several of its editing and customization features. If you’re a serious producer, this is probably the next best thing to hiring a music director and recording your own scores.

The online version of SmartSound is full-featured and lets you work anywhere with an Internet connection: just log in to access the libraries and content you’ve purchased, which makes it great for editing on the road or from your office/home.

Their desktop software, Sonicfire Pro, allows some deep editing and exploration beyond the initially generated tracks, and it also lets you create and edit your downloaded library resources while offline.

SmartSound isn’t cheap, but it’s still the king of auto-generated, customizable music tracks that I’ve found to date. And because the instruments and resource tracks are actual recorded studio sessions, the quality is truly broadcast-worthy.

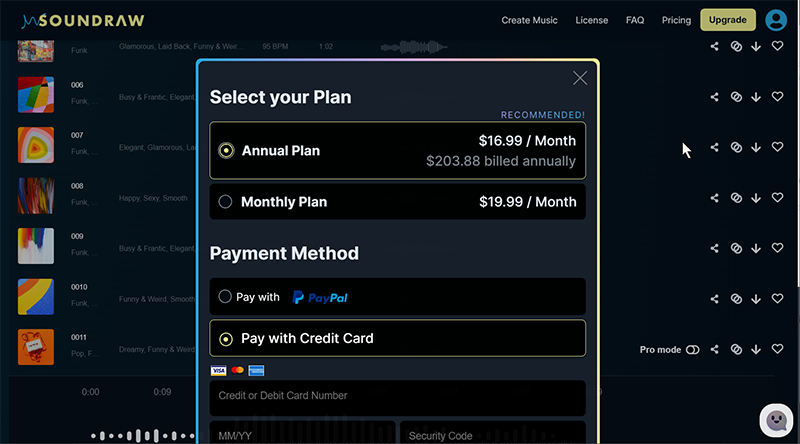

Soundraw

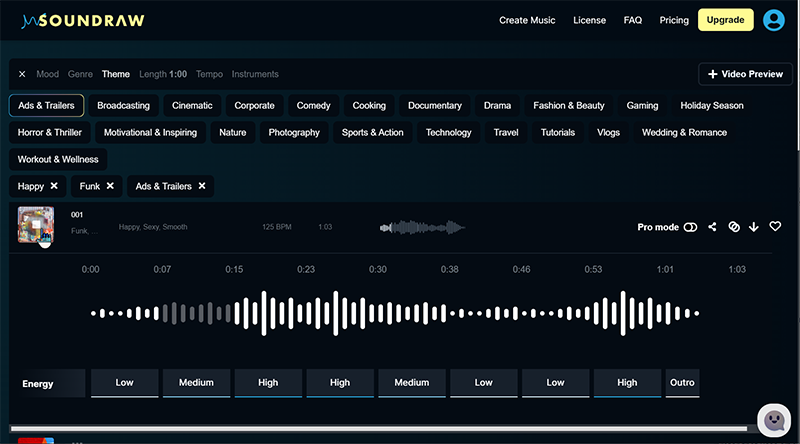

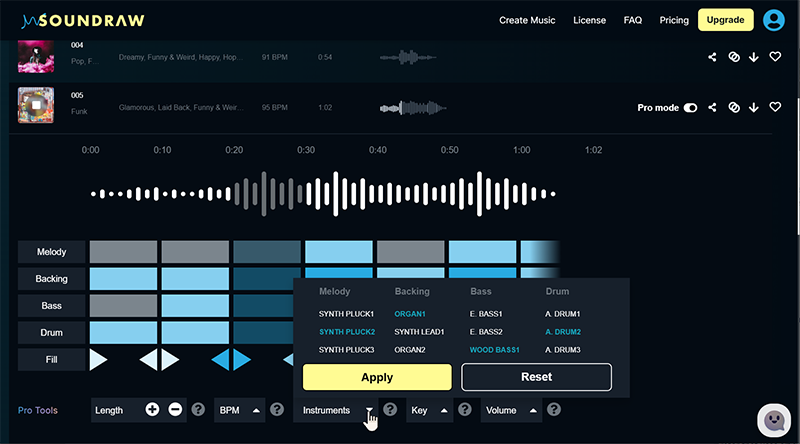

Soundraw is an online platform that allows users to create and edit music using AI technology. The platform offers a variety of tools and features such as a music generator, drum machine, and effects processor that can be used to compose and produce original tracks. Users can also upload their own samples and tracks to the platform for editing and manipulation. Additionally, the platform allows users to export their creations in various audio formats for use in other projects or for sharing online.

Creating a track can be as easy as defining the Mood, Genre, Theme, Length, Tempo and featured Instruments. Soundraw then provides you with dozens of options that you can download and use directly, or you can go to the online editor to further refine your tracks.

The online track editor allows you to make several changes to each section in the created track, adjusting various instruments, volumes, energy levels, track length, etc.

Pricing for Soundraw is a nominal commitment, and fortunately most of the tool’s features appear to be unlocked to explore in the free version until you want to download a track.

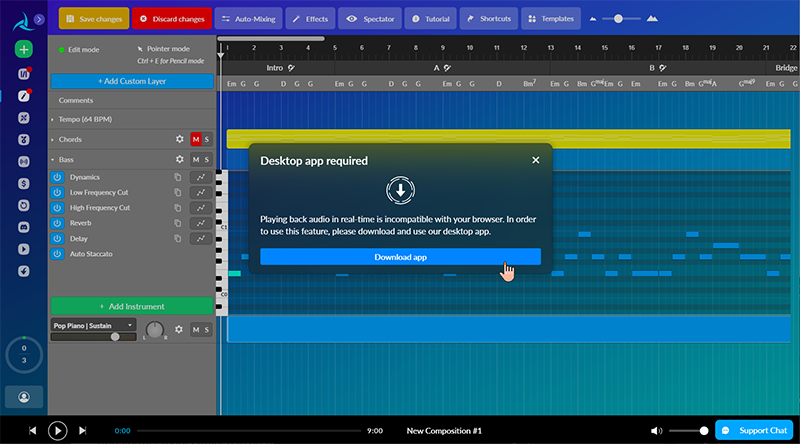

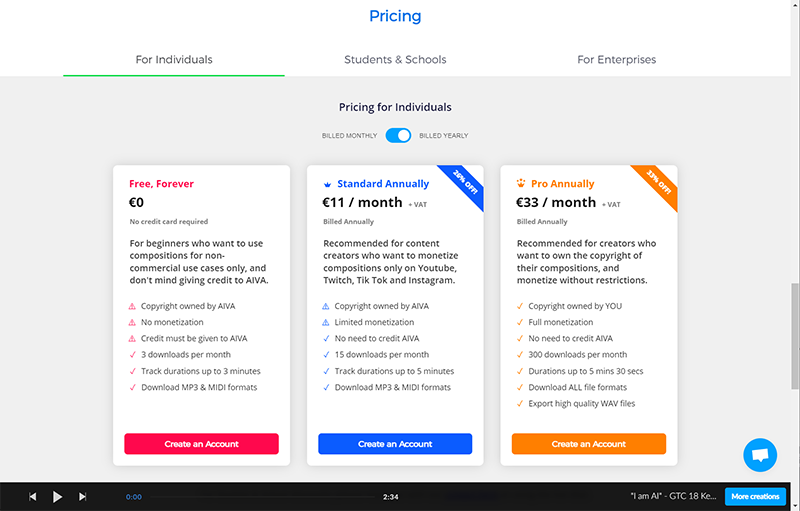

AIVA

AIVA (Artificial Intelligence Virtual Artist) is a music generator that uses artificial intelligence algorithms to compose original music. It can create various types of music, such as classical, electronic, and rock, and can also mimic the style of specific composers or genres. It can be used by musicians, filmmakers, game developers and other creators to generate music for their projects.

AIVA (Artificial Intelligence Virtual Artist) was created by a Luxembourg-based startup company of the same name, founded in 2016. The company’s goal was to develop an AI system that could compose music in a variety of styles and emulate the work of human composers. The company’s co-founder, Pierre Barreau, is a classically trained pianist and composer who wanted to use his background in music and AI to create an AI system that could generate high-quality, original music. The company launched its first product in 2016, which was a website that allowed users to generate short pieces of music based on their preferences. Since then, the company has continued to develop and improve its AI algorithms, and has released more advanced versions of its music generation software.

While the instrumentation is primarily MIDI-based, many of the arrangements have more complexity and variability than some of the simpler music generators I’ve found online.

There’s an editor that lets you see how the different instruments are programmed in the resulting track, but it requires a free desktop app that you can download directly. I’ve yet to install it, but will in my deep-dive article on audio AI tools.

The cost isn’t horrible, and the free version unlocks a lot of features so you can see how it works and learn from it before committing to a paid subscription, if you find it helps you generate soundtracks for your video productions.

NOTE:

I still have a lot to explore with these tools, and I’ll follow up in a subsequent article where I can dig deeper, see how far I can push them, and add customization and manual layering by recording my own instruments.

—– —– —– —– —– —– —–

AI Text Generation Tools

*NOTE: This segment was written almost completely by AI.

AI generative text models are computer programs that use artificial intelligence techniques to generate text. They are trained on large datasets of human-written text and use this training data to learn patterns and relationships between words and phrases. Once trained, the model can generate new text by predicting the next word in a sequence based on the patterns it has learned.
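To make “predicting the next word” concrete, here’s a deliberately tiny sketch: a bigram model that counts which word follows which in a training text, then extends a prompt by repeatedly picking the most frequent follower. Real models like GPT-3 use transformer networks over subword tokens with billions of parameters, so treat this only as an illustration of the predict-the-next-word loop; the training sentence and the function names are made up for the example.

```python
from collections import Counter, defaultdict
from typing import Dict


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    followers: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def generate(followers: Dict[str, Counter], prompt: str, max_words: int = 5) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = followers.get(words[-1])
        if not candidates:  # no known follower: stop early
            break
        # most_common breaks ties by first-seen order
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigrams(corpus)
print(generate(model, "the", max_words=3))
```

Greedy picking like this quickly loops or repeats itself, which is one reason real language models usually sample from their predicted distribution instead.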

There are several different types of AI generative text models, such as:

- Language models: These models are trained to predict the likelihood of a given sequence of words, and are often used to generate text by sampling from the model’s predicted distribution of words. GPT-2 and GPT-3 are examples of this type of model.

- Encoder-decoder models: These models consist of two parts: an encoder that takes in a source sequence and compresses it into an internal representation (in early models, a single fixed-length vector), and a decoder that uses that representation to generate a target sequence. These models are often used for tasks such as machine translation.

- Variational autoencoders (VAEs): These models are similar to encoder-decoder models, but they also learn a latent space from which new samples can be drawn. They have been explored for creative text generation tasks such as poetry and fiction writing.

All of these models are designed to generate coherent, fluent text, and they can be fine-tuned for specific tasks such as chatbots, summarization, and text completion.
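The language-model bullet above mentions generating text “by sampling from the model’s predicted distribution of words.” As a hedged illustration of what that means, the sketch below turns a handful of made-up scores (logits) for candidate next words into probabilities with a softmax, optionally scaled by a temperature, and then draws one word at random. The words, the scores, and the function names are illustrative assumptions; a real model scores tens of thousands of tokens at every step.

```python
import math
import random
from typing import Dict, Optional


def softmax(logits: Dict[str, float], temperature: float = 1.0) -> Dict[str, float]:
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {w: s / temperature for w, s in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - top) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def sample_next_word(logits: Dict[str, float], temperature: float = 1.0,
                     rng: Optional[random.Random] = None) -> str:
    """Draw one next word at random, weighted by its probability."""
    rng = rng or random.Random()
    probs = softmax(logits, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


# Hypothetical scores a model might assign after "The cat sat on the ..."
scores = {"mat": 3.0, "sofa": 2.0, "moon": 0.5}
print(softmax(scores))
print(sample_next_word(scores, temperature=0.7, rng=random.Random(42)))
```

Low temperatures make the most likely word win almost every time; higher ones let less likely words through, which is where much of the “creative” variety in generated text comes from.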

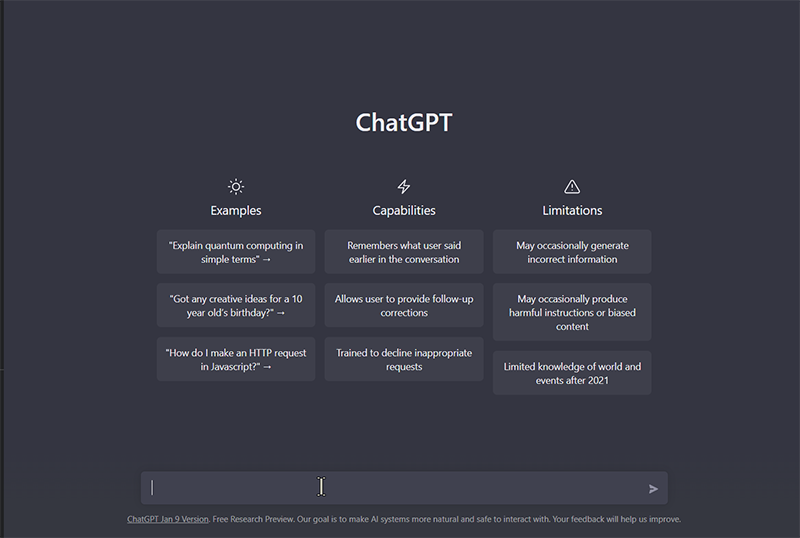

ChatGPT

ChatGPT is a GPT-3.5 language model developed by OpenAI that uses deep learning techniques to generate human-like text. It is based on the GPT (Generative Pre-trained Transformer) architecture, which is a type of transformer neural network. The model is trained on a massive dataset of text, such as books and articles, to learn patterns and relationships between words and phrases. When given a prompt or a starting text, ChatGPT generates text by predicting the next word in the sequence, based on the patterns it has learned from the training data. The model is able to generate coherent and fluent text that can be used for a variety of natural language processing tasks such as text completion, summarization, and conversation.

ChatGPT is a large language model that can generate a wide range of human-like text. Some examples of what ChatGPT can produce include:

- Text completion: Given an incomplete sentence or a prompt, ChatGPT can generate the missing words or phrases to complete the sentence.

- Summarization: Given a long piece of text, ChatGPT can generate a shorter summary that captures the main points.

- Conversation: ChatGPT is tuned specifically for dialogue, so it can answer questions and respond to prompts in a natural way.

- Language Translation: Given a text in one language, ChatGPT can generate a translation in another language.

- Text generation: ChatGPT can generate new text on a given topic, by sampling from the patterns it learned during training. This can be used for text generation tasks such as poetry and fiction writing.

- Sentiment Analysis: ChatGPT can be prompted to identify the sentiment or emotion of a given text.

These are just a few examples of what ChatGPT can produce; the underlying model can be adapted to a wide range of natural language processing tasks to produce more specific outputs.

You’ll notice that these screenshots show my initial prompt at the top and ChatGPT’s reply below. You’ll also be able to tell how much of this section’s text was generated here!

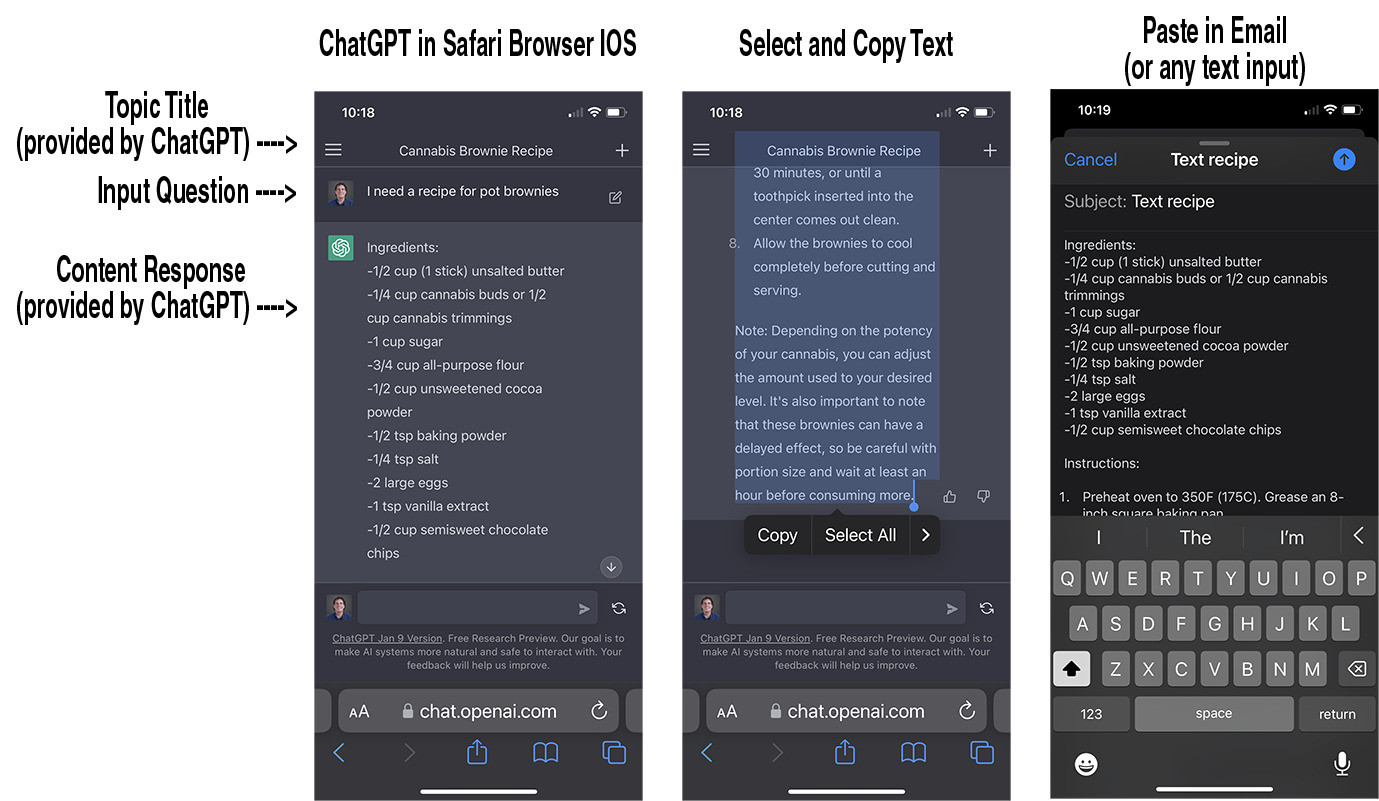

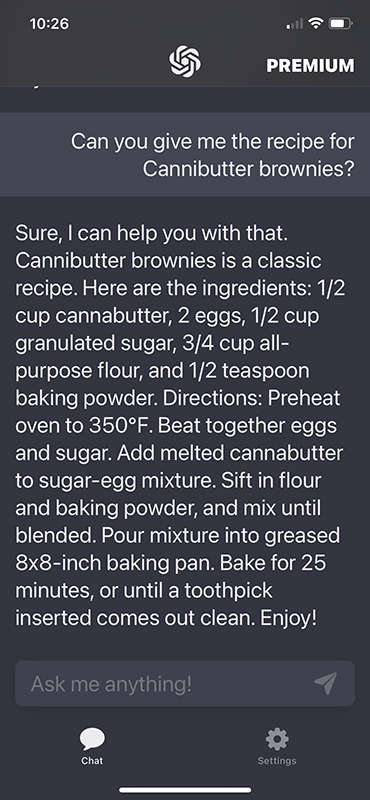

I’ve been using this on my iPhone as well – sometimes just to get a consolidated answer to a current events question. It’s like Google for text.

I’ve tried a couple of different ChatGPT-enabled iPhone apps, but they seem limited compared to just signing in to your account through your web browser, where you still have all the functionality and accessibility you’d have on your desktop.

In this example, I was able to give a simple input question, and ChatGPT provided the query title and the complete response, including formatting…

…as opposed to the ChatMe app, which took a lot more prompting to get an actual answer instead of stupid replies like “yes, I can give you that”! It was so frustrating that I removed it from my phone!

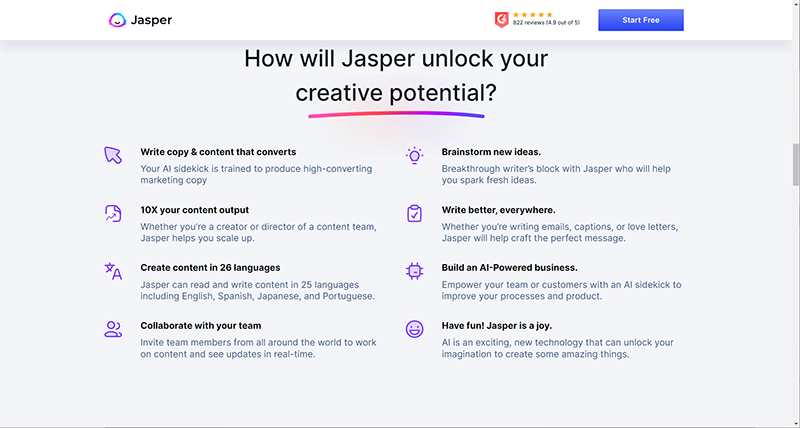

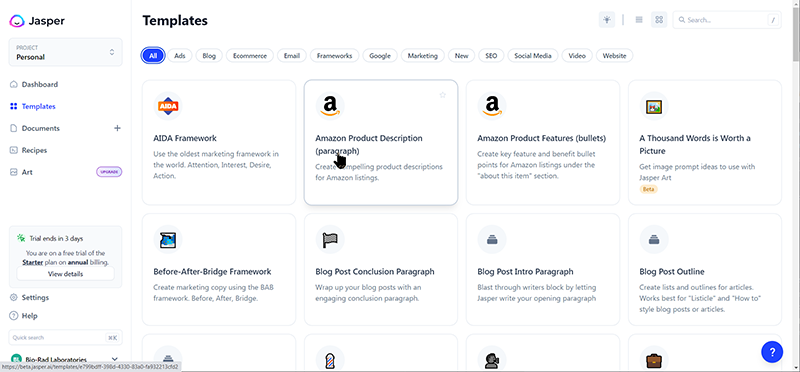

Jasper

Jasper is copywriting software that automatically creates written content using artificial intelligence and natural language processing. It shortens a writer’s research and drafting time, since it provides original content in just a few clicks. Jasper was formerly known as Jarvis, and before that it went by the name Conversion AI. And just as the name has changed frequently, the people behind it update the software almost every week.

Jasper AI employs GPT-3, the third generation of the Generative Pre-trained Transformer, for its artificial intelligence. GPT-3 has 175 billion machine learning parameters, which helps it generate large volumes of fluent text. Because Jasper runs on GPT-3, it is very strong at producing text-based content. To the untrained eye, text created by Jasper would pass as something written by a human.

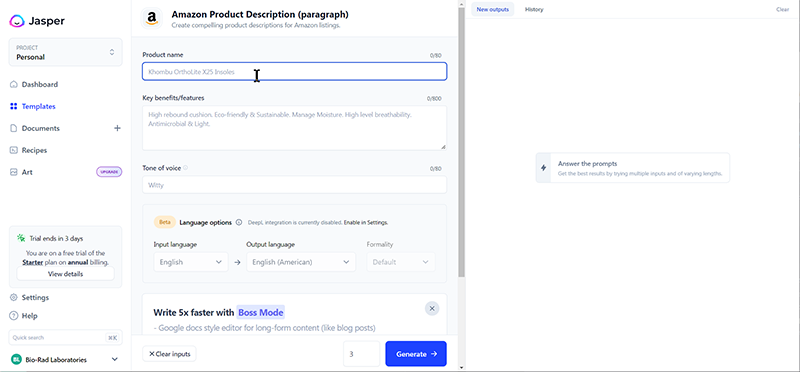

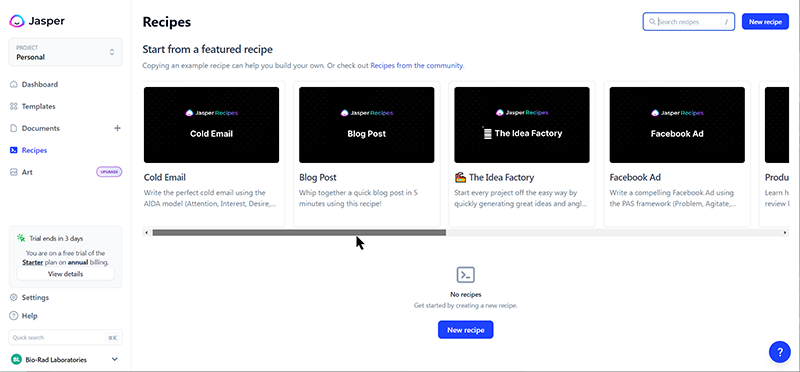

Jasper is a deep creative writing productivity tool with lots of templates and videos to get you started right away. There’s a 5-day free trial to explore and learn how useful it is and even the basic subscription allows up to 10 users on your account. Perfect for corporate marketing groups or small businesses and individuals alike.

In addition to over 50 individual templates for anything from an email to a blog post to an Amazon product ad or YouTube description, Jasper provides several “recipes” for complete ideas to produce from scratch.

Here’s a short marketing video they published earlier this month that gives you a quick look at how it works:

I’m looking forward to introducing this to our marketing team at my day gig (biotech marketing) to see how useful it may be to our writers, editors and marketers. I can see its usefulness instantly, but don’t confuse its capabilities with those of ChatGPT. Jasper has limited ability to create content entirely on its own, so don’t expect it to give you EVERYTHING without feeding it the key ingredients first. But it’s highly trainable and can produce any style of messaging from very little data. And it can provide translations as well!

NOTE:

I’m pretty sure that many apps are going to start implementing ChatGPT and GPT-3 technology under the hood in the coming year, so watch for it to pop up in your web editors and word processors soon. There are already a couple of Chrome extensions available, so I’ll explore how those work as well in my upcoming article on this segment.

—– —– —– —– —– —– —–

If AI technologies are combined, what will they produce?

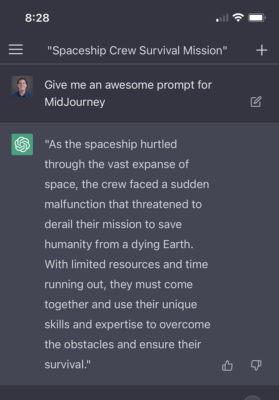

So I got to thinking one night while experimenting with ChatGPT and Midjourney AI on my iPhone: “What if I left it totally up to AI to create something original with no input from me? Not even a hint or suggestion!”

So I asked ChatGPT to “Give me an awesome prompt for Midjourney” and this was the response (even the “Theme” was generated by AI):

I copy/pasted the response directly into Midjourney on the Discord app and didn’t provide any further prompt directions, and it spit out these initial basic images:

Through some variations, regenerating the same prompt, and upscaling, I got a bunch of actually usable images, without any Photoshop!

You can bet that I’ll be doing more of this in the future, and then combining some of the other AI tools to help me tell a story. This is really a creativity jumpstarter!

What to expect in the coming months?

AI technology has certainly made its mark and will continue to be a presence in the digital industry. It’s no wonder that claims of AI being a fad were quickly squashed, as it has provided endless tools for production assistance and marketing analytics that have been vital to our creative processes. With the ability to customize AI to our needs and workflows, this technology looks like it’s here to stay. In my upcoming posts, I will dispel any apprehensions you may have about using AI and provide insight into specific types of artificial intelligence that can benefit you and your projects/marketing endeavors. So join me as we explore this fascinating world of intelligent machines! We’ll chart a course for productivity by diving deeper into ideas such as machine learning, neural networks and more, so stay tuned for more info, updates and articles. Let’s see what the future holds!

UPDATED: Here’s a more complete list of AI Tools that’s updated regularly.
