By the time I finish writing this article, most everything in it will be old news by the time you open it. That is just how fast AI technology is developing and it’s a bit exhausting – while incredibly exciting at the same time. In the past two weeks, we’ve seen the release of GPT4, Midjourney V5, Runway Gen2 and Adobe launched it’s Firefly AI beta.

What I set out to accomplish in January in AI Tools: Part 1 by providing our readers with updated information about emerging AI technologies and how they can be utilized in the creative content and video production industries – followed up in February with AI Tools: The List of relevant AI tools to watch, I’m attempting to do a little deeper dive into the turbulent waters and pull up a few pearls.

I see now, though, that moving forward, perhaps I need to get smaller digestible articles out quicker -perhaps in a different format. Like possibly weekly updates on the top tools, or more intense look at individual tools and resources.

I’m going to take a moment to share this video from Kirby Ferguson, producer of the Everything is a Remix series, about the state of AI in our industry and how it affects us and also helps us understand that these tools, are really a collection and culmination from that which we have created – and will only assist us in developing better content even quicker and more enjoyably. If we get on board with it, that is. Because like it or not, this train ain’t stoppin’ for nobody!

So buckle-up. We’re only just getting started…

Midjourney V5

Pricing: Free trial/$10mo (Basic)/$30mo (Standard)/$60mo (Pro)

I assume that since you are reading this article, you will have heard the name “Midjourney” and the images shared globally from this AI image generator. If you want a little more background, check out my article in January, AI Tools: Part 1 – Why We Need Them and get caught up.

Where it Started – How its Going

When I first started messing around with the first open beta back in July 2022, we had no idea what to expect or what kind of results we might get with the simple prompts we were inputting in the Discord server. It was literally the wild west and the craziest images were coming out that sometimes resembled what we were asking of it but often times not. People often had three eyes and distorted figures and objects looked like you were viewing through shattered glass.

As the software evolved, the images lowly got better. Faces were intact with only two eyes, but rarely was anything photorealistic.

For an example, this was one of my very first prompts – something as absurd and outrageous as I could throw at it:

“Uma Thurman making a sandwich Tarentino style”

The max size output was 512 pixels. Rarely did we ever get hands at all – usually just distorted nubs. Then slowly month after month, we were getting more images with the correct number of limbs and facial features but we still had too many fingers on the hands. Lots of fingers. And too many teeth.

Enter the recent release of V5 and so much has jumped even further in only a couple months. More often than not, we’re getting better proportions and the correct number of fingers and teeth and arms and legs. Not always, mind you, but the scales have definitely tipped in our favor.

So tonight as a test, I entered the exact same prompt with V5, “Uma Thurman making a sandwich Tarentino style”

What a difference 8 months can be, in the AI development timeline! Even though this is still a wacky composition, they look more realistic than ever before. And these were just super simple prompts entered on my iPhone. (actually, about 90% of my experimental prompts are made on the Discord app on my iPhone. I get a strange thought or concept and enter it before I forget or just want a quick image to see what it looks like. The more serious stuff I create is usually work-related and testing to see if I can get better results with more advanced prompting.

But not all results from V5 are great though. I started a series of crazy images with simple prompts for “People of Walmart” early on and they were really cartoonish and ridiculous. They’re still ridiculous now, but some details are better than ever before. I’m not sure why it put people inside the shopping carts, but hey – it IS Walmart after all!

The magic is in the prompt

An area I’ve yet to truly explore, due to time commitments of my day job and also working on a feature doc in the evenings and weekends, is more advanced prompting and negative prompting to develop deeply engaging and more accurate images. I see people in many of the AI Art groups sharing some incredible imagery from hours and hours of “prompt engineering”. Much trial and error comes before multiple re-renders and refinements come around to produce amazing works.

I dabbled in some ChatGPT and Midjourney prompting awhile back, but I ran into this amazing video series on YouTube from Kris at All About AI that has me intrigued into thinking of ways to train ChatGPT to be my prompt-generating partner and getting into developing rich AI generated images. Even if you scrub through this video until you see his results, it’s totally worth a look as a passive explorer. For me, it excites me to dig deeper and I really can’t wait to share my results with you in the coming weeks/months!

Using a photo as a reference in Midjourney V5

I played around a bit in earlier versions of Midjourney using an image as a reference prompt to build on and the results were… meh.

But now with V5, I’m quite impressed with the results and it’s actually fun doing creative things with example images and illustrations as a seed source.

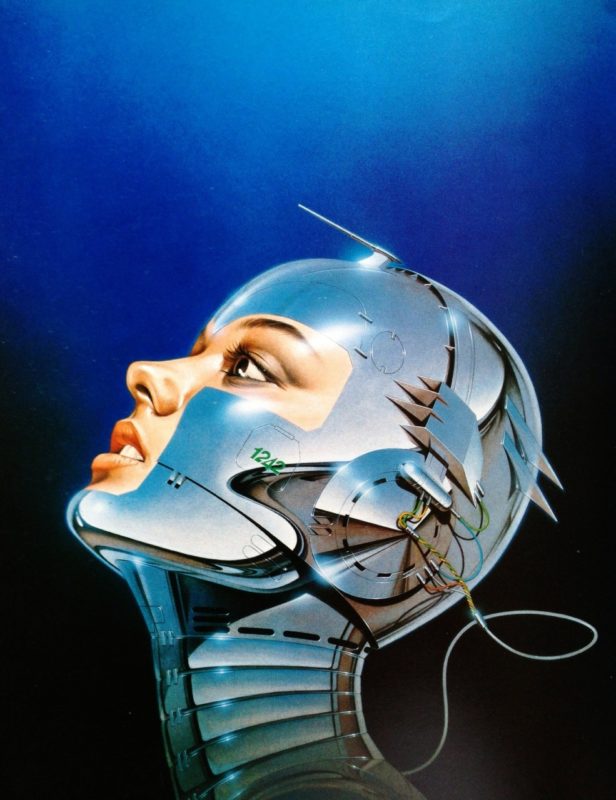

I started with this image from one of my inspirational airbrush artists in the 1980’s Hajime Sorayama’s “Sexy Robot” as my seed image source:

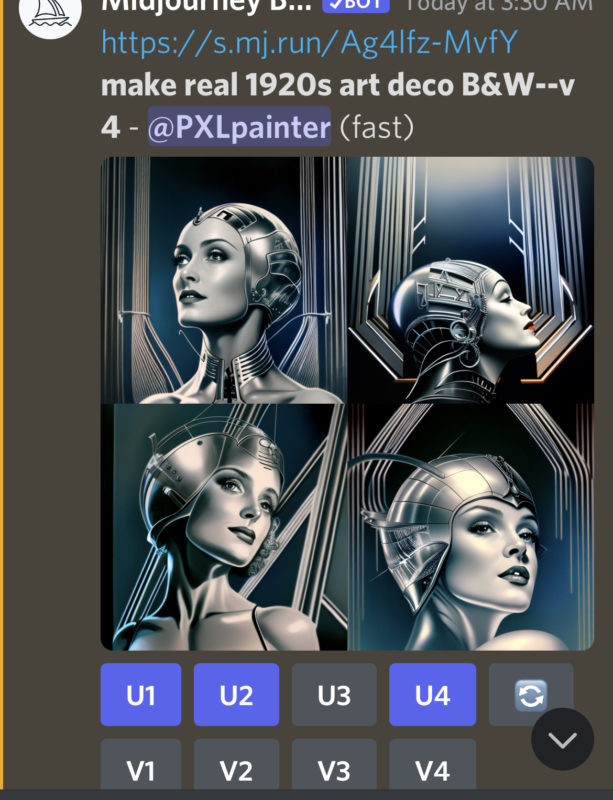

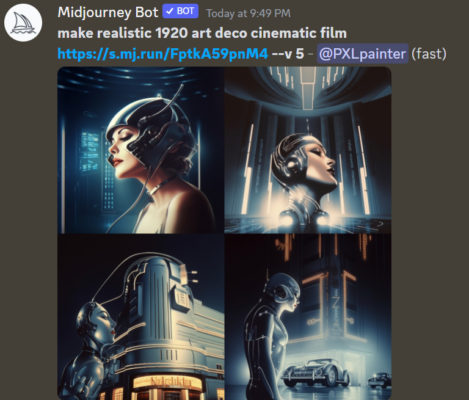

I then added my prompt for a realistic 1920 art deco cinematic image in color and B&W and got these results:

Without any further prompting I am thrilled with the results and could imagine going further down this rabbit hole and combining results in composites in Photoshop someday.

Here’s a quick tutorial on how to use an image as a reference seed source for Midjourney:

So let’s give each other a high-five and let the dog smell your hand!

Adobe Firefly beta

Pricing: Free (public beta – upon request)

Firefly is the new family of creative generative AI models coming to Adobe products, focusing initially on image and text effect generation. Firefly will offer new ways to ideate, create, and communicate while significantly improving creative workflows. Firefly is the natural extension of the technology Adobe has produced over the past 40 years, driven by the belief that people should be empowered to bring their ideas into the world precisely as they imagine them.

You can get access to Adobe Firefly beta from the website and by clicking the Request Access button at the top of the screen and following the instructions. If needed, disable any popup blockers before clicking or tapping the “Request access” button.

Firefly can be accessed on the web and supports Chrome, Safari, and Edge browsers on the desktop. We do not currently support tablets or mobile devices. You can use the download button or the copy button in the top right corner of the image.

Adobe has great plans for incorporating this technology throughout their suite of tools eventually – like text-to-image generation, image inpainting/outpainting (background fills and extensions), text-to-vector/brush/pattern/template generation, sketch-to-image illustrations, 3D to image generation, and image enhancements just for starters.

However, in this public beta, only limited text-to-image generation and type effects are available. That will soon be expanded, but check out this video to see what Adobe’s vision is for our future:

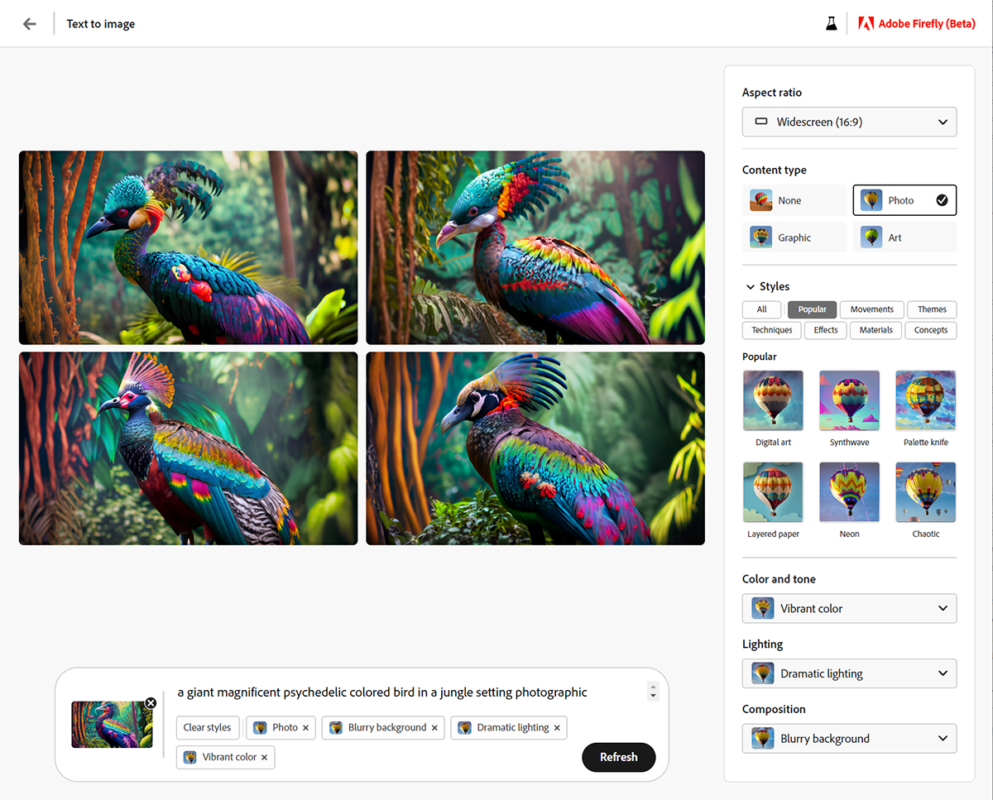

Text to Image

Users can create beautiful images by using a text prompt or by selecting from a list of appealing styles or inspiration.

How do styles work?

Firefly has a number of unique styles that create images with specific characteristics or visual treatment. If there are more styles you would like to see, please let us know using Discord or the Adobe Community forum. When applying a style or another setting from the properties panel, Firefly will generate new images that combine the original scene description with the added styles. They will be like running new prompts from scratch, as we’re trying to keep the images consistent between styles.

Why does Firefly generate totally new images when you change the aspect ratio in the text-to-image beta?

Aspect ratio (the relationship between height and width in an image) is one of the pieces of data the Firefly training model takes into consideration when generating images. Firefly will regenerate images when you change the aspect ratio to produce images with the best possible composition and overall quality.

As anyone who knows me can attest to, I have a love/hate relationship with food – so naturally I’m obsessed with it. I’ve used food examples in my books and training since the early 90s – and it’s always something that people can relate to, so why not?

Kids also love their food. And while food and fingers are getting better in AI generation, there’s still a few “oopsie” details, but OMG how far have we come in mere months?! These results are also simple prompts: “young child enjoying their [orange juice/spaghetti] at the kitchen table.” The kid with his face in his plate would be me. And not just as a child!

Dogs take their food seriously as well…

And dog racing is more fun in AI too!

At my day gig, I lead a dept. creating more serious content for a biotech company, so we’re always in need of images of scientists in a bio lab for marketing and messaging. We buy some stock photos at times but they’re all so overused and not a good selection of options to meet our specific needs, so we often have to get some of our own folks to take time out of their day to strap on a lab coat and pose for a few dozen images.

I’ve tried earlier versions of DALL-E 2 and Midjourney with limited success way back in January (LOL) as shown in some examples in my Part 1 article. I tried a very simple text prompt in Firefly: “portrait of a [mature/young] scientist in a lab coat in a biotech lab” and was pleasantly surprised at the results as I kept going and regenerating new images. Adobe not only draws upon it’s own licensed content library for image resources, but it also appears to be diverse in its output. I got a lovely mix of people of all races and genders and they look quite fabulous too!

While these are just simple generic tests during beta (only non-commercial use in this program) I can see this being a real plus once it’s integrated into Photoshop and we can outpaint a lab around them and include other elements such as the equipment we manufacture.

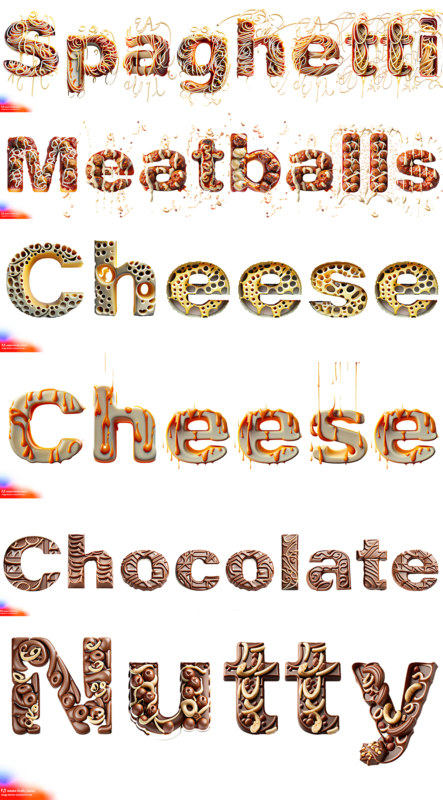

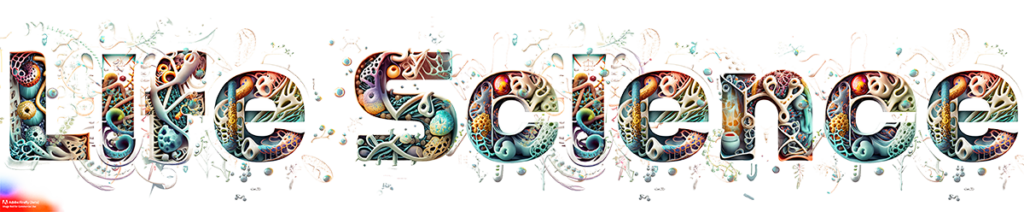

Text Effects

Users can edit a single line of text (up to 20 characters) and stylize it with a text prompt or by selecting from a list of appealing styles or inspiration. Set the background color to transparent, then use the download button to preserve transparency. Alternatively, copy the image directly to your clipboard, then paste it into applications like Photoshop and Express. Text effects are meant to produce a variety of appearances. In the future we’ll investigate offering options for producing more uniform output.

While it’s still quite rudimentary in the options of type and how it applies the imagery to the letters, I can definitely see where this can go in time… and again with the food obsession… BUT – go ahead and download this image if you’d like to try it over other backgrounds to see the transparency in the PNG files that were automatically generated from Firefly.

And of course, a non food example for the industry I work in. My only prompt for this was “Life Science” and it generated lots of great cells and genomes and more in this texture.

A few things you should know going into this beta:

Is Firefly English-only?

Currently, Firefly only supports English prompts, but we expect to expand support to additional languages. You can make requests for language support via the Adobe Community forum (once your access request is approved) or Discord.

Firefly’s data

We train Firefly using Adobe Stock and other diverse image datasets which have been carefully curated to mitigate against harmful or biased content while also respecting artist’s ownership and intellectual property rights.

Limitations:

Firefly does not currently support upload or export of video content.

You can’t use Firefly to edit or iterate on your own artwork at this time. Firefly has been developed using content available for commercial use, including content from Adobe Stock.

NOTE: Firefly is for non-commercial use only while in beta.

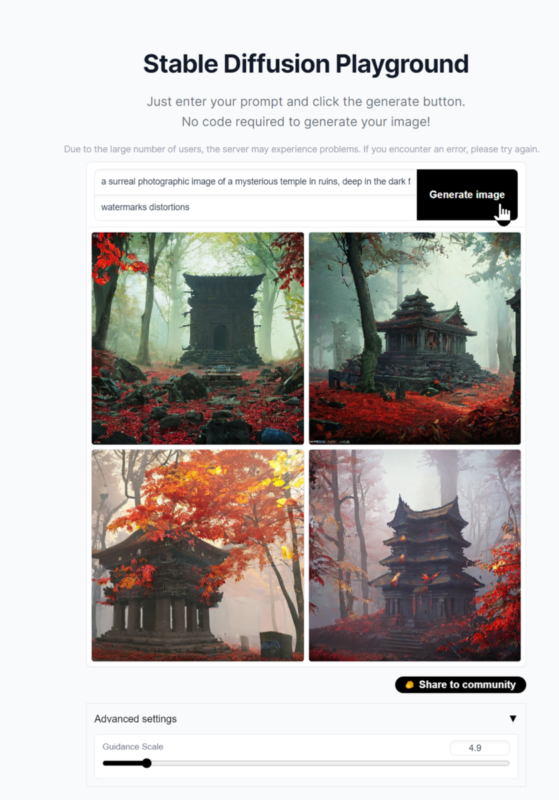

Stable Diffusion in Motion

Pricing: Free/Open Source

“Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input, cultivates autonomous freedom to produce incredible imagery, empowers billions of people to create stunning art within seconds.”

Well, that’s what it says online anyway. It’s really much more complex – and the stability AI models are under the hood of several developers’ AI generation tools.

Well, that’s what it says online anyway. It’s really much more complex – and the stability AI models are under the hood of several developers’ AI generation tools.

The web portals like Stable Diffusion Playground and DreamStudio beta allow us mere mortals to generate images with simple text prompts.

There are several portals and tools for generating better prompts and negative prompts (called prompt engineering) such as Stable Diffusion Prompts, the Stable Diffusion Art prompt guide, getimg.ai guide to negative prompting, and a paid tool for all AI prompt enhancements, PromptPerfect.

But many advanced users are installing it on their local computers and running their own training models and mixing with other tools like Deforum and ControlNet to generate moving pictures and animated productions.

One such power user is our friend and colleague, Dr Jason Martineau – an amazing artist, photographer, pianist and composer. Not to overlook his background in computer science and instructor at the Academy of Art University in San Francisco.

His YouTube channel is filled with beautiful work – all compositions and imagery are of his own creation and imagination. I’m going to share just a few of my favorite short compositions/animations created in AI from his imaginative input and sources. Once an animation is completed he takes his inspiration to composing various soundtracks to complete each experience. Most of these are around a minute long. Enjoy.

Within the past couple weeks, a new extension for Stable Diffusion called ControlNet has been making a lot of buzz in the AI community. This uses video as a source and and then further smoothing with EBSynth to smooth out the results. It’s all a bit over my head for now, but for those out there who want to dig into this technology, this video from Vladmir Chopine will guide you through the process.

And even more info on gaining image consistency with ControlNet and Stable Diffusion in this tutorial:

Runway Gen1/Gen2

Pricing: Free/$12mo (Standard)/$28mo Pro plus Enterprise programs

This is more of a trial/overview of features than it is a deep dive right now. I will need to load up on quarters to ride this adventure as the meter runs out pretty quick while running lots of tests, but my next part in this series will most likely go much deeper and get some advanced examples to try. It is by far one of the most impressive AI online tool systems I’ve seen to date – and it has intuitive user interfaces as well.

From my exploration and testing of a few of the tools in this portal are really impressive. Not perfect (yet) but impressive for a cloud-based multi-modal AI processing service. It’s probably because it’s still so new (basically evolving beta) but from the few projects I’ve tried to test so far, it’s pretty slow. I think some processes crashed and there doesn’t appear to be any way to recover the process or get your paid credits back. Perhaps the site is just overloaded and suffering growing pains – which is a good thing for a startup technology, so I’m not really going to judge just yet.

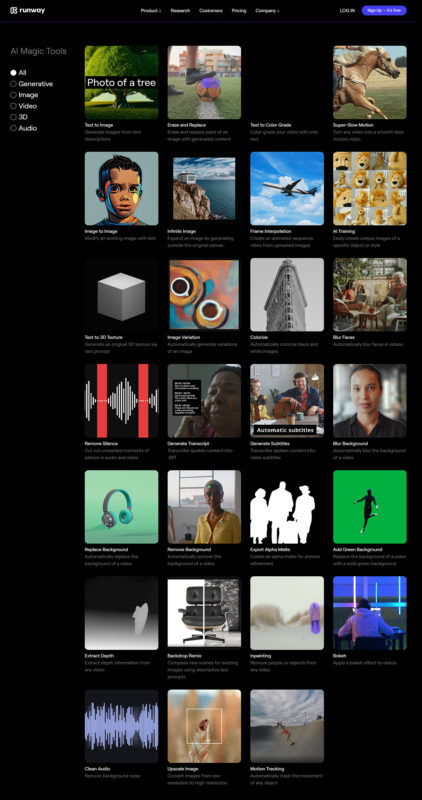

So what IS Runway?

It might be easier to list what it isn’t. There are dozens of “AI Magic tools” in this portal specifically for video editors, animators and content creators and anyone who just wants to make cool stuff!

-

Text to Image

Text to Image- Erase & Replace (Photo or AI Generated Image)

- Text to Color Grade

- Super-Slow Motion (Video)

- Image to Image Modification

- Infinite Image (Outpainting)

- Frame Interpolation (Video)

- AI Training

- Text to 3D Texture

- Image Variation

- Colorize

- Blue Faces (Video)

- Remove Silence (Audio)

- Remove Background (Video)

- Export Alpha Matte (Video)

- Add Green Background (Video)

- Extract Depth Map (Video)

- Backdrop Remix

- Inpainting (Object Removal – Video)

- Bokeh (Video)

- Clean Audio

- Upscale Image

- Motion Tracking

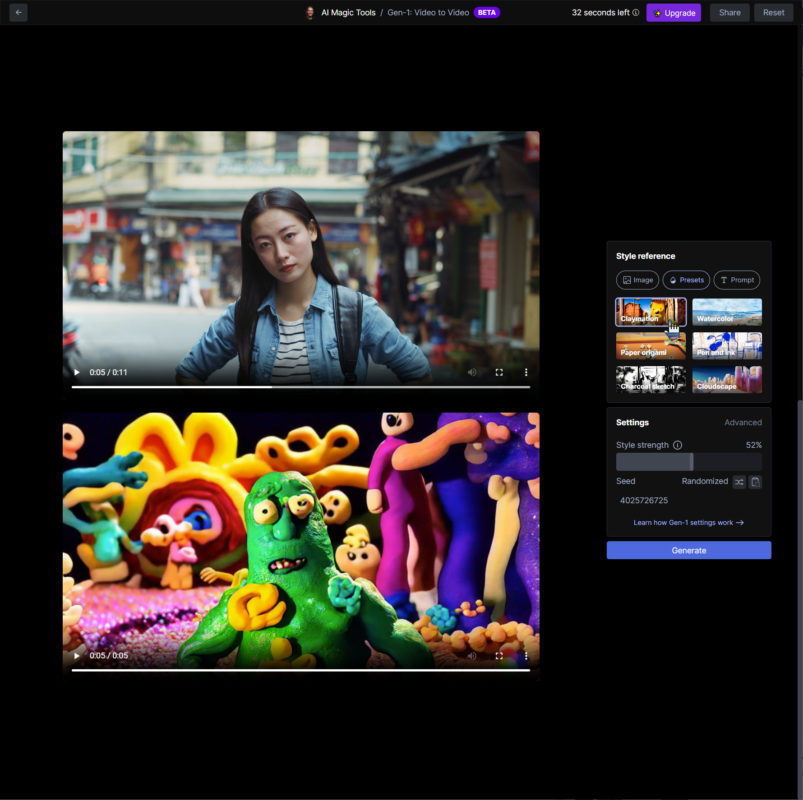

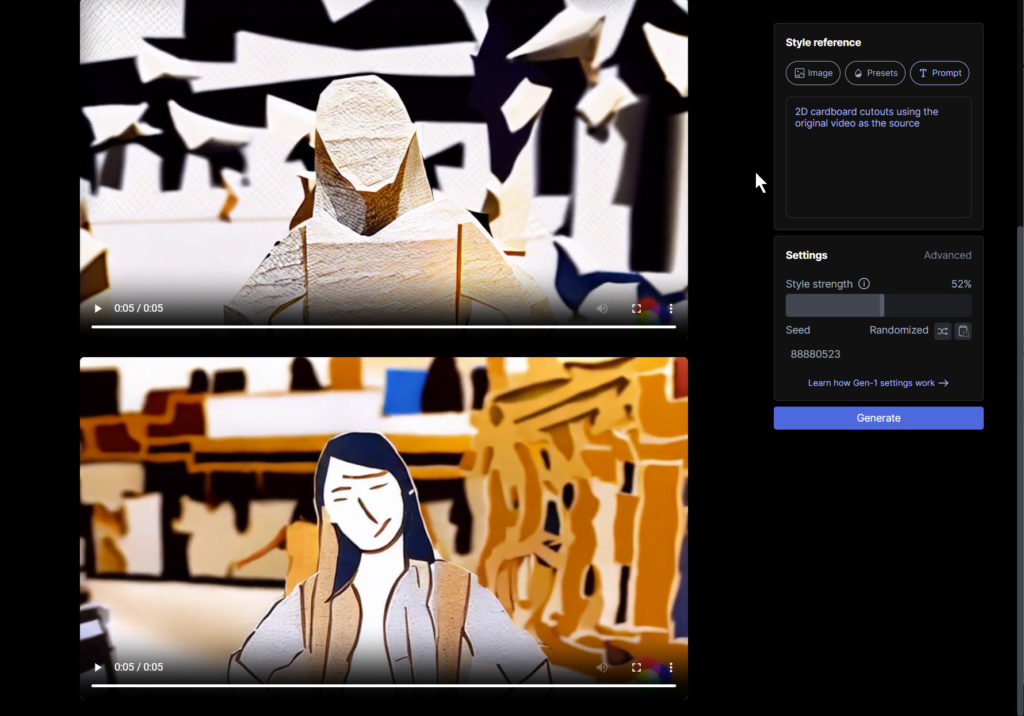

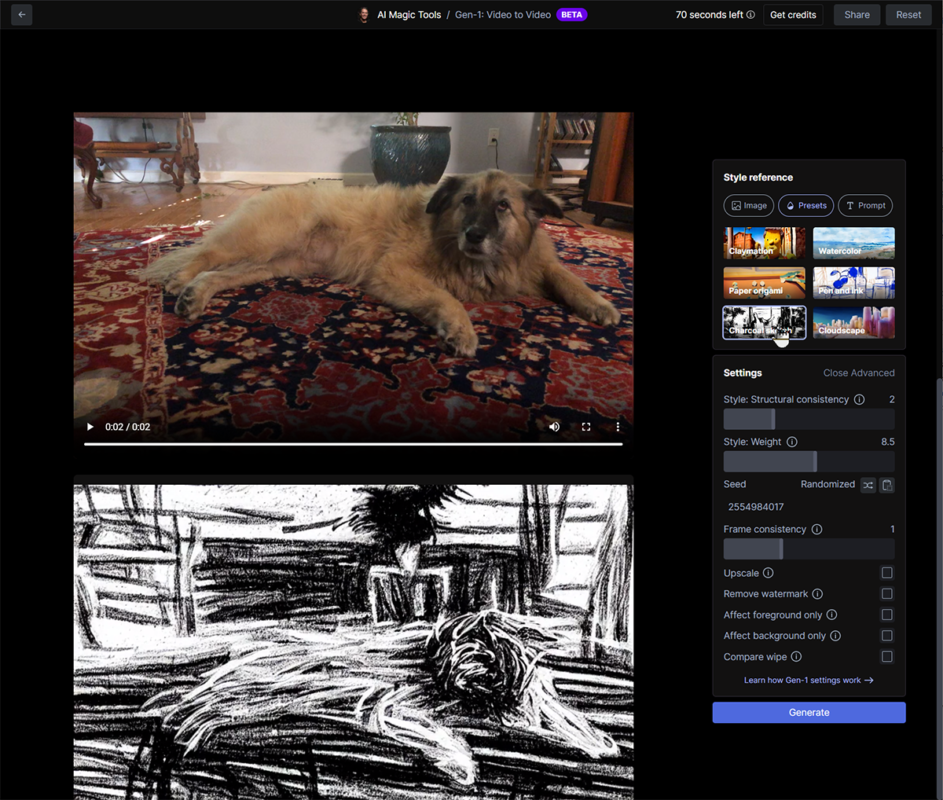

Just a couple tests I was able to get through this round, playing with the Video to Video (beta) generator. Currently it only creates 5 second passes but you can see how interesting the results can be when you really dig in.

I tried a single clip from their demo resources and applied the simple presets in the panel just to see what they do. Pretty crazy but cool!

The results are a bit crude and somewhat unexpected, but can obviously be refined with some tweaking – but still fun to be surprised at some of these looks from presets and a couple text prompts:

I tried a short clip of our dog yawning to mess with the advanced settings a bit to see how to refine the effect so it looks more like the original video clip but filtered. Again – this was just modifying the preset. I’m looking forward to using more refined Text prompts for more detailed results in the near future.

Obviously, several early adopters have been busy generating some amazing work using Runway already at the first AI Film Festival this year – and you MUST check out these winning works!

But wait… there’s more!

Runway Research Gen 2

https://research.runwayml.com/gen2

Merely weeks later, further developments are making this technology even better and more powerful… I told you it was going to be hard to keep up with this! Gen-2 looks quite promising indeed, and I’ll be really digging into Runway in the coming weeks as well!

ElevenLabs

Pricing: Free/$5mo (Starter)/$22mo (Creator)/$99mo (Indie Publisher)/$330mo (Business) and Enterprise plans

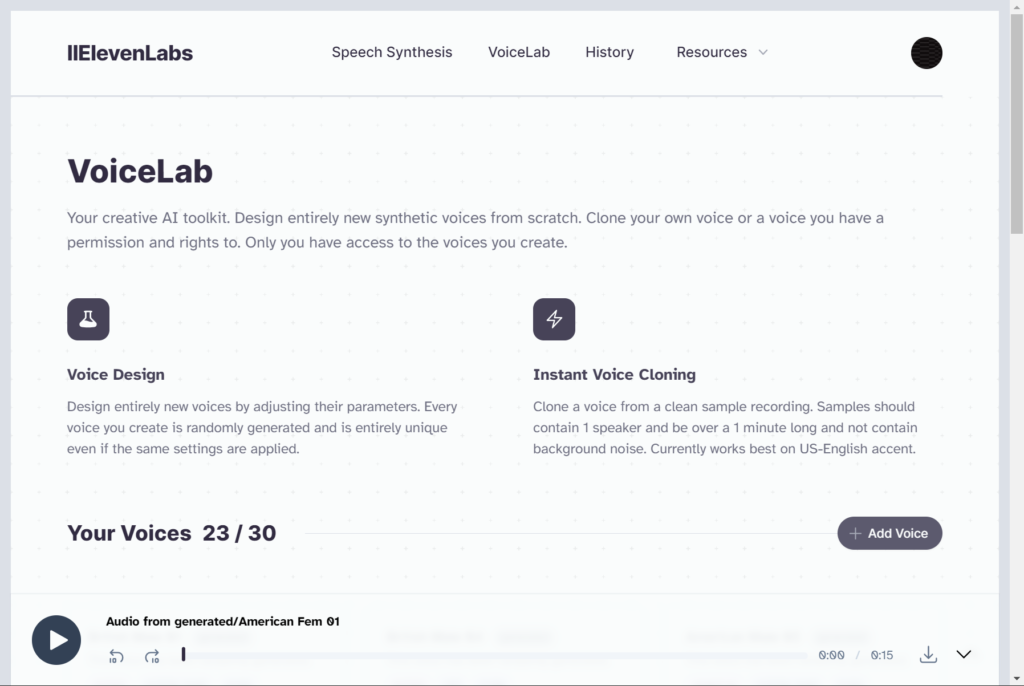

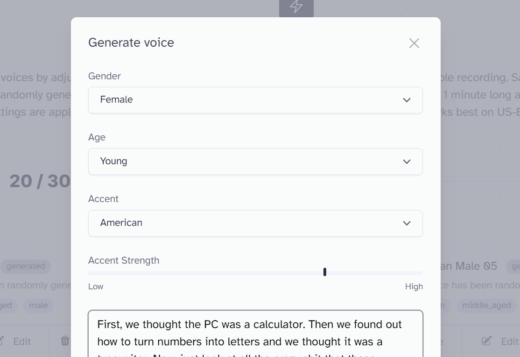

We’re getting so much closer to really good, useable TTS (Text To Speech) with ElevenLabs. Still waiting for more controls for voice pitch and rate and customizable tools like inflections, etc., but it’s so good already that you probably won’t even know what you’re hearing isn’t live recorded in most cases.

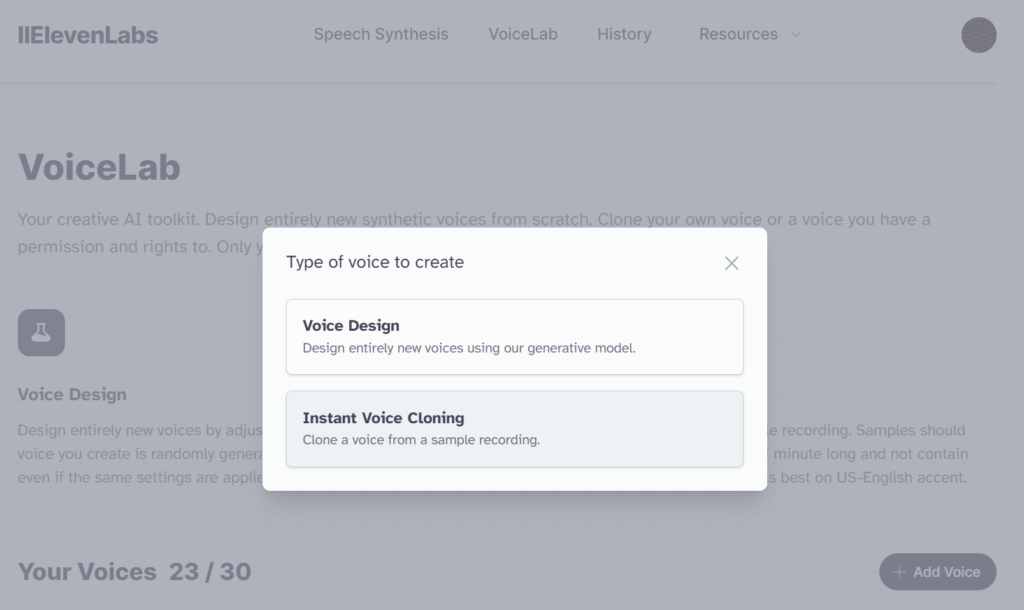

Unlike other TTS tools, ElevenLabs allows you to either generate your own synthesized voices or clone (legally & ethically) a recorded voice and modify it. They do have some preset voices generated ready to use when you’re testing or don’t want to create you own.

In the following examples, I share how to both design a new voice for your personal library, or create a clone from a recorded sample. Playing with the Accent Strength slider can vary the results quite dramatically, so sometimes you can generate several voice from one seed.

In the following examples, I share how to both design a new voice for your personal library, or create a clone from a recorded sample. Playing with the Accent Strength slider can vary the results quite dramatically, so sometimes you can generate several voice from one seed.

In these examples I used video clips from YouTube for examples of a couple voices I cloned, but note that you need to agree to having permissions to use the cloned samples. I’ve found that the cloned voices don’t necessary sound EXACTLY like the person – especially if you’re familiar with their voice, like a famous actor or your family or colleague.

To give you a good example of several generated voices and dialects, I’ve created a video showcasing about 9 different voices I’ve generated. I started with a VO script I made in ChatGPT with the direction of a short home cooking show on baking a chocolate cake. Some things needed to be re-phrased phonetically because fractions, like 1/2 is one half so it’s pronounced correctly.

Adjusting the Voice Settings is key to a good, natural result. The default almost always sounds robotic in my opinion. I tend to lower the Stability to under 25 and the Clarity + Similarity all across the board until it sounds right. I’m not sure how/why these terms were used in the software UI, but they’re not intuitive – you really have to just play with the controls until it feels the most natural and sometimes that’s only 1 degree one way or another.

Once there’s and updated version with more controls available, I can append this section.

I can’t even begin to tell you what’s coming up in April yet. So much to unpack from March still so I’ll be tracking updates to these popular tools of course and I might start breaking down some of the biggest tools into their own articles.

Be sure to keep up with “The List” as I try to update it weekly with new tools that emerge!

Filmtools

Filmmakers go-to destination for pre-production, production & post production equipment!

Shop Now