Here we are at the end of August 2023. So much is happening so fast in Generative AI development that I obviously can’t keep up with everything, but I will do my best to give you the latest updates on the most impressive AI tools and techniques I’ve had a chance to take a good look at, starting with this fun example made with ElevenLabs AI TTS and a HeyGen AI animated avatar template:

While the animated avatar is “fun,” it obviously still has a ways to go before it serves any real application for video or film purposes, but see the first segment below for a peek into the near future of just how realistic they’re going to be!

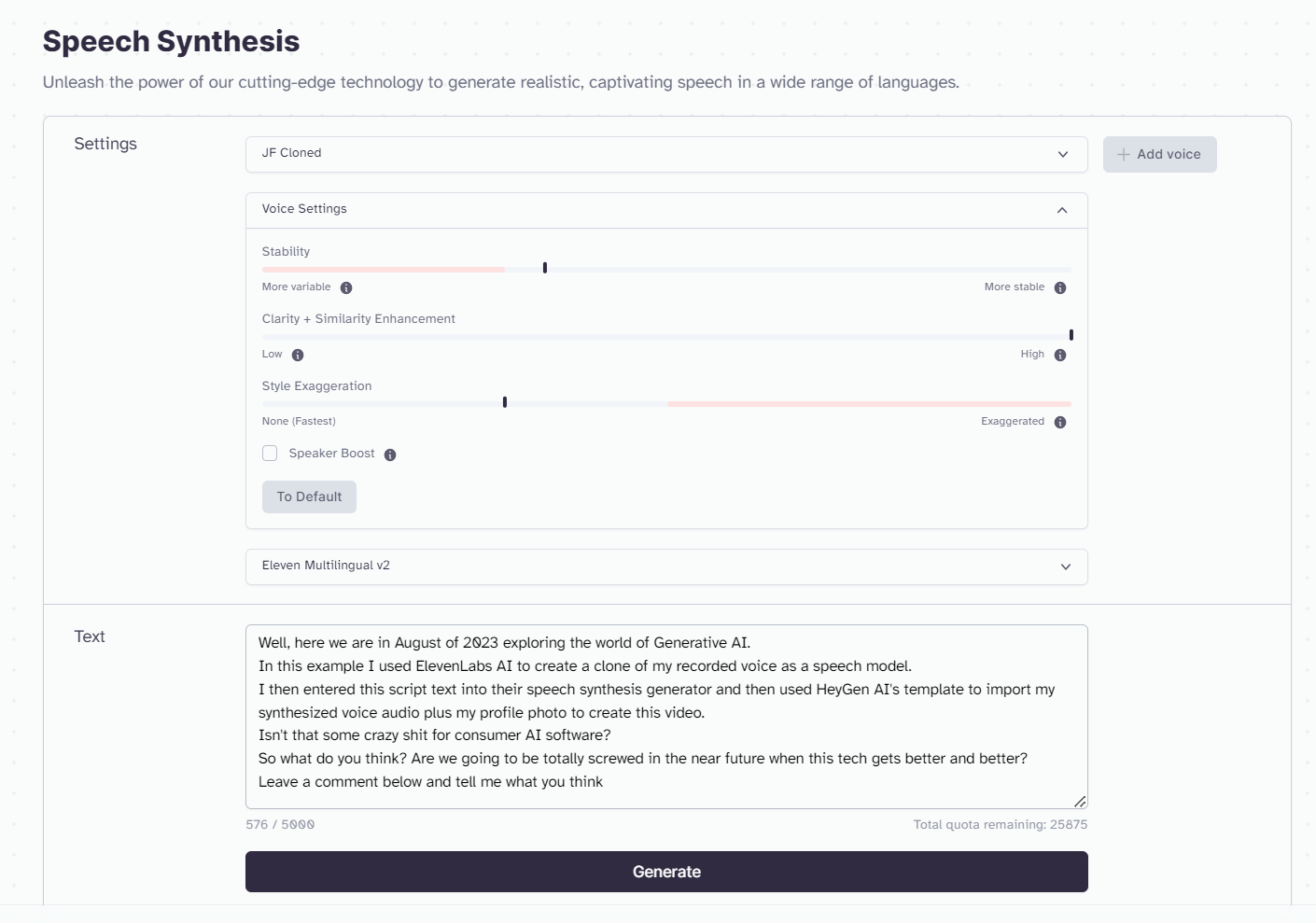

But let’s focus on that cloned voice recording for a minute! I’ll go into more detail about cloning in the AI Generative Audio segment in this article, but just to show you that this was completely AI generated, here’s a screenshot of that script:

Exciting times for independent media creators, trainers and marketers to be empowered with these tools to generate compelling multimedia content!

I’m not going to add a lot of hypothetical fluff and opinion this time – you can get plenty of that on social media and continue the ethics debates, etc. on your own (including who’s on strike now and how AI is going to take away jobs, etc.). I’m just here to show you some cool AI Tools and their capabilities for prosumer/content creators.

So in case this is your first time reading one of my AI Tools articles, go back in time and read these as well and see just how far we’ve come in only 8 months this year!

AI Tools Part 1: Why We Need Them

AI Tools Part 2: A Deeper Dive

AI Tools Part 3: The Current State of Generative AI Tools

And ALWAYS keep an eye on the UPDATED AI TOOLS: THE LIST YOU NEED NOW!

AI Tools Categories:

Generative AI Text to Video

In this segment we’re going to look at one of the leading AI tools that can produce video or animated content simply from text. Yes, there are several that claim to be the best (just ask them), but from what I’ve seen to date, one stands out above them all. And the best part is that if you have some editing/mograph production skills, you can do much more than just use standard templates, as I outline below. And it’s only going to get much better very soon.

HeyGen (formerly Movio.ai)

https://app.heygen.com/home

I’ve seen several AI text to video generators over the past several months and frankly, most of them seem rather silly, robotic and downright creepy – straight out of the Uncanny Valley. And granted, some of the basic default avatars and templates in HeyGen can take on that same look and feel as well. For now, that is.

I tried a couple of their templates to test the capabilities and results, and what I found might be okay for some generic marketing and messaging on a customer-facing website for a small business or service. And that definitely serves the needs of some businesses on a budget. But I found their TTS engine wasn’t all that great, and the robotic effect of the avatar is only made more distracting by the robotic voice. I then ran the same script through ElevenLabs AI to produce a much clearer and more human-like voice, applied it to the same template, and the results are more acceptable.

In this following example, you’ll hear the HeyGen voice on the first pass, then the ElevenLabs synthesized voice on the second pass.

Of course, that was the same process I used in my opening example video at the top of the article, but I used my own cloned voice in ElevenLabs to produce the audio.

To take this production process a step further, I created a short demo for our marketing team at my day gig (a biotech company) and included one step at the beginning – using ChatGPT to give me a 30-second VO script on the topic of Genome Sequencing written for an academic audience. I then ran through the process rendering the avatar on a green background and then editing with text/graphics in Premiere.

The result was encouraging for potential use on social media to get quick, informative content out to potential customers and educators. The total time from concept to completion was just a few hours – but it could be much faster if you used templates in Premiere for commonly-used graphics, music, etc.

But just wait until you see what’s in development now…

The next wave of AI generated short videos is going to be much harder to distinguish from real footage. Check out this teaser from the co-founder and CEO of HeyGen, Joshua Xu, as he demos this technology in this quick video:

So yes, I’ve applied for the beta and will be sharing my results in another article soon! In the meantime, I was sent this auto-generated personalized welcome video from the HeyGen team – which serves as yet another great use for these videos. This is starting to get really interesting!

Stay tuned…

Generative AI Outpainting

Since Adobe launched their Photoshop Beta with Firefly AI, there have been a lot of cool experimental projects shared through social media – and some tips are pretty useful, as I’ve shown in previous AI Tools articles – but we’ll look at one particularly useful workflow here. In addition, Midjourney AI has been adding new features to its v5.2 such as panning, zoom out and now vary (region).

Adobe Photoshop

Adobe Photoshop Beta’s AI Generative Fill allows you to extend backgrounds for upscaling, reformatting and set extensions in production for locked-off shots. (You can also use it for pans and dolly shots if you can motion track the “frame” to the footage, but avoid anything with motion out at the edges or parallax in the shot.)

For my examples here, I simply grabbed a single frame from a piece of footage and opened it up in Photoshop Beta, then set the Canvas size larger than the original. I then made a selection just inside of the live image area and inverted it, so that the selection covered everything outside the original frame, and applied Generative Fill to paint in those outside areas.
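For anyone who wants to script a similar setup outside Photoshop, the canvas-and-selection steps above can be sketched as a simple mask. This is only an illustration of the idea, not how Photoshop implements Generative Fill: the function name `outpaint_mask`, the centered placement and the `inset` value are all assumptions made for the example.

```python
def outpaint_mask(img_w, img_h, canvas_w, canvas_h, inset=8):
    """Build a canvas-sized grid: 1 = area to generate, 0 = original pixels to keep.

    Hypothetical helper. It assumes the original frame is centered on the
    larger canvas, and it shrinks the kept region by `inset` pixels so the
    fill slightly overlaps the live image area (the "select just inside,
    then invert" step).
    """
    if canvas_w < img_w or canvas_h < img_h:
        raise ValueError("canvas must be at least as large as the image")
    if inset < 0 or 2 * inset >= min(img_w, img_h):
        raise ValueError("inset must be non-negative and smaller than half the image")

    left = (canvas_w - img_w) // 2   # x offset of the original frame on the canvas
    top = (canvas_h - img_h) // 2    # y offset of the original frame on the canvas

    # Region of original pixels that stays untouched.
    keep_x0, keep_x1 = left + inset, left + img_w - inset
    keep_y0, keep_y1 = top + inset, top + img_h - inset

    return [
        [0 if (keep_x0 <= x < keep_x1 and keep_y0 <= y < keep_y1) else 1
         for x in range(canvas_w)]
        for y in range(canvas_h)
    ]


# Example: a 1920x1080 frame on a 2560x1440 canvas.
mask = outpaint_mask(1920, 1080, 2560, 1440, inset=8)
```

The small inset matters for the same reason it does in Photoshop: giving the generator a thin strip of real pixels to overlap tends to hide the seam between the original frame and the extension.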

Photoshop Beta typically produces three variations of the AI generated content to choose from, so make sure you look at each one to find the one you like the best. In this example, I liked the hill the best of the choices offered but wanted to get rid of the two trees, as they’re distracting from the original shot. That’s easy with Generative Fill “Inpainting”.

Just like selecting areas around an image frame to Outpaint extensions, you can select objects or areas within the newly-generated image to remove them as well. Just select with a marquee tool and Generate.

The resulting image looks complete. Simply save the generated “frame” as a PNG file and use it in your editor to expand the shot.

I really wish I’d had this technology over the past couple of years while working on a feature film where I needed to create extensions for parts of certain shots. I can only imagine it’s going to get better in time – and especially when they can produce Generative Fill in After Effects.

Here are a few examples of famous shots from various movies you may recognize, showing the results of generated expansion in 2K.

(For demonstration purposes only. All rights to the film examples are property of the studios that hold rights to them.)

Midjourney (Zoom-out)

In case you missed my full article on this new feature from Midjourney AI, go check it out now and read more on how I created this animation using 20 layers of rendered “outpainted” Zoom-out frames, animated in After Effects.

There are also two new features added since for pan/extend up/down/left/right and Vary (Region) for doing inpainting of rendered elements. I’ll cover those in more detail in an upcoming article.

Generative AI 3D

You’re already familiar with traditional 3D modeling, animation and rendering – and we have a great tool to share for that. But we’re also talking about NeRFs here.

What is a NeRF and how does it work?

A neural radiance field (NeRF) is a fully-connected neural network that can generate novel views of complex 3D scenes, based on a partial set of 2D images. It is trained to use a rendering loss to reproduce input views of a scene. It works by taking input images representing a scene and interpolating between them to render one complete scene. NeRF is a highly effective way to generate images for synthetic data. (Excerpted from the Datagen website)

A NeRF network is trained to map directly from viewing direction and spatial location (5D input) to opacity and color (4D output), using volume rendering to render new views. NeRF is a computationally-intensive algorithm, and processing of complex scenes can take hours or days. However, new algorithms are available that dramatically improve performance.
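To make the 5D-in, 4D-out idea a little more concrete, here is a minimal sketch of the volume rendering step for a single camera ray. It is a toy under stated assumptions, not a real NeRF: `toy_field` is a hypothetical stand-in for the trained network (a hard-coded red sphere), and `render_ray` uses the usual alpha-compositing sum over evenly spaced samples.

```python
import math


def toy_field(x, y, z, theta, phi):
    """Hypothetical stand-in for a trained NeRF network.

    5D input: position (x, y, z) plus viewing direction (theta, phi).
    4D output: color (r, g, b) plus density (opacity per unit length).
    Here it is just a dense red sphere of radius 1 at the origin.
    """
    inside = x * x + y * y + z * z <= 1.0
    density = 10.0 if inside else 0.0
    return (1.0, 0.0, 0.0, density)


def render_ray(field, origin, direction, near=0.0, far=4.0, n_samples=64):
    """Composite color along one ray; `direction` is assumed to be unit length."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    # Express the viewing direction as two angles (the extra 2 of the 5 dimensions).
    theta = math.acos(max(-1.0, min(1.0, dz)))
    phi = math.atan2(dy, dx)

    step = (far - near) / n_samples
    transmittance = 1.0          # fraction of light not yet blocked along the ray
    color = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * step          # sample at the middle of each segment
        r, g, b, sigma = field(ox + t * dx, oy + t * dy, oz + t * dz, theta, phi)
        alpha = 1.0 - math.exp(-sigma * step)  # opacity contributed by this segment
        weight = transmittance * alpha
        color[0] += weight * r
        color[1] += weight * g
        color[2] += weight * b
        transmittance *= 1.0 - alpha
    return tuple(color), 1.0 - transmittance   # (rgb, total opacity)


# A ray through the sphere and a ray that misses it entirely.
hit_color, hit_opacity = render_ray(toy_field, (0.0, 0.0, -2.0), (0.0, 0.0, 1.0))
miss_color, miss_opacity = render_ray(toy_field, (3.0, 0.0, -2.0), (0.0, 0.0, 1.0))
```

A real NeRF repeats this for every pixel of every training photo and adjusts the network until the rendered colors match the photos, which is a big part of why processing can take so long.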

This is a great video that explains it and shows some good examples of how this tech is progressing:

Luma AI

https://lumalabs.ai/

Luma AI uses your camera to capture imagery from 360 degree angles to generate a NeRF render. Using your iPhone, you can download the iOS app and try it out for yourself.

I tested the app while waiting for the water to boil for my morning cup of coffee in our kitchen and was pleasantly surprised at how quick and easy it was from just an iPhone! Here’s a clip of the different views from the render:

View the NeRF render here and click on the various modes in the bottom right of the screen: https://lumalabs.ai/capture/96368DE7-DF4D-4B4B-87EB-5B85D5BDEA37?mode=lf

It doesn’t always work out as planned, depending on the scale of your object, the environment you’re capturing in and things like harsh lighting/reflections/shadows/transparency. Working on a level surface also helps a lot, as I found out when trying to capture my car on our steep driveway in front of the house on our farm.

View the NeRF render yourself here to see what I mean – the car actually falls apart and there’s a section where it jumped in position during the mesh generation: https://lumalabs.ai/capture/8AE1BF7D-5368-427F-8CC4-D02B1B887D31?mode=lf

For some really nice results from power users, check out this link to Luma’s Featured Captures page.

Flythroughs (LumaLabs)

https://lumalabs.ai/flythroughs

To simplify generating a NeRF flythrough video, LumaLabs has created a new dedicated app that automates the process. It just came out and I’ve not had a chance to really test it yet, but you can learn more about the technology and see several results on their website. It’s pretty cool and I can only imagine how great it’s going to be in the near future!

Video to 3D API (LumaLabs)

https://lumalabs.ai/luma-api

Luma’s NeRF and meshing models are now available through their API, giving developers access to what Luma calls the world’s best 3D modeling and reconstruction capabilities, at a dollar a scene or object. The API expects video walkthroughs of objects or scenes, looking outside in, from 2-3 levels. The output is an interactive 3D scene that can be embedded directly, coarse textured models to build interactions on in traditional 3D pipelines, and pre-rendered 360 images and videos.

For games, ecommerce and VFX – check out the use case examples here.

Spline AI

https://spline.design/ai

So what makes this AI based online 3D tool so different from any other 3D modeler/stager/designer/animator app? Well first of all, you don’t need any prior 3D experience – anyone can start creating with some very simple prompts/steps. Of course the more experience you have with other tools – even just Adobe Illustrator or Photoshop, the more intuitive working with it will be.

Oh, and not to mention – it’s FREE and works from any web browser!

You’re not going to be making complex 3D models and textures or anything cinematic with this tool, but for fun, quick and easy animations and interactive scenes and games, literally anyone can create in 3D with this AI tool.

This is a great overview of how Spline works and how you can get up to speed quickly with all of its capabilities, by the folks at School of Motion:

Be sure to check out all the tutorials on their YouTube channel as well – with step-by-step instructions for just about anything you can imagine wanting to do.

Here’s a great tutorial for getting started with the Spline tool. So intuitive and easy to use:

Generative AI Voiceover (TTS)

There are several AI TTS (Text To Speech) generators out on the market and some are built into other video and animation tools, but there is one that stands out above all the rest at the moment – which is why I’m only focusing on ElevenLabs AI.

ElevenLabs Multilingual v2

It’s amazing how fast this technology is advancing. In less than a year we’ve gone from the early demos I shared in my AI Tools Part 1 article in January 2023 to now having so much more control over custom voices, cloning, accents and now even multiple languages!

With this week’s announcement that Multilingual v2 is out of beta, the supported languages are: Bulgarian, Classical Arabic, Chinese, Croatian, Czech, Danish, Dutch, English, Filipino, Finnish, French, German, Greek, Hindi, Indonesian, Italian, Japanese, Korean, Malay, Polish, Portuguese, Romanian, Slovak, Spanish, Swedish, Tamil, Turkish & Ukrainian (with many more in development). That list includes the previously available languages: English, Polish, German, Spanish, French, Italian, Hindi and Portuguese. Check out this demo of voices/languages:

Not only does the Multilingual v2 model provide different language voices, but it also makes your English voices sound much more realistic with emphasis and emotion that is a lot less robotic.

As I’ve mentioned in my previous AI Tools articles, we’re using ElevenLabs AI exclusively for all of our marketing How-to videos in my day gig at the biotech company. What I’m most impressed with is the correct pronunciation of technical and scientific terminology and even most acronyms. I’ve rarely had to phonetically spell out key words or phrases, though changing the synthesized voice parameters can change a lot of inflection and tone from casual to formal. And retakes and edits are a breeze! Besides, some of the AI voices sound more human than some scientists anyway (j/k) 😉

Here’s an example of a recent video published using AI for the VO.

Cloning for Possible use with ADR?

As you can hear in my opening demo video (well, those that know me and my voice that is), the Cloning feature in ElevenLabs is pretty amazing. Even dangerously so if used without permission. That’s why I’ve opted to NOT include an example from another source in this article, but only to point out the accuracy of the tone and natural phrasing it produces on cloned voices.

For film and video productions, this means you can clone the actor’s voice (with their permission of course) and do dialogue replacement for on-air word censoring, line replacements and even use the actor’s own voice to produce translations for dubbing!

I recorded a series of statements to train the AI and selected a few for this next video. Here is a comparison of my actual recorded voice compared to the cloned voice side-by-side. Can you guess which one is the recorded voice and which one is AI?

You can probably tell that the ones with slightly sloppy/lazy pronunciation were my original recorded voice, which makes me think this would be a better way to record my voice for tutorials and VO projects so I can always have a clear, understandable voice that’s consistent – regardless of my environment and how I’m feeling on any given day.

So to test the different languages, I used Google Translate to translate my short example script from English to various languages that are supported by ElevenLabs, plugged that into Multilingual v2 and was able to give myself several translations using my own cloned voice. So much potential for this AI technology – I sure wish I could actually speak these languages so fluently!
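If you wanted to automate that translate-then-synthesize loop instead of pasting text into the web app, a rough sketch might look like the following. I did everything above through the ElevenLabs website, so treat this as an assumption-laden illustration: the endpoint path, the `xi-api-key` header and the `eleven_multilingual_v2` model ID reflect my understanding of the ElevenLabs REST API and should be checked against their current documentation, and the helper names, voice ID and API key are placeholders. The code only builds the requests; it doesn’t send anything.

```python
import json
import urllib.request

# Assumed base URL for the ElevenLabs text-to-speech endpoint; verify in their docs.
API_ROOT = "https://api.elevenlabs.io/v1/text-to-speech"


def build_tts_request(text, voice_id, api_key, model_id="eleven_multilingual_v2"):
    """Build (but do not send) one text-to-speech request for a cloned voice."""
    body = json.dumps({"text": text, "model_id": model_id}).encode("utf-8")
    headers = {
        "xi-api-key": api_key,            # assumed auth header name
        "Content-Type": "application/json",
        "Accept": "audio/mpeg",
    }
    return urllib.request.Request(
        f"{API_ROOT}/{voice_id}", data=body, headers=headers, method="POST"
    )


def build_localized_requests(translations, voice_id, api_key):
    """One request per language, all using the same cloned voice."""
    return {
        lang: build_tts_request(text, voice_id, api_key)
        for lang, text in translations.items()
    }


# Placeholder script lines, already translated (for example with Google Translate).
translations = {
    "es": "Hola, esta es mi voz clonada.",
    "de": "Hallo, das ist meine geklonte Stimme.",
}
requests_by_lang = build_localized_requests(translations, "YOUR_VOICE_ID", "YOUR_API_KEY")
```

Sending each request with `urllib.request.urlopen` and writing the response bytes to an MP3 file per language would complete the loop, assuming the service returns audio as described above.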

Again – this would be great for localization efforts when you need to translate your training and tutorial videos and still stay in your own voice!

JOIN ME at the AI Creative Summit – Sept 14-15

I’ll be presenting virtually at the AI Creative Summit on September 14-15, produced by FMC in partnership with NABshow NY.

I’ll be going into more detail on some of these technologies and workflows in my virtual sessions along with a stellar cast of my colleagues who are boldly tackling the world of Generative AI production!

Register NOW! Hope to see you there!