As an awestruck six-year-old watching George Lucas’s Star Wars, Rob Powers dreamed of working in filmmaking. He could not have imagined, however, nor could anyone have predicted, that in the years to come he would join forces with another groundbreaking director to help revolutionize filmmaking forever.

“I have wanted to be involved in filmmaking since I was a child,” says Powers. “Star Wars was my earliest inspiration.” He even set his sights on attending Lucas’s alma mater, USC Film School, where he studied cinema film production. After graduation, he continued his formal film school education at the American Film Institute. At the time, the digital side of filmmaking didn’t exist and no schools offered programs in computer graphics (CG) animation. Self-taught in digital filmmaking methodologies, Powers is among the pioneers of the CG revolution.

Presenting Powers

In the ’90s, few people likely knew his name, but many heralded his work. At Encore Digital, for example, Powers was the lead animator for the 3D dancing baby and myriad other effects that caught the public’s attention in the first season of Ally McBeal. He joined Jeff Kleiser and Rich Kempster at Kleiser-Walczak Digital Effects, where he cut his teeth on stereoscopic technologies and projects. Powers soon started his own company, Ignite! Digital Studios in Glendale, Calif., and his stereoscopic experience caught the eye of James Cameron, who was working on Aliens of the Deep, an IMAX stereoscopic film.

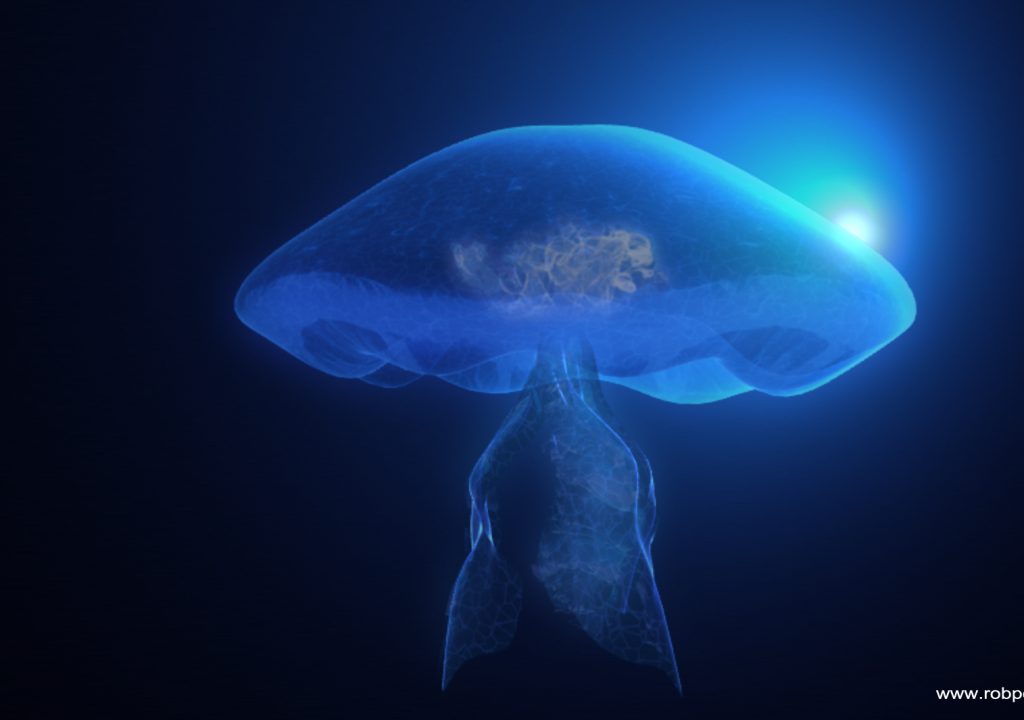

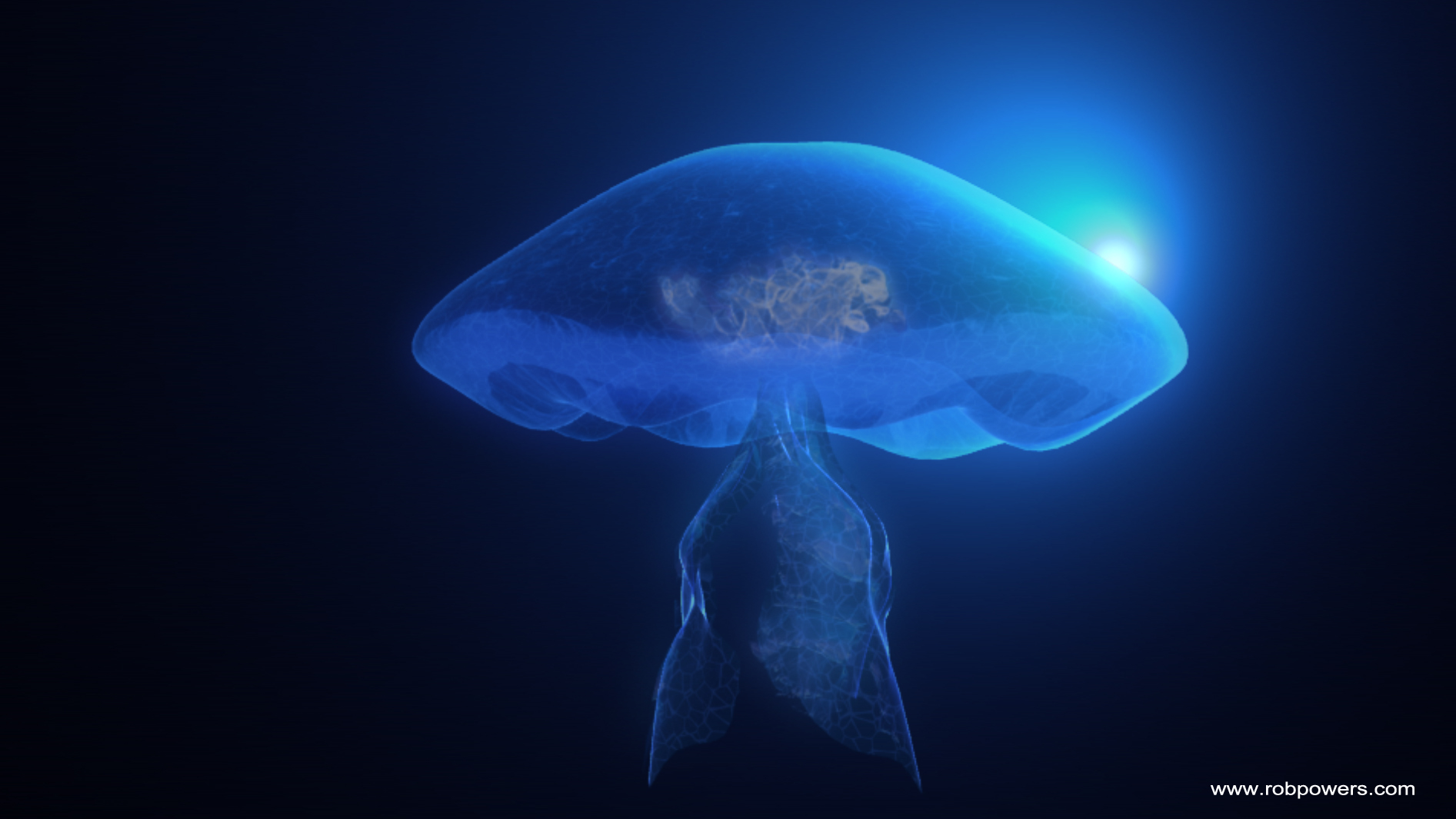

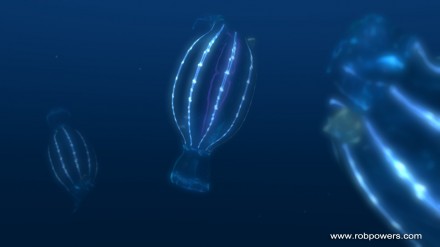

“I became the lead creature supervisor on that project for the award-winning sequence that involved an alien under the ice surface of Europa,” says Powers, who did the model, structure, animated rig, and final render work. Cameron continued to award Powers and his firm more and more work, including the main title design for the film. “Jim [Cameron] asked me to supervise the stereoscopic main title, which was based on the creature I did earlier and involved a lot of bioluminescence and underwater sea life, in the theme of what Jim has been known for and the stuff that is really close to my heart.”

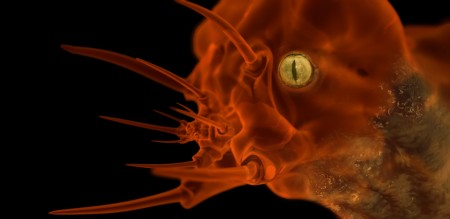

It is little wonder, then, that Powers was the first CG artist Cameron asked to begin working with his team of artists on Avatar. “When I started on the film, it was at [Cameron’s] house with a small team of concept designers led by people like Wayne Barlowe, a famous sci-fi illustrator and designer and someone I have always admired. I was working closely with Neville Page, Yuri Bartoli, and Jordu Schell; all the designers and the production designer; and directly with Jim on creature designs, 3D versions of creature motions, motion tests, and concept environments.”

Early Avatar

Powers was involved from the very earliest stages and was one of the key team members who helped create the Pandora environment for Avatar. As a result, Powers was in a unique position to assist Cameron when the project started moving toward production. “Jim wanted to put together a system that would combine different technologies, and basically develop a workflow that didn’t yet exist for virtual production,” Powers explains.

Powers, drawing on his extensive experience with a wide range of software and his knowledge of Pandora’s environments, devised a test scenario. “At that time, it was in question as to how much art-directed, artistic relevance that real-time feedback would have for [Cameron] and still be functional, so I did a test with one of the first major environments. It is the glowing purple mushroom environment that appeared in the film, and it proved not only to get Jim excited, but also the level of atmosphere, composition, art-directed assets, and all the things that filmmaking is really all about could be done in a real-time, interactive, director-centric workflow.”

Cameron, realizing what he could achieve in a nonlinear real-time workflow, asked Powers to start an entirely new department on Avatar and take charge of all the environments for Pandora. “That is when I transitioned to the role of Virtual Art Department supervisor,” he says.

Workflow Genesis

The Virtual Art Department grew out of “that initial discovery of how far we could push the limits of the digital and virtual workflow,” Powers notes. “It was the catalyst for the department.”

The novel department employed a complex pipeline in which almost all the assets were created in NewTek’s LightWave using polygonal geometry. “I chose LightWave when I was in the earliest design phases because I was really the only 3D artist, other than Andrew Cawrse, who was on loan from Industrial Light & Magic (ILM) doing some ZBrush sculpting,” Powers says. “LightWave was the smartest choice for me because I did not have a whole team of technicians or scripters supporting me; it was just me.”

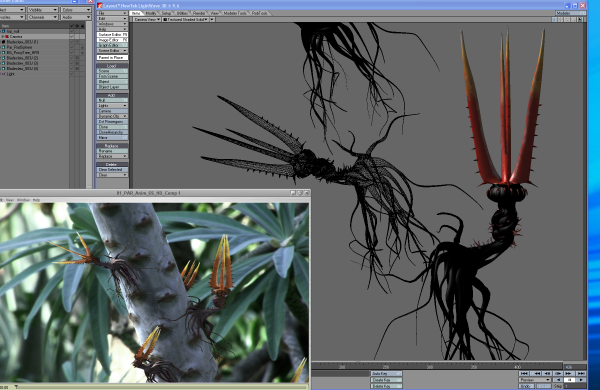

Powers handled myriad tasks: creature concepts, texturing, lighting, rigging, and walk cycles. “I did all the first animations working with Jim,” he says. “I textured, lit, and animated the first living motion of the Leonopteryx: the first time it flew and took an X-wing attack position. I worked out whether the direhorses should have four or six legs and how they should be offset. I modeled, textured, animated, and rigged the direhorses, including the first time they walked and ran, based on the design from Wayne Barlowe. I did concept animations and renders of alien epiphyte creatures and things in the world of Pandora that had to look really good, really quickly.


“When I had to get an animation of the Leonopteryx done in a couple of days (and that means modeling, rigging, texturing, and rendering it) to show James Cameron, I couldn’t rely on other people to do things for me, such as creating scripts or tools. I had to do it myself, so I chose LightWave as a key item in my toolkit and used it with great plugins like Maestro, FPrime, and Vue xStream to create a wide range of creature and environment tests, animations, and imagery. I was able to do it in a couple of days with LightWave; I could model, rig, texture, animate, and render to the level that it looked photo-real. It was a tool that allowed me to do it all very quickly and efficiently, and to get things done that kept Jim happy.”

At this early stage of production, the work had to be fast and flexible enough to accommodate changes that seemed to be continually coming down the pipeline. “The way that LightWave’s particular rigging setup works, I was able to swap out models seamlessly and instantly with no glitches,” he says. “If a new version of the Leonopteryx came, I could just swap it instantly.”

Seamless Integration

Throughout the concept and production process, Powers continued to use LightWave to interface with programs such as E-on Software’s Vue and its xStream plug-in, Pixologic’s ZBrush, and Autodesk’s MotionBuilder. “LightWave’s integration with programs like Vue and MotionBuilder worked so seamlessly for me that it was a no-brainer choice because I had everything I needed at my fingertips. It was a strong tool set for what we were basing our pipeline on and it made sense.

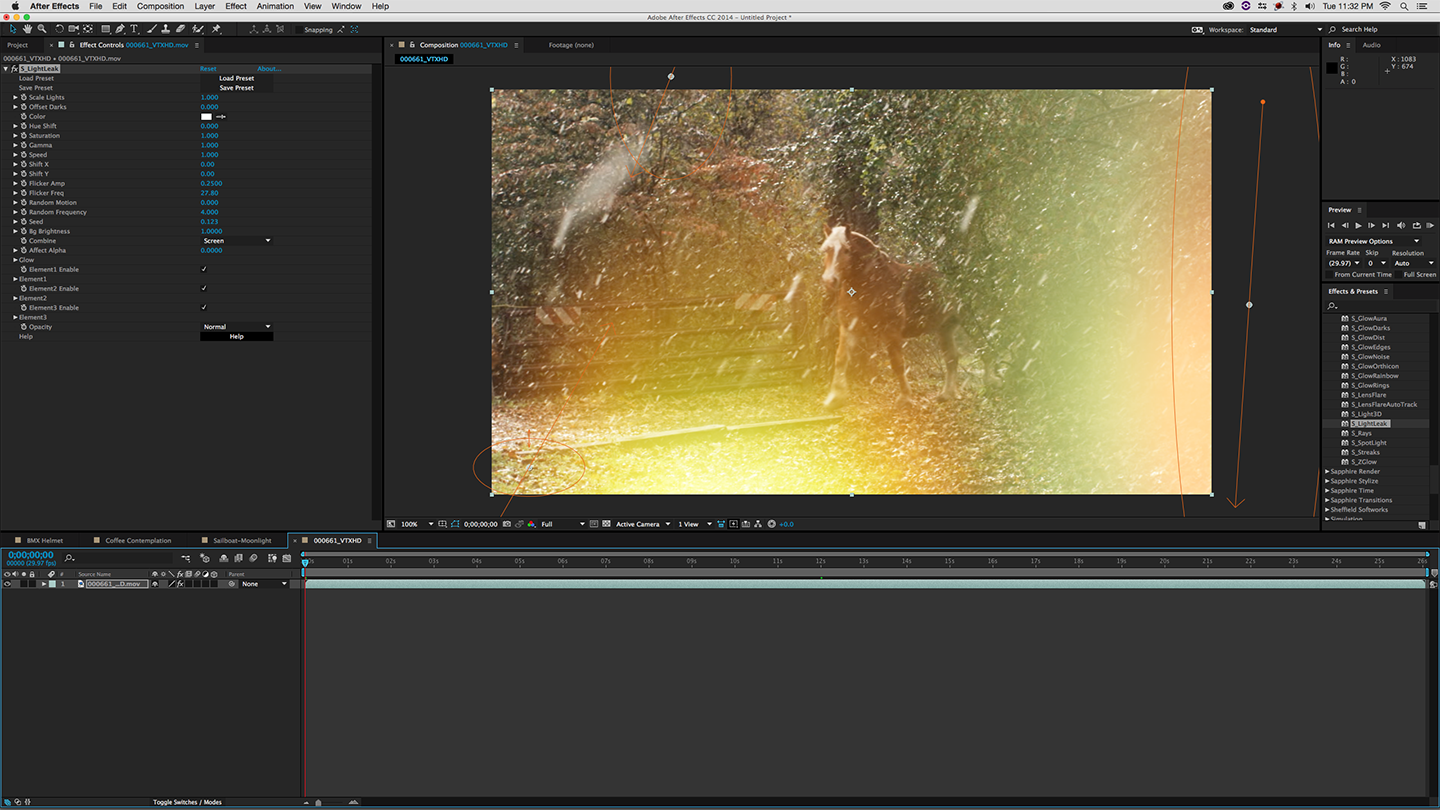

“When I needed to jump out of LightWave and do crazy augmentations for the environments, I could jump over to Vue; and, when I needed to go to the MotionBuilder workspace to get some rigging and motion capture, it was easy to interface with that, as well. All the interaction that Jim had was in the MotionBuilder real-time engine with environmental assets that were primarily created in LightWave for the environments.”

The Virtual Art Department staff concentrated heavily on producing extremely efficient polygon-based models and baking lighting into their textures with LightWave’s renderer. “LightWave has advanced radiosity, high dynamic range, ambient occlusion, soft shadows, and beautiful lighting that we baked into the textures,” says Powers. “We were able to incorporate some of the benefits of LightWave’s renderer by baking its beautiful lighting into the texture maps used on our 3D models. This helped us make the assets and the quality of the environments in MotionBuilder look as good as possible.”
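The article does not spell out how the baking was implemented. As a rough illustration only, the sketch below assumes the offline renderer's result is available as a per-texel lighting map and multiplies it into the color texture, clamping to the displayable range, so a real-time engine can show that lighting without computing it. The function name `bake_lighting` and the list-of-tuples texture format are hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def bake_lighting(albedo: List[List[RGB]],
                  light: List[List[RGB]]) -> List[List[RGB]]:
    """Return a texture in which each texel is albedo * precomputed lighting.

    `albedo` holds surface colors in [0, 1]. `light` is a hypothetical
    per-texel lighting map from an offline render (radiosity, occlusion,
    soft shadows); it may exceed 1.0 for bright, HDR-style lighting.
    Results are clamped to 1.0 so the baked map stays displayable.
    """
    if len(albedo) != len(light) or any(
            len(a_row) != len(l_row) for a_row, l_row in zip(albedo, light)):
        raise ValueError("albedo and lighting maps must have the same resolution")

    baked: List[List[RGB]] = []
    for a_row, l_row in zip(albedo, light):
        # Multiply channel by channel; the real-time engine then only
        # displays this texture instead of evaluating the lighting.
        baked.append([
            tuple(min(1.0, c * lc) for c, lc in zip(a, l))
            for a, l in zip(a_row, l_row)
        ])
    return baked
```

The trade-off is the one Powers alludes to: the expensive lighting is paid for once, offline, and the real-time scene inherits its look, at the cost of that lighting being fixed until the texture is baked again.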

And this, the technical process of creating the assets, was just the first phase of the Virtual Art Department’s workflow. In the second phase, Powers, Cameron, and others continued to think outside the box.

Terrestrial Techniques

The Virtual Art Department devised a “light-switch” technique in which artists could take any Pandoran jungle, for example, and instantly turn it into a bioluminescent, night version with essentially just the flip of a switch. “In essence, it would take a whole set and make two sets out of it,” says Powers. In this way, they repurposed assets, using them in several areas of the script. “We were duplicating the usefulness of the set by implementing this fairly complex structure that allows instant access to the bioluminescent, night version. When the button was pushed, it would change the lighting, the texture map, and all these settings, and the jungle could be used as a possible alternate set. The bioluminescent, night side was featured heavily in the film and just to be able to implement that in real time and switch between day and night depending upon what the scene called for was a powerful part of the workflow.”
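One way to picture the light-switch idea is a set that keeps a single copy of its geometry but two named variants of lighting, texture maps, and settings, with one call toggling between them. This is a hypothetical Python sketch; the class names, the `"day"`/`"night"` keys, and the file names are illustrative assumptions, not the Virtual Art Department's actual tools.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class SetVariant:
    """One look of a set: a lighting preset plus the texture maps made for it."""
    lighting_preset: str
    textures: Dict[str, str]                    # asset name -> texture map file
    settings: Dict[str, float] = field(default_factory=dict)


@dataclass
class SwitchableSet:
    """A single set of geometry that can be shown as either of two variants."""
    name: str
    variants: Dict[str, SetVariant]             # expects "day" and "night"
    active: str = "day"

    def __post_init__(self) -> None:
        if set(self.variants) != {"day", "night"}:
            raise ValueError("a switchable set needs exactly 'day' and 'night' variants")

    def current(self) -> SetVariant:
        return self.variants[self.active]

    def flip(self) -> SetVariant:
        """The 'light switch': swap lighting, textures, and settings in one call."""
        self.active = "night" if self.active == "day" else "day"
        return self.current()
```

For example, a jungle built with a `"day"` variant using a `"sun_key"` preset and a `"night"` variant using a `"bio_glow"` preset returns the night look after one `flip()` and the day look after a second. Because both variants share the same geometry, switching only changes which lighting and textures the engine reads, which is what lets one jungle serve as two sets.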

The department also used “perimeter optimization,” a baking technique that defined the perimeter of a given location through the placement of an interactive sphere. “It would create a virtual-reality sphere around the perimeter of a specified location, and it would look like the environment went on forever,” he explains. “It enabled us to optimize real-time performance by breaking huge environments into manageable chunks. It was just invaluable.”
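Powers describes perimeter optimization only at a high level. A minimal sketch of the chunking half of the idea, under assumed data structures: each environment chunk has a bounding sphere, chunks that reach inside a perimeter sphere around the working location stay as live geometry, and the rest become candidates for baking into the distant backdrop that makes the environment appear to go on forever. `Chunk` and `partition_by_perimeter` are hypothetical names, and the backdrop baking itself is not shown.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Chunk:
    name: str
    center: Vec3
    radius: float  # bounding-sphere radius of the chunk's geometry


def partition_by_perimeter(chunks: List[Chunk], location: Vec3,
                           perimeter: float) -> Tuple[List[Chunk], List[Chunk]]:
    """Split chunks into live geometry and backdrop candidates.

    A chunk stays live if any part of its bounding sphere falls inside the
    perimeter sphere centered on `location`; everything farther away could
    be baked into a surrounding backdrop sphere instead of drawn as geometry.
    """
    live: List[Chunk] = []
    backdrop: List[Chunk] = []
    for chunk in chunks:
        # Distance from the perimeter center to the nearest point of the chunk.
        nearest = math.dist(chunk.center, location) - chunk.radius
        (live if nearest <= perimeter else backdrop).append(chunk)
    return live, backdrop
```

Only the live list has to be rendered as real geometry each frame, which is the sense in which a huge environment becomes a manageable chunk.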

Another interesting technique arose out of a problem the artists encountered when several characters ran across the huge tree branches of Pandora. “I came up with a technique in which the characters’ performance capture would actually emanate the environment from their body,” says Powers. When a character moved through a performance, everything he or she touched or interacted with was created by that body motion. For example, the very branch a character ran across was generated by the character’s footsteps. This not only helped avoid problems in which characters’ feet penetrated the geometry but also created some very interesting environmental assets, such as giant branches that became important visual elements in the final shots.
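The article gives no implementation details for emanating the environment from a performance. As a simplified, hypothetical illustration, the sketch below takes foot-contact positions from a capture take, orders them in time, and places a branch centerline one branch radius below each contact, so the top of the branch lies exactly under the foot and the foot cannot sink into it. `FootContact` and `branch_from_footsteps` are assumed names; a real system would also smooth the path and build mesh geometry around it.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class FootContact:
    time: float      # seconds into the capture take
    position: Vec3   # (x, y, z) where the foot landed, y pointing up


def branch_from_footsteps(contacts: List[FootContact], radius: float) -> List[Vec3]:
    """Return branch centerline points, one per foot contact, in time order.

    Each point is dropped by `radius` below its contact, so a branch of that
    radius has its top surface exactly where the foot landed: the foot
    neither floats above nor penetrates the generated geometry.
    """
    if radius <= 0:
        raise ValueError("radius must be positive")
    ordered = sorted(contacts, key=lambda c: c.time)
    return [(x, y - radius, z) for (x, y, z) in (c.position for c in ordered)]
```

Building the branch from the performance, rather than fitting the performance to a pre-modeled branch, is what removes the foot-penetration problem by construction.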

Directorial Dream

The Virtual Art Department delivered “a very efficient workflow that gave [Cameron] exactly what he wanted in a real-time workspace; and, if he didn’t want something, he would change it,” Powers says. Cameron, while shooting live characters on a motion-capture stage, could see CG representations of the aliens and environments in real time in-camera, and he used these CG assets for composition and blocking.

“With all the light shafts, all the trees, and all the jungle around him, [Cameron] was able to make immediate directorial decisions. It wasn’t just a bare motion-capture stage. He didn’t have to completely imagine what it would look like and what the postproduction studio Weta Digital would deliver a year in the future. He was able to actually make his movie in the moment.”

Using this virtual process, Cameron delivered detailed templates to Weta Digital that conveyed all the art direction, lighting, composition, camera motions and placement, and performances that he wanted.

“Directors are familiar with live-action shooting and their creativity is often sparked by the creative discovery process in the moment. They can see the immediate feedback of the actors’ performances,” Powers explains. “With effects-heavy and animated films, all that goes out the window because they are in this unnatural workspace where they have to wait months or years to see the final shots. When decisions are deferred until months later as part of a postproduction process, it becomes not only an unnatural filmmaking process that is alien and disenfranchising to their immediate creativity, but also expensive and time consuming.

“When the director is able to see everything and make informed decisions with a camera in his hands, alongside the team he has put together, he is actually making his movie much more in the way filmmaking has always been done,” Powers continues. “It is a step out of the visual effects dark ages, in which directors had to endure a laborious and alienating process. The virtual workspace instead promotes the immediate discovery that film directors crave.”

Fully Evolved

The Virtual Art Department and its real-time, interactive workflow are as innovative and imaginative as Avatar itself. Together with James Cameron and his team, Powers helped to create a fully realized virtual filmmaking workspace.

“This is an evolution of the entire industry moving forward,” Powers says. “It is evolving into a fully virtual, interactive workflow where everything is nonlinear and all the departments are able to converge and interact as they have never done before. Avatar was the first huge step toward that; there has been nothing like it before Avatar. From first-hand experience, I know that the final film was different because this workflow was employed.”

The real-time workflow and tools employed “cut through the mess and enabled artists to really connect with their art in an unobtrusive way,” concludes Powers. “I continue to use the best tools for the job, and oftentimes that’s LightWave.”

Pro3D note: Rob Powers is such a believer in NewTek LightWave software that he recently joined the company, bringing his high-level, real-world production knowledge to the forefront of LightWave development. He is leading the development efforts for LightWave 10, which is now available. For more, visit http://www.newtek.com/lightwave/lw10.php.
