By now, you’ve likely seen an article or social post about Cinematic Mode, the new computational background blur available on the iPhone 13. Before anyone had actually used the feature, the received wisdom on filmmaking forums was that this “portrait mode for video” would be a gimmicky waste of time, and indeed, it’s not perfect. Edges can be fuzzy, and it only works at 1080p30 for now. But while it’s easy enough to find this new feature’s flaws, it would be a mistake to focus on them. Cinematic Mode doesn’t have to be perfect to be good enough for many, and computational photography brings benefits which a traditional camera can’t touch. Let’s dig into what Cinematic Mode means for video professionals.

Shooting with Cinematic Mode

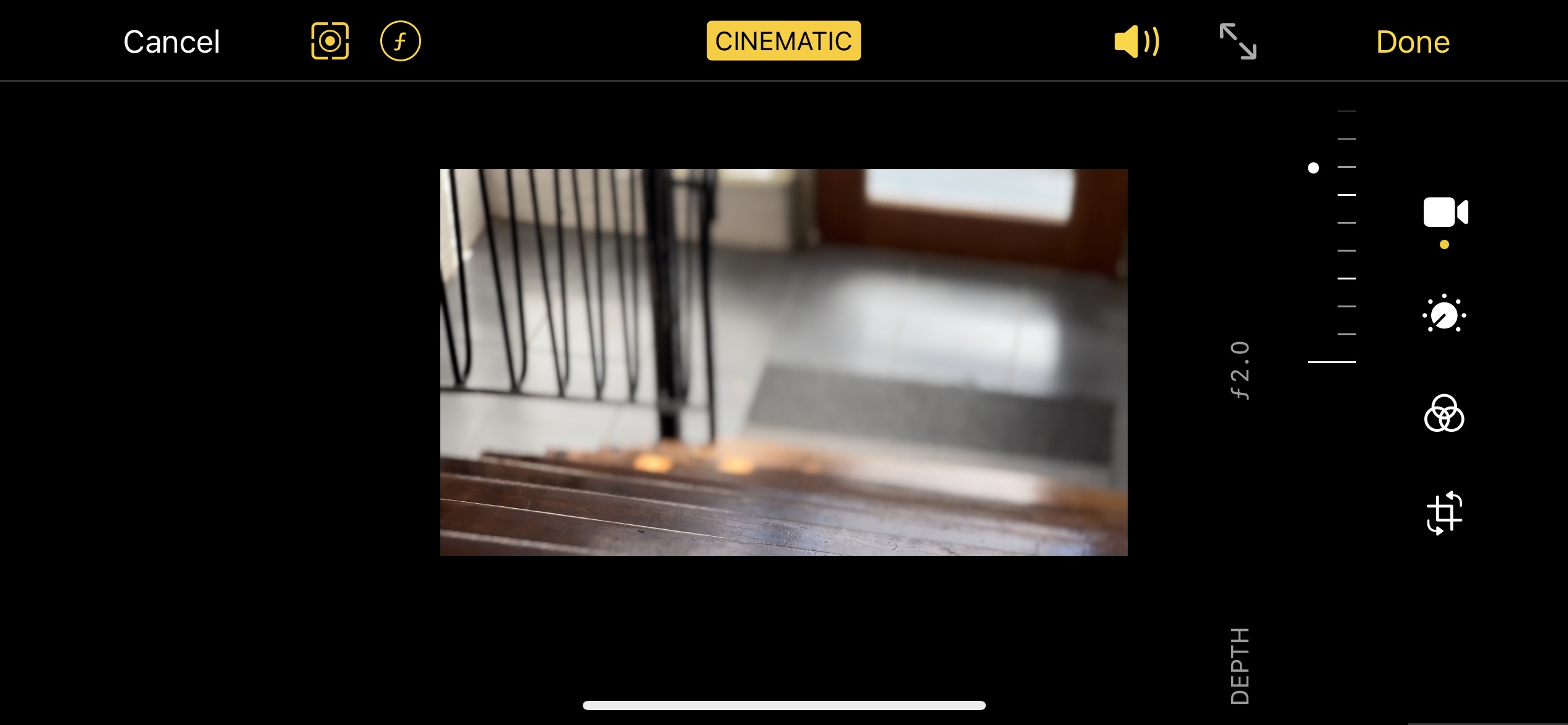

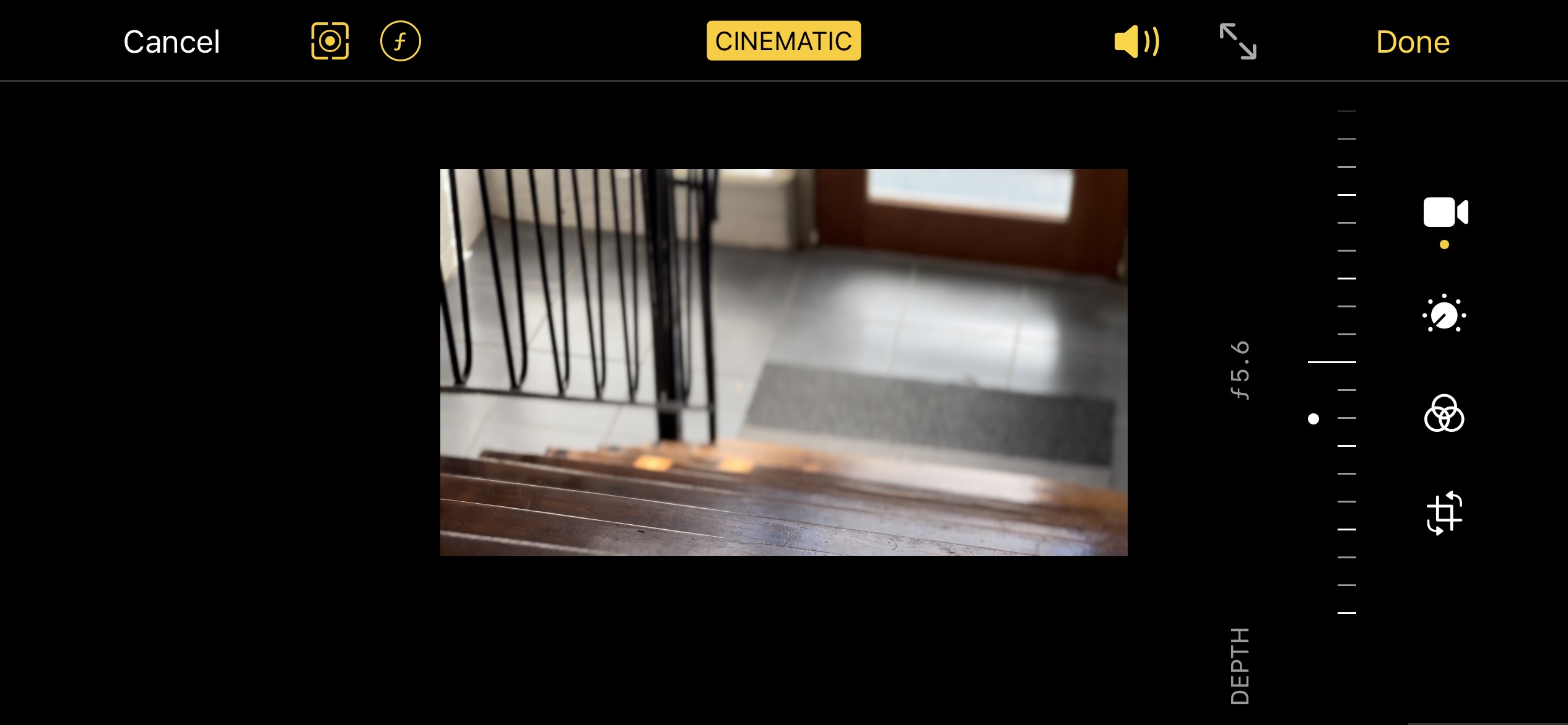

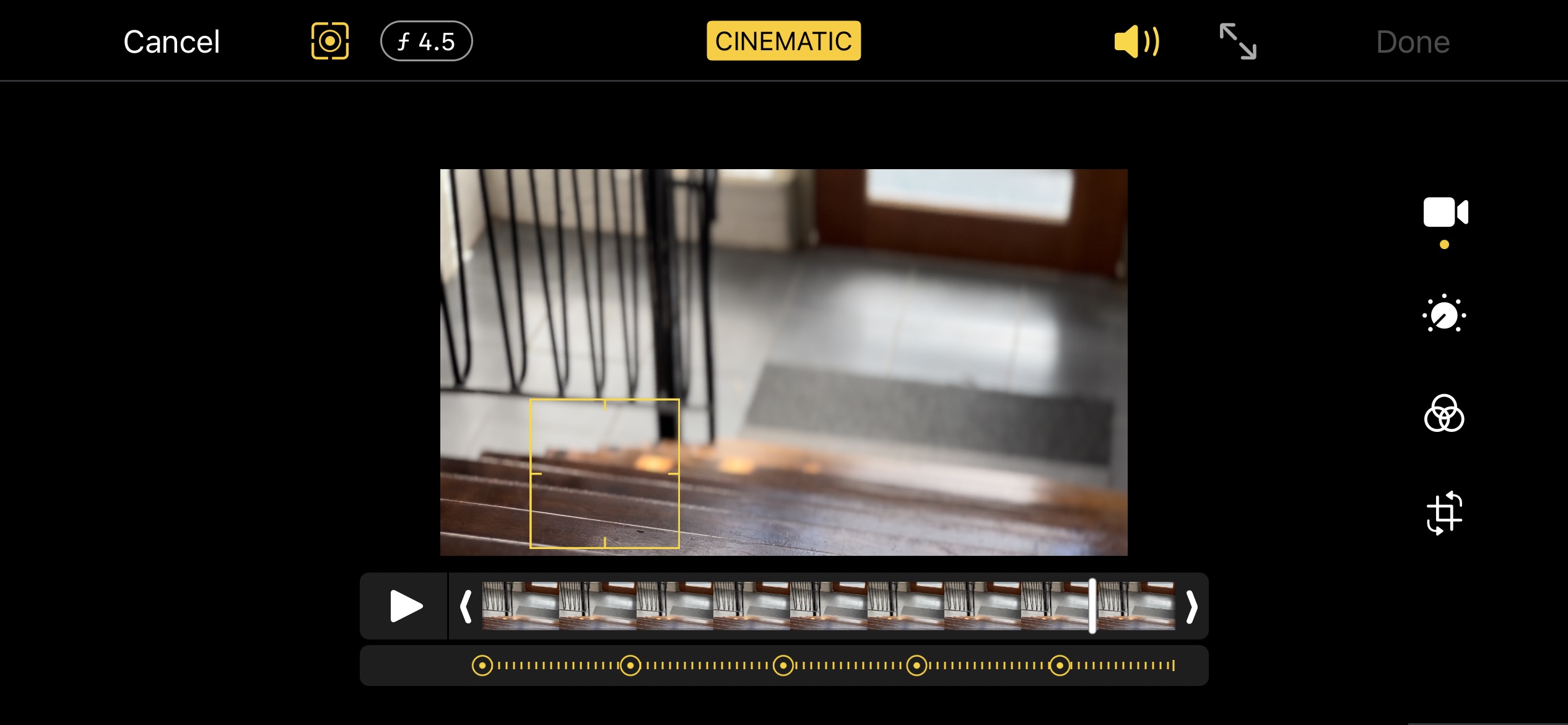

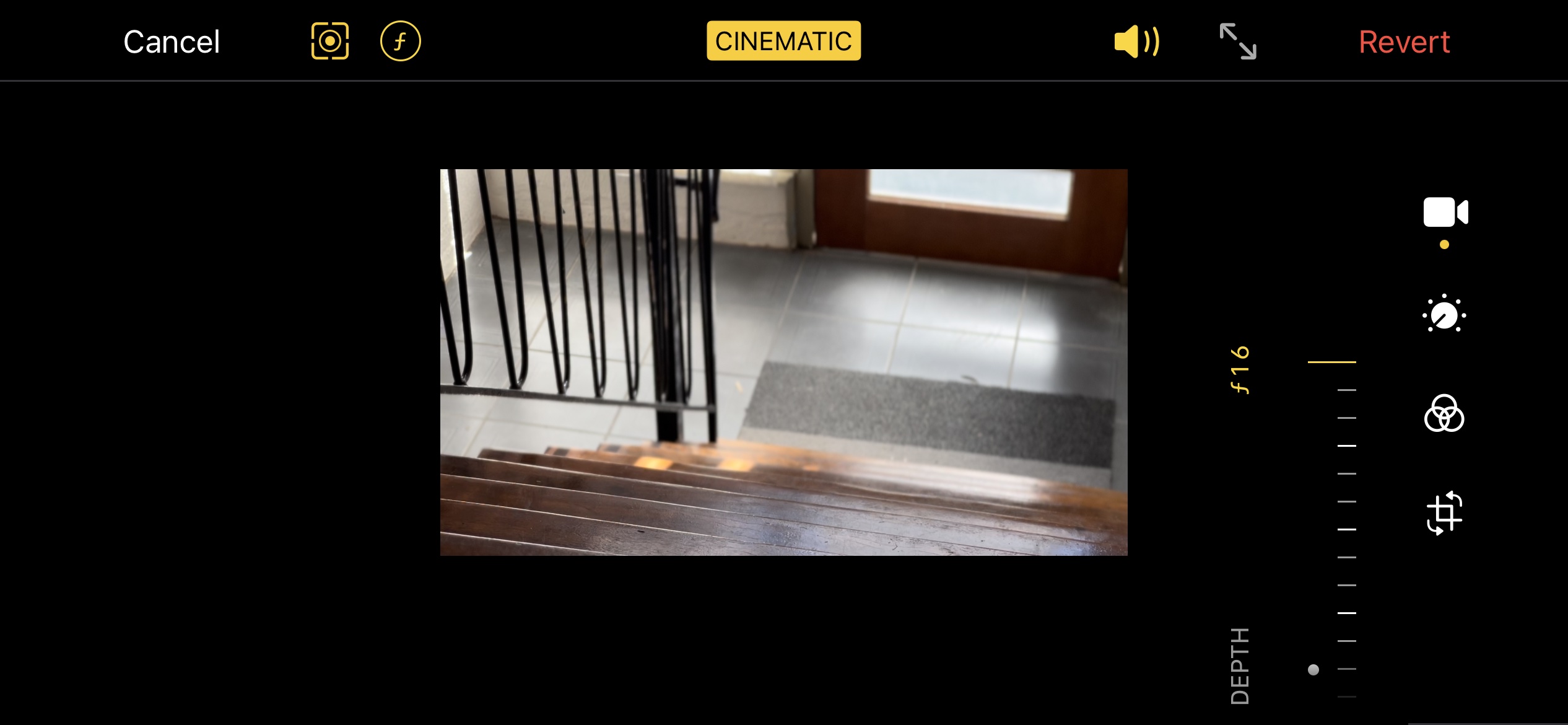

In classic Apple style, the feature is easy to use. Open the Camera app, swipe to Cinematic, tap on what you want to focus on, and hit record. If you’re shooting people, focus will move to a person looking at the camera, then rack to another person if the current subject looks away. You can also double-tap to lock on to a subject and track them around the shot. Magically, the original un-blurred shot is recorded along with depth information, and the blur is applied live during playback. That means the virtual f-stop can be adjusted after the fact, from f2.0 all the way to f16, to instantly change how blurry the background appears.

The first time you use this, you’ll have a hard time keeping a smile off your face. It’s pretty cool to have the bokeh of a much larger sensor on a thin slab with a large screen that’s always in your pocket. It won’t take long to find the limitations of the effect, though, especially if you shoot subjects with fuzzy hair. Edge transitions are where the illusion breaks down, and on the widest apertures, only smooth objects look real.

Fussy backgrounds can also sometimes be interpreted as part of the foreground, so be sure not to place your subjects in front of anything too complex. Errors in edge calculation are far more obvious at wider apertures (lower f-numbers), too. Super-shallow depth of field is unlikely to look great, but if you choose a moderate virtual aperture like f5.6 or f6.3, most edge flaws will be well hidden. The old adage holds true: a lie is easier to tell if it’s close to the truth.
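Apple hasn’t published how it models the virtual aperture, but the optical intuition behind “moderate f-stops hide edge errors” can be sketched with the standard thin-lens blur-disk formula. In this minimal Python sketch, the 50mm focal length and the 2m and 5m distances are assumed purely for illustration. The point is that blur diameter scales with 1/N, so f5.6 gives roughly 2.8 times less background blur than f2.0, and any depth-map mistakes at the edges are spread over a correspondingly smaller area.

```python
def blur_disk_mm(focal_mm: float, f_number: float,
                 focus_mm: float, subject_mm: float) -> float:
    """Diameter of the out-of-focus blur disk on the sensor (thin-lens model).

    focal_mm   -- lens focal length
    f_number   -- aperture as an f-number N, so the pupil diameter is focal_mm / N
    focus_mm   -- distance the lens is focused at
    subject_mm -- distance of the point being imaged
    """
    if focus_mm <= focal_mm or subject_mm <= focal_mm:
        raise ValueError("distances must be beyond the focal length")
    pupil_mm = focal_mm / f_number
    return (pupil_mm * focal_mm * abs(subject_mm - focus_mm)
            / (subject_mm * (focus_mm - focal_mm)))


if __name__ == "__main__":
    # Illustrative values only: 50mm lens focused on a subject 2m away, background at 5m.
    for n in (2.0, 5.6, 16.0):
        print(f"f{n}: background blur disk = {blur_disk_mm(50, n, 2000, 5000):.3f} mm")
```

Because the blur is inversely proportional to the f-number, halving the blur only requires doubling N; nothing else in the formula changes.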

Still, it’s easy to see how existing shooters might use this. With a standard camera as the main angle, an iPhone in Cinematic Mode could be an ideal support camera for b-roll. It’s easy to cut from an interview to a shot with a similar amount of blur, and you won’t need to worry about fussy edges if you’re not shooting head-and-shoulders. Happily, you can also dial in a precise amount of blur after you’ve shot it.

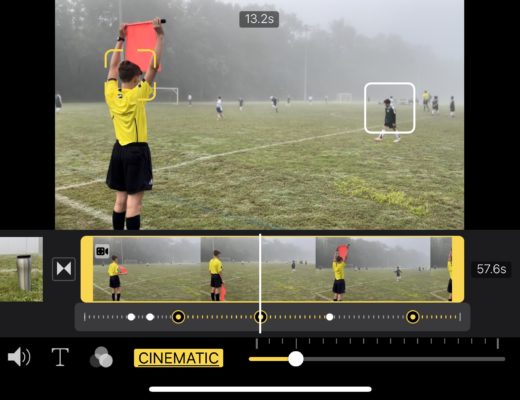

Changing focus after shooting

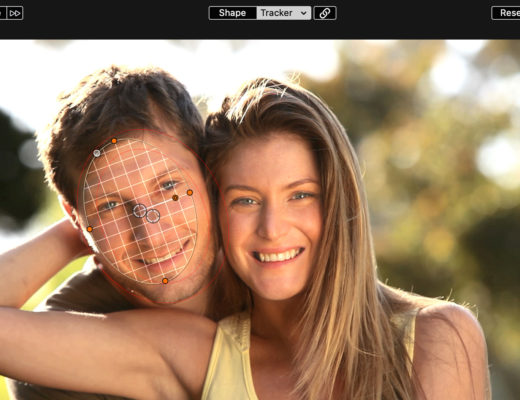

Editing a Cinematic Mode shot on your iPhone or iPad reveals a new interface, effectively introducing “focal point keyframes” along the timeline. Each one connects a point in time to a position in the frame and an aperture. Moving to a time and choosing a new focal point will create a rack focus, but you can also rack aperture without affecting exposure. This decoupling of blur from exposure means you’ve got one less thing to worry about on set, but you can’t ignore focus entirely.
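Apple doesn’t document how the Photos editor stores or interpolates these keyframes, so the following is only a hypothetical model of the idea. Each keyframe (the `FocusKeyframe` type is invented here) holds a start time, a focus distance (assumed to have been read from the depth map at the tapped point) and a virtual f-number, and each new keyframe triggers a linear rack over an assumed 0.5 seconds. Because aperture is just another keyframed value in this model, racking it never touches exposure.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class FocusKeyframe:
    """Hypothetical keyframe, not Apple's actual data format."""
    time_s: float    # when this keyframe takes effect
    focus_m: float   # focus distance, assumed sampled from the depth map at the tapped point
    f_number: float  # virtual aperture used to render the blur


def _lerp(a: float, b: float, u: float) -> float:
    return a + (b - a) * u


def focus_at(keyframes: list[FocusKeyframe], t: float,
             rack_s: float = 0.5) -> tuple[float, float]:
    """Return (focus_m, f_number) at time t.

    Keyframes must be non-empty and sorted by time. Until the second keyframe,
    the first one's values are held; when each later keyframe starts, focus and
    aperture rack linearly from the previous keyframe's values over rack_s
    seconds, then hold. Assumes keyframes are at least rack_s apart.
    """
    times = [k.time_s for k in keyframes]
    i = bisect_right(times, t) - 1
    if i <= 0:
        first = keyframes[0]
        return first.focus_m, first.f_number
    prev, cur = keyframes[i - 1], keyframes[i]
    u = min(1.0, (t - cur.time_s) / rack_s)
    return _lerp(prev.focus_m, cur.focus_m, u), _lerp(prev.f_number, cur.f_number, u)


if __name__ == "__main__":
    keys = [
        FocusKeyframe(0.0, 1.5, 2.8),  # start on a subject 1.5m away
        FocusKeyframe(4.0, 6.0, 2.8),  # rack focus to the background
        FocusKeyframe(8.0, 6.0, 8.0),  # rack aperture only: deeper focus, same exposure
    ]
    for t in (2.0, 4.25, 5.0, 8.25):
        print(t, focus_at(keys, t))
```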

Though an iPhone’s small sensor gives it fairly deep depth of field, it’s not a pinhole camera. If one subject is far closer to the camera than others, you won’t be able to keep everything in focus all the time. Focusing on a near object means that far objects need no digital assistance to fall out of focus; it’s just optics. If you try to focus on them after the fact, you’ll simply reveal their blurriness more clearly. Perversely, this means that if you really want maximum bokeh control in post, the focus in your original shot needs to be pretty deep.
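A quick depth-of-field calculation shows why. This Python sketch uses the standard thin-lens hyperfocal formulas; the camera numbers (a 5.7mm focal length at f1.6 and a 0.005mm circle of confusion) are rough assumptions standing in for an iPhone main camera, not Apple specifications. With them, focusing at 0.5m keeps only about 0.45 to 0.57m acceptably sharp, so a background a few metres away is already optically soft, while focusing at 3m keeps roughly 1.7 to 11.4m sharp.

```python
import math


def dof_limits_mm(focal_mm: float, f_number: float, coc_mm: float, focus_mm: float):
    """Return (hyperfocal, near, far) distances in mm for a thin lens.

    coc_mm is the circle of confusion: the largest blur disk on the sensor
    still judged "sharp". It scales with sensor size.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm >= hyperfocal:
        # At or beyond the hyperfocal distance, everything out to infinity is sharp.
        far = math.inf
    else:
        far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return hyperfocal, near, far


if __name__ == "__main__":
    # Assumed, approximate phone-camera values; not official specifications.
    FOCAL_MM, F_NUMBER, COC_MM = 5.7, 1.6, 0.005
    for focus_m in (0.5, 3.0):
        _, near, far = dof_limits_mm(FOCAL_MM, F_NUMBER, COC_MM, focus_m * 1000)
        print(f"focused at {focus_m} m: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```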

Right now, on iOS, you can choose a new focal point and adjust aperture. Once it arrives on the Mac too, I’m hopeful that we’ll also be able to tweak the depth map to correct issues, either by manual rotoscoping, or by throwing a bit more computing power at the problem.

Computational photography

Though Cinematic Mode is probably the most dramatic example of computational photography that Apple has released, it’s hardly the first. The iPhone punches above its weight because its superior computing power (more than most laptops) allows it to do far more than a typical camera can. It’s shooting several exposures for every frame to increase dynamic range. It’s using gyros to combat shake. It’s making sure that skin tones stay natural even while exposure is balanced across a shot. These aren’t improvements that normal cameras can make at all, and most photo post-processing programs don’t even offer to make them for you.
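Apple doesn’t publish how its multi-exposure pipeline works, but the basic idea of merging exposures for dynamic range can be sketched generically. This minimal Python example assumes a linear sensor response, pixel values normalised to the 0 to 1 range, and frames that are already aligned. It estimates scene brightness from each exposure by dividing by exposure time, then averages those estimates with a triangular weight that trusts mid-tones and ignores clipped or black samples. Real pipelines add alignment, noise modelling and tone mapping on top.

```python
def mid_tone_weight(v: float) -> float:
    """Triangular weight: 1 at mid-grey, falling to 0 for black (noisy) or clipped values."""
    if v <= 0.0 or v >= 1.0:
        return 0.0
    return 1.0 - abs(2.0 * v - 1.0)


def merge_pixel(values: list[float], exposure_times: list[float]) -> float:
    """Estimate relative scene radiance for one pixel from bracketed linear samples.

    values are linear pixel values in [0, 1]; exposure_times are in seconds.
    """
    num = den = 0.0
    for v, t in zip(values, exposure_times):
        w = mid_tone_weight(v)
        num += w * (v / t)  # each usable sample implies radiance = value / time
        den += w
    if den == 0.0:
        # Every sample is black or clipped: fall back to the one nearest mid-grey,
        # which only bounds the true radiance.
        v, t = min(zip(values, exposure_times), key=lambda p: abs(p[0] - 0.5))
        return v / t
    return num / den


if __name__ == "__main__":
    times = [0.01, 0.04, 0.16]  # three bracketed exposures, two stops apart
    true_radiance = 20.0
    samples = [min(1.0, true_radiance * t) for t in times]  # the longest exposure clips
    print(samples, merge_pixel(samples, times))
```

The clipped sample gets zero weight, so the merged value comes entirely from the two exposures that still hold real information.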

While many phones can do smart things with still images, only the iPhone 12 and later offer Dolby Vision HDR video recording, and there are new features every year. Anything available for stills now can reasonably be expected to turn up for video once there’s enough processing power to do it at video frame rates.

That doesn’t mean professionals will get much control over these settings, though; if history is any guide, the built-in options will probably remain fairly simple in scope. Full-fat, professional-level control over color correction is likely to be found only on computers, in post-production. That’s a shame, because only in the field, in real time, can you get the most out of all those extra sensors.

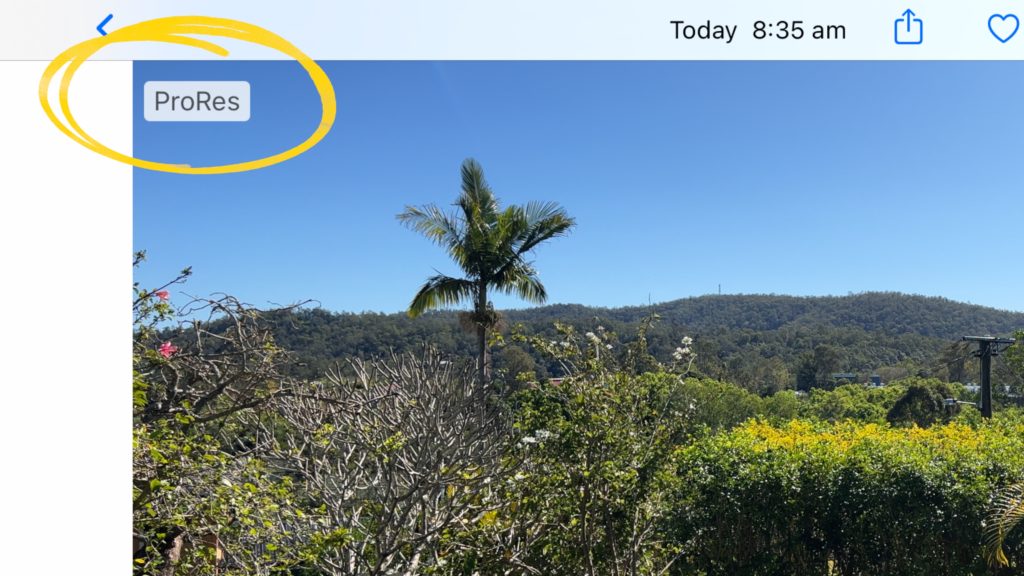

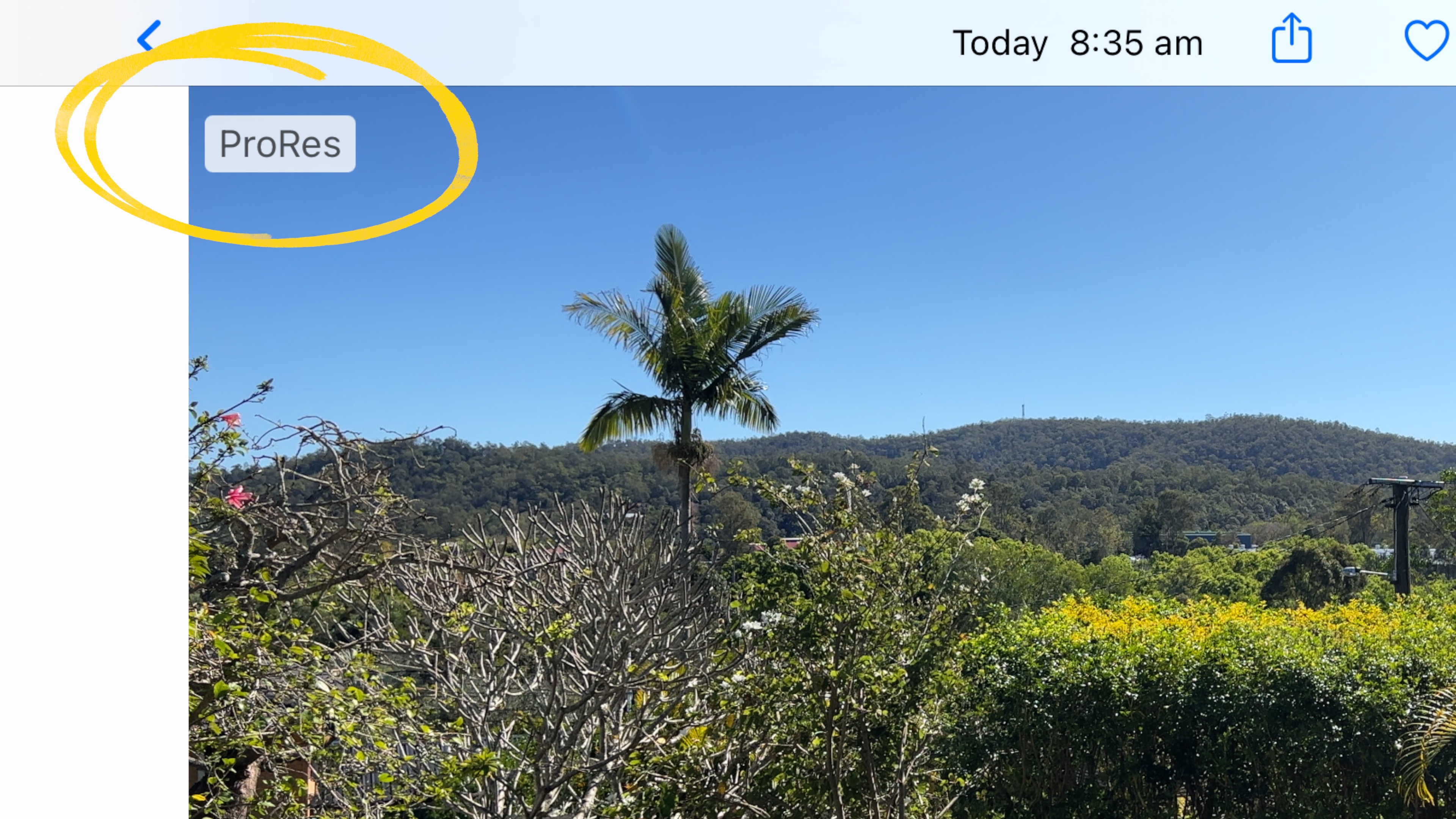

It’s gotten better since the iPhone 12, though. Recording in Dolby Vision does give more leeway for correcting colour, and it’s pretty easy to work with HEVC on modern Macs. ProRes support is also already in the iOS versions of iMovie and Clips, and it’s coming soon to the Camera app too.

Now also available — ProRes

At some point soon, you’ll be able to record ProRes on the iPhone 13 Pro with the standard Camera app, but if you have Filmic Pro, you can try it right now: the update just went live. While this has the potential to open up whole new workflows, be careful with the size of the files you’re making, and make sure to leave time to offload them. From early tests it looks like ProRes makes a pretty clear difference to image quality, though the difference may be less pronounced in a simpler image where compression doesn’t have to work as hard. And yes, it’s still a tiny sensor with only just enough pixels for 4K; my GH5 still produces clearer images, at a much lower data rate than ProRes. Worst of all, without a fast USB-C port, it could take a while to get your clips off your phone if you have to use a cable.
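To put rough numbers on file sizes and offload times, this Python sketch converts a constant bit rate into storage per minute and estimates copy time over a cable. The inputs are assumptions for illustration only: about 700Mbps for 4K ProRes (an assumed ballpark; actual rates vary with resolution, frame rate and ProRes flavour), 100Mbps for a GH5-style 4K codec, and an effective 35MB/s transfer, roughly what a USB 2.0-class connection manages in practice.

```python
def gb_per_minute(mbps: float) -> float:
    """Decimal gigabytes used by one minute of video at a constant bit rate in megabits/s."""
    return mbps * 1e6 * 60 / 8 / 1e9


def offload_minutes(total_gb: float, link_mb_per_s: float) -> float:
    """Minutes to copy total_gb decimal gigabytes at an effective link_mb_per_s megabytes/s."""
    return total_gb * 1000 / link_mb_per_s / 60


if __name__ == "__main__":
    # All rates below are illustrative assumptions, not measured values.
    PRORES_4K_MBPS = 700
    GH5_4K_MBPS = 100
    CABLE_MB_PER_S = 35
    shoot_minutes = 10
    for name, rate in (("4K ProRes", PRORES_4K_MBPS), ("GH5 4K", GH5_4K_MBPS)):
        size_gb = gb_per_minute(rate) * shoot_minutes
        print(f"{name}: {size_gb:.1f} GB for {shoot_minutes} min, "
              f"~{offload_minutes(size_gb, CABLE_MB_PER_S):.0f} min to offload")
```

With these assumptions, ten minutes of 4K ProRes comes to about 52.5GB and roughly 25 minutes of copying, versus 7.5GB for the same ten minutes at 100Mbps.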

To be honest, I don’t have an issue with compressed footage from my GH5, but I’ve always found iPhone footage to be a bit soft, and the cause is split between the low data rate and the small sensor. The 12MP sensor, with around 4000 pixels across, doesn’t allow for oversampling in video capture, resulting in a loss of fine detail across the image. Compounding this, the default data rate for HEVC Dolby Vision iPhone footage is on the low side: less than the 100Mbps minimum on my GH5, and many times lower than standard ProRes. This can smear fine details further, to the point where you can still see it after YouTube upload and recompression. The increased data rate of ProRes is, of course, a double-edged sword, increasing quality while hugely increasing file size, and you’ll need an iPhone with plenty of storage to even consider using it. Unfortunately, the 128GB 13 Pro models will be limited to 1080p ProRes, and given the space requirements, not much of it.
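One way to see why data rate matters for fine detail is to divide it across every pixel of every frame. This sketch computes average compressed bits per pixel at 4K UHD and 30fps; 100Mbps is used as a GH5-style reference, while 700Mbps for ProRes is an assumed ballpark. Bear in mind that HEVC, which compresses across frames, gets far more quality per bit than intra-frame ProRes, so equal bits per pixel would not mean equal quality. The comparison only shows how much less data the encoder has to describe each frame.

```python
def bits_per_pixel(mbps: float, width: int, height: int, fps: float) -> float:
    """Average compressed bits per pixel per frame for a constant bit rate stream."""
    return mbps * 1e6 / (width * height * fps)


if __name__ == "__main__":
    UHD = (3840, 2160)
    FPS = 30
    # Illustrative rates: 100Mbps as a GH5-style reference, ~700Mbps assumed for 4K ProRes.
    for name, mbps in (("100 Mbps", 100), ("~700 Mbps ProRes (assumed)", 700)):
        print(f"{name}: {bits_per_pixel(mbps, *UHD, FPS):.2f} bits per pixel")
    # For scale: uncompressed 10-bit 4:2:2 video needs 20 bits per pixel.
```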

In an ideal world, I’d prefer to just dial in a higher data rate for HEVC rather than deal with the mega-sized ProRes files. But at the moment, it looks like it’s a choice: ProRes or Cinematic Mode, and there’s no confirmation that we’ll be able to use them both at the same time. I’ll definitely still be using a “real” camera for A-roll interviews, and I’ll have to make a choice between 4K ProRes and 1080p Cinematic HEVC for quick iPhone B-roll. But there’s always next year.

Here’s how the GH5 stacks up against HEVC and ProRes on an iPhone 13 Pro, at 4K.

Next year and beyond

Every year, the iPhone’s camera gets a small bump, and sometimes a larger one. Year-on-year those changes might not seem exciting, but they arrive far more often than improvements from the rest of the industry, which moves at a glacial pace. If next year’s iPhone has, as rumoured, a higher-resolution 48MP sensor, then perhaps oversampling will solve the issues around fine detail. That could move the image from “great for a phone” to simply “great”. Maybe we can keep the awesome exposure control but turn down the noise reduction. Maybe Cinematic Mode will work at 4K24. Maybe we’ll be able to shoot straight to a USB-C connected SSD. But maybe not. The iPhone is likely to remain a tool for the masses; a camera always with you.
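Oversampling is easy to quantify: compare sensor width with output width. This sketch assumes a 4032×3024 readout for the current 12MP sensor and 8064×6048 for a hypothetical 48MP sensor (simply double in each direction; the rumoured sensor’s real dimensions and readout modes are unknown), and it ignores any crop for stabilisation. At 4K UHD, the 12MP sensor offers barely 1.05 sensor pixels across for every output pixel, while the 48MP layout would offer about 2.1, or roughly four sensor pixels behind every output pixel.

```python
def oversampling(sensor_width_px: int, output_width_px: int = 3840) -> tuple[float, float]:
    """Return (linear, areal) oversampling when a full-width 16:9 crop is scaled to the output.

    Ignores stabilisation crops and pixel-binning modes.
    """
    linear = sensor_width_px / output_width_px
    return linear, linear ** 2


if __name__ == "__main__":
    # Assumed sensor widths: 12MP at 4032 px wide, hypothetical 48MP at 8064 px wide.
    for label, width in (("12MP", 4032), ("48MP (hypothetical)", 8064)):
        linear, areal = oversampling(width)
        print(f"{label}: {linear:.2f}x across, {areal:.2f} sensor pixels per 4K pixel")
```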

Convenience always wins

The iPhone has always been about convenience, and as it’s become better over the years, it’s displaced real cameras in more and more situations. There will always be traditional camera enthusiasts, just as there are people who still shoot movies on film stock — if you don’t want to change, don’t. But new technology brings convenience along with quality improvements, and the look of older mediums can be imitated.

Digital shooting took a long time to become accepted, but it’s brought huge benefits. You know instantly whether you got the shot; no out-of-focus surprises in tomorrow’s dailies. That’s not just convenience, it’s a huge money saver. Many other improvements have changed the way that movies are shot (green screen, digital grading, VFX) and I’m sure many filmmakers would embrace a smarter camera, no matter how big or small it is.

But as much as most filmmakers (myself included) would love to deliver beautiful 4K images all the time, many clients today still only want pretty 1080p images. If your target is social media, the majority of your potential audience is on phones, where the difference between 4K and 1080p is much harder to see. It’s ironic that the device making convenient 4K video capture possible is also the device making it less important.
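A quick calculation backs this up. The iPhone 13’s display is 2532×1170 pixels, so a full-screen 16:9 video held in landscape is shown at most at 2080×1170, whatever its source resolution. This sketch (which ignores zooming to fill the screen, and viewing distance) shows 4K being scaled down and 1080p scaled up to the same on-screen size.

```python
def fit_to_screen(src_w: int, src_h: int, screen_w: int, screen_h: int) -> tuple[int, int]:
    """Largest size that fits the screen while preserving aspect ratio (letterboxing)."""
    scale = min(screen_w / src_w, screen_h / src_h)
    return round(src_w * scale), round(src_h * scale)


if __name__ == "__main__":
    SCREEN = (2532, 1170)  # iPhone 13 display, held in landscape
    for name, size in (("4K UHD", (3840, 2160)), ("1080p", (1920, 1080))):
        w, h = fit_to_screen(*size, *SCREEN)
        print(f"{name} is displayed at {w}x{h}")
```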

Conclusion

If computational photography means you never have to compromise on performance because of focus or lighting issues, I can see smarter cameras taking over, so long as the image is good enough. Give Apple a few years, and the iPhone could easily displace many more mid-range cameras. It already has in many cases, and we can expect public perception to shift further.

Video professionals won’t be out of a job, though. They can still bring additional gear and experience with lighting, framing, and audio to produce a higher quality result — even if they have the same base camera that the client owns themselves. And this is nothing new! Cameras have always gotten better, and consumer cameras have always been just a few years behind the professional ones. This time around, the computational improvements of Apple’s chips mean that the consumer iPhone is much smarter than a professional camera.

To be clear, pro cameras will always be ahead of the iPhone on many metrics, but we may reach a point where the pro cameras aren’t far enough ahead to outweigh their disadvantages. We live in interesting times, and it’ll be fascinating to see what happens next.
