A Chrome extension now adds a 3D photo effect to Instagram pages. It all started with an experiment by a team of researchers from Virginia Tech, National Tsing Hua University and Facebook.

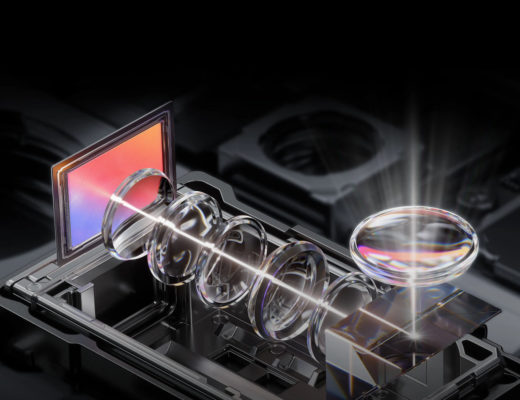

Three-dimensional photography used to require special equipment and sophisticated processing, but more recently it has become more accessible through some smartphones and dedicated apps. The authors of the newly published study also note that “In the most extreme cases, novel techniques such as Facebook 3D Photos now just require capturing a single snapshot with a dual lens camera phone, which essentially provides an RGB-D (color and depth) input image”.

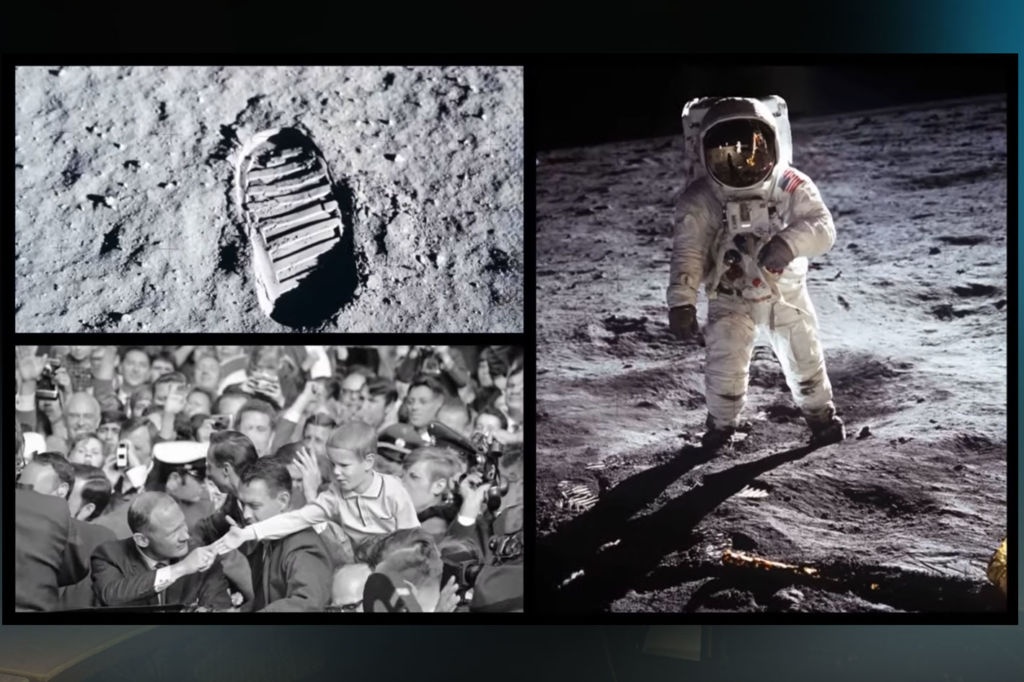

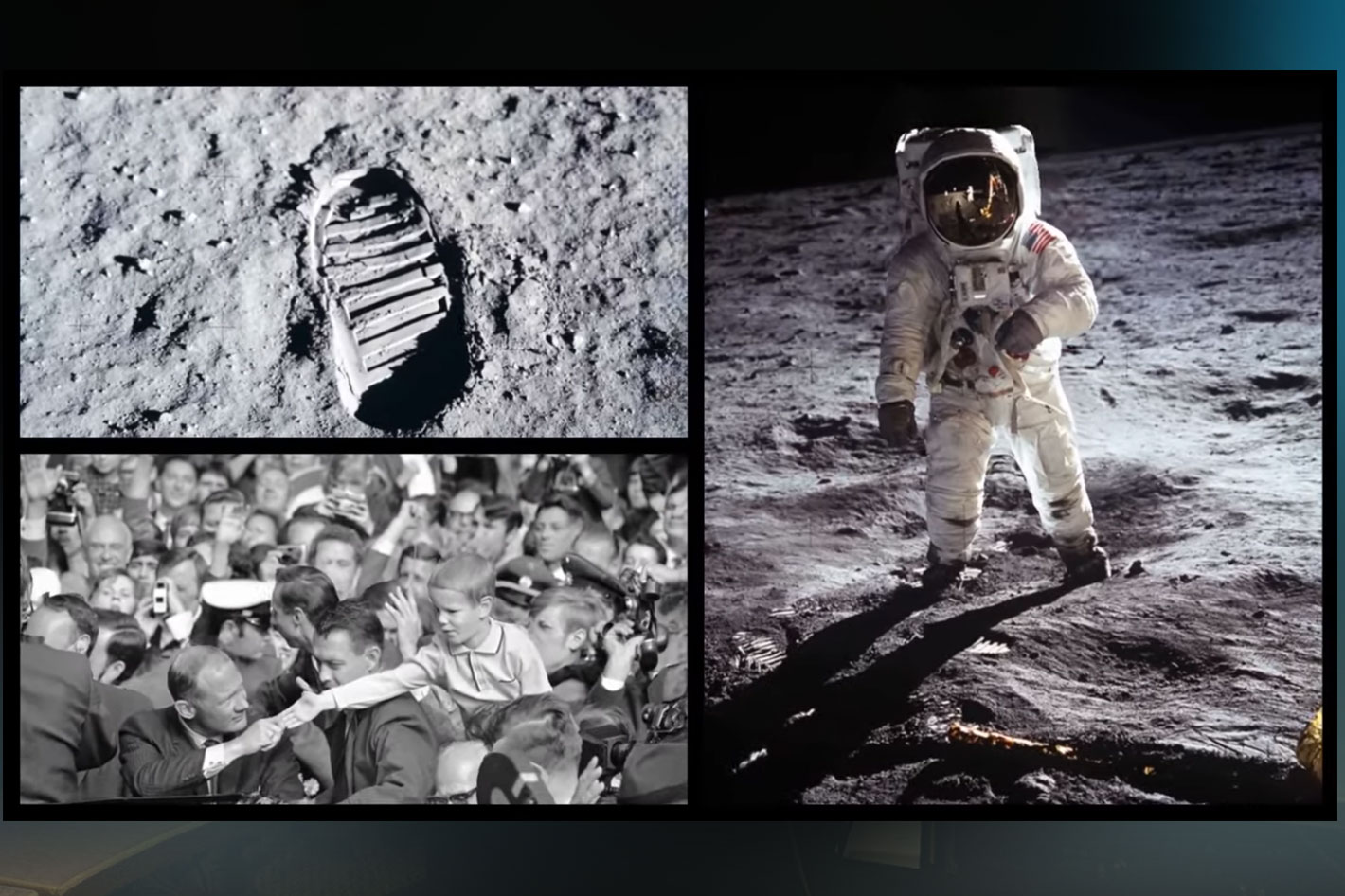

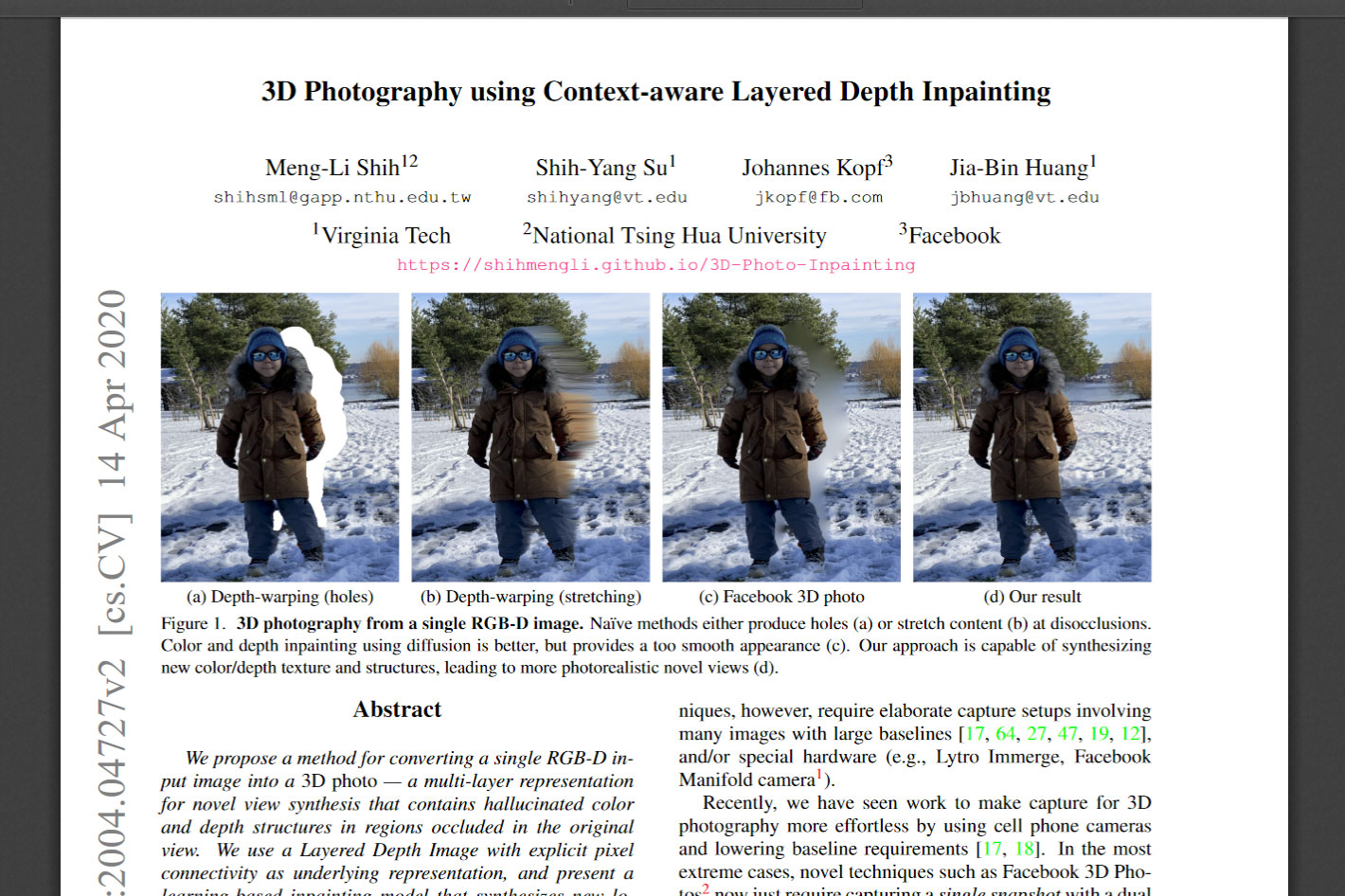

The paper “3D Photography using Context-aware Layered Depth Inpainting”, published this April by researchers from Virginia Tech, National Tsing Hua University and Facebook, presents another way to obtain a 3D photograph: converting a single RGB-D input image into a 3D photo. The team developed a deep learning-based image inpainting model that can synthesize color and depth structures in regions occluded in the original view.

A single RGB image as source

What’s interesting about the new method is that it accepts either an RGB-D image from a cellphone or a single RGB image. “Classic image-based reconstruction and rendering techniques require elaborate capture setups involving many images with large baselines, and/or special hardware,” the researchers stated in their paper. “In this work, we present a new learning-based method that generates a 3D photo from an RGB-D input. The depth can either come from dual-camera cell phone stereo or be estimated from a single RGB image.”
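To make concrete what an RGB-D input provides, here is a minimal sketch of how a color image plus a per-pixel depth value can be lifted into colored 3D points. It assumes a simple pinhole camera model; the function name `unproject_rgbd` and the focal length and principal point values are hypothetical choices for illustration, not taken from the paper.

```python
from typing import List, Tuple

Color = Tuple[int, int, int]
Point = Tuple[float, float, float, Color]


def unproject_rgbd(
    rgb: List[List[Color]],
    depth: List[List[float]],
    focal: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Lift every pixel of an RGB-D image to a colored 3D point.

    Assumes a pinhole camera with a single focal length (in pixels) and a
    principal point (cx, cy). These intrinsics are illustrative assumptions.
    """
    points: List[Point] = []
    for v, (rgb_row, depth_row) in enumerate(zip(rgb, depth)):
        for u, (color, z) in enumerate(zip(rgb_row, depth_row)):
            # Scale the pixel offset from the principal point by depth.
            x = (u - cx) * z / focal
            y = (v - cy) * z / focal
            points.append((x, y, z, color))
    return points


# Toy 2x2 image: the top row is near (depth 1.0), the bottom row is far (2.0).
rgb = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]
depth = [
    [1.0, 1.0],
    [2.0, 2.0],
]
points = unproject_rgbd(rgb, depth, focal=1.0, cx=0.5, cy=0.5)
```

Points lifted this way only cover what the camera actually saw. Moving the virtual viewpoint exposes gaps behind foreground objects, which is the part the inpainting model described in the paper is meant to fill.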

Compared to previous state-of-the-art approaches, the method, which is based on a standard CNN, shows fewer artifacts during the image conversion process. If the subject interests you, follow the link to download the full paper, 3D Photography using Context-aware Layered Depth Inpainting, a 15-page PDF that explains the method in detail.

In the paper the researchers present an algorithm for creating compelling 3D photography from a single RGB-D image. As stated, the “core technical novelty lies in creating a completed layered depth image representation through context-aware color and depth inpainting. We validate our method on a wide variety of everyday scenes. Our experimental results show that our algorithm produces considerably fewer visual artifacts when compared with the state-of-the-art novel view synthesis techniques. We believe that such technology can bring 3D photography to a broader community, allowing people to easily capture scenes for immersive viewing”.

Turn Instagram photos into 3D images

“Unlike most previous approaches, we do not require predetermining a fixed number of layers. Instead, our algorithm adapts by design to the local depth-complexity of the input and generates a varying number of layers across the image,” the researchers stated. “We have validated our approach on a wide variety of photos captured in different situations.”
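The idea of a varying number of layers per pixel can be illustrated with a small data structure. This is a minimal sketch, not the authors' implementation: the class `LayeredDepthImage` and its methods are hypothetical names, and the sketch ignores everything else a real system would need, such as relationships between neighboring samples and rendering.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Color = Tuple[int, int, int]
Sample = Tuple[float, Color]  # (depth, color)


@dataclass
class LayeredDepthImage:
    """Toy layered depth image: each pixel holds a list of depth samples.

    No global layer count is fixed in advance; a pixel gains extra samples
    only where the scene needs them.
    """

    width: int
    height: int
    layers: Dict[Tuple[int, int], List[Sample]] = field(default_factory=dict)

    def add_sample(self, x: int, y: int, depth: float, color: Color) -> None:
        if not (0 <= x < self.width and 0 <= y < self.height):
            raise IndexError(f"pixel ({x}, {y}) is outside the image")
        samples = self.layers.setdefault((x, y), [])
        samples.append((depth, color))
        samples.sort(key=lambda s: s[0])  # keep samples ordered near to far

    def layer_count(self, x: int, y: int) -> int:
        return len(self.layers.get((x, y), []))

    def front(self, x: int, y: int) -> Sample:
        """Return the sample closest to the camera at this pixel."""
        return self.layers[(x, y)][0]


# A 3x1 strip: every pixel has the surface seen in the input photo.
ldi = LayeredDepthImage(width=3, height=1)
ldi.add_sample(0, 0, depth=5.0, color=(90, 140, 200))  # background
ldi.add_sample(1, 0, depth=1.0, color=(200, 60, 60))   # foreground object
ldi.add_sample(2, 0, depth=5.0, color=(90, 140, 200))  # background

# Only the pixel covered by the foreground gets a second, hidden sample,
# standing in for background content synthesized by inpainting.
ldi.add_sample(1, 0, depth=5.0, color=(90, 140, 200))

counts = [ldi.layer_count(x, 0) for x in range(3)]
```

In this toy example `counts` differs per pixel, which mirrors the quoted point that the number of layers adapts to local depth complexity rather than being fixed for the whole image.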

The model was trained using an NVIDIA V100 GPU with the cuDNN-accelerated PyTorch deep learning framework. The model can be trained using any image dataset without the need for annotated data. For this project, the team used the MS COCO dataset, with the pretrained MegaDepth (MegaDepth: Learning Single-View Depth Prediction from Internet Photos) model first published by Cornell University researchers in 2018.

According to NVIDIA Developer News, to show the potential of the project, a separate researcher from Google has taken the code and developed a Chrome extension that turns every Instagram post into a 3D image. Developers interested in trying the extension can follow the instagram-3d-photo tutorial to set up and run the project using NVIDIA GPUs on the Google Cloud Platform.
