Panels, live sessions and tech demos on immersive graphics, real-time virtual production, 3D rendering and more will be presented at the Media & Entertainment sessions during GTC 2019.

From Lucasfilm’s ILMxLAB sharing its experience of how virtual and augmented reality provide new ways to tell stories, to how Pixar uses GPUs to enable art and creativity in animated and live-action films, Nvidia’s next GPU Technology Conference will showcase the latest developments in artificial intelligence and other advanced technologies, and how media and entertainment professionals rely on them.

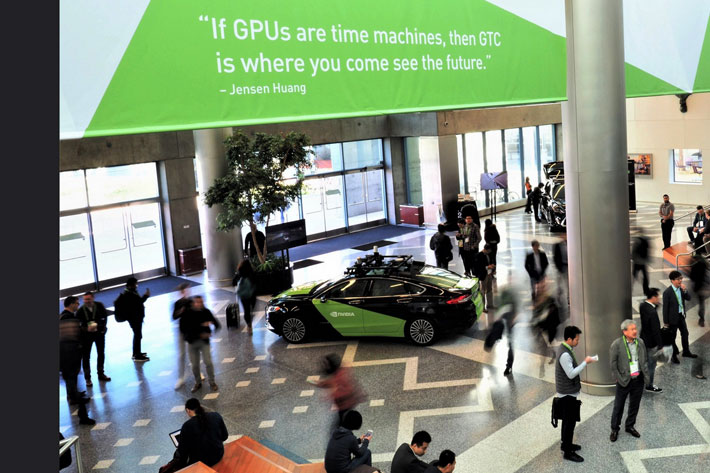

These new tools are being used in everything from content creation and 8K HDR video production to real-time ray tracing with physically based materials, creating complex effects and animations for Emmy-winning TV shows and Academy Award-winning feature films. The Media & Entertainment sessions at the GPU Technology Conference, March 18-21 in San Jose, will cover how to develop and create effectively with today’s storytelling tools.

Real-time photorealistic rendering

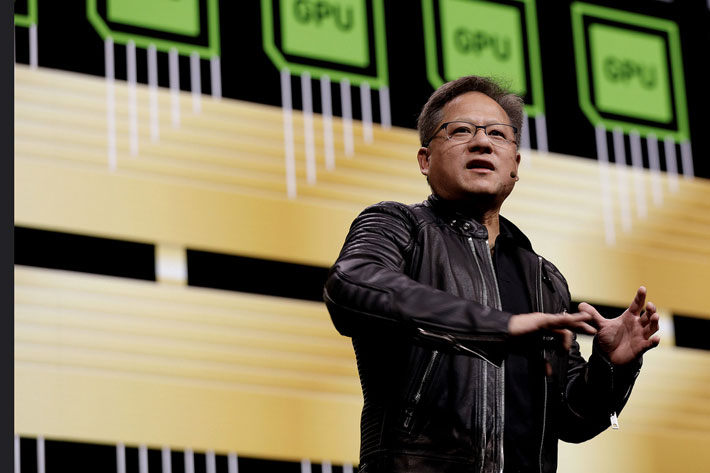

As covered in multiple articles here at ProVideo Coalition, game engines have transformed production workflows. From game engine-powered virtual production to interactive characters and deeply engaging experiences, media and entertainment have changed drastically over the last few years. Graphics cards play an important part in this transformation, and Nvidia is using this year’s conference to promote its new RTX technology. RTX is a platform that fuses ray tracing, deep learning and rasterization, built on the Nvidia Turing GPU architecture and supported by industry-leading tools and APIs, with the aim of fundamentally transforming the creative process for content creators and developers.

With the introduction of RTX graphics cards we are at the start of a new path, and Nvidia promises that “applications built on the RTX platform bring the power of real-time photorealistic rendering and AI-enhanced graphics, video and image processing, to enable millions of designers and artists to create amazing content in a completely new way.” That is what this conference is all about, across the many areas where AI and the new technology are being used. For filmmakers, though, the sessions related to storytelling will offer a crucial glimpse of the future.

A look towards the future

GTC attendees will, according to Nvidia, “gain insight into the world of filmmaking and visual effects through sessions that delve into new techniques in immersive graphics, real-time virtual production, 3D rendering and much more”. It’s a unique opportunity to “learn more about immersive storytelling and real-time ray tracing from industry luminaries” and “find out how studios are incorporating advanced technology to produce stunning visuals”.

Unless you have the gift of ubiquity, it will be hard to follow all the events happening during the conference, so here are some suggestions for sessions you should not miss.

On Tuesday, March 19, Vicki Dobbs Beck, Executive in Charge at ILMxLAB, will present “The Journey to Immersive Storytelling”, in which she will show how emerging technologies such as virtual and augmented reality offer powerful new ways to tell stories. “By enabling us to be in these worlds and connect with characters,” she says, “we can transport people to places and times in history as never before. We’ll discuss ILMxLAB’s quest for sustained innovation and its journey of discovery since its launch more than three years ago. We’ll also provide a look toward the future.”

Genesis and mixed reality

For a glimpse of the latest technologies in filmmaking, there is another session the same day titled “Genesis: Real-Time Raytracing in Virtual Production”. The speakers will be Marco Giordano, Senior Developer at MPC Film; Francesco Giordana, Realtime Software Architect at MPC Film; and Damien Fagnou, SVP Technology & Infrastructure, Production Services at Technicolor. The session presents Genesis, MPC’s virtual production platform, designed as a robust multi-user distributed system that incorporates both modern technologies like mixed reality and more traditional techniques like motion capture and camera operation via encoded hardware devices.

During the session, the speakers will talk about how they are improving the quality of the real-time graphics, with special attention to lighting, and explain how they have started incorporating elements of real-time ray tracing into the platform: a live link to RenderMan XPU, their own OptiX-based path tracer, and a hybrid approach based on DXR running inside Unity.

Pixar and Technicolor

The following day, March 20, there is also room to discover other uses for the latest technology in filmmaking, in a session named “Prism & RTX”, presented by Damien Fagnou, SVP Technology & Infrastructure, Production Services at Technicolor, and Victor Yudin, Lead Software Developer at Mill Film. The session will introduce Prism, a Technicolor initiative to produce a high-end OptiX-based path tracer for fast previews of elements, shots or sequences. It incorporates open source technologies like Open Subdivision Surface, Open Shading Language and Pixar USD to achieve a high level of fidelity and realism. The speakers will explain why they chose to develop a modern GPU rendering system and the advantages of using it in combination with RTX graphics cards.

Pixar uses GPUs to enable art and creativity in animated and live-action films. Max Liani, senior lead engineer for RenderMan at Pixar Animation Studios, where he leads rendering technology research, will talk about the company’s experience in “Adding GPU Acceleration to Pixar RenderMan”. The session, on March 19, will be the ideal place to discuss photorealistic rendering in modern movie production and to present the path that led Pixar to leverage GPUs and CPUs in a new scalable rendering architecture. It’s the right place to find out more about RenderMan XPU, Pixar’s next-gen physically based production path tracer, and how the company solves the problem of heterogeneous compute using a shared code base.

Creating Thanos in Avengers

Max Liani will also take part in another session, “The Future of GPU Ray Tracing”, alongside other experienced professionals. Before joining Pixar, Max Liani was lighting technology lead at Animal Logic, where he created the Glimpse production renderer used in The Lego Movie franchise. Earlier, he worked in the film industry for two decades as a CG artist, lighting supervisor and engineer.

On March 20 there is also a session named “Event-Driven Human Performances Using NVIDIA Technology”, where Aruna Inversin, Creative Director & VFX Supervisor at Digital Domain, will share how Digital Domain and Nvidia create compelling digital characters for feature films and real-time performance events. Participants will learn the history of some digital characters from Digital Domain’s library and see compelling behind-the-scenes footage. From Tupac at Coachella to the machine learning algorithms that helped drive Thanos in Avengers: Infinity War, Digital Domain is pioneering the use of machine learning to drive real-time performances, both on-stage and off.

GPU ray tracing is the future

“The Future of GPU Ray Tracing”, the panel mentioned above, runs on March 19. It looks into the future of GPU rendering through the words and examples of leading game-engine and GPU ray-tracing technologists from Pixar, Autodesk, Chaos Group, Redshift, Otoy and Nvidia, who will discuss how GPU ray tracing is transforming the creative process in design, animation, games and visual effects.

Nvidia’s 2019 GPU Technology Conference (GTC), a global conference series providing training, insights and direct access to experts on the hottest topics in computing today, has much more to offer. Still, these sessions, a selection from the media and entertainment program listed on the event’s webpages, give an idea of the importance of the meeting.