In the work-world of movie-making, our community has become accustomed to an eighteen-month product release cycle marked by the calendar of trade shows. In the constant stream of technological product announcements, “technology” really means “new technology”. Gadgets are announced, showcased and promoted. Some new devices are useful, some are immediately dismissed, and others live a marginal life as an interesting curiosity that never quite finds a niche. Deciding whether something was innovative is often a matter of hindsight.

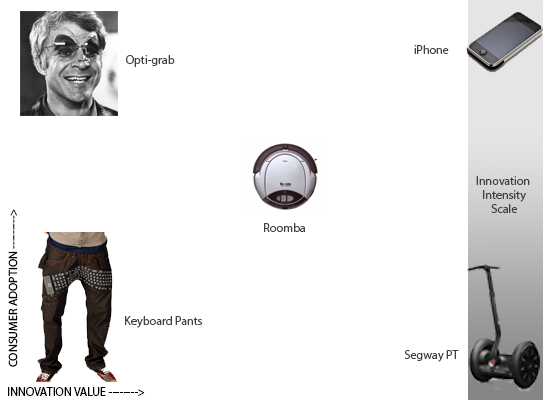

I map these products on my own personal utility metric, which I call the “Segway-iPhone Innovation Intensity Scale”. I measure a device's effect rather than its hype, because the hype often has little bearing on how widely the public adopts a device or whether it's actually useful. The X axis charts “innovation”, while the Y axis maps the “consumer adoption” rate. By plotting the relative innovation-intensity positions of new gadgets, a line can be drawn between two iconic devices, the Segway PT and the iPhone, hence the name of my scale.

At the bottom of the scale is Dean Kamen's personal transportation device, the Segway PT, a product that had more hype than nearly any new device in modern memory before it was released in 2001. The Segway was met with a chorus of bewildered laughter, and with questionable utility for the masses, the device hasn't succeeded beyond the niches of mall cops and tourists. (Search “Segway Fail” on YouTube. You're welcome.) The one thing not in question is the device's innovation: there is simply nothing else like it in the world of transportation devices. At the top of the scale is Apple Inc.'s iPhone, released in June 2007 and much rumored, hyped and speculated about beforehand. The iPhone was innovative and useful, and you probably have one in your pocket right now (or you're reading this article on it).

Which brings me to the latest gadget to receive boatloads of hype, the Leap Motion Controller from Leap Motion. The Leap is a small USB peripheral that brings markerless, real-time motion capture to your computer. It's similar in concept to the Kinect camera for the Xbox, but it tracks hand and finger movement rather than full bodies, and it senses depth with a stereo pair of infrared cameras rather than the Kinect's projected infrared pattern. The Leap was announced in June 2012 and I immediately got on the pre-order list. I was smitten by the hype and by years of subliminal conditioning from modern science fiction films to expect an entire universe of devices controlled by a wave of my hand.

Before opening up my Leap, I had convinced myself that waving my hands around in front of my computer would be a more efficient and natural way to edit video. Surely the inherent non-computeriness of hand-gestures-in-the-air made it a more human way of interacting with a machine. In all this anticipation of the future, I never really considered that any way I, a human, interact with my computer is inherently a human interaction. That's the snag. The keyboard, mouse and trackpad are human input devices, each with its own quirky history. No one device is a more or less natural way of interacting than any other.

The idea that learning a set of distinct hand-choreographies (gestures) would somehow make working with my computer easier should have set off my hype alarm. Motion-capture gestures are an interesting, and sometimes fun, way to do something different with your computer, but they're not a better way to work. They're not about to sweep your keyboard, mouse and trackpad into the dustbin of technical history. Nor should you expect this technology to upend NLEs, color grading suites or mixing consoles anytime soon. Editors, colorists and sound mixers aren't complaining a ton about how clumsy their input devices are, and in all fairness, the Leap was not designed with this community's needs specifically in mind. According to Wikipedia, the Leap began as a response to a workflow frustration in 3D modeling and animation (although currently there is only one plug-in for one 3D app in the Leap store), but it is now positioned as a device for general computing. The largest single category of apps in the store is “Games”.

Setting Up The Leap for Premiere Pro CS6

While a plug-in for Leap control of FCPX is in development, it's not out in the wild yet. So, armed with BetterTouchTool v0.97 (the free, recently Leap-enabled gestural control app for Mac OS X), my Leap and a 13″ MacBook Air, I embarked on my voyage into the future of video editing. Since no one has released a comprehensive, app-specific set of gestures for Adobe Premiere Pro CS6 (I tried using FCPX, but it kept crashing my machine with the Leap connected), I created my own. I mapped a dozen of the 25 possible gestures (aka hand-choreographies) to whatever actions seemed most logical, for example a five-finger swipe right to insert a clip from the viewer into the timeline.

Once everything was configured, I printed out my list of gestures so I wouldn't forget them, and set about putting together a quick show in the timeline. I wanted to 1) open a project, 2) select clips from a bin, 3) set in and out points, 4) drop clips into a timeline and 5) play back the show full screen.

This is a very frustrating way to edit video on a computer. It can be done, and it sort of works, but it's slow. Even after many tiny adjustments to the Leap's placement, the room lighting (the device is sensitive to IR), tracking speed and other settings, I could only slightly improve how efficiently I executed actions. While the Leap is a remarkable piece of technology, I still think motion-capture gestural input is very early in its development. The technology has a couple of hurdles to clear, the first being haptic feedback. Even the smoothest physical device or control surface provides some resistance to let your brain and body know where you are in relation to the rest of the Universe. We evolved with stuff in our hands; waving at imaginary objects in mid-air is disconcerting, difficult to master and far from natural.

One possible solution is what the Leap developer community calls “table mode”: flipping the Leap on its side so it tracks your hand movement across a solid surface, a tabletop solution. With the right alignment, I could see some smart developer bringing digital life back to an old typewriter with a keyboard table-mode app, as an alternative to those expensive hipster typewriter mods. Or mash it up with a pico projector and turn any surface into a “Surface”. Applications like these would give you something physical to interact with rather than abstract “gestures” to learn.

Which brings me to the next hurdle: the gestures. In my experience, and despite the Leap's obviously high resolution, the most reliable gestures right now are the broadest movements. Broad, sweeping hand movements are also the opposite of why we use keyboards, mice and trackpads in the first place. A wave of the hand is really an arm movement: it's slow, wastes time and energy, and takes me away from the work on the machine. Again, it's clear that the Leap is capable of tracking very small, precise and subtle gestures, so I look forward to the day when that capability is available more broadly. Right now, Leap video editing is less Minority Report and more waving your arms around in front of your computer, trying to make things happen that you could do more easily with the tools you already have.

Where would I place Leap on my chart?

The Leap is innovative; there is no question in my mind. How well motion-capture user input will catch on is the real question, and it depends on a lot of factors. The Leap has only been available to the public for a month, works best in dimly lit rooms, has only a modest number of non-gaming apps in its app store and still operates like a product in beta, so it's too early to tell how widely this technology will be adopted. Leap Motion has partnered with the computer and display manufacturer ASUS to build Leap units directly into new products. It will be interesting to see what these new machines can do with the unit integrated directly into their design. I am also excited to see what the developer and hacking communities will do as the underlying software matures and evolves.

For me, the “killer app” for motion-capture gestural control would be a device that did not rely on learning a series of abstract, choreographed gestures. Instead, when you reached for something on the screen, your brain would be convinced that your hand had actually grabbed it. I don't think it's a case of waiting for holograms on the laptop (that may take a while) or haptic feedback gloves (those have been tried). I'm not sure what that kind of truly intuitive and natural UI would look like, but the Leap is a step in that direction.