In an exclusive edition of Editors on Editing, Glenn Garland interviews the editorial team behind the new blockbuster, Avatar: The Way of Water. Read the transcript or subscribe to our podcast, “Art of the Frame.”

AVATAR: THE WAY OF WATER

Glenn: Welcome to the Editors on Editing podcast in collaboration with American Cinema Editors and Pro Video Coalition. I’m Glenn Garland and I’m joined by James Cameron, Stephen Rivkin, and John Refoua.

James’ credits as director, writer, and producer are legendary. As editor, his credits include Titanic, for which he was co-nominated for the BAFTA and won the Eddie and the Oscar, and Avatar, for which he was nominated with Steve and John for the Eddie, BAFTA, and Oscar.

Steve’s credits include My Cousin Vinny; The Hurricane; Ali; Pirates of the Caribbean: The Curse of the Black Pearl, for which he won the Eddie; the two Pirates sequels, Dead Man’s Chest and At World’s End, for which he was co-nominated for the Eddie; Avatar; and Alita: Battle Angel.

John’s credits include Reno 911, CSI: Miami, Avatar, Olympus Has Fallen, The Equalizer, Southpaw, The Magnificent Seven, and Transformers: The Last Knight.

Now they have collaborated to bring us one of the most mind-blowing epics of the year: Avatar: The Way of Water. Jim, John, Stephen, it’s such a pleasure to have you guys. I just loved what you guys did with Avatar. I was blown away.

Jim: Oh, thanks.

Glenn: Thank you so much for joining me.

Jim: It’s our pleasure.

Stephen: I want to add: There’s a missing member of our crew, David Brenner, who we lost last February. And he is very much here with us today.

Glenn: Yes. I wanted to talk to you a little bit about that. There was a beautiful mention of him at the end of the film. He was an amazing editor, a beautiful man. It’s a real loss.

Jim: He was a critical member of the team. And actually, some of the most interesting and difficult scenes in the film just happened to fall on his plate.

It might be good to just get an overview of the process so that some of the things we mentioned or talk about have a context. I think it behooves us to talk about how our process diverges—and is similar.

Stephen: Yeah, I’ve got to say, there is a point where making a movie like this becomes like making other movies, but everything that comes before that is completely different. All of the performance capture that’s based on reference cameras of the actors, the edit that goes along with it, and all the preparation for virtual photography are quite unique to our workflow. That preliminary process can take years, and only when Jim picks up the virtual camera does it become more like a conventional edit.

The basics of performance capture and building a cut using the reference cameras were something we perfected by the time we finished Avatar 1. Jim likes to joke that by the time we were finished, we had figured out what we were doing.

Jim: Technically, the quote is, “The only time you’re qualified to make a movie is when you’ve just finished it.”

Stephen: That’s right. So I thought we were going to take all that knowledge and things were going to be easier on Avatar 2. Well, of course, Jim wrote a script that involved many, many more characters. And combining live action with virtual characters complicated the process exponentially.

But that’s getting a little ahead of the game. So for now, I’ll just say that the basic performance edit is John, David, myself and Jim, looking very carefully at the reference cameras. Now, these are reference cameras of the actors’ performances. They are not shots, but they are reference cameras.

And most of the time, every camera would be assigned to a character. They would shoot a big close-up of their face because it is essential to have good facial reference of what’s being recorded in the volume. Now, the volume is a big studio stage that’s empty, and these actors are sort of black box acting.

It’s Jim and the actors, not worried about shots or dollies or lighting or anything. It’s purely the director-actor relationship, right there. And when we get into virtual photography, it’s very liberating, because he doesn’t have to concern himself with the performance; we have already picked the very best from every actor.

Jim: From a directorial standpoint, I understood very early on that the reference cameras were absolutely critical. Our reference camera team on Avatar 2, I should point out, was 16 camera operators.

Glenn: Oh wow.

Jim: We always shot between 12 and 16. These were HD video cameras with very long zoom lenses so that these guys could zoom in. Typically they would be handheld, and they would move around as a very agile crew, limited only by their cabling.

It was the funniest thing, because I’d come in in the morning and rehearse with the actors. We’d just kind of noodle around for a while. And then once I started to lock in on a staging, the second I defined an axis, they would all move like a flock of geese to one side of that line and start picking off the angles they knew were going to be needed. Somebody would grab what we called the stupid wide, just so that we had a reference point for everybody. But the key was that I knew, standing there as a director, that later, wearing the editor hat, if I didn’t have that closeup I wouldn’t be able to evaluate the actor’s performance. And this is all about evaluating a performance that would later run through a big, complicated system and come out the other end as the actor’s performance translated into a CG character.

So my role there as a director was not only to get the performances, but to make sure that they were shot in a way that the editorial department could use. And the critical thing to understand here is I’m not shooting the final images for the scene at all.

I’ve got that in the back of my mind. It’s informing what I’m doing. But that’s not the goal. The goal is to preserve those performances in an edit friendly way, so that we can look at them in the cutting room, see where everybody was, see what they were doing, and have a tight closeup of every single split second of the actor’s work.

Glenn: Yeah. And the performances are so excellent throughout. I know that sometimes people get worried about mocap not feeling real, but there were a lot of emotional scenes and you really felt for the characters.

Jim: Thank you.

John: Yeah. I think one of the things that’s really important to recognize in this movie—all these movies—is that the actor’s performance is what drives everything. It’s a one-to-one—as much as possible—relationship between what the actor did on the empty stage and what you see on the screen with all the effects going and everything like that.

And, for everything that you see on the screen, there was an actor that did that.

Glenn: So nothing’s manufactured.

Jim: No, no.

John: It’s not animated. I mean, the animation is part of the process of all the backgrounds and all that, but the actors’ performances are a genuine one-to-one ratio with what the actors did.

Glenn: Fantastic.

Jim: Animation plays a huge role in the movie when it comes to the creatures: the flying creatures, the swimming creatures, how they jump, how they move, the forest creatures, all that.

That’s pure keyframe animation. But anything that one of the humanoid characters is doing, any one of the Na’vi or the Recoms, that was performed by either an actor, or a body double, or a stunt double, and it’s a human performance no matter how outlandish the action appears to be.

It’s a human performance.

Glenn: Yeah, I’m sure that’s why it feels so real and so emotional.

Jim: Exactly.

Stephen: After a very careful analysis of all the dailies of the reference cameras, we build an edit that represents that scene. And we’ll do it with a combination of just cutting the reference—as Jim said, the dumb wide shot—just to show where everybody is.

And then we’ll use those cowboy-size shots and closeups to try to emulate an edit of the scene. We put sound effects and music in and everything, and try to build something that Jim can look at and say, “Okay, let’s turn that over to our internal lab for processing.” It may involve combining one actor from take one with another from take five… It may involve stitching different takes together to create something that represents the very best of everything. There is one virtual camera that Jim usually shoots during the capture process that shows the environment and the characters in their virtual form. So we pepper all this stuff together to create an edit for each scene.

John: Yeah, I mean, it took us a few months to figure out what the hell was going on…

Jim: The thing is that you got 16 cameras operating. So what I’m seeing on stage is what we call a matrix.

And the matrix is basically a multi-screen of 16 images. I have to look all over that 16-image matrix to follow a given character, and then we have to go through that process again in the cutting room.

So, the very first step is to look at that matrix, and then the assistants will break it out into individual quads where you can see four images. We’ll go through the quads and look at performance playback, basically. At this point we’re just looking at raw performance coverage. Then I make all my selects: “Okay, I like what Sam did there. I like what Zoe did there.” And then maybe we’ll have a discussion, and this will be done as a group with Steve and John and David. And we’ll say, “Well, we could maybe stitch Sam to Sam.”

Like, “We really liked the way he did the first five lines. The scene started strong in take three, but then in take five he did this amazing thing. Let’s see if we can find a place to stitch Sam together across those.” And then of course it’s up to everybody to figure out the logistics of keeping it all in sync so that the other actors who might also be from different takes are in a reasonable sync with each other.

This is similar to what you do when you cut normal picture, where you’re using an actor from different takes to create the full scene. But we’re doing it in a way that is shootable, so that in theory I could come in and do it as a one-er. Right? In theory I could come in and do some Steadicam shot that just never stops.

And Steve may say, “Well, that closeup is so good, that wants to be a closeup moment.” And if I’m willing, well before the fact, to commit to that being a closeup, that frees us up to create a submaster on either side of that closeup that could be done as a one-er up to that point, and then as a one-er after that point. And that takes pressure off us trying to build these hellacious constructions.

All the constructions are truthful in the sense that we don’t mess with what the actor did, but we try to order it in a way that we’re getting the best out of the actor. And by the way, you might have six actors in a scene—like that beach scene that had all those characters in it.

Glenn: And those 16 cameras are capturing those six actors at that time.

Jim: Yep.

Stephen: You can imagine the logistics of accounting for every character in a section when they come from different takes, and making sure that they relate to each other with the same timing they had in the original native take.

Imagine it as a multi-track, and each character has their own track and you’re trying to line them up to each other. Now, Jim has the ability to slip their relationships downstream. If he wants an answer to a line to come quicker, he can actually manipulate the time.

Jim: When you’re in an over and the actor took a long time to respond, you cheat a line onto the back of somebody’s head to tighten up the timing. It’s the same thing. You know, like taking a three second pause out of a scene.

Glenn: It almost sounds like three dimensional chess…

Stephen: Yeah.

Glenn: …to figure this out.

Jim: It’s four dimensional chess.

John: Yeah, we haven’t even gone through some of the other stuff that’s going to happen once we get past this stage of the process.

Stephen: So, downstream, let’s say months later, after all of this preliminary work is done and Jim has signed off on these performance edits, we’re building the film in performance-edited form, so there’s always something represented once we get the whole picture laid out. And then it’s a process of shooting these scenes for real with the virtual camera and creating the shots that go in the movie.

And this is where Jim explores the scenes. Any reference shot can become a closeup, a medium, a wide shot, a crane shot, a dolly shot. And as he mentioned before, every actor’s best moment can be in the closeup or the wide shot, so you don’t have to worry about, “This was their best take, but the dolly guy bumped the dolly,” or the lighting wasn’t great. None of that exists yet.

Glenn: So are the actors then reperforming it?

Jim: They’re gone. They’re on other movies. They’re hanging out in Tahiti.

John: At that point you’re basically using the reference camera edit to generate an EDL (edit decision list) for the lab, so they can pull up the motion capture files and assemble them in the order of the edit. The motion capture files are actually being accessed, and they’re put into the right environment and all that. And then at that point, Jim can come in and shoot his virtual camera, basically.

Jim: Right. So on the day that we capture with the actors, what we get is a bunch of data. We get body data and we get facial data.

So we just use the reference cameras as a kind of intermediate language between us and what we call our lab. Our lab is the people who take those performances and put them into a landscape or into a virtual set, meaning 3D geometry that the virtual art department has created.

It could be a forest, it could be a ship, right? It could be an underwater coral reef. So they’re taking the data that’s been captured and they’re putting it into the environment, and then they’re going to manipulate it so that feet and hand contacts are worked out properly, and it’s all lit beautifully.

And then I’ll go out into an empty volume. There’s literally nothing there. And I’ll just walk around with the virtual camera and handhold all the shots for the scene. And that’s what Steve called the exploration. Like I could do a helicopter shot that starts a thousand feet out and fly in and land on the bridge of Sam’s nose for a line of dialogue. I could do anything I want.

Glenn: Whoa.

Jim: At that point it becomes the creative process of photography. So the lighting, the camera movement, all those things are now being thought about: stuff that I would normally, as a live-action director, be doing at the same time as my performance work. Basically, what we’ve done is separate the act of performance with the actors from the act of photography.

Sometimes this is months, or even years (literally years, plural) later. I’ll go back into the scene with my virtual camera, and then it becomes the second stage of editing. What we do is have Steve or John or David (when he was with us) sit in an open Zoom session with me all day long while I go through these cameras and pick them off one by one. And at this point, John would constantly be sort of tugging at my sleeve, metaphorically speaking, saying, “You know, this load is really for the moment where Jake does this or that.” He’d remind me because I’d get so fascinated by the image that I’d forget which performance moment the shot was for. So it becomes a highly collaborative process, with the editor right there at the moment the shots for the movie are being made.

And Steve or John or David would even say, “Hey, you know, if you ended the shot on this character, that would make a better cut to this other character over here.” So I’m keeping my editor hat on while I’m directing.

They’re putting a little bit of a director hat on while they’re supporting me and there’s no giant crew and a thousand extras standing around. It’s just me and five or six people at what we call the brain bar who are running the system. And me and the virtual camera and the editor—who’s in by Zoom usually, just because I was in New Zealand and they were working out of LA. When we didn’t have COVID and we could all be in one place, the editor would literally just stay on the set with me and support me from 10 feet away.

Glenn: Wow. Fascinating.

Stephen: I want to interject something. This director-editor interaction is quite unique and happens very seldom in moviemaking: as an editor, you’re able to participate in creating the shots and actually assemble them as they’re being shot. And Jim is looking at the progress of these scenes and getting ideas for how he might approach shooting them differently.

And it’s quite amazing to see the evolution, the way these scenes develop during this virtual camera process. And it is very gratifying to be involved in the actual shot creation process.

Jim: Well, sometimes stuff doesn’t cut the way I think it’s going to when I create two virtual shots. I’ll say, “Hey, just cut that together and make sure that works.” And then we try it and it’s not all we hoped and dreamed. And so then I’d go back out and I’d try a different approach that maybe cut better. And sometimes we’d wind up—as you always do—with competing cuts of different sections of a scene. Steve, do you remember the Quaritch self-briefing scene where Quaritch is watching the video of himself, and how many gyrations we went through to get that to cut smoothly?

Stephen: Yeah, absolutely. And one other thing is that during this process, there are many times where you will say, “I want to bifurcate my idea,” or even, you know, two, three, four different ideas and cutting patterns. We would develop all of these ideas and present a slew of alternate sections and edits for scenes for Jim to look at that would follow each of his explorations into how to cover and cut a scene, which is also very unique.

Glenn: Yeah. Almost a choose-your-own-adventure.

Jim: This is all happening in this kind of real-time collaborative way. That’s pretty cool.

Glenn: Yeah, I mean, it sounds a little bit like animation cutting in a way where you’ve got the storyboards and you keep altering them to find the very best film. But it’s happening immediately.

Jim: And it’s not storyboards, it’s actual shots. At this point, we’re looking at an image that’s probably, I would say about half real, like half or maybe one third of reality, reality being our final goal. But we have what we call Kabuki face.

And the Kabuki face is the actor’s facial performance camera footage, projection-mapped onto a blank model of the face. So we actually see the expressions, we hear the dialogue, we see eyes flicking from one character to another. We see everything we need to see from an editorial standpoint, and it’s actually the characters, fully clothed, with all their props, in the environment, properly lit with shadows, with smoke, fire, rain, all that sort of thing. It’s just not photoreal yet, but it’s a shot. It’s not previs; it’s an actual shot and it’s the actual performance.

The editors are getting it as a stream within a couple of minutes, and by the next day those streams are cleaned up as proper stage renders, and that’s what we live with. Sometimes we live with that for a year or more until we start getting the stuff back from Weta. And it’s an excellent proxy. This is basically, very precisely, almost to the pixel, the final shot. It’s just not a photoreal render.

Glenn: Incredible.

John: And that is what we call the template: the shot that Jim comes up with on that stage. We carry that in our timeline all the way through, and Jim refers back to it every once in a while when shots come in from Weta, asking, “Why is it four pixels over?” It’s that precise. Weta basically tries to match the template that Jim shot on the virtual camera.

Glenn: Mmm.

John: And the great thing about that day when we’re shooting cameras is that the performance is the same. So we have a recording of the performance and you can play back precisely the same thing over and over again until you get the right shot the way you want it to be.

Glenn: Wow.

Jim: But the cool thing here, at that moment in time when I’m standing there on that stage working with the editor, going through the virtual cameras, we can literally say, “Hey, would it work better if this scene was a night scene? I know we conceived it as a day scene, but what if it was a night scene?”

And then they’ll go off for a couple hours and come back and then all of a sudden it’ll be a night scene. And it’s like, “Well, what if it was raining? Would that add something? Would that add a sense of somberness or whatever?”

So they’ll just add rain, and so we have all this “authoring” capability at that stage. It’s highly creative. And then we’ve got to think about, “Okay, what are the consequences if we’ve just made this a dusk scene?” So it has a ripple effect through the film.

And we were making some pretty radical decisions. Doesn’t change the actor’s performance at all, but it definitely changes your perception of the movie.

Glenn: Mm-hmm.

Stephen: Try doing that with a live action film.

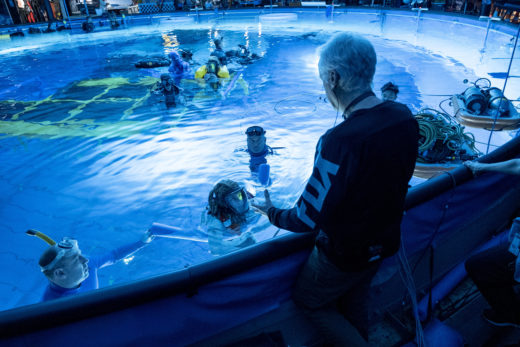

Glenn: Yeah. And then how do you deal with the water? I’m assuming that’s not CG…or is that CG? You guys are shooting in tanks? Or how’s that being done?

Jim: Well, we would capture in a tank, and it’s critical to remember it’s capture, not shooting. Although we were shooting reference angles, we’d capture in a tank. So everything that you saw somebody doing (jumping in the water, swimming at the surface, diving under, riding a creature) was all performed. We had creatures that were piloted machines, machines that people could ride at high speed around the tank, surfacing and diving in the water and all that sort of thing. So everything you see a character doing was performed by a human being, in the tank. We had a big capture tank that was a hundred feet long.

We could generate a six-foot wave, we could crash it onto a shoreline. We could build coral sets underwater and all that sort of thing. David for some reason drew the short straw. John and Steve let David figure out the first big underwater scene, which was very daunting because we hadn’t done underwater capture before. And we had all kinds of different performance stuff. Sometimes we’d take a piece of swimming that was, you know, Bailey Bass, the young actress who played Tsireya, and we might like one of the stunt doubles for one of the other characters.

And we’d be making these kind of “mashups.” But the difference is, [in a traditional capture volume] they’ve got floor marks and they’re located spatially by a physical set. When you start combo-ing people together that are in free space, sometimes they crash through each other, and the lab has to kind of sort it out so that it all works.

So oftentimes David would propose something. He’d say, “I think this could be really cool,” and then we’d give it to the lab and it would turn into kind of a mess, and then the lab would kick it back to David and he’d make some timing adjustments. Or he’d take somebody from a different take. So it became a refining, like a closed loop between editorial and the lab before it got to me. They would solve all these problems so that when it got to me, I wasn’t bogged down for hours and hours trying to solve technical problems.

Glenn: Wow.

Jim: Wouldn’t you say that’s accurate? In terms of the underwater stuff that you guys put together?

John: Yeah.

Stephen: It should be noted Jim was pioneering something that I don’t believe had ever been done before: this idea of doing performance capture underwater. It was kind of an insane thing. And of course, it was proposed to him that he shoot dry for wet, hanging actors on wires and moving them around.

I think we did a low tech version of this in Avatar 1, where Sam was in a river and his character Jake was floating through some rapids and they put him on a chair with wheels and had him float down. And the capture worked great, but this involved swimming and diving and riding creatures.

And it was a challenge to get good facial reference. I mean, they had underwater reference camera operators and underwater cameras. They also had windows on the tank that they could shoot through to shoot the reference of the motion that was being captured.

There was a whole layer of complexity that went along with both capturing underwater and editing the reference from the underwater capture. This was something brand new to Avatar 2, and it was monumentally challenging for the entire crew.

Jim: One thing we haven’t talked about is the use of picture-in-picture, and how you guys had to become the masters of multi-screen formats, with sometimes up to, I don’t know, maybe eight or ten pictures-in-picture in the same master image.

Stephen: Yeah. Sometimes accounting for all the characters and showing their facial performance… Because we didn’t know who Jim wanted to be on at any one given moment, so it was good to be able to look at those reference cameras. John, do you want to talk about building those PIPs for the scenes? When we built performance captures?

John: Yeah. The PIPs are very valuable because it’s hard to see what everybody’s doing. So there would be a picture-in-picture at a smaller size of every actor that’s in that scene. And if they’re from a different take, then that would be indicated. So a lot of times when we’re looking at things, I would say, “Jim, make sure you like this.”

And we’d look at one of those squares, either the whole performance of Sam throughout the whole thing, or the whole performance of Zoe. Because as you progress through the scene, you’re cutting back and forth, and maybe you miss something the actor did while you’re cutting away.

And so those picture-in-pictures were useful in pinpointing the exact moment you want to be on. You don’t want to miss something you liked, for example.

Stephen: Yeah, well, it would remind Jim of the performances that we had selected together, months, sometimes years before. And he’d rewatch the performance edit and know what it was that was important to him.

And when he picked up the virtual camera he’d say, “Okay, I know I want to be on Neytiri for this closeup because I can see in that PIP that her performance is extraordinary.” So everything may revolve around a moment in a scene. And the picture-in-picture is important when we’re dealing with two different takes from different actors.

So you know that you want to be in take B5 for the Zoe moment where she says ‘this.’ So it really does inform what to shoot when we get to virtual. And again, I’ll say, “Any shot can become a closeup,” but Jim is also very big on having foregrounds and backgrounds, so as editors we can never depend on this being a single closeup. Quite often that performance is going to be stacked against someone in the foreground. So we had to account for everyone.

Jim: When I do the virtual cameras, now we’ve got dailies. Now we’ve got a ton of dailies. And basically it just exists as a big stringout.

Glenn: And you cut that before it goes to Weta?

Jim: Oh, absolutely. Yeah. So what we call the template is a finely edited scene. And I mean to the frame, because Weta does not give us handles. So we have to know that our cut is a hundred percent frame accurate. That’s the movie that we’re making. Before we hand it to Weta, we have to love it.

Glenn: And why no handles?

Jim: Well, because it costs money.

Stephen: Yeah, it’s like something over a thousand dollars a frame.

Glenn: Oh, wow.

Stephen: You don’t really want to ask for 24 frame handles on each side.

Jim: Well, it’s over a million dollars a minute for their finished process. I won’t say how much over, but the point is that we made up our minds a long time ago that our template process could be precise enough that we didn’t need handles.

I mean, every once in a while when you see the final render, you see some tiny nuance of performance that makes you want to extend a shot by six frames just for an expression to resolve that was a bit too subtle when working in our proxy environment. But I think we might’ve done that six or eight times across the whole 3000 shots. And so typically what would happen is that the three editors—John, Stephen, David—would propose various versions of a scene and I would get into it and I’d play around. This is me now putting on my editing hat for the first time really, because I didn’t deal with the performance edits and getting it all teed up for the virtual process.

I’ll look at their proposed cuts and then I’ll pick and choose from different cuts. Every once in a while I’ll go right back to the dailies and I’ll do my own selects because there’ll be maybe things I know I’ve shot that I had an idea for, and I’ll do my own little couplets and triplets and sections and then I’ll mash it all up.

I’ll take their cuts and my cuts and I’ll mash it all up into a few-ish cuts, and then I’ll bring them in and I’ll say, “Well, what do you think of this? What do you think of that? What do you think of that?” And we’ll talk about it. And they’ll say, “I like this version better.”

And I’ll go, “Okay, all right, let’s do that.” So then I’ll take that version and then that’ll kinda stand for a while. But sometimes I’ll give it back to them and say, “Well, why don’t you try to perk this up? Or play with a music crescendo that maybe moves the picture cut a little bit.” So it becomes a back and forth looping process.

And then we’ll wind up with a fine cut out of that. And that’s what gets turned over to Weta FX.

Stephen: I was just going to interject one thing. The fact that we had a principal character who would ultimately be human required an extra layer of capture and editing. So the Spider character was captured along with the other characters in a scene and acted his whole part.

And he was quite a bit younger, and his voice hadn’t changed yet, but Jim worked very closely with us, signing off on the CG version of Spider in every scene he was in. That became a blueprint for the live-action photography, so he actually acted every scene at least twice.

Jim: Right. So we already had a cut of the scene. We already knew all the shots, and now we just had to shoot Jack for real, instead of having Jack’s captured performance. So we had Jack’s captured performance at the age of 13, and we were shooting Jack at the age of 15. But it was the same moment, so these were the actual final performances of all the other actors that appeared as Na’vi or avatars. They were the final performances. So Jack had to hit his marks and he had to hit eyelines for performances that we weren’t going to change because we had captured those with our key cast a year and a half earlier.

It was an interesting process. Because it was extremely precise, they could take my virtual camera in that rough edit and actually program the Technodolly with it. And that could be our shot. But it was a very time-consuming process. I’d say we spent probably 40% of our time on 20% of the movie, doing the live action stuff.

There’s one other thing we haven’t talked about, which is FPR, which is kind of interesting. Do you want to mention that Steve?

Stephen: Well, you know, in traditional movie making, there’s ADR and we had the capability of doing what we call FPR, which was facial performance replacement. So there may be an instance where Jim decided that he wanted one of the principal actors to say something else, either because of story clarification, or we cut something out and we wanted to take a line that was removed elsewhere and put it in another scene so that the idea was still represented.

And the actor could come into our studio, put on a face rig—exactly the same face rig they wore when we did the capture. And they could say a different line and we could put it on their body. Unlike ADR, where if you were going to change a line, you’d have rubber sync, they could not only restate the line in its new form, but all the expressions, the eyes, the mouth, every facial nuance would be recorded and basically planted on their own body again. And then we’d turn a separate face track over to Weta and they would process it. And it would look like it was the native performance. It was an amazing process.

Glenn: Wow.

Jim: We figured, ‘Why try to sync to a piece of facial capture and then not know if it was good sync until we went through the whole process with Weta?’ Let’s just replace the whole face sometimes, just to make sure that we had good sync.

The other thing is that when you do a live action movie with stunts, the stunt players gotta kind of hide their face. Well, we don’t have that problem. We can use FPR to have any actor put their actual facial performance, their grunts and groans, and efforts and yells and all that onto a stunt performance. So all they have to do is match the physicality and timing of the stunt player, and then it’s actually Sam, or it’s actually Zoe, or it’s actually Sigourney.

And that way I could literally go from a stunt right into a closeup in one shot.

Glenn: Wow.

Jim: FPR turned out to be a powerful tool—not one to be abused. Obviously you never want to go against the intention of the actor’s performance, but I won’t let them do action that might injure them. And we almost always do any kind of body doubling with the actor right there. The actor could say, “Hey, you know stunt person so-and-so, can you do it a little bit more like this?” So that it’s the way they would do it. I gave Sigourney a lot of latitude to instruct her body double, a performer named Alicia Vela-Bailey.

Because there were a lot of things at 70 that Sigourney couldn’t do like a 14-year-old or a 15-year-old. I said, “Sigourney, you direct Alicia. And make sure that what she’s doing is your interpretation of the character.” And so Sigourney really liked that idea. It took the pressure off her, so she didn’t have to try things that she couldn’t do.

Sigourney did maybe 85% of it herself, because she had a full body and facial performance. She played the character, but every once in a while…I mean, she couldn’t lightly hop up onto a log at 70. So she would tell Alicia what she wanted to do and then she would FPR that moment.

Stephen: One of the things I want to interject here: Traditionally in filmmaking when you have a stunt, you would have a wider shot show the stunt, have a little bit of overlap of action and cut to the actor, right? So in this instance, we could actually create a stitch to the actor, and have it all one piece with their face planted on the stunt body so that we don’t force a cut.

It could be all in one shot. Now that’s pretty revolutionary.

Glenn: Mm-hmm.

Jim: We might find later that a cut works fine there, but the way we built these loads was to try not to force a cut any more than we had to, because that gave me, wearing my director’s hat, a lot of freedom in how I shot the scene.

Stephen: By the way, if all of this sounds complicated, it is.

All: (Laughter)

Stephen: I gotta say I don’t think there’s any more complicated way to make a movie than what we’ve done. And hats off to everyone involved because you’ll look at it on the screen and you don’t know how it’s done and you appreciate it for whatever it is, but those of us who’ve been through this process know pretty much there is no more complicated way to make a movie than this.

Jim: I just want to say though, that for every time that it’s a giant pain in the ass, there’s a time when you’re doing something so cool that you could never have done in live action or in normal photography or even normal effects. So it balances out. I mean, we get pretty excited and we get pretty far down our own rabbit hole, but I would say when we discover a new way of doing something and then it actually works, we get pretty excited about it.

Glenn: Yeah. And then talk to me about the 3D, because that was so organic looking, and 3D is typically very tricky because it can make you feel dizzy and it can give you headaches. And this just felt so integrated.

Jim: Well, we made an interesting decision. I mean, Avid supports 3D and you can run 3D on your monitors and all that. We made a decision not to mess with it in the cutting process, to literally just leave it out completely and have it be a parallel track. So what we did was we had a cleanup team in LA that we called Wheels.

Wheels is a department that does some motion smoothing and some cleanup on camera movement and so on. And at that time, that’s when they look at the stereo. We have a guy named Jeff Burdick, who’s vice president at Lightstorm, but he’s been through all of the 3D right from the beginning, from when we first started experimenting with the cameras back in 1999.

And he was the one that supervised the 3D conversion for Titanic and for Terminator 2. And he’s been right there, with every moment of live action photography, watching in a little projection space that we call the Pod. And he looks in real-time at 3D projection of the live action scenes.

And he’ll call me on the radio if he thinks the interocular is off, or some foreground branches are screwing up a shot. But he also is what I call my “golden eyes,” the guy that goes through and sets the interocular for every single 3D shot. So by the time it gets to me and I’m looking at finished 3D shots or proposed finished shots coming back from Weta, it’s done.

I very rarely make adjustments at that point. So in a funny way, it was the least of my problems. Now I compose for 3D when I’m doing live action. I look at the 3D monitors. I double check my 3D at the time. But when I’m doing virtual production, which was about 80% of the movie, I don’t look at the 3D.

I compose for it in my mind. I’ve got a pretty good visual spatial imagination. And I’ll often say to the team while I’m doing a shot, “This’ll be good in 3D,” and sometimes even when we’re working together as editors I’ll say, “Let’s hold on this shot a little longer because this will be a good 3D moment.”

Because part of good 3D is about holding and trying to let two shots do the job of three on a normal movie. And our overall cutting rhythm is far below the industry average for an action movie. And we do that by design.

Glenn: And you’re doing all this without the 3D while you’re cutting?

Jim: Yeah, but I can see it in my head. But the quick answer to your question is that it’s institutionalized. It’s part of our culture that we’ve developed for over 22 years at this point, and everybody up and down the chain knows their 3D so well that there are no surprises for me as the director. And I’ve encouraged everybody to be more aggressive with the 3D on this film. We were very conservative on the first Avatar because we didn’t know; a two hour plus 3D movie had never been done.

Glenn: Mm-hmm.

Jim: We didn’t even know if people could hold the glasses on their noses that long.

Glenn: Yeah. That’s another thing. The movie’s over three hours long, but it felt like it was two max. It just flew by. The pace that you guys created was incredible.

Stephen: Look, Jim made a decision that he was going to deliver a film that was approximately three hours long. And of course, as editors, we can make suggestions, but he really felt that in this day and age with all of the Marvel-like films…

And he has always made the point, when the studios say, “Well, you’re going to lose a whole show capability on a Friday or Saturday,” he’s always pointed out that the most successful films of all time have all had long running times. You know? And it didn’t impact Avatar 1 being some two hours and thirty-eight minutes.

And he believed that today more than ever with the way people binge watch things, that it was not going to be an issue. And of course, as I said, we would suggest cuts and some of them he’d say “Yes,” and some of them he’d say “No.” And as Jim rightly has said many times, it’s not about length. It’s about engagement of the viewer.

Glenn: Relating to the characters.

Stephen: If they’re engaged in the characters and the story, you won’t feel the time.

Glenn: And there’s a lot of world building too with the whole island culture, you had to build all that.

Jim: Yeah. So I like engagement better than pace. A lot of people would say, “Well, it’s not about length, it’s about pace.” But pace implies a kind of rapid image replacement that has kind of a rhythm to it. And I think it’s more about engagement. And sometimes engagement can be holding on a shot and letting the eye explore.

But I think it has to do with the replacement of ideas. Like, ‘What’s your new idea?’ And then what are you doing with that idea? And then how does that idea recur? You know? So we spent a lot of time talking about this stuff after we’d screen the picture for ourselves, and then we’d have these day-long discussions about where it’s flagging and what can we radically do to just take some big chunk, just haul it out bleeding, and see if it still works.

And we did stuff like that. But here’s one interesting thing: If you ask people, “Where was the movie slow?” You’ve put the answer to the question in the question, which is: People are going to think of something as slow.

And so the answer we always got was there was ‘too much swimming around,’ too much underwater, too much exploring. And yet, if you ask anybody what they really loved the most from the movie, it was the swimming around. It was the underwater, it was the exploring, it was the creatures. So if you literally just acted on the notes, you’d have cut out the part that people liked the best.

And we found exactly the same thing in the first movie. The studio was adamant that we had to cut the flying in half. I said, “We’re not going to cut a frame of the damn flying! The flying is what people are going to come out of the theater talking about.” That feeling…It’s about lingering in a space. It’s about lingering in an emotional state. Right?

John: Yeah. I’ve got to give a lot of credit to Jim for insisting that certain things stay in there. We had a lot of discussions about, “What can we take out?” and about the things that Jim insisted we leave in there. And now I’m hearing back from people saying, “Well, that part was a little slow, but I loved it. I didn’t want to get out of that scene.”

Stephen: Yeah, there’s also the experience of being immersed in a cinematic experience in 3D that people, I think, are hungry for after years of being shut in and perhaps not having seen a movie on the big screen. The timing of this and people coming out, it’s almost like the rain stopped, the sun came out, and now they’re going to the cinema. I think it’s encouraging.

Glenn: Oh, absolutely. And there haven’t really been any 3D movies in a while, so I think people are really excited to experience that. And also just to experience Pandora again, but in a totally different way with the whole water angle, which is incredible.

Jim: It’s interesting, the 3D, I mean, for me, from an authoring standpoint, it’s 10% of my consciousness, if that. You know? I’m focused on CinemaScope composition versus 16:9.

Here’s an interesting thing that nobody commented on with the first movie, and to date, nobody has commented on with the new movie: We literally released in two formats simultaneously, Scope and 16:9, based on the individual theater and the nature of its mechanized masking. This is absolutely true. We contact every single theater in North America and we find out if they pull their masking open left and right for a CinemaScope frame; if they do, we give them a CinemaScope DCP because it’s more screen size.

If they pull their masking open vertically, or leave it fixed at 16:9, we give them a 16:9 DCP—which I happen to like better for 3D, but I don’t like it as well for 2D. We literally deliver a DCP to each theater based on how their masking is set up.

I don’t think anybody’s ever done that in history and we did it 13 years ago on the first film, and nobody commented. I mean, they literally were seeing two different movies in two different theaters and nobody commented. It’s the strangest damn thing.

Glenn: It’s great that you do that because we’ve all been to theaters where they don’t open up the screen and part of the image is cut off. And I’m sure that when you created the 16:9 and the scope version, you watched those to see how they translated.

Jim: Right. So we’re literally authoring every step of the way, along the way, for both aspect ratios.

Glenn: Smart.

Jim: The other thing we did: We set up our viewing system such that we had an additional—I think it was 6% of overscan available. So Weta was actually delivering an image that was a 6% overscan image. And then we could actually use that to slightly reposition the shot vertically or horizontally right up to the end.

Literally right up to days before the final delivery. I was doing little tiny readjustments of the frame just for headroom and for composition and for cut-ability and things like that. It’s easy enough to blow up shots, but you don’t normally come wider on a shot. But sometimes there’d be a little bit of a slightly bumpy cut, and I could zoom in on the A side of the cut and come slightly wider on the B side of the cut, and then all of a sudden it flowed beautifully.

Glenn: Interesting.

Stephen: You’re getting not only the Jim Cameron as editor, but as virtual camera operator, director, writer…You’re getting a lot of information that is not just editing here.

Glenn: Fantastic.

Stephen: And it’ll be a great insight to a lot of people to have a window into the process that drove this entire project. And that primarily exists in Jim’s head and I think—what, did we have 3,800 people working on this film? Something like that.

Jim: Yeah. 3,800 people. Not all at the same time. It definitely peaked in the last year with Weta having about 1,200 to 1,300 running. And that’s across the virtual production: our lab in LA, which was 300 people; the live action crew of about 500 down here in Wellington; and then the editorial team, which was not insignificant. I mean, I think we had about 10 or 12 assistants at our peak.

John: We had about 22.

Stephen: Yeah. More.

Glenn: And with those assistants, how were they helping you guys? As far as this process?

John: You know, the assistants were always busy. There was so much work to do just from when the first batch of reference cameras came in. You had to organize them and prep them so that we could edit them and we could look at them, all the way through to the virtual cameras coming in.

They all had to be organized. And then when it comes time to send things to the lab, they had to turn what we as the editors did into something that the lab could understand.

And so that was a very time consuming process also. They’re doing capture, the editors are editing, and stuff is being turned over to the lab all at the same time.

Stephen: The other factor is that when a scene is ready to go to Weta Effects, everything has to be vetted. So if it’s a 10 character scene, every single piece of motion and face track has to be accounted for in the count sheets. So there’s a very, very complicated turnover process. And the assistants did an amazing job vetting every piece of motion and face.

And, you know, there were times where Jim would move lines around and he’d say, “Match what I do in the audio.” So we don’t want to find out when a shot comes back from Weta that the face track was not in sync with a line that got moved 10 frames, you know what I mean?

Jim: Sometimes I’ll just slip the audio, especially on a background character. And then the assistants will catch that there’ll be a sync mismatch. And they’ll move the full character, face and body, in sync to the line.

Because you see, the thing is, it’s like multi-tracking. So we can move people around in the background all day long, change their timing, change their spatial position, change their temporal position, and change foreground characters that we’re using as an out-of-focus wipe across the foreground to improve a cut.

So I’d be constantly firing off these render requests and then the assistants would take them to the lab, bring them back, put them back in the cut, as part of the finishing process. So it’s kind of hideously complex, but it also gives you all these amazing choices.

Glenn: Yeah, you’re not locked in, which a lot of times…We try all kinds of tricks to get around that when we’re editing live action, but we don’t have that kind of capability that you’re talking about.

Jim: So we had this really experienced team and we had this culture around how we did things. So obviously retention was really important. We wanted people to enjoy their working environment and enjoy what they were doing. We didn’t have much turnover.

And that’s across a five-year span, and everybody was busy as hell the entire time. It’s amazing how labor intensive the process is. But it was really critical for us, once people knew how to do this. And it usually took—what do you think, Steve? Six months to figure it out?

Stephen: I have to say, I remember when David came on and you [James Cameron] said, “You work with Steve and John and try to figure this whole thing out, because they’ve been there before and you’re probably not going to be of much use for six months.” And he picked it up very quickly, maybe half the time. He was an expert; it was phenomenal.

And it’s a testament to his skill, how smart he was, how adaptive he was. But I also want to add, we had well over 20 assistants and VFX editors and assistants on the LA side, and we probably had six or so in New Zealand.

And we were able to work remotely, because once the pandemic hit, we were working from home and remoting into either our studio at Manhattan Beach or our studio in Wellington, New Zealand. And we could work in either place, which I think is amazing. With the technology we have now, I can only imagine the disaster this would’ve been if it had happened 10 or 15 years ago.

Jim: Five years ago.

Stephen: …Or even five. Yeah, right. But the fact that we could actually jump on each other’s Avids regardless of whether we were here or in New Zealand…We could communicate through various software tools like Slack, BlueJeans, Zoom, Evercast…It gave us the ability to jump in and review sequences together. The timing was perfect; we could actually continue working.

Jim: Remote controlling the Avid in LA from here—I’m in Wellington, New Zealand right now—and I could actually drive the Avid on the stage in LA, and I could direct scenes in LA—capture scenes—and I could run the Avid to play back for an actor in LA to do FPR.

So we wound up doing something kind of crazy. You know the normal ADR booth kind of setup where you hear “beep, beep, beep,” and then you talk?

Glenn: Sure.

Jim: We didn’t do that at all. We did a hundred percent of our ADR on this movie using what I call the “Hear it, say it,” method.

Glenn: Mm. A loop.

Jim: Like old style looping, right. I literally would just put an in and an out mark on the line I wanted the actor to say, and I’d push play in Wellington and the Avid would play back in the recording room in LA, and the actor would hear the line and say the line. That’s how we looped the entire movie.

Glenn: I think that style of looping is really effective.

Jim: And especially working with the kids, right? We had a seven-year-old, we had four or five teens, and these kids had never looped before in their lives. And I wasn’t going to put them through “Beep, beep, beep…Okay, now act!” All they had to do was hear how they said it, you know?

Glenn: And was there any lag with your Avid in LA from Wellington?

Jim: It was like a quarter second, half second, something like that. I couldn’t see sync in real time. I’d have to wait until they played it back for me. Like it would be out just enough that I literally couldn’t see it in real time. But that’s okay.

Glenn: One of the things I thought was so great about this movie is sometimes you watch action scenes and it just seems like it’s chaos. And here there was a clarity of action. There were really good peaks and valleys that kept your interest, and you always had a point of view as to who you were following, which made you really care about the action scenes.

Jim: Well, we were blessed on this movie that we had three really good action editors. The thing that I like about Steve and John and David is that they’re all highly humanistic, emotional, full spectrum editors, but they can all cut action. I like cutting action myself.

Sometimes I’d break off a sequence and do my own riff on it, but then I’d go back and I’d look at like, the akula chase that David cut and I’d go, ‘You know, he juxtaposed something there that I wouldn’t have thought of.’

Then I’d do like a mashup. I think we’re all pretty good action editors, and I call myself an ‘Axis Nazi.’ Stuff has got to be on axis for us to understand it spatially. And I think a lot of these big multi-camera shoots, they get a lot of cameras that are over the line and stuff is going this way and then that way and I lose the geographical relationship of what’s going on.

Glenn: Geography’s huge.

Jim: Yeah, I’m probably pretty pedantic old-school in terms of axis. Steve, you are pretty much as well, I think.

Stephen: I really do love the fact that you are as dedicated to the orientation of axis. I agree with you: There are many, many movies that will have action scenes that are, in my mind, incoherent. They may still be very entertaining. It may be more like a montage of action, and it still works in its own right.

But I’m a classic kind of action guy. I have a need to understand the geography of the action, and I really do love that you feel that way too; and I know John does as well, and certainly David did. We were all classical cutters in that sense, you know? And it’s great that we were all on the same page.

John: We always talk about, you know, “The axis is wrong. We’re going to have to fix this.” To me, I just want to know what’s going on. I don’t want to get blasted with a bunch of fast cuts where I have no idea what happened.

Glenn: Sure.

John: And it was great that Jim was concerned with that, and that was always a topic of discussion when we were doing cameras, or quality control cameras, to set things up for Jim.

Stephen: It wasn’t just in the action, by the way. It wasn’t just in the action. It was any scene. We couldn’t violate the basic rules of axis. And as John said, we knew that if something crossed axis and it was confusing, that Jim was going to need that fixed. Interesting to point out as well, that the reference cameras—although they tried—there were moments where we had a closeup in the wrong axis.

Now, it didn’t mean that that performance could be ruled out. Because remember, it’s just a reference of what’s actually recorded in the volume.

So any shot, as I said, could be a close-up/medium/wide shot, but also could be shot from any axis. There were times in a performance edit where we would put a performance in and, just for the sake of making the performance edit play, we’d flop the shot to the correct axis, knowing full well that it didn’t have to be that way during the virtual photography process; the reference would just represent the shot from a different axis.

Jim: Here’s where it gets interesting. If John or Steve encountered a problem that they couldn’t solve with one of my virtual cameras, I encouraged them to go to Richie Baneham—who was doing the QC cameras ahead of me, but he was also doing his own kind of second unit stuff and just feeding shots into the movie.

They would say, “Well, hey Richie, can you just bang me off a shot that’s on this axis or is a little looser, a little tighter?” Or whatever. And so they would literally just be ordering shots and sticking them in the cut. So it kind of went beyond editing in the classic sense.

Glenn: Like you said, they did a little bit of directing as well.

Stephen: We could also fix motion by the way. So if we needed to cut out of a shot early, but we missed an arm movement or something that may cross a cut and make a cut smoother to blend it, we could work with Richie to advance the A side of the cut so that we could retain that action across the cut. It’s an editor’s dream to be able to fix motion across cuts, and we were able to do that.

Glenn: Wow.

Stephen: Because we could compress the time. Like, let’s say you take three seconds off the end of a shot, but it had something that bridged to the next shot. So you could actually pull up the tail of the shot three seconds or back up the motion from the incoming shot into the outgoing shot, and have them blend the motion to retain what you cut out.

Jim: You gotta think of every shot as an approximation of what it could be, right? You could slip the sync of the camera move relative to the action of the characters. Or you could slip the sync of an individual character relative to the other characters in the shot so that they completed an action such that it cut to the next shot as a proper flow. So there’s a lot of flexibility. You never lose a take because it’s out of focus, because it’ll always be in focus. You never lose a take because of something that happened that you don’t like, you just fix it. So when you have infinite choices, it really forces you to think, ‘All right, why do I want this shot?’ It kind of forces you to really think editorially in terms of the narrative. Like, ‘What is the shot doing? What is this shot doing that another shot doesn’t do quite as well? And if I really like this shot and I’m willing to build a structure around this shot, what am I sacrificing on either side of this shot by committing to this particular angle here?’

You run through this endless kind of ‘program’ in your mind of what is best for the scene, what is telling the story most clearly.

Because, you know, picture editing on a live action picture, I think is a process of, all right, you start by throwing out the bad performance takes. Then you start by throwing out the out-of-focus frame ranges. Then you start by throwing out the stuff where the extra dropped the tray in the background, or some stupid bullshit happened, and you get down to a relatively limited number of ways to try to tell your scene. And we don’t have any of that. So it forces us to be a lot more analytical about our narrative process. You keep mentioning kind of animation, and I think what you mean by that is an animator can do anything. They can work from thumbnails, from storyboards, from previs. You’ve got an infinite number of choices, and that’s kind of a problem and a blessing at the same time, but it forces you to be really analytical about your storytelling.

Glenn: Yeah.

Stephen: Yeah, there are no limits here. There’s nothing that has to be thrown out. It can be manipulated. So with the infinite possibility, to maximize the storytelling, the characters, the performances…You begin to understand why it takes so long to make a movie like this.

Jim: I think we go into it with open eyes, knowing how complex it is, knowing what the possibilities are, and believing that we can achieve something that’s dreamlike on the screen, that puts the audience into a state of cognitive dissonance where they’re looking at something that cannot possibly be real, and yet they can’t figure out why it looks so real. So that’s a cognitive dissonance. They’re looking at something truly impossible. Our characters have eyeballs that are volumetrically four times the size of a human eyeball.

It’s impossible and yet you see it. And there’s something compelling and empathetically triggering about that. And they have cat ears, and cat tails, and these lithe bodies and it’s not achievable any other way.

You couldn’t do it with makeup. You couldn’t do it with prosthetics. There’s no live action way to do it. If you did it with conventional animation, it would feel animated and your cognitive dissonance wouldn’t be triggered. So we exist in this narrow bandwidth where you’ve got human actors performing characters that are humanoid enough to justify that, and yet different enough from human to justify all of this process.

And we ask ourselves if it’s worth it, and then we put it up on the screen and we go, “Holy shit, we just did that.”

All: (Laughter)

Jim: And then we all know the answer.

Stephen: Yeah. We’re all crazy to embark on this. But it is extraordinary. And one thing I just want to go back to is: One of the most exciting things for me is to look at the final render and see every nuance, every eye movement, every little facial movement and expression of these actors—that we picked years ago—come back in full render. And every detail is evident on the screen. And to see that come back, all that full expression in every actor’s face that we saw years ago, is extremely rewarding.

And I just want to say that it is heartbreaking to me that David Brenner did not live to see all of this hard work come to fruition. And I’ve got to believe that somewhere, maybe he’s got the best seat in the house, but I really hope he can see it somehow.

Glenn: Yeah.

Jim: IMAX is brighter and bigger wherever he is watching from. Yeah. That’s kind of one of the heartbreaking aspects: He never got to complete the cycle and see, because every time these shots come in, it’s like Christmas morning. Suddenly seeing these characters popping to life in just shot after shot after shot.

And you know, as rigorous as our process is, we kind of live for that moment. I remember John particularly was a Nazi when we were working on the set, like, “Now don’t forget this little thing that Jake does here.” And I wouldn’t see it, I wouldn’t see it on my virtual camera because the image is too coarse.

As good as it is, it’s still coarse when it comes to very, very fine expression. But you know, all the editors really helped me through that process of really remembering what the moment was. And that all culminates when we see those final renders coming in from Weta.

John: It’s very satisfying. It is. It’s a lot of hard work, but when you see the final product, you go, “All right, that’s good stuff.”

Stephen: It’s amazing. It’s an amazing transformation between template and final render.

Jim: Well, I always say about Weta: First of all, that it’s extraordinary what they’re able to do with their toolset and their people. They’re unparalleled in the world. There’s a moment where we throw it over the fence to them, and then they start their magic.

And I always say excellence in yields excellence out. It’s like the garbage in, garbage out thing. But the corollary of that is if we put into them the maximum amount of excellence that we can muster, then they’re starting their process already at a very high level. And then what comes back from them is that much more extraordinary as a result.

Glenn: Yeah. That’s fantastic.

Stephen: Just want to give one last shout out to our amazing editorial crew, both in LA and New Zealand. And our additional editors—the next generation of Avatar editors: Jason Gaudio and Ian Silverstein. We work so wonderfully as a collaborative team, and we can never thank our assistants enough for the huge undertaking in helping us to get this film to the screen.

Glenn: Well, I love talking to you guys. This was fascinating. People are just going to be blown away by what you guys just described, and I love the movie. I love the cutting. And thank you so much for your time.

Jim: All right. Thanks, Glenn.

Stephen: Thanks.

Jim: I’m glad that we got to talk in detail.