CopyCat is machine learning in the hands of the average creative. Not a computer scientist masquerading as a creative, not even a creative who has forced themselves to learn Python. Just your typical “I’d prefer a paper and pencil to a mouse and keyboard if I could make money at it” creative.

And here’s where things veer towards witchcraft: Feed it a few before and after images, wait a few minutes (hey, I guess even witchcraft takes time), and watch as it applies the same creative touches to the rest of your images. The Foundry have commented that the tool is useful for things like garbage matting, beauty fixes, deblurring, and so on. But from what I can tell, the system is limited only by your own intuition and the number of sample images you’re willing to feed it.

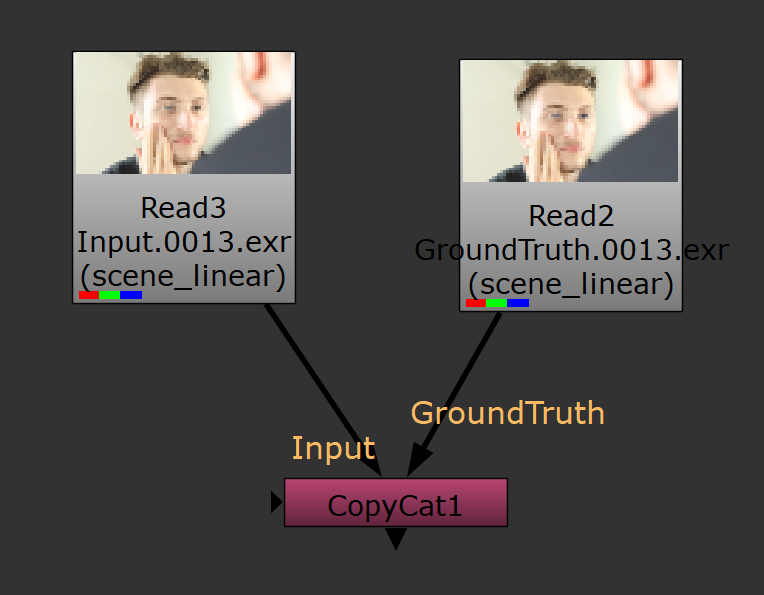

Plenty of other DCC packages now ship machine learning-enhanced tools (Flame, Resolve, Premiere Pro), but what makes CopyCat unique is that it’s a neural network that artists can train themselves. All you need is a set of before and after images, and the neural network will train itself to apply the same changes to new images.

Eye Candy: Instant colored contacts

For a first run I picked a task that was reasonably straightforward, but would potentially take an artist hours to complete: changing eye color. I’ve actually demoed the process involved in a previous article and video training. Rather than track a sequence, I simply picked 24 representative frames and manually painted the eye color to blue, using a luma keyer to protect the specular highlights from tinting blue. The paintwork probably took me about 10 minutes for the 24 frames.
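For anyone curious what "a luma keyer protecting the highlights" amounts to per pixel, here is a minimal Python sketch. It is not how Nuke's keyer or the paint setup in the article is implemented; the function names, the tint color, and the key thresholds are all hypothetical values picked for illustration.

```python
def luma(r, g, b):
    """Rec. 709 luma of an RGB pixel with components in 0..1."""
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def smoothstep(edge0, edge1, x):
    """Soft 0..1 ramp between edge0 and edge1."""
    t = min(1.0, max(0.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3.0 - 2.0 * t)


def tint_with_highlight_protection(pixel, tint=(0.25, 0.45, 0.9),
                                   key_low=0.7, key_high=0.9):
    """Tint a pixel toward `tint`, fading the tint out in bright highlights.

    tint, key_low and key_high are illustrative assumptions, not values
    taken from the article. The result is not clamped to 0..1.
    """
    r, g, b = pixel
    y = luma(r, g, b)
    # Luma key: 0 in shadows and midtones, 1 in specular highlights.
    protect = smoothstep(key_low, key_high, y)
    # Recolor the pixel with the tint's hue while keeping its original luma.
    tint_luma = luma(*tint)
    tinted = tuple(y * c / tint_luma for c in tint)
    # Mix: keyed (protected) pixels keep their original color.
    return tuple(t * (1.0 - protect) + o * protect
                 for t, o in zip(tinted, pixel))
```

A midtone iris pixel such as `(0.3, 0.25, 0.2)` comes back blue-dominant with the same luma, while a near-white highlight such as `(0.95, 0.95, 0.95)` passes through untouched. In the actual paint job this would only be applied inside the brushstroke over the iris.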

I chose frames that I thought were good representations of variations in the eye appearance: a few frames with the eyes wide open, a couple with the hands obscuring the left eye, a couple with them obscuring the right eye, some squinting, some blinking, and even a frame where the eyes were closed and so the “painted” version was identical to the original (i.e. there were no irises visible to paint in that frame).

I decided for my first run just to feed the images in at the default settings (10,000 epochs). I wanted to see how well it would perform out of the box without a lot of intuition on the part of the artist. I honestly expected the system to fail; the eyes are such a small part of the HD frame I used, I expected that I would ultimately need to crop the frame before running the training.

I clicked “Start Training” and about an hour later it was done. (That was on my RTX 3090; older-generation graphics cards would probably take quite a bit longer, and there’s currently no multi-GPU threading available.) What’s fun is that the viewer shows you updates of the segments of the image that it’s processing and how the predicted results currently match the ground truth.

I then added the “Inference” node, the tool that actually applies the learned model, and selected the final .cat file generated by the CopyCat node. (As an aside, don’t be confused by the multiple .cat files; Nuke iteratively saves out various .cat files along the way. Presumably this is so that you can stop the training early if you see that it’s already guessing things correctly, or so that you can choose an earlier iteration if it ends up overfitting.)

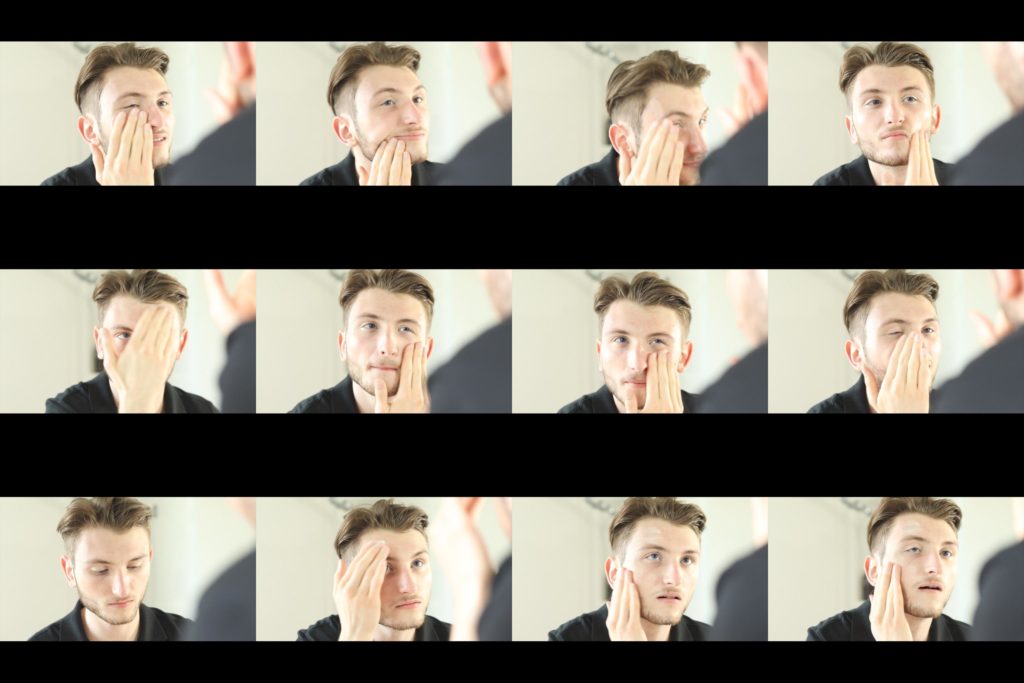

And the results? I fed in the entire 700 frame sequence and the eyes were perfectly changed to blue (well, it turned out I picked an odd hue when I did my quick paint trial, but that’s my fault, not the CopyCat trained model’s). The highlights were left untinted and the frames where the eyes were partially covered were still correctly tinted. My mind was a little blown. I had to check to make sure I wasn’t somehow looking at my original painted frames by mistake.

The final raw feed directly out of the Inference node. No roto or tracking required! (NOTE: The weird hue here is the result of me not bothering to dial in the color properly before training; not an issue with CopyCat itself.)

This might not seem like a big deal to the uninitiated. But when you understand the amount of roto and tracking involved to get 700 frames of eye color change looking natural, it’s an incredible feat. The application of the learned model by the Inference node was close to real-time.

Now imagine a two hour movie where the actor’s eyes need to be changed in every frame (think “spice” eyes in the movie Dune). The effects budget just for that one change could easily run to six figures. With this tool the entire process could require no more than a couple of hours of an artist’s time.
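To put rough numbers on that, here is a back-of-the-envelope sketch in Python. The 24 fps frame rate is an assumption (no frame rate is stated for either the test clip or the hypothetical movie), and the helper names are made up for illustration.

```python
FPS = 24  # assumed frame rate; not stated in the article


def frame_count(hours, fps=FPS):
    """Total frames in a program of the given length."""
    return int(hours * 3600 * fps)


def painted_fraction(painted, total):
    """Share of frames that had to be painted by hand."""
    return painted / total


feature_frames = frame_count(2)           # frames in a two hour movie
test_fraction = painted_fraction(24, 700)  # the eye-color test above

print(f"{feature_frames} frames in a two hour feature at {FPS} fps")
print(f"{test_fraction:.1%} of the test sequence was hand-painted")
```

A full feature would of course need training frames drawn from many more shots than a single 700-frame clip, so the hand-painted share from the test is only a loose guide.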

Here’s the thing: I think there are enough applications like this that I can see an editor who knows nothing about Nuke dropping the cash for a license for the CopyCat tool alone.

The Bling Filter

Suitably impressed with its practicality, I thought I’d push CopyCat more into the creative realm. I decided to try and create a “bling filter.” I wanted something that would add a glow to highlights, but very specifically jewelry. Typically a luma key is applied to an image and then a combination of blurs and color corrections is added to create the bloom. The problem with this approach is that often the brightest parts of an image are a kicker light raking across the side of an actor’s face, or a T-shirt catching the full impact of the key light.
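The traditional approach is easy to sketch, and the sketch makes its blind spot obvious. The following is a minimal Python illustration on a grayscale image stored as nested lists, with a box blur standing in for a proper Gaussian. It is not Nuke’s “Glow” node; the function names, threshold, radius, and gain are all assumptions for the example.

```python
def luma_key(image, threshold=0.8):
    """Keep only pixels brighter than `threshold`; everything else goes to 0."""
    return [[v if v > threshold else 0.0 for v in row] for row in image]


def box_blur(image, radius=1):
    """Box blur that averages only the in-bounds neighbors of each pixel."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += image[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


def naive_glow(image, threshold=0.8, radius=1, gain=0.5):
    """Luma key, blur the key, then add the blurred key back over the image."""
    bloom = box_blur(luma_key(image, threshold), radius)
    return [[v + gain * b for v, b in zip(row, bloom_row)]
            for row, bloom_row in zip(image, bloom)]
```

Nothing in `luma_key` knows what the bright pixel belongs to: an earring, a kicker light on a cheek, and a white T-shirt all pass the same threshold and all get the same bloom.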

So in 14 random images I hand-painted glows onto any jewelry (watches, earrings, necklaces) the people in the photos happened to be wearing. My sample included grainy images, black and white images, and four or five images where people weren’t wearing any jewelry (and so nothing was painted). All I used for the effect was a blurred brushstroke as the matte feeding into Nuke’s “Glow” node.

The results? Again, surprisingly good. Not perfect: it still glowed up non-bling items like fingernails. But given that I really only fed it 9 images out of the 14 with actual jewelry, it still did well. I suspect if I really wanted to create a production-worthy bling filter, treating around 100 still images to start with would be enough to create a discriminating effect.

This raises all kinds of possibilities for automatic looks. One of the reasons we always have to dial in the parameters of filters is because context, contrast, exposure vary from shot to shot. If you train a neural network on various shots where the effect is dialed in for the specific exposure, color, and contrast of each image, you create an automatic filter that doesn’t require tweaking.

Interestingly, I found good temporal consistency playing back sequences using the Inference node to apply the trained effect. (Bear in mind that I’ve so far only tested two clips, so the jury is still out on general temporal consistency.)

Getting access to CopyCat

CopyCat is just a single node in Nuke, the industry-standard VFX compositing package. There are no announced plans to sell it as a separate effect, although there’s no reason this couldn’t ultimately run as a plugin for Photoshop or After Effects.

As it stands you have two options if you want to use CopyCat right now:

If you’re an up-and-coming artist with an annual gross revenue under US$100,000 you can subscribe to Nuke Indie for US$499 per year (limited to 4K output).

If you make more than US$100K, you’ll need to drop just under US$10,000 on a NukeX license.

NOTE: Unlike most other NukeX features, the CopyCat node is not available in the non-commercial version of Nuke.