The Tech Retreat is an annual four-day conference for HD / Video / cinema geeks, sponsored by the Hollywood Post Alliance. Day 3 covered a legislative update, post on demand, ACES, the VFX business, file-based architecture, IMF, working in raw, digital intermediates exchange network, the cloud, and what comes after file-based workflows.

Herewith, my transcribed-as-it-happened notes from today's session; please excuse the typos and the abbreviated explanations. (You can follow the Tech Retreat on Twitter with hashtag #hpatech13, thanks to various Tweeters in the audience. I post my notes at each day's end.)

Washington Update

Jim Burger, Thompson Coburn LLP

IP issues: What happened in Congress this year? Nothing! Regulating IP and the 'Net was the third rail.

Aereo: Networks sued Aereo for copyright infringement (unauthorized public performance). Aereo's defense relies on the Sony and Cablevision cases, claiming there's no public performance. Single antenna, single performance? Not guilty. Appealed to the 2nd Circuit.

Fox TV vs. barrydriller.com / Aereokiller (Aereo-like service). Ruling: No, that one transmission is a public performance (then, is Slingbox illegal?). Appeal filed; we could end up with a circuit split, one circuit finding infringement, the other not.

DISH Hopper (commercial-hopping DVR) “Auto hop” litigation: If you enable the DVR, it'll store up to 8 days of shows, and auto-skip commercials. CBS/Fox/NBC filed copyright lawsuits. May see the Sony Betamax ruling revisited (which was: if the device is capable of substantial non-infringing use, it doesn't matter if some people use it to infringe). Fox lost its bid for a preliminary injunction; judge said “all the Hopper is, is Sony on steroids”. Fox is appealing.

Kim Dotcom / Megaupload. Dotcom arrested on criminal charges, fighting extradition from NZ. Fight over servers; Megaupload rented servers from US firm Carpathia. Now Megaupload is shut down, customers can't get access to their 25 Petabytes of data on Carpathia's servers. Dotcom out of jail but still fighting extradition. NZ High Court ruled that the search of his home was invalid; evidence illegally seized; release to FBI violates treaties; NZ's PM apologized to Dotcom. Extradition judge calls the US “the enemy”, recused. Still up in the air. Dotcom has now opened a new upload site with servers OUTSIDE the USA.

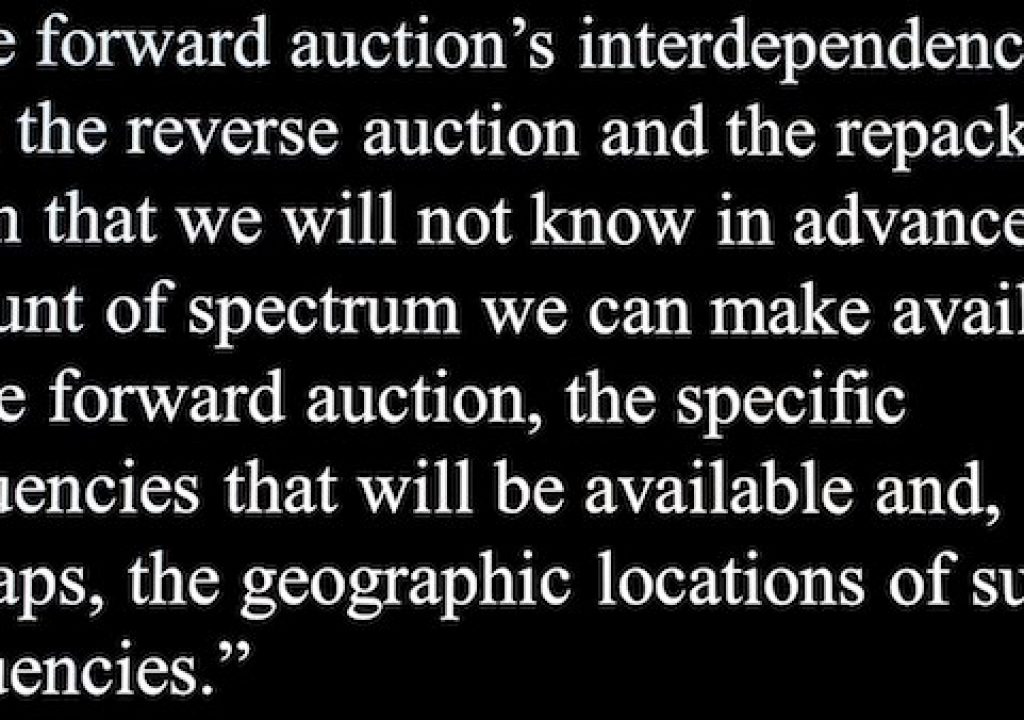

Telecom: Spectrum for sale. Congress finally acts, Spectrum Act of 2012. Authorized FCC to conduct auctions for mobile broadband; reverse and forward auctions, followed by repacking. Involuntary relocation compensated and protected.

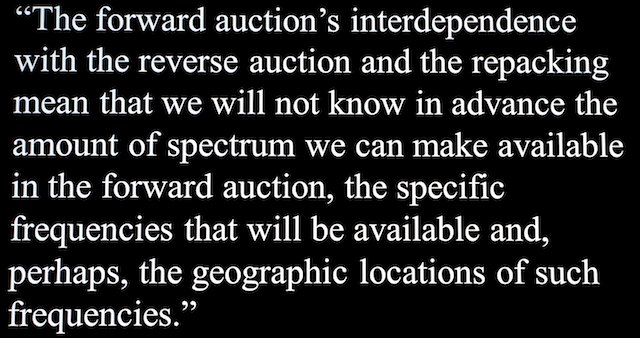

Also, coordinating with Canada and Mexico will be tricky. 205-page NPRM, with lots of comments. 600 MHz Band plan? 5 MHz blocks, separate uplink/downlink bands:

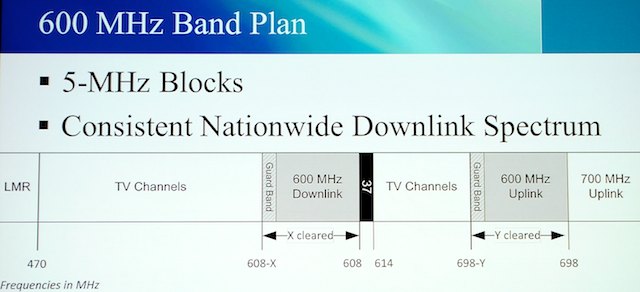

FCC auction process:

Looks very difficult to meet these deadlines.

To encode or not to encode? How many copies will copy-protection allow? '92 Telecom Act: assure commercial cable STB availability and power to restrict cabled encryption/scrambling for TV compatibility. Memorandum of Understanding: encoding rules (ER), like HBO allows one generation of copying. 2002 FCC ruling; DISH sues, saying FCC has no direct authority (DISH isn't cable [paraphrasing. -AJW]). Is interpretation reasonable? Ancillary authority? Court said no. FCC says, we were removing a stumbling block to cable box availability; court disagrees. FCC says 2002 rule can't work without ER; so court vacates entire 2002 rule!

Q: If the sequester happens, will they furlough all the lawyers at the FCC?

Post on Demand panel

Moderator: Chris Parker, Bling Digital

Callum Greene, Executive Producer, “Pacific Rim”

Richard Winnie, VP Post Production, NBC Universal

Gavin Barclay, Co-producer, “Suits” and “Covert Affairs”

Chris Jacobson, VP Creative Services, Colorist, Bling Digital

So what's changed in post?

Paradigm shift. There used to be a clear break between production and post, when the can of film came off the camera and got the “exposed” tape on it. Now it's more blurred, with DITs, on-set work, etc.

Move away from bricks & mortar, with portable post in hotel rooms, on laptops, on-set carts.

Reduction in workforce, perhaps a bit too far (like one DIT doing everything).

It's not all about the hardware; one size doesn't fit all.

So what is post on demand?

One of Bling's P.O.D.s in the demo room.

Callum: Did portable lab in CT about 5 years ago, both for tax reasons and to get closer to the director (shooting on Genesis); was a big deal to do this. Now, “Pacific Rim” shot in Toronto, no question about shooting in digital and having near-set or on-set lab. The change in 5 years is night and day. If you can work close to the set it streamlines things, improves communication, saves money. Would be very odd now to go back to using more traditional bricks & mortar lab.

Richard: Every situation is different; look at every show on its own (snowflake workflows). Enormous convenience not having to ship drives; saves a day. Anything you can do for the production folks helps, like not having them drive all over town; it's hard to get 'em to look at a cut anyway. File-based made the pricing structure more tractable, but we had to rework workflows (RED didn't make it easy on us!). Bling was the first place we found with a focused approach. We do so many shows in Canada now. You have to have the right people on the show if you're going to try something new; have to have someone there gung-ho on the new workflow. If you can do it, no reason not to. Increased efficiencies.

Chris Parker: It doesn't have to happen overnight, don't have to ignore existing regimented systems. Have to take baby steps, look for opportunities where the right team is in place to push the envelope a bit.

Gavin: If you have a savvy team it opens a lot of creative doors you wouldn't find otherwise. “Covert Affairs” has a tight schedule; producers want more international shooting, that would require shutting down, moving, etc. 15 countries in 3 seasons, turning around on a 10-day cut. Last shot in Amsterdam, had 9 days to lock the cut. A problem in the traditional way, shipping dailies back home or looking for a Dutch post house. Now, send a guy along to a hotel room with his gear, transcode dailies there, see them the next day. All we need to do in advance is find a fast Internet connection.

Chris Jacobson: Show in Miami, we're in LA. We could create LUTs for camera tests all in the same day, shipping them over high-speed Internet. Working in the finishing pod with editorial, if the editor wants to try something new, we can just collaborate there in the office, try pushing it, then render it out on location to a DNX, which makes it easier for them to show to producers. On VFX-heavy shows, we used to cut the VFX into the offline and hope it worked. We cut 'em right in, in parallel, in the online; saves time and money.

Chris Parker: how were VFX handled on “Pacific Rim”?

Callum: ILM did most VFX, we were shooting in Toronto, office in LA, everyone able to dip in and see how the shots worked. Everyone talked, worked essentially in real time, posting in LA, we need that speed. We'd still go to SF once a month to be with director, that tactile one-to-one is the best, but taking advantage of the tech to bring cities closer is huge, made it all possible. We were able to send footage back to LA, get a quick cut, see the scene is OK, and you can strike that set.

When you have a DIT on-set every day, a discussion goes on, and it's very beneficial.

Re-illustrates that it's a work in progress among all of us. That's why Tech Retreat is so useful, we come here, discuss, then go back and work things out.

What if something breaks? Build redundancy in pods, so if a computer goes down or a SAN breaks, gear is so inexpensive you just have backups in place.

So many of those finger-pointing exercises are gone; just walk down the hall, with production and post all being on the same team.

ACES Audit

Josh Pines, Technicolor, and Howard Lukk, Disney

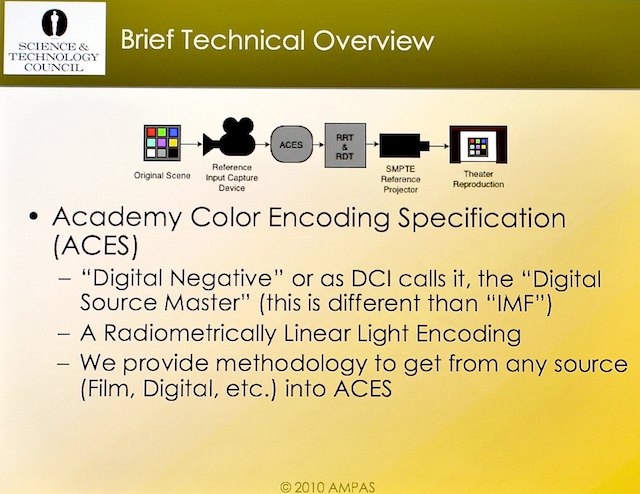

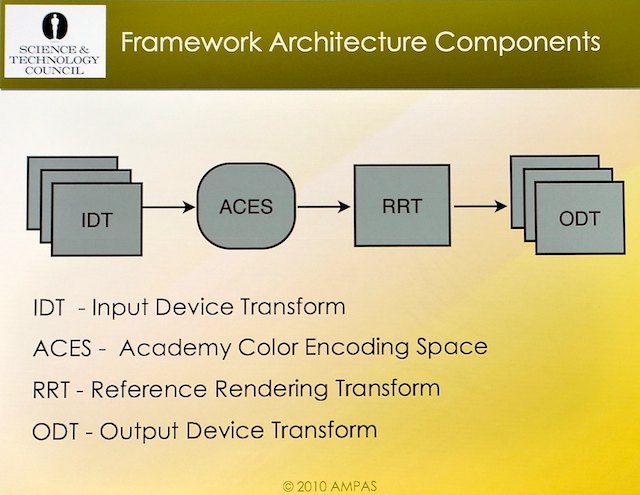

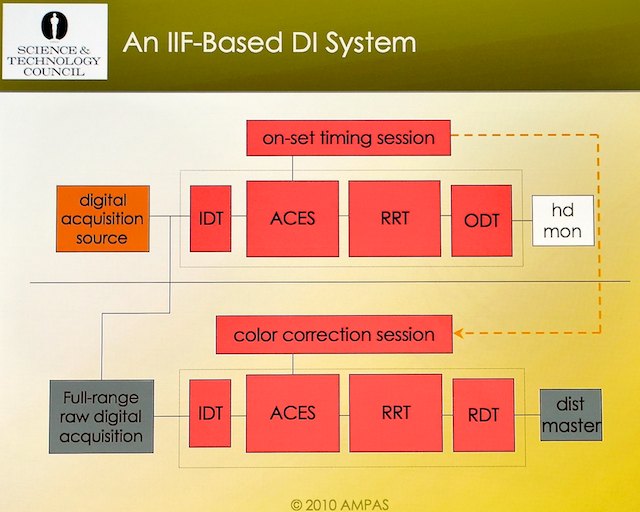

Characterize a digital camera to determine its IDT (Input Device Transform). IDTs are provided in CTL (Color Transformation Language); camera vendors are doing this. CTL isn't in a format that plugs into all the color tools; it needs translation.

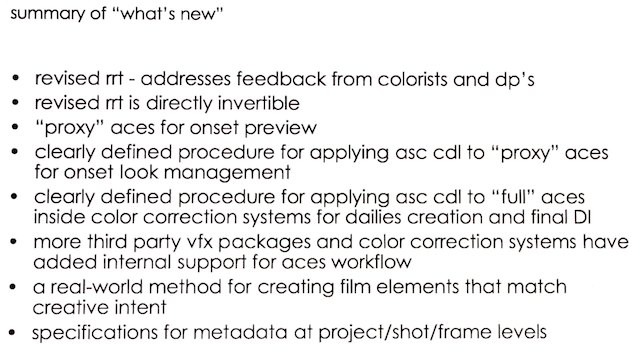

RRT: Reference Rendering Transform. Some liked it, some didn't; a new RRT is coming out. The RRT has to be invertible, and the new one will be, so those working in 709 can convert back to ACES color encoding.

Needed way to preview on set. All color correctors have their own way to deal with ACES internally, but CDLs weren't portable. Added “proxy ACES”, cameras can apply a 3×3 LUT, and a clear spec for translating proxy ACES decisions to color correctors. Spec in process.
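
For reference, the CDL itself is simple per-channel math. A minimal sketch of the ASC CDL operation in Python: slope, offset, and power per channel, then saturation around Rec.709 luma. It says nothing about how any given color corrector maps CDL values into ACES or ACESproxy, which is the portability problem described above. Clamping also varies between implementations; this sketch clamps only negative values before the power function, and the grade values in the example are hypothetical.

```python
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]

# Rec.709 luma weights, which the ASC CDL saturation operation uses
LUMA_WEIGHTS = (0.2126, 0.7152, 0.0722)


def apply_cdl(rgb: RGB, slope: Sequence[float], offset: Sequence[float],
              power: Sequence[float], saturation: float = 1.0) -> RGB:
    """Apply ASC CDL slope/offset/power per channel, then saturation."""
    sop = []
    for value, s, o, p in zip(rgb, slope, offset, power):
        graded = value * s + o
        graded = max(graded, 0.0)  # clamp negatives so the power is defined
        sop.append(graded ** p)
    luma = sum(w * c for w, c in zip(LUMA_WEIGHTS, sop))
    # saturation scales each channel's distance from luma
    r, g, b = (luma + saturation * (c - luma) for c in sop)
    return (r, g, b)


# A warm, slightly desaturated grade on an 18% gray pixel (hypothetical values)
print(apply_cdl((0.18, 0.18, 0.18), slope=(1.1, 1.0, 0.9),
                offset=(0.0, 0.0, 0.01), power=(1.0, 1.0, 1.0), saturation=0.9))
```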

Disney's perspective: biggest drive is from archival. Currently transfers have been done with proprietary LUTs and secret sauces. “Everything we have in the vault isn't worth anything, because I don't know how to re-encode it.” Huge step: “101 Dalmatians” release in 2011 was able to use the ACES RRT to generate 709 output. “Santa Claus” has lots of red; in the first RRT, reds go red-orange, so it was completed traditionally instead. “Roger Rabbit” worked with the RRT. Tried a full-on short production, “Telescope”, F65 4K ACES all the way. Super-saturated color palette, “saturation to 200%, and it never broke a sweat”. After Effects does ACES wonderfully, Photoshop not at all: why not? Realtime OpenEXR 4K playback “challenging”. Overall, this is a really great space to work in.

Comment: Camera IDTs are sent to the Academy as CTLs, but to color corrector folks as part of their SDKs. ACES to Rec.709 works well, if the ODT (Output Device Transform) gets you where you want to go. Doesn't eliminate the need for a trim pass (but may eliminate the need for secondaries in the trim pass).

VFX: A Changing Landscape

Moderator: John Montgomery, FXGuide.com

Simon Robinson, The Foundry

Patrick Wolf, Pixomondo

Mike Romey, Zoic Studios

Todd Prives, Zync

If the VFX house behind an Oscar-winning film is declaring bankruptcy, there must be something wrong.

Simon: Trends: pre/on-set/post, episodic and short form, “cloud”, “open, scalable, modular, fast” workflows and pipelines (industry is pretty good at this stuff).

Pre/on-set/post: Nuke used in story development, previz, on-set, in post. How could Nuke be a better tool for these uses? Is there a better tool for each stage of the work? Making things modular; speed; continuity from story to finishing.

Episodic: quality bar getting higher, some film crossover. Speed; “the generalist”; workflow & pipeline.

Cloud: scalable processing and application delivery; pipeline?

Bring it together: modular, but serving the “generalist”; speed & scalability; workflow & pipeline from story to finish.

Nuke 7 uses CPUs and/or GPUs with no visible difference in rendering. New denoiser using this framework. Can write raw code in Nuke, compile it and run it in realtime. “But why would we make that?” “Because it's f*****g cool.”

Patrick: Pixomondo has 12 branches in 5 countries, 660+ people. Challenges: tracking distributed projects, exchanging data, maintaining standards, bridging environmental differences, deploying tools, utilizing remote resources, not drowning in tickets on the helpdesk!

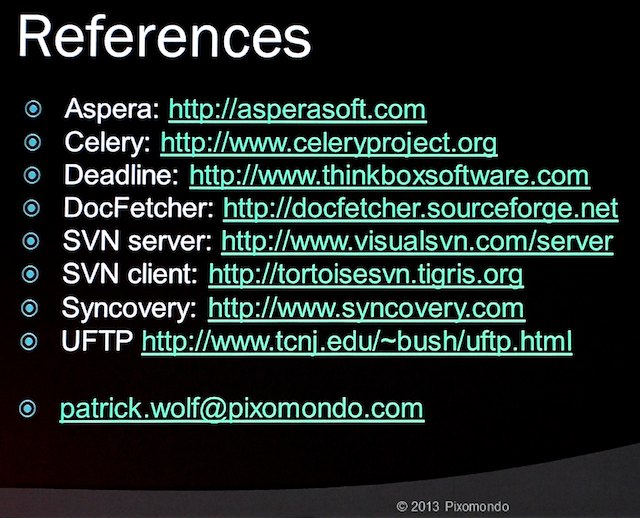

Enforce strict naming conventions, prioritize base toolset (3dsMax, Nuke, Maya, Shotgun, Deadline, RV), Communication wiki and helpdesk, infrastructure with shared APIs, mount points; enrolling SW vendors to evolve products.
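
Strict naming conventions are easiest to enforce when a script checks them at submit time. A minimal sketch, assuming a hypothetical SHOW_SEQ_SHOT_task_vNNN.ext pattern and extension list; Pixomondo's actual convention wasn't described.

```python
import re
from typing import Dict, Optional

# Hypothetical convention: SHOW_SEQ_SHOT_task_vNNN.ext
NAME_PATTERN = re.compile(
    r"^(?P<show>[A-Z]{2,6})_(?P<seq>\d{3})_(?P<shot>\d{4})_"
    r"(?P<task>[a-z]+)_v(?P<version>\d{3})\.(?P<ext>exr|dpx|nk|ma|max)$"
)


def parse_name(filename: str) -> Optional[Dict[str, str]]:
    """Return the fields of a conforming file name, or None if it breaks the rules."""
    match = NAME_PATTERN.match(filename)
    return match.groupdict() if match else None


for name in ("ABC_010_0040_comp_v003.exr", "abc_final_FINAL2.exr"):
    print(name, "->", parse_name(name))
```

Because the fields come back parsed, the same check can feed downstream tools (shot lookup, versioning) instead of each tool re-parsing names its own way.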

Old methods: develop tool, test, zip up and ship to 12 branches, if it didn't work… Now, use Subversion (version control system) with two repositories, one for dev and test (“dev”), one for deployment (“bin”); all branches (offices) pull from deployment repository. Indexed and searchable, can see where code is being used.

Similarly, automated process for remote rendering, instead of zipping scenes and shipping 'em around manually, zipping up results and shipping back. Scalable, automatic rendering in background, distribute one job across multiple render farms using Amazon SQS. In the future, auto-select render farm based on specific criteria. So now it's: submit, render, QC frame, QC job; very simple.
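
The underlying pattern is a shared work queue: the job is split into frame chunks, and each render farm pulls the next chunk when it has capacity. A minimal sketch, using Python's in-process `queue.Queue` and threads as stand-ins for Amazon SQS and remote farms (an assumption for illustration; it doesn't touch the SQS API). Farm names are hypothetical.

```python
import queue
import threading
from typing import Dict, List, Tuple


def make_chunks(first: int, last: int, size: int) -> List[Tuple[int, int]]:
    """Split an inclusive frame range into chunks of at most `size` frames."""
    return [(s, min(s + size - 1, last)) for s in range(first, last + 1, size)]


def distribute(first: int, last: int, size: int, farms: List[str]) -> Dict[int, str]:
    """Render one job across several farms that pull chunks from a shared queue.

    Returns a mapping of frame number -> name of the farm that took it.
    """
    jobs = queue.Queue()              # stand-in for a hosted queue such as SQS
    for chunk in make_chunks(first, last, size):
        jobs.put(chunk)

    rendered: Dict[int, str] = {}
    lock = threading.Lock()

    def farm(name: str) -> None:
        while True:
            try:
                start, end = jobs.get_nowait()
            except queue.Empty:
                return                # queue drained: this farm is done
            for frame in range(start, end + 1):
                # a real farm would invoke the renderer here
                with lock:
                    rendered[frame] = name

    workers = [threading.Thread(target=farm, args=(n,)) for n in farms]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return rendered


result = distribute(1, 100, 10, ["farm_la", "farm_berlin", "farm_beijing"])
print(len(result), "frames rendered")
```

Because farms pull work rather than having it pushed to them, a faster or less busy farm naturally ends up taking more chunks.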

Todd: Zync is a cloud rendering solution. 3 years old, developed in-house for a prodco. Designed to replicate feel of a render farm without the costs. Over 6 million core render hours completed on 11 features, 100s of commercials. All VFX shots in “Flight” rendered in cloud. Rendering is a utility, like electricity or water. Pay as you need it, only when you need it. Infinite scalability. Flexible for a distributed workforce. Rendering licenses on demand (The Foundry, Autodesk, mental ray, V-Ray, etc.)

Challenges: Financial objections: changing the mindset from all-you-can-eat to pay-as-you-go. Bandwidth: how do I get all that data up and down reliably and fast? Security: is my project safe and secure?

Financial: extensive billing tools, metrics to predict tipping point comparing costs between local and cloud rendering. Give an estimate based on a 10-frame test render. Software gives up-to-the-minute billing statistics. Cloud benefits mostly to small / medium facilities with sporadic rendering needs.
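
The arithmetic behind a test-render estimate and a local-vs-cloud tipping point can be sketched as follows. All prices are hypothetical, and the linear extrapolation assumes the 10 test frames are representative of the whole shot; this is not Zync's actual billing model.

```python
def estimate_cloud_cost(test_core_hours: float, test_frames: int,
                        total_frames: int, price_per_core_hour: float) -> float:
    """Extrapolate a full job's cost from a short test render (linear scaling)."""
    core_hours_per_frame = test_core_hours / test_frames
    return core_hours_per_frame * total_frames * price_per_core_hour


def breakeven_core_hours(local_fixed_cost_per_year: float,
                         local_cost_per_core_hour: float,
                         cloud_price_per_core_hour: float) -> float:
    """Core-hours per year above which owning a farm beats renting cloud cores."""
    margin = cloud_price_per_core_hour - local_cost_per_core_hour
    if margin <= 0:
        return float("inf")   # cloud is no dearer per hour, so it always wins
    return local_fixed_cost_per_year / margin


# Hypothetical: 10 test frames took 5 core-hours; the shot is 2,000 frames.
print(estimate_cloud_cost(5.0, 10, 2000, 0.10))   # dollars for the full shot
print(breakeven_core_hours(50_000, 0.02, 0.10))   # yearly core-hours
```

Below the break-even figure, renting wins, which is consistent with the point that the cloud mostly benefits smaller facilities with sporadic rendering needs.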

Bandwidth: biggest challenge. Smart platform design. Big files, you have to upload 'em. Intelligent job tracking to prevent re-uploads of same assets for different artists, or keeping intermediate files online for later work. Workflow modifications, like pre-uploading large assets in the background so they'll be available when needed.
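
One way to avoid re-uploading the same asset for different artists is to key uploads by a hash of their contents, so identical files are recognized no matter who submits them or what they're called. A minimal sketch; the in-memory dict stands in for cloud object storage, and whether Zync tracks assets this way wasn't stated in the talk.

```python
import hashlib
from typing import Dict, Iterable, List


class UploadCache:
    """Skip uploads of assets whose exact bytes are already in cloud storage."""

    def __init__(self) -> None:
        self.remote: Dict[str, bytes] = {}   # stand-in for the cloud object store

    @staticmethod
    def key_for(data: bytes) -> str:
        """Content address: identical files get identical keys, whatever their names."""
        return hashlib.sha256(data).hexdigest()

    def upload(self, assets: Iterable[bytes]) -> List[str]:
        """Upload assets not seen before; return the keys actually transferred."""
        sent = []
        for data in assets:
            key = self.key_for(data)
            if key not in self.remote:
                self.remote[key] = data      # the slow transfer would happen here
                sent.append(key)
        return sent


cache = UploadCache()
print(len(cache.upload([b"texture", b"geometry"])))   # first artist's job
print(len(cache.upload([b"texture", b"animation"])))  # second artist reuses the texture
```

For large files, a real tool would hash them in chunks from disk rather than holding whole assets in memory.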

Security: using Amazon Web Services; very good on security, has undergone a 3rd-party audit.

zyncrender.com

Mike: Zoic Studios 50% episodic, commercial 23%, also films, games, design.

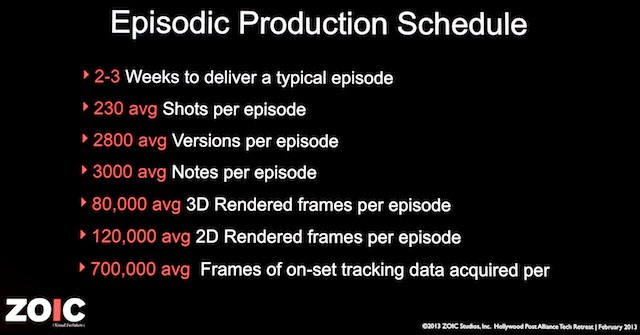

Episodics: True Blood, Once Upon a Time, Falling Skies, etc. Once Upon a Time: 6-7 days of greenscreen work, 2-3 weeks to deliver, 230 VFX shots, 2800 shot versions per episode:

Typical work for 'Once Upon a Time'

High-volume editorial conform workflow: create, transcode, send for approval, do it again the next day. Now using The Foundry's Hiero: basically an NLE with Nuke as a back end. We've used it for 7 months; 25 licenses. Most NLEs are black boxes, not scriptable or extensible. We have 40+ custom tools written for Hiero.

Database and plate preparation: typically delivered as plates and EDLs. Get a QuickTime from the client, get EDLs, find the source, prep an overcut. Days of working through the EDL, searching and cleaning up. With Hiero, a tool talks to Shotgun to fetch shot (?); another tool automates plate prep, generating previews, plates, proxies, degraining, etc. As soon as an EDL comes in, we can bring 200-300 shots online in 20 minutes.
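
The first step of plate prep, turning EDL events into source frame ranges to pull, can be sketched as below. This is a minimal illustration, not Zoic's Hiero tooling: it handles only CMX 3600-style cut events in non-drop-frame timecode, and the 24 fps rate, 8-frame handles, and sample EDL are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List

# CMX 3600-style cut: event, reel, track, "C", source in/out, record in/out
TC = r"(\d\d:\d\d:\d\d:\d\d)"
EVENT = re.compile(r"^(\d{3,})\s+(\S+)\s+(\S+)\s+C\s+" + r"\s+".join([TC] * 4) + r"$")


def tc_to_frames(tc: str, fps: int) -> int:
    """Convert non-drop-frame timecode to an absolute frame count."""
    h, m, s, f = (int(x) for x in tc.split(":"))
    return ((h * 60 + m) * 60 + s) * fps + f


@dataclass
class Plate:
    event: int
    reel: str
    src_in: int    # first source frame to pull, including the head handle
    src_out: int   # exclusive end frame, including the tail handle


def plates_from_edl(edl: str, fps: int = 24, handles: int = 8) -> List[Plate]:
    """Source ranges to pull for each video cut event, padded with handles."""
    plates = []
    for line in edl.splitlines():
        m = EVENT.match(line.strip())
        if not m or not m.group(3).startswith("V"):
            continue               # skip headers, comments, and audio events
        src_in = tc_to_frames(m.group(4), fps)
        src_out = tc_to_frames(m.group(5), fps)   # EDL out points are exclusive
        plates.append(Plate(int(m.group(1)), m.group(2),
                            max(src_in - handles, 0), src_out + handles))
    return plates


SAMPLE_EDL = """TITLE: EP101_VFX
FCM: NON-DROP FRAME
001  A001C003 V     C        01:02:03:00 01:02:05:12 00:59:58:00 01:00:00:12
* FROM CLIP NAME: A001C003.MOV
002  A002C010 V     C        02:00:00:00 02:00:01:00 01:00:00:12 01:00:01:12
"""

for plate in plates_from_edl(SAMPLE_EDL):
    print(plate)
```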

Conforming and overcutting: Avid can't keep up with the iterations of versions. Hiero custom tools query backend for version info, lets artist pick a QCed version (via Shotgun database) and conform it directly, creating notes in database that are available then in Maya, etc. Auto-create burn-ins, transcodes, all different flavors of overcuts. Off to the render farm it all goes. Composites are all done in Nuke, and Hiero puts a timeline on top of it.
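
The version-picking step boils down to a query: for each shot, the newest version that passed QC. A minimal sketch over in-memory records; in Zoic's pipeline the data lives in Shotgun, and the shot names and status codes here are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass
class Version:
    shot: str
    number: int
    status: str   # hypothetical status codes, e.g. "wip" or "qc_approved"


def pick_for_conform(versions: Iterable[Version],
                     approved: str = "qc_approved") -> Dict[str, Optional[int]]:
    """Highest approved version number per shot; None if nothing is approved yet."""
    best: Dict[str, Optional[int]] = {}
    for v in versions:
        current = best.setdefault(v.shot, None)
        if v.status == approved and (current is None or v.number > current):
            best[v.shot] = v.number
    return best


history = [
    Version("010_0040", 1, "qc_approved"),
    Version("010_0040", 3, "qc_approved"),
    Version("010_0040", 4, "wip"),
    Version("010_0050", 1, "wip"),
]
print(pick_for_conform(history))
```

In this sketch, a shot that maps to None would simply keep whatever is already in the cut until a version is approved.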

Panel comments:

Big challenge, cloud gives ability to scale big, but how to scale smaller: can we just make a render happen in 12 minutes with GPU instead of 1 hour on CPU; how do you reduce the size of your footprint?

Before we had Hiero, we used After Effects. We spent a lot of time handling color management from After Effects, used various programs, had to track in asset management system. The idea behind building a pipeline is to build in best practices, educate artists, and avoid problems.

Nuke GPU-rendering in beta now. Magnitude of speed improvement? Not known yet.

What about tax jurisdiction, with work going “out of the country” to be rendered in the cloud? Interesting question; not sure.

Most rendering software is still CPU based “and will be for five years”.

What about the Xmas Amazon failure? Not a huge concern, looking at data redundancy, but no one else has the scale of Amazon.

File-Based Architecture: End-to-End

Jim DeFilippis & Andy Setos, Fox

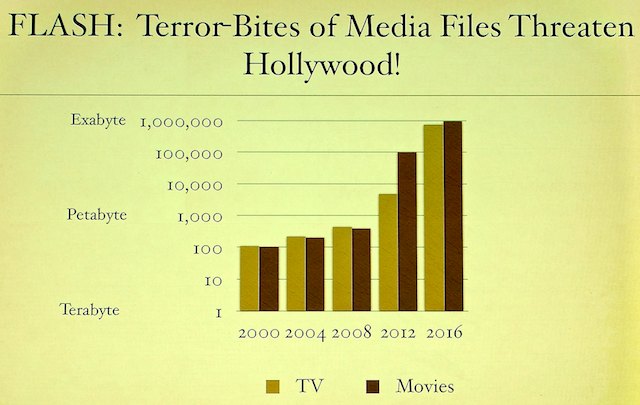

Seems like there are plenty of TV shows and movies coming out… but there's a data tsunami coming. The storage challenge: archiving, speed, file format anarchy. A TV show generates 30 hrs @ 200 Mbps. A season is 60 TB. Movies can be 1-2 Petabytes; more for 4K, 3D, HFR.
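
These figures hang together. A quick check, assuming the 30 hours at 200 Mbps is per episode and using decimal terabytes:

```python
def stored_bytes(hours: float, megabits_per_second: float) -> float:
    """Bytes written by `hours` of material recorded at the given bit rate."""
    return hours * 3600 * megabits_per_second * 1e6 / 8


episode_tb = stored_bytes(30, 200) / 1e12   # 30 hours at 200 Mbps
episodes_in_season = 60 / episode_tb        # a 60 TB season
print(f"{episode_tb:.2f} TB per episode, ~{episodes_in_season:.0f} episodes per 60 TB")
```

That's 2.7 TB per episode, inside the 2-4 TB “large object” range mentioned below, and a 60 TB season works out to about 22 episodes.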

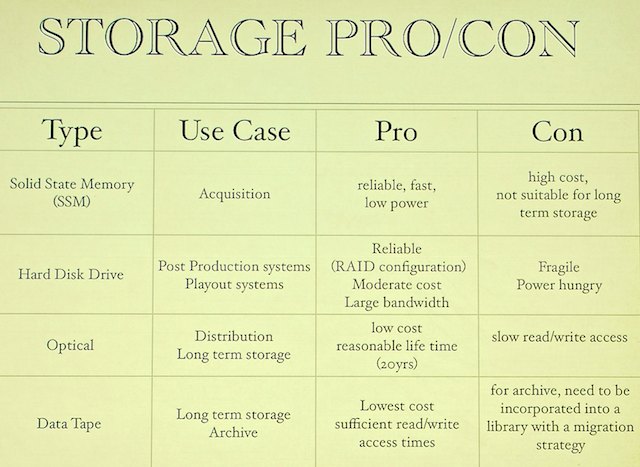

How to store: spinning disks? tape? cloud? (Cloud is one of the most dangerous phrases, especially when a client asks about it.)

A TV episode is a “large object” at 2-4 TB.

(Also shown: the cloud, but it wasn't onscreen long enough to grab!)

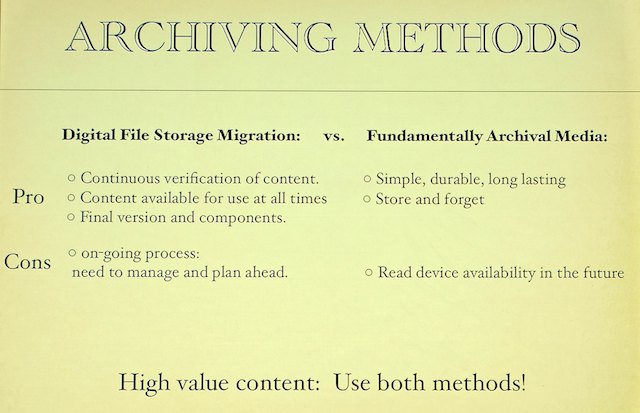

Archiving: bits vs atoms (migration vs fundamentally archival long-lived storage); commercial interest of ~90 years; how to fund; lifetime of playback / read-back capability; time to migrate (outflow must keep up with inflow); could the Internet be a good solution?
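
The “outflow must keep up with inflow” point can be made concrete. A minimal sketch, assuming new ingest and migration of older material share the same transfer capacity; all figures are hypothetical.

```python
def migration_years(archive_tb: float, inflow_tb_per_year: float,
                    migrate_tb_per_day: float) -> float:
    """Years to migrate an archive that keeps growing during the migration.

    Assumes ingest of new material and migration of old material share the
    same transfer capacity. Returns infinity if inflow uses it all.
    """
    capacity_per_year = migrate_tb_per_day * 365
    spare = capacity_per_year - inflow_tb_per_year
    if spare <= 0:
        return float("inf")
    return archive_tb / spare


# Hypothetical: 10 PB archive, 1.5 PB/year of new material, 20 TB/day of throughput
years = migration_years(10_000, 1_500, 20)
print(f"{years:.1f} years to migrate")
```

If that figure approaches the usable life of the media (or of the drives that can read it), the archive never gets ahead.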

Fundamentally archival media: cave paintings (30,000 years old); stone tablets (5,000 years); film (1,000+ years… we think… mylar and silver).

Digital, file-based media: data tape, drives; fragile and short-lived. It's all about the bits, the media file, not the storage mechanism.

Archiving methods:

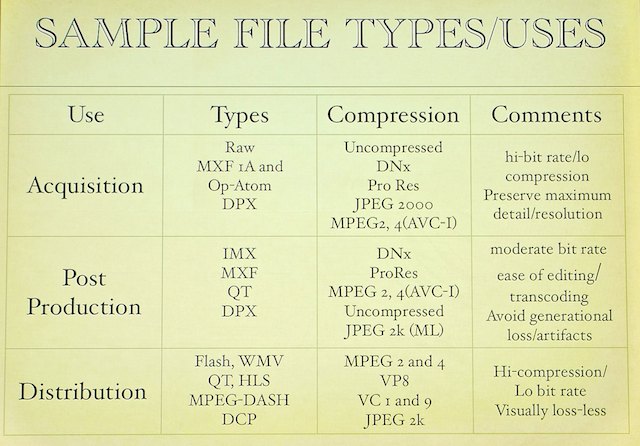

Why so many file formats? Different requirements at different stages of production; competitive pressures (e.g., Sony vs Panasonic); diverse distro types.

Will there ever be one common file format? Well, no. But we should minimize the number of types, be mindful of the “waterfall” effects in transcoding.

What do we do? Go back to 35mm film? Too late! Need to invest in making digital work.

Summary: no simple solutions. Need to carefully select storage, network, and compression choices. Don't get hung up on past solutions for archiving; face reality. For each stage in production, there will be different storage / compression requirements. While there may never be one file format, work hard on minimizing the number of codecs / formats in use.

IMF: Where Are We Now?

Moderator: Jerry Pierce

Simon Adler, AmberFin

Henry Gu, Green International Consulting

Mike Krause, Disney

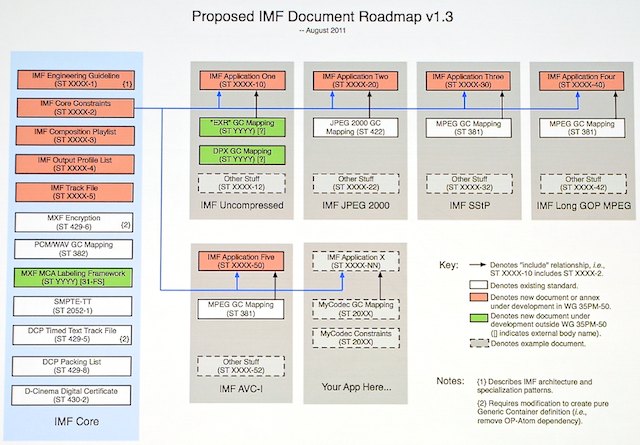

Interoperable Master Format, our 5th HPA presentation on IMF.

Jerry: IMF is similar to DCP for distribution of final video products, a tape replacement. Not for origination or mastering. 35pm50 is the SMPTE group.

(Yes, it's hard to read. It's a mighty complex roadmap.)

Simon: Making IMF Boring. AmberFin does ingest, transcode, QC. Why boring? Because it should just work; things that just work (e.g., plumbing) are boring. Right now, though, it's exciting.

We should learn from MXF rollout in 2005+. Avoid vendor-specific and user-specific enhancements. Fix the specs, and improve interoperability.

Fix the specs and then don't change them. Many MXF files created before the spec was firmed up. We don't want another “6 different J2K implementations” situation.

Specs have holes; software has bugs; it takes three or more vendors working on a spec together to get coverage (W3C model). Two vendors working in private doesn't cut it.

Software buyers and software vendors need to cooperate, work together, not rush into things. Have open discussions.

MXF started out well but then interest declined (lack of user input). That's when the “custom enhancements” crept in. Instead let's codify IMF development practice. Physical and virtual plug-fests important to make the spec real. Publicize them, get vendors and users together, really keep the communication going.

Open, publicly available test clips are essential. The more, the better.

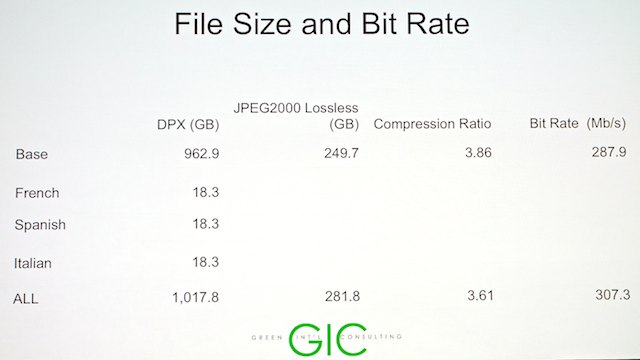

Mike (live from Burbank's Disney studio): 35,100 reasons why Disney wants IMF. Partners: Disney, GIC, Pixar, others. Test: make 14 versions of “The Incredibles”, two sets of IMFs, one lossless RGB, the other lossy YCbCr. HD 1080p. English plus 13 other languages; only the different-language bits (main titles, translated graphics, etc.) are re-encoded to save space on disk. Total of 28 versions. IMF works: standard isn't finished, but what exists now works well. The basics are solid, and we have a good foundation to build workflows around, much better than with current systems.
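
The storage win comes from versions sharing track files. A toy model of that idea, not of IMF's actual CPL schema: each language version is assumed to reference every base shot plus its own insert shots, using the shot counts Henry gives below (2,227 base shots, 22 inserts per language) and ignoring audio. Real inserts replace specific base shots, so this is a simplification; file names are hypothetical.

```python
from typing import Dict, List


def file_counts(base: List[str], inserts: Dict[str, List[str]]) -> Dict[str, int]:
    """Track files stored when versions share a base vs. as full standalone copies."""
    shared = len(set(base)) + len({f for files in inserts.values() for f in files})
    standalone = sum(len(base) + len(files) for files in inserts.values())
    return {"shared": shared, "standalone": standalone}


base_shots = [f"base_{i:04d}" for i in range(2227)]
languages = ["en"] + [f"lang{i:02d}" for i in range(13)]   # English + 13 others
insert_shots = {lang: [f"{lang}_insert_{j:02d}" for j in range(22)] for lang in languages}
print(file_counts(base_shots, insert_shots))
```

In this model the shared approach stores about 8% as many files (2,535 vs. 31,486).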

Henry: IMF – a Real World Application. Working on “The Incredibles”. Started with “a bunch of disks”: DPX, JPGs, TIFFs, etc.; wav and wav and wav…; hundreds of thousands of frames; different timelines in different languages.

Separate base and insert materials. 2,227 shots for base, 22 insert shots (titles, signs, newspaper images) for each language, 33 audio files for each language. Some components in full-range, some in video range; some in 2.39:1 and others in 1.77:1.
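
Mixing full-range and video-range components means some material has to be rescaled before it can sit in one composition. A sketch of the usual 10-bit mapping for RGB or luma code values (full range 0-1023, video range 64-940); chroma, which uses 64-960, isn't handled here, and rounding means a round trip isn't guaranteed to be lossless.

```python
def full_to_video(code: int) -> int:
    """Map a 10-bit full-range value (0-1023) to video range (64-940)."""
    return 64 + round(code * 876 / 1023)


def video_to_full(code: int) -> int:
    """Map a 10-bit video-range value back to full range, clipping any
    sub-black or super-white excursions outside 64-940."""
    full = round((code - 64) * 1023 / 876)
    return min(max(full, 0), 1023)


for value in (0, 512, 1023):
    print(value, "->", full_to_video(value), "->", video_to_full(full_to_video(value)))
```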

Most time spent just looking for files. Encoding was the simple bit. QC for all the base media was the tricky bit.

Dan: Making 4K work with IMF. Sony Pictures wanted to produce over 40 4K features in 4 months. Using Application 4 of IMF (wide color gamut, 4K).

4K 16-bit files, color to match Blu-ray masters. J2K Broadcast level 5, UHD (quadHD) files. 16TB compressed down 12:1 with no visible losses. At 20:1, some very slight visible losses. QC on a Sony consumer 4K set. Create deliverables from IMF using DVS Clipster. Well on target to get it all done on time.

Not just about movies: all the Sony 4K TV shows we talked about on Tech Retreat Day 1 are being put into IMF.

John: three vendors in demo room working on IMF: DVS, AmberFin, and GIC. Two have fully implemented ingest, workflow, output. One hasn't, but only due to lack of customer demand so far. Not yet boring: audio channel legaling (?); timed text (captions, subtitles), incredibly wide implementation profile.

Working in Raw

Moderator: Paul Chapman, FotoKem

Marco Solorio, OneRiver Media

Thomas True, NVIDIA

Paul: Why work in raw? Better image quality, greater exposure control, access to full sensor data, path to ACES is easier. Challenges: render times, archiving (do you have to archive the entire software environment used to extract the data? Or expand to DPX instead?).

Who supports raw? Colorfront, DVS, FotoKem, nextLAB, MTI, (more).

Multiple vendor SDKs for raw extraction. Some are implementing their own raw developers. Version skew; may have to revert, SDK release schedule random and unpredictable. Should it wind up in open source?

Three places to touch the data: dailies, VFX, finishing. They may use different methods (RED software vs RED ROCKET) with different results!

May you live in interesting times: RED lawsuit against Sony.

Marco: Working with Raw. Acquisition: Sony, Arri, RED, BMDCC (Blackmagic Design Cinema Camera). I've been using the BMDCC for a while. It uses the CinemaDNG format. Raw shooting is no longer the preserve of the elite. For editors, 2013 will introduce a flurry of raw acquisition; make sure your systems are up to the task; make sure the added time and costs fit in your budget.

Raw has greater flexibility, no baked-in color balance, ability to pull image data “from the dead” (e.g., where it would be lost in Rec.709).

(Sample images showing gross exposure changes successfully accomplished: exposing to the right and pulling down in post; doing day-for-night and keeping detail; recovering “blown” highlights seen through window.)
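
Those gross exposure moves work because raw values (after black-level subtraction) are proportional to light, so a change of N stops is just a multiply by 2^N, and nothing is lost unless the sensor itself clipped. A minimal sketch with hypothetical values: the 1.6 below stands for a highlight that would clip in a Rec.709 render but is still intact in the raw.

```python
from typing import List


def adjust_exposure(linear: List[float], stops: float) -> List[float]:
    """Scale scene-linear values by 2**stops (one stop = twice the light)."""
    gain = 2.0 ** stops
    return [v * gain for v in linear]


def clipped_fraction(raw: List[int], white_level: int) -> float:
    """Share of photosites at sensor saturation; no grade can recover these."""
    return sum(1 for v in raw if v >= white_level) / len(raw)


# Exposed to the right, then pulled down one stop in post
print(adjust_exposure([0.36, 0.9, 1.6], -1.0))
# Hypothetical 12-bit sensor data with a white level of 4095
print(clipped_fraction([100, 2000, 4095, 4095], 4095))
```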

Disadvantages of raw: huge image size, non-realtime playback, a return to proxies for editing. Even if your NLE can handle raw in realtime, it's not an efficient way to work. Budget for added time, storage, archival.

Transfer: use ShotPut Pro, Pomfort Silverstack, etc. Raw can take a LOT of time to transfer. Back up to archival media ASAP.
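
Much of the point of offload tools is making copies verifiable. A minimal sketch of the idea, not of how ShotPut Pro or Silverstack work internally: copy each clip to every destination, re-read the copy, and compare checksums. MD5 is used only because it's in Python's standard library; the demo paths and clip name are hypothetical.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path
from typing import List


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large camera files needn't fit in memory."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(chunk_size), b""):
            h.update(block)
    return h.hexdigest()


def verified_copy(src: Path, destinations: List[Path]) -> str:
    """Copy a clip to every destination, re-hash each copy, and fail loudly on mismatch."""
    expected = file_digest(src)
    for dest in destinations:
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dest)
        if file_digest(dest) != expected:
            raise IOError(f"checksum mismatch: {dest}")
    return expected


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        card = Path(tmp, "card", "A001C001.dng")
        card.parent.mkdir()
        card.write_bytes(b"\x00" * 4096)     # stand-in for a camera file
        copies = [Path(tmp, "shuttle", card.name), Path(tmp, "raid", card.name)]
        print(verified_copy(card, copies))
```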

Software: SpeedGrade, Resolve, Scratch, REDCINE-X, Lightroom, Nucoda, Sony RAW Viewer, Lustre.

Resolve lets you import all raw formats, preview video and audio together, good transcoder for proxies, features for metadata text overlays, very affordable.

Workflow scenarios: “Telecine” pre-grade to edit, e.g., with Resolve to create ProRes. Pre-grade through Lightroom. “Adobe” workflow. Transcode to CineForm RAW and use any NLE. Make proxies, edit, then conform the raw sources. The first and last are the most common in our facility.

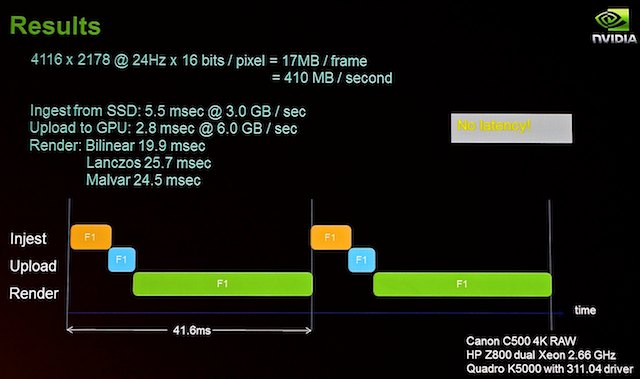

Thomas: Best practices for GPU-accelerated 4K raw post. Ideally it's a non-destructive workflow, with raw files and metadata flowing together.

Get raw image, linearize and adjust tonal scale; deBayer; white balance and color space conversion; tone mapping / gamma correction. As this is pixel-based, ideal for parallel processing. GPU: highly threaded streaming multiprocessors. 64-bit memory with high bandwidth, fully pipelined ALU, special function units.
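
Because each step works on small neighborhoods of photosites, the work parallelizes well. A minimal pure-Python sketch of the four steps for one RGGB quad at a time, assuming the simplest possible demosaic (“superpixel”: one RGB pixel per 2×2 quad, at half resolution) and a plain power-law gamma; production debayer algorithms interpolate to full resolution and use proper transfer functions. The black/white levels, white-balance gains, and matrix are hypothetical inputs.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]
Matrix = Sequence[Sequence[float]]


def develop_quad(mosaic: List[List[int]], qy: int, qx: int, black: int, white: int,
                 wb: Sequence[float], matrix: Matrix, gamma: float = 2.2) -> RGB:
    """Develop one 2x2 RGGB photosite quad into one output RGB pixel.

    Each quad is independent of the others, so on a GPU one thread per quad works.
    """
    y, x = 2 * qy, 2 * qx

    def linearize(v: int) -> float:      # 1. normalize raw values to 0..1
        return min(max((v - black) / (white - black), 0.0), 1.0)

    r = linearize(mosaic[y][x])                                   # 2. deBayer:
    g = (linearize(mosaic[y][x + 1]) + linearize(mosaic[y + 1][x])) / 2  # avg greens
    b = linearize(mosaic[y + 1][x + 1])
    balanced = (r * wb[0], g * wb[1], b * wb[2])                  # 3. white balance
    converted = [sum(m * c for m, c in zip(row, balanced)) for row in matrix]  # 3. color
    return tuple(min(max(c, 0.0), 1.0) ** (1 / gamma) for c in converted)  # 4. gamma


def develop(mosaic: List[List[int]], black: int, white: int,
            wb: Sequence[float], matrix: Matrix) -> List[List[RGB]]:
    """Develop a whole mosaic, quad by quad (half resolution)."""
    return [[develop_quad(mosaic, qy, qx, black, white, wb, matrix)
             for qx in range(len(mosaic[0]) // 2)]
            for qy in range(len(mosaic) // 2)]


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
tiny = [[1000, 1000, 0, 0],
        [1000, 1000, 0, 0]]          # one white quad, one black quad (RGGB)
print(develop(tiny, black=0, white=1000, wb=(1.0, 1.0, 1.0), matrix=IDENTITY))
```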

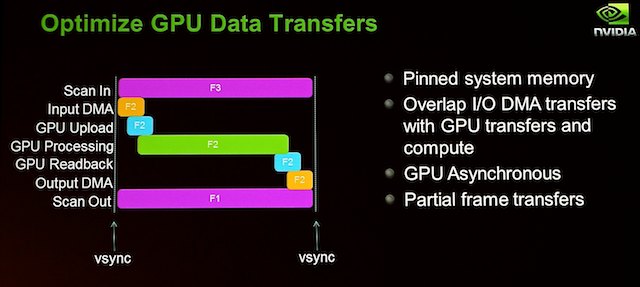

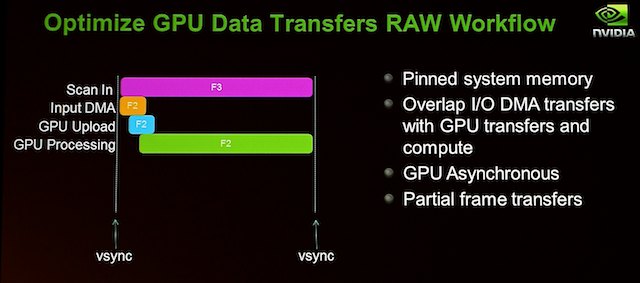

If we optimize our workflow so we can leave the image in the GPU for further processing, that gets simplified further:

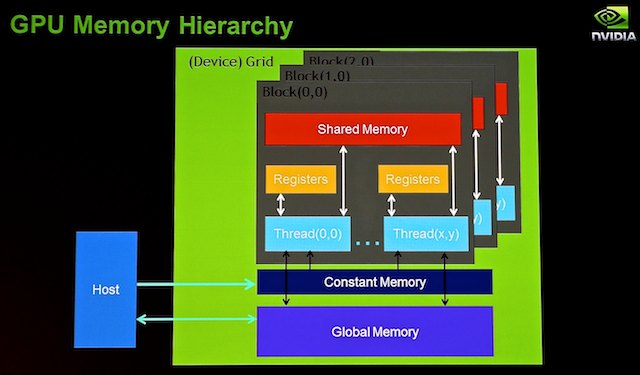

Direct GPU data transfer; shared memory with DMA to memory, DMA to GPU. Threading: IO thread for DMA to memory; DMA thread for DMA to GPU; draw thread. Each thread computes components for a photosite quad (2×2). Avoids thread divergence since all have same complexity of work.
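
The three-thread structure is a classic pipeline: while one frame is drawn, the next is being transferred to the GPU and the one after that read from disk. A minimal sketch of just that structure, assuming Python threads and bounded queues as stand-ins for the real I/O, DMA, and GPU work; the strings passed along are placeholders for GPU buffers.

```python
import queue
import threading
from typing import List

STOP = object()   # sentinel telling the next stage there are no more frames


def run_pipeline(frames: List[bytes], depth: int = 3) -> List[str]:
    """Overlap reading, uploading, and drawing with one thread per stage."""
    to_upload: queue.Queue = queue.Queue(maxsize=depth)   # bounded: back-pressure
    to_draw: queue.Queue = queue.Queue(maxsize=depth)
    drawn: List[str] = []

    def io_stage() -> None:               # stands in for reading frames from disk
        for i, data in enumerate(frames):
            to_upload.put((i, data))
        to_upload.put(STOP)

    def dma_stage() -> None:              # stands in for the host-to-GPU copy
        while (item := to_upload.get()) is not STOP:
            i, data = item
            to_draw.put((i, f"gpu_buffer[{len(data)} bytes]"))
        to_draw.put(STOP)

    def draw_stage() -> None:             # stands in for debayer/grade/display
        while (item := to_draw.get()) is not STOP:
            i, buffer = item
            drawn.append(f"frame {i}: {buffer}")

    threads = [threading.Thread(target=s) for s in (io_stage, dma_stage, draw_stage)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return drawn


for line in run_pipeline([b"\x00" * 10, b"\x00" * 20, b"\x00" * 30]):
    print(line)
```

The bounded queues matter: if drawing falls behind, the earlier stages block instead of piling up frames in memory.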

Shared memory is very fast to access. Global memory is large and read/write, but much slower to access.

Texture memory: separate texture cache with out-of-bounds handling, interpolation, number format conversion.

Constant memory: 64kB/device, cached, as fast as a register, use for passing data to all threads.

Optimize compute to reduce global memory access. Shared memory is about 3x faster. Avoid shared memory bank conflicts: use four different color planes to store raw pixel values (R, G, G, B).

Qs: archive the raws in case of a grading change ten years later? Yes. But some want to bake in the grading decisions and never let them change.

RAW is not an acronym for Really Awesome (or Awful) Workflow.

Save raws for future-proofing? Only if you save the software tools for decoding it [and then you need the contemporary hardware and OS, too. -AJW]

What about a standard raw format, like CinemaDNG? BMDCC uses CinemaDNG.

Digital Intermediates Exchange Network

John McCluskey, Nevion

Using real-time J2K for remote collaboration.

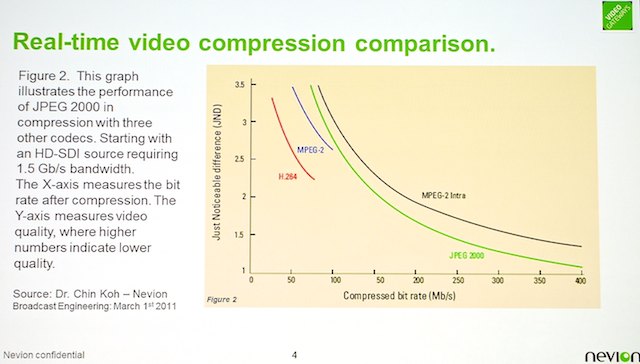

J2K (JPEG 2000) for interchange, for real-time applications. One advantage is very low latency, only 2-3 frames (unlike MPEG-2 or MPEG-4, with 1-second latency).

Quality is subjective and bit rate is content-specific. Lots of detail (high spatial activity) is expensive for wavelets and requires more bits. No effect from motion, because it's all intraframe.

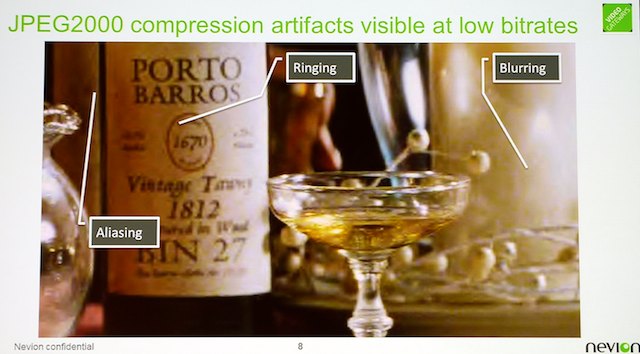

General softening, ringing, blurring as bit rate is lowered.

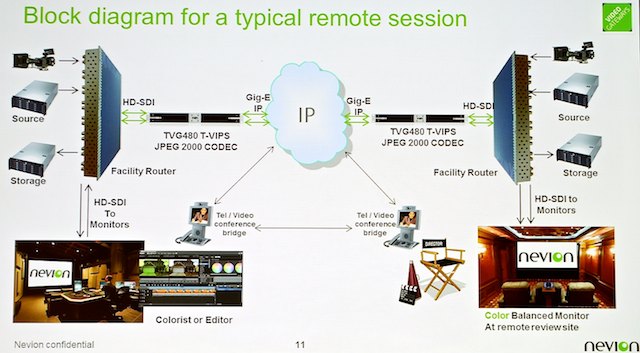

Remote viewing of editing via IP networks. Link two remote locations with TVG480 encoders on the 'Net, even multipoint collaboration. Remote color grading using JPEG2000 IP Video Gateways.

Typically this is done over a private network with Gigabit speed.

99% of the time the challenge is the IP network, and how you troubleshoot that to get the necessary quality of service.

Film-production operators aren't network-aware or especially technical, so the system needs an easy-to-understand scheduling system. Set up a scheduled event, pick a source and a destination, create salvoes of routing commands. Every equipment vendor has its own control interface; we want to expand this via SNMP to bring those other routers under common control (and do things like firmware upgrades remotely). Network topology designer app, to build network routing through valid paths with sufficient bandwidth. Also use SIPS (Streaming Intelligent Packet Switching) to provision multiple paths: if one path goes down, there's a secondary path.
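
Routing “through valid paths with sufficient bandwidth”, plus a secondary path, can be sketched as a graph search. A minimal illustration, not Nevion's algorithm: a breadth-first search that ignores links without enough free capacity, then a second search that avoids every link the first path used. The sites and capacities are hypothetical.

```python
from collections import deque
from typing import Dict, FrozenSet, List, Optional, Set, Tuple

Links = Dict[Tuple[str, str], float]     # (site_a, site_b) -> free Mbps, undirected


def find_path(links: Links, src: str, dst: str, need_mbps: float,
              avoid: Optional[Set[FrozenSet[str]]] = None) -> Optional[List[str]]:
    """Fewest-hop path using only links with at least `need_mbps` free capacity."""
    avoid = avoid or set()
    adjacent: Dict[str, List[str]] = {}
    for (a, b), free in links.items():
        if free >= need_mbps and frozenset((a, b)) not in avoid:
            adjacent.setdefault(a, []).append(b)
            adjacent.setdefault(b, []).append(a)
    previous: Dict[str, Optional[str]] = {src: None}
    frontier = deque([src])
    while frontier:                         # breadth-first search
        node = frontier.popleft()
        if node == dst:
            path: List[str] = []
            step: Optional[str] = node
            while step is not None:
                path.append(step)
                step = previous[step]
            return path[::-1]
        for nxt in adjacent.get(node, []):
            if nxt not in previous:
                previous[nxt] = node
                frontier.append(nxt)
    return None


def primary_and_backup(links: Links, src: str, dst: str, need_mbps: float):
    """A primary path plus a backup sharing no links with it (greedy; may miss one)."""
    primary = find_path(links, src, dst, need_mbps)
    if primary is None:
        return None, None
    used = {frozenset(hop) for hop in zip(primary, primary[1:])}
    return primary, find_path(links, src, dst, need_mbps, avoid=used)


# Hypothetical sites and free capacity in Mbps
network = {("Burbank", "LAX"): 1000, ("LAX", "NYC"): 1000, ("Burbank", "SJC"): 1000,
           ("SJC", "DEN"): 1000, ("DEN", "NYC"): 1000, ("SJC", "NYC"): 300}
print(primary_and_backup(network, "Burbank", "NYC", need_mbps=500))
```

The greedy approach can fail to find a backup even when a disjoint pair exists; Suurballe's algorithm solves that case properly.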

With a centralized management interface, the push of a single “router button” configures all the on-site, remote, and Internet routing systems, storage and display configurations, and establishes a fully configured session.

IP nets offer increased flexibility and considerable cost savings over legacy wide-area transport systems.

Qs: How are you using MXF in your network? The TVG480 uses MXF to wrap compressed video and audio data for transmission over IP networks. Same for 3D: wrap the left-eye and right-eye streams to ensure they stay in sync over the 'net. How to ensure monitors at both locations are equally calibrated? Plan is to be able to store and recall that via SNMP. What about third-party telepresence? We'd like to integrate that so it's just like starting a web call.

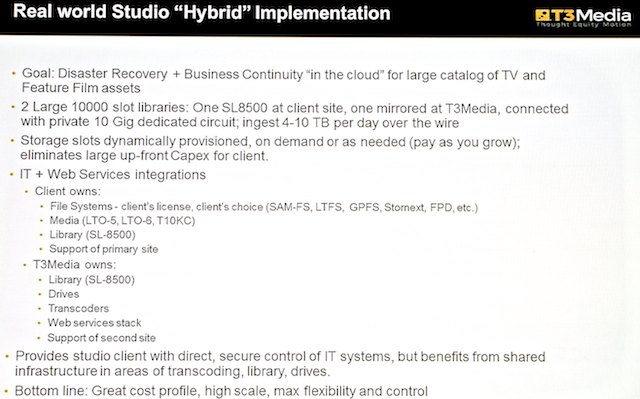

Professional Forecast: Cloudy but Clearing

Moderator: Seth Hallen, Testronic Labs

Al Kovalick, Consultant, Media Systems Consulting

Robert Jenkins, CTO, CloudSigma

Mark Lemmons, CTO, T3Media

Sean Tajkowski, Design Engineer, Tajkowski Group

Steve Anastasi, VP Tech Ops, Warner Bros.

Seth: VUCA (US Military acronym): Volatility, Uncertainty, Complexity, Ambiguity. Convert it to Vision, Understanding, Clarity, Adaptability? Sorry, the world moving forward is always going to be in constant flux. How does this change the way we work? What are the challenges and opportunities?

“The Cloud”: Topic titles: lost in the clouds, get your head in the clouds, obscured by clouds, cloudy with half a chance.

Sean: merging of IT and media industries. Two HD systems trying to access same storage. By evolution it got more and more complex, how to glue those two industries together.

Key differences between IT and media production? Media industry has unique challenges, that's why it uses different gear. Same difference moving to cloud, challenge of cloud providers to provide the service levels media industry needs. Data volume just going to get worse. How can we get media up and down reliably, how do we get the workflows coordinated in the cloud? Frameworks to make it work.

Problems not unique to cloud; there are problems with facility SANs all over LA. Shift of responsibilities, going to MIS and saying “here's what we need”. We see this with games, everyone hitting server at the same time. Google, Facebook have the same interest in uptime / reliability as we do; when they're down, costs millions per hour. Am I as reliable as a cloud vendor? Can I prove it? The cloud vendors are probably more concerned about uptime than your IT people are.

Off-the-rack cloud solutions are less appropriate for our industry; not all cloud vendors are the same. Cloud usually designed for enterprise needs, not media needs. Need companies that are malleable. Negativity is due to running up against deficiencies. Psychological element: because it's outsourcing, you don't feel as in control. Yes, it's a loss of control, but it's worth it. Like driving yourself vs flying commercially: you feel in control in your car, but you're actually safer in the plane.

20 years ago studios built their own cold-storage vaults, they didn't trust outside vendors–what if they go out of business? [Think of the Megaupload file-retrieval issues. -AJW]

Financially, the cloud is much more cost-efficient than building your own storage (including disaster recovery, migration, geographic diversity).

Trust has to be built, won't happen overnight.

We're already ten years in, media are already failing. It's no longer store-and-forget as with film. On the production side, digital is cheaper. But on the back end it's another story.

Working to improve SLAs (Service Level Agreements) so that they actually have useful metrics. Technology helps: moving to SSDs on our cloud, now we can offer SLAs on storage. With software-defined networking, we can offer SLAs on networking.
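
Availability percentages, the “nines” that come up in SLA discussions, translate directly into permitted downtime. A quick way to see what each level actually promises, assuming a 365-day year:

```python
def allowed_downtime_minutes(availability_pct: float, days: float = 365.0) -> float:
    """Minutes of downtime per period that an availability SLA still permits."""
    return (1 - availability_pct / 100) * days * 24 * 60


for sla in (99.0, 99.9, 99.99, 99.999):
    print(f"{sla}% available -> {allowed_downtime_minutes(sla):.1f} minutes down per year")
```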

Economic implications: are some companies in trouble if they don't embrace the cloud? If you're compute-intensive; what's your workflow? If very elastic workflow, do you have to buy the maximum capacity, or do you use the cloud (in whole or in part) to provide elasticity of resources. For a small company it can be transformative (and avoids, “oh, the client went away, and now I've bought all this gear!”). Difference between selling software up front vs. pay-as-you-go model. Difficulty buying a transcoding solution and trying to run it in cloud (licensing problems). Difficulties moving/communicating between different vendors' clouds (we're back to proprietary storage again). In our industry, 90% of what we generate is never used again. In our mind, that's a good model to implement in Amazon storage (cheap/free to store, pay to retrieve).
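
The “cheap to store, pay to retrieve” model suits a library where most material is never touched again. A rough comparison, with entirely hypothetical prices (not any provider's actual rates), ignoring request, egress, and minimum-duration charges:

```python
def annual_cost(tb: float, store_per_tb_month: float, retrieve_per_tb: float,
                fraction_retrieved: float) -> float:
    """Yearly cost to keep `tb` terabytes and pull back a fraction of it once."""
    return tb * store_per_tb_month * 12 + tb * fraction_retrieved * retrieve_per_tb


def breakeven_fraction(hot_store: float, cold_store: float, cold_retrieve: float) -> float:
    """Share of the library retrieved per year at which a cold tier (cheap storage,
    paid retrieval) costs the same as a hot tier with free retrieval."""
    return (hot_store - cold_store) * 12 / cold_retrieve


# 1,000 TB library, 10% of it ever retrieved (the "90% never used again" case)
hot = annual_cost(1000, store_per_tb_month=25, retrieve_per_tb=0, fraction_retrieved=0.10)
cold = annual_cost(1000, store_per_tb_month=10, retrieve_per_tb=90, fraction_retrieved=0.10)
print(hot, cold, breakeven_fraction(25, 10, 90))
```

With these made-up numbers the break-even is 2.0: you'd have to retrieve the whole library twice a year before the cold tier stopped paying off.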

In production: the movement of media and metadata around the set. The flow of metadata with files through post and into the archive is an unsolved problem (how to keep all the needed metadata with the files and keep it searchable). Without the metadata, we can dump all we want in the cloud and never find it again! The connectivity to do this is a small cost, but productions don't budget for it. You have to put money into production for archiving, because archiving is part of production nowadays. The cost has to be written into the contracts as deliverables.

Having standards at the metadata and web-services level is essential for interoperability.

On set, film was delicately taken care of. With digital, if you bring multiple systems onto a set, in a rough environment, you'll just blow up multiple systems. You'd need to bring a data center in a box, a controlled environment, but no one has the budget.

The ecosystem: who feeds it? Public cloud providers, media owners, media service providers, consumers. Real example: a customer in LA with a tape library. Ingest, a private 10GigE line to a data center in Vegas, then on to the cloud, using distributed services (transcoding, color correction) and a CDN (content delivery network) for video streaming.

Qs: The reliability you need to look for isn't IT-networking reliability, but something like TV-transmitter reliability. It's not that the gear is any more reliable; it's more a question of culture and of the people using the gear. You have to realize that not everything needs umpteen nines of reliability. With online screeners instead of DVDs, sometimes the service runs a bit slow; we call up, and they fix it. But DVDs take days to deliver, not a few minutes. Execs are getting used to the immediately-there model, even if sometimes it takes a few minutes.
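To put “umpteen nines” in concrete terms, here is a small sketch converting an availability figure into the downtime it allows per year. It assumes an average 365.25-day year and counts all downtime against the target; real SLAs define their own measurement windows and exclusions.

```python
# Average year length in minutes, including leap days (an assumption; SLAs vary).
MINUTES_PER_YEAR = 365.25 * 24 * 60


def availability_from_nines(nines: int) -> float:
    """Convert a count of nines to a fraction: 3 -> 0.999, 5 -> 0.99999."""
    return 1.0 - 10.0 ** (-nines)


def downtime_minutes_per_year(availability: float) -> float:
    """Downtime a given availability fraction permits over one year, in minutes."""
    return (1.0 - availability) * MINUTES_PER_YEAR


if __name__ == "__main__":
    for n in range(2, 6):
        a = availability_from_nines(n)
        print(f"{a:.5%} available -> {downtime_minutes_per_year(a):8.1f} min/year")
```

Three nines allows roughly 526 minutes (about 8.8 hours) of downtime a year; five nines allows about 5.3 minutes. Each extra nine cuts the allowance tenfold, which is why paying for it only makes sense where a few minutes of delay actually matters.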

The cloud isn't always the right solution for every problem, but neither is local storage. Each is a pressure reliever for the other.

Standards? Governing bodies? Still evolving. The challenge is to build standards that are extensible/evolvable, not ones that ossify the status quo. We're already 90% standardized: TCP/IP, HTTP, the switching network, the PHY layers. What we're arguing about are high-level tools for management and security. OpenStack and CloudStack are de facto standards that make it easier to move between clouds.

So, What Comes After File-Based Workflows?

Joe Beirne, Technicolor NY

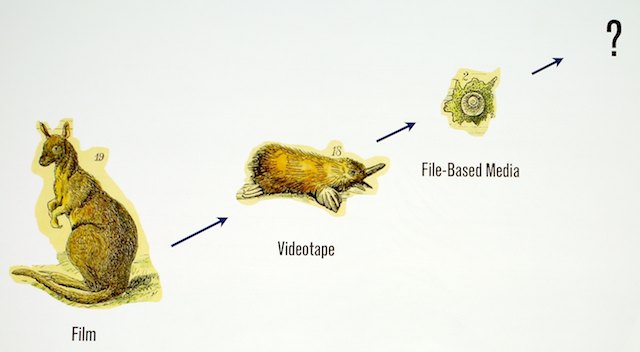

Evolution: more radical and inflected than in nature. Punctuated equilibrium.

Progression of media evolution as well as mass and bulk of the medium. (The reverse progression is that of long-term survivability!)

What comes after? The cloud. But that's the answer to every question in our business now, and it's not the only answer.

Cladogenesis: when organisms find themselves in a new place, or the environment changes radically. Two environmental changes in our industry: the threat of the SAG strike (moved TV productions from film to digital); the 2011 tsunami in Japan (killed HDCAM SR tape production, moved people to tapeless).

What's the new breakthrough? Some possibilities…

Hyper-media (embedded links): the Aspen Movie Map (1978, shot with 16mm stop-motion cameras; like an early Google Maps Street View of Aspen, on laserdisc).

Trans-media, the first form of storytelling that's native to the Internet (“Transmedia Storytelling”, 2003, http://www.technologyreview.com/news/401760/transmedia-storytelling/), such as The Lizzie Bennet Diaries.

Pervasive Media / Collaborative Media, panoptic surveillance and bottom-up captures of world events from citizen reporters.

Computational Media (Nicholas Negroponte): media that will assemble and explain itself, and that will be able to scale itself for the display tech. Big-data visualization. Environmental media displays. Metadata / VR / augmented-reality overlays on images / scenes.

Entangled Media (in the quantum sense). It's already necessary that we create two sets of source files. They can be updated in two different places by a modest flow of metadata. It might even happen at the quantum level at some point; we put so much energy into creating a color negative, then a photon hits the emulsion, then we spend a lot more energy fixing that image. DNA storage?

What Just Happened? – A Review of the Day

Jerry Pierce & Leon Silverman

Cloud is “remote storage”.

When you don't know what it is, it's magic. When that happens in post, it's the cloud.

Leon asked Stephen Poster, ASC, how many more VFX shots are in his films now compared to 5 years ago. Answer: an order of magnitude more.

What didn't we discuss? How are the people using this tech being trained? Can we get a policy to turn off the camera after the take, not just let it run because digital is cheap?

What should we be thinking about for next year? Pilot season starts earlier, so we should move the Tech Retreat earlier. How will the “House of Cards” effect change how we produce shows? Are we collecting enough of the right analytics to know we're developing tech in the right direction? New forms of storytelling enabled by the cloud. Look at ways of making better pix other than increasing resolution. The increasing complexity of digital cinema (frame rates, resolutions, brightness, accessibility, sound systems, etc., and “we have to QC all of that sh*t!”). Research into what the millennials want.

All screenshots are copyrighted by their owners. Some have been cropped / enhanced to improve readability at 640-pixel width.

Disclaimer: I'm attending the Tech Retreat on a press pass, but aside from that I'm paying my way (hotel, travel, food).
