The Tech Retreat’s third day covered regulatory issues, HDR imaging, using a plasma for reference monitoring, SOA, networking, file-based workflows… and Mo Henry.

(As usual, these are barely-edited notes, scribble-typed as people spoke. Screenshots are copyrighted by their creators; poor reproductions thereof are my own fault.)

Jim Burger, Dow Lohnes, gave the Washington (legal) update. Due to snow, Washington has been closed, so nothing bad has been happening (grin). Elsewhere (internationally), three-strikes laws for Internet piracy and ISP filtering are going strong.

Cartoon Network vs. Cablevision: case involving “remote DVR” functionality. Claims: content was stored in buffers (thus, copied); charge of “public performance.” Second Circuit Court decisions: buffers don’t equal copies (too transitory); playback copies are allowed; private playback is not the same as a public performance.

ACTA: Anti-Counterfeiting Trade Agreement, secret international agreement (!) which includes clauses on ISP liability for third-party conduct, secondary liability. Causing consternation!

Net Neutrality; Comcast vs. FCC (the “BitTorrent case”). FCC says Comcast violated principles by messing with P2P packets; Comcast says FCC can’t enforce “principles”, FCC has no jurisdiction over Internet. FCC rulemaking; old principles: customers can access any lawful content, connect legal devices, use apps/services, have ISP competition. New: ISPs can’t discriminate, and must disclose network management. Rulemaking still in play, rules may come out after 5 March, or may wait for court ruling in Comcast-BitTorrent.

Comcast-NBCU merger: $30 billion deal, Comcast majority partner, GE minority partner. Comcast promises: increased kids, local, and VOD content, etc. Subject to FCC and DOJ approval. FCC jurisdiction based on OTA NBC TV station transfer (not cable, where FCC has no power).

Spectrum war: “looming spectrum crisis”, broadband wants 800 MHz. Where from? Broadcast? Satellite? Claim that we need mobile broadband. Stimulus bill requires FCC “National Broadband Plan”, due to Congress in March. Battle cries: CTIA, CEA, T-Mobile, AT&T, Verizon, etc.: we need more spectrum, OTA (over-the-air TV) isn’t watched. NAB & MSTV: we just transitioned to digital, don’t take it away; more folks are watching OTA; wireless isn’t using all the spectrum they already have! CTIA/CEA: move OTA to low-power distributed broadcasting; reduces interference and need for guard bands / white space; opens room for wireless broadband. Broadcasters: still interference with 8-VSB, tower siting issues, hugely expensive. So what happens? Mandatory clearing of broadcast spectrum isn’t likely.

CALM Act: directs FCC to regulate commercial loudness. In 1983 FCC had been getting complaints “for at least 30 years” but declined to regulate. ATSC adopted loudness guidelines in 2009, but House passed CALM on a voice vote, referred to Senate.

Aside from that, “I have never seen so much gridlock in Washington.”

High-Dynamic-Range (HDR) Imaging Panel, Moderated by Charles Poynton, with

Stephan Ukas-Bradley, ARRI – High-Dynamic-Range Image Sensors,

Yuri Neyman, ASC, Gamma&Density – Interpretation of Dynamic-Range Capabilities of Digital Cameras,

Dave Schnuelle, Dolby – The Tyranny of Dark Rooms: Why We Need to Replace the CRT Display.

Poynton: in imaging, DR has a specific meaning. There’s a hard clip in highlights, and noise in the shadows. The standard deviation of the noise defines the size of the “noise-valued increment”, and the number of those increments between shadows and clipping is the DR. RED is ~66dB, 2500:1, or 11 bits, so the 12th bit is always noise (assuming full exposure, of course). In processing, a DR number is uncertain at best. In display, contrast ratio is the preferred metric. Tone mapping is used to map legacy low-dynamic-range (LDR) material to new HDR displays, and also to map HDR to LDR displays. In 1990, the ITU defined BT.709; “we screwed up” by not specifying the display gamma (“electro-optical conversion function”).
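DR figures get quoted in dB, as a ratio, and in bits or stops. Here’s a minimal sketch of the conversions, assuming the 20·log10 (amplitude) dB convention common in sensor specs. On that convention 66 dB works out to roughly 2000:1 and 2500:1 to roughly 68 dB, both about 11 stops, so the figures above agree only approximately.

```python
import math

def db_to_ratio(db):
    """Amplitude dynamic range in dB (20*log10 convention) -> linear ratio."""
    return 10 ** (db / 20)

def ratio_to_db(ratio):
    return 20 * math.log10(ratio)

def ratio_to_stops(ratio):
    """Stops and bits of linear code are both log2 of the ratio."""
    return math.log2(ratio)

for label, ratio in [("66 dB", db_to_ratio(66)), ("2500:1", 2500.0)]:
    print(f"{label}: {ratio:,.0f}:1 = {ratio_to_db(ratio):.1f} dB = "
          f"{ratio_to_stops(ratio):.2f} stops")
```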

Ukas-Bradley: ARRI’s new Alexa cameras use 3.5K Bayer-mask CMOS with 10% overscan (for viewfinder look-around area). 35mm sensor size, compressed onboard recording. EVF models (Alexa EV, ALEV) use 16×9 EVFs, optical VF (OV) uses 4×3 finder for use with anamorphics. Target output formats are HD and 2K. Larger pixels have higher sensitivity and lower noise; 8.25 micron pixels with 800 ISO, 70% fill factor and 80% quantum efficiency. Dual-gain architecture (DGA): highlights constructed from low-gain path, shadows from high-gain path. Output is 10-bit HD or ARRIRAW. ALEV III show reel looked pretty impressive. ARRI DRTC (dynamic range test chart, demoed at HPA last year) can show more than 15 stops; ALEV reads 11.6 stops on this chart.
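ARRI didn’t detail how the two paths are merged. Here’s a minimal sketch of the general dual-gain idea. It assumes a hypothetical 16:1 gain ratio, a 12-bit clip level, and a linear crossfade near clipping. All of these are illustrative values, not ARRI’s.

```python
def combine_dual_gain(high, low, gain_ratio=16.0, clip=4095):
    """Merge one pixel's two readouts into a single linear value.

    high: code from the high-gain path (clean shadows, clips early)
    low:  code from the low-gain path (keeps highlights, noisier shadows)
    gain_ratio, clip, and the knee points are hypothetical values.
    """
    knee_lo, knee_hi = 0.80 * clip, 0.95 * clip
    high_scaled = high / gain_ratio  # express the high-gain code in low-gain units
    if high <= knee_lo:
        return high_scaled           # shadows and midtones: use the cleaner path
    if high >= knee_hi:
        return float(low)            # high-gain path is at or near clipping
    # Transition zone: crossfade so there is no visible seam between the paths.
    w = (high - knee_lo) / (knee_hi - knee_lo)
    return (1 - w) * high_scaled + w * low

print(combine_dual_gain(160, 10))     # deep shadow: taken from the high-gain path
print(combine_dual_gain(4095, 3000))  # highlight: taken from the low-gain path
```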

Neyman (DP on “Liquid Sky”): published camera latitude numbers don’t match cinematographer practice. Best possible dynamic range in video is 6.3 stops. Hypergamma 3 (Sony F35?) shows 10.3 stops. Using ARRI DRTC charts, 5.7-5.8 stops measured. 5217 negative film: -3, +4 stops. Printed, get 6.5 stops total (practical range for negative is 7.5 stops, print 5.5 stops). Human eye can only handle 5 stops when resolving details / optimal visual contrast. Steps are not stops, and this may be where the confusion sets in. RED ONE 4.7 stops. ODR (observable DR) vs. PDR (potentially recoverable DR). Testing film cameras and digital cameras for speed: F35 is ISO 2000, Viper is 170, RedSpace is 35, RED raw is 78. A plea for objective measurement. (A very fast-moving, and more than a little confusing, presentation; need to research this to see why Mr. Neyman’s assertions differ so markedly from everyone else’s numbers!)

Schnuelle: replacing the CRT. Color CRTs have been with us a long time, but they’re a poor match for current monitoring needs. Several Blu-rays had to be recalled because of too much noise and excessive sharpness seen on consumer LCDs & plasmas, stuff not seen on mastering CRTs.

Fixed-pixel displays let things pop out that CRTs hide with their Gaussian MTFs. Gamuts are all over the map in consumer displays (not wide-gamut, but wild-gamut, according to Poynton); they’re not the SMPTE C or EBU phosphors on mastering CRTs. Local contrast is much better on consumer displays; no flare. (However, there’s a trend in some consumer displays toward highly reflective panels, so environmental reflections can be problematic; Poynton notes that these specular reflections aren’t the same as diffuse reflections, which degrade black levels.) “A real reference display has no knobs, but this assumes a matching reference viewing environment.” It’s important to know the creative intent, and we have a standardized display environment, but that’s a CRT in a darkened room: we’re still standardized on the limitations of early CRTs.
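To see why, compare how much fine detail a Gaussian spot passes with how much a sharp-edged pixel passes. This is a minimal sketch with idealized MTF models. It assumes a hypothetical CRT spot whose standard deviation is half the pixel pitch. Real CRTs and flat panels (with their scaling and sharpening) differ.

```python
import math

def gaussian_spot_mtf(f, sigma):
    """MTF of a Gaussian spot with standard deviation sigma (f in cycles per unit length)."""
    return math.exp(-2 * (math.pi * sigma * f) ** 2)

def pixel_aperture_mtf(f, width):
    """MTF of an ideal square pixel aperture of the given width: |sinc|."""
    x = math.pi * f * width
    return 1.0 if x == 0 else abs(math.sin(x) / x)

PITCH = 1.0           # pixel pitch, arbitrary units
SIGMA = 0.5 * PITCH   # hypothetical CRT spot size
for frac in (0.25, 0.5, 1.0):          # fraction of the pixel grid's Nyquist frequency
    f = frac / (2 * PITCH)
    print(f"{frac:.2f} x Nyquist: CRT spot {gaussian_spot_mtf(f, SIGMA):.2f}, "
          f"fixed pixel {pixel_aperture_mtf(f, PITCH):.2f}")
```

Near Nyquist the Gaussian spot passes far less modulation than the fixed pixel does, so noise and sharpening halos that a CRT softens show up on a flat panel.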

Discussion: immediate questioning of Neyman’s figures; Morton’s figures (discussed by Neyman) included specular highlights. Poynton: latitude in old days was margin of error for exposure setting, now we say total stops top to bottom. Question: how do we move HDR images around and convert to/from 10 bits? Peter Symes, quoting Dave Bancroft: what we do with new displays should not cause us to look at existing programs and say “ah, we need to change that.” Poynton: yes, but not to the point of limiting things. Schnuelle: fine, but no one looks at old content on old-style displays, it simply doesn’t happen. Director should be able to see something representative of the displays people are going to see the content on. Q: is the Alexa’s sensor cooled? A: yes, D21 cooled to 32 degrees Celsius, Alexa heated or cooled to 32 degrees. Putman: measured consumer sets at 450 nits or less, 200 nits in movie mode, so mastering at 350-400 nits is probably OK. “Tanning lamp mode” or “torch mode” is 450 nits, not as high as published figures of 500-1000 nits.

Pete Putman, Science Experiment: Low-Cost Plasma as a Reference Monitor. Yes, it’s time to toss the CRT.

A pro reference monitor must track color consistently, hold a neutral gray, offer wide dynamic range without clipping or crushing, provide consistent viewing over wide angles, and be precisely calibratable. Grayscale is the hardest thing, especially in digital displays; the pulse-width modulation (PWM) used on plasma & DLP is the hardest of all. Shadows are always difficult; grayscale may wander around the ideal. Consumer sets are often white-clipped, with S-curves; they tend to be too hot / too bright / high midtones, with inconsistent black levels. Rolling back to 100 nits helps a lot. CCFL backlights are bad for color, LEDs much better. Plasma pretty good, too. Plasmas use PWM for tonal control, >600Hz.
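A plasma cell is either lit or dark, so gray levels have to come from timing. Here’s a minimal sketch of the textbook scheme, assuming eight binary-weighted subfields per frame. Real panels use more subfields, non-binary weights, and dithering, partly to fight the shadow contouring mentioned above.

```python
def subfield_schedule(level, bits=8):
    """Split a gray level into binary-weighted subfields.

    Returns (weight, lit) pairs; each subfield lasts a time proportional to
    its weight, and the eye integrates the lit ones into a gray level.
    """
    if not 0 <= level < 2 ** bits:
        raise ValueError("level out of range")
    return [(1 << b, bool((level >> b) & 1)) for b in range(bits)]

def lit_fraction(level, bits=8):
    """Fraction of the frame time the cell is lit (ideal, linear light output)."""
    schedule = subfield_schedule(level, bits)
    total = sum(weight for weight, _ in schedule)
    return sum(weight for weight, lit in schedule if lit) / total

print(subfield_schedule(5))  # only the 1- and 4-weight subfields are lit
print(lit_fraction(128))
```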

Q: is a $2000 industrial plasma good enough for critical monitoring? (LCD problems: costly, high black levels, off-axis color/tone shifts, bad color gamut on CCFL backlights). Took stock Panasonic TH-42PF11UK and tweaked with calibration tools. Got stable gamma with a couple of “speed bumps”, consistent if not perfect RGB tracking, great blacks. Color gamut exceeded 709, even large portion of P3. Green was a bit shifted towards cyan (for brightness). Gamma at 120 nits was 2.5; looked very smooth (movie mode), a bit of a bump in 2.2 gamma. Max gray drift was 145K in the shadows; bit of a blue bump around 70%. Brightness 100-120 nits (29-35 ft-Lamberts), contrast 1189:1 (checkerboard), 11370:1 sequential (gamma 2.2), black level 0.124 nits.
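The ft-L figures check out (1 ft-L is about 3.426 nits). For the gamma numbers, here’s a minimal sketch of one common way to estimate gamma from meter readings at a single gray step. It assumes a simple power law with a black-level offset. Calibration packages may define and fit gamma differently.

```python
import math

# 1 foot-lambert = (1/pi) cd/ft^2, about 3.426 cd/m^2 (nits)
NITS_PER_FOOTLAMBERT = 1 / (math.pi * 0.3048 ** 2)

def nits_to_footlamberts(nits):
    return nits / NITS_PER_FOOTLAMBERT

def point_gamma(signal, luminance, black, white):
    """Estimate display gamma at one gray step from meter readings.

    signal: normalized input level, 0 < signal < 1
    luminance, black, white: measured luminance in nits
    Assumes the simple model L = black + (white - black) * signal ** gamma.
    """
    normalized = (luminance - black) / (white - black)
    return math.log(normalized) / math.log(signal)

print(f"{nits_to_footlamberts(100):.1f}-{nits_to_footlamberts(120):.1f} ft-L")
```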

Wanted better; got a Cine-tal Davio using a 3D LUT to correct residual color and gamma errors (as seen in the demo room). After calibration, color accuracy was comparable to reference-grade CRT. Best of all: a very cost-effective solution.
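How the Davio applies its correction wasn’t described. Here’s a minimal sketch of the generic technique: a 3D LUT lookup with trilinear interpolation. Tetrahedral interpolation is another common choice. `apply_3d_lut` and `identity_lut` are hypothetical helpers.

```python
def apply_3d_lut(rgb, lut):
    """Map one RGB triple through a 3D LUT using trilinear interpolation.

    rgb: three floats in [0, 1] (values outside are clamped)
    lut: nested lists, lut[r][g][b] -> (R, G, B), with n >= 2 entries per axis
    """
    n = len(lut)
    pos = [min(max(c, 0.0), 1.0) * (n - 1) for c in rgb]  # lattice coordinates
    base = [min(int(p), n - 2) for p in pos]              # lower corner of the cell
    frac = [p - i for p, i in zip(pos, base)]             # position inside the cell
    out = [0.0, 0.0, 0.0]
    for dr in (0, 1):                                     # blend the 8 cell corners
        for dg in (0, 1):
            for db in (0, 1):
                weight = ((frac[0] if dr else 1 - frac[0]) *
                          (frac[1] if dg else 1 - frac[1]) *
                          (frac[2] if db else 1 - frac[2]))
                node = lut[base[0] + dr][base[1] + dg][base[2] + db]
                for k in range(3):
                    out[k] += weight * node[k]
    return tuple(out)

def identity_lut(n):
    """An n x n x n LUT that maps every color to itself."""
    step = 1.0 / (n - 1)
    return [[[(r * step, g * step, b * step) for b in range(n)]
             for g in range(n)] for r in range(n)]

print(apply_3d_lut((0.3, 0.6, 0.9), identity_lut(17)))
```

A calibration LUT would hold measured corrections at each lattice point instead of the identity values.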

Sara Duran-Singer & Theo Gluck, Disney, Classic Animation Restoration. Disney started in ’37 on nitrate, transferred to safety film ’52-’56. In ’93, one of the first DIs at Cinesite. Now in multi-year restoration of all animations, 4K scans and digital cleanup. Fantasia now, then Winnie the Pooh. Six years ago made the decision to archive everything filmed out to successive-exposure B&W films. Issues: nitrate deterioration; how to scan? Steamboat Willie original neg lost, some 1934 dupe neg also no good.

When Snow White was remastered, there were bad tape splices and lots of perf damage. Thus the need to be very aggressive in preserving the library: recall all nitrate, scan, fix up, film out to successive-color B&W film. Technicolor: 125 lbs, 750 lbs blimped. The 1932 short “Flowers and Trees” was the first three-strip Technicolor release. Alignment of 3-strip records problematic.

Successive exposure developed at Disney, red, green, blue frames one after the other.
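The ordering is simple enough to sketch. Each color frame becomes three consecutive B&W frames on one strip, so all three records sit on the same stock. Recovering color means de-interleaving. In this minimal sketch, a frame’s separations are just placeholder labels; a real system would carry scanned images.

```python
def to_successive_exposure(frames):
    """Interleave (R, G, B) separations onto one B&W strip: R, G, B, R, G, B, ..."""
    strip = []
    for red, green, blue in frames:
        strip.extend([red, green, blue])
    return strip

def from_successive_exposure(strip):
    """Regroup a successive-exposure strip into (R, G, B) triples."""
    if len(strip) % 3:
        raise ValueError("strip length must be a multiple of 3")
    return [tuple(strip[i:i + 3]) for i in range(0, len(strip), 3)]

frames = [("R1", "G1", "B1"), ("R2", "G2", "B2")]
strip = to_successive_exposure(frames)
print(strip)
```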

Sleeping Beauty: Technirama (sideways) 7.62 files of film, new 7.1 mix from original 3-track sound. Horizontal scratches confuse stock anti-scratch software! Original AR was 2.55, new restoration recovered that from the nitrate neg (not seen in previous re-releases, from 1987 film dupes scanned in 1993). Scanning from the successive B&W neg fixed RGB registration errors from previous re-releases.

Screened raw scans / fixups from Bambi, Pinocchio. Freakin’ gorgeous.

Rescanned nitrates sent out to new successive-color B&W films for archiving without fixups; don’t want to bake in today’s technology in case the future has better restoration tools. Fixed-up films saved at 2K to LTO-4 tapes and a new color neg is struck.

Mo Henry speaks – Mo Henry is a negative cutter, appearing in more film credits than just about anyone else ever.

Mark Schubin introduces Mo Henry.


She told us a bit about her life. Babysitter for John Wayne’s kids. Tallest kid in 12th grade. Started at 18 in neg cutting, the 3rd generation Henry in neg cutting. First gig: Jaws.

Got bored, left the business, was a real estate agent in Beverly Hills in the ’80s: big hair and a Cadillac convertible. First big client was a Mafia kingpin selling a house: had to go into his house before a customer and hide all the guns, cash, and drugs, then replace them after the customer left. When the kingpin went to buy a house, Henry was hired, and was told that if anything was missing after the move, she would be buried in the back yard! After that, became a producer for commercials and rock videos. Worked on a “Fruit of the Loom” spot; was attacked on location by a kid with a loaded gun, but a cop deflected the kid. Henry, pushed to the ground, thought: What a way to go: lying on Hollywood Ave surrounded by guys dressed like fruit!

Having OCD is very helpful for a neg cutter!

Lived next to Timothy Leary; had a neg cutting business in London until partner had heart attack at dinner and collapsed face-down in the pasta. Left-handed (but has to cut with right hand) and a voracious reader despite being half-blind from neg cutting.

IMDB is incorrect; has only 60% of the films she’s done. Also worked under the alias “Ruby Diamond” when cutting porn: hey, a negative is a negative…

Now working in archiving at Sony and Warner.


SOA, Collaborative Networking, File-based Workflows, Sync & Timing…

SOA What? Panel Moderated by John Footen, NTC, with David Rosenberg, Azcar; David Carroll, Sony; Felix Froede, Ceiton; Joey Faust, NTC. SOA is “service-oriented architecture”, a (mostly) standardized, web-based way to link together disparate systems to automate business processes.

Froede: SOA can reduce dozens of different protocols to a single standardized interface. Business process management needs a focus on dependencies, not just functions. For example, in DVD production, function/task groups include subtitles, memos, video processing, audio, etc., with many cross-functional dependencies.
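Froede’s point about dependencies can be modeled as a dependency graph. With one, an orchestrator can start each task as soon as its inputs are finished and run independent tasks in parallel. Here’s a minimal sketch using Python’s standard `graphlib` module (3.9+). The DVD task names and dependencies are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical DVD-production tasks, each mapped to the tasks it depends on.
deps = {
    "video_encode": {"video_processing"},
    "subtitles": {"video_processing"},
    "audio_mix": set(),
    "menus": {"video_encode"},
    "authoring": {"video_encode", "audio_mix", "subtitles", "menus"},
}

ts = TopologicalSorter(deps)
ts.prepare()
waves = []                          # tasks within one wave can run in parallel
while ts.is_active():
    ready = sorted(ts.get_ready())  # tasks whose dependencies are all done
    waves.append(ready)
    ts.done(*ready)

for i, wave in enumerate(waves, 1):
    print(i, wave)
```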

The coordination of complex processes can be automated, letting folks focus on doing their individual functions, improving control, reducing manual handoffs, and improving tracking. None of this is new; logistics / chemical / automotive industries have known this for a while.

Rosenberg: SOA business drivers: reduce complexity of existing systems, lower costs through use of common standards, integrate info from disparate sources, increase flexibility and agility, extend lifetime of legacy systems, reduce custom software. File-based workflows let you develop new, more efficient workflows. Corporate IT is very good at keeping email running, etc., but not so good at media-related workflows. Media-centric IT skills include knowledge of broadcast workflows, deep understanding of component software and web-based services, and experience with XML / SOA / object-oriented systems.

Is SOA right for you? Start with a readiness assessment; do a process decomposition to identify shared services, with good documentation and understanding. Identify bottlenecks and points of failure. Know the capabilities of legacy systems. Accept the need for change; engage executives (there will be turf battles and pushback); know 5-year roadmap. Understand that SOA is a way of thinking, it’s not a product on a CD. It’s complex; it’s not for everyone (size matters). Start small. Success requires highly skilled SOA developers, which most media organizations don’t have—so you may need to hire consultants, making sure that they know the broadcast business as well as SOA.

Faust: SOA makes cross-device compatibility easier, increases application agility, multi-purposing, software as a service. But can we trust the vendors to do a good job? Is the “rewrap everything” model workable? Will there ever be a “big SOA” project that’s financially justified?

Example: two transcoding systems from different vendors. Both do the same thing, but have very different interfaces and message protocols. Re-wrap with an SOA layer to make compatible? Possibly, but it may be a lot of work.
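The “re-wrap” option is essentially the adapter pattern: each vendor-specific API hides behind one shared interface. Here’s a minimal sketch with two hypothetical vendor clients; all class and method names are made up. In a real SOA the shared interface would be exposed as a web service rather than a Python class. The hard part is mapping each vendor’s job states and error codes, not just the call signature.

```python
from abc import ABC, abstractmethod

class VendorATranscoder:
    """Hypothetical vendor API #1: job dict in, numeric job ID out."""
    def submit_job(self, job):
        return 101

class VendorBTranscoder:
    """Hypothetical vendor API #2: different method name and argument order."""
    def enqueue(self, profile, src, dst):
        return f"B-{profile}-{dst}"

class TranscodeService(ABC):
    """The single interface the rest of the workflow talks to."""
    @abstractmethod
    def transcode(self, src: str, dst: str, profile: str) -> str: ...

class VendorAAdapter(TranscodeService):
    def __init__(self, client):
        self.client = client

    def transcode(self, src, dst, profile):
        job_id = self.client.submit_job({"input": src, "output": dst, "preset": profile})
        return str(job_id)

class VendorBAdapter(TranscodeService):
    def __init__(self, client):
        self.client = client

    def transcode(self, src, dst, profile):
        return self.client.enqueue(profile, src, dst)

for service in (VendorAAdapter(VendorATranscoder()), VendorBAdapter(VendorBTranscoder())):
    print(service.transcode("master.mxf", "proxy.mp4", "web_proxy"))
```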

Will we ever be able to design generic classes of media services? Yes, possibly, but probably not for everything we do. How do we deal with it: big web-services layer with a SOAP interface, or something else? Consider REST (representational state transfer): get, put, post, delete. Constrained number of operations. Simple to understand. “Fractal realization” in that same ops repeat at various levels. Moves standardization problems into the metadata problem: what to ship around.
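Here’s a minimal in-memory sketch (no networking) of the “constrained number of operations” point. Every interaction is one of the four verbs. What varies between services is the resource path and the metadata in the body, which is exactly where Faust expects the standardization problem to move. The class and paths are hypothetical.

```python
class AssetResource:
    """Toy in-memory asset service reachable only through GET, PUT, POST, DELETE.

    Paths and metadata fields are hypothetical; a real service would sit
    behind HTTP and exchange a standardized metadata format.
    """

    def __init__(self):
        self.assets = {}
        self.next_id = 1

    def handle(self, method, path, body=None):
        parts = [p for p in path.split("/") if p]  # "/assets/3" -> ["assets", "3"]
        if not parts or parts[0] != "assets" or len(parts) > 2:
            return 404, None
        asset_id = parts[1] if len(parts) == 2 else None
        if asset_id is None:                         # the collection itself
            if method == "GET":
                return 200, sorted(self.assets)
            if method == "POST":                     # create with a server-chosen id
                new_id = str(self.next_id)
                self.next_id += 1
                self.assets[new_id] = body
                return 201, new_id
            return 405, None
        if method == "GET":
            return (200, self.assets[asset_id]) if asset_id in self.assets else (404, None)
        if method == "PUT":                          # create or replace at a known id
            created = asset_id not in self.assets
            self.assets[asset_id] = body
            return (201 if created else 200), asset_id
        if method == "DELETE":
            if asset_id not in self.assets:
                return 404, None
            del self.assets[asset_id]
            return 204, None
        return 405, None
```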

Unilateral case for business SOA not likely in most organizations. Start smaller and incrementally; consider REST; the metadata problem may wind up eating the SOA problem.

SOA is inserting a layer of abstraction, exposing services with common interfaces. A change from tightly-coupled systems that require complete re-architecting if workflows change.

Carroll: file-based workflow hasn’t solved all the problems: it’s not really faster, cheaper, or easier after all. Many different workflows (different workflow on every project), security issues, metadata problems. Media SOA is designed for media transactions: long-running processes. Resource management, dynamic resource allocation, queue and priority management. SOA controls file access and movement, handles security, is aware of hierarchical storage management. SOA workflow manages assets and tasks, automates non-creative work, shares resources more effectively, repurposes content automatically, and speeds throughput.
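Queue and priority management for long-running media jobs is classic priority-queue territory. Here’s a minimal sketch with Python’s `heapq`, assuming lower numbers mean more urgent. The job names are hypothetical. A counter keeps equal-priority jobs in submission order.

```python
import heapq
import itertools

class JobQueue:
    """Priority queue for media jobs (lower number = more urgent)."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker: FIFO within a priority

    def submit(self, priority, job):
        heapq.heappush(self._heap, (priority, next(self._seq), job))

    def next_job(self):
        """Return the most urgent job, or None if the queue is empty."""
        return heapq.heappop(self._heap)[2] if self._heap else None

q = JobQueue()
q.submit(5, "proxy")
q.submit(1, "air_master")
q.submit(5, "archive")
print(q.next_job())
```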

Example: “2012”: 30 million frames, 347 hours of content, 240 TB @ HD res, 1.44 petabytes at 4K res, all online for 6-18 months. How to move data between different 4K islands? Can’t sneakernet tapes around, after all.
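The “2012” numbers hang together. Here’s a quick check, assuming 24 fps and decimal units (1 TB = 10^12 bytes). It compares the per-frame storage with an uncompressed 10-bit 1920×1080 DPX frame, where three 10-bit samples are packed into each 32-bit word.

```python
FRAMES = 30_000_000
FPS = 24

hours = FRAMES / FPS / 3600
hd_bytes_per_frame = 240e12 / FRAMES
fourk_bytes_per_frame = 1.44e15 / FRAMES
dpx_hd = 1920 * 1080 * 4  # 10-bit RGB DPX: one 32-bit word per pixel

print(f"{hours:.0f} hours")
print(f"HD: {hd_bytes_per_frame / 1e6:.1f} MB per frame "
      f"(10-bit 1920x1080 DPX: {dpx_hd / 1e6:.1f} MB)")
print(f"4K: {fourk_bytes_per_frame / 1e6:.1f} MB per frame")
```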

The holy grail for media SOA is a graphical workflow UI, so you can automate workflows with drag ‘n’ drop without writing a line of code.

Discussion: Cross-vendor media SOA tends to work using introspection (“what services do you offer?”) whereas IT SOA tends to develop extensions of SOA using WSDL. How does SOA differ from automation? Automation is sort of a blind sequential process; SOA is a more flexible business-rules-driven workflow, or an event-driven workflow. Problems with changing people’s workflows? A lot of turf protection; have to convince folks that the media assets and workflows belong to the organization, not to them personally.

Collaborative Networking Panel Moderated by Leon Silverman, Disney, with Peter Wilson, HDDC; Laurin Herr, Pacific Interface; Bertrand Darnault, SmartJog; Anthony Magliocco, Entertainment & Media Technology Marketing.

Silverman: In the ’80s I discovered the modem, and all of a sudden my computer wasn’t just sitting by itself… in the mid-’90s 1.54 Mbit/sec for $2500-$3000/month was a big deal… it’s become essential.

Wilson: London MUPPITS (Multiple User Post Production IT Services) project for collaborative networking, started in 2008 to delegate rendering and storage around town and out-of-town. Partners BBC, DTG, HDDC, IT Innovations, Molinare (post house), ODS (Texas oil company IT co), Pinewood Studios, Smoke & Mirrors (post house), SoHoNet (hard-drinking post industry high-speed networking infrastructure), Technology Strategy Board. SOA-based wide-area networking.

Offers security, management, service control, collaboration, automation, and marketplace (since everyone is networked, it’s easier to share jobs). Core is GRIA (http://www.gria.org/), multiple data warehouses, a rule engine, resource tracking (for billing). Typical rule: “send proxy of last take to director’s iPod”. Based on SOA, allows productions to choose desired services wherever located as long as it’s on a high-speed net.
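GRIA’s rule engine wasn’t shown in detail. Here’s a minimal event-condition-action sketch of what a rule like the director’s-iPod example amounts to. The event types, fields, and actions are hypothetical, and a real engine would trigger transfers instead of returning strings.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]
    action: Callable[[dict], str]

rules = [
    Rule(
        "proxy to director",
        condition=lambda e: e.get("type") == "take_recorded",
        action=lambda e: f"send proxy of {e['take']} to director's device",
    ),
    Rule(
        "bill render time",
        condition=lambda e: e.get("type") == "render_finished",
        action=lambda e: f"log {e['node_hours']} node-hours to {e['client']}",
    ),
]

def dispatch(event):
    """Run every rule whose condition matches the event; return the actions taken."""
    return [rule.action(event) for rule in rules if rule.condition(event)]

print(dispatch({"type": "take_recorded", "take": "Sc12-T4"}))
```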

Two main thrusts: remote rendering (includes rendering estimator so you can actually suss out how long it’ll run and how much it’ll cost), and tapeless workflow (BBC very interested, using BBC Ingex; see http://ingex.sourceforge.net/). Project site: http://www.MUPPITS.org.uk; the funded research project ends in about six months, but everyone expects the MUPPITS project to continue independently, and they invite interested parties to join up.
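The MUPPITS estimator’s internals weren’t described. Here’s a minimal sketch of the basic arithmetic behind any such estimate. It assumes a sampled per-frame render time, independent frames spread evenly across nodes, and a hypothetical per-node-hour price.

```python
import math

def estimate_render(frames, sec_per_frame, nodes, price_per_node_hour):
    """Return (wall-clock hours, cost) for a render job.

    Assumes frames are independent and spread evenly over the nodes,
    and that billing is per node-hour actually used.
    """
    frames_per_node = math.ceil(frames / nodes)
    wall_hours = frames_per_node * sec_per_frame / 3600
    node_hours = frames * sec_per_frame / 3600
    return wall_hours, node_hours * price_per_node_hour

# One minute of 24 fps footage at 10 minutes per frame on 100 nodes.
print(estimate_render(1440, 600, 100, 0.5))
```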

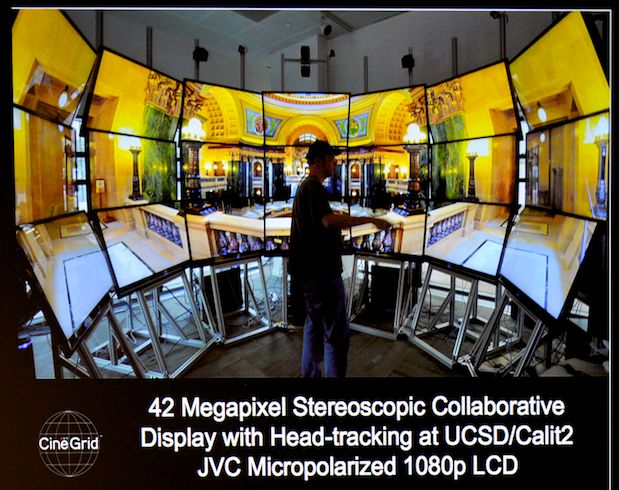

Herr: CineGrid is a university-driven non-profit organized in 2004, for folks who want to work (non-commercially) with media over high-speed networks. 1 Gbit/sec and 10 Gbit/sec over Global Lambda Integrated Facility, GLIF. Using MPEG-4 and JPEG2000 codecs. Worldwide demonstrations, like shooting DALSA in Prague, deBayering in San Diego, color-correction in Canada, all networked.

CineGrid Exchange: distributed media repository, by spring 2010 will have 256 TB with 10 GigE infrastructure, nodes in Toronto, Prague, Chicago, Hollywood, and Monterey. Collaborative 4K grading, sound mixing: studios synced within 1 audio sample at 48kHz, which required careful latency evaluation across the network and even within the mixing studios (acoustic delays).
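Acoustics inside the rooms mattered for a simple reason. One sample at 48 kHz is about 20.8 µs, during which sound in air travels only about 7 mm, while light in fiber covers about 4 km. Here’s a quick check, assuming 343 m/s for sound and roughly 2×10^8 m/s in fiber.

```python
SAMPLE_RATE = 48_000     # Hz
SPEED_OF_SOUND = 343.0   # m/s in air at about 20 °C
SPEED_IN_FIBER = 2.0e8   # m/s, roughly c / 1.5 for glass fiber

sample_period = 1 / SAMPLE_RATE
print(f"1 sample = {sample_period * 1e6:.2f} us")
print(f"sound travels {SPEED_OF_SOUND * sample_period * 1000:.1f} mm in one sample")
print(f"light in fiber travels {SPEED_IN_FIBER * sample_period / 1000:.1f} km in one sample")
```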

Darnault: On connectivity: IP over satellite has great coverage, good for one-to-many multicast. Leased lines: dedicated circuits, clear QoS, very predictable. Internet: cost-effective if/when available, but no guarantees. Community networks (SohoNet, etc.) have high bandwidth, good community connectivity. Transfer apps: the choice depends on the network and its problems. Need reliability, traffic management, flexibility, and proof of delivery. UDP is often better than TCP, but sometimes (e.g., in Asia) TCP multi-connection is the best protocol. Multi-stream trunking is often the most cost-effective high-speed link; two DSL lines paired may be faster/cheaper than a single higher-speed line.
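Multi-stream trunking boils down to splitting one transfer across several streams or links and reassembling it at the far end. Here’s a minimal sketch of the bookkeeping, assuming byte-range chunks tagged with their offsets. This is a generic illustration, not SmartJog’s protocol.

```python
def split_ranges(size, streams):
    """Divide a file of `size` bytes into contiguous byte ranges, one per stream."""
    base, extra = divmod(size, streams)
    ranges, start = [], 0
    for i in range(streams):
        length = base + (1 if i < extra else 0)
        ranges.append((start, start + length))  # half-open [start, end)
        start += length
    return ranges

def reassemble(chunks):
    """Join (offset, data) chunks that may arrive in any order."""
    return b"".join(data for _, data in sorted(chunks))

data = b"abcdefghij"
ranges = split_ranges(len(data), 3)
arrived = [(s, data[s:e]) for s, e in reversed(ranges)]  # simulate out-of-order arrival
print(ranges, reassemble(arrived))
```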

SmartJog software, tools, and automation: automated rules-based transfers, transcodes, burn-ins, and a web services API. Example: send transcoded media to 10 different recipients with a common tracking watermark (burned in at the sending server) plus a per-recipient watermark (done at the receiving server).

Magliocco: Private Cloud for Content Creation. EMTM design goals: use standard IP protocols, interoperate with common tools, but use a private network. Multiple security levels, low-cost flat-rate billing, international connectivity. Players: AboveNet, SohoNet, etc. Gigabit as a minimum, 10 Gig preferred (or faster). Example: MediaXStream Studio Culver City, CGI created in San Francisco, sound in Culver City, sound in Santa Monica. Another: feature film with Scratch system in LA, SF interconnected. 40 Gig networking now available in some markets.

Discussion: Cost of MUPPITS? Multiple millions. What bridges these different networks? CineGrid members peer with each other. Different vendors need to interoperate. Ethernet’s spread from LANs to WANs makes interop easier. Still making phone calls to get people to patch nets together.

File-Based Workflow Panel Moderated by Howard Lukk, Pixar, with Brad Collar & Michael D. Smith, Warner Bros; Gary Morse, Fox; Bob Seidel, CBS; and Arjun Ramamurthy, Fox.

Collar: Digital End-To-End (DETE) eMasters and eDubs (Warner Bros has 500 different transcoded formats), with eMasters stored in a digital vault to strike dubs for whatever distributions are needed. Over 15,000 standard-def eMasters. 22 min TV show delivered in 25 min (best case) to 8 hours (worst case). HD eMaster: 1920×1080, 4:2:2 10-bit 23.976fps, uncompressed audio 16/24-bit 48/96kHz. Why not 4:4:4? Service masters are simply for dubbing from, not original sources. JPEG2000 in MXF wrappers.

Testing: what’s the bitrate, quality, and efficiency of eMastering? 100 Mbit/sec CBR works, but PSNR (peak signal-to-noise ratio) varies; so tried VBR (peak limit 250 Mbit/sec), with a wildly varying bitrate but more consistent (and higher) PSNR.
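PSNR is the yardstick in both tests. Here’s a minimal sketch of the standard formula over flat lists of samples, assuming 10-bit video (peak code 1023). Real tools compute it per plane and per frame and then average.

```python
import math

def psnr(reference, test, peak=1023):
    """Peak signal-to-noise ratio in dB between two equal-length sample lists.

    peak defaults to 1023, the maximum code of 10-bit video (an assumption here).
    """
    if len(reference) != len(test):
        raise ValueError("length mismatch")
    mse = sum((a - b) ** 2 for a, b in zip(reference, test)) / len(reference)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)

print(psnr([512, 512, 600, 700], [513, 511, 600, 702]))
```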

What’s the impact of processing on eDub quality? Focus on Blu-ray testing, with discs created both from the eMaster and from high-quality source material without eMastering. Tested at 15 Mbit/sec and 23 Mbit/sec average data rates. Overall, about 0.044–0.060 dB PSNR difference with VBR eMasters (negligible); 0.36–0.43 dB PSNR difference with CBR eMaster encoding: about eight times worse!

Why such a difference in VBR vs. CBR results? The JPEG2000 flicker artifact: consecutive frames that are the same except for a small, localized difference, say a small graphic element that changes from a moon to a cloud, i.e., from an easy-to-compress item to a hard-to-compress one. With a fixed bit budget, the small change robs bits from the rest of the frame, so even the unchanged areas show a degradation. This artifact isn’t visible in the eMaster, but it leads to “bit waste” in motion-compensated codecs like H.264 and degrades prediction: in effect, every block in the frame changes because of that slight degradation in the eMaster.
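Here’s a toy model of the “robbed bits” effect. It assumes bits in a fixed (CBR) per-frame budget are shared out in proportion to each region’s complexity. Actual JPEG 2000 rate control is more sophisticated, and the budget and complexity numbers here are made up.

```python
def allocate_bits(complexities, budget):
    """Split a fixed per-frame bit budget across regions in proportion to complexity."""
    total = sum(complexities.values())
    return {region: budget * c / total for region, c in complexities.items()}

BUDGET = 1_000_000  # hypothetical bits per frame

moon = allocate_bits({"background": 90, "graphic": 10}, BUDGET)
cloud = allocate_bits({"background": 90, "graphic": 30}, BUDGET)
print(moon["background"], cloud["background"])
```

The background didn’t change, yet its share drops from 900,000 to 750,000 bits. With VBR the budget could grow instead, leaving the background alone.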

Morse: Fox initiative to review entire ecosystem. Endless changes ahead! An explosion of formats… Videotape is losing its ability to keep pace with the 24/7 on-demand world; stuff needs to be turned around too quickly for tape ingest to keep up, especially for international clients already 8 hours ahead of us. Distro game plan: Digital Delivery Initiative. Effective 1 June 2011 everything will be delivered as files. Faster, higher quality, better security, “green” (currently industry deliverables are 85% tape, 15% digital; with manufacturing, shipping, disposal, tape ain’t green!). “It’s a Mad, Mad, Mad, Mad, Multiformat World”: Files, files, and more files; small ones, big ones, metadata, other ones… For HD, capture once at a high bitrate (looking at JPEG2000), store under Fox control, create deliverables from that single capture. Components: digital media processing facility, catalog management, digital vault, and work order management (old catalog on 23-year-old IBM AS/400 system!). Security: watermarking, fingerprinting, encrypted delivery. Final thoughts: JPEG2000, disaster recovery, file-based network deliverables.

Ramamurthy: file-based workflows orchestrated via a BPMS (Business Process Management System). Since 2006, 180,000 files created and delivered, sent out to a multitude of destinations. Why BPMS / SOA? Integration through watch folders results in redundant copies, it’s hard to manage and forecast, and there’s no standard dashboard control, but it’s dead simple to set up and deploy. With BPMS / SOA, an ESB (enterprise service bus) connects all elements and it all just works (more or less, grin). How to implement? Identify workflows, map process flow, understand roles, define monitoring, identify toolchest maturity and API maturity. Looking at even simple workflows reveals a lot of complexity, a lot of dependencies; do you want people to change roles or assume multiple roles? SOA requires web services, SOAP/XML over HTTP. But most of our devices have command-line interfaces, serial lines, custom APIs. Need WSDL, UDDI. Started integration with Digital Rapids (encode/transcode), Baton (QC), Rhozet (transcode), custom delivery engine, custom asset management systems.
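For contrast, the watch-folder approach really is dead simple. Here’s a minimal polling sketch, assuming a hypothetical `process` callback. Chaining tools this way means each stage writes into the next stage’s folder, which is convenient but leaves copies in many places and gives no single view of job status. A production version would also need to wait until a file has finished arriving (for example, until its size stops changing) before picking it up.

```python
import shutil
from pathlib import Path

def poll_watch_folder(inbox: Path, done: Path, process):
    """One polling pass: hand each file in `inbox` to `process`, then move it to `done`."""
    handled = []
    for path in sorted(inbox.iterdir()):
        if path.is_file():
            process(path)
            shutil.move(str(path), str(done / path.name))
            handled.append(path.name)
    return handled
```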

It’s just gone live; come back next year to find out how well it’s working.

Seidel: “Pitch Blue” realtime HDTV store-and-forward delivery system. In April 2009, three companies that saw a need for HD distribution (GDMX, Ascent Media, and CBS Worldwide Distribution) formed a company to distribute to 800 TV stations. Each station gets a free MPEG-2 TS (transport stream) recorder (TSR) fed from satellites. Fully automated, can “call home” if there are TX/RX errors and ask for a re-send. All video is 1920×1080 @ ~15 Mbit/sec, Dolby 5.1 or L/R mix. All boxes monitored every 10 minutes. Boxes have 3 TB of storage, 100 hrs of SD or 370 hrs of HD. Station can choose 1080i outputs or downconverted outputs. Three disks, plus a flash drive for rebooting if all hard disks get trashed. Box has FTP connectivity to other play-to-air servers if the box’s own playback isn’t used.
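The re-send protocol wasn’t described. Here’s a minimal sketch of one way a receiver could turn gaps in packet sequence numbers into a compact re-send request. It assumes each packet carries a sequence number from 0 to total-1.

```python
def missing_packets(received_seq_numbers, total):
    """Return inclusive (first, last) ranges of sequence numbers that never arrived."""
    got = set(received_seq_numbers)
    ranges, start = [], None
    for n in range(total):
        if n not in got and start is None:
            start = n                      # a gap begins
        elif n in got and start is not None:
            ranges.append((start, n - 1))  # the gap ends just before n
            start = None
    if start is not None:
        ranges.append((start, total - 1))  # gap runs to the end
    return ranges

print(missing_packets([0, 1, 4, 5, 9], 10))
```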

Discussion: CBS et al. built their own TSR box because many of the third-party solutions had exorbitant costs. Fox used Oracle middleware for their ESB after fairly extensive tests; also, Fox folks already knew Oracle.

Peter Symes, SMPTE: SMPTE Action on Future Timing & Synchronization. Current references are about 30 years old, based on color black. Not easy to sync with audio, lock 50 Hz and 60 Hz sync together. Timecode is also 30 years old, designed for edge track recording, tops out at 30 fps, getting creaky: we need new timing and sync.
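Locking the two families together is awkward for a simple reason. Nominal 50 Hz and 60 Hz line up every 0.1 s, but 59.94 Hz (exactly 60000/1001) and 50 Hz only line up every 20.02 s. Here’s a minimal sketch using exact fractions; `math.lcm` needs Python 3.9+.

```python
from fractions import Fraction
from math import lcm

def realignment_period(rate_a, rate_b):
    """Seconds until two rates (given as exact Fractions) line up again."""
    pa, pb = 1 / rate_a, 1 / rate_b  # periods as Fractions
    # Put both periods over a common denominator, then take the LCM of the numerators.
    den = lcm(pa.denominator, pb.denominator)
    num_a = pa.numerator * (den // pa.denominator)
    num_b = pb.numerator * (den // pb.denominator)
    return Fraction(lcm(num_a, num_b), den)

print(float(realignment_period(Fraction(60), Fraction(50))))
print(float(realignment_period(Fraction(60000, 1001), Fraction(50))))
```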

User requirements on TRL: Time Related Label. Master clock feeds a Common Sync Interface, which feeds “some sort of transport” like Ethernet. Includes clock data including time zones, etc. Over 100 interested parties from broadcast, telcos, IT. Data structure for labeling video frames; resolution up to 1000 fps, sub-division down to fields & audio sub-frames. Track 2:3 pulldown, rate indication, multiple “timecodes”, varispeed and slo-mo support, etc.

User requirements on sync: value & economy; deterministic phasing, absolute time reference, external lock, Time-Of-Day info, leap second management, time zones. Need sub-nanosecond precision for subcarrier-based work (NTSC through a switcher) but want ability to extract/generate lower-precision sync at lower cost. Prefer to use common, non-dedicated transport (Ethernet?), have a 1 km distribution range, fast master/slave lockup and relock after disruption.
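Sub-nanosecond precision is needed because of the NTSC color subcarrier. At 315/88 MHz, one cycle lasts about 279 ns, and one degree of subcarrier phase is under 0.8 ns. Here’s a quick check.

```python
from fractions import Fraction

FSC_NTSC_HZ = Fraction(315, 88) * 10**6  # NTSC color subcarrier, about 3.579545 MHz

period_ns = Fraction(10**9) / FSC_NTSC_HZ
ns_per_degree = period_ns / 360
print(f"subcarrier period: {float(period_ns):.2f} ns")
print(f"1 degree of subcarrier phase: {float(ns_per_degree):.3f} ns")
```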

Three parts: Master unit with a CSI, a sync transport (e.g., IP network), and a slave with a CSI. Proposed format: a data packet with the CSI dataset, and a time reference when the dataset is valid (IEEE 1588).
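IEEE 1588 itself boils down to a little arithmetic on four timestamps. Here’s a minimal sketch of the delay request-response calculation, assuming a symmetric network path. Path asymmetry is a well-known source of error in practice.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """IEEE 1588 delay request-response arithmetic.

    t1: master sends Sync (master clock)
    t2: slave receives Sync (slave clock)
    t3: slave sends Delay_Req (slave clock)
    t4: master receives Delay_Req (master clock)
    Assumes the path delay is the same in both directions.
    """
    offset = ((t2 - t1) - (t4 - t3)) / 2  # slave clock minus master clock
    delay = ((t2 - t1) + (t4 - t3)) / 2   # one-way path delay
    return offset, delay

# Slave clock 5 units ahead of the master, 3 units of path delay each way.
print(ptp_offset_and_delay(100, 108, 120, 118))
```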

What SMPTE needs is input for the contents of the time label, to make production’s job easier. Two TRL types proposed: Type 1 is a pure time stamp, nice for absolute timing; type 2 is start time plus media unit count (e.g., frame count), nice for counting frames. Technical Committee 33TS, also working groups. Questions remain: does CSI design meet user requirements? How to use IEEE 1588? And so on; if you have ideas, please participate in SMPTE’s process.
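The two proposed label types can be sketched as data structures. This minimal sketch uses hypothetical class and field names (not from the SMPTE drafts). Exact fractions keep 1000/1001 rates from accumulating rounding error. Converting a Type 2 label to a Type 1 timestamp is start time plus count divided by rate.

```python
from dataclasses import dataclass
from fractions import Fraction

@dataclass(frozen=True)
class TimestampLabel:  # "Type 1": a pure time stamp
    seconds: Fraction  # seconds since some agreed epoch (hypothetical)

@dataclass(frozen=True)
class CountLabel:      # "Type 2": start time plus media-unit count
    start: Fraction    # seconds since the same epoch
    rate: Fraction     # media units (e.g., frames) per second
    count: int

    def to_timestamp(self) -> TimestampLabel:
        return TimestampLabel(self.start + self.count / self.rate)

label = CountLabel(start=Fraction(0), rate=Fraction(24000, 1001), count=24)
print(label.to_timestamp())
```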

What Just Happened? – A Review of the Day by Jerry Pierce & Leon Silverman. Q: how many folks here have Blu-ray? Most. How many have used BD-Live? Perhaps half. More than one? Only a few.

Dynamic range is definitely controversial, often misstated, often misunderstood… One thing we’re seeing is a desire to defer grading changes as late as possible; want enough bit-depth to be carried through. What the ASC camera assessment test showed is that the chokepoint is the 10-bit DPX file: the limiting factor in end quality (!), as most cameras capture much more nowadays. The AMPAS IIF may have some solutions… Social networking works: the Mo Henry Wikipedia page was updated within one hour of her speech here… Demo room hits: Joe Kane’s Samsung projector (discussed in my final report after tomorrow’s sessions)… file-based transfer from ProRes into a DCP (easyDCP)… Panasonic’s 3D camera out of its glass case, making pictures.

Day 4, Friday, is the final day; my report will appear over the weekend.


16 CFR Part 255 Disclosure

I attended the HPA Tech Retreat on a press pass, which saved me the registration fee. I paid for my own transport, meals, and hotel. The past two years I paid full price for attending the Tech Retreat (it hadn’t occurred to me to ask for a press pass); I feel it was money well spent.

No material connection exists between myself and the Hollywood Post Alliance; aside from the press pass, HPA has not influenced me with any compensation to encourage favorable coverage.