On this fourth and final day of the 2011 Tech Retreat, we learned about standards activities, 3D ghosts, camera arrays, automated audio “recognition”, a new method for making film protection masters, how bending a cable affects its performance, and a whirlwind tour through TV Tech history. Also: the death of tape… for real this time?

These Tech Retreat posts are barely-edited stream-of-consciousness note-taking; there’s no other way to grab all this info in a timely manner, get it published, and still get enough sleep for the next day’s sessions!

I often use “distro” as shorthand for “distribution”, and “b’cast” for “broadcast”. You have been warned.

What’s Happening at a Standards Organization Near You – Peter Symes, SMPTE

Brief overview: lots going on; Wendy promoted to Exec VP; Hans Hoffmann of the EBU now Engineering VP.

Annie Chang and Howard Lukk chairing the IMF working group (see yesterday’s coverage of IMF).

SMPTE working on: ACES, ADX, APD; 3D Home Master (single-point delivery master, like IMF for 3D); 3D disparity map representation. BXF: Broadcast Exchange Format (traffic info to automation systems), XML-based, over 80 companies participated, being widely adopted; BXF 2.0 under way. ST 2022-x, Video over IP, in conjunction with VSF.

Synchronization and time labeling: successors to color black and timecode. Why base digital timing on color subcarrier frequencies? Progress slower than expected. IEEE 1588 developing in the right direction; more IT-based than previously expected. May wind up with IEEE 1588 with a bit of video-oriented metadata added. May work out that all gear (60 Hz, 50 Hz, 24 fps) happily synchronizes to the same signal. Should be low-cost, too.
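Why can one time reference serve 60 Hz, 50 Hz, and 24 fps gear alike? A minimal sketch, assuming each device receives time as an exact count of seconds since a shared epoch (the sort of thing an IEEE 1588 master distributes) and derives its own frame cadence from it. The function names and the epoch-counting scheme are illustrative assumptions, not the SMPTE design, which was still being worked out.

```python
import math
from fractions import Fraction

# Frame rates as exact rationals; the NTSC-family rates are N * 1000/1001.
RATES = {
    "24": Fraction(24),
    "25": Fraction(25),
    "29.97": Fraction(30000, 1001),
    "50": Fraction(50),
    "59.94": Fraction(60000, 1001),
}


def frame_and_phase(seconds: Fraction, rate: Fraction):
    """Frame number and fraction of the current frame elapsed, `seconds` after the shared epoch."""
    frames = seconds * rate
    number = math.floor(frames)
    return number, frames - number


def common_alignment_period(rates):
    """Shortest interval (seconds) after which every rate starts a frame at the same instant.

    Frame boundaries for rate r fall at multiples of 1/r, so the answer is the least
    common multiple of the periods: lcm(numerators) / gcd(denominators).
    """
    num, den = 1, 0
    for rate in rates:
        period = 1 / rate  # a Fraction, already in lowest terms
        num = num * period.numerator // math.gcd(num, period.numerator)
        den = math.gcd(den, period.denominator)
    return Fraction(num, den)


print(common_alignment_period(RATES.values()))  # 1001
```

Because every device computes its position from the same clock, no rate-specific reference signal is needed; the 1001-second result just shows how long it takes for all five cadences to line up on a common frame boundary again.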

Time Labeling: a new timecode? It won’t replace SMPTE 12M TC, as that’s far too widely used, even outside the industry. 12M was originally designed around what could be recorded on the edge of a quad tape and displayed on Nixie tubes, neither of which is very important nowadays! It would be nice to carry more info, e.g., to maintain original camera time along with program time. Need good input from the post community; HPA and AMPAS organized a day-long meeting for discussion and feedback; very productive. Q: Do we need a frame count, or just a high-precision time reference? A: We NEED frame counts! More info “when we have a strawman.” If we’re replacing something 30 years old, we’d better get it right.
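For context on why frame counts matter: a 12M-style label is just a function of a frame count, and at 29.97 fps the mapping gets messy. Here's a minimal sketch of one common way to do the conversion for 30-frame-base timecode, including drop-frame, which skips labels ;00 and ;01 at the start of every minute except every tenth. The function name is hypothetical.

```python
def frames_to_timecode(frame: int, drop_frame: bool = True) -> str:
    """Label a zero-based frame count with 30-frame-base SMPTE-style timecode.

    With drop_frame=True (29.97 fps), labels ;00 and ;01 are skipped at the start
    of every minute except minutes 00, 10, 20, ... so the label tracks clock time.
    """
    if drop_frame:
        frames_per_10_min = 17982  # 10 * 1800 frames, minus 9 minutes * 2 skipped labels
        frames_per_dropping_min = 1798  # 1800 - 2
        tens, rem = divmod(frame, frames_per_10_min)
        skipped = 18 * tens  # 9 dropping minutes * 2 labels per full ten-minute block
        if rem >= 2:
            skipped += 2 * ((rem - 2) // frames_per_dropping_min)
        frame += skipped
    ff = frame % 30
    ss = (frame // 30) % 60
    mm = (frame // 1800) % 60
    hh = (frame // 108000) % 24
    sep = ";" if drop_frame else ":"
    return f"{hh:02d}:{mm:02d}:{ss:02d}{sep}{ff:02d}"


print(frames_to_timecode(1799))  # 00:00:59;29
print(frames_to_timecode(1800))  # 00:01:00;02
print(frames_to_timecode(17982))  # 00:10:00;00
```

Going the other way, from a drop-frame label back to a count, is where an explicit frame count saves everyone some arithmetic.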

Other projects:

Reference displays: need to get EOTF (electro-optical transfer function) right. CRT was incredibly good; other displays emulated CRTs. Differences between pixel-matrix displays are trickier; harder to get consistent display rendering.

Lip sync: perpetual challenge. And when we went to ATSC, which has timestamps to ensure A/V sync, errors went from a few frames to a few seconds!
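A rough sketch of how an A/V offset can be read off the timestamps, assuming you already know which audio and video units are meant to be presented together (for example, from a flash-and-beep test signal). MPEG-2 presentation timestamps, as carried in ATSC transport streams, count a 90 kHz clock in 33 bits; the helper names are hypothetical.

```python
PTS_CLOCK_HZ = 90_000  # MPEG-2 Systems presentation timestamps tick at 90 kHz
PTS_MODULUS = 1 << 33  # PTS is a 33-bit counter, so it wraps around


def av_offset_ms(audio_pts: int, video_pts: int) -> float:
    """Signed audio-minus-video offset, in ms, for units meant to be presented together.

    Positive means the audio is scheduled later than the picture. Wraparound is
    handled by taking the shortest signed distance modulo 2**33.
    """
    diff = (audio_pts - video_pts) % PTS_MODULUS
    if diff >= PTS_MODULUS // 2:
        diff -= PTS_MODULUS
    return diff * 1000 / PTS_CLOCK_HZ


def offset_in_frames(offset_ms: float, fps: float = 30000 / 1001) -> float:
    """Express an offset in video frames (29.97 fps by default)."""
    return offset_ms * fps / 1000
```

A one-frame error at 29.97 fps is 3003 ticks; a multi-second error like the ones mentioned above would be hundreds of thousands of ticks, which suggests something upstream stamped the wrong time rather than mere drift.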

Dolby E for 50 Hz and 60 Hz; Archive Exchange Format; 25 Gbit/sec fiber interfaces; timed-text standards for accessibility in D-cinema and broadband; ongoing D-Cinema, MXF, and metadata work.

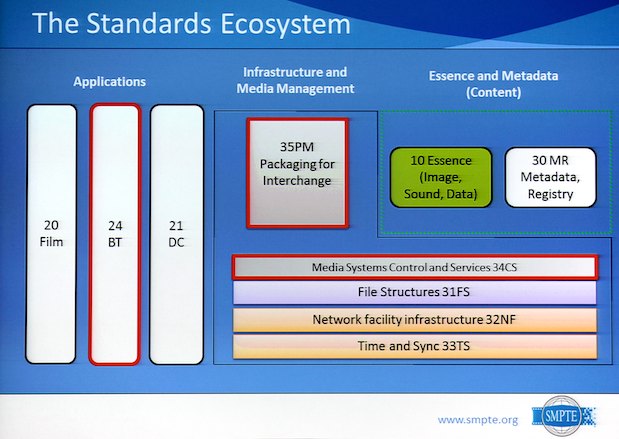

Three new tech committees: broadband media and television; media packaging and interchange (IMF and 3D Home Master); media systems, control, and services.

The standards ecosystem:

Digital Leader: SMPTE has a set of TIFFs and WAVs to make leaders for DCDMs (head and tail leaders).

DPROVE: set of 48 DCPs for testing / setting up theaters and screening rooms: 2D and 3D, 2k and 4k, multiple aspect ratios, etc.

Professional Development Academies: monthly webinars, free to members, low-cost to non-members; check ’em out on SMPTE website.

Upcoming programs: Digital Cinema Summit at NAB; regional events; conference on stereoscopic 3D in NYC in June; SMPTE Australia in July; annual tech conference in Hollywood in October. Next year: global summit on emerging media tech, with EBU in Geneva in May.

Redesigned website, new member database, digital library with all SMPTE pubs since 1916 online.

Lots happening, please participate!

Q: What about the centennial in 2016? A: Working on big plans.

Measurement of the Ghosting Performance of Stereo 3D Systems for Digital Cinema and 3DTV – Wolfgang Ruppel, RheinMain University of Applied Sciences

Wolfgang is a “long-term survivor of D-cinema projects”.

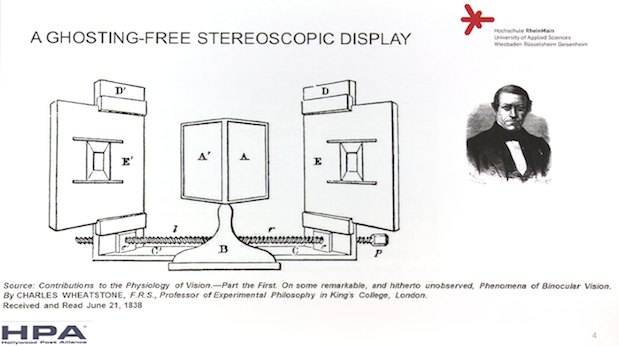

Ghosting is the perception of leakage (or crosstalk) of one channel’s info into the other eye. It’s caused by imperfect separation of the channels, and it’s a main reason for 3D discomfort.

One way of getting perfect separation.

This sort of display is actually used today for 3D X-ray viewing!

Ghosting can be characterized as:

R′ = R + k × L

L′ = L + k × R

where:

R′ = what the right eye sees

L′ = what the left eye sees

R = right-eye content

L = left-eye content

k = leakage amount

That would be simple to fix: just subtract k × the opposite channel. But that won’t work for subtracting a bright ghost from black, since you can’t go negative. Also, k isn’t constant; it depends on angle, brightness, color, ambient light, etc.
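A minimal sketch of the crosstalk model and the correction it suggests, assuming a single constant k and linear-light values from 0 to 1 (the talk is clear that k really varies). Rather than plain subtraction, it inverts the 2×2 mixing exactly; the clipping step is where the scheme breaks down, because a display can’t emit negative light. Function names are hypothetical.

```python
def apply_crosstalk(left, right, k):
    """What each eye actually sees: L' = L + k*R and R' = R + k*L."""
    seen_left = [lv + k * rv for lv, rv in zip(left, right)]
    seen_right = [rv + k * lv for lv, rv in zip(left, right)]
    return seen_left, seen_right


def ghostbust(left, right, k):
    """Pre-correct both channels so that, after crosstalk, each eye sees its target.

    Solving L = Lc + k*Rc and R = Rc + k*Lc gives Lc = (L - k*R) / (1 - k^2).
    Results are clipped to the displayable range [0, 1], so a bright ghost over
    black (which would need negative light) cannot be cancelled.
    """
    scale = 1.0 - k * k
    corr_left = [min(1.0, max(0.0, (lv - k * rv) / scale)) for lv, rv in zip(left, right)]
    corr_right = [min(1.0, max(0.0, (rv - k * lv) / scale)) for lv, rv in zip(left, right)]
    return corr_left, corr_right


# Mid-gray in both eyes: fully correctable.
print(apply_crosstalk(*ghostbust([0.5], [0.5], 0.1), 0.1))
# Black in the left eye, white in the right: the left eye still sees a 0.1 ghost.
print(apply_crosstalk(*ghostbust([0.0], [1.0], 0.1), 0.1))
```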

How to measure L and R: in linear light, or in gamma-corrected code values? We’re using code values, since perception is based on lightness, not luminance. Measured crosstalk may be 10% in linear light, but is 44% in code values (a proxy for perceived lightness).
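A sketch of the linear-light vs. code-value gap, assuming a pure power-law display (the 2.6 default matches DCI encoding; treating the display as a pure power law is an assumption) and, for comparison, CIE L*. The exact percentage depends on the curve: a 10% linear ghost over true black comes out near 41% of full scale with a 2.6 power law and near 38 L*, so these simple curves don’t reproduce the quoted 44% exactly.

```python
def code_value_fraction(linear: float, gamma: float = 2.6) -> float:
    """Normalized code value (0..1) for a linear-light fraction on a pure power-law display."""
    return linear ** (1.0 / gamma)


def cie_lightness(y: float) -> float:
    """CIE 1976 L* (0..100) for relative luminance y, with white = 1.0."""
    epsilon = (6 / 29) ** 3
    if y > epsilon:
        return 116 * y ** (1 / 3) - 16
    return (29 / 3) ** 3 * y  # linear segment near black


ghost = 0.10  # ghost luminance as a fraction of white; black assumed to be 0
for gamma in (2.2, 2.4, 2.6):
    print(f"gamma {gamma}: {100 * code_value_fraction(ghost, gamma):.1f}% of full code value")
print(f"L*: {cie_lightness(ghost):.1f}")
```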

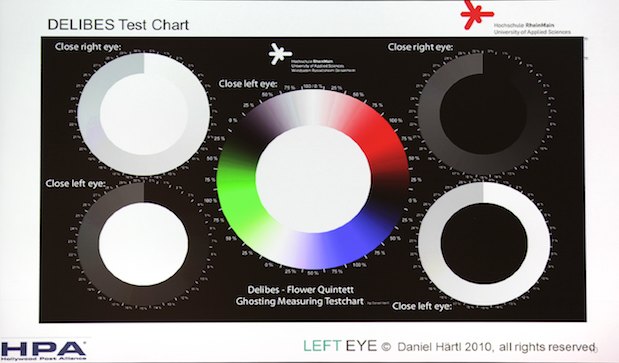

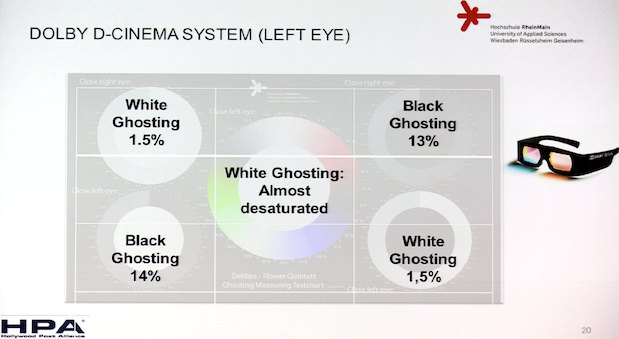

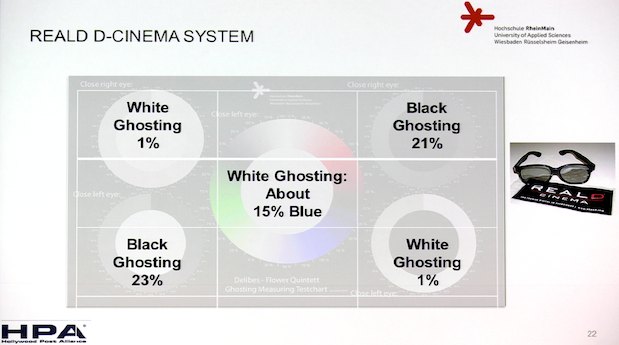

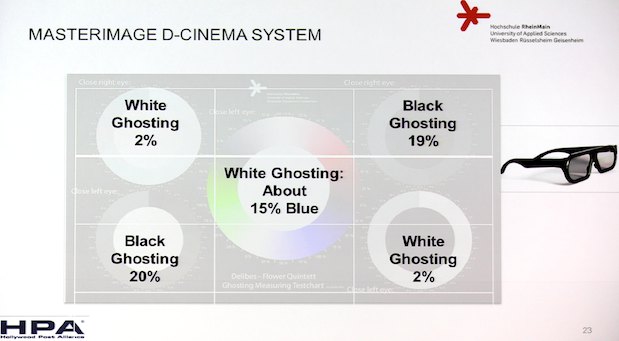

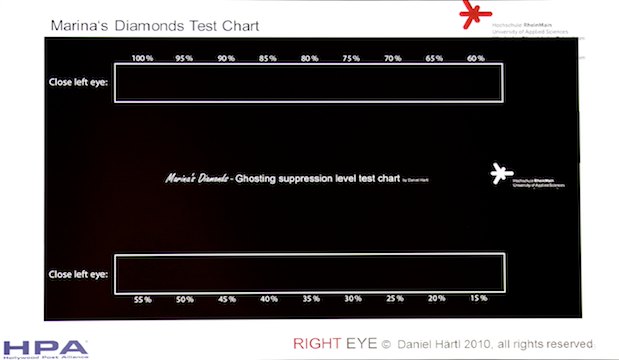

Test chart:

Left-eye chart. The right-eye chart basically swaps white circles for black, and vice versa.

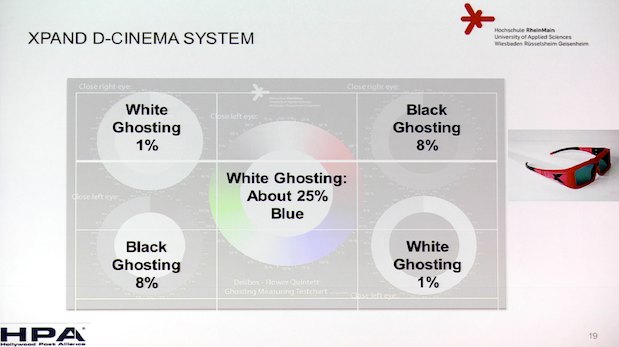

There’s both white ghosting and black ghosting; there’s also color-shifting in ghosting.

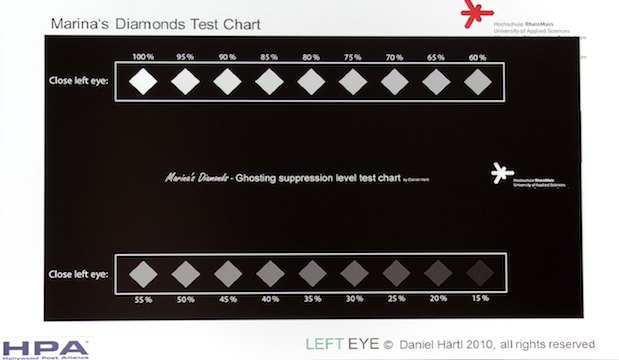

Ghosting suppression level: looking for single-value suppression levels; used the charts to see what the level of bleed was.

Charts for looking at suppression levels.

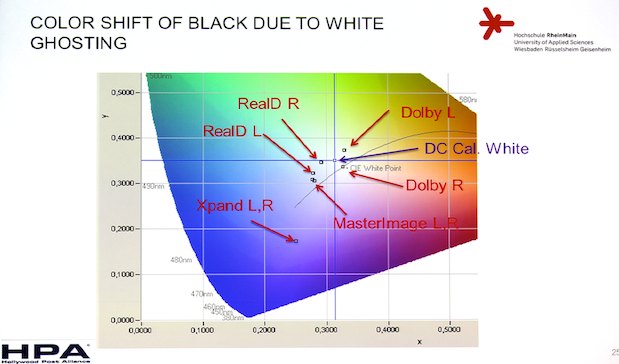

Looking at color changes due to ghosting, too:

Color shifts in black parts of the image due to ghosting.

The white ghosting color shifts were much lower.

Spectroradiometer measurements of ghosting correlate well with perceived ghosting.

Ghost suppression differs a lot depending on color: some systems have better luck suppressing red crosstalk, others blue. Suppression levels differ depending on ambient light, too. Brighter 3D shows more ghosting than dimmer 3D.

Discussion: Please don’t use code values, because they differ between systems; use L* values instead. A: It doesn’t really matter, since we can’t control brightness levels across different venues, so code values vs. L* makes no practical difference.

Photorealistic 3D Models via Camera-Array Capture – John Naylor, TimeSlice & Callum Rex Reid, Digicave

Using purely passive, state-of-the-art camera arrays. (These are the folks doing the multiple Canon rigs to shoot the Mirage surfing spots.)

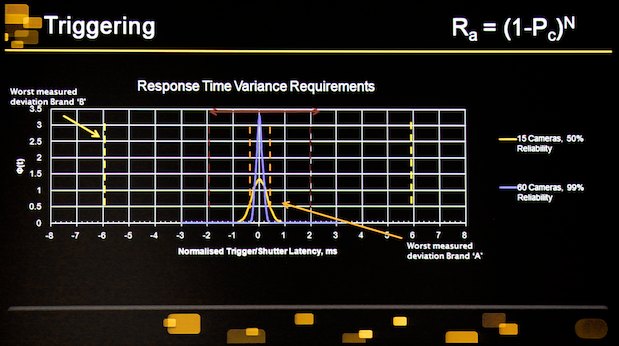

Three points of merit: determinism (you must get the shot, so every camera has to trigger at exactly the same time); resolution; and the number and layout of cameras.

Stabilization doesn’t matter.

Array costs ($400,000?) can be amortized over non-3D jobs. Customer benefit: buy it in at a day rate, with no R&D.

Determinism: multiple cams of the same type have generally the same latency, but there will be variance:

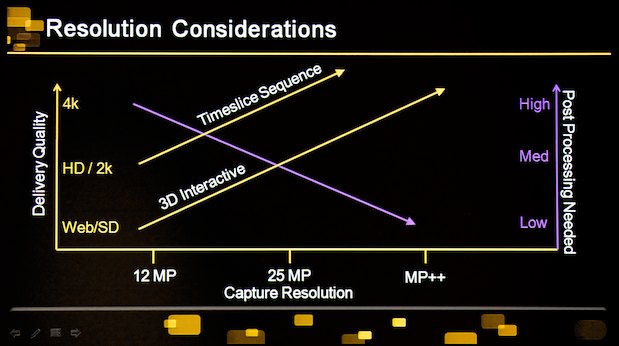
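A minimal sketch of a determinism check, assuming you’ve measured each camera’s trigger-to-exposure latency and that it’s repeatable from shot to shot. The pass/fail rule (skew under 10% of the exposure time) and all names are hypothetical; the compensation step simply delays the faster cameras to match the slowest one.

```python
def trigger_skew(latencies_ms):
    """Spread between the fastest and slowest camera, in ms."""
    return max(latencies_ms) - min(latencies_ms)


def compensation_delays(latencies_ms):
    """Extra delay per camera so that every camera fires together with the slowest one."""
    slowest = max(latencies_ms)
    return [slowest - lat for lat in latencies_ms]


def is_deterministic(latencies_ms, exposure_ms, tolerance=0.1):
    """Hypothetical rule: residual skew must stay below a fraction of the exposure time."""
    return trigger_skew(latencies_ms) <= tolerance * exposure_ms
```

Per-camera delays only fix the repeatable part of the variance; random jitter would have to be characterized over many trial triggers.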

Resolution: the more pixels the better:

Demo: a 360 degree timeslice shoot in Paris. Two hours to rig the cameras using a pre-built support system.

Image interpolation with software from The Foundry.

Test with Digicave, Phantom slo-mo plus 40-camera timeslice.

Digicave: free viewpoint media production. Focus on 3D interactive content. Tech enabled, but not tech focused. 3D body scanning.

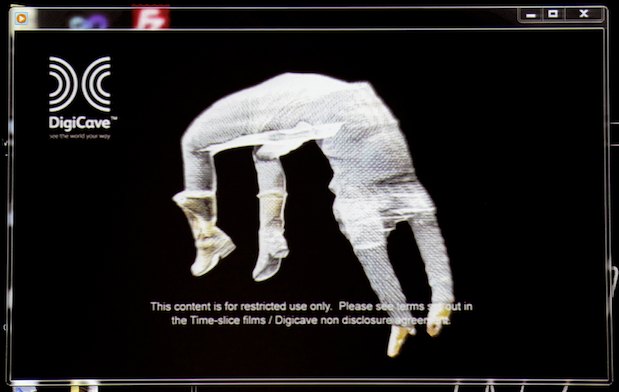

Samples of the 40-camera test with Timeslice; 3D models derived from the pix:

Full wireframe model created from multiple images.

Color painted back on; the model can be viewed interactively from any angle.

3D modeling data-extraction software developed over 3 years by in-house PhD guru.

Demos of interactive 3D models, full fly-around from arbitrary angles.

Want to move into motion work, using RED cameras, because 12-Mpixel images are the minimum required.

Qs: how many cameras used for the 360 shoot? 60. For the all-round view of Callum? 36 cameras. Some touch-up needed on the top. Interpolation is good, but better not to have to do it (two hour step). Higher-res cameras more important than having more cameras. Tested with motion? 16 HD cameras, but they were only 2 MP, and the results look like it. Difficult to ensure consistent color / exposure across cameras.

New Audio Technologies for Automating Digital Pre-Distribution Processes – Drew Lanham, Nexidia

Dialog search; dialog-to-transcript alignment; audio conform (synching multiple audio tracks).

Originally out of Georgia Tech in 1997; 10 patents; used in Avid’s ScriptSync and PhraseFind; also available as an FCP plugin.

Dialog is a rich source of metadata. 100x faster than realtime; language identification. Able to search for any word or phrase once the index is created. Can use Boolean operators and time values.
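A toy illustration of combining a Boolean AND with a time constraint, assuming an index that maps each word to the sorted times (in seconds) at which it’s spoken. This is a hypothetical word-level index for illustration only; Nexidia’s index is phonetic, and its actual query syntax isn’t shown here.

```python
from bisect import bisect_left, insort
from collections import defaultdict


class DialogIndex:
    """Hypothetical index: word -> sorted list of hit times (seconds)."""

    def __init__(self):
        self._hits = defaultdict(list)

    def add(self, word, t):
        insort(self._hits[word.lower()], t)

    def find(self, word):
        return list(self._hits.get(word.lower(), []))

    def near(self, a, b, window):
        """Hits for `a` that have a hit for `b` within +/- `window` seconds (a AND b, time-bounded)."""
        b_times = self._hits.get(b.lower(), [])
        out = []
        for t in self._hits.get(a.lower(), []):
            i = bisect_left(b_times, t - window)  # first `b` hit not earlier than t - window
            if i < len(b_times) and b_times[i] <= t + window:
                out.append(t)
        return out


idx = DialogIndex()
idx.add("China", 12.0)
idx.add("trade", 15.0)
idx.add("china", 300.0)
print(idx.near("china", "trade", 5))  # [12.0]
```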

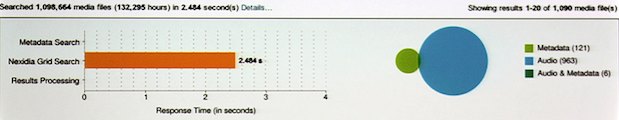

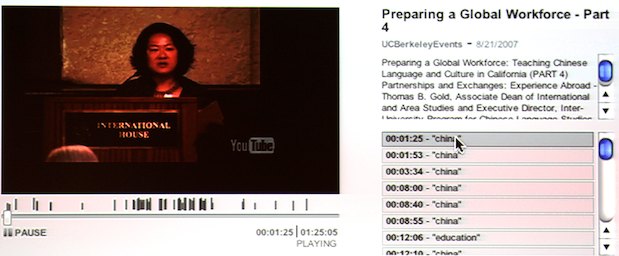

Demo of Nexidia SearchGrid: 130k hours of YouTube clips, searched in about two seconds for the word “china”. Downloaded videos; scanned and indexed, stored metadata, dialog, ID of the video for later recall.

Note the number of hits returned by audio search compared to metadata search.

Bar graph under video is timeline of search term occurrences in the video.

Dialog-to-transcript alignment: Avid ScriptSync. Lets you navigate through a program by text, or find the text corresponding to a part of the program. Also finds places where text and dialog differ in content. Identify changes between versions. Create rough cuts by selecting text. Generate timing lists for ADR and captioning.

Full-length movie took 2.5 minutes to process on a MacBook Pro.

Audio conform: audio-based similarity analysis. Can detect “are these assets related?”, and can detect drift, gaps, incorrect content, distortion, or dropouts. They believe it’s more consistent and accurate than human review. Output is XML-based, so it’s easy to use elsewhere.
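One generic way to ask “are these assets related, and how far apart are they?” is normalized cross-correlation. The sketch below is that textbook technique, not Nexidia’s algorithm, and the function name is hypothetical; running it over successive windows and watching the best lag change would be one way to spot drift.

```python
import math


def best_offset(reference, candidate, max_lag):
    """Find the lag (in samples) that best aligns `candidate` with `reference`.

    A positive lag means the candidate's content occurs `lag` samples later than
    in the reference. Scores are normalized cross-correlation over the overlap,
    so 1.0 means the overlapping parts match exactly up to a positive gain.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        pairs = [(reference[i], candidate[i + lag])
                 for i in range(len(reference))
                 if 0 <= i + lag < len(candidate)]
        energy = math.sqrt(sum(a * a for a, _ in pairs) * sum(b * b for _, b in pairs))
        if energy == 0:
            continue  # silent (or empty) overlap: nothing to compare
        score = sum(a * b for a, b in pairs) / energy
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag, best_score


ref = [0, 0, 1, 3, 2, 0, -1, 0, 0, 0]
late = [0, 0, 0, 0, 1, 3, 2, 0, -1, 0]  # same content, two samples later
print(best_offset(ref, late, 3))  # (2, 1.0)
```

This brute-force loop is fine for short envelopes; for full-length programs an FFT-based correlation would be the usual choice.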

All apps are software-based; highly scalable; SDKs on Linux, Mac, Windows.

Discussion: search is tweakable for recognition threshold, to trade off false positives and total returned hits. Anyone using it for captioning? Not yet; people are looking into it. Certainly helps for automating the timing process. Phoneme-recognition based, or using dictionaries? Phoneme based, not dictionary based, so it works on slang, which isn’t in the dictionary. Code is about 50 MB, language packs are 10-20 MB.

Archiving Color Images to Single-Strip 35mm B&W Film – Sean McKee & Victor Panov, Point.360 Digital Film Labs

[Presenter had different name, but I was in the middle of a discussion and didn’t catch it. -AJW]

Visionary Archive. Two methods:

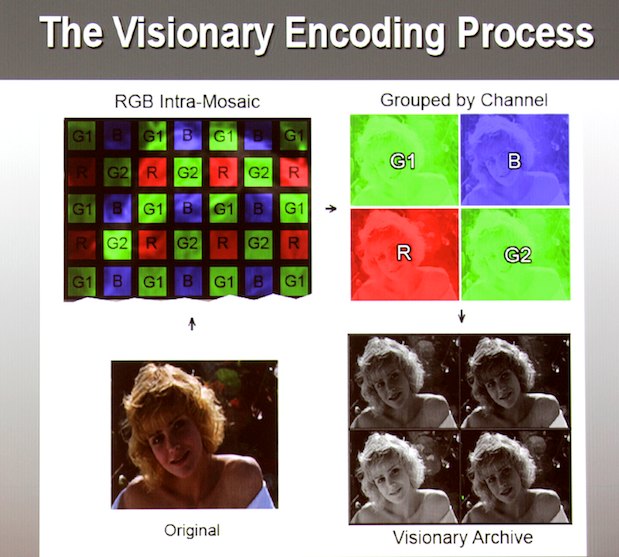

RGB+: HD or 2K full RGB to three quadrants of a frame; the 4th is used for metadata recording. Added pixel-accurate alignment patterns around each quad to protect against shrinkage and distortion (10-pixel grayscale sinewaves). Also black, white, and midgray references to preserve level (and color) values.
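A minimal sketch of a three-plus-one quad layout, assuming the recorder writes a frame twice the source’s width and height so that each quadrant holds a full-resolution channel plane. Which channel goes in which quadrant is an assumption (the talk didn’t say), and the alignment patterns and reference patches are left out. Planes are plain lists of rows; function names are hypothetical.

```python
def pack_rgb_quads(red, green, blue, meta):
    """Tile three channel planes plus a metadata plane into one frame.

    Each argument is an h x w list of rows; the result is 2h x 2w, laid out as
        R | G
        B | metadata
    (a hypothetical assignment).
    """
    top = [r_row + g_row for r_row, g_row in zip(red, green)]
    bottom = [b_row + m_row for b_row, m_row in zip(blue, meta)]
    return top + bottom


def unpack_rgb_quads(frame):
    """Inverse of pack_rgb_quads: split a 2h x 2w frame back into its four planes."""
    h, w = len(frame) // 2, len(frame[0]) // 2
    red = [row[:w] for row in frame[:h]]
    green = [row[w:] for row in frame[:h]]
    blue = [row[:w] for row in frame[h:]]
    meta = [row[w:] for row in frame[h:]]
    return red, green, blue, meta
```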

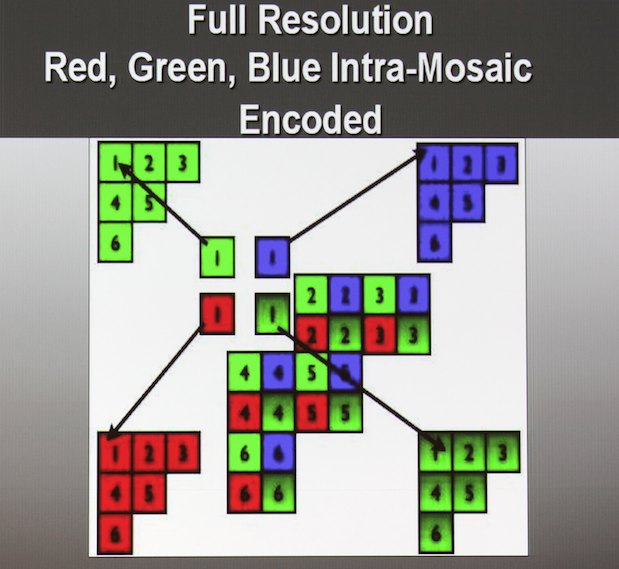

RGB Intra-mosaic: a Bayer-style pattern, separated out into two green quads, plus one red and one blue.
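A sketch of the intra-mosaic idea, assuming an RGGB Bayer order and an R|G1 over G2|B quadrant layout (both assumptions; the talk only says two green quads, one red, one blue). Each source pixel contributes exactly one sample, so the four quarter-size planes fill a frame the same size as the source without any rescaling, which fits the no-grain-enlargement argument made in the Q&A. Function names are hypothetical.

```python
def bayer_to_quads(rgb):
    """Sample an h x w image of (r, g, b) tuples through an RGGB mask (h and w even).

    Returns (red, green1, green2, blue), each (h/2) x (w/2).
    """
    h, w = len(rgb), len(rgb[0])

    def plane(dy, dx, channel):
        # Take one channel from one position of every 2x2 Bayer cell.
        return [[rgb[y + dy][x + dx][channel] for x in range(0, w, 2)]
                for y in range(0, h, 2)]

    return plane(0, 0, 0), plane(0, 1, 1), plane(1, 0, 1), plane(1, 1, 2)


def tile_quads(top_left, top_right, bottom_left, bottom_right):
    """Stack four equal-size planes into one frame: TL|TR over BL|BR."""
    top = [a + b for a, b in zip(top_left, top_right)]
    bottom = [a + b for a, b in zip(bottom_left, bottom_right)]
    return top + bottom


image = [[(1, 2, 3), (4, 5, 6)],
         [(7, 8, 9), (10, 11, 12)]]
print(tile_quads(*bayer_to_quads(image)))  # [[1, 5], [8, 12]]
```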

Tested on Arrilaser 2, Aaton K, Cekloco, etc.; Kodak 2234, 2238, 5269, Fuji RDS 4791.

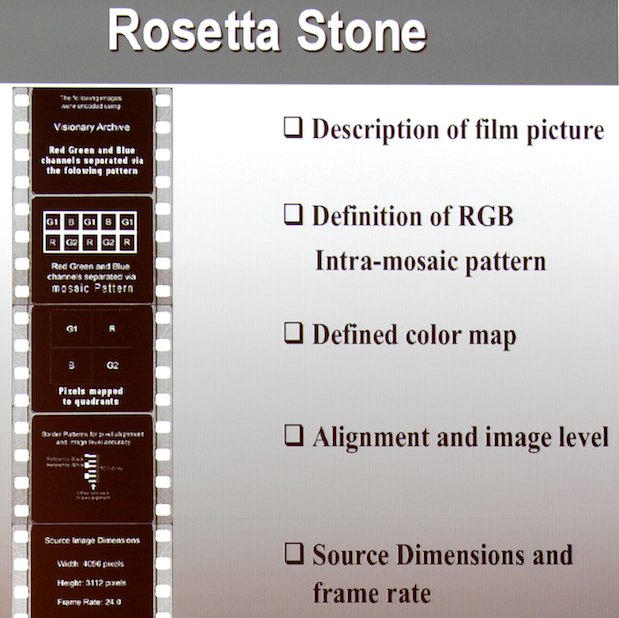

“Rosetta stone” frames at the head so that someone with no knowledge of the format can easily decode it:

Why film? A 300-year lifespan, and it’s immediately comprehensible: just hold it up to the light.

Workflow: run files through the Visionary encode software, data-record out to B&W film, store the film. Safe, simple, should last a long time.

Tests: less grain than YCM masters, no re-registration required, warped film not a problem (alignment pattern for de-warping), creative intent preserved (white/gray/black patches preserve color and tone values).

Free source code for un-archiving. Hoping to make it a standard component of film scanner software.

Audio: digital coding in an analog wrapper. Oversampled 2x, all manner of redundancy. 16 channels per frame in a quadrant.

What’s next? 3D stereo archive element; metadata integration.

Playback at the Tech Retreat compared the original with versions created from a YCM master and from a Visionary Archive master. The VA master version showed better color fidelity than the YCM master version, even after color correction. It’s said to show lower grain and higher res, but I was in the back of the room, so I couldn’t see such finely detailed information.

Discussion: with the 4-quad mosaic method, where’s the audio? Use a separate filmstrip. Mosaic gives higher visual quality than 3-quad version. If the audio is digital, doesn’t that break the discoverability of it? The problem was how do you put something analog on film and have it survive scratches; audio is very sensitive to distortion. Thought of an analog guide track. In any case, human-readable audio is a lot harder than human-readable pix. Nit-pick: most film-out systems already include grayscales and calibration wedges. Yes, but not per-frame. How does the small quad allow you to claim lower grain? The 3-quad system doesn’t, but the mosaic system does; since you’re not shrinking/enlarging the mosaiced samples, there’s no grain enlargement. Any sense in going larger than quads, like 3-perf sequential? Yes, looked at it, but lose the economic advantage: area vs. money!


Bend Radius – Steve Lampen, Belden

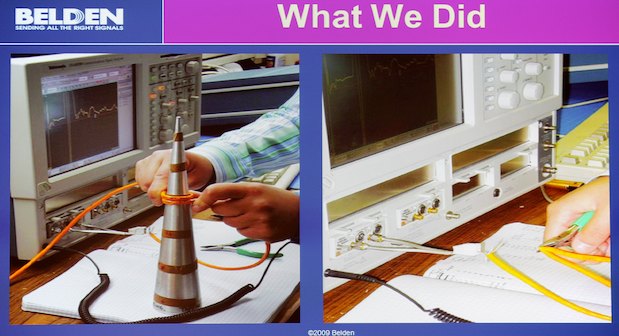

“Don’t bend tighter than 10x the diameter.” But is it 10x? 4x? Tried the experiment, wrapping cable around a cone:

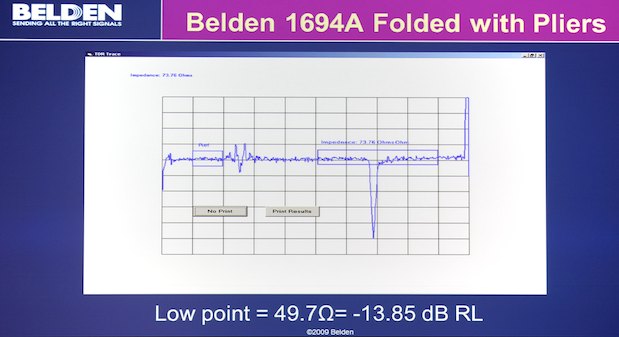

Wrapping cables; also pinching with pliers!

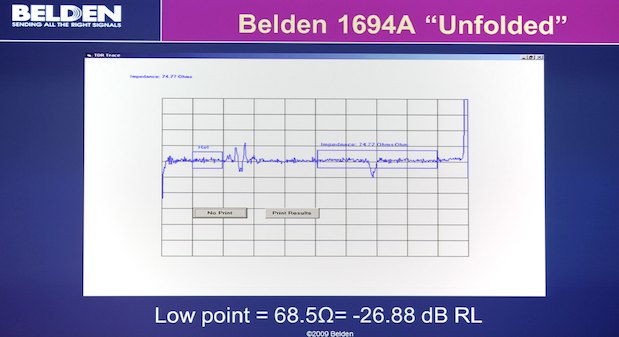

It is claimed that the damage can’t be undone; unbending only hides the damage.

Stranded cables handle bending better, as do smaller cables and flexible cables.

Cables are designed for a typical -30 dB return loss, i.e., about 99.9% of the signal power transmitted and 0.1% reflected.
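For reference, a return-loss figure converts to reflected power, voltage reflection coefficient, and VSWR as below. Magnitudes are taken so that “-30 dB RL” and “30 dB RL” are treated alike; the function names are illustrative.

```python
def reflected_power_fraction(return_loss_db: float) -> float:
    """Fraction of incident power reflected; the sign of the dB figure is ignored."""
    return 10 ** (-abs(return_loss_db) / 10)


def reflection_coefficient(return_loss_db: float) -> float:
    """Magnitude of the voltage reflection coefficient |Gamma|."""
    return 10 ** (-abs(return_loss_db) / 20)


def vswr(return_loss_db: float) -> float:
    """Voltage standing-wave ratio implied by the return loss."""
    gamma = reflection_coefficient(return_loss_db)
    return (1 + gamma) / (1 - gamma)


for rl in (-20, -30, -33):
    print(f"{rl} dB RL: {100 * reflected_power_fraction(rl):.2f}% of power reflected, "
          f"VSWR {vswr(rl):.3f}")
```

Applied to the 1505A numbers reported below, -20 dB (folded with pliers) means 1% of the power reflected, while -33 dB (unfolded) is roughly 0.05%.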

Critical distances: quarter wavelength at 3 GHz = 0.984″.
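The 0.984″ figure is the free-space quarter wavelength at 3 GHz. Inside a cable the wave travels more slowly, so the physical distance shrinks by the cable’s velocity factor; the sketch takes that factor as a parameter rather than assuming a value for any particular Belden cable.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0
METERS_PER_INCH = 0.0254


def quarter_wavelength_inches(freq_hz: float, velocity_factor: float = 1.0) -> float:
    """Quarter wavelength in inches.

    velocity_factor = 1.0 gives the free-space value; a value below 1 models
    slower propagation inside a cable's dielectric.
    """
    wavelength_m = SPEED_OF_LIGHT_M_S * velocity_factor / freq_hz
    return wavelength_m / 4 / METERS_PER_INCH


print(f"{quarter_wavelength_inches(3e9):.3f} in")  # 0.984 in (free space, 3 GHz)
```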

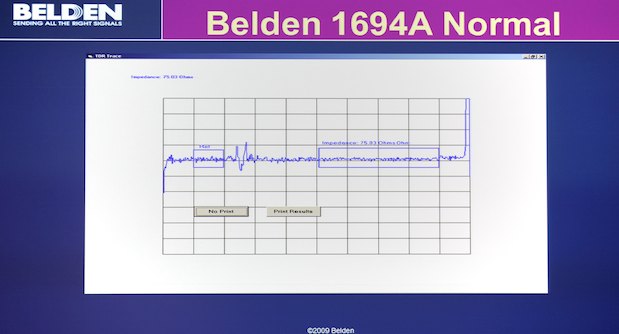

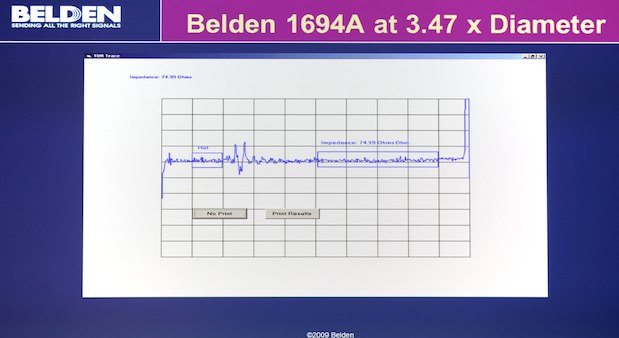

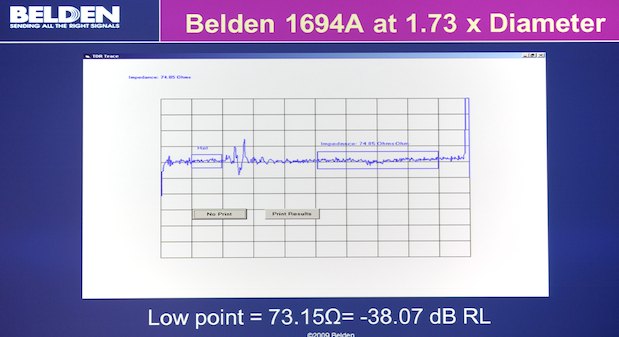

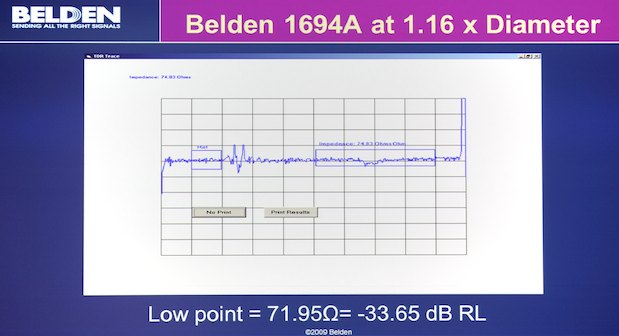

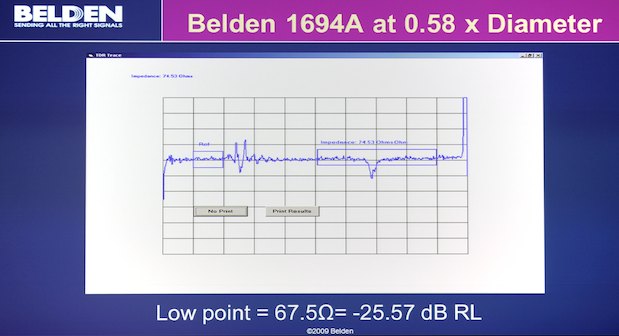

Solid cables: time-domain reflectometry of 1694A (Belden’s RG-6 type coax, pretty much the standard solid-conductor cable for HD-SDI studio wiring):

A 6-inch bend on 1694A is less than 4x the diameter, but the cable was pretty much OK until the bend radius was comparable to the cable diameter. Even after folding in half with pliers and unbending, the cable recovered considerably.

Stress whitening shows up on PVC jacket when cable is crunched; if you see a white patch on the cable, you might want to check it.

1505A OK to 1.18x bend, folded with pliers -20 dB RL, unfolded -33 dB RL.

1694F (flexible) OK to 1.16x, even to 0.58x still OK. Pliers make it bad, but straightened out is still pretty much OK.

1505F: OK to 1.18x, pliers bad, unfolded not too bad; 24 hrs later, back to -33 dB RL.

So, cable can withstand severe bending, and much performance returns when unbent.

What about Ethernet on twisted-pair? The lower the frequency, the less the effect. A data cable only goes to 500 or 625 MHz per pair, which is nothing! What about tie-wraps? The sloppier you are, the better! On multipair data cables, what about changing the geometry from bending? Yes; what degrades on data cables is more crosstalk between pairs, not so much return loss. Have you tried more wraps? Wrapping around a nail? Clove hitch? Bowline? Yes, we tried tying cables in knots and stomping on them; they still worked.

When did we come from – Mark Schubin

(Mark does a “post-Retreat treat” in which he feeds historical info to us at firehose rates, to our general bemusement and delight.)

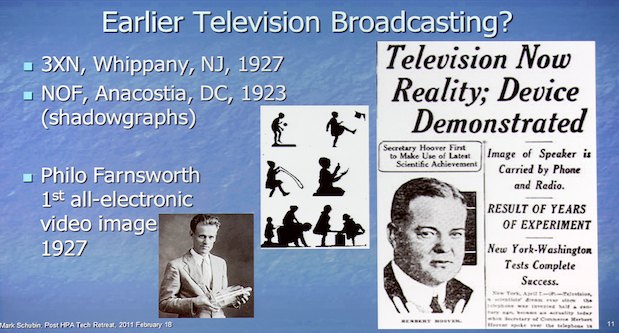

Headlines: “3D TV Thrives outside US”, “3D could begin in the US within a year”: April 22, 1980! Also, Business Week 1953: Jim Butterfield’s 3D broadcast; Modern Mechanix 1931; Baird in 1928, and Baird probably was the first. Active shutter glasses 1922. Live-action 3D movies in 1879?

Electronic TV proposals in 1908, picture tube in 1907. In 1927, intertitles (title cards for dialog). Talkies in 1905, 1900; sync sound in 1894, even (William Dickson playing the violin)! The first edit: 1895, “The Execution of Mary Queen of Scots” (view it on the Library of Congress site).

Edison tone tests, 1910: blindfold tests comparing an opera singer and an Edison phonograph; people couldn’t tell the difference. It didn’t hurt that one of Edison’s chosen opera singers later admitted that she practiced sounding like an Edison phonograph (!).

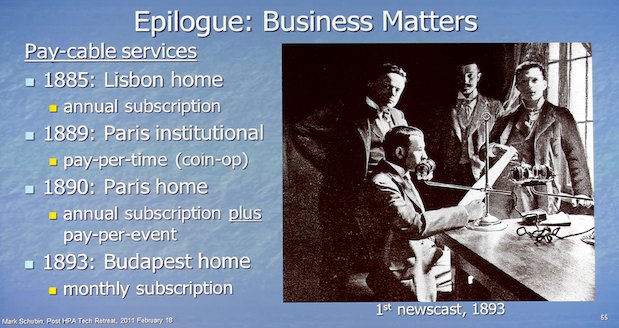

Headphones date back to 1890 (patented then), but were used in Lisbon in 1888. The Gilliland harness for telephone operators dates back to 1881 (but weighed 8-11 pounds). Stereo 1881. Pay cable 1885 (audio feeds of operas).

“Television” coined in 1900 to replace earlier words: telephote 1889, Nipkow patent 1885, Sutton’s Telephane 1885 (oil-lamp TV), Robida’s “The 20th Century”, 1882, showed large screens; “an electric telescope” 1879. Punch’s Almanac in 1879 showing “Edison’s Telephonoscope” (but also showed Edison’s antigravity underwear!). Letter to the NY Sun, 1877: “electroscope” for transmission of opera performances (which, Mark notes, is his day job, more than 100 years later).

Electrical telegraphy proposed in 1753. Fax-scanning patent 1843; pneumatic tube 1854; telephone 1876; commercial fax service in 1861 France.

First photo 1825. First transatlantic cable 1858. Photosensitivity of selenium written up in 1873… whereupon a rush to invent TV!

Final thoughts…

The end of the post facility as we know it? The general gist of the first day’s presentations was that a lot of what had been safely ensconced in the post facility is moving on-set or near-set, and that the days of the traditional, bricks-and-mortar post house / facility / lab may be coming to an end.

The irony of this concept being advanced at a conference organized by the Hollywood Post Alliance was not lost on your correspondent…

…but let’s not panic just yet. A quarter of a century ago, Stefan Sargent, founder of Molinare, one of the first heavy-iron post houses in the UK, wrote the article “Buying Obsolescence” for the Journal of the Royal Television Society. In it, he describes walking into the machine room of any contemporary post house (this is the era of 1″ Type C VTRs, Grass Valley 300 switchers, and Ampex ADOs): millions of dollars of equipment, and it’ll all be obsolete in six or nine months!

The equipment has been getting better/faster/cheaper for the past 25 years, yet Molinare is still around. The post business has never been one where you can rest on your laurels; the tech and the business climate have never been stable and secure (even in the film days, as one presenter noted: 150 different film formats in the past 120 years). What differs nowadays, just as in the camera department, is that the barriers to entry are so much lower that more people can get in the game. Post is hollowing out: you’ll have guys in their basements with FCP on a MacBook Pro, and high-end houses with the film scanners, grading rooms with control surfaces, proper monitoring, and real-time high-bandwidth storage, and not much of anything in the middle. Post has always been a high-risk, high-volatility business; it takes a strong constitution and more than a bit of luck to survive long-term, but the one thing that remains constant is that talent, not gear, is the best indicator of success. Plus ça change, plus c’est la même chose…

We used to speak of the end of the format wars, and boy, were we ever wrong!

A few years back, we were talking about seeing the end of the format wars. Remember when we shot interlaced 4×3 SDTV with a specified colorimetry and gamma to a handful of tape formats, and delivered interlaced 4×3 SDTV to an audience using interlaced 4×3 CRTs? Ah, those were the days… we didn’t know how simple we had it.

Now, let’s see, for acquisition, we have AVC-Intra, AVCHD, HDCAM, HDCAM-SR (in three different bitrates), ProRes (in more than three different bitrates, depths, and color resolutions), XDCAM HD and EX variants, DVCPRO HD (still out there), ArriRAW, R3D, Codex Digital, Cineform, uncompressed (S.two; DPXes on SR 2.0), and whatever your HDSLR du jour shoots, just to name a few. And that’s just HD. There’s still SD being produced, and 2K and 4K, in gamma-corrected “linear” or log (LogC, S-Log, REDlog); 4×3, 16×9, 2:1, 2.4:1; 8-bit, 10-bit, or 12-bit; 4:2:0 to 4:4:4; full-res, subsampled, or oversampled (both well and poorly).

If you add in the number of deliverables the various presenters were talking about, and consider all the permutations of inputs and outputs, it just boggles the mind.
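To put a rough number on it, here’s a sketch that counts only a hypothetical subset of the acquisition variables listed above (four sizes, four aspect ratios, three bit depths, three chroma samplings taken to be 4:2:0, 4:2:2, and 4:4:4, and four transfer curves), before choosing any codec or deliverable:

```python
from itertools import product

# A hypothetical subset of the acquisition variables listed above; codecs aren't even counted.
SIZES = ["SD", "HD", "2K", "4K"]
ASPECTS = ["4x3", "16x9", "2:1", "2.4:1"]
BIT_DEPTHS = [8, 10, 12]
CHROMA = ["4:2:0", "4:2:2", "4:4:4"]
TRANSFER = ["gamma", "LogC", "S-Log", "REDlog"]

input_variants = list(product(SIZES, ASPECTS, BIT_DEPTHS, CHROMA, TRANSFER))
print(len(input_variants))  # 576


def conversion_paths(n_inputs: int, n_outputs: int) -> int:
    """Every input variant paired with every deliverable."""
    return n_inputs * n_outputs
```

Multiply that by however many codecs and deliverables a given job involves, and the mind-boggling part becomes obvious.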

The format wars are over. The formats won, all of them. May their tribes increase.

Deal with it.

“The Tape is dead. Long live the Tape!”

Sony’s demo of solid-state HDCAM-SR, a.k.a. SR 2.0, marks a transition from the last professional videotape format we’re likely to see into—finally—a world of totally tapeless acquisition.

The 1TB SR memory card, in front of an SRW-9000 with the solid-state recorder (and a nanoflash proxy recorder!).

The medium (a bit too big to be a “card” or a “stick”, shall we call it a data-slab?) comes in 256GB, 512GB, and 1TB capacities; the big one will record about 4 hours of SR (440Mbps) or twice that of SR-lite (220Mbps) material. Prices were not announced, but the scuttlebutt amongst HPA attendees was around $8000 for the 1TB memory slab.
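A back-of-the-envelope check on those capacities, assuming decimal terabytes and counting only the stated video bitrate: 1TB at 440Mbps works out to about five hours, so the quoted “about 4 hours” presumably leaves room for audio, metadata, and formatting overhead (that split is a guess, not a Sony figure). The function name is illustrative.

```python
def record_hours(capacity_bytes: float, bitrate_bps: float) -> float:
    """Upper bound on recording time; ignores audio, metadata, and filesystem overhead."""
    return capacity_bytes * 8 / bitrate_bps / 3600


for label, bps in (("SR 440Mbps", 440e6), ("SR-lite 220Mbps", 220e6)):
    print(f"{label}: {record_hours(1e12, bps):.1f} h on a 1TB slab")
```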

There’s a solid-state “deck” for the slabs, too; it has four slots, and four IO channels, so it’s really a four-channel video server in the guise of a “VTR”:

One of the demo units was playing out on two channels while recording on the other two.

There’s also a 1 RU box the length and width of an SRW-5800 VTR, a single-slot SR slab player/recorder with 10 Gigabit Ethernet on the back. It was shown atop a 5800, looking uncannily like the HD adapter for a D-5 VTR (an observation which, when offered, brought a slightly pained grimace to the face of the kind Sony fellow showing me the gear); the combo serves as a faster-than-realtime slab-to-tape offload station. The slab reader can also work as a standalone device for network transfers, or as a slab reader for any computer with a fast enough network port.

A portable deck was shown attached to the back of an F35, as well as cabled to a PMW-F3:

Sony says it’ll be in the same pricing order of magnitude as the PMW-F3, making for a very affordable package (comparatively speaking, of course) for shooting dual-link, large-format images to SR. As a camera operator, I appreciate the reductions in size and weight just as much as I like the savings in cost, complexity, and power requirements (theoretically, that is; SR shoots are well above my pay grade).

“Now how much would you pay? But wait, there’s more!” Sony was showing native SR editing (well, decoding, at least) in both Avid Media Composer (via Avid Media Access) and FCP via an updated version of the cinémon plugin. I saw SR-lite (the 220Mbps version) happily playing natively on a MacBook Pro (and yes, I did command+9 on the clip in the bin, and it came up as HDCAM-SR 220Mbps in the Item Properties window). As for writing an SR file natively? Sony says that’s coming, but a timetable hasn’t been announced.

All the SR 2.0 stuff being demonstrated is supposed to ship “this summer”. And when that happens, SR—the mastering and mezzanine format of choice for most high-end productions—will have a smooth migration path away from tape. “The Tape is dead.”

At the same time, LTFS-formatted LTO-5 is taking off like a rocket. One speaker at the Tech Retreat asked for a camera that shoots directly to LTO-5; 1Beyond had a “Wrangler” system capable, via an HD-SDI connection, of recording directly to LTO-5.

1Beyond’s LTO-5 “sidekick” for backup of disks and cards inserted into the adjacent Wrangler DIT station.

LTO-5 drives were all over the demo room, in boxes from HP, Cache-A, and even ProMAX:

ProMAX ProjectSTOR disk/tape combo box.

Using LTFS, LTO becomes a cross-platform, interchangeable, easily searchable format allowing partial restores—in other words, something fast enough, friendly enough, and flexible enough for uses other than disaster recovery. With LTO already being the overwhelmingly predominant archiving / backup mechanism in high-end production (many bonding companies require it), this only means there will be a lot more LTO tape in our future. “Long live the Tape!”

At least, you’d better hope it lives long, if your irreplaceable data resides on it… [grin].

Everything Else…

I apologize for not covering the demo room in any detail. I had my hands full with all the content from the presentations, and insufficient sleep as it was. Ditto with the breakfast roundtables.

3D? Seems to be dead in the water as far as broadcasters are concerned. Microstereopsis seems to offer so many freedoms in production and post: why isn’t anyone using it?

Washington update: the spectrum grab remains contentious. As to domain seizures, have a look at the most recent instance: Unprecedented domain seizure shutters 84,000 sites.

So gather your metadata ’round (in whatever format you’ve got it) and guard it well; it’ll be even more valuable in the future, because you’ll need to track way too many input formats, an even greater number of output formats, and make mezzanine IMF masters from which every conceivable deliverable, in every possible size, shape, codec, color space and resolution, can be made (IIF ACES will let you preserve enough image data to do so, fortunately). Wrap it all in clever, ever-evolving rights management—in such a way that it won’t infuriate the consumer—and do day-and-date delivery inside of a week to every country on the planet. Don’t forget to back up on LTO-5 and quad-mosaic film protection masters, too (including the 3D subtitled versions), and be ready to do it all faster, cheaper, and better than ever before!

HPA’s master list of press coverage: http://www.hpaonline.com/mc/page.do?sitePageId=122850&orgId=hopa

Disclaimer: I attended the HPA Tech Retreat on a press pass, which saved me the registration fee. I paid for my own transport, meals, and hotel. No material connection exists between myself and the Hollywood Post Alliance; aside from the press pass, HPA has not influenced me with any compensation to encourage favorable coverage.

The HPA logo and motto were borrowed from the HPA website. The HPA is not responsible for my graphical additions.

All off-screen graphics and photos are copyrighted by their owners, and used by permission of the HPA.
