How is the Tech Retreat like (and unlike) a location shoot? Why does New York’s Metropolitan Opera put ten minutes of solid white at the tail end of each live HD cinemacast?

The Hollywood Post Alliance 2009 Tech Retreat commenced at 2pm on 17 February 2009, the USA’s analog shutoff day—whoops, its former analog shutoff day. It then ran for about 72 hours (with minor time-outs for sleeping) of conference proceedings, technology demos, roundtables, and (in a break with tradition) bowling (instead of softball).

The Tech Retreat is very much like a location shoot in that one travels to a distant city (Palm Springs), has early call times (7:30am), and endures exciting but exhausting 12 to 14 hour days.

It is unlike most shoots in that the craft services are over the top (internal catering by the Westin resort where the Tech Retreat was held; with all due respect to excellent craft services folks everywhere, where else does the dessert menu feature raspberry chocolate tamales?), and those long days are spent mostly sitting and taking notes: the result is that participants stagger home not only with full brains but with full bellies, too.

It’s not possible for me to properly describe everything that went on, or to adequately convey the animated discussions between technologists, editors, broadcasters, and industry executives. Instead, I’ll just cover a few highlights.

Some of the Trends at TR2009

- 3D is alive and well: there was a panel on 3D production and distribution, and the demo room was full of 3D displays, using polarizing or shuttering glasses, lenticular screens viewed without glasses, and even white-light holograms containing up to 1280 frames of moving video (seen by walking past the hologram). JVC demoed an LCD TV that synthesizes a 3D image from a plain-vanilla 2D feed in real time. It wasn’t perfect (snow on distant mountains came forward too much, for example), but it was impressive. But the compromises involved in 3D presentation (the bother of wearing glasses; resolution loss in lenticular displays; etc.) keep it an open question as to whether 3D in any of its present incarnations will survive as a mainstream phenomenon, not just as a momentary novelty or fad.

- The DTV conversion proceeds apace: Active Format Descriptors for automatic aspect-ratio conversion are now widely available in various bits of the processing chain, and most broadcasters are using them. In 2008, more HD sets were sold than SD sets. But the analog cutoff was delayed (again), and all press pool systems are still NTSC composite 4×3 with mono audio, despite component video being in use since 1981, stereo audio since 1984, and 16×9 appearing in 1985.

- Metadata matters: as we move ever farther into file-based production, more intelligence can be moved into the files and the systems that handle them. “The inflection point between manual and metadata-driven operations has been crossed”, especially in operations like broadcast automation and traffic management, format conversion and repurposing, and archiving.

- Post houses are about community, not equipment: the days of heavy iron for realtime processing may be fading, but the value of the post house is in being a nexus for creatives, clients, and technological infrastructure. Indeed, the post house of the future may be a massively parallel-processing server farm, capable of storing, archiving, and batch-processing editing, grading, and processing tasks dispatched from the MacBook Pros of the editors sitting with clients in the front rooms—which, if present societal trends hold out, will come to resemble nothing more or less than really comfortable coffee bars. (OK, I extrapolated that last bit, but I dare any other Tech Retreat attendees to disagree with me on the fundamental vision!)

- CPUs and GPUs are getting “fast enough” to do really interesting things: all manner of tasks requiring heavy lifting are now running entirely on general-purpose platforms: realtime 3D synthesis, near-real-time HD frame-rate conversion, and 2K and 4K color grading are all taking advantage of multicore CPUs and programmable GPUs, aided by fast storage pools. The expensive, drop-dead-gorgeous control surfaces are still there, but behind them stand off-the-shelf Linux, Windows, and OS X boxes with off-the-shelf AMD and nVidia GPUs in them.

Selected Scenes & Random Revelations

HPA organizer Mark Schubin holds forth in his analog shutoff T-shirt.

Television Engineer Mark Schubin organizes the Tech Retreat. If you read his articles in Videography you’ll get a feel for the breadth and depth of his knowledge and interests, which he applies in his day job at the Metropolitan Opera in NYC. It’s his encyclopedic eclecticism that drives the Tech Retreat program, making it the highest signal-to-noise event around for video technologists.

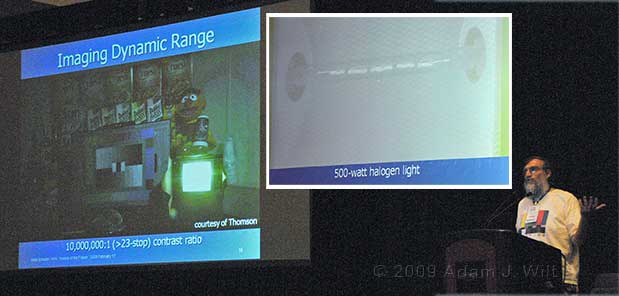

Thomson’s 23-stop image, lit only by the 500 watt light in the image (inset: detail from that same frame).

What if your camera could dynamically control each pixel of its sensor, shutting off integration when it was full? Thomson put firmware in an Infinity camcorder and got 23 stops out of it for their efforts. The sole illumination in the sample frame is the 500 watt lamp aimed at the camera: as the inset shows, the filament in the lamp is resolvable, yet there’s still separation between the darkest two bars in the DSC Labs CDM chart.

TDVision’s 3D compression method: L or R image, plus difference data to synthesize the other view.

This may be the cleverest, most why-didn’t-I-think-of-that? thing at the Retreat: TDVision’s method for encoding a 2D-compatible 3D image. TDVision puts a 2D image into an MPEG stream in the usual way, and stores a channel-difference signal as an interleaved separate stream (think mid-side recording in audio). Any normal, 2D MPEG decoder sees only the normal 2D stream, and displays it. Any TDVcodec-enabled MPEG decoder (many existing consumer electronics decoders can be TDVcodec-enabled via a firmware update) will be able to reconstruct both views of the 3D image and output it in the form required for the display being used: line-interleaved, checkerboarded, anaglyphic, etc. It’s entirely display-agnostic at the distribution end (Blu-ray, OTA, etc.) even to the point of not caring whether the viewer has 3D capability at all. Brilliant.
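To make the base-plus-difference idea concrete, here is a toy sketch in Python. It is not TDVision's codec: the function names, the four-pixel "images", and the plain per-pixel subtraction are all my own assumptions, chosen only to show why a 2D decoder can ignore the extra stream while a 3D-aware decoder can rebuild both eyes.

```python
# Illustrative only: one view plus a difference signal, in the spirit of
# mid-side audio. All names and values here are hypothetical.

def encode_stereo(left, right):
    """Return (base, diff): the 2D-compatible view and the per-pixel difference."""
    base = list(left)                            # a legacy decoder shows this as-is
    diff = [r - l for l, r in zip(left, right)]  # extra stream a 2D decoder skips
    return base, diff

def decode_2d(base, diff=None):
    """A plain 2D decoder uses only the base stream and ignores the difference."""
    return list(base)

def decode_3d(base, diff):
    """A 3D-aware decoder rebuilds both views from base + difference."""
    left = list(base)
    right = [b + d for b, d in zip(base, diff)]
    return left, right

# Tiny made-up "images": one row of four pixel values per eye.
left_view = [10, 20, 30, 40]
right_view = [12, 20, 29, 45]

base, diff = encode_stereo(left_view, right_view)
print(decode_2d(base))        # the ordinary 2D picture
print(decode_3d(base, diff))  # both eyes, ready for whatever display format is needed
```

Because the two views of a stereo pair are mostly alike, the difference values tend to be small, which is what makes the second stream cheap to carry in a real system.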

JVC’s GY-HM700 1/3″ 3-chip QuickTime camcorder with 20x Canon zoom and optional SxS recorder.

SDHC slots on the JVC GY-HM700

The JVC GY-HM700 is the big brother to the GY-HM100 shown at the FCPUG SuperMeet at MacWorldExpo 2009. It’s a 3-chip camcorder recording long-GOP MPEG-2 at 25 Mbit/sec or 35 Mbit/sec to SDHC cards in a QuickTime wrapper, so the clips are directly editable in Final Cut Pro without rewrapping or re-encoding. With the optional SxS adapter, it can record the same material to SxS cards, too. The 700 is supposed to ship next month with a brand-new Canon 20x zoom (the one shown here is the only one in the USA at the moment, or was; it was supposed to go back to Japan as soon as the Retreat was over), and I’ve asked JVC for one to review.

Next: Codec concatenation, a webserver in a Varicam, dynamic-range measurements, Cinnafilm, S.two, a digital test pattern, and why the Opera broadcasts white…

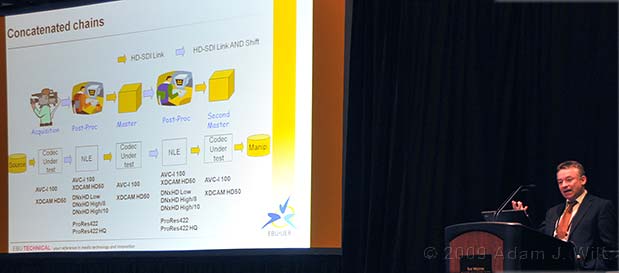

The EBU’s Hans Hoffmann discusses codec concatenation testing.

A decade ago, the European Broadcasting Union tested multigeneration performance of various SD codecs. At the Tech Retreat, Hans Hoffmann presented an updated study, looking at the performance of 720p, 1080i, and 1080p acquisition, editing, and distribution codecs, including concatenation effects. The EBU again ran a careful and comprehensive test, ensuring that both spatial and temporal offsets occurred between the four to seven generations tested, and having a panel of “golden eyes” evaluate the results on a 36″ Sony BVM CRT, a 1080-line LCD, and two 50″ 1080-line plasma displays. The full test report is available only to EBU members, but anyone can read the summary, EBU Recommendation R 124.

Panasonic’s Varicam 3700 web server with glowing blue antenna.

Panasonic demoed putting a web server directly into a camera: in this case, a P2 Varicam AJ-HPX3700. A wireless link lets members of the camera department mess about with the rich metadata available in the P2 workflow without having to be physically connected to the camera.

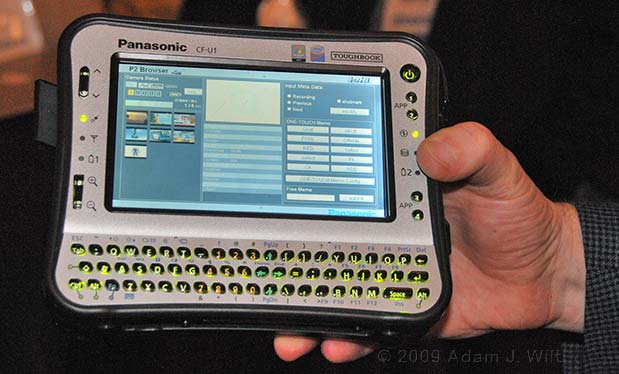

Script Supervisor’s view of the 3700’s clips and metadata.

One client is this nifty Toughbook handheld PC, not seen much in the US outside the medical community. The interface here tracks those available through the camera’s own metadata pages, or Panasonic’s P2 Viewer application, with some live-production tweaks: the UI is customizable with one-button macros to update or edit various metadata fields, mark circle takes, or add timecode-tagged notes to points of interest in a clip. Another client is an iPhone app, with single-touch icons for marking circle takes, points of interest, and the like.

Sorry, no ship dates: it’s a tech demo, not a product… yet…

Arri’s Hans Kiening shows a prototype dynamic-range test target.

How do you measure the dynamic range of a camera? You can shoot a series of exposure tests, opening and closing the aperture a stop at a time, but then you’re assuming that each stop marked on the lens really gives you a doubling or halving of the light, and you run into issues where the camera’s firmware may modify the tonal scale from shot to shot. Alternatively, you can shoot a transmissive test target like a Stouffer wedge or a DSC Labs Combi OSG, but you’re at risk from illumination leakage and from densitometer inaccuracy when measuring wide ranges of transparency.

Arri, in their characteristic German manner, have come up with a transmissive test target that overcomes many of these problems. An Arrilaser film recorder generates transmission wedges within a narrow and well-calibrated density range; to get higher densities, multiple wedges are stacked. Each target area is surrounded by a bore or tunnel to reduce cross-contamination and ambient light spill. The ring of test targets is calibrated while mounted on an integrating chamber that looks like a cross between a diving bell, a comsat, and a stainless-steel pressure vessel. The final test target, shown by Hans Kiening, is a typical Arri product: precise enough for standards-body usage, rugged enough to survive the tender ministrations of a feature-film crew, and probably expensive enough to bankrupt a small country (or, in this day and age, even a medium-sized country). Arri says they’ll donate one to AMPAS, and one to USC, so that those organizations won’t be able to complain that they can’t afford them!
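For readers who think in stops rather than densities, the arithmetic behind stacking wedges is simple enough to sketch. The only physics assumed here is the standard definition of optical density (transmittance = 10^-D); the density values are made-up examples, not Arri's calibration figures, and the function names are mine.

```python
import math

def density_to_stops(density):
    """Convert optical density D (transmittance = 10**-D) to photographic stops."""
    # One stop is a factor of two in light, i.e. a density of log10(2), about 0.301.
    return density / math.log10(2)

def stacked_density(*densities):
    """Stacked filters multiply their transmittances, so their densities add."""
    return sum(densities)

# Hypothetical numbers: a wedge step of density 3.0, alone and doubled up.
single = 3.0
stacked = stacked_density(single, single)

print(round(density_to_stops(single), 2))   # a little under 10 stops
print(round(density_to_stops(stacked), 2))  # twice that
```

This is why stacking works: each individual wedge stays inside the narrow density range where it can be made and measured accurately, yet the stack reaches densities a single wedge couldn't be trusted at.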

Cinnafilm’s frame-synthesis debug screen, showing motion vectors and red areas where motion blur makes motion estimation difficult.

Cinnafilm showed their HD1 frame-rate-converter, interlace-to-progressive, 60i-to-24p, synthesize-super-slo-mo, you-name-it-and-the-HD1-can-probably-do-it workstation. I saw this thing do a hard-to-believe 10x slo-mo on a clip; there was none of that frame-blending nonsense, just a smooth, crisp, very clean synthesis of all the in-between frames. The debug display (above) shows sample motion vectors during a frame-rate conversion, as well as areas of the image where motion blur made motion estimation impossible with the current settings. Of course the whole processing pipeline is interactively tweakable, so you can optimize the process on a shot-by-shot basis. Frame rate conversion on 1080-line clips takes about 1.5x real time (16 fps or so, for example).

All this stuff runs on a single nVidia GPU, like one you could buy at Frys or Best Buy.
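The difference between frame blending and motion-compensated synthesis is easy to see in a one-dimensional toy. This sketch is not Cinnafilm's algorithm: it assumes a single, already-known motion vector for the whole "frame" (a real converter has to estimate vectors per region, which is the hard part), and every name in it is hypothetical.

```python
def blend(frame_a, frame_b):
    """Naive frame blending: average the two frames, giving a ghosted double image."""
    return [(a + b) / 2 for a, b in zip(frame_a, frame_b)]

def shift(frame, offset):
    """Shift a 1D frame right by `offset` pixels (left if negative), padding with 0."""
    n = len(frame)
    return [frame[i - offset] if 0 <= i - offset < n else 0 for i in range(n)]

def interpolate_midpoint(frame_a, frame_b, motion):
    """Motion-compensated midpoint: push A half-way forward, pull B half-way back, average."""
    half = motion // 2
    forward = shift(frame_a, half)                 # A moved along the motion vector
    backward = shift(frame_b, -(motion - half))    # B moved back against it
    return [(f + b) / 2 for f, b in zip(forward, backward)]

# A bright "object" moves four pixels to the right between two frames.
frame_a = [0, 9, 0, 0, 0, 0]
frame_b = [0, 0, 0, 0, 0, 9]
motion = 4  # assumed known here; estimating it is the real work

print(blend(frame_a, frame_b))                         # two half-bright ghosts
print(interpolate_midpoint(frame_a, frame_b, motion))  # one full-bright object, mid-way
```

Where the motion vector is wrong or unknowable (heavy motion blur, for instance), the two shifted frames no longer line up, which is presumably why a debug view flagging those areas is so useful.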

S.two’s current TAKE2 uncompressed recorder, and its FlashMag-based replacement, the OB-1.

Ted White from S.two Digital Filmmaking had the current TAKE2 uncompressed recorder in the demo room, showing off the automated slating capability that reportedly saved David Fincher’s crew 40 minutes per day on “Zodiac”. Sitting beside it was a mockup of the diminutive OB-1 solid-state uncompressed recorder, one that “does everything the TAKE2 does, only twice”—the OB-1 is a dual-stream recorder. The OB-1 will be on the market in the next couple of months; the recorder will be about US$30K and the 500 GB FlashMags will be around $10K each, so they’ll rent for about $300/day and $100/day respectively.

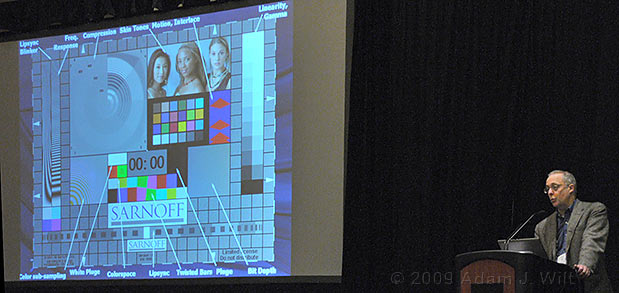

Sarnoff’s Norm Hurst describes “A Test Pattern for the Digital Age”

Bars & tone are so 20th Century. More importantly, the list of things that bars & tone don’t help you to line up in the digital era is embarrassingly long. Sarnoff Labs has come up with the Visualizer Digital Video Test Pattern to fill the needs of this Brave New World. If I got the numbers right, it can be yours for $5000 for a single-site, three-seat license, and for $500 more, Sarnoff will put your name and logo in it in place of their own. Expensive? Sure. Worth it? If you’ve got a digital broadcast plant with any possibility for color space, resolution, resampling, resizing, repositioning, lip-sync, field-reversal, and/or transcoding errors, you’d be silly not to get it.

There was more, so much more… again, this is just a sampling of the ideas, products, experiments, and case studies presented. My apologies to the presenters and demos not mentioned; omissions are due solely to the time I have available to write up the Retreat, not to any lack of interest or importance in the presos and demos themselves. Readers, trust me: if you’re into this sort of thing, you gotta be there.

Other reports (with a tip of the hat to Mark Schubin for the links):

http://www.fxguide.com/article526.html

http://sportsvideo.org/blogs/hpa/

http://www.televisionbroadcast.com/article/74800

http://www.televisionbroadcast.com/article/74816

http://www.studiodaily.com/filmandvideo/tools/tech/10540.html

Why does New York’s Metropolitan Opera put ten minutes of solid white at the tail end of each live HD cinemacast? They don’t know exactly when the live performance will end, and they know that in all the theaters around the world (including those on ships at sea) that are showing the opera, the popcorn boy—erm, the projectionist—is unlikely to be waiting in the booth for the end of the show. So when the show ends, the Met broadcasts full-field white, so the audience has light enough to leave the theater by while the projectionist is off selling popcorn!
