AI video leader Runway has added Image to Video as the latest feature in its Gen-2 suite of tools. This feature creates moving footage directly from a still image, with no extra prompting. Previous text-to-video generation, whether in Gen-2 or a competitor, has generally produced results too creepy and crude for professional use. Does this new toolset hint that synthesized footage you could actually use in post-production is within reach?

In this short article I’ll explore this question and describe how you can try this feature out for yourself in under 5 minutes, for free. At the end I share some of the more popular examples that have appeared since Image to Video was introduced late last month.

The state of synthesized video in mid-2023

Remember AI in November 2022? Me neither. It's hard to recall how recently there was no ChatGPT generating professional-sounding text and no Midjourney v4 fooling you into thinking you were looking at the work of professional artists and designers (unless the image contained hands with a countable number of fingers).

But now I can feel our collective anxiety. AI in the 2020s looks set to rival creative professionals who work with words and images. We feel the need to understand these tools even as Hollywood wages war against the basic rights of those who have made its existence possible.

Admittedly, it's cold comfort that early results with text-to-video have ranged from deeply creepy to laughable (and often hilarious and terrifying at the same time). This is where a recent breakthrough from Runway in its Gen-2 product may be worth your attention.

Runway Gen-2

Runway is among the most prominent startups to go headlong into fully synthetic video and effects. Gen-2 is the current version of its “AI Magic Tools” (as branded on the site). The word “magic” aptly describes the act of creating something from nothing, and Gen-2 initially targeted text-to-video: you type a prompt and get a movie, with no intervening steps. Magic.

However, if you scan the examples promoted on the Runway site, or at competitor Pika Labs, you may not find much that you could add even as b-roll in a professional edit. The style tends to be illustrative, and the results are somewhat unstable in terms of detail and consistency. Method Studios used this “jittery and ominous… shape-shifting” quality to create the opening credits sequence of Secret Invasion. The Marvel producers wanted a foreboding, “otherworldly and alien look.” While Method claims “no artists’ jobs were replaced by incorporating these new tools,” the studio has not revealed how the sequence was realized.

So perhaps that was the first and last Hollywood production to use 2023-era text-to-video as a deliberate style; time will tell. Meanwhile, solving for the creep factor has been a major focus for Runway, and that's where this new breakthrough comes in.

If you've ever wished that a still photo you captured or found were moving footage (and who hasn't?), or if you want to play with a pure-AI pipeline that generates an image and then animates it, here are the steps.

Try image-to-video yourself in 5 minutes or less

Runway operates as a paid web app with monthly plans, but you can try it for free. The trial gives you enough credits to try a number of short video clips; credits correspond to seconds of output. Free clips carry a watermark logo in the lower right corner, which is removed on a paid plan.

To get started, you need an image. Any still image will do, but one in which some basic motion is already implied is a good choice. I started with two simple nature scenes: one with a neutral color range and more detail, the other already more stylized.

Visit runwayml.com and click a link for Image to Video or Gen-2; if you're new, you'll be asked to sign up before reaching the Dashboard. Once there, click Image to Video and you're presented with a simple UI.

The most important thing to understand is that, at this stage of Gen-2 development (which can and will change at any time), you can only provide an image. Any text you add actually spoils the effect we want, which is direct use of the original image (rather than using it as a stylistic suggestion). You can choose a length, with the default set at 4 seconds and the maximum currently 16 seconds. Other than that, it's what you might call a black box: your subject will remain at the center of the frame, and both it and the scene around it will evolve however the AI sees fit. The results are single takes.

There's way more color shifting on the palm trees than would be ideal, but that's 100% the decision of the software.

The cactus, with its more limited palette and simpler subject, produces a subtler result. The portrait format of the original image was preserved.

The main point here is that the original scene has been retained and put into motion in a way that wouldn’t be possible without an AI. What makes this a potential killer app is the situation where you have a still image reference and wish you had a video version of it, because that’s exactly what this is designed to do.

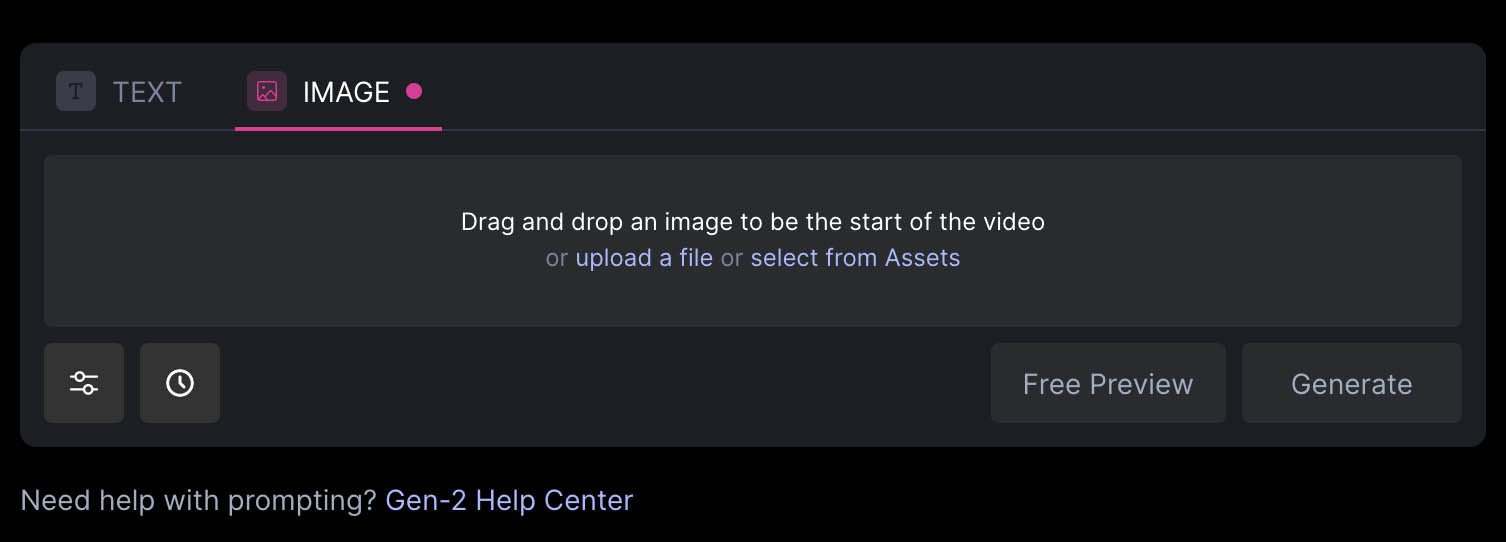

So now let’s look at “imagined” images of human figures.

Here’s what Runway returned:

This synthesized image, produced with leonardo.ai, yields a compelling moving image, even if the character of the face seems to shift slightly. Keep in mind that although the source image was generated with a text prompt, no text-to-video option (including Runway's own Text to Video) would achieve this level of fidelity or continuity.

This example is included as a reminder that the toolset is not immune to classic mistakes of anatomy and context.

Next we’ll look at some of the most polished (iterated) examples that have been circulating since this feature debuted weeks ago.

Image to Video

If you'd like to explore the best of what I could find from the first few weeks of Image to Video's availability, here is a collection of posts (formerly called “tweets”) curated from x.com. As you study these, I encourage you to notice moments where continuity is maintained well (and when, for example, the expression on a face changes a little too much to maintain character).

Movie teaser (Midjourney & Runway)

I'd like to share with you a project I've been working on for some time now, and which is very close to my heart.

I hope you'll enjoy watching it as much as I enjoyed creating it!

Thank you very much!

Images: Midjourney

Video:… pic.twitter.com/EReiKSuaTL

— Anonymouse (@TheMouseCrypto) August 3, 2023

https://twitter.com/JH4TC/status/1687174252502417410

https://twitter.com/SteveMills/status/1688375410906853376

If you saw AI generated film clips as recently as a couple weeks ago, you probably noticed how weird they obviously were

Well, things changed. Now Runway animates from a single photograph. I did these each from a single still image, no effort. Still lots of flaws, but big leaps pic.twitter.com/hj0IVtExiR

— Ethan Mollick (@emollick) July 24, 2023

Are you ready to get weird with AI Video + Music?

The following is entirely AI generated video from photos I created in Midjourney, using Runway's latest img2video capabilities. Needless to say, the results are mind-blowing! I wonder what new styles will emerge from these… pic.twitter.com/4LbBZ8dONQ

— Kiri (@Kyrannio) July 22, 2023

Photo experiments with the new Image to Video mode of Gen-2. pic.twitter.com/ZYebBRQnxB

— Runway (@runwayml) July 25, 2023

So, if you've read all the way to the end and weren't already aware of the breakthrough that Runway Gen-2 has delivered with Image to Video, this is a great opportunity to evaluate it for yourself. And if you're reading this later than summer 2023, my guess is that many of these limitations will have been addressed. How long will still images remain more effective than text as a starting point for AI-generated video? That remains to be seen. When will you use these tools to steal a shot you can't find any other way? Well, that's completely up to you, but the possibility is there.
