A few years ago, while on a tech scout, I shot a stunning reference photo with a Canon 5D Mk2. Something just felt different: it had a sense of volume—of space within the scene—that I couldn’t explain. Initially I thought the location had much to do with that feel, but I found that I couldn’t recapture it on the shoot day with an S35 camera. There was something about the depth within that full frame still image that an S35 sensor couldn’t deliver.

It reminded me of the days, long ago, when I shot medium format (120) stills with my old Mamiya camera. The “normal” perspective lens for that format was the 80mm, as opposed to 50mm in 35mm stills format, and my wide lens was a 40mm rather than a 28mm or 25mm. There was something magical about the 40mm lens as it captured a wide angle of view but without any obvious wide angle distortion. This gave the image a “three dimensionality” and sense of volume that I couldn’t capture on smaller film stocks. I never quite understood why this was, and eventually I forgot all about it.

Until now. I see this look in every image I’ve come across from Panavision’s new 8K DXL camera.

Being the curious sort, I became obsessed with discovering why this look, which I associate with epic films such as Lawrence of Arabia and Doctor Zhivago (both shot with Panavision cameras and lenses), is specific to large format cameras.

Now, after a long discussion with Panavision and Light Iron, I think I have my answers: (1) resolution, and (2) optics.

Part 1 of this series is all about…

RESOLUTION: IT’S DETAIL, NOT SHARPNESS

Years ago, a lens expert told me that there are two things in lens design that are mutually exclusive: contrast and resolution. “You can have more of one than the other,” he told me, “but you can’t have lots of both.” And then he walked away and left me hanging.

Thanks to materials provided by Panavision I think I’ve solved this personal mystery.

Nearly two years ago I attended a press presentation on the new Panavision 8K DXL camera. Michael Cioni of Light Iron spoke of many things that day, but what stuck in my mind is that “resolution is not sharpness.” He showed examples of 8K images that looked stunning, but didn’t look sharp.

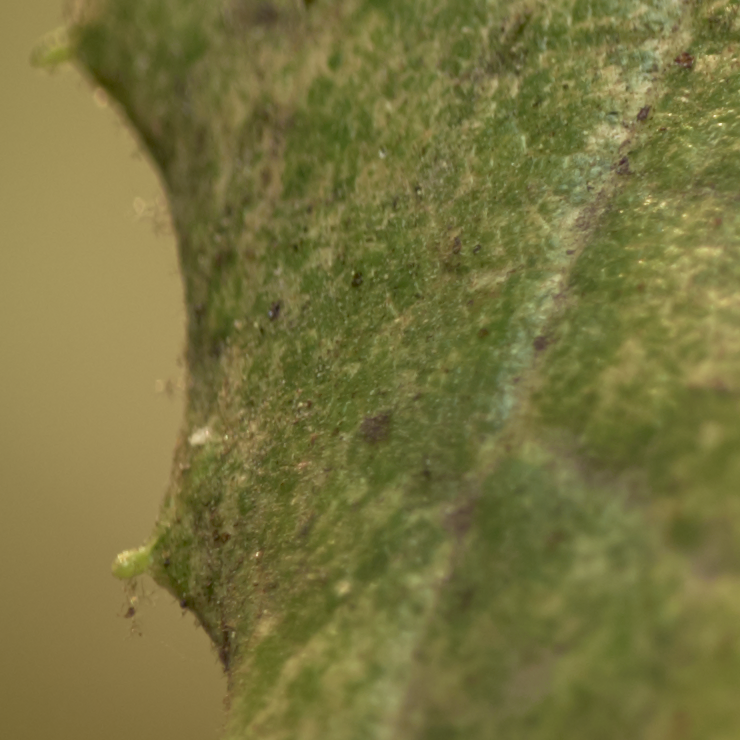

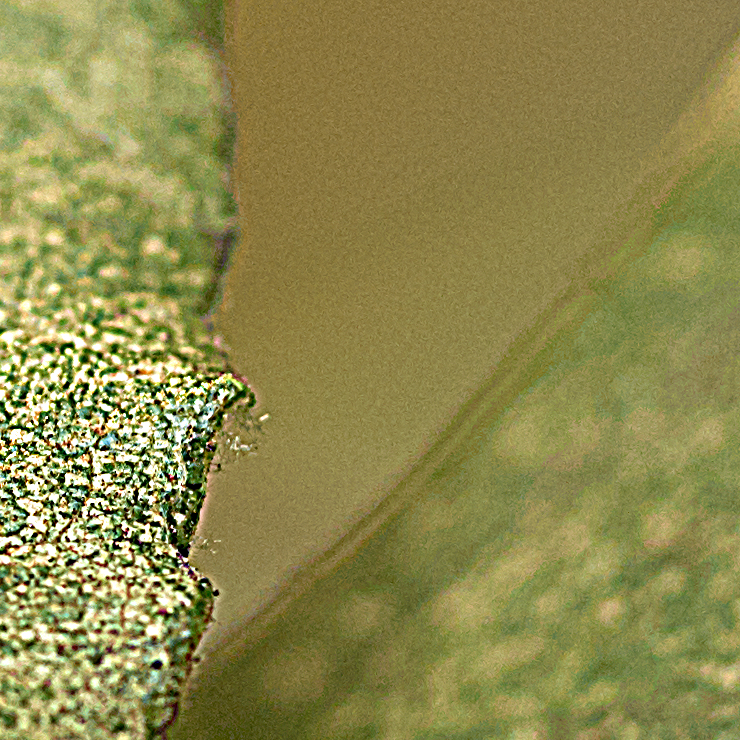

The image above was captured digitally using a Phase One camera with a Leaf digital back. The original file is a 600MB+ file that is too large to host on PVC servers, so I’ve cropped a few 740px wide sections (the maximum width PVC can display within a page) to illustrate that sharpness and resolution are not the same.

Every one of those stills was cropped out of that single large 11K image, but they don’t look sharp at all. And, indeed, any sufficiently high resolution image should not look terribly sharp, because sharpness comes about as the result of contrast. This is one of the better descriptions of sharpness that I’ve found, and the bottom line is that an image with crisp steps between tones or hues is considered “sharp.” That does not mean that high resolution images are automatically sharp, because the more steps a camera and lens system can capture across a transition from bright to dark, or from one color to another, the smoother they will look.

Low resolution images look sharp because they are unable to capture all the nuances of those tone and hue steps, compressing subtle detail beyond the ability of the system to capture into larger, coarser, more abrupt transitions.
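As a rough illustration of that idea, here is a minimal Python sketch that samples the same soft dark-to-bright transition with a few large pixels and with many small ones, then compares the biggest jump between neighbouring samples. The `tanh` edge profile, the 8-unit span, and the function names are all assumptions made up for this example, and the pixels are treated as simple point samples rather than a model of any real sensor.

```python
import math

def smooth_edge(x, width=1.0):
    """Brightness (0..1) of a soft dark-to-bright transition centred at x = 0."""
    return 0.5 * (1.0 + math.tanh(x / width))

def sample_edge(num_samples, span=8.0):
    """Sample the edge at the centre of num_samples equal-width pixels."""
    pitch = span / num_samples
    return [smooth_edge(-span / 2 + (i + 0.5) * pitch) for i in range(num_samples)]

def largest_step(samples):
    """Biggest brightness jump between two neighbouring samples."""
    return max(abs(b - a) for a, b in zip(samples, samples[1:]))

low = sample_edge(4)    # hypothetical low resolution capture
high = sample_edge(64)  # hypothetical high resolution capture

print(f"largest step, 4 samples:  {largest_step(low):.3f}")
print(f"largest step, 64 samples: {largest_step(high):.3f}")
```

With these made-up numbers the coarse version jumps by roughly 0.76 of the full brightness range in a single step, while the fine version never moves more than about 0.06 between neighbours. The transition is identical in both cases; only the coarse sampling makes it read as a hard, "sharp" edge.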

The same is true of lenses. Many are good at what is called “large structure contrast,” which means that they reproduce coarse detail with a lot of contrast but may not capture much fine detail. Tone and hue transitions are abrupt, which results in perceived sharpness. High resolution lenses capture more detail across transitions, with the result that they feel smoother and lower in contrast.

I know of a very expensive line of lenses that have a reputation for extreme sharpness and contrast, and yet if one measures them against a competing brand of lenses that is known for softer images and lower contrast, one will find that the “soft” lenses capture more fine detail than do the “sharp” lenses. There’s nothing wrong with this, as we shouldn’t choose lenses based solely on how they reproduce lines on a chart, but it’s good to understand why one’s tools act the way they do, especially when the reasons are counterintuitive.

Beyond resolution alone, color fidelity is improved because the sensor captures very delicate transitions between hues. The same is true of dynamic range, as capturing more tones in the image results in richer shadows and more detailed highlights. Both of those influence visual “depth cues” that trick our brains into perceiving depth where there isn’t any.

These textures are stunning in 8K, but the background is equally interesting. The subtlety of hues across the out-of-focus background between the leaves is both complex and smooth. It’s unlikely that a lower resolution camera would capture this same richness. That’s important, as many of us choose lenses based on the quality of the out-of-focus image (bokeh). Sensor resolution and image bit depth play an equally important role in reproducing soft backgrounds, although this has not been obvious to many of us as we haven’t worked with moving pictures that approach this kind of color depth and resolution.

What’s more, the smoothness of this image will scale to nearly any resolution. For example, the images above are sections clipped from a 600MB TIFF with 16-bit color, but they’ve been saved off as 16-bit PNG files. Most computer monitors fall into the eight to ten bit range, so the images you’re seeing above are not as they were captured. The fact that they still appear smooth on your eight or ten bit monitor gives you some idea of how much smoother those transitions must be in the original 16-bit file.
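To put rough numbers on the bit depth point, the sketch below counts how many distinct code values a subtle brightness ramp lands on at 8, 10 and 16 bits. The ramp endpoints (40% to 45% brightness) and the function names are assumptions chosen for illustration, and the encoding is treated as linear, which real image files and displays generally are not.

```python
def quantize(value, bits):
    """Map a brightness in 0..1 to the nearest integer code value at this bit depth."""
    levels = (1 << bits) - 1
    return round(value * levels)

def distinct_codes(start, stop, bits, samples=10_000):
    """Count how many different code values a linear ramp from start to stop uses."""
    codes = {
        quantize(start + (stop - start) * i / (samples - 1), bits)
        for i in range(samples)
    }
    return len(codes)

# Hypothetical subtle gradient, such as a soft out-of-focus background.
for bits in (8, 10, 16):
    print(f"{bits:2d}-bit: {distinct_codes(0.40, 0.45, bits)} tonal steps")
```

Under these assumptions the 8-bit version has only about a dozen steps to describe the gradient, while the 16-bit version has a few thousand, which is one way to picture how much headroom the original file has over what a monitor can show.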

Oversampling is always better, as much of the character of an image can be preserved through pixel interpolation as it is scaled downwards. Lower resolution images capture fewer of these qualities, and give them up more easily during the scaling process.
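One of the simplest forms of pixel interpolation is box averaging, where each output pixel is the mean of a block of input pixels. The sketch below is a minimal one-dimensional version with invented sample values; real scalers use more sophisticated filters, so treat this as an assumption-laden toy rather than a description of any particular tool.

```python
def box_downscale(row, factor):
    """Downscale a row of brightness values by averaging each block of `factor` pixels."""
    if len(row) % factor != 0:
        raise ValueError("row length must be a multiple of factor")
    return [sum(row[i:i + factor]) / factor for i in range(0, len(row), factor)]

# Hypothetical high resolution row: a thin highlight one pixel wide on a dark field.
high_res = [0.10, 0.10, 0.10, 0.90, 0.10, 0.10, 0.10, 0.10]

print(box_downscale(high_res, 4))
```

The highlight is narrower than one output pixel, yet it still lifts the first output value above its neighbour instead of vanishing. The fine detail is no longer resolved as a separate feature, but its contribution to the tone of the image survives the scaling.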

I don’t have a good example of a high resolution image vs. a sharp image, so I tried to make one:

I applied a moderate amount of sharpening to the full resolution TIFF file, and then cropped in to see what I’d done:

Contrast has been enhanced, but fine detail has been lost. I know of no way to sharpen this image without losing information. This is true during capture as well: a low resolution image that appears sharp and contrasty may lack detail found in a higher resolution yet softer-looking image. The higher resolution image can always be made to look sharper, but a low resolution image that appears sharp will always lack fine detail.
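One mechanism behind that loss can be sketched in a few lines of Python. This is a toy unsharp mask on a single row of pixels, using a 3-tap box blur and clipping the result to the displayable 0..1 range. It is not the sharpening that was applied to the TIFF, and the pixel values, the blur and the `amount` setting are all assumptions invented for the example.

```python
def box_blur(row):
    """3-tap box blur; edge pixels reuse their own value for the missing neighbour."""
    n = len(row)
    return [(row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)]) / 3 for i in range(n)]

def unsharp_mask(row, amount=1.5):
    """Add back `amount` times the difference from a blurred copy, clipped to 0..1."""
    blurred = box_blur(row)
    return [min(1.0, max(0.0, v + amount * (v - b))) for v, b in zip(row, blurred)]

# Hypothetical row: a bright feature with subtle internal variation (0.85 vs 0.95).
original = [0.10, 0.10, 0.85, 0.95, 0.85, 0.10, 0.10]
sharpened = unsharp_mask(original)

print(original)
print(sharpened)
```

In the sharpened row the edge contrast goes up, but the subtle 0.85 versus 0.95 variation inside the bright feature is pushed past the top of the range and clips to the same value, and the dark pixels beside the edge are driven down to black. Once those values have merged, nothing in the sharpened row says what they used to be.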

There was a time when camera sensors were small enough, and transmission standards were poor enough, that every camera came with a “detail circuit.” This bit of wizardry drew an artificial black line around areas of high contrast to make transitions appear even sharper. This technique can still be used in post to add a sense of sharpness to a soft image, but—once added—it can never be removed.

HOW MUCH RESOLUTION IS ENOUGH?

There’s an argument that 8K cameras are overkill. There are no cinema chains that can project an image at that kind of resolution. Few can project 4K images, and most are limited to 2K at best. There’s an argument that projector optics can degrade the image further, as can image compression artifacts, and some say the human eye can’t see 4K or 2K resolution images at common viewing distances anyway.

This may be technically correct, but it is not the whole story. The eye does not function alone. Images are generated within the brain, which has some built-in image-enhancing features that allow us to see detail beyond what our eyes can capture. Resolution isn’t about creating images that appeal to the eye, but to the entire human visual system.

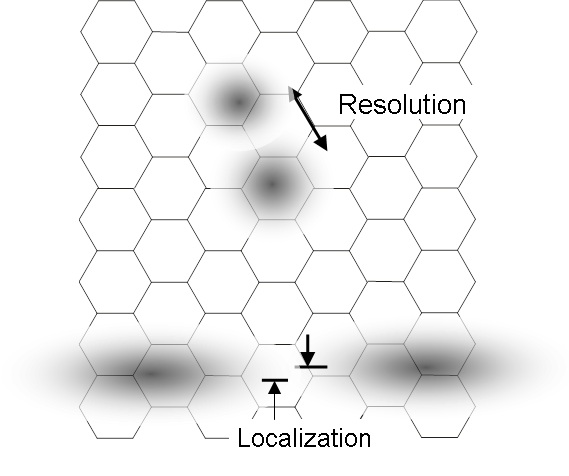

For example:

The two dark spots at the top of the image represent stars in the night sky, as seen by the eye. As long as they are separated by one rod or cone, the brain will see them as two stars instead of one elongated star. That’s resolution.

The lines at the bottom represent vernier acuity, or the ability of the brain to discern a break in a line that falls across multiple rods and cones by detecting where the light centers of the lines are, with precision that is greater than the resolution of the eye itself.

It’s not enough to exceed the human eye’s resolution. You have to exceed the resolution of the human eye in conjunction with the human brain’s visual processing power, which has the ability to resolve details beyond the resolution of the human eye.
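The "light center" idea behind vernier acuity has a simple numerical analogue: if a line is blurred across several detectors, the brightness-weighted average of their positions locates the line far more finely than the detector spacing. The Python sketch below is a toy model under stated assumptions (a Gaussian blur, nine unit-wide detectors, invented function names) and makes no claim about how the visual system actually computes this.

```python
import math

def pixel_responses(line_center, num_pixels=9, blur_sigma=1.0, substeps=50):
    """Light collected by each unit-wide detector from a line blurred into a Gaussian."""
    responses = []
    for p in range(num_pixels):
        total = 0.0
        for s in range(substeps):
            x = p + (s + 0.5) / substeps  # sample points inside detector p
            total += math.exp(-0.5 * ((x - line_center) / blur_sigma) ** 2)
        responses.append(total / substeps)
    return responses

def centroid(responses):
    """Brightness-weighted mean position, using each detector's centre as its coordinate."""
    total = sum(responses)
    return sum((i + 0.5) * r for i, r in enumerate(responses)) / total

# Two line segments offset from each other by a quarter of one detector width.
upper = centroid(pixel_responses(4.50))
lower = centroid(pixel_responses(4.75))

print(f"estimated break in the line: {lower - upper:.3f} detector widths")
```

Even though each detector is a full unit wide, the estimated offset comes out very close to the true quarter-unit break. That is the sense in which a system can resolve a misalignment finer than its own sampling grid.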

RESOLUTION: CAPTURING THE QUALITY OF A LENS

High resolution sensors capture lens characteristics better than lower resolution sensors. If one projects a single point of light through a lens, the result can vary wildly depending on the design and desired qualities of the lens.

This distortion is one of many contributing factors that gives a lens its particular look. This pattern will fall across more photo sites on a high resolution sensor than on a low resolution sensor, so a high resolution sensor will better preserve a lens’s unique characteristics. This is important as the quality of a lens is one of the few things that can’t be manipulated in post.
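The arithmetic behind "more photo sites" is easy to sketch. The Python below estimates how many photosites a blur pattern of a given diameter spans at different horizontal resolutions on the same sensor width. The 40.96 mm width, the 30 micron spot and the function names are assumptions picked for illustration, not measurements of any particular lens or camera.

```python
def photosite_pitch_um(sensor_width_mm, horizontal_photosites):
    """Centre-to-centre photosite spacing in microns."""
    return sensor_width_mm * 1000.0 / horizontal_photosites

def photosites_across(spot_diameter_um, sensor_width_mm, horizontal_photosites):
    """How many photosites the spot spans along one axis."""
    return spot_diameter_um / photosite_pitch_um(sensor_width_mm, horizontal_photosites)

SENSOR_WIDTH_MM = 40.96   # assumed large format sensor width
SPOT_DIAMETER_UM = 30.0   # assumed diameter of the lens's point pattern

for photosites in (8192, 4096, 2048):
    across = photosites_across(SPOT_DIAMETER_UM, SENSOR_WIDTH_MM, photosites)
    print(f"{photosites} wide: ~{across:.1f} across, ~{across ** 2:.0f} in the covering square")
```

With these assumed figures the same pattern spans about six photosites across at 8K, three at 4K and one and a half at 2K. Because the count in two dimensions goes with the square, the 8K sensor has roughly sixteen times as many samples describing the shape of that pattern as the 2K sensor does.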

Thanks to advantages resulting from oversampling, as well as the ability of the human brain to discern detail beyond what is technically available, lens characteristics captured in high resolution should scale effectively to any size of screen and still reveal more of that lens’s personality than an image captured at a lower resolution with the same lens.

There’s more to this story than resolution, however. This is where things really get exciting. Click here for part 2.

Thanks very much to Panavision and Michael Cioni for their assistance in helping me research this article. All images ©2017 by Panavision except where noted. Any errors and omissions in this series are mine alone.
