You may not be interested in Pokémon Go, but I am sure you’ll want to see the video created by Abe Davis showing how much better that game could be if it used Interactive Dynamic Video. There is more to see in the video, too: it touches on sound and vision, and on new ways to create special effects.

Interactive Dynamic Video is, according to Abe Davis, a process that turns videos into interactive animations that users can explore with virtual forces that they control. It may not, at this stage, sound like something very interesting for many filmmakers, but believe me, it touches so many things related to sound and vision that you may, as I did, end up browsing through the different videos and reading some of the papers published by Abe Davis and his friends on this exciting adventure.

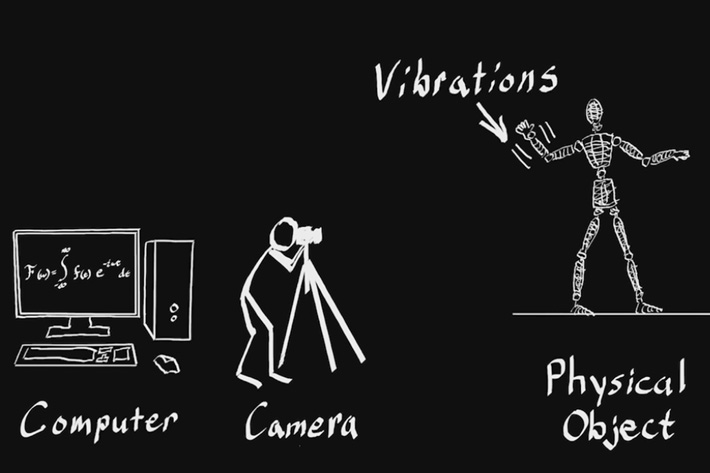

Although Abe Davis has explored multiple areas, as the research page on his website shows, the line leading to Interactive Dynamic Video apparently started with The Visual Microphone: Passive Recovery of Sound from Video, a project presented at SIGGRAPH 2014 and resulting from a collaboration between Abe Davis, Michael Rubinstein, Neal Wadhwa, Gautham J. Mysore, Frédo Durand, and William T. Freeman. That project explores the potential to use video not just to see, but to listen to the world.

Sound and vision are two elements constantly present in the projects Abe Davis is involved with. A computer science PhD student at MIT working in computer graphics, computational photography, and computer vision, Davis will graduate in September and move to Stanford University for his post-doctorate. In his own words, he has done “a spattering of research on different topics. I’m probably best known for my work analyzing small vibrations in video”.

In May 2015 Abe Davis was a guest speaker at a TED conference, talking about “New video technology that reveals an object’s hidden properties”. Subtle motion happens around us all the time, including tiny vibrations caused by sound. New technology shows that we can pick up on these vibrations and actually re-create sound and conversations just from a video of a seemingly still object. In the talk, Abe Davis takes it one step further: watch him demo software that lets anyone interact with these hidden properties, just from a simple video. And yes, I do suggest you take the 18 minutes to watch the video.

The TED talk was just the start: the team has taken the exploration further, and this August 2016 two videos are available, Pokémon GO and Interactive Dynamic Video and the earlier Interactive Dynamic Video. Even if you do not care about Pokémon Go, do watch the first video for the technology it reveals. But do not miss the other one, Interactive Dynamic Video, which sums up the updated results of the team’s investigation.

Even if regular use of these technologies may still be a long way off, the idea is interesting. The abstract of the research paper states that “we present algorithms for extracting an image-space representation of object structure from video and using it to synthesize physically plausible animations of objects responding to new, previously unseen forces. Our representation of structure is derived from an image-space analysis of modal object deformation: projections of an object’s resonant modes are recovered from the temporal spectra of optical flow in a video, and used as a basis for the image-space simulation of object dynamics. We describe how to extract this basis from video, and show that it can be used to create physically plausible animations of objects without any knowledge of scene geometry or material properties.”
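To make the phrase “recovered from the temporal spectra of optical flow” a little more concrete, here is a minimal Python sketch of the general idea. It is not the team’s implementation: it assumes the motion of a few image points has already been measured per frame (the `displacement` array is a synthetic stand-in for real optical flow), and the function name `dominant_mode_frequencies` and its peak-picking rule are my own illustrative choices. The sketch takes a Fourier transform over time at every point, looks for frequencies where the whole object moves strongly, and reads the spatial pattern at those frequencies as a rough “mode shape”.

```python
import numpy as np


def dominant_mode_frequencies(displacement, fps, num_modes=2):
    """Pick candidate resonant modes from per-point motion signals.

    displacement: array of shape (frames, points), a hypothetical stand-in
                  for optical flow measured at a few image locations.
    fps:          frame rate of the video in frames per second.
    Returns (frequencies in Hz, complex spectra at those frequencies).
    """
    num_frames = displacement.shape[0]
    # Remove the constant offset so the zero-frequency bin does not dominate.
    centered = displacement - displacement.mean(axis=0)
    # Temporal spectrum at every point: shape (frames // 2 + 1, points).
    spectra = np.fft.rfft(centered, axis=0)
    freqs = np.fft.rfftfreq(num_frames, d=1.0 / fps)
    # Total motion power per frequency, summed over all points.
    power = (np.abs(spectra) ** 2).sum(axis=1)
    power[0] = 0.0
    # Keep local maxima of the power spectrum as candidate modes.
    is_peak = np.zeros(power.shape, dtype=bool)
    is_peak[1:-1] = (power[1:-1] > power[:-2]) & (power[1:-1] >= power[2:])
    peak_idx = np.flatnonzero(is_peak)
    strongest = peak_idx[np.argsort(power[peak_idx])[::-1][:num_modes]]
    strongest = np.sort(strongest)
    return freqs[strongest], spectra[strongest]


# Synthetic example: four points vibrating in two modes (3 Hz and 8 Hz).
fps = 60
t = np.arange(300) / fps
shape_a = np.array([1.0, 0.5, 0.2, 0.0])
shape_b = np.array([0.0, 0.15, -0.3, 0.5])
displacement = (np.sin(2 * np.pi * 3 * t)[:, None] * shape_a
                + np.sin(2 * np.pi * 8 * t)[:, None] * shape_b)

mode_freqs, mode_shapes = dominant_mode_frequencies(displacement, fps)
print(mode_freqs)
```

In the real system the input would be dense motion estimated from the video itself, and the recovered modes would then drive a simulation of how the object responds to new forces; this toy version only illustrates the “find the resonant frequencies and their spatial patterns” step.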

One of the real-world applications, and something you may be familiar with, relates to special effects. According to Abe Davis, “in film special effects, where objects often need to respond to virtual forces, it is common to avoid modeling the dynamics of real objects by compositing human performances into virtual environments. Performers act in front of a green screen, and their performance is later composited with computer-generated objects that are easy to simulate. This approach can produce compelling results, but requires considerable effort: virtual objects must be modeled, their lighting and appearance made consistent with any real footage being used, and their dynamics synchronized with a live performance. Our work addresses many of these challenges by making it possible to apply virtual forces directly to objects as they appear in video.”

The paper describing this research is titled Image-Space Modal Bases for Plausible Manipulation of Objects in Video, and you can read and download it by following the link. If you’re looking for other ways to create a variety of visual effects through what the team considers to be a low-cost process, this can be the start of a new adventure. As Abe Davis writes on the website he created to show the technology, this work is part of his PhD dissertation at MIT. It is academic research: there is no commercial product at this time, though the technology is patented through MIT. You may contact Abe Davis, Justin G. Chen, or Neal Wadhwa (or all three in one email) about licensing through MIT.