From Super-8 films and VHS restoration to digital humans and the future of virtual production, there are more than 20 conferences related to Media & Entertainment to follow at GTC Digital.

Among the many conferences planned for this year’s edition of NVIDIA’s GPU Technology Conference, one series is dedicated to the Media & Entertainment industry. While a few of them are highly technical, some of the more than 20 conferences offer a perspective on how the use of computers and, more recently, Artificial Intelligence has evolved across the different areas of M&E.

GTC San Jose 2020 was transformed into an exclusively online event this year, rather than an in-person one, due to the Covid-19 situation. While this has changed plans for many people, sharing the conferences online means they will be available to more people around the world. The conferences included in GTC Digital cover everything computer and graphics related, but some of the sessions relate directly to M&E, and some of those are presented here.

Under the title AI-Enabled Live 3D Video, Manisha Srinivasan, Product Manager, and Siva Narayanan, CEO, both of Minmini Corporation, invite those interested to imagine watching an event (game, wedding, etc.) live from home on a computer or TV, and being able to “dynamically change the field of view and experience the event from multiple angles” for a powerful experience.

In-Camera VFX with Real-time workflows

According to them, “Minmini’s Felinity technology allows us to deploy edge AI devices in a scene and extract interesting features from the cameras (people, ball, vehicles, etc.), estimate their 3D location in space, and reconstruct the scene on the remote viewer’s device, resulting in a live 3D experience.” The poster from the company “describes the components of our 3D visualization technology using an example from the sports industry. We also discuss the current levels of performance and the fields in which products built with the technology could be used.” This on-demand session will be available beginning March 25.

Creating In-Camera VFX With Real-Time Workflows covers advancements in “in-camera visual effects” and how this technique is changing the film and TV industry. David Morin, Industry Management, Media & Entertainment, Epic Games, will show how “with software developments in real-time game engines, combined with hardware developments in GPUs and on-set video equipment, filmmakers can now capture final-pixel visual effects while still on set, enabling new levels of creative collaboration and efficiency during principal photography.”

David Morin says that, “these new developments allow changes to digital scenes, even those at final-pixel quality, to be seen instantly on high-resolution LED walls — an exponential degree of time savings over a traditional CG rendering workflow. This is crucial, as there is a huge demand for more original film and TV content, and studios must find a way to efficiently scale production and post-production while maintaining high quality and creative intent.”

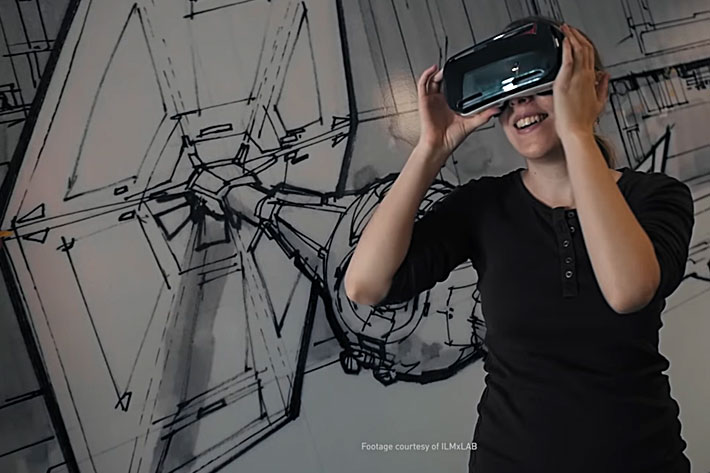

ILMxLAB’s StoryLIVING

In the session From StoryTELLING to StoryLIVING: My Journey to a Galaxy Far, Far Away, Vicki Dobbs Beck, executive in charge of Lucasfilm’s Immersive Entertainment Studio, ILMxLAB, raises questions and shares insights that highlight her 25-plus years with Lucasfilm—most notably the desire to create connected experiences that have the ability to transform places and spaces as we move from storyTELLING to the idea of storyLIVING.

Under Vicki’s leadership, ILMxLAB created the ground-breaking VR installation, Carne y Arena, which was the vision of Alejandro Iñárritu in association with Legendary Entertainment and Fondazione Prada. Carne y Arena was chosen as the first-ever VR Official Selection at the Cannes Film Festival (2017) and was awarded a special Oscar by the Academy of Motion Picture Arts and Sciences, “in recognition of a visionary and powerful experience in storytelling.”

Deep learning applied to live TV production

Implementing AI-Powered Semantic Character Recognition in Motor Racing Sports is a long title for what David D. Albarracín Molina, Lead Research Engineer, and Jesús Hormigo, CTO, both from Virtually Live, share in this recorded talk: a use case of deep learning for computer vision applied to live TV production of racing events. Currently, the system can identify, point to, and label racers in motor sports footage in a fully autonomous way. Its first real-world application has been in live broadcasts of Formula E events.

The authors explain the challenges they found and solved on the path from polishing a business idea to delivering a working product to the client, Formula E TV, in the first race of the season. They also talk about using TensorFlow to build a state-of-the-art neural network, the particular training pipeline designed for the case, and other aspects related to optimizing their solution. A 10-minute Q&A about the technologies and the product itself closes the session.

Pixar’s RenderMan and Digital Humans

Next-Gen Rendering at Pixar is the theme of another GTC Digital presentation. Max Liani, Senior Lead Engineer – RenderMan, Pixar, talks about the continuing journey to develop RenderMan XPU, “our next-generation photorealistic production rendering technology to deliver the animation and film visual effects of tomorrow. We’ll update you on our techniques leveraging heterogeneous compute across CPUs and GPUs, how we adopted the unique features offered by NVIDIA RTX hardware, and how we combine that with our own solutions to make RenderMan the path tracer of choice for so many productions.”

Photorealistic, Real-Time, Digital Humans: From Our TED Talk to Now is a presentation by Doug Roble, Senior Director of Software R&D, Digital Domain. Back in 2019, during a TED conference, Doug Roble gave a live presentation on stage wearing a motion-capture suit. In real time, a nearly photorealistic version of Doug did exactly what Doug did on stage. The 12-minute example showed how creating a real-time human requires solving many problems, including replicating all the motion of the performer on the digital character, rendering the character so that it looks realistic, and making sure that the character moves like the person.

One year later, at GTC Digital, Roble talks about the experience gained and how “since then, we’ve been working on increasing the fidelity of the rendering of the character, creating the ability to easily have someone else drive a character, creating an autonomous character that can drive itself, and creating brand new ways to render the character for ultimate fidelity. We’ll discuss the challenges of DigiDoug for the TED talk and two DigiDougs being driven by Doug and Kenzie for Real Time Live at SIGGRAPH. We’ll touch on some of the latest technology, too.”

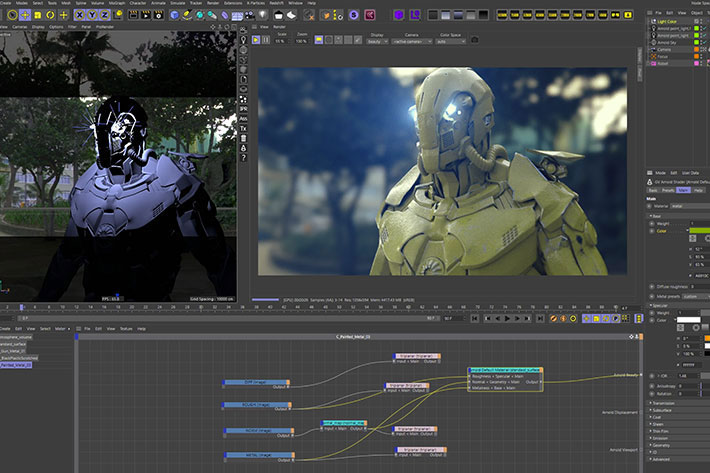

Arnold, a renderer for visual effects in film

Computers and AI serve other, very different purposes, too. Super High-Quality Restoration for Super-8 Films and VHS Videos Using Deep Learning is one such example. The poster at GTC Digital comes from ARTEONC and is presented by a researcher, Nilton Guedes. It reveals a process that implements a very high-quality restoration solution for image, film, and video content stored on legacy media such as Super-8mm or 16mm movies, VHS, Betamax, or other video tapes, using a multi-stage deep learning network.

According to the authors, the proposal processes the original video input in four steps to achieve the high-quality result: de-noising, stabilization, frame rate adjustment, and color/resolution enhancement.

The list of conferences at GTC Digital does not stop there, and there are many other technical presentations, like the one from Autodesk under the title Bringing the Arnold Renderer to the GPU, presented by Adrien Herubel, Senior Principal Software Engineer at Autodesk, or Production-Quality, Final-Frame Rendering on the GPU, from Redshift, presented by Robert Slater, Co-Founder/Fellow, Redshift Rendering Technologies.

The Lion King and virtual production

In the first of these conferences, the Autodesk session, you’ll learn all about Arnold, Autodesk’s Academy Award-winning production renderer for visual effects in film and feature animation, and discover how Arnold was instrumental in the shift toward physically-based light transport simulation in production rendering. The session also explores Arnold’s ability to efficiently produce artifact-free images of dynamic scenes with massive complexity, and shares an exclusive peek at the latest developments in Arnold GPU, accelerated by NVIDIA OptiX.

The second conference looks at the latest features of Redshift, an NVIDIA GPU-accelerated renderer that is redefining the industry’s perception of GPU final-frame rendering. The talk is aimed at industry professionals and software developers who want to learn more about GPU-accelerated, production-quality rendering.

Among all these conferences from GTC Digital, one will attract the attention of many because of what it represents for the future of the film industry. The Lion King: Reinventing the Future of Virtual Production brings to GTC Digital the experience filmmakers had while shooting the Disney classic, a story that ProVideo Coalition has shared with its readers before.

Hours of learning, one click away

Presented by Academy Award-winning virtual production supervisor Ben Grossmann, Magnopus CEO, the conference takes us behind the scenes of merging classic filmmaking techniques with fully computer-generated productions, like Disney’s “The Lion King,” by employing virtual reality and real-time game engines. There’ll be a Q&A session at the end.

With this note on the GTC Digital conferences, ProVideo Coalition hopes to encourage readers to explore some of the developments related to the M&E industry. GTC Digital is an effort to reinvent, as NVIDIA says, “The Art And Science Of Entertainment”. The online event shines the spotlight on the latest advances enabling the future of film and television production, from previsualization and virtual production through to physically-based rendering and 8K HDR video post-production.

The latest storytelling tools, including real-time game engines, artificial intelligence, and photorealistic ray tracing, all powered by NVIDIA RTX technology, along with the training, research, insights and direct access to the brilliant minds of NVIDIA’s GPU Technology Conference, are just one click away, online. If you’re staying home, because public health is more important than anything else, this is a good way to fill the time learning about things that may change your life when we all get back to “life as normal”.
