AI isn’t taking our jobs just yet; instead, it’s helping us do them more efficiently. Jumper is a tool that analyzes all your media locally and then lets you search through it in a human-like way. Ask to see all the clips with water, or moss, or beer, or where a particular word is spoken, and it can find them, almost like magic. What Photos can do on your iPhone, Jumper does for your videos. But let’s take this a step at a time.

Analysis

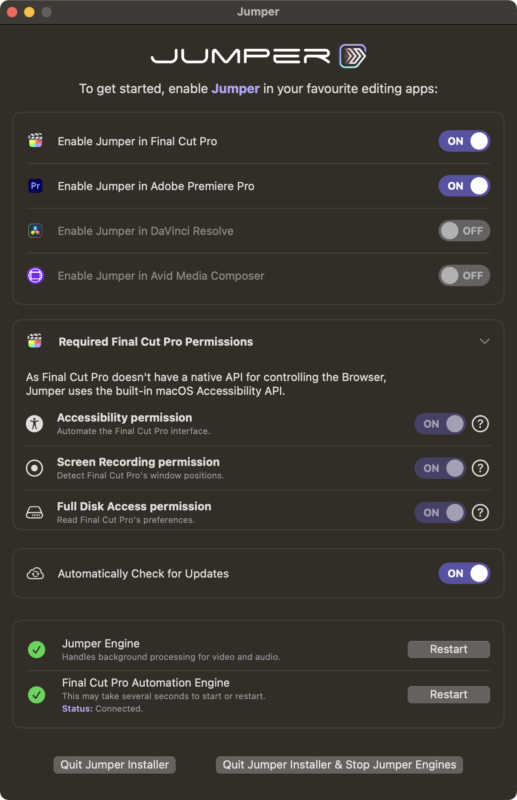

Jumper currently works with Final Cut Pro and Premiere Pro, with support for DaVinci Resolve and Avid Media Composer in the works, and it runs on Windows as well as macOS. I tested it on a Mac, where a companion app manages installation, and extensions integrate Jumper with Final Cut Pro and Premiere Pro.

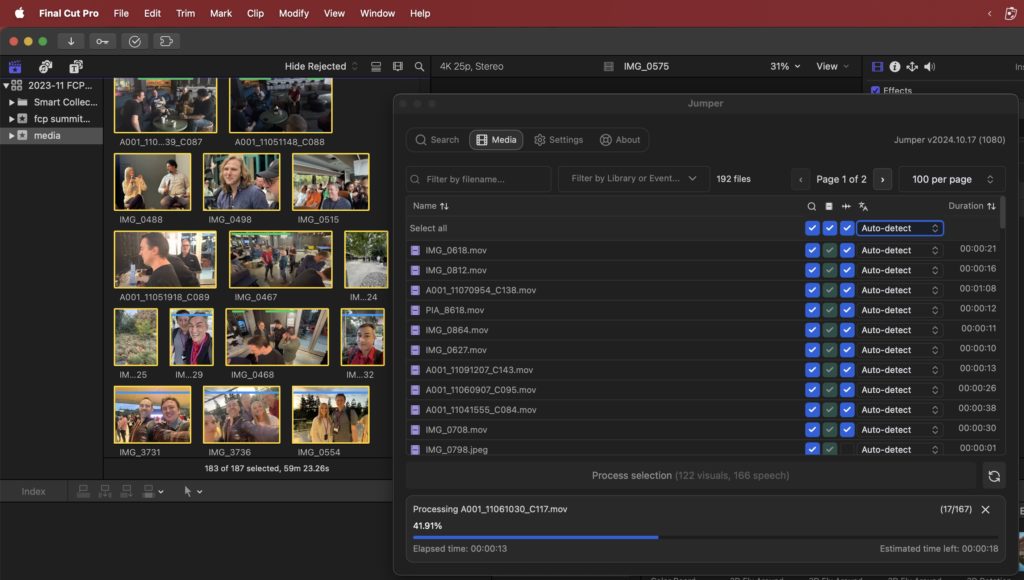

In FCP, you’ll use a workflow extension, available from the fourth icon after the window controls in the top left; in Premiere Pro, you’ll use an extension, available from Window > Extensions. Jumper behaves similarly in both host apps, with one difference in setup: Premiere Pro lets you access any of the clips in your currently open projects, while in FCP you’ll need to drag your library to the Jumper workflow extension manually to get started. Either way, clips must be analyzed for visual or audio content, so click the Media tab, choose some or all of your clips, and set Jumper to work.

Analysis can take a while if you give it a lot to do. On a modern MacBook Pro it runs 15x faster than real-time playback, so an hour of footage takes roughly four minutes, but a decent-sized shoot will still need you to walk away and let the fans run. Once the scan is complete, Jumper has built a moderately sized cache, which makes all future searches nearly instant.

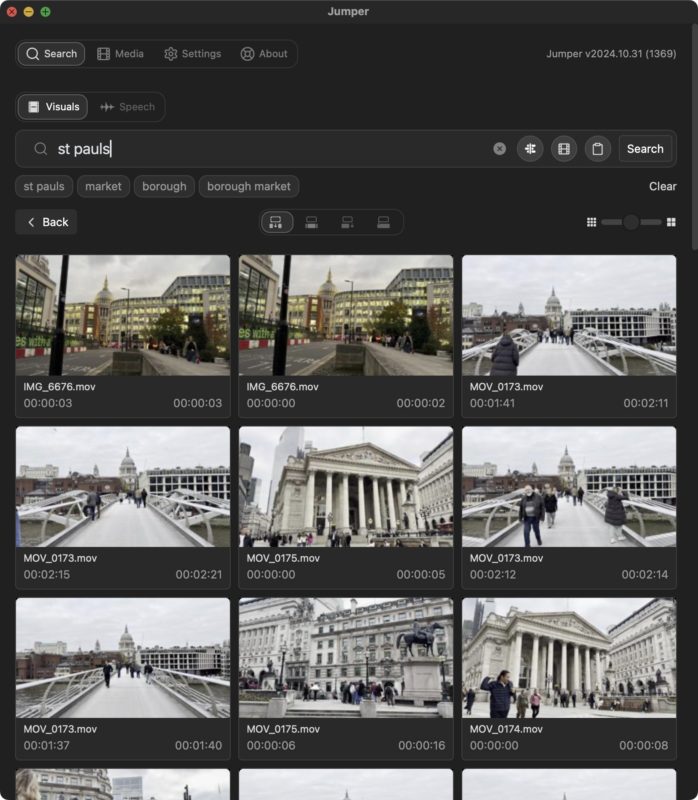

Searching for visuals

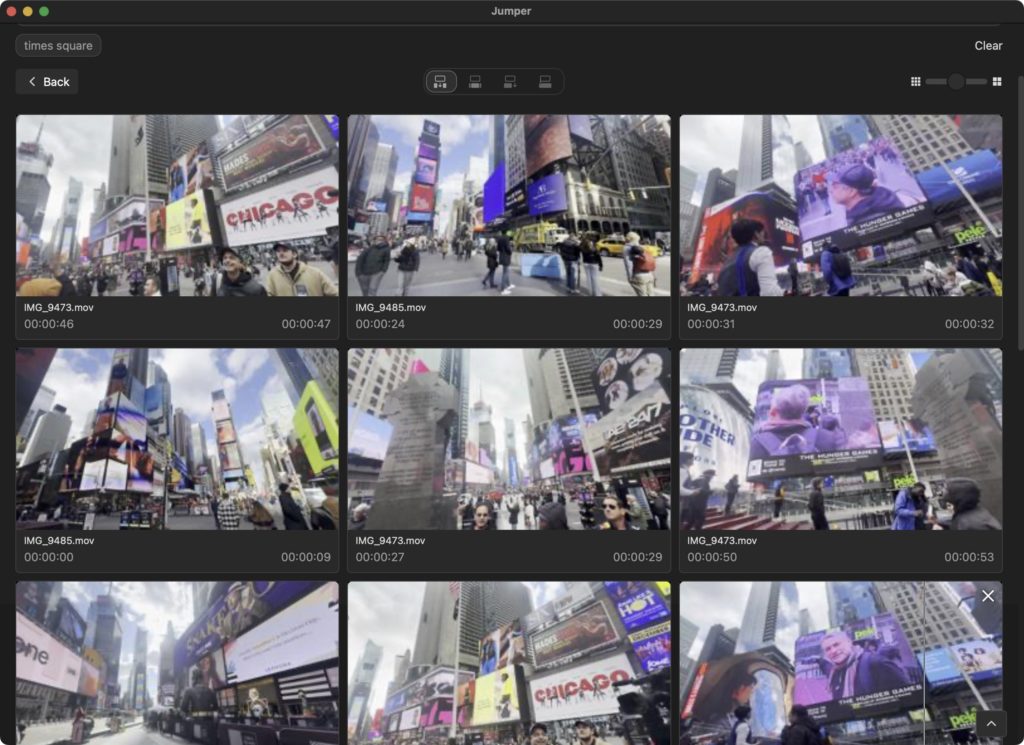

What’s it able to look for? You can search for visuals or for speech, and both work well. Jumper can successfully find just about anything in a shot — cars, a ball, water, books — and because it’s a fuzzy search, you don’t have to be too specific in your wording. You can look for objects, or times of day, or specific locations like Times Square.

The most powerful thing about the visual search is that it finds things you’d never think to categorize: things you might have glimpsed once in a quick pass through your clips but forgot to note down. Jumper surfaces them without any manual logging, finding the parts of any clips that roughly match your search.

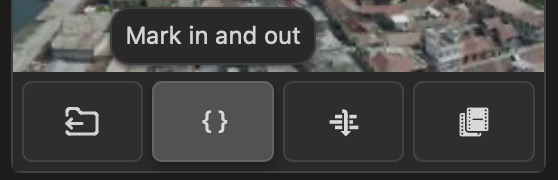

How do you use what you’ve found? Hover over the clip to reveal four buttons at its lower edge, allowing you to find the clip in the NLE, to mark the specific In and Out on that clip, to add it to your timeline, or to find similar clips. That last option is very handy when a search returns somewhat vague results — find something close to what you want, then find similar clips.

However, it’s important to realize that no matter what you search for, Jumper will probably find something, even if there’s nothing there to find. The first results will be the clips that best match your search, but if you scroll to the end of the results and click “Show More”, you’ll find clips that probably don’t match the search terms well. And that’s OK! If the clip is there, you’ll see it; if it’s not, you’ll be shown something else. As with any search, if you ask sensible questions, you can expect sensible results.

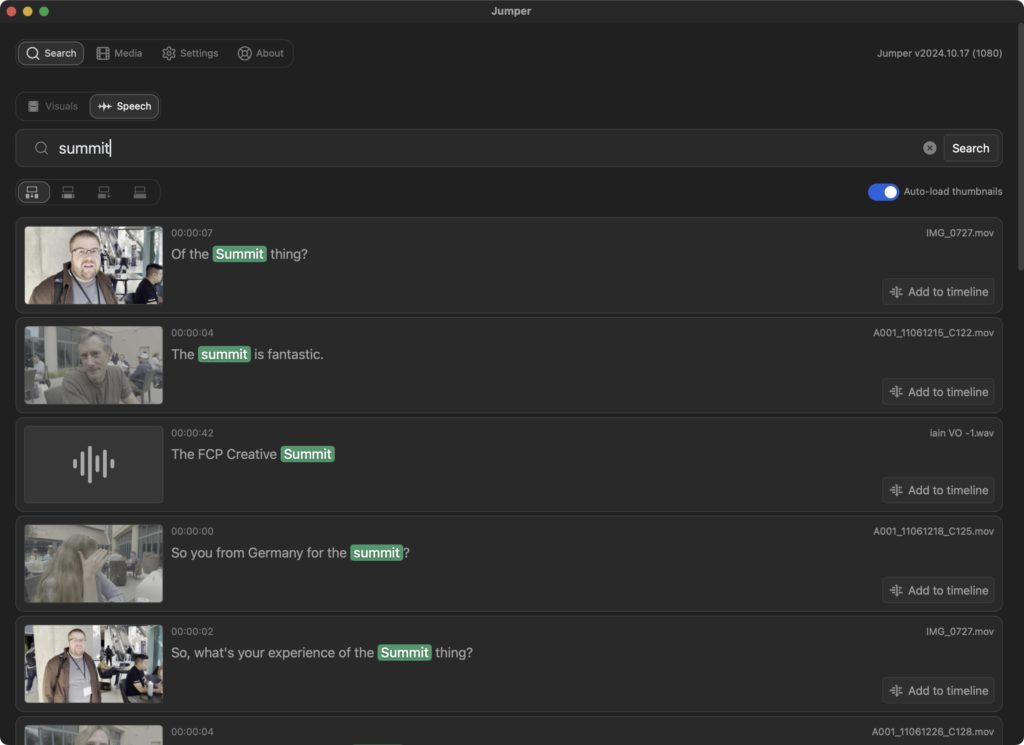

Searching for speech

While this isn’t a replacement for transcription, Jumper will indeed find words that have been said in your video clips or voice-over audio recordings. You can search for words, parts of words or phrases, and they’ll be highlighted in a detected sentence.

I tested this against a long podcast recording, checking a transcript of the podcast to make sure the same phrases were findable in Jumper, and happily, they were. As with visual searches, the first results will be the best, but don’t look too far down the list, or you’ll start finding false positives. Captioning support is already common across NLEs, so I suspect this feature may not be used as widely as visual search, but it’s still useful, especially for those who would normally only transcribe a finished timeline.

Is this like a local version of Strada?

At this point, you may be thinking that it sounds a little like the upcoming Strada (which we will review when it’s released early in 2025), and while there are some similarities, there are significant differences too. First, Strada aims to build a selection of AI-based tools in the cloud, not just the analysis that Jumper offers. Second, Strada (at least right now) is explicit in its categorizations, showing you exactly which keywords it has assigned to specific parts of your clips. Jumper never does that: you must search first, and it shows you the best matches in a fuzzier, looser way.

Third, and probably most important, Strada runs in the cloud on cloud-based media and, at least for now, is very much geared toward team-based workflows. Jumper is a one-person, local solution.

Conclusion

AI-based analysis is very useful indeed, and it will be most valuable to those with huge footage collections. If you’re an editor with a large collection of b-roll that you spend a lot of time trawling through, or a documentary maker with weeks’ worth of clips you’ve been meaning to watch and log, this could be life-changing. Jumper is going to catalog your clips better, and much more quickly, than you can.

The fact that Jumper can be purchased outright and then run fully offline (after license activation) will win it fans in some circles. Those same points will give others pause: if you’ve moved to a cloud-based workflow, you’ll have to download your clips and store them on a local drive before Jumper can analyze them. In that case, Strada might be a better fit, so check out its beta now, and look for our review when it’s released.

For anyone who stores their media locally and works alone, Jumper is worth a serious look. Grab a free trial from getjumper.io.

Pricing for one NLE: $29/month, $149/year, or $249 lifetime (perpetual).
