PVC: So how did you both get involved in this film?

Merrick: Our film tells the story of a father (played by John Cho) who, when his daughter goes missing, decides to look in the one place nobody else has looked: her computer. The entire film unfolds on his computer screen and other screens. I got onto the film because I met the director, Aneesh, through USC. He had just left his job on the creative film team at Google, and he rang me up with a movie possibility.

Johnson: I went to USC with Aneesh; I knew him from classes, and we were friends. Then he went off to New York to work at Google, and I hadn’t seen him in years. Then I got a call from him asking me to edit “Search” and to meet up with Will. Will and I didn’t know each other previously, so we met up, and we decided it would work: we could work together.

Merrick: Aneesh was hired at Google after directing a spec spot for Google Glass, and they brought him on to the creative film team. I had edited the spot that got him that job.

Johnson: I think there’s something where you want to find people that are ambitious and are going to put everything they have into a project. I think Aneesh felt comfortable that we’re creative people, Will and I, and we are also hard workers. I think there was this understanding that we were going to go 100 percent the whole time.

PVC: How do co-editors work on a project like this? How does that collaboration work?

Johnson: It’s perfect for this project. There was actually very little discussion about our dynamic; it just kind of happened organically. This is such an unusual film; it’s essentially an animated film. The script was incredible, but it was unusual because it’s told entirely on screens. You couldn’t quite break it down into scenes the way you traditionally would, so we just drew lines in the script and broke it into 26 sections. We lettered the sections alphabetically: Will took section A, I took section B, and we just moved that way through the movie. Then, when we started doing second passes, we swapped, so that we were constantly refining each other’s work.

Merrick: This happened enough times that we really can’t even remember who started what anymore. We both worked on every sequence.

Johnson: Yeah. It got to the point where it’s like, “L has a bunch of notes but Q really needs some work.” Like, “I have Q open, I’m going to take Q, you take L.”

PVC: Were you guys editing side by side, in the same location?

Merrick: We were working pretty much in the same room.

Johnson: There was a door you could close, so it was actually perfect. What would happen is Sev, Aneesh and Natalie would come in, and they might be doing notes with Will, and meanwhile I would be implementing some notes in the other room. Then they’d come over and work with me.

PVC: So the film takes place entirely on computer screens. Can you explain that?

Merrick: Telling a movie through a computer screen is not an entirely new idea. There have been movies like “Unfriended” by Bazelevs, the same company that did ours. Those films tend to be told in real time and in wides of the screen, kind of “Paranormal Activity” style. Early movies were wide shots, like theatrical vaudeville acts, and screen movies have been in that same place. With our film, we wanted to go in and discover what coverage is in the computer world. For instance, we’ll have a FaceTime call where, say, John Cho is talking to his daughter, and then we cut down to her, sort of like a reverse.

Merrick: At first, we didn’t think it would work. We thought it would be against the rules, but we found out we could make a shot reverse work by punching into the small screen where you see yourself and then punching back out to the wide.

Johnson: It was a lot like DP’ing, and we actually have a “directors of screen photography” credit on the movie.

PVC: Is that a thing?

Johnson: It is now. It really was like shooting a movie. It’s challenging because, unlike a three-dimensional world where you can get off-axis and do your coverage that way, we were all on a two-dimensional plane. So the tricky part was that shot sizes had to be significantly different when you were cutting, which gets really hard when you’ve got camera movements happening and you might find yourself in a little middle area.

Merrick: One of the most mind-blowing things we discovered was that in some cases we could cut from one punched-in area of the computer screen to another punched-in shot without going back out to a wide. You don’t think it will work, but you just try it, and you discover you have almost as much film language in there as you do in real life.

Johnson: “Unfriended” is really effective; it’s wide and real-time, but we wanted to punch in. We had seen punch-ins in a “Modern Family” episode and in “Noah,” which is a great short film. “Nerve” did this too, but none of those would cut.

Merrick: They do a fast zoom. “Voosh, voosh, voosh.”

PVC: They were doing that to try to retain the geometry of the scene for the audience?

Johnson: Exactly.

Merrick: Our first cut was like that. It was actually Sev who came in and watched our first cut and told us, “Guys, what are you doing? Just cut.” We were like, “Whoa,” when we started trying it, and it worked.

PVC: So as a DP, one of the tools I can use for covering a conversation is the OTS, the over-the-shoulder shot. It’s a simple way of establishing where each person in the scene is in relation to the other. But you don’t have an OTS on a flat 2D screen.

Merrick: Even when we’re almost showing a video full bleed, we usually show the edges of the video. We’ll frame with edges of browsers or videos.

Johnson: We felt like full bleed was kind of visually uninteresting and just didn’t keep you grounded in what you’re looking at. In FaceTime calls, it’s nice because you have the little face at the bottom, so when you’re cutting to the one shot, you’ve got both people.

Merrick: It’s like a wide. That wasn’t fun to edit.

Johnson: Those are extremely difficult because unlike a shot reverse, you always have both shots playing at the same time. They have to be timed up exactly right.

PVC: Were those conversations shot in real time with both subjects? Or were they shot separately?

Johnson: They were, in most cases. I think it was helpful for the actors and for pacing, but what we found was that even in those situations, the pacing was off a little bit.

Merrick: You always want to make little tweaks while you’re cutting, and at first we didn’t have the freedom to do that, until we came up with the idea of cutting. No matter how good your actors are, you do want to play with it.

PVC: Do you do any morph cuts or anything like that?

Johnson: We did some morph cuts. We also used one trick that gave us a lot of, I don’t know, consternation …

Merrick: We were very nervous about it, but we tried “glitching” as a transition. We tested it on a lot of people and we found you can glitch between multiple shots if you’re very subtle about it.

PVC: What do you mean?

Merrick: I almost feel weird even talking to you about it. Occasionally the picture freezes up for just a moment, and it feels organic. We data-moshed it slightly, just slightly, nothing crazy.

Johnson: We were watching it and we’re like, “This is super obvious.” I kept telling the producers, “Please ask this in our test screenings.” They were like, “It doesn’t bother anyone.”

Merrick: Everyone in the test screenings was like, “What glitches?”

Johnson: We actually ended up compressing it and like pumping out super tiny H.264s to get that kind of blockiness.

Merrick: Yeah, we’d export an H.264 at like a .5 bit rate, then export another one from that at like a .2 bit rate. We had used glitch software, but it was too obvious.
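The two-pass trick Merrick describes is ordinary generation loss: each low-bitrate encode throws away detail, and encoding the already-degraded file again compounds the blocking. The interview doesn’t say which encoder was used or what units “.5” and “.2” refer to, so this is a hypothetical reconstruction that assumes ffmpeg with libx264 and reads those figures as 0.5 and 0.2 Mbit/s; the file names are placeholders. It only builds the command lines, so it runs without ffmpeg installed.

```python
"""Sketch: two back-to-back low-bitrate H.264 encodes to exaggerate blockiness.

Assumptions (not from the interview): ffmpeg + libx264, bitrates read as
0.5 and 0.2 Mbit/s, hypothetical file names.
"""
import shlex


def low_bitrate_pass(src: str, dst: str, mbps: float) -> list[str]:
    """Return an ffmpeg argument list that encodes src to dst at a fixed bitrate."""
    kbps = int(round(mbps * 1000))
    return [
        "ffmpeg", "-y",          # overwrite dst if it already exists
        "-i", src,               # input clip
        "-c:v", "libx264",       # H.264 video encoder
        "-b:v", f"{kbps}k",      # target video bitrate
        "-an",                   # drop audio; only the picture matters here
        dst,
    ]


def double_crush(src: str, mid: str, dst: str,
                 first_mbps: float = 0.5,
                 second_mbps: float = 0.2) -> list[list[str]]:
    """Two passes: the second one re-compresses the output of the first."""
    return [
        low_bitrate_pass(src, mid, first_mbps),
        low_bitrate_pass(mid, dst, second_mbps),
    ]


if __name__ == "__main__":
    for cmd in double_crush("glitch_src.mov", "pass1.mp4", "pass2.mp4"):
        print(shlex.join(cmd))
```

Running the script prints both commands; run in order in a shell with ffmpeg on the PATH, the second pass degrades the first pass’s output rather than the clean source, which is what stacks up the artifacts.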

PVC: Okay, so on the live action thing, I’m just trying to wrap my head around this. When they filmed the live action footage for these conversations on screen, did they film it with the actors facing each other? Or were the cameras in separate rooms?

Johnson: Well, the system that they rigged, we had nothing to do with. It was our DP, Juan Sebastian Baron. It’s like genius. I think Bazelevs has kind of slowly created this process.

Merrick: Sebastian rigged a system where basically the actors could look at each other on a FaceTime call while a GoPro did the actual filming. They were getting a live feed from each other so they could perform, but they were in separate spaces.

Johnson: We actually created a full animatic seven weeks before they shot, and we pumped out wides for every scene with information like “Here’s the Finder window. Here’s where John Cho will be.” Then the script supervisor was able to show the actors, “This is where your eye line is.” The animatic helped us a lot, because the actors’ eye lines were, for the most part, pretty accurate.

PVC: This sounds like an incredibly post-heavy film. Talk to me about some of the tools you used.

Johnson: Our overall process was in three stages essentially. There was pre-post-production, post-production and post-post-production.

PVC: Oh god.

Johnson: We had rehearsals with Aneesh before we actually got in the room. Then, once we got in the room, we spent seven weeks with Sev and Aneesh creating an animatic that was then used as a model for shooting. We were using Adobe Premiere Pro for everything at this point.

PVC: For the animatics? How do you do that in Premiere Pro?

Johnson: What we were doing was, we were using ScreenFlow. None of this movie ultimately was screen recorded but we were using ScreenFlow and taking screenshots, and then bringing those assets into Premiere Pro.

Merrick: There’s like no other place where you start with a totally blank slate as an editor. Like we didn’t even have storyboard slides.

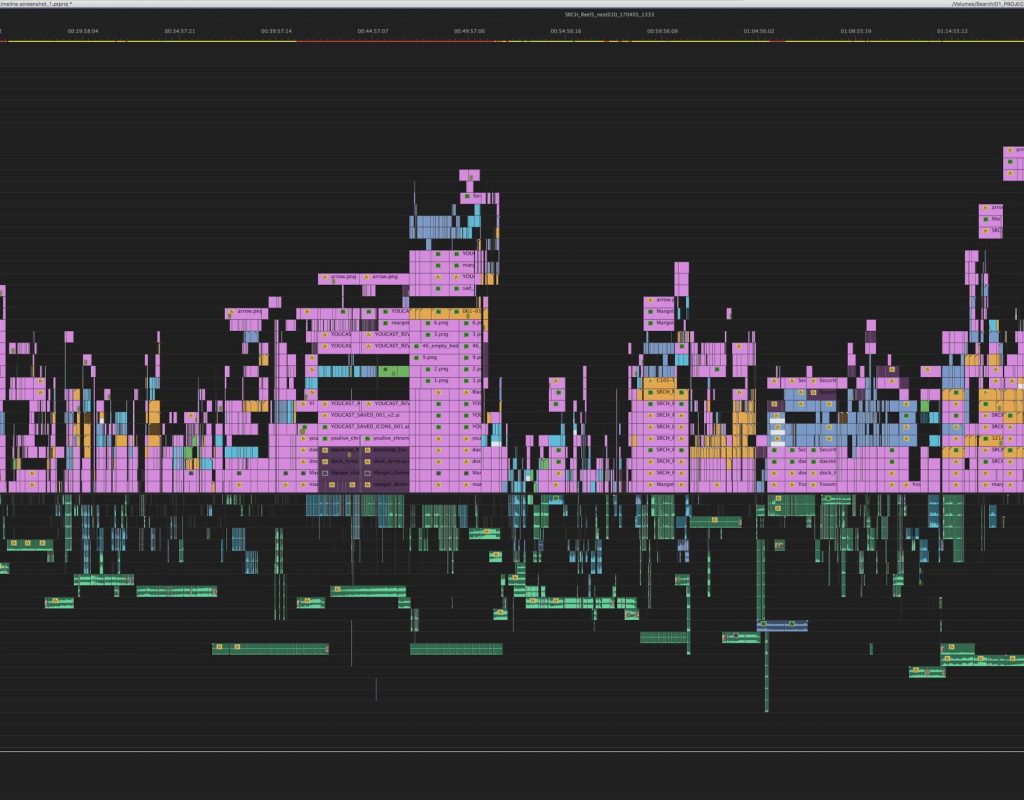

Johnson: You take the script and you’re like, “Well, let’s make this thing.” So we opened Finder and started screenshotting. We opened FaceTime, and Aneesh would take pictures of his face, kind of performing the scenes. He recorded voiceover, and we kept building until we had a full cut that was shown to the crew. They were all sort of like, “Oh god, we have so much work to do to make this play.” The animatic was all these jumbled pieces: screen recordings, screenshots of Aneesh making faces with his lines underneath. We nested everything. We decided early on that nesting was the way we had to go.

PVC: Why is that?

Johnson: We needed to be able to shoot around the desktop. So inside the nest we would, essentially, production-design a wide of the desktop: place all the icons, and do all the movements, blocking, and staging in the wide. Then we would nest it and cover it with the Scale and Position attributes. Every time we had a timing change, we had to go into the nest, change the timing, step back out of the nest, and adjust the camera moves for everything.
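The “camera” in this workflow is just the Scale and Position of the nested wide. As a rough sketch of the arithmetic, assuming Premiere-style conventions where Position is the location of the clip’s anchor point in sequence pixels and the anchor is left at the center of the nest, framing a rectangle of the desktop comes down to one scale factor and one offset. `Rect` and `punch_in` are hypothetical names, and the code ignores rotation and non-square pixels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """A region of the nested desktop wide, in nest pixels (origin top-left)."""
    x: float
    y: float
    w: float
    h: float


def punch_in(nest_w: float, nest_h: float, frame_w: float, frame_h: float,
             target: Rect) -> tuple[float, float, float]:
    """Return (scale_percent, pos_x, pos_y) that fits `target` to the frame.

    Assumes the anchor point sits at the nest's center, so Position is
    where that center lands in the sequence.
    """
    s = min(frame_w / target.w, frame_h / target.h)   # fit the region inside the frame
    cx, cy = target.x + target.w / 2, target.y + target.h / 2
    ax, ay = nest_w / 2, nest_h / 2                    # anchor: nest center
    # Scaling about the anchor, then placing the anchor at Position,
    # must land the region's center on the frame's center.
    pos_x = frame_w / 2 - s * (cx - ax)
    pos_y = frame_h / 2 - s * (cy - ay)
    return 100 * s, pos_x, pos_y


if __name__ == "__main__":
    # Hypothetical: a 2048x1152 desktop nest in a 2048x1152 sequence,
    # punching in on the top-left quarter of the screen.
    print(punch_in(2048, 1152, 2048, 1152, Rect(0, 0, 1024, 576)))
```

The top-left-quarter example comes out to 200% scale with Position at (2048, 1152): the nest’s center is pushed to the frame’s bottom-right corner so the quarter fills the frame. Keyframing between two such results gives a push-in; putting them on adjacent clips gives a cut.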

Merrick: This was one of the weirdest things to get my head wrapped around, because we were editing not only temporally, we were editing spatially in a way. If we moved anything, rearranged anything on the desktop, we would have to then adjust all the timing. Then we also had almost parallel sequences going on in a weird way, because we had the macro edits on the nest.

Johnson: We would duplicate our edit, and we’d have to duplicate every nest inside the edit too, or else changes to one version would destroy the nests in the other. So we had all these macro edits, and if we wanted to make something five frames shorter, we’d have to go into the nest, move everything in the nest over five frames, then step back out and move all the other edits over five frames.
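The five-frame problem is a ripple applied at two levels: every keyframe after the edit point inside the nest moves, and so does every camera keyframe after the matching point in the outer sequence. This sketch models that bookkeeping with plain lists. `Keyframe`, `ripple`, and `ripple_everywhere` are hypothetical, and `nest_start` assumes the nested clip sits at a known frame in the outer timeline and hasn’t been trimmed or speed-changed.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Keyframe:
    frame: int                  # frame number in its own timeline
    value: tuple[float, ...]    # e.g. (x, y) position or (scale,)


def ripple(keys: list[Keyframe], at: int, delta: int) -> list[Keyframe]:
    """Shift every keyframe at or after `at` by `delta` frames (negative = earlier)."""
    return [replace(k, frame=k.frame + delta) if k.frame >= at else k for k in keys]


def ripple_everywhere(nest_tracks: dict[str, list[Keyframe]],
                      outer_camera: list[Keyframe],
                      at: int, delta: int, nest_start: int):
    """Apply one timing change inside the nest and to the outer camera moves.

    `at` is in nest time; the matching outer-sequence frame is at + nest_start.
    """
    new_nest = {name: ripple(keys, at, delta) for name, keys in nest_tracks.items()}
    new_camera = ripple(outer_camera, at + nest_start, delta)
    return new_nest, new_camera


if __name__ == "__main__":
    icon = [Keyframe(0, (0.0,)), Keyframe(48, (1.0,)), Keyframe(96, (2.0,))]
    camera = [Keyframe(100, (100.0,)), Keyframe(160, (200.0,))]
    # Make the beat at nest frame 48 five frames shorter.
    nest, cam = ripple_everywhere({"icon": icon}, camera, at=48, delta=-5, nest_start=100)
    print([k.frame for k in nest["icon"]], [k.frame for k in cam])
```

A real tool would also have to handle keyframes that collide when a section is shortened, and trim the nested clip itself; the point of the sketch is only that one timing change has to be applied twice, in two coordinate systems.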

Merrick: A “Null Object” in Premiere Pro would be great. We could have parented everything to a Null Object and just keyframed that around.

Johnson: Which is how we did it in After Effects.
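Parenting works because a child layer’s final placement is its own transform composed with its parent’s, so keyframing one null moves and scales everything attached to it. This is a simplified 2D model (uniform scale, no rotation or anchor-point offsets), not After Effects’ exact math; `Transform2D` and `parent_all` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transform2D:
    """Simplified layer transform: position in pixels plus uniform scale."""
    x: float = 0.0
    y: float = 0.0
    scale: float = 1.0

    def apply(self, px: float, py: float) -> tuple[float, float]:
        """Map a point from this layer's local space into its parent's space."""
        return self.x + self.scale * px, self.y + self.scale * py

    def under(self, parent: "Transform2D") -> "Transform2D":
        """Compose: this layer's transform followed by its parent's."""
        wx, wy = parent.apply(self.x, self.y)
        return Transform2D(wx, wy, parent.scale * self.scale)


def parent_all(layers: dict[str, Transform2D], null: Transform2D) -> dict[str, Transform2D]:
    """World transforms for every layer parented to a single null object."""
    return {name: t.under(null) for name, t in layers.items()}


if __name__ == "__main__":
    layers = {"finder": Transform2D(100, 50), "cursor": Transform2D(400, 300)}
    # Keyframe only the null: shift it up-left and punch in 2x.
    null = Transform2D(-200, -100, 2.0)
    for name, t in parent_all(layers, null).items():
        print(name, t)
```

Only the null’s three values change between keyframes; every layer’s local transform stays put, which is exactly the bookkeeping the nest-and-reposition workflow in Premiere Pro lacked.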

Merrick: The animatic became our foundation for the movie, so as they were shooting, we cut the live action into the animatic. Then we refined that with Aneesh, Sev and Natalie into a director’s cut and a producer’s cut, and we showed those to audiences, because we knew that once we moved to After Effects, we’d be talking months for small edits. So we did a hard picture lock and moved everything into After Effects, where we, with our VFX company Neon Robotic, created Adobe Illustrator files of almost everything in the computer interface.

PVC: Everything was recreated? You did the screenshots, you put the video and all those assets on top of that, you keyframed rough moves, then recreated everything again? Is there a faster way?

Merrick: We always thought there would be. We never found it.

Johnson: If only there were a way to screen-record your desktop as vectors, or to screen-record on a 16K monitor.

Merrick: Bazelevs is actually working on software that will do this, but it’s so extremely hard to create that it just wasn’t ready by the time we needed it. Basically, what forced us to recreate everything was that they were punching in for so much coverage that even if we had gone for the pixelated look, which we creatively didn’t want, it just wouldn’t have worked.

PVC: So you had to recreate the interfaces themselves in Illustrator, to avoid pixelation when you punch in for coverage. It’s icons and toolbars and all that kind of stuff, right?

Johnson: Yeah, and button states.

Merrick: We have a Google Chrome bar that has five possible tabs, because we figured out that was the most we ever needed in the movie. It has all the button states for when you close a tab or click a new tab, and we can add new data. Basically, the VFX company we were working with, Neon Robotic, created that large asset with all the tabs, fully customizable. Then we would take it, cut it in After Effects for every scene, and make it what it needed to be for that scene.

Johnson: We would basically have 35 Illustrator files in a folder, numbered in order. So when the mouse goes over it, you’ve got the hover state. He clicks it, and it’s foregrounded. Then we also had to worry about drop shadows.
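A folder of numbered state files behaves like a small state machine: each mouse event moves the button to a new state, and each state maps to one pre-drawn file. The folder described here held 35 files; this sketch uses four hypothetical states and hypothetical file names (`tab_button_01.ai` and so on), since the interview doesn’t describe the organization beyond the numbering.

```python
from enum import Enum


class ButtonState(Enum):
    IDLE = "idle"
    HOVER = "hover"
    PRESSED = "pressed"
    FOREGROUND = "foreground"


# Hypothetical: one numbered Illustrator file per state, in definition order.
STATE_FILES = {state: f"tab_button_{i:02d}.ai"
               for i, state in enumerate(ButtonState, start=1)}

# Which mouse event moves the button from one state to the next.
TRANSITIONS = {
    (ButtonState.IDLE, "mouse_over"): ButtonState.HOVER,
    (ButtonState.HOVER, "mouse_out"): ButtonState.IDLE,
    (ButtonState.HOVER, "mouse_down"): ButtonState.PRESSED,
    (ButtonState.PRESSED, "mouse_up"): ButtonState.FOREGROUND,
}


def files_for(events: list[str]) -> list[str]:
    """File to show before the first event and after each event.

    Events that don't apply to the current state leave it unchanged.
    """
    state = ButtonState.IDLE
    shown = [STATE_FILES[state]]
    for event in events:
        state = TRANSITIONS.get((state, event), state)
        shown.append(STATE_FILES[state])
    return shown


if __name__ == "__main__":
    print(files_for(["mouse_over", "mouse_down", "mouse_up"]))
```

Laying the events out against the timeline then tells you which numbered file to swap in at each frame where the cursor interacts with the button.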

Merrick: A funny thing that will never make the movie or the interview is that the pixel dimensions of the Illustrator files are the same as the sizes on the computer. If there’s a 10-pixel bevel, we just made it 10 pixels in Illustrator, and so we could make things exactly … We built it on a 2048 by 1152 screen, so you could just make things that size in Illustrator and they come in perfectly.

PVC: Would you have signed up for this if you knew that it was going to be this hard?

Merrick: Barely, but yes.

Johnson: I would say yes. But I was constantly telling myself this could not possibly be the best way to do this. I was like, “There’s got to be a better way.” I would spend maybe a couple of hours digging around, playing, experimenting, trying to find a different way and I’d always just inevitably come back to “This is the way we have to do it. We have to just animate every single state with Illustrator files.”

Merrick: I didn’t think it would be half as hard as it was, but I’m also really proud, because I think we did something that hasn’t been done before.

PVC: Well, that’s the thing. This film is unique in its time, but I think it might also be unique going forward, just because the barrier to entry for a project like this is so much freaking work.

Johnson: To be honest, though, compared to animated movies, it’s probably nothing. I think it was just the fact that it was me and Will doing all this. It was a two-man job, with the help of Neon Robotic on the technical side. Creatively, we had a whole team, but that’s what made it so challenging. For example, we discovered you can find all of the icons on your computer in a folder on a Mac, but they max out at around 125×125 pixels. And in our punch-ins, sometimes we’re right on top of icons.
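A 125×125 ceiling matters because any punch-in multiplies an icon’s on-screen size. A quick way to see when a raster icon will start to soften is to compare its displayed size with its source size; anything above 1× is upscaling. The numbers in the demo (a 64-pixel icon on the 2048-wide desktop, a 400% punch-in, a sequence the same width as the desktop) are hypothetical, and the function names are illustrative.

```python
def displayed_px(icon_desktop_px: float, scale_percent: float,
                 seq_w: float, deliver_w: float) -> float:
    """On-screen size of an icon after the punch-in and any delivery resize."""
    return icon_desktop_px * (scale_percent / 100) * (deliver_w / seq_w)


def upscale_factor(source_px: float, shown_px: float) -> float:
    """Above 1 means the raster source is being enlarged (likely soft or blocky)."""
    return shown_px / source_px


def max_clean_scale_percent(source_px: float, icon_desktop_px: float,
                            seq_w: float, deliver_w: float) -> float:
    """Largest punch-in (as Scale %) before the icon is enlarged past its source pixels."""
    return 100 * source_px / (icon_desktop_px * deliver_w / seq_w)


if __name__ == "__main__":
    shown = displayed_px(64, 400, 2048, 2048)             # 256 px on screen
    print(shown, upscale_factor(125, shown))              # about 2x past the source
    print(max_clean_scale_percent(125, 64, 2048, 2048))   # limit is about 195%
```

Vector artwork has no such limit, which is why rebuilding the interface in Illustrator let the team punch in as far as the coverage demanded.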

Merrick: There are some icons that have never been as big as they are in this movie.

PVC: I haven’t had a chance to see the film yet, but you’ve got FaceTime calls, you’ve got Finder interfaces, and texting I imagine? I’m just trying to think of how many different communication techniques can you use on a computer? What options did you have to tell this story?

Johnson: I think that’s where Aneesh and Sev’s original script was brilliant. Whenever anyone watches a screen movie, you’re kind of waiting for, “Okay, how are they going to get away with this? How are they going to cheat?” Sev and Aneesh worked really hard on making a really good narrative and a really good thriller with a great story. Then they worked to find ways to put that on the screen that didn’t feel contrived in any way.

Merrick: Yeah. I think it would be okay to say that at one point in the film we move to, say, news footage, things like that, and you can actually see the actors in there as well. We occasionally have options using video streaming. They use FaceTime a lot, but the texting… honestly, you can have a compelling scene just all on text messages.

Johnson: That was pretty surprising to us. It was a big challenge rhythmically: you would have a whole dialogue scene taking place in chat, and there’s a whole rhythm that is very foreign. As an editor, you don’t usually edit chats like that, going back and forth.

Merrick: Like if you cut in, and then a message appears, it’s weird. It’s like drawing. You have to like do all these weird tricks.

Johnson: We had a lot of tricks with cutting, because you want to cut on motion a lot of times, but everything happens on one frame in a computer. We didn’t have motion.

PVC: Don’t texts kind of “bloop” in a little bit?

Merrick: They blooped a tiny bit, yeah.

Johnson: Yeah, so we were always trying to exploit those little movements. We used the mouse moving in and out of frame a lot.

PVC: That’s your person wiping the frame?

Merrick: Yeah. Sometimes the mouse just jumps to another place, but you don’t notice because we’re cutting at the same time.

Johnson: I think you pace it like you would a conversation. You’re not necessarily just stuck in shot reverse, shot reverse. You’re going to have your two shot, you’re going to have your master. Your two shot would be like this wide framing.

Merrick: We tried to keep the frames somewhat narrow. We would usually start on that, and then whenever there was a particularly important line, you could cut in for the closeup. We didn’t do too much cutting back and forth, but you could have one message up here, and then slide over to where they type and see the response being typed.

PVC: Ah, so that would be more like a traditional pan over to a reaction?

Merrick: Yeah, yeah, yeah, yeah.

Johnson: That was something that we had to learn ourselves I think. I totally get the impulse to want to just follow the mouse everywhere and not cut. It took us a while to understand that we can do these things, and by the end it was just like editing a normal movie.

PVC: OK, so the 16:9 aspect ratio doesn’t exactly fit a computer screen, right?

Merrick: It does actually. We may have cheated it very slightly, but when we go out to the full 16×9 wide, it looks like a MacBook.

PVC: Did you find that wide framing to be an impediment when you’re cutting around stuff? A text message thread is not really that wide, it’s kind of square, right?

Merrick: We don’t go out wide often. Normally it’s for effect.

Johnson: That was one thing that Sev, our producer, really said early on. As soon as he saw the animatic, he said we should reserve our wides for really important beats.

Merrick: Like you never use a wide as your default. You never default to the wide.

Johnson: The nice thing is that everyone’s familiar with the layout of a computer screen, so we can use that to our advantage. It’s not like a normal live-action scene, where you have to establish the space at some point early on so that people aren’t confused. We could start a scene on a text notification, for instance, and not worry that people are going to be like, “Whoa, where are we?”

Merrick: It’s funny to even play with that sometimes. There are times where we start in closeup, and you don’t know where you are yet, and you slowly find out through context. Our best compliment, after a lot of our test screenings, was people saying, “I totally forgot everything was on a computer screen.”

PVC: So I imagine that you used the full Adobe tool set on this project.

Merrick: Most of it, yeah. Mostly Premiere Pro, After Effects, Photoshop, and Illustrator.

Johnson: Yeah, we didn’t really use InDesign. We used Lightroom a little bit, for some photos we shot.

Merrick: We even used SpeedGrade. It was our DPX viewer.

PVC: This was before … I guess SpeedGrade has since gone away?

Merrick: Yeah, we found a way to get it back.

Johnson: Weirdly, SpeedGrade was the best DPX viewer we could find. But we did almost all of the primary color in After Effects with Color Finesse.

Merrick: We found a colorist, Zach Medow, who could do it.

Johnson: Zach was one of the only people who could listen to our workflow and not laugh us out of the room. He understood what was going on in our sequences. He had to color every live action element separate from the screen without ruining the screen. He was amazing.

Merrick: He had no playback. It was terrible. Just the worst conditions you could have for a colorist. But he did an incredible job. Like he nailed it. We had a pretty short amount of time, and he nailed it.

PVC: Sound design probably plays an important part in this sort of film? Who did sound design and how did that process work?

Johnson: A company called This is Sound Design did it, and this was actually probably the most traditional part of the process. I don’t necessarily want to speak for them, but I think it’s pretty safe to say that they had to also wrap their heads around the rules of this kind of film.

Merrick: Something that was weird… Foley for keyboard clicks suddenly becomes very critical; you have no leeway.

Johnson: There’s a lot of character.

Merrick: You could show emotion through the clicks and through the keyboard, like how hard somebody’s typing. They did a great job with that. Every time we’d go to … I don’t want to explain how we go between computers, but when we do, it’s a different mouse, different keyboard.

Johnson: Each computer has its own unique keyboard sound and click sound.

Merrick: Then they would pan just a slight ambiance around the room, so that you felt like you were in a space and had something to hear. It’s very hard, because with a lot of computers there’s no sound, so you kind of have to justify sounds; you don’t want to just sit there in silence. It’s very music-heavy too.

PVC: Was there like hum from fans? Did they go that far as well?

Johnson: Yeah, yeah. I think they would have birds, or a lawnmower in the distance for an afternoon feel, and room-tone hum. Honestly, it’s a pretty traditional treatment; it was just tough, I think, to wrap your head around the rules, especially with typing and clicking. And I think David’s voice is actually panned a little bit, so you have this feeling that David is right with you, and the people on the computer are in the center track.

Merrick: They did a lot of great futz work too, because if you’re talking to somebody on the phone, it has to be just distorted enough but not distracting.

PVC: Thanks so much for your time, and good luck with the premiere!

Sony Pictures Worldwide bought the global rights to “Search” the night of the film premiere at Sundance, and a theatrical release is expected.