Happily, we’re now way too smart to need bars and tone recorded at the beginning of the things we make. For the benefit of generation Z, let me explain: a long time ago, people used to record a picture made up of a series of brightly-coloured rectangles at the beginning of a video tape, to act as a reference as to what things are supposed to look like. Back then, video was a lot simpler, but it was still complicated enough that things might need adjusting. Since then, things have become a lot more complicated, and there are often even more interesting ways to make everything slightly the wrong colour.

Bars and tone were supposed to be irrelevant by now. That, it turns out, was overconfidence, given the number of videos there are on YouTube with greyish night skies and safety-orange tomatoes. In the digital world, we’re not dealing with magnetic tape that might degrade with time, or analogue electronics which inevitably have wiggle room, but we are dealing with a lot of different software options which are just as capable of bending everyone’s pictures. And, without some sort of standardised test signal, there’s no way to tell what’s going on beyond taking the opinion that – eh – it looks kind of milky.

What kind of HDR?

Putting black levels at 16 as opposed to zero is a common enough engineering choice, although it can become a complicated problem when a piece of material has been badly re-encoded more than once. What’s much worse is that prominent manufacturers have shipped SDI cards for workstations which intrinsically modified the picture along the way in at least some software configurations (it’s long since fixed, so we won’t say who), and this is before we even start talking about how many ways there are for camera gamma curves to be wrong.

The fundamental issue here – it seems almost like a betrayal – is that this is not what we were led to expect from digital media. In the old days, it was instinctive to adjust video (or audio) signal levels to make a vectorscope or audio meters look right. Now, we generally can’t do that even if we want to, even on professional equipment. At best, we can dig into a menu and switch a video display setting between a couple of options with vague titles like (in Windows) “use HDR” and “WCG Apps,” as if that tells us much in the context of a video system with at least half a dozen software and hardware components. What kind of HDR? Where is this WCG data coming from? What path will it take?

Kids these days

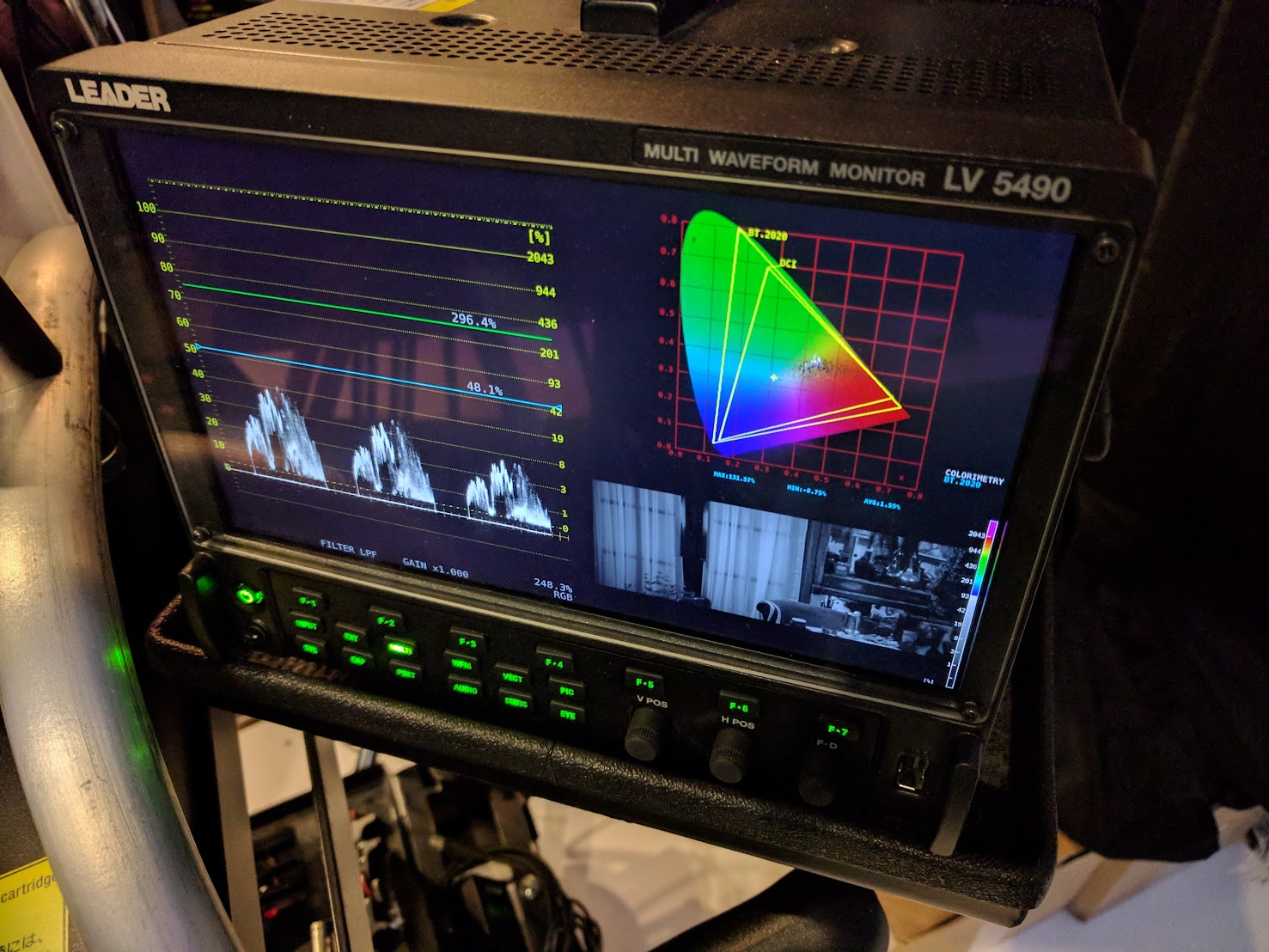

Again, we shouldn’t need to know, but we do need to, and yet we usually can’t. At this point, it’d be easy for the old hands in the audience to complain that kids-these-days don’t know how to use a waveform monitor or vectorscope anymore, and it’s true. They often don’t – especially vectorscopes, which are often a tool that full-time colour people only get out on special occasions. The deeper problem is that even if everything involved – every piece of software, every converter, every display – had all of the test and measurement tools, it’s not very clear what we would measure and how we should interpret the resulting readout.

For a deliberately trivial instance, let’s assume we have a file recorded on a broadcast camera in limited 16-235 brightness range, but we’re displaying it on a computer monitor which expects full range 0-255 values. We could view the contents of the file (or more specifically the values coming out of the codec) on a waveform monitor, which would show us what the values are, but that display would only be useful if we already knew what the monitor was expecting.
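To put rough numbers on that mismatch, here is a minimal Python sketch of the usual 8-bit range arithmetic for luma, where 16 maps to 0 and 235 maps to 255. The function names are hypothetical and invented for illustration; real decoders and drivers handle chroma, bit depth and rounding in their own ways, so treat this as a sketch under those assumptions rather than a description of any particular product.

```python
def limited_to_full(value: int) -> int:
    """Expand an 8-bit limited-range (16-235) luma value to full range (0-255)."""
    scaled = (value - 16) * 255 / 219  # 219 = 235 - 16 code values of picture
    return max(0, min(255, round(scaled)))  # clip anything below 16 or above 235


def full_to_limited(value: int) -> int:
    """Squeeze an 8-bit full-range (0-255) luma value into limited range (16-235)."""
    return round(16 + value * 219 / 255)


# Correct handling: limited-range black and white land on the display's extremes.
black_on_display = limited_to_full(16)
white_on_display = limited_to_full(235)

# No conversion at all: black is sent to a full-range display as code 16,
# which is a little over 6% of the way up the scale rather than zero.
unconverted_black_fraction = 16 / 255

# Range squeezed twice by mistake: black is lifted further with each pass.
double_squeezed_black = full_to_limited(full_to_limited(0))
```

The last two cases are the milky look described earlier: nothing in the values themselves says which interpretation is right, which is exactly why a reading on a waveform monitor only helps if we know what the next stage expects.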

There’s the potential for complexity at every stage. Settings in the video decoding software might cause it to modify the values that are stored in the file; video I/O cards, including both conventional GPUs and dedicated SDI hardware, and their drivers, can try, unhelpfully, to help in similar ways. If there are controls, manufacturers often make misguided attempts to use simplified, understandable language which ends up lacking any form of technical rigour or specificity, especially in consumer-targeted systems like GPU drivers.

Bars and tone in digital files

These are problems we’d like to solve, especially in things like grading software which can involve a whole chain of the problems we’ve discussed here, more than once, in every single clip on a timeline. Probing that virtual signal at various stages of its progress through the system might be somewhat informative, but most software companies don’t even publish a flowchart of what the processing chain looks like, and some software certainly has sufficient complexity to make that relevant.

In the end, this feels like a problem which can’t be solved without a lot of simultaneous changes from a lot of people in a lot of different disciplines. Yes, the picture itself is, in the end, the metadata nobody is going to strip out. But no, nobody’s really going to start recording bars and tone into digital files, because that’s self-evidently unnecessary. Still, we do seem to have made every effort to make it necessary, and that ought to be a red flag to someone, somewhere.