The more things change, the more they stay the same. So goes the old proverb.

SMPTE Time Code is like that. While the entire production and post world around it has changed radically, time code has remained virtually the same.

Still relevant after all these years, time code allows us to easily sync sound to picture in double system workflows. Time code is used in everything from inserting computer generated images (CGI) to closed captioning.

Originally conceived as a means of editing two-inch quadruplex videotape without physically cutting the tape with a razor blade, SMPTE Code has been remarkably versatile and malleable over the years. It individually identifies each video frame, whether on quad tape or in a QuickTime file, applying a unique address to every one.

Nowadays, with more video formats than ever, the code can be read and controlled with no modification using edit controllers built in the 1980s during the heyday of analog equipment. The code is as accurate controlling 24 frame high definition material as it was driving standard definition analog machines.

Not bad for a standard that’s been around for almost half a century.

Sony RM-450 Edit Controller (circa 1985?). Photo by Author.

Time code is a ubiquitous tool on most file-based cameras, location sound recorders, production slates, non-linear edit systems and sound editing and special effects software. With proper use, just pressing a few keys on a keyboard will bring recordings from multiple cameras suddenly into sync or make the task of syncing dailies instantaneous rather than a laborious process of locating visual and aural marker cues.

However, in the late 1950’s, editing wasn’t so easy.

Back then, film editors were still using practices and machinery dating to the mid-1920s. The Moviola film editing machine, invented in 1924 by Iwan Serrurier, quickly became the standard for film editing. It remained in general use in the industry until the early 1970s, when flatbed editors became a popular alternative.

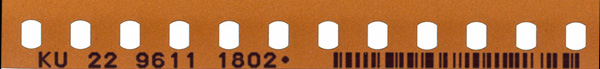

Film was the original non-linear editing medium. During the manufacturing process, the very edge of film stock is exposed with a series of numbers (called Keykode by Kodak). After the film was shot and processed, these numbers were used by editors as they cut and re-cut copies of the negative (called work prints). Once editing was complete, the “locked” work print was given to a negative cutter, who matched the Keykode numbers on the work print back to the original negative. The negative cutter would then physically cut the original negative and make it ready to be printed for release.

Keykode on Eastman Kodak 35mm motion picture film. Also the bar code carries the same information to be scanned by telecine film scanners. From Wikipedia.

When television videotape came along in 1956, it was initially intended as a device to improve the image and sound quality of delayed broadcasts to different time zones in North America. Videotape far exceeded the quality of its predecessor, the kinescope (a film shot off a television monitor). It was indistinguishable from a live broadcast to the untrained eye. It was also a huge cost savings for television networks that no longer had to pay for film stock and processing costs.

As recordings were made of shows while they were being broadcast live to the eastern United States, there was no need to edit them. Operators merely rewound the tape after the show finished and played it back without changes at the appropriate time several hours later.

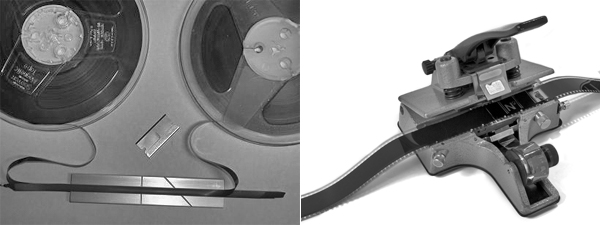

From the very beginning of videotape, the production community began looking for a way to edit this new medium. For years audiotape was edited with a razor blade. Same with film. Why not apply those same practices to videotape? Problem was, videotape wasn’t going to be as easy to cut as audio or film. Audio tape could be rocked back and forth over playback heads to find the precise point at which to make the splice. Similarly, an exact frame of film was identified using the naked eye by watching the Moviola run it back and forth until the cut point was found. The editor then cut on the black line in between two frames.

Quarter inch audio tape splicing block and 35mm tape splicer. From Dreamstime.

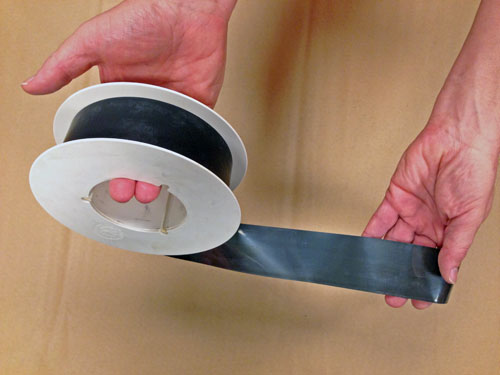

But two inch quadruplex videotape could not reproduce a picture while it was rocked back and forth or played slowly. Frame lines were hidden in invisible electronic pulses embedded in the dull brown magnetically sensitive oxide cemented to a two inch wide strip of acetate plastic several thousand feet long.

Two inch quadruplex video tape. 15 inches equals one second of recording time. Photo by Vince Gonzales.

Engineers came up with a method to “develop” the magnetic pulses on the tape. By painting the area of a tape where an edit was to be made with a solution of iron filings suspended in carbon tetrachloride, editors were able to see the pulses and make the cut in between television frames. But those pulses were spaced a tiny 10 mils (10 one thousandths of an inch) apart, about the thickness of a human hair. If the cut was not made perfectly between those pulses, the picture would break up and roll until the machine stabilized on the new section of tape. For network edits this was unacceptable. Making successful videotape edits became an art form.

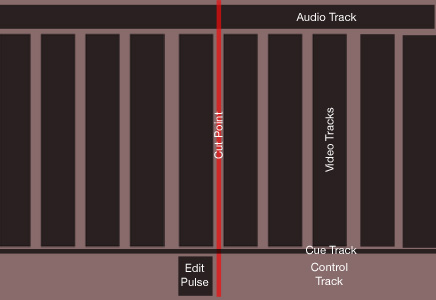

An approximation of what the editor would see after “developing” the pulses on two inch quad video tape. The width between the video information (the larger blocks) is about the width of a human hair.

One of those artists was Art Schneider, a film and videotape editor best known for his editorial prowess on the “Laugh-In” television show. Schneider began videotape editing shortly after videotape was invented, and in his book “Jump Cut” he writes about how editors located edit points in those early days. They would play back the tape and, upon seeing the edit point, immediately hit the stop button. “Using black felt markers on the back side of the tape, edit points would be tentatively marked. Then the tape would be played back a few more times to verify…”

Initially, cutting the tape was done with the naked eye. Within a couple of years of the introduction of the videotape machine, the Smith Videotape splicer was invented by Bob Smith, an NBC Burbank engineer. While it didn’t do anything for improving the accuracy of the edit point, it did help editors make better splices with its 40 power microscope.

In 1958, Schneider and his fellow engineers at NBC developed the first method of tape editing allowing editors to make frame accurate edits on videotape. In a paper Schneider delivered to the National Association of Educational Broadcasters in 1972, he described “the double system method of editing videotape.” It used a 16mm kinescope made of the video tape to be edited, but instead of the tape’s sound track, the film’s optical track consisted of an “electronic edge number” made up of a low frequency “beep” every 24 frames to indicate the seconds, with two human voices providing the minutes (woman’s voice) and seconds (man’s voice). This was called the Edit Sync Guide (ESG). The length of the recording was 70 minutes since, at the time, the longest reel of videotape was just over 60 minutes.

It worked like this. As the master videotape was being recorded (or even if it was being played back at a later time), the ESG was played back and recorded onto the cue channel of the video tape, recorded to the optical track of the 16mm kinescope film and laid off to a sprocketed 16mm magnetic sound tape that was also recording the production sound of the video tape. Once the kinescope was processed, the film and 16mm magnetic tape were edited using normal film editing techniques, and a log or “count” sheet of all the physical edits in the 16mm kinescope was made as indicated by the Edit Sync Guide recording.

Next the videotape was loaded, not on a videotape machine, but on a rather low tech editing bench equipped with rewinds and a magnetic audio reader centered on the videotape’s cue track where the ESG was recorded. The edit points would be identified from the written log and the tape would be physically cut to match. How was the 24 frame film converted to 30 frame videotape? Schneider says, “The trick was to create a special ruler 15 inches long (equaling one second of videotape) and divide it into 24 equal parts (one second of film).” This allowed the editor to find “a corresponding tape frame from the log generated by the edited workprint.”
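The ruler arithmetic Schneider describes can be sketched in a few lines. This is only an illustration: the function name is hypothetical, the 30 frames per second figure is the nominal rate quoted above, and rounding to the nearest tape frame is an assumption, since the source does not say how editors handled film frames that fell between tape frames.

```python
from fractions import Fraction

TAPE_INCHES_PER_SECOND = 15  # quad tape: 15 inches equals one second
FILM_FPS = 24                # kinescope work print
VIDEO_FPS = 30               # nominal videotape frame rate


def film_frame_to_tape_position(film_frame):
    """Map a film frame count to (inches of tape, nearest video frame).

    Each of the ruler's 24 divisions is 15/24 inch, i.e. one film frame.
    Rounding to the nearest video frame is an assumption for illustration.
    """
    inches = Fraction(film_frame * TAPE_INCHES_PER_SECOND, FILM_FPS)
    video_frame = round(Fraction(film_frame * VIDEO_FPS, FILM_FPS))
    return inches, video_frame
```

Under these assumptions one film frame spans 5/8 inch of tape, while one video frame spans 1/2 inch, which is why a dedicated ruler was easier than counting tape frames.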

The first program to be edited using the process was “An Evening with Fred Astaire.” The process was kept hush-hush until they were sure it would work. It turned out they had nothing to worry about, and Schneider states that as time went on, many award winning shows were edited using this method, notably the fast paced “Laugh-In” series with 350 to 400 physical cuts in a one hour show. NBC continued using the system until 1971, and the offline/online procedure became the forerunner for non-linear computer editors to come.

At last, in March, 1962, Ampex developed an electronic method of tape editing. Although the Ampex Electronic Editor was completely manually operated and not frame accurate, at least it eliminated the laborious (and often error-prone) physical cutting of the tape. The videotape would be played and at (hopefully) the right moment, the editor would push the record button. Eighteen frames later (slightly over half a second), the edit would be made. So editors had to push the button eighteen frames early to hit the edit point on the mark. If they were late, all they had to do was make the edit over again. However, if they were early, the whole previous scene would have to be laid back.

One year later Ampex enhanced the Electronic Editor with a time element control system and renamed it Editec. Using cue tones, an editor could find an edit point and refine it until he heard it at the precise point he wanted to make an edit. But all the systems up to this date were still labor intensive one-edit-at-a-time processes.

Meanwhile, the Electronic Engineering Company (EECO) of Santa Ana, California had been building timing systems for the Air Force and NASA that went back to the flights of the X-15 rocket plane in the late 1950s. In 1967, they turned their attention to television and eventually took the drudgery out of videotape editing.

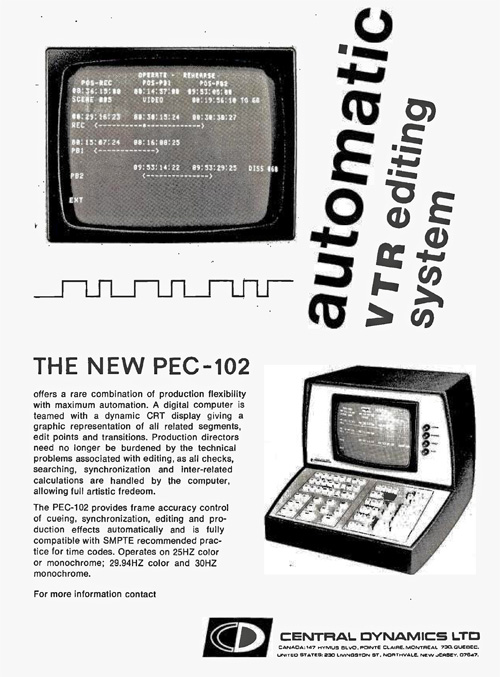

EECO wasn’t the only entry into the edit system challenge. Along with Central Dynamics Ltd, Datatron and others, they all had their own similar but proprietary systems. As these products hit the marketplace, interchange problems surfaced as tapes from a network using one system were sent to a postproduction house using another system. As stated in the March, 1970, issue of the Journal of the SMPTE, “A new proposal for an industry standard [time] code for video-tape editing and control is needed to replace the various codes now used, not one of which is compatible with another.”

A SMPTE committee was formed with Ellis K. Dahlin of CBS Engineering named as chairman. It invited the various manufacturers to discuss the merits of their codes. Requirements set forth by an Ad Hoc Committee on Video Tape Time Code (led by ABC, CBS and NBC with input from other users such as members of the Video Tape Production Association) were considered by SMPTE. Finally, on October 6th, 1970, Dahlin delivered a progress report announcing the proposed SMPTE Code. While the standards were still in proposed form, he reported, “…the general agreement is substantial enough that manufacturers are now designing equipment for its use.” He went on to say the code met all the requirements set by the various members of the committees (including all three U.S. networks as well as most other users).

The proposed code, mostly based on the EECO system, only required minor modifications to units already in the marketplace. It didn’t take long for products to be re-worked and soon readers of trade magazines such as Broadcast Management/Engineering and Broadcast Engineering were seeing ads for equipment that would read, record, play, control and edit the SMPTE Time Code.

Advertisements like this began appearing in broadcast trade publications in 1970.

It wasn’t until April 2nd, 1975 that the SMPTE time code standard was approved by the American National Standards Institute. But by then, networks, production houses and television stations had been using it for years.

The code was designed to be so robust it would stand the test of products and formats yet to be designed. This was in a period when the digital formats of today were still the stuff dreams were made of. Engineers foresaw technical advances in the industry and made the code as malleable as they could given the technology of the time. Today the code is able to handle everything from high speed tape winding to multiple frame rates with ease.

The sound of time code. In the early days, if the tape format didn’t have a “cue” track, one audio track would double as the time code address track.

Taking a cue from the NBC ESG offline/online concept, the first computerized non-linear editing system, the CMX-600, was born. In 1969, with SMPTE time code standardization imminent, the laboratories division of CBS was experimenting to find more streamlined ways to edit videotape. None of their tape based experiments were taking them anywhere, so they turned their attention to using computer discs. Several years earlier, Ampex had used discs to develop their HS100, a Slow Motion Instant Replay machine, but it could only hold 30 seconds of video at a time and had no audio capability. So CBS Labs approached Memorex Corporation, a Silicon Valley company and maker of computer media including magnetic tapes and disc drives. Memorex is remembered for entering the consumer market with its series of television commercials featuring Ella Fitzgerald and the catch phrase “Is it live or is it Memorex?”

In 1970, a joint venture of the two companies was consummated with the name CMX (for Cbs-Memorex-eXperimental). Adrian B. Ettlinger, a CBS engineer, led the development of and patented the CMX-600, the company’s first product. According to Bob Turner’s article for Videography magazine, the 600 operated with a “stack of 11 digital-storage platters [with] each 29MB platter [holding] five minutes of half-resolution black & white moving images, audio and… timecode.” Rather than try to explain how it worked, let a two part promotional video by CMX from 1971 show it in action.

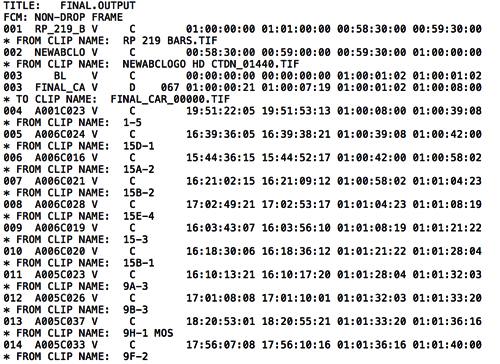

The CMX-600 gave us the first computer generated Edit Decision Lists (EDLs). These long lists contain the time code start and stop points from the original tapes as they are shuffled automatically and played back by the computer in program order. The master record tape, already “striped” with control track and time code matching the record side of the EDL, is edited and recorded as each shot from the original recording is inserted, thus creating the edit-master of the program. CMX style lists are still in use today.

CMX edit decision list. The first two time codes on each line are the locations of the shots on the master tapes. The last two are the edited program.
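To make the four time codes concrete, here is a minimal sketch of reading one event line. The sample line, the field layout (event number, reel name, then the four time codes as the last fields) and the function names are assumptions for illustration, and the frame arithmetic assumes 30 frame non drop frame code; real lists carry more fields and variations than this handles.

```python
def tc_to_frames(tc, fps=30):
    """Convert HH:MM:SS:FF (non drop frame) to a frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def parse_edl_event(line, fps=30):
    """Split one event line into source and record time codes.

    Assumes the last four whitespace-separated fields are
    source in, source out, record in, record out.
    """
    fields = line.split()
    src_in, src_out, rec_in, rec_out = fields[-4:]
    return {
        "event": fields[0],
        "reel": fields[1],
        "source": (src_in, src_out),
        "record": (rec_in, rec_out),
        "source_frames": tc_to_frames(src_out, fps) - tc_to_frames(src_in, fps),
        "record_frames": tc_to_frames(rec_out, fps) - tc_to_frames(rec_in, fps),
    }


# Hypothetical event: five seconds from reel TAPE01 placed at program start.
SAMPLE = "001  TAPE01  V  C  01:00:10:00 01:00:15:00 00:00:00:00 00:00:05:00"
event = parse_edl_event(SAMPLE)
```

For a straight cut like this one, the source and record durations should match, which makes a handy sanity check when reading a list by hand.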

Life with time code was made a little tougher for those in the Americas (and other NTSC based countries). When color was approved, television was already in overwhelming demand, and millions of black and white sets had been sold by 1953. A competing system from CBS, the CBS field sequential system, had earlier been approved by the Federal Communications Commission, but that approval was withdrawn because it would have made obsolete those millions of sets already in use. The RCA system allowed for compatibility with black & white sets already in the marketplace and was ultimately approved in December, 1953.

In order to solve the compatibility issue in 1953, RCA adjusted the existing television signal to make room for the color information. As part of this, the frame rate had to be slightly reduced to 29.97 FPS. As a result of this change, two time codes for NTSC countries were developed and they are still with us today. Drop Frame (DF) Time Code runs at the same speed as real time. Non Drop Frame (NDF) Time Code runs at the video speed of 29.97 FPS. Thus at the end of one hour on a DF time code recording exactly 60 minutes will have elapsed on a clock. When NDF time code reaches one hour it will indicate 60 minutes but the actual time elapsed is 60 minutes and 3.6 seconds on a clock. If you’re interested in the math behind all this, there is a good explanation here.
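The 3.6 second figure can be checked with a little arithmetic. The sketch below assumes the exact NTSC rate of 30000/1001 frames per second (which rounds to the 29.97 quoted above); the function name is made up for illustration.

```python
from fractions import Fraction

NTSC_FPS = Fraction(30000, 1001)  # exact rate; roughly 29.97
NOMINAL_FPS = 30                  # NDF code counts 30 frames per displayed second


def ndf_drift_seconds(displayed_seconds):
    """Seconds by which the wall clock is ahead of an NDF time code display."""
    frames = displayed_seconds * NOMINAL_FPS  # frames counted by the code
    real_seconds = frames / NTSC_FPS          # time those frames really took
    return real_seconds - displayed_seconds
```

Calling `ndf_drift_seconds(3600)` gives `Fraction(18, 5)`, or 3.6 seconds per displayed hour. At 30 frame numbers per second that is 108 frame numbers, which is the amount drop frame code has to skip each hour to stay on clock time.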

Many new to dealing with time code think video frames are dropped. In fact, no video frames are ever changed; it’s just a matter of how they are timed (or addressed). The same video can be numbered in DF or NDF time code and the video does not change. But because Drop Frame is adjusting for actual clock time, it skips certain time code numbers: frame numbers 00 and 01 at the start of every minute, except every tenth minute.
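One way to see that only the numbering changes is to label a running frame count with drop frame addresses. The following is a sketch of the commonly used conversion for 29.97 FPS material, not code taken from any particular product; the function name and the semicolon separator are illustrative choices.

```python
def frames_to_drop_frame(frame_count):
    """Label the Nth video frame (counting from 0) with a DF address.

    Two frame numbers are skipped at the start of each minute,
    except minutes 00, 10, 20, 30, 40 and 50. No video is discarded.
    """
    frames_per_10_min = 17982  # 10 * 60 * 30 minus 9 minutes * 2 skipped numbers
    frames_per_min = 1798      # 60 * 30 minus 2 skipped numbers

    tens, rem = divmod(frame_count, frames_per_10_min)
    skipped = 18 * tens        # numbers skipped in completed ten minute blocks
    if rem >= 2:
        skipped += 2 * ((rem - 2) // frames_per_min)

    n = frame_count + skipped  # position in the plain 30 FPS numbering
    ff = n % 30
    ss = (n // 30) % 60
    mm = (n // 1800) % 60
    hh = (n // 108000) % 24
    return f"{hh:02d}:{mm:02d}:{ss:02d};{ff:02d}"
```

Frame 1799 is labeled `00:00:59;29` and the very next frame, 1800, is labeled `00:01:00;02`; the addresses `;00` and `;01` of that minute are simply never used. After 107,892 frames the label reads `01:00:00;00`, matching one hour on a clock.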

DF time code is used in situations where time of day must be correct, as in timing the length of a broadcast program so it will not exceed the time allotted and leaves room for the next program. Signals from Global Positioning System satellites are converted to DF time code in television networks and stations to link all facilities to the same time of day. Cueing devices set to begin recording or playing back take their instructions from this master clock. However, in production and post production work, no such need exists. It is easier in the editing room to work with continuous time code, so NDF time code is generally used throughout the process. At the end of the editing phase of a television program, the final output is striped with DF time code. Drop frame time code is indicated by changing the colons to semi-colons or by a single dot before or after the frame counter, depending on the manufacturer of the time code apparatus.

PAL countries fared much better in the time code department. Because their broadcast standards were not completely established before color became a factor, they were able to avoid many of the problems associated with NTSC and institute the Phase Alternating Line (PAL) system (invented in Germany in 1962) reasonably easily, for the most part ignoring compatibility. The first broadcasts didn’t begin until 1967, more than a decade after the NTSC system began in the U.S. As each country signed on its PAL color system, it maintained its original black and white system and in some cases continued to use it alongside the new PAL technology for years (for example, the United Kingdom did not retire its 405 line monochrome system until 1985).

When time code became a consideration, no adjustment was needed because the designers of the PAL system set out to not repeat the problems of the American system. Frame rates from the beginning had used an exact 25 FPS rate and this was maintained in PAL. The result is that PAL needs no Drop Frame category.

Today, even with more frame rates and resolutions for video professionals to juggle, it’s nice to know one element remains constant and reliable. There have been attempts to improve on the basic system. Wireless time code systems, for example, have freed camera and sound to roam independently on the set but still be locked as solidly as if they were on a cable connection. However, other methods such as iPads and iPhones with time code apps connected to the code generator via WiFi have a lot of latency and defeat the purpose of the system.

That is not to say there are not contenders for the throne. Computer programs now exist that will sync audio and multiple cameras. As they progress they may well give time code a run for its money. But currently, those programs don’t work for everything. For now, as one filmmaker said, “If it ain’t broke, don’t fix it.”
