We often talk about how important it is to get your exposures correct, and rightly so; correct exposure is fundamental to the creation of good images. There’s no question. It’s Page 1, Day 1 of Cinematography. We always keep a light meter with us to ensure that what we’re committing to film is… as intended. Today we have powerful new tools, like false color and waveforms, to check our values as well. We can easily make sure, even just by looking at the monitor, that our brightness and contrast are exactly where we want them to be.

But we don’t live in the Black & White era anymore. With few exceptions, color is integral to our work. It’s easy to think of production design and costumes as what gives an image its color, and today many seem to believe that color is simply a few dials you spin in Resolve to get the look you want (it isn’t). But there’s something as fundamental as exposure that is vital to the modern cinematographer’s knowledge base: understanding the color of your lights.

Before the ’90s, most lighting was basically Tungsten or Daylight. HMIs didn’t really become common on sets until the ’70s and ’80s (with Carbon Arcs coming well before them), and we didn’t use much Fluorescent lighting until Kino Flo came along later, because fluorescents gave off a nasty green or magenta shift and tended to flicker. So for the longest time we basically had Amber and Blue as our colors. You could throw a corrective gel on a light to match one source to another relatively simply, or add “Party Gels” to make those lights look Red or Blue or Green or what have you, but generally the math was simple: “I’m shooting on Daylight stock, so Tungsten will look Amber and Daylight will look White.” Obviously that’s oversimplified, but my point is that things have gotten much more complicated today. There are hundreds of manufacturers, from cinema lines to household names, making lights, and now that cameras are so sensitive, we can use almost any kind of light to expose our images. This means we have to be hyper-vigilant about the quality of these lights.

It’s easy to think, “Oh, this light says it’s 5600K, Daylight is 5600K, so I’ll set my camera to 5600K and we’re off to the races; everything will match.” But that’s making a lot of assumptions, isn’t it? Is your current sunlight 5600K? Is it cooler, or perhaps warmer? Is the bulb accurate to what it says on the box, or is it off by a few hundred Kelvin and a little green or magenta? How would you know? There’s no “False Color” for color temperature, and even if you catch it on set (probably a little late, wouldn’t you say?), how would you know what to change to fix it? What if you have to match the sun, or a different source, to the lights you brought with you? Are you just going to keep guessing with gels until it matches?

Prior to LEDs (and even fluorescents) you could use something like a Minolta color meter to get a reading of the color temperature of any given source, and then, after consulting your gels and doing some math, you could make things match. You could even assume that Tungsten bulbs were all pretty much the same color temperature and gave off the same spectrum of light because of… science. The problem today is that even if a light is accurate on the Kelvin scale (Amber to Blue), it can vary wildly “north to south,” looking green or magenta, and a traditional color meter isn’t designed to catch that reliably.
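That “math” is traditionally done in mireds (micro reciprocal degrees, 1,000,000 ÷ Kelvin), because gels are rated by how many mireds they shift a source rather than by a fixed number of Kelvin. Here’s a minimal Python sketch of the arithmetic; the function names are mine, purely for illustration, and it deliberately leaves out real gel values, which you’d still look up in your manufacturer’s swatch book.

```python
def mired(kelvin: float) -> float:
    """Convert a color temperature in Kelvin to mireds (1,000,000 / K)."""
    return 1_000_000 / kelvin


def mired_shift(source_k: float, target_k: float) -> float:
    """Mired shift a gel would need to turn source_k into target_k.

    Positive means warming (orange, CTO-style); negative means cooling (blue, CTB-style).
    """
    return mired(target_k) - mired(source_k)


def cct_after_shift(source_k: float, shift: float) -> float:
    """Color temperature you end up with after applying a given mired shift."""
    return 1_000_000 / (mired(source_k) + shift)


if __name__ == "__main__":
    # Warming a 5600K source down to 3200K needs a positive (orange) shift.
    print(f"5600K -> 3200K: {mired_shift(5600, 3200):+.1f} mireds")
    # The same 300K error at two different points on the scale:
    print(f"3200K vs 3500K: {abs(mired_shift(3200, 3500)):.1f} mireds")
    print(f"5600K vs 5900K: {abs(mired_shift(5600, 5900)):.1f} mireds")
```

The last two lines show why being “a few hundred Kelvin off” matters more at the warm end: the same 300K error works out to roughly three times as many mireds around 3200K as around 5600K.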

Going back a bit, the International Commission on Illumination (it’s French, hence “CIE,” for Commission Internationale de l’Éclairage) came up with the Color Rendering Index, or CRI. This was a method of testing the accuracy of a given light source, giving it a score of up to 100 based on how it compares to a reference illuminant: Daylight, or a “Black Body” radiator, which basically just means a theoretical perfect object that absorbs all light and changes color as it gets hot (like a Tungsten filament, and also where we get “Kelvin” as a measurement: low heat is orange, high heat is blue, right?). CRI looked at 8 low-saturation color samples from the Munsell color system to determine whether or not a light reproduced color accurately, using human vision as the intended observer. More saturated samples were added over the years (many meters report R1 through R15), but even so, those extra samples aren’t included in the general average (Ra). This is fine, but CRI was never intended to evaluate more modern lights like LEDs, and it doesn’t take into account the camera as the viewer. It’s also a relatively broad test that manufacturers can “game,” saying “See, we have a really high CRI!” when in reality the light only tests well on those 8 patches. LEDs (and some other lights) can have really narrow bands of color that show up as “spikes” on a spectrum graph, and the CRI test won’t catch them. In other words, CRI is probably best used for home applications, like evaluating whether a bulb will look good in your kitchen. Plus, CRI dates back to the 1960s (last revised in 1995), so it was due for an update anyway.
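To put some numbers on “low heat is orange, high heat is blue,” here’s a small Python sketch of Planck’s law for an ideal black body, comparing how much blue (450nm) it emits relative to red (650nm) at a few temperatures. The 450nm/650nm choice is an arbitrary assumption for illustration, and real filaments and real daylight only approximate a black body.

```python
import math

# Physical constants (SI units)
H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_radiance(wavelength_nm: float, temp_k: float) -> float:
    """Spectral radiance of an ideal black body (Planck's law), in W / (sr * m^3)."""
    lam = wavelength_nm * 1e-9  # nanometers -> meters
    return (2 * H * C**2 / lam**5) / math.expm1(H * C / (lam * K_B * temp_k))


def blue_to_red_ratio(temp_k: float, blue_nm: float = 450, red_nm: float = 650) -> float:
    """How much blue a black body emits relative to red at a given temperature."""
    return planck_radiance(blue_nm, temp_k) / planck_radiance(red_nm, temp_k)


if __name__ == "__main__":
    for t in (2000, 3200, 5600, 10000):
        print(f"{t:>6} K  blue/red = {blue_to_red_ratio(t):.2f}")
```

At 3200K the black body puts out only about 0.3 as much blue as red; by 5600K the two are roughly even, and above that blue wins.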

So then we’ve got TLCI, the Television Lighting Consistency Index. It tests the accuracy of lights against a standard color chart like the ones you can get at Filmtools, great, but it uses a model of a 3-CCD camera as the “viewer” to determine accuracy. From the EBU:

> In November 2012 the EBU Technical Committee approved a Recommendation designed to give technical aid to broadcasters who intend to assess new lighting equipment or to re-assess the colorimetric quality of lighting in their television production environment. This recommendation was developed by the LED Lights Project Group, under the EBU Strategic Programme on Beyond HD.
>
> Rather than assess the performance of a luminaire directly, as is done in the Colour Rendering Index, the TLCI mimics a complete television camera and display, using only those specific features of cameras and displays which affect colour performance.

Okay, cool, but as mentioned, it uses a 3-chip camera (which splits the light with a prism) as its observer, and these days we’re almost all using single-chip cameras. PVC’s own Art Adams wrote an article a while back testing whether this mattered and seemed to conclude that, functionally, it didn’t. Even so, the same thought seems to have occurred to the Academy, which in 2016 came up with SSI, the Spectral Similarity Index:

> The Spectral Similarity Index, or SSI, addresses issues with existing indices such as the Color Rendering Index (CRI) and the Television Lighting Consistency Index (TLCI) that make them inappropriate to describe lighting for digital cinema cameras — issues that have become more evident with the emergence of solid-state lighting (SSL) sources such as LED’s.
>
> SSI is not based on human vision, nor any particular real or idealized camera, and does not assume particular spectral sensitivities. Rather, it quantitatively compares the spectrum of the test light with that of a desired reference light source, expressing the similarity as a single number on a scale up to 100 (which would indicate a perfect match). High values indicate predictable rendering by most cameras (as well as “quality” of visual appearance); low values may produce good colors with a particular camera but not with others. SSI is useful for cinematography, television, still photography, and human vision.

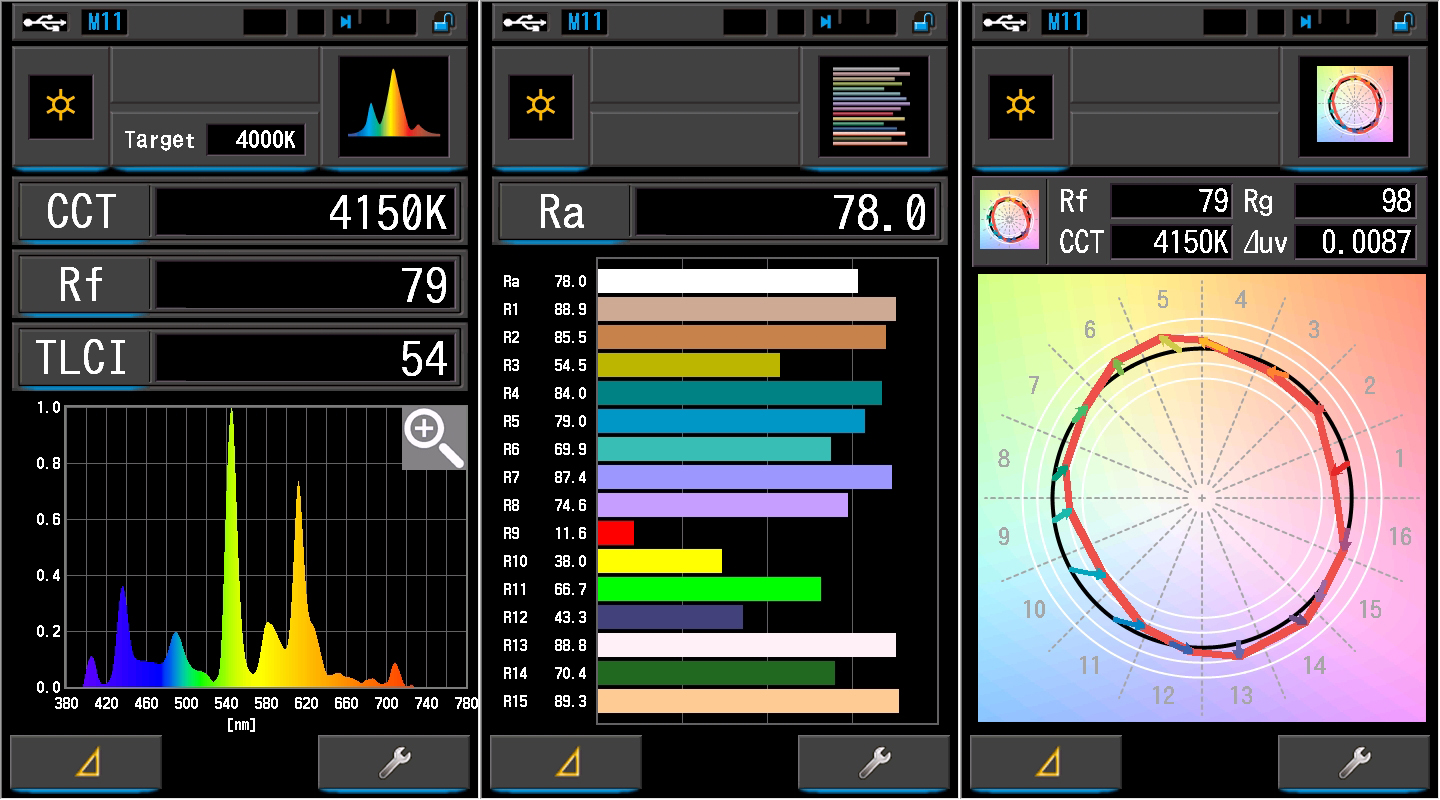

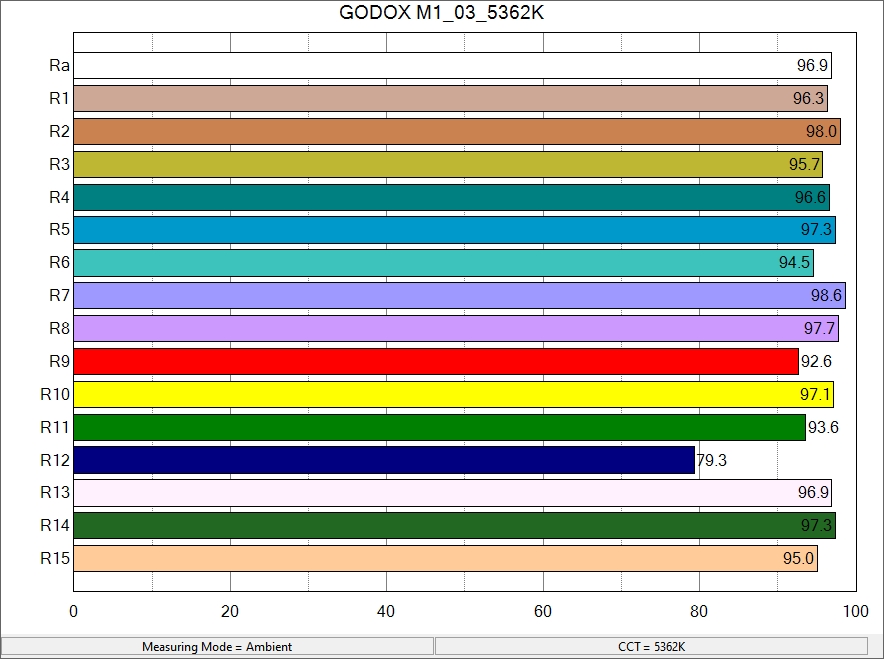

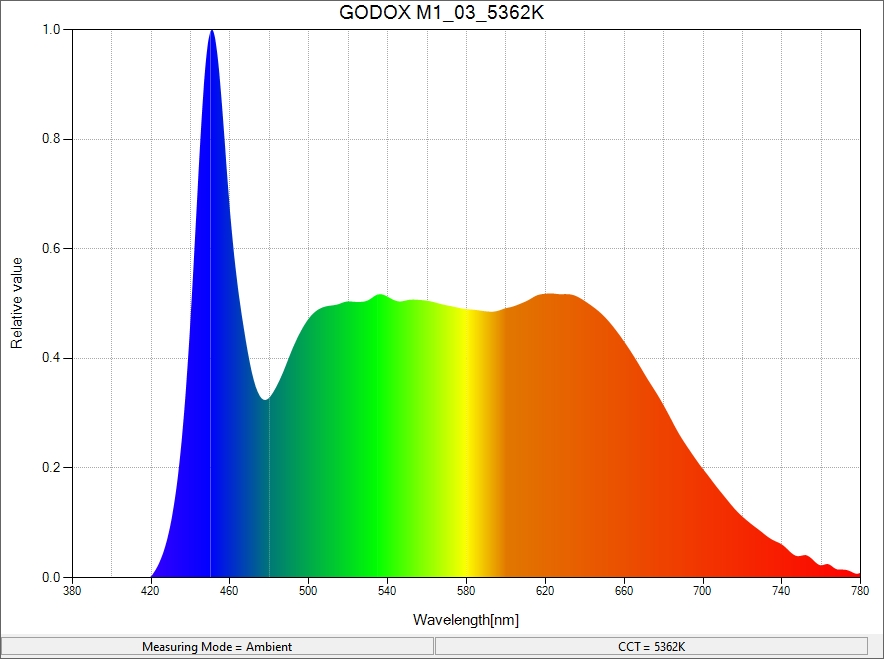

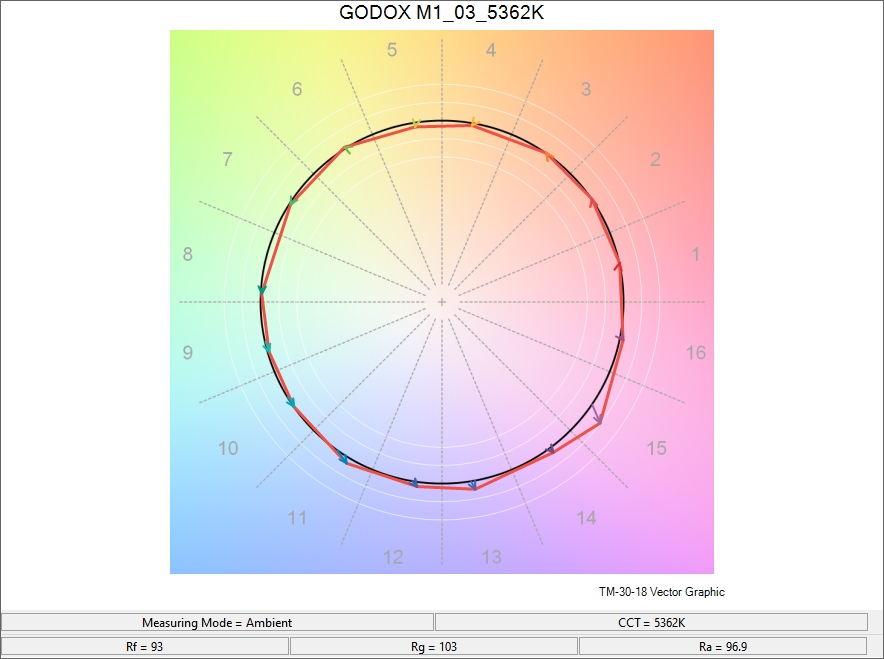

Excellent. Now we have ways not only to evaluate a light source, but to match it more accurately to other, known sources. Between TLCI and SSI one can be quite sure of a light’s fidelity, and we even have TM-30-18 (“18” being the most current version of the standard at the time of this writing), which basically shows a light’s accuracy as a circle over a hue chart; if it lines up perfectly with the reference circle, you’ve got a “perfect” light. It shows you where the skews are by changing the shape of the measured circle, with arrows showing where the light should be versus where it is. It’s pretty nifty, and it uses 99 reference samples instead of CRI’s paltry 15, so it’s far more nuanced. Using the example of my kitchen light from the top of the article, we can see that the CRI is nearly in the acceptable range (80 being “more than acceptable for most uses”), but the TLCI scores an appalling 54 and the TM-30 chart shows us how skewed it is.
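For a sense of how that CRI number is built, and why a decent score can hide problems, here’s a Python sketch of just the final scoring step of the CIE method: each test sample gets R_i = 100 - 4.6 × ΔE_i, and the headline Ra is simply the average of the first 8. It assumes you already have the color differences (computed in the CIE 1964 U*V*W* space after a chromatic adaptation step, which is the heavy lifting I’m skipping), and the numbers in the example are made up, not measurements of any real light.

```python
from statistics import mean


def special_cri(delta_e: float) -> float:
    """CIE special color rendering index for one test sample: R_i = 100 - 4.6 * dE_i.

    dE_i is the color difference between the sample under the test source and under
    the reference illuminant. Large errors push R_i below zero.
    """
    return 100 - 4.6 * delta_e


def general_cri(delta_es: list[float]) -> float:
    """General CRI (Ra): the plain average of R1..R8 only; anything after is ignored."""
    if len(delta_es) < 8:
        raise ValueError("need color differences for at least the first 8 samples")
    return mean(special_cri(de) for de in delta_es[:8])


if __name__ == "__main__":
    # Hypothetical color differences, NOT measurements of a real fixture:
    # small errors on the 8 pastel samples, a big miss on saturated red (R9).
    delta_es = [3.0, 2.5, 4.0, 3.5, 3.0, 4.5, 3.0, 5.0, 15.0]
    print("Ra =", round(general_cri(delta_es), 1))
    print("R9 =", round(special_cri(delta_es[8]), 1))
```

With those made-up numbers, Ra comes out around 83.6, a respectable-looking score, while R9 (saturated red) sits at 31 and never touches the average.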

So the big question, obviously, is… how do I measure any of this? Well, you’ve basically got one option, and it’s the Sekonic C800 (or the C7000, but that has features you wouldn’t necessarily need as a Cinematographer and it’s more expensive). At $1,600 the C800 isn’t cheap, but it’s also the only tool that can illuminate (ha) this massive blind spot we all have when it comes to lighting. Name one time you’ve used only one type of lighting fixture on set. Hell, you can’t even buy regular tungsten bulbs in California anymore, so your practicals are likely going to be LED. At any given moment you may have anywhere from 3 to 15 different lights affecting your scene! Of course you can eyeball it, but that only works so well, and wouldn’t you rather be sure?

You’d be hard-pressed to find a Cinematographer alive who doesn’t rely on a light meter or some other exposure evaluation tool, so why would you leave the accuracy of your lights up to chance? Setting an LED panel to “5600K” will rarely give you exactly 5600K. Good ones get close, obviously, but there’s no need to guess. And of course, you can use your camera’s white balance function with a gray card to measure a given light’s color temperature (and in some cases even its tint bias), but what if you have multiple sources? Are you going to do that with every one of them?

The wonderful thing about the C800, and what made me buy one, is that you can measure all of your lights, compare them, store that info (even save the graphs to a computer), and reference it later. Not only do you get detailed readouts, spectra, and graphs for the lights you’re measuring, giving you a solid understanding of what each light is doing to your image, but the thing even tells you which gels to use to match one light to another! You can compare 2 or 4 lights, and with the 4-light feature you just select which source is the “hero” fixture and it’ll tell you which gels will match the other 3 to it. You can also get x,y chromaticity coordinates from a measurement and enter them into, say, a SkyPanel to match the light exactly in just a few twirls of the dial. And of course, knowing exactly what color temperature a given light is gives you so much more power when lighting a scene. Sure, you can dial “3200K” into the back of your LED panel, but until you measure it, how would you know that’s what’s actually coming out the other side? In a lot of cases it can be 200 or 300 Kelvin off, or even worse. Instead of a room that looks lit by a single source, you’ll get one that looks lit by several, ever so slightly different ones. And hey, maybe your head won’t notice, but your heart will.
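Those x,y numbers are CIE 1931 chromaticity coordinates, and they describe both axes at once: Amber to Blue and green to magenta. As a rough sketch of how Kelvin and x,y relate, here’s Python for two published approximations: Kim et al.’s cubic fit of the black body curve (Kelvin to x,y, for points exactly on the curve) and McCamy’s formula (x,y back to an approximate Kelvin). This is my own illustration, not what the C800 or a SkyPanel does internally, and McCamy’s formula collapses any green/magenta offset into a single Kelvin number, which is exactly the information you lose by dialing in Kelvin alone.

```python
def planckian_xy(cct: float) -> tuple[float, float]:
    """Approximate CIE 1931 (x, y) of a black body at `cct` Kelvin.

    Cubic-spline fit by Kim et al., valid from 1667 K to 25000 K.
    """
    if not 1667 <= cct <= 25000:
        raise ValueError("approximation only valid for 1667-25000 K")
    t = cct
    if t <= 4000:
        x = -0.2661239e9 / t**3 - 0.2343589e6 / t**2 + 0.8776956e3 / t + 0.179910
    else:
        x = -3.0258469e9 / t**3 + 2.1070379e6 / t**2 + 0.2226347e3 / t + 0.240390
    if t <= 2222:
        y = -1.1063814 * x**3 - 1.34811020 * x**2 + 2.18555832 * x - 0.20219683
    elif t <= 4000:
        y = -0.9549476 * x**3 - 1.37418593 * x**2 + 2.09137015 * x - 0.16748867
    else:
        y = 3.0817580 * x**3 - 5.87338670 * x**2 + 3.75112997 * x - 0.37001483
    return x, y


def mccamy_cct(x: float, y: float) -> float:
    """Estimate correlated color temperature from CIE 1931 (x, y) using McCamy's formula."""
    n = (x - 0.3320) / (0.1858 - y)
    return 449 * n**3 + 3525 * n**2 + 6823.3 * n + 5520.33


if __name__ == "__main__":
    for kelvin in (3200, 5600, 6500):
        x, y = planckian_xy(kelvin)
        print(f"{kelvin} K -> x={x:.4f}, y={y:.4f} -> back to {mccamy_cct(x, y):.0f} K")
```

The round trip lands within a few tens of Kelvin, which is about as good as these shortcuts get; a spectrometer-based meter works from the full measured spectrum instead.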

Imagine you walk onto your set, say a classic corporate interview, measure the ambient color temperature, and set your LED panel accordingly, measuring it as you adjust (or gelling it as necessary if it’s not Bi-Color or RGBW), and it’s spot on. You’re done. Or you’re walking through the store and can meter every available light to judge which one is best for your use without falling prey to marketing BS. Maybe you want to replicate a color of light, so you meter it and save it into the unit’s memory so you can bring it up later and match something else to it. Heck, I walked around my neighborhood and metered various patches of sun and shade toward the end of golden hour just to see how the light changed and what that looked like scientifically. Now I can easily replicate that with no guesswork. As G.I. Joe said, knowing is half the battle.

So, to wrap this up, I highly recommend getting a modern color meter if you’re planning on taking Cinematography seriously as a career, or if you’re already in the trenches and just didn’t realize there was this whole hidden world of variability in lighting that you now have to be aware of. If we want to fight against the whole “fix it in post” movement, let’s make sure they don’t have to, eh?