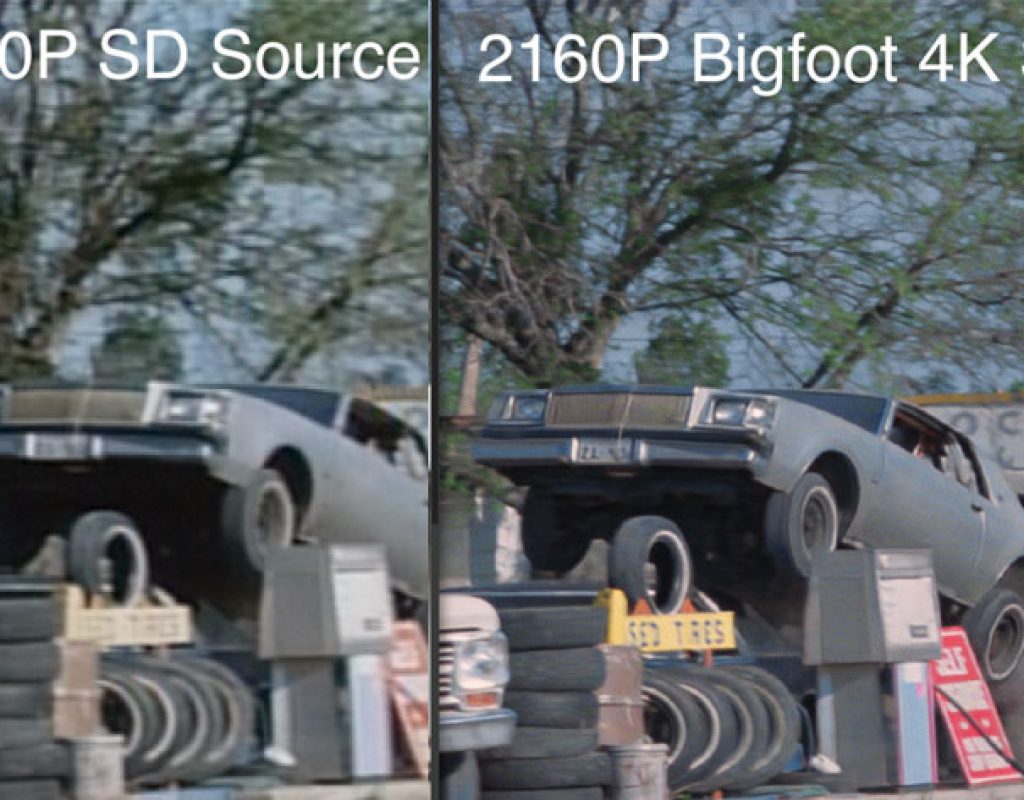

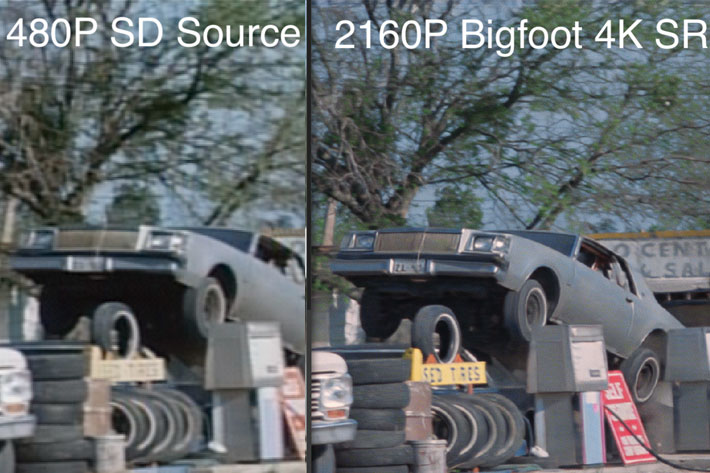

A new production-assisted AI technique called Bigfoot super resolution, from VideoGorillas, converts films from native 480p to 4K by using neural networks to predict missing pixels.

While the industry keeps moving to higher resolutions, with 4K and 8K now used in production, there is continued interest in revisiting older content that does not meet today’s visual standards or audience expectations. To give those audiences a more immersive viewing experience, the industry has relied on the one tool available for bringing older content up to current resolutions: remastering. Remastering has become common practice, letting audiences revisit older favorites in a modern viewing experience.

As resolution increases, though, remastering has a problem: artifacts. Studios have to commit additional resources and accept longer lead times to produce content of reasonable quality, which costs both time and money. Lots of money! Now a new solution promises a viable alternative: VideoGorillas is developing an AI-enhanced approach that aims to exceed visual expectations at lower cost.

A new solution: Bigfoot Frame Compare

Los Angeles-based VideoGorillas, a developer of state-of-the-art media technology incorporating machine learning, neural networks, visual analysis, object recognition, and live streaming, is no stranger to the industry, having worked with major Hollywood studios. In September 2018 the company announced the availability of its Bigfoot Frame Compare product, which aims to redefine the way film, television, and post-production companies manage assets, finishing, remastering, and preservation projects.

Bigfoot is a scalable, lightweight proprietary technology that leverages VideoGorillas’ patented computer vision/visual analysis, Frequency Domain Descriptor (FDD), and machine learning technology. It is designed to automate two labor-intensive manual processes: the conform process (which matches an original frame of film to the final edited work) and the compare process (which finds unique or common frames between different film cuts). It does so by finding like “interest points” common across a series of images or frames of film.
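To make the idea of matching frames by a compact descriptor concrete, here is a minimal sketch. It is not VideoGorillas’ patented FDD or its interest-point matching, neither of which is described in detail here. As a stand-in it uses a global descriptor built from low-frequency DCT coefficients, and the function names `dct_descriptor` and `best_match` are hypothetical.

```python
import math


def dct_descriptor(frame, keep=3):
    """Low-frequency 2D DCT-II coefficients of a square grayscale frame.

    Illustrative stand-in only; this is NOT the proprietary FDD.
    The DC term is dropped so a uniform brightness shift is ignored.
    """
    n = len(frame)
    desc = []
    for u in range(keep):
        for v in range(keep):
            if u == 0 and v == 0:
                continue  # skip DC (average brightness)
            total = 0.0
            for x in range(n):
                for y in range(n):
                    total += (frame[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                              * math.cos((2 * y + 1) * v * math.pi / (2 * n)))
            desc.append(total)
    return desc


def best_match(query, candidates, keep=3):
    """Index of the candidate whose descriptor is closest to the query's."""
    q = dct_descriptor(query, keep)

    def distance(frame):
        d = dct_descriptor(frame, keep)
        return math.sqrt(sum((a - b) ** 2 for a, b in zip(q, d)))

    return min(range(len(candidates)), key=lambda i: distance(candidates[i]))


# Toy 4x4 "scans" standing in for scanned film frames.
horizontal_ramp = [[c * 10 for c in range(4)] for _ in range(4)]
vertical_ramp = [[r * 10 for _ in range(4)] for r in range(4)]
checker = [[((r + c) % 2) * 30 for c in range(4)] for r in range(4)]
scans = [horizontal_ramp, vertical_ramp, checker]

# A reference-cut frame: the vertical ramp, printed slightly brighter.
reference = [[p + 5 for p in row] for row in vertical_ramp]
match_index = best_match(reference, scans)
```

In this toy case the brightened reference frame still matches the vertical-ramp scan, because dropping the DC term makes the descriptor insensitive to a uniform brightness change. Handling repositioned, flopped, or blown-up footage would need considerably more than this sketch offers.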

In November 2018, VideoGorillas announced that “The Other Side of the Wind,” the final, previously unfinished film by iconic filmmaker Orson Welles, which Netflix released theatrically and online on November 2, 2018, used VideoGorillas’ artificial intelligence (AI) powered Bigfoot Frame Compare technology in post-production.

Analyzing 12 million frames per second

“The Other Side of the Wind” presented an unusual challenge to the filmmakers and VideoGorillas, because it was in many ways a hybrid of a film restoration and new release project. This resulted in Bigfoot being used by the producers in an equally unusual way: as a business intelligence tool. With more than 100 hours of 40-year-old footage in a range of formats, it was essential that the producers and editors quickly gain an understanding of what footage they had before kicking off the editing process.

https://youtu.be/nMWHBUTHmf0

“During the completion of ‘The Other Side of the Wind’ we were faced with a very unique challenge,” said producer Filip Jan Rymsza. “We had a 3.5-hour reference cut assembled from various sources, which had to be conformed back to 100 hours of scans, which consisted of 16mm and 35mm negatives and 35mm prints. Traditionally, an assistant editor would have over-cut the reference picture, but some of it was blown up from 16mm to 35mm, or blown up and repositioned, or flopped, or printed in black-and-white from color negative, which made it very difficult to match by eye. Without VideoGorillas’ AI technology finding these precise matches this would’ve taken a team of assistant editors several months. VideoGorillas completed this massive task in two weeks. They were a crucial partner in this extraordinary effort.”

Scanning the amassed film footage from “The Other Side of the Wind” resulted in more than 9 million frames. Since Bigfoot was initially CPU-based software, VideoGorillas developed an experimental GPU version that enabled one local machine in its Los Angeles office to do a job that would normally take 200-300 servers. After two days of ingesting the footage, it took only three days of GPU time to analyze the film, and at peak load Bigfoot was analyzing 12 million frames per second.
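A back-of-the-envelope check shows one way these figures could fit together. It rests on assumptions the article does not state: that the 3.5-hour reference cut ran at 24 frames per second, that every reference frame was compared against every scanned frame, and that “12 million frames per second” counts frame-to-frame comparisons sustained at the peak rate.

```python
# All values below marked "assumed" are illustrative, not from the article.
FPS = 24                                   # assumed film frame rate
reference_hours = 3.5                      # reference cut length (from the producer's quote)
scanned_frames = 9_000_000                 # "more than 9 million frames"
peak_comparisons_per_second = 12_000_000   # assumed meaning of the peak figure

reference_frames = int(reference_hours * 3600 * FPS)
pairwise_comparisons = reference_frames * scanned_frames  # assumed brute-force matching
seconds = pairwise_comparisons / peak_comparisons_per_second
days = seconds / 86_400

print(f"reference frames: {reference_frames:,}")
print(f"pairwise comparisons: {pairwise_comparisons:,}")
print(f"days at peak rate: {days:.3f}")
```

Under those assumptions the estimate comes to a little over two and a half days, which is in the same range as the reported three days of GPU time. It is only a plausibility check, not a description of how Bigfoot actually schedules its work.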

Bigfoot super resolution

Now VideoGorillas has another technology that incorporates AI techniques built on NVIDIA CUDA-X and Studio Stack. By integrating GPU-accelerated machine learning, deep learning, and computer vision, its techniques allow studios to achieve higher visual fidelity and increased productivity when remastering. The innovation is a new production-assisted AI technique called Bigfoot super resolution. It converts films from native 480p to 4K by using neural networks to predict the missing pixels at very high quality, so the original content appears almost as if it had been filmed in 4K.
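To give a sense of how many pixels have to be predicted, the sketch below counts pixels under assumed frame sizes (854x480 for 480p and 3840x2160 for 4K; the article gives neither) and implements plain bilinear interpolation. Bilinear interpolation is a conventional baseline of the kind that learned super resolution is meant to improve on. It is not Bigfoot’s method, and `upscale_bilinear` is a hypothetical helper name.

```python
# Assumed frame sizes; the article only says "480p" and "4K".
SD_W, SD_H = 854, 480
UHD_W, UHD_H = 3840, 2160

sd_pixels = SD_W * SD_H
uhd_pixels = UHD_W * UHD_H
pixel_ratio = uhd_pixels / sd_pixels          # output pixels per source pixel
new_fraction = 1 - sd_pixels / uhd_pixels     # share of output with no source sample


def upscale_bilinear(img, out_h, out_w):
    """Classical bilinear upscaling of a 2D grayscale image (corner-aligned).

    A traditional baseline for comparison, not a neural network.
    """
    in_h, in_w = len(img), len(img[0])
    out = []
    for i in range(out_h):
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        fy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            fx = x - x0
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


small = [[0, 10],
         [20, 30]]
large = upscale_bilinear(small, 3, 3)
```

With these assumed sizes the 4K frame holds roughly 20 times as many pixels as the 480p frame, so about 95 percent of the output has no direct source sample. Interpolation can only average neighboring values to fill that gap, which is why it tends to look soft at large scale factors.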

https://youtu.be/4SbqYE77V0Y

“Bigfoot Super Resolution is an entirely new approach to upscaling powered by NVIDIA RTX technology with a focus on delivering levels of video quality and operational efficiencies currently not achievable using traditional methods. We are very excited to bring this solution to market and look forward to helping our studio and broadcast partners unlock incremental value from their content libraries,” says Jason Brahms, CEO of VideoGorillas.

As VideoGorillas continues to refine this technique for release, they aim to provide broadcasters and major film studios a superior way to remaster their content libraries while preserving original artistic intent.

Remastering while preserving artistic intent

“We are creating a new visual vocabulary for film and television material that’s based on AI techniques. We’re working to train neural networks to remove a variety of visual artifacts, as well as understand the era, genre, and medium of what we are remastering. Using these neural networks allows us to increase perceptual quality and preserve the original look and feel of the material,” says Alex Zhukov, CTO at VideoGorillas.

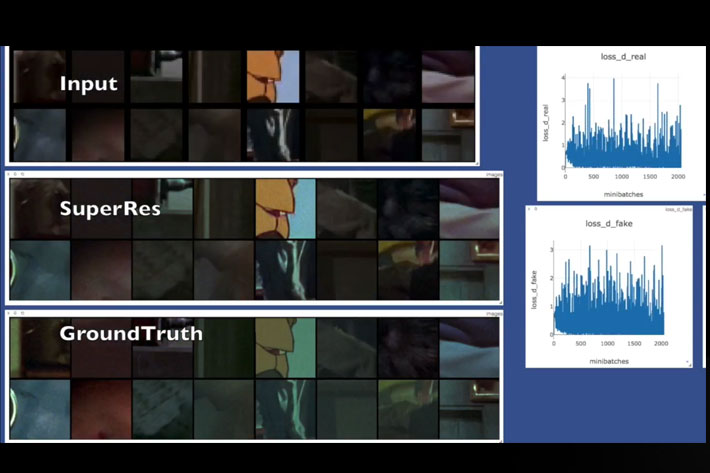

The research team at VideoGorillas trains a unique recurrent neural network (RNN) for each project, accelerated by NVIDIA GPUs. The network learns the characteristics of titles created during the same era, in the same genre, using the same method of production. New content passed through this network then keeps the look and feel of that era and genre, preserving artistic intent.

https://youtu.be/6iOpAMjFjEw

A generative adversarial network (GAN) removes unwanted noise and artifacts in low-resolution areas, replacing them with newly synthesized, upscaled image detail. The outcome is a model that can identify when visual loss is occurring.

The networks are trained with PyTorch using CUDA and cuDNN, on millions of images per film. However, loading thousands of images creates a bottleneck in the pipeline, so VideoGorillas is integrating DALI (the NVIDIA Data Loading Library) to shorten training times.

NVIDIA RTX powered AI technologies

A cornerstone of video is the aggregation of visual information across adjacent frames. VideoGorillas uses optical flow to compute the relative motion of pixels between images. This provides frame-to-frame consistency and minimizes any contextual or style loss within the image.
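As a rough illustration of what computing the relative motion of pixels between images means, the sketch below estimates the displacement of one small block between two toy frames by exhaustive block matching. Block matching is a classical motion-estimation technique chosen here for brevity. The article does not say which optical flow algorithm VideoGorillas uses, and the names `block_motion` and `make_frame` are hypothetical.

```python
def block_motion(prev, curr, top, left, size, search):
    """Estimate the (dy, dx) displacement of one block between two frames.

    Tries every offset within +/- search pixels and keeps the one with the
    smallest sum of absolute differences (SAD). A classical stand-in for
    optical flow, not VideoGorillas' implementation.
    """
    height, width = len(curr), len(curr[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            t, l = top + dy, left + dx
            if t < 0 or l < 0 or t + size > height or l + size > width:
                continue  # candidate block would fall outside the frame
            sad = sum(abs(prev[top + r][left + c] - curr[t + r][l + c])
                      for r in range(size) for c in range(size))
            if best is None or sad < best[0]:
                best = (sad, dy, dx)
    return best[1], best[2]


def make_frame(top, left):
    """6x6 black frame with a distinctive 2x2 patch at (top, left)."""
    frame = [[0] * 6 for _ in range(6)]
    patch = [[50, 60], [70, 80]]
    for r in range(2):
        for c in range(2):
            frame[top + r][left + c] = patch[r][c]
    return frame


prev_frame = make_frame(1, 1)
curr_frame = make_frame(2, 3)   # patch moved down 1 row and right 2 columns
motion = block_motion(prev_frame, curr_frame, top=1, left=1, size=2, search=2)
```

Here `motion` comes out as one row down and two columns right, the shift built into the toy frames. A motion field like this, computed for every region, is what lets a model line up detail across adjacent frames so that an enhancement applied to one frame stays consistent in the next.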

This new level of visual fidelity augmented by AI is only possible with NVIDIA RTX, which delivers 200x performance gains over CPUs for the company’s mixed-precision and distributed training workflows. VideoGorillas trains its super resolution networks on RTX 2080 GPUs, and on NVIDIA Quadro for larger-scale projects.

The extra power offered by NVIDIA Quadro enables VideoGorillas to apply super resolution to HDR, high-bit-depth video at up to 8K resolution, as well as achieve faster optical flow performance. The Tensor Cores in RTX GPUs provide a major boost in computing performance over CPUs, making them well suited to the mixed-precision training involved in VideoGorillas’ models.

“With CPUs, super resolution of videos to 4K and 8K is really not feasible – it’s just too slow to perform. NVIDIA GPUs are really the only option to achieve super resolution with higher image qualities,” says Alex Zhukov, CTO of VideoGorillas.

And while on-prem solutions work perfectly for its training needs, VideoGorillas also extends its workloads to the cloud using NVIDIA Kubernetes, running in local data centers as well as on Amazon Web Services and Google Cloud Platform to orchestrate super resolution inference jobs accelerated by NVIDIA Tesla V100 GPUs.
