The beginning of August saw the 44-year-old SIGGRAPH conference (Special Interest Group on Computer GRAPHics and Interactive Techniques) return to Los Angeles for the 11th time, and this year the topic was almost exclusively VR. As this is ProVideo Coalition and not VirtualReality Coalition, I’ll be taking a more filmmaker-centric approach to this recap. That being said, the word of the week was “immersion”, and at this point I’m confident we’re on our way to The Matrix within the next 5 years.

The TL;DR of SIGGRAPH this year was that Virtual Reality and Augmented Reality are here, and plenty of companies are developing tools for them, mostly around the interactivity of the technology. That includes tracking in various forms, solutions for eye strain and fidelity, and feedback simulation. The feedback tools range from simple things like inflatable bags worn on your person all the way up to more complex contraptions, such as a large drum you would, I suppose, hang in your garage to simulate heat, wind, and frosty wind. Or a head-mounted display (HMD) that reads your mind.

VR is in an interesting spot, as there is plenty of potential but not a lot for the consumer to do with it right now. There are a handful of games and even less in the way of passive entertainment, but that raises an interesting dilemma. People keep waving off VR gaming as the obvious application but not the end-game (pun not intended), insisting that there’s a ton more on the horizon. What makes one’s head tilt is that there doesn’t seem to be much else to do with it (HP said at its press event that 76% of VR content is gaming), yet the companies recently diving in seem to believe a whole heap of new experiences that aren’t “silly games” is on the way, mostly in the commercial sector. Consumers care about experiences; commercial folks care about transforming workflows: lower costs, optimized investments in training and simulation, and shorter development cycles. To that end, it’s up to individual companies to decide which (likely proprietary) programs best fit their needs.

The problem with the aforementioned pooh-poohing of VR “games” comes down to definition: what is an interactive experience if not a game? Games are becoming more and more cinematic, with franchises like Metal Gear Solid really pushing the boundaries of acceptable cut-scene length at an hour a pop in Guns of the Patriots, and turning MGS V into a sort of hybrid television show/open-world game. More recently, Fallout 4 eschewed much of its choice-driven roots in favor of a more linear, film-like experience. A perhaps woefully small push toward interactive cinema, as seen in the Summer Camp demo in the VR Village, exemplified this strange, blurry line.

In Summer Camp, you’re a kid in a barn with another kid, getting into some mischief, when your buddy discovers a mystical ball with magic powers and some semblance of intelligence. I won’t spoil the demo since it’s pretty short, but some fun things happen and you’re instructed to complete various simple tasks to advance the story, things like “hide behind those hay bales” or “turn on the faucet”. In gaming, we call this being “on rails”, as opposed to an “open world” experience. Just as in most current games that use this mechanic (the main portion of Rick and Morty: Virtual Rick-ality, for example), if you ignore the requests made of you, the characters will implore you with different voice lines and tactics. The difference here is that the entire experience is on rails. It’s billed as a VR film that you experience, but does that make it an “interactive movie” or a game? What’s the difference?

The big thing the conference showed us is that the future of this new medium is limited only by human imagination, and perhaps a few more years of display tech advancement. That said, it feels as if everyone’s waiting for the other shoe to drop, for someone to come out with “the next big thing” in VR. We see the same thing at more filmmaker-centric conferences, in the form of “our camera enables creatives to be creative by giving them the power of 20K sensors and smaller form factors”. Filmmaking is at a point now where the only thing stopping anyone from putting together a compelling movie is a compelling script. In my estimation, we’re already there in the VR space, since so many of the computer-based technologies that empower traditional filmmakers are shared by VR creators. So let’s dive into the nuts and bolts of what got us there.

First off, the PC. It’s got to be powerful, and usually that means either building your own, often in a big case, or spending a lot of money on a prebuilt machine. Then there’s the giant cable tail you’ve got following you around, jumping under your feet when you least expect it, which at best ruins immersion and at worst rips the HMD straight off your head and snaps your neck in the process.

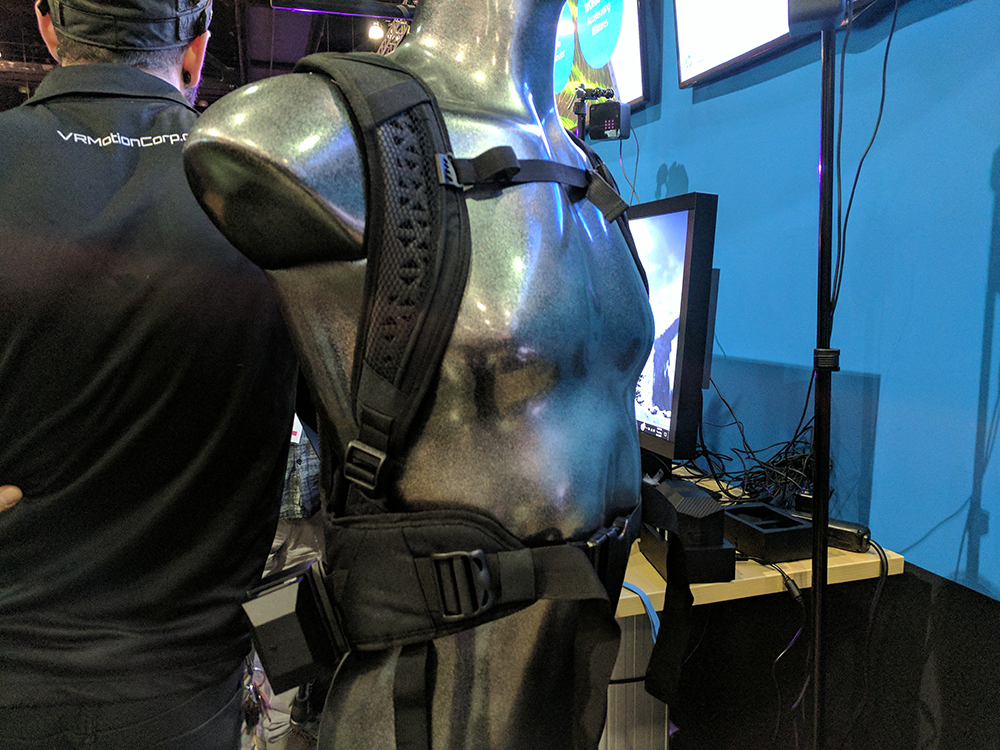

HP has developed a backpack-mounted high-performance PC specifically designed for VR use and development, called the HP Z VR Backpack. At $3,295, the 10 lb computer is kitted out with an NVIDIA Quadro P5200 graphics card with 16GB of memory and an Intel i7 vPro processor, and it is HMD-agnostic. In other words, it’s “just” a fast PC you wear on your back. It’s also got two slots, mounted by your hips, for hot-swappable 75mAh battery packs that complement the internal 50mAh battery; together they last about an hour. With consistent swapping and charging, 4 batteries will last you about 8 hours. You can also detach the computer and dock it, allowing you to use it as a standard PC. Wearing it is a pleasure; it feels more like a full CamelBak than a PC riding around on you. As for the “portability” of VR, this is definitely the next step. Beyond that? Full-body tracking.

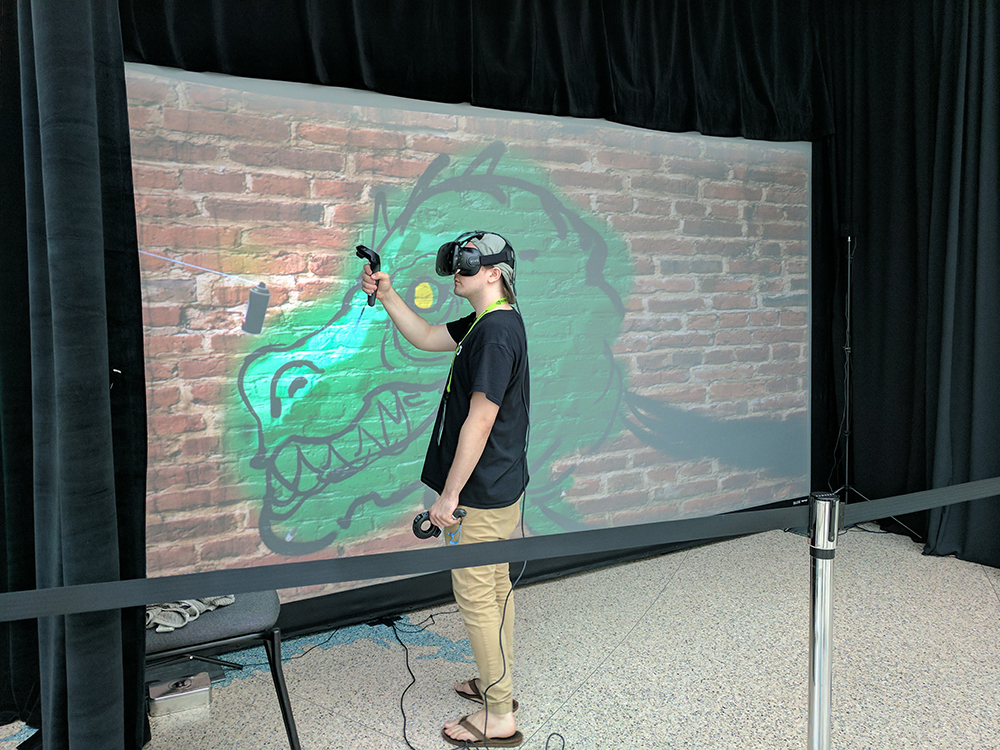

Companies like Motion Reality, Realis, OptiTrack, and Vicon had full-body tracking demos on display, and all but two, Vicon and Realis, were rifle-based. Vicon, coupled with Manus VR’s hand tracking technology, demoed an incredibly immersive neon-samurai game in which you could move every joint in your body and see a 1-to-1 translation of those moves in-game. Looking down and seeing your legs, holding out your hands and articulating your fingers, is honestly incredible. It’s the difference between playing a game and being transported into another world. When the tracking is perfect, it’s almost impossible to describe the feeling as anything but “putting on a costume”. You genuinely feel like you’re wearing the samurai armor, aided by the elastic bands that hold the tracking points to your arms and legs and put pressure on your limbs, physically mimicking the armor. An unintentional but welcome side effect.

Motion Reality, powered by HP’s aforementioned Z VR Backpack, was running a great demo featuring the Dauntless combat simulator, in which you and a partner could either shoot at each other as giant avatars in a city (think Godzilla) or walk together through a “kill house”-style simulation, shooting at wooden targets that pop up. Once hand tracking, which needs its own system, reaches a consumer-level price point and power draw, we’ll be in a whole new world. It’s incredible how little things like finger articulation without a controller can drive that immersion home. Once you can move every inch of your body and see those motions translated into the digital world in your HMD, you really do feel differently about the experience. That’s going to be the next next big thing.

Then there’s Neurable, the company that decided attaching a brain-computer interface (BCI) to an HTC Vive wasn’t terrifying. It uses EEG technology combined with “proprietary algorithms” to detect the user’s intent, such as a specific choice when prompted, and uses that information to make selections in the program, game, and so on. This demo had a line out the proverbial door, so I was unable to try it myself, but people seemed to find it impressive. It may ultimately have more applications in healthcare than in entertainment, but it’s still pretty new.

On the software side, companies like Boris FX and Blackmagic Design were touting the 360-degree video and VR-centric updates to Mocha and Fusion 9, respectively. It seems that at this point, most brands have a VR editing mode bundled into their packages. Boris FX also has a new plug-in version of Mocha for Premiere, After Effects, Avid, Nuke, and OFX hosts, which is a welcome change from having to throw things into the standalone program for planar tracking (think tracking large textured surfaces rather than individual high-contrast dots) and the like. The object removal module is really, really impressive: it’s about as simple as “circle the thing you don’t want, and it’s gone”. As a filmmaker, that alone made me pause and consider dropping the $700 Boris FX wants for the current iteration of the Mocha plug-in.

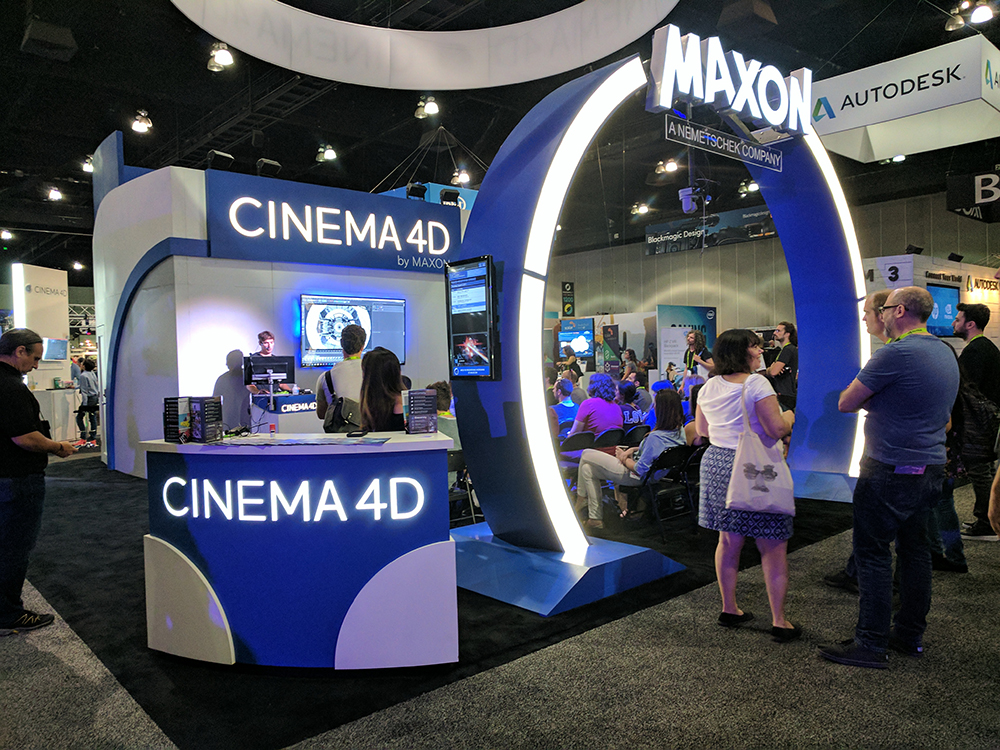

From MAXON we saw a rather substantial update to Cinema 4D in the form of the R19 release, both “under the hood” and in terms of simple usability. The new viewport is leaps ahead of the old one, and there’s a new media core, native MP4 support, a new sound effector, easy-to-use fracturing features, and more. There is also, of course, a spherical camera, plus near-real-time rendering of lights and PBR materials so you can see your final look as you go, as well as new motion tracking features that let filmmakers quickly and easily place 3D assets into pre-recorded plates. As CEO Paul Babb put it, “it’s the age of rendering” now, so your product has to be fast. It seems as though C4D is holding a full house in that regard.

Overall, the week gave the impression that we’ve hit the critical point between “it’s just not there yet” and “what do we do with this?” You might also say it was the year of optimization. Tracking is better, interfaces are better, forecasting is possible, and consumers are much more aware of what’s available. At the same time, it seems like we’re still in the deep end, paddling for some edge we can’t see.

Sure, there are plenty of interesting tools and applications, but creativity is novelty plus utility. Pixel-perfect tracking? Useful, but hardly novel; it was obviously needed. High-performance computing power in small, wearable form factors? Same. A wakeboard or stair simulator? Definitely novel…

Even for the medical applications, it’s not the VR tech that makes them useful but the peripheral technologies in scanning and design that make high-resolution, scalable models of real patients possible. VR just allows for easy interaction with and manipulation of that scan. This is where I think AR will leapfrog VR in terms of usefulness, and VR will remain more of an entertainment outlet. But who knows?

At the beginning of the conference, animation legend Floyd Norman was interviewed and hammered home the importance of story over flash. Mr. Norman was the first African-American animator to work for Disney, and he first worked directly under Walt on The Jungle Book. Throughout his talk he reinforced the lessons Walt passed on to him, which he summarized as “don’t watch the movie, watch the audience”. Walt meant that if the audience doesn’t buy it, your film doesn’t work. You can have all the tech in the world, but if it doesn’t serve the story, it has no real purpose. Immersion comes from getting caught up in the story, not necessarily from the realism of your environment.

What we have here, today, is a new tool, like digital cameras, smaller lights, faster film stocks, and VFX before it. This conference showed us that we’ve recently moved past the novelty phase of VR, which means it’s going to fall on us, the creators, to bring the necessary utility and emotional experience to draw people in, create empathy in the viewer and, yes, immerse them in new worlds.

[As a total side note, Unreal Engine 4 was the secret winner of the whole conference. Everything seemed to be powered by Unreal. If you’re looking to get into the 3D industry in any form, you might want to download UE4 and start learning it now.]
