Adobe has been bringing performance improvements thick and fast of late, with new GPU hardware acceleration for Windows in May, faster launch times for Premiere Pro on macOS in June, and now significant optimization for Intel Quick Sync. But the most exciting thing for me was the June announcement of a public beta of Roto Brush 2, the automatic rotoscoping tool powered by Adobe Sensei machine learning technology. Let’s take a look at the July announcement and then do a deeper dive on Roto Brush 2 in After Effects.

Things got faster

This isn’t always the most exciting news to hear, but when a company touts “up to 2x playback performance improvements,” well, that’s almost like buying a new CPU for your NLE workstation. (Note: the improvements are specific to H.264 and H.265/HEVC on Windows with Intel CPUs that support Quick Sync hardware acceleration. That includes most of the i-series chips with integrated graphics released since 2011, but check Intel’s specifications for your processor to verify yours.) In this day and age of editing copious quantities of smartphone, drone, and GoPro-like media on laptops, this will be a big deal for many Premiere Pro users.

For more “enterprise-level” editing environments, Adobe also announced support for ProRes 4444 XQ (on the Mac side), wrappable in either QuickTime or MXF. Does that mean you miss out on Windows? Nope—you already had it. It’s a strange world we live in where an Apple codec is supported on the Windows platform first…

Multi-cam (Beta)

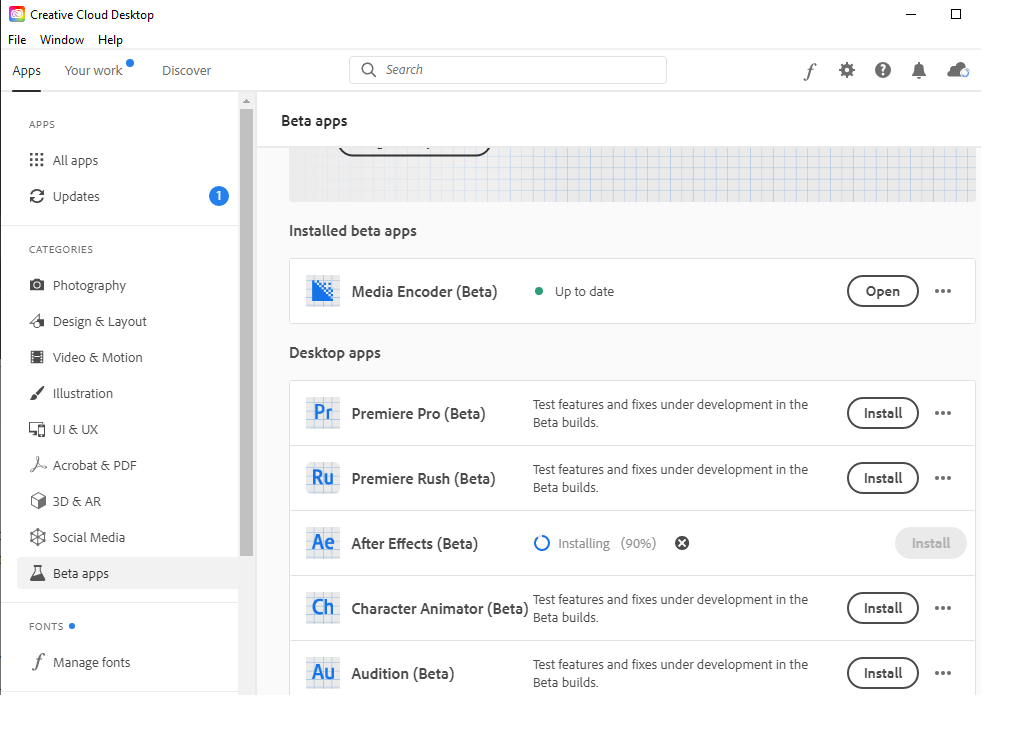

Improved multi-cam playback in Premiere Pro is currently available in public beta (to try it, click “All apps” in the left pane of your Creative Cloud app and look for the Beta apps section, as shown in the image above). I haven’t taken it for a test run, but at least according to the attractive bar charts on Adobe’s site, the numbers look promising. Adobe reports twice the number of simultaneous streams: for example, 4 streams of 30fps UHD in the current shipping version of Premiere Pro versus 8 streams in the beta on a 2018 MacBook Pro. Your results will depend heavily on your storage throughput, but the gains are impressive nonetheless.

Roto Brush 2 (Beta)

OK, cue the flashback. It was April of 2010, and I was starting up a stereoscopic conversion studio, spending tens of thousands of dollars on rotoscoping in India. Adobe announced a “revolutionary” (aren’t they all) new tool called the Roto Brush in After Effects. Roto Brush could produce automatic rotoscoping guided by the user’s brush strokes as contextual hints. In truth we all knew it was too good to be true, but because we stubbornly wanted to believe that there was a new, cheaper way to rotoscope (the most labor-intensive part of the stereoscopic conversion pipeline), artists like me spent hours trying to coax that tool into creating usable rotoscoped mattes. In the end the tool found a niche with mograph artists looking for “cut-out” style effects, but ultimately it was slow, and nowhere near as accurate as we needed for tent-pole release VFX work.

Normally I’m not a fan of beta software on my production workstations; fortunately, the Adobe beta builds install alongside the production builds, so there’s no risk of them interrupting your regular work. That being the case, I couldn’t resist checking out the reincarnation of Roto Brush, now called Roto Brush 2. Rotoscoping is one of those things machine learning should be able to do well, given a large enough annotated data set. I even published an article on a rudimentary auto-rotoscoping technique; it wasn’t good enough for final shots, but it was adequate for garbage mattes and temp roto. So I was eager to find out just how much closer this new Adobe tool gets us.

And the verdict? Not too shabby, especially given that the tool is still in beta. For general hard-line roto (body outlines, for example), it’s fast and, most importantly, fairly consistent temporally. At the most basic settings I found some minor edge crawl, but adding a little (very little) feathering cleaned that right up. The exception is hair: a moving hairline against a high-contrast background produces a somewhat unpredictable animation. Then again, for the foreseeable future, flyaway hair is always going to require manual labor.

Earlier this year (remember those days, when people were out shooting movies?) I spent a few thousand dollars outsourcing roto for a national golfing ad campaign. There were lots of hard edges (golfers wearing caps make for much easier roto). I tested one of the source clips, and I’d estimate that I could have done 25–50% of the roto in-house using Roto Brush 2. The things it got wrong are typically the things junior human rotoscopers have a hard time with: perspective shift, objects crossing into background regions with similar contrast, and thin objects (like golf clubs) with motion blur. Even a 25% reduction in outsourcing would have saved both money and time: I could have started compositing some of the simpler shots while waiting for the more detailed roto to come back from the external vendors.

I should qualify all this by saying I have yet to use the tool in production; that’s when all the edge cases that didn’t show up in your initial tests creep out of the digital woodwork. Having said that, Adobe has blogged that it is eager to receive problem clips from beta testers to help train the tool to avoid these mistakes in the future. The tricky part is always getting clearance to send along the media…

If I had one wish for Roto Brush 2, it would be a stronger ability to manually tweak and offset the results. Yes, you can add corrective brush strokes along the way (and use the Refine Edge tool), but adding too many of them introduces temporal inconsistencies in the matte edge. My ideal machine learning tool is one that’s trained on spline datasets and outputs actual minimal Bézier curves. That’s probably a 2025 or even 2030 tool; here in 2020, I’d say Roto Brush 2 is an acceptable compromise.

At the highest settings, a 3200×1800 pixel test clip was rotoscoping at a little under half a second per frame on my 32-core Threadripper workstation. Don’t have 32 cores? Don’t worry; from what I could tell in my tests, the CPU processing is single-threaded, so if your CPU has a decent clock speed, your times won’t be too different from mine. (It may also be GPU-accelerated; I didn’t check my GPU usage.) I’m told that Roto Brush 2 computes each frame’s result based on the previous frame’s result, which would help explain why extra cores don’t add much. The model inference runs entirely on the GPU if one is available, while the pre- and post-processing run entirely on the CPU.

In summary, for colorists, editors, and casual compositors, this could become a one-stop rotoscoping solution. For those of us requiring precise edge detail, it is likely to become a big part of the solution. Either way, Roto Brush 2 will find a place in many video editing and VFX workflows in the near future.