The new Mac Pro carries a base price of around $6,000 USD, and by one estimate the top-of-the-line model might set you back $45,000 USD (without the monitor). For a studio of any size, that represents a significant investment. So, will it be worth it for you? Will the gains in speed, efficiency, and workflow (not to mention the “desktop sex appeal”) deliver an ROI that justifies the expense? And are there more affordable alternatives that hit the same hardware goals, like the iMac Pro or, dare I say it, a Windows workstation?

I’ll tackle the issue by breaking down the various components of the new Mac Pro, explaining the potential benefits of each and what kind of performance gains you can expect from them. There are no new Mac Pros out in the wild to benchmark yet, but with the exception of the Afterburner FPGA, the tech is all a variation on existing hardware. In other words, we can reasonably extrapolate the performance and benefits of each component from current market offerings.

Starting with the CPU: there can be only one

The new Mac Pro boasts as many as 28 cores in the form of an Intel Xeon processor. Of course, that’s the top model config; purchasing that CPU on its own (without a computer) would set you back over $10,000 USD. I’m estimating it’ll add another $8,500 or so onto the $6,000 base price.

One surprising design choice here: a single CPU socket. Most high-end PC workstations accommodate dual CPUs, allowing buyers to raise their core count without paying the premium associated with a single high-core-count chip. (Adding a second CPU may not be economical at initial release, but a couple of years into ownership, once prices have dropped, it can make for a very affordable and powerful upgrade.)

There are three important metrics in a modern CPU: base clock, turbo boost clock, and core count. The base clock is the normal speed, in GHz, at which the CPU runs. The turbo speed is the highest speed the processor can reach when intensive calculations are required.

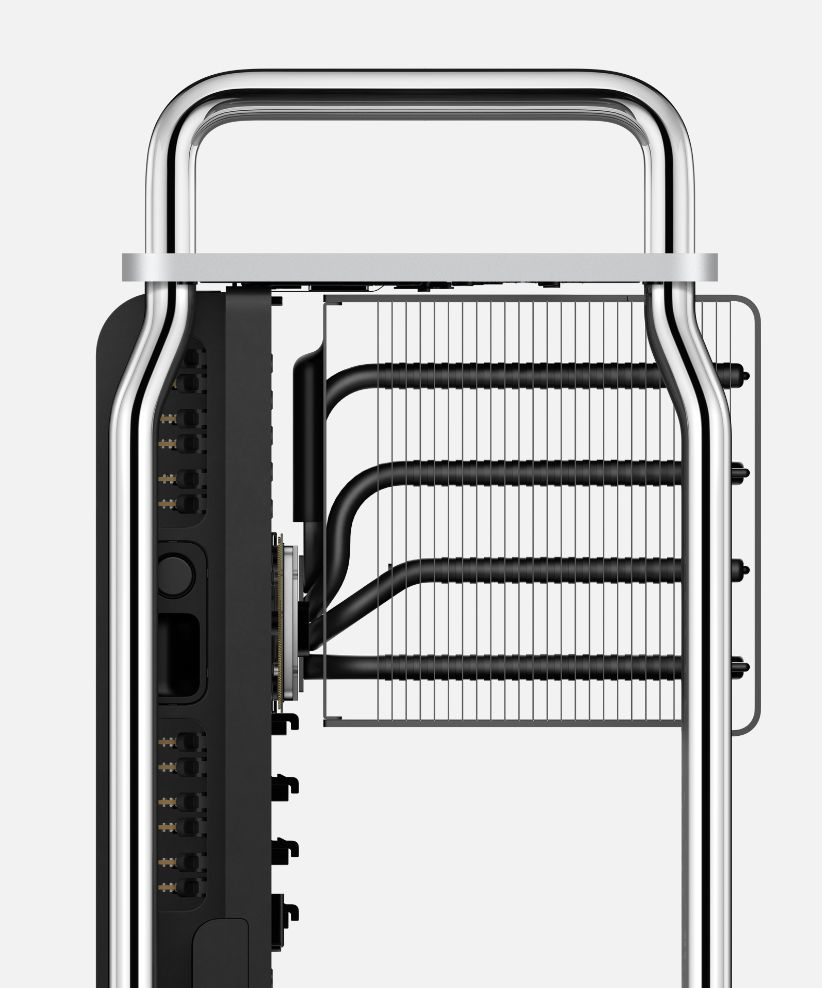

For digital content creators, not only are we constantly doing tasks that require turbo speed, but those tasks tend to run for a long time (decoding multiple streams of video, rendering 3D animation, etc.). The problem is that the CPU quickly heats up at the higher clock speed.

Without adequate cooling, the CPU is forced to throttle the speed back down to prevent overheating. Apple boasts significant cooling in the design of the new Mac Pro, but it’s not clear how long the Mac will be able to sustain turbo clock speeds before reducing to its nominal rate. Further, we don’t know how many cores will accelerate to this higher clock speed. The highest turbo clock quoted (4.4GHz on the 28 core top-of-the-line CPU) is for acceleration of a single core, not all 28.

Then comes core count. Each core is essentially a separate CPU, capable of working through its own stream of program instructions. By dividing tasks between cores, software developers can execute processes in parallel.

So 28 cores means 28x the processing power of a single core, right? Well, not really. Multithreaded programming (programming to use multiple “threads” of execution at once) is really quite tricky. It only works for certain tasks; many processes are by nature serial, with each step requiring data from the previous step before it can execute. And even when tasks lend themselves to parallel processing (like crunching all the pixels in a big image), multithreaded code is much harder to write, debug, and troubleshoot than single-threaded code. Threads accessing and altering the same memory space cause all kinds of problems.
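To make the shared-memory problem concrete, here’s a minimal Python sketch (my own illustration, not code from any app mentioned here). Four threads bump a shared counter. `counter += 1` is a read-modify-write, so two threads can read the same old value and one increment gets lost. Wrapping the update in a `threading.Lock` fixes that, but it also means that part of the work no longer runs in parallel. Whether an unlocked version actually loses updates on a given run depends on the Python version and timing, which is exactly what makes these bugs so hard to reproduce.

```python
import threading

def count_with_threads(num_threads: int, increments_per_thread: int) -> int:
    """Increment a shared counter from several threads, guarded by a lock."""
    counter = 0
    lock = threading.Lock()

    def worker() -> None:
        nonlocal counter
        for _ in range(increments_per_thread):
            # Without the lock, this read-modify-write can interleave with
            # another thread's and silently drop an increment.
            with lock:
                counter += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter

print(count_with_threads(4, 100_000))  # 400000 every time, thanks to the lock
```

The lock buys correctness at the cost of serializing the update, a small taste of why “more cores” rarely translates directly into “more speed.”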

The most recent updates to C++ (the primary programming language used by high-end software developers) automate a lot of the tricky stuff with multithreaded programming; C++17, for example, added parallel versions of many standard algorithms. But it’ll be several years before common development platforms and libraries are updated to support it. In summary: for many software tasks, your extra cores may be spending most of their time sitting around just warming your office.
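The usual back-of-the-envelope model for this is Amdahl’s law (a standard model I’m bringing in here, not something Apple or Intel quote): if a fraction p of a task can run in parallel, the best possible speedup on n cores is 1 / ((1 − p) + p / n). The sketch below plugs in a few hypothetical parallel fractions. It ignores clock speed, memory, and thermal throttling, so treat the results as an upper bound.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Best-case speedup over one core when only part of the work parallelizes."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)

# Hypothetical workloads: mostly serial, half-and-half, and nearly all parallel.
for p in (0.25, 0.5, 0.95):
    print(f"p={p:.2f}: 12 cores -> {amdahl_speedup(p, 12):.2f}x, "
          f"28 cores -> {amdahl_speedup(p, 28):.2f}x")
```

Even a task that is 95% parallel gets less than 12x from 28 cores, and a task that is half serial can never exceed 2x, no matter how many cores you throw at it.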

“The sweet spot for general DCC work is probably the 12 core configuration”

What it means to you

Looking at the specs for the Mac Pro CPUs, the sweet spot for general DCC work is probably the 12 core configuration. It offers the second-highest base clock at 3.3 GHz and the maximum Turbo boost. So for the many interactive processes that only utilize a single core it will give you maximum punch, but you still get 12 cores for multithreading.

In contrast, the 28 core CPU will drop you down to 2.5GHz base clock in sustained, high-heat conditions—significantly lower than the 3.3GHz of the 12 core. So if you’re doing a lot of general interactive tasks like dragging layers around in After Effects or Photoshop, or previewing and trimming clips in an NLE, you may find that the flagship 28 core is significantly slower than the 12 core (which is much, much cheaper).

Now if you’re crunching an algorithm through MATLAB to solve world hunger, you’ll be squeezing every last ounce of performance out of your 28 cores. The moral to the story? Do a little research online with the apps you most commonly use and find out whether they favor single core operations or multithreaded operations. I’ll wager that you’ll be surprised by how many prefer a faster single-core clock speed over parallel processing.
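If you want a rough feel for where the 28-core overtakes the 12-core, you can take Amdahl’s law (the standard model for how much a partly parallel task speeds up on n cores) and divide by clock speed. This is a crude sketch under loud assumptions: runtime scales inversely with clock, both chips sit at their sustained base clocks (3.3GHz and 2.5GHz, per the figures above), turbo behavior and memory are ignored, and the parallel fraction of your workload is something you’d have to estimate for your own apps.

```python
def relative_runtime(parallel_fraction: float, cores: int, clock_ghz: float) -> float:
    """Crude runtime model (arbitrary units): ((1 - p) + p / cores) / clock.

    Assumes every core runs at clock_ghz and that nothing else (memory,
    caches, turbo behavior) matters, which is a big simplification.
    """
    serial = 1.0 - parallel_fraction
    return (serial + parallel_fraction / cores) / clock_ghz

TWELVE_CORE = (12, 3.3)        # cores, sustained base clock in GHz
TWENTY_EIGHT_CORE = (28, 2.5)

def faster_config(parallel_fraction: float) -> str:
    """Name of the configuration with the lower modeled runtime."""
    t12 = relative_runtime(parallel_fraction, *TWELVE_CORE)
    t28 = relative_runtime(parallel_fraction, *TWENTY_EIGHT_CORE)
    return "12-core" if t12 < t28 else "28-core"

for p in (0.0, 0.5, 0.85, 0.95):
    print(f"{p:.0%} parallel: {faster_config(p)} wins")
```

Under these assumptions the crossover lands at roughly 90% parallel; below that, the 12-core’s higher clock wins.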

Moving on to RAM

The new Mac Pros boast a staggering maximum of 1.5TB of RAM. For that you’ll need either the 24 or 28 core model; it seems the other configs max out at 768GB, still not an inconsiderable number. This is expensive ECC (error-correcting code) RAM, typically found in servers. While the price of ECC memory has come down in recent years, that 1.5TB of RAM will still set you back over $16,000 USD at today’s prices.

There was one trick we all used to count on when purchasing a new Mac: buy the minimum RAM from Apple, then replace it with third-party RAM purchased at a fraction of the price. This will probably be possible with the new Mac Pros, but it remains to be seen. It’s also uncertain whether Apple will allow the Mac Pro to run with cheaper non-ECC RAM. Many studies have indicated that ECC is pretty much unnecessary in a workstation, so being able to buy its cheaper sibling would be a welcome saving.

Also of note: the 8 core base model uses RAM with a speed of 2666MHz, while the higher core models clock their RAM at 2933MHz. (Read on to see if that matters to you.)

What it means to you

Given that the computer you’re using today may max out at 32GB, you may be wondering what you could possibly use 1.5 terabytes of RAM for. In fact, for digital video, more RAM is a pretty big deal. Take for example a frame of 8K video with an alpha channel (e.g. ProRes 4444). Processing it at 32-bit floating-point precision requires 7680 x 4320 pixels x 4 channels x 32 bits of data. That works out to 531 MB, for a single frame. On a 32 GB system, with headroom for the OS and your editing/compositing software, you could maybe fit 40 frames in memory. Start blending layers of video together and adding motion effects, and your system quickly comes to a spluttering, choppy stop.
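Here’s that arithmetic as a quick Python sketch. The 10GB of headroom reserved for the OS and applications is my own assumption, so adjust it to suit your setup. Sizes are decimal (1GB = 10^9 bytes), matching the 531MB figure above.

```python
def frame_bytes(width: int, height: int, channels: int, bits_per_channel: int) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * channels * bits_per_channel // 8

def frames_that_fit(total_ram_bytes: int, headroom_bytes: int, bytes_per_frame: int) -> int:
    """How many whole frames fit in RAM after reserving headroom (assumed value)."""
    usable = max(total_ram_bytes - headroom_bytes, 0)
    return usable // bytes_per_frame

GB = 10**9
# 8K UHD with alpha (RGBA), processed as 32-bit floats per channel
frame = frame_bytes(7680, 4320, 4, 32)
print(f"{frame / 10**6:.0f} MB per frame")                         # 531 MB per frame
print(frames_that_fit(32 * GB, 10 * GB, frame), "frames in 32GB")     # 41 frames in 32GB
print(frames_that_fit(1500 * GB, 10 * GB, frame), "frames in 1.5TB")  # 2806 frames in 1.5TB
```

At 30fps, 41 frames is under a second and a half of footage; 2,806 frames is a bit over a minute and a half.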

“You’ll almost certainly feel the difference between say, 32GB and 128GB, even with a modest HD project.”

It’s not just about real-time performance, either. Most applications cache different image states in RAM, pushing them to the hard drive when RAM fills up. The more RAM you have, the more responsive your NLE or node-based compositor will be when you tweak a filter setting or perform color corrections. 1.5 TB might be overkill, but you’ll almost certainly feel the difference between, say, 32GB and 128GB, even with a modest HD project.
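A toy model shows why the cache size matters. This is a hypothetical two-tier cache (a least-recently-used cache in RAM whose evicted frames are assumed to fall back to disk), not how any particular NLE or compositor actually works. It simply counts how often a request is served from RAM as you scrub back and forth over the same range of frames.

```python
from collections import OrderedDict

def ram_hit_rate(requests: list[int], ram_capacity_frames: int) -> float:
    """Fraction of frame requests served from a RAM cache with LRU eviction.

    Frames evicted from RAM are assumed to live on disk (slower), so every
    miss here stands for a trip to the drive or a re-render.
    """
    ram = OrderedDict()
    hits = 0
    for frame in requests:
        if frame in ram:
            hits += 1
            ram.move_to_end(frame)          # mark as most recently used
        else:
            ram[frame] = None               # load from disk / re-render
            if len(ram) > ram_capacity_frames:
                ram.popitem(last=False)     # evict the least recently used frame
    return hits / len(requests)

# Scrub a 60-frame range back and forth five times (hypothetical pattern).
scrub = (list(range(60)) + list(range(59, -1, -1))) * 5
for capacity in (40, 80):
    print(f"{capacity} frames of RAM: {ram_hit_rate(scrub, capacity):.0%} hits")
```

In this toy run, doubling the cache from 40 to 80 frames lifts the hit rate from 60% to 90%; every miss is a wait on the drive or a re-render.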

Oh, and before you object that you’re working with compressed media, know that in most cases the software decompresses your media and stores it in RAM as an uncompressed frame for image processing operations. So while your hard drive storage needs may be smaller with an efficient codec, your RAM needs don’t necessarily follow.

One question often comes up: on a budget, should you buy more of a slower, more affordable RAM, or less of the faster, more expensive variety? The answer is almost always, “Buy more RAM.” The performance difference between the 2666MHz of the base Mac Pro model and the faster 2933MHz of the higher-core models will probably amount to 1-2%. In comparison, buying a larger amount of RAM may yield a significant boost in overall performance (assuming the higher-end models will take 2666MHz RAM as well, which they should).
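For a sense of scale, here’s the theoretical peak bandwidth of each RAM speed. Two assumptions, both mine: a six-channel memory configuration (what Intel’s Xeon W-3200 family supports) and the standard 8-byte-wide DDR4 channel. Note that DDR4’s “2666MHz” is really 2666 million transfers per second. The roughly 10% gap in peak bandwidth is a ceiling that only heavily memory-bound code approaches, which is why the real-world difference is likely to be much smaller.

```python
def peak_bandwidth_gb_s(mega_transfers_per_s: int, channels: int = 6,
                        bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal).

    channels=6 is an assumption about the platform, not a measured value.
    """
    return mega_transfers_per_s * 10**6 * bytes_per_transfer * channels / 10**9

base = peak_bandwidth_gb_s(2666)
fast = peak_bandwidth_gb_s(2933)
print(f"DDR4-2666: {base:.1f} GB/s, DDR4-2933: {fast:.1f} GB/s")
print(f"Peak difference: {fast / base - 1:.1%}")
```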

Onto the GPUs: Where the action’s at

Where the Xeon CPUs tap out at twenty-eight cores, the cores on a modern graphics card (GPU) number in the thousands. Rather than use them purely to make pretty graphics, modern developers use them to accelerate massively parallel tasks—everything from deep learning processes to real-time ray tracing. They’re especially well-suited to crunching lots of pixels all at once.
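The reason pixel work suits GPUs is that each pixel’s result usually depends only on that pixel. This small Python sketch (a hypothetical brightness adjustment on a flat list of 8-bit values, not actual GPU code) splits an image into independent chunks, processes each chunk on its own, and confirms the result matches a single serial pass. Because no chunk needs another chunk’s output, the chunks could be handed to any number of cores, which is what a GPU does with thousands of them.

```python
def brighten(value: int, gain: float = 1.2) -> int:
    """Per-pixel operation: scale an 8-bit value and clamp to 255."""
    return min(255, round(value * gain))

def process_serial(pixels: list[int]) -> list[int]:
    """Apply the per-pixel operation one pixel at a time."""
    return [brighten(v) for v in pixels]

def process_in_chunks(pixels: list[int], num_workers: int) -> list[int]:
    """Split pixels into independent chunks; each could run on its own core."""
    size = -(-len(pixels) // num_workers)                   # ceiling division
    chunks = [pixels[i:i + size] for i in range(0, len(pixels), size)]
    results = [process_serial(chunk) for chunk in chunks]   # no chunk depends on another
    return [v for chunk in results for v in chunk]

image = [(x * 37) % 256 for x in range(640 * 360)]          # synthetic 8-bit frame
assert process_in_chunks(image, 1000) == process_serial(image)
```

Here the chunks still run one after another; the point is that they *could* run simultaneously without coordinating, unlike the serial tasks described earlier.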

Up until around 2009, advances in general computing were all about the CPU. Riding Moore’s law (the observation that transistor counts double roughly every two years), processor performance doubled every 18 months or so. Around 2009-2010, a lot of the action shifted to the GPU. It’s the reason you can buy an old workstation from 2010 (a Mac Pro tower or an HP Z800, for example), throw a modern graphics card into it, and end up with a usable system.

Apple boasts 28.4 teraflops of floating point processing power in the top model graphics config (at a potential add-on price point of over $12,000). What’s a teraflop? It’s a trillion floating point calculations per second. Before you get too carried away comparing teraflops, there are many factors that go into final performance. Just as having 28 cores on your CPU doesn’t mean your computer will be constantly using all 28 cores, the final efficiency of a graphics card depends on how the software uses it. It’s unlikely that all your graphics cores will be “teraflopping” all the time.
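Headline teraflop figures are typically theoretical peaks: shader cores × clock speed × 2, since a fused multiply-add counts as two floating point operations per cycle. The sketch below uses a made-up card (4,096 cores at 1.7GHz) rather than any real Mac Pro GPU spec, plus a hypothetical 40% utilization, to show how quickly the headline number shrinks once software can’t keep every core busy.

```python
def peak_tflops(shader_cores: int, clock_ghz: float, flops_per_cycle: int = 2) -> float:
    """Theoretical peak in teraflops (FMA counted as 2 ops per core per cycle)."""
    return shader_cores * clock_ghz * 1e9 * flops_per_cycle / 1e12

def effective_tflops(peak: float, utilization: float) -> float:
    """Throughput if software keeps only `utilization` of the cores busy."""
    return peak * utilization

peak = peak_tflops(4096, 1.7)            # hypothetical card, not a real spec
print(f"Peak: {peak:.1f} TFLOPS, at 40% utilization: {effective_tflops(peak, 0.4):.1f} TFLOPS")
```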

Wait, no Nvidia?

I’m not entirely sure what Nvidia did to make Apple dislike them so much. I’ve heard rumors, but none that I feel confident enough to perpetuate. Apple engineers have confirmed that there’s nothing stopping Nvidia cards from running on the new machines. Nothing, that is, except the small matter of Nvidia writing drivers for Apple’s Metal (an API technically in competition with their CUDA library), and Apple approving said drivers. Why is this a big deal? Read on.

Metal, CUDA, and OpenCL

You may have heard of OpenGL, but possibly not OpenCL. OpenCL is an API (Application Programming Interface, basically a software library for developers) championed by AMD that allows everyday developers to tap into the power of graphics cards for non-graphics work. The API is a “black box” that hands off massively parallel processing to the graphics card, so the developer doesn’t need to know the tricky technical details of how it’s achieved.

CUDA is Nvidia’s proprietary GPU computing platform and API. It only works with Nvidia cards, unlike OpenCL, which can technically work across any platform (it’ll even fall back to running on your CPU, albeit at a much slower pace).

Then there’s Metal. True to Apple’s history, their development team went back to the drawing board and came up with their own graphics API for Apple products (both the OS X and iOS hardware platforms). Metal replaces both OpenCL and OpenGL; that is, it handles both computational and graphics acceleration. The argument goes (and this has been repeated many times in Apple’s design philosophy), “We design the hardware, so we know the best way to design software to interface with it.” It’s a reasonable argument, and I’m not aware of anyone who actually thinks Metal is a bad implementation.

OK, so first off: OpenCL is an open standard, runs almost anywhere, and is backed by a major hardware developer. What’s not to love? Well, it turns out most developers prefer CUDA, partly due to the way the API is written, and partly because developers have historically been able to squeeze better performance out of Nvidia cards running CUDA than out of competing offerings.

Turning our attention back to Metal: Apple have already deprecated OpenCL and OpenGL on the Mac, and the latest OS, Mojave, doesn’t support CUDA. In other words, if you want graphics acceleration on a Mac going forward, your only real option is Metal.

What it means to you

If you’re an editor you’re probably already OK on this front. Companies like Blackmagic Design and Adobe have been optimizing their code base for Metal acceleration for some time (although last time I checked there were a few pieces lagging on the Adobe side; perhaps that’s changed with Mojave).

If you do more exotic things like GPU rendering or machine learning/deep learning, the path forward is not so clear. Regarding GPU-accelerated rendering: it was interesting that Apple’s press release paraded both Redshift and OTOY as early adopters of Metal. Both companies’ 3D renderers have been exclusively Nvidia and CUDA since their inception. Redshift (now part of Cinema 4D’s parent company, Maxon) claims to be shipping a Metal solution by the end of the year. OTOY is equally enthusiastic, although they’re known for overambitious release goals. It remains to be seen whether the price/performance ratio works out with Metal versions of these renderers ($12,000+ is a lot to spend on graphics processing power).

Machine learning is murkier still, because the vast majority of software that machine learning practitioners rely on (at least for prototyping) depends on CUDA, and thus on Nvidia. TensorFlow, Keras, Caffe, PyTorch, and MXNet all lean heavily on CUDA to crunch the math. While Apple has developed its own library of Metal-accelerated machine learning functions, don’t expect the heavily academic community of machine learning experts to suddenly migrate to a new set of tools built solely around the Mac.

Beyond these considerations, be sure to review benchmarks for your favorite applications to see how GPU-intensive they are, then budget the graphics config on your new Mac accordingly.

Finally, the dark horse in the race: Afterburner

For video professionals, Afterburner is where the Mac Pro gets really interesting. It’s a board dedicated to accelerating the processing of video. Confused as to what that is? Evidently so is Apple’s marketing department.

The product page on Apple’s site calls it “an FPGA, or programmable ASIC.” Well, to get a little pedantic here, ASICs and FPGAs are two different things. An Application-Specific Integrated Circuit (ASIC) is a computer chip that has been architected and “cooked into silicon” for a specific processing task. It’s what you get when very smart engineers design their own custom chip to be etched onto a silicon wafer. Unlike a CPU, which is designed to handle whatever processing job is thrown at it, an ASIC is designed to perform very specific operations like, say, crunching video files.

In contrast, a Field-Programmable Gate Array (FPGA) is a collection of circuits that can be effectively “rewired” after manufacture (in the “field”) to operate in a specific way. This is more than just burning software code into a chip’s permanent memory (like an EPROM). FPGAs are electronically different once they’ve been programmed.

So an ASIC is designed and cooked during manufacture; an FPGA is a more generic chip that is “rewired” to handle a specific software task more efficiently. FPGAs are often used to prototype ASICs (the latter are far more expensive to design and bring to production), but they are not the same thing. For the relatively small number of people purchasing an Afterburner card for their Mac Pro, the expense of designing an ASIC couldn’t be justified, especially when advances in GPUs and CPUs will probably make it obsolete and in need of a redesign within a couple of years. (Just ask the poor guys at Media 100, who bet against Moore’s Law with their Pegasus architecture.)

If anything, the fact that the Afterburner is an FPGA is more interesting, since it opens the possibility of reprogramming it for other tasks. It’s doubtful that Apple would ever provide that function, however, nor is it known whether the interface on the board allows for reprogramming once it leaves the factory.

Marketing semantics aside, the Afterburner may actually be kind of awesome. It claims “up to 3 streams of 8K ProRes RAW at 30fps”, or “up to 12 streams of 4K ProRes RAW at 30fps.” Of course, that assumes you have RAID storage that can serve the data at those speeds (that’s another massive expense to consider), but the processing power is impressive. How many software manufacturers come on board to support it remains to be seen, but you can expect the major players to be ready (or close to) by the time it ships.
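Incidentally, those two claims describe the same workload. Assuming “8K” means 7680×4320 and “4K” means UHD 3840×2160, an 8K frame has exactly four times the pixels of a 4K frame, so 3 streams of 8K and 12 streams of 4K both work out to roughly 3 billion pixels per second:

```python
def pixel_rate(width: int, height: int, fps: int, streams: int) -> int:
    """Pixels per second the decoder must produce across all streams."""
    return width * height * fps * streams

eight_k = pixel_rate(7680, 4320, 30, 3)
four_k = pixel_rate(3840, 2160, 30, 12)
print(eight_k, four_k, eight_k == four_k)   # 2985984000 2985984000 True
```

That’s decode throughput only; the storage bandwidth you’d need on top of it depends on the ProRes RAW data rate of your footage.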

(As a total aside, does anyone else think “Afterburner” an odd choice of name for a real-time device? Isn’t it more of a Duringburner?)

What it means to you

This is one of those, “If you don’t know you need it, then you probably don’t” kind of things. The price of this card is a complete unknown at this point, but I’ll wager that for studios doing 8K online, price will not be a significant part of the purchasing decision.

If you’re more on the indie side of things (offline editing, or editing with compressed formats rather than RAW), it’s probably a luxury you can forgo for now. That said, I wouldn’t be surprised if companies like Blackmagic Design and AJA follow suit with their own third-party offerings; FPGAs are definitely in their wheelhouse. We can only hope Apple’s Afterburner stimulates more activity in the custom hardware arena for video work.

The big picture: pass or play?

If you’re doing client-driven sessions like color grading or other finishing tasks, this is probably a reasonable investment, both in terms of the billing rate for that kind of work, and “sex appeal” to the client. Those are environments where real-time responsiveness is crucial, and latency legitimately translates to frustration from clients who pay by the hour.

For the more “offline” crowd…let’s weigh the alternative Apple options first. I must stress that I’m moving very much into editorial writing mode here, so take the comments for what they are: my opinionated musings.

New Mac Pro vs iMac Pro

For my money, the Mac Pro wins here. Until the iMac Pro sees a refresh (which may well be imminent) you’re paying for 2017’s hardware if you buy an iMac Pro today. The base model is $1000 less than the Mac Pro base model and includes a display, but you’re then stuck with a non-upgradeable system that already has two years on the clock in terms of its tech.

The Mac Pro is slightly faster, but more importantly, upgradeable. You can add RAM later and—perhaps most significantly—replace an aging graphics card for a new lease on life a couple of years down the road.

And of course, you may already have a monitor. Regardless, for a lot of editing, mograph, VFX, and 3D tasks, you can buy an adequate 4K monitor for under $400. No, you won’t be grading Scorsese’s next film on that monitor, but it’ll serve. It certainly won’t be as pretty as the iMac Pro’s display, but again: it’s replaceable later.

Now if Apple comes out with an iMac Pro refresh before you go to purchase a system, that may be a whole different story.

New Mac Pro vs Trashcan Mac Pro

New Mac Pro vs Westmere Mac Pro tower

Apple-loving post professionals have been clinging onto their Westmere Mac Pros for dear life. It may be finally time to pry their fingers from the skin-lacerating cases.

It’s often been said that these systems still hold their own, especially if you up the RAM and throw in a new GPU. Alas, it’s hard to still make that claim here in mid-2019. The latest flavor of OS X, Mojave, only runs on these towers with a Metal-capable graphics card installed, and Apple’s upcoming Catalina drops them altogether (even though some users are determined to work around the limitations). Hey, we’re talking about technology from 2010-2012. You had a good run: time to say your goodbyes and turn it into a Minecraft machine for the kids.

Heresy time: New Mac Pro vs a Windows workstation

Before you hate, let me just say that I’m a big fan of Apple, right down to my iPhone, my Apple Watch, and my $25 Chinese AirPod knock-offs. (Yes, they sound as bad in real life as in print. No, I’ll never buy them again.)

While I still use Macs in my studio (to be honest, mainly now for Logic Pro) my workhorse machines these days are all PCs. I’ve learned to live with Windows’ quirks, and Windows 10 has copied enough of OS X’s user experience over the years that it actually isn’t a bad place to do business. I still run into the occasional QuickTime-related frustration, but those are becoming fewer and fewer.

SO…if someone handed me a new, $30,000 Mac Pro would I take it? Heck yeah. (If you’re offering, please contact Pro Video Coalition’s editorial staff immediately). And I would thoroughly enjoy using it. But if I had to suggest an alternative?

1. The expensive: if you want to spend $10-15,000 on a solid workstation, look at a system like the Lenovo P-series, or an HP Z-series. You should be able to deck out those systems with a ton of RAM and a couple of powerful RTX Nvidia cards before grazing $15K.

2. The surprisingly affordable: look at picking up a used workstation (like the HP Z840) with two Xeon E5-2687W v3 CPUs installed. The ‘v3’ is an essential point; previous generations with the same moniker were not nearly as powerful. You should be able to haul one in for under $2,000 ($1,500 if you shop well).

This will give you a 2015-technology machine with 20 cores running at a base clock of 3.1GHz and a max turbo of 3.5GHz. I’ve found this to be a sweet spot between single processor speed (a healthy 3.1-3.5 GHz) and multithreading (20 cores is a lot of cores).

Plan on spending at least another $1000 on a modern RTX 2080 graphics card and extra RAM. But for under $3,000 you’ll have an extremely fast machine capable of outperforming the new $6,000 Mac Pro in multithreaded situations (at least by my estimates; I have absolutely no empirical evidence to back that up. Would kind of need a new Mac Pro to compare to…)

You always run a risk when you buy used, but my personal, anecdotal experience has been that if hardware has lasted three or four years, it’ll probably sing along happily for another five or more. (Of course, if your experience differs, it’s your checkbook you’re taking the gamble with, not mine.) My advice: see if you can find a local refurbisher and build a walk-in relationship with them. They’re more likely to accommodate an exchange if things go wrong.

You could try to turn it into a Hackintosh, but that would be naughty and painful (just talk to anyone who’s tried to run one for more than a few months).

But look, I get it: it’s hard to put a price on the Mac desktop experience. Wait, no it’s not: it’s anywhere from $6,000 to $45,000 (plus monitor, plus $1000 monitor stand).

One final thought: dimming the lights of Metropolis

Here’s my one more thing: power. The new Mac Pro’s power supply is rated at 1.4 kilowatts. For those of you in Europe and Australia with your fancy 240 volt outlets, no biggie. For those of us living in the Americas, a full 1.4 kW load at 120 volts means nearly 12 amps of power draw. With a typical household circuit breaker tripping at 15 or 20 amps, it means that if you try to power your new Mac Pro, a Pro Display (Apple is yet to publish its power requirements), and a couple of hard drives while brewing a cup in your Keurig, well…lights out baby. Might as well put the Keurig in the closet. (Seriously, get yourself a decent espresso machine and you’ll double your client base anyway.)

What it means to you

Check your available circuits before buying. You may just need to purchase a new studio to go with your new Mac…
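The check itself is simple arithmetic: amps = watts ÷ volts. The sketch below treats the 1.4kW power supply rating as a worst-case draw at 120V and 240V, then adds a hypothetical 1,500W coffee maker on the same 20A circuit. The coffee maker wattage is my assumption, as is the 80% continuous-load limit, a rule of thumb many electrical codes apply to breakers.

```python
def amps(watts: float, volts: float) -> float:
    """Current draw in amps for a given load."""
    return watts / volts

def fits_on_circuit(loads_watts: list[float], volts: float, breaker_amps: float,
                    continuous_limit: float = 0.8) -> bool:
    """True if the combined load stays under the breaker's continuous-use limit."""
    total = sum(amps(w, volts) for w in loads_watts)
    return total <= breaker_amps * continuous_limit

MAC_PRO_W = 1400        # power supply rating, used here as a worst case
COFFEE_MAKER_W = 1500   # hypothetical appliance

print(f"{amps(MAC_PRO_W, 120):.1f} A at 120V, {amps(MAC_PRO_W, 240):.1f} A at 240V")
print(fits_on_circuit([MAC_PRO_W], 120, 20))                  # True
print(fits_on_circuit([MAC_PRO_W, COFFEE_MAKER_W], 120, 20))  # False
```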

Updated 6-19-19: A side note I feel compelled to add: I mention Maya as an example of an application whose CPU demands vary depending on the kinds of tasks you’re doing in it. While the principle is true, upon reflection Maya is probably a poor example these days: Since Autodesk overhauled Maya’s code base with the release of Maya 2018, overall the app is highly optimized for multithreading. There will still be plenty of single-threaded tasks (especially user-generated Python scripts), but a lot of the everyday operations like object manipulation are highly multithreaded.

So I’m heading off here any objections that might deservedly arise from the Maya dev team. Props where props are due…