VR, AR, XR, MR, 360.

Last year, I sat through a discussion on these topics at the Final Cut Pro X Creative Summit in Cupertino. I had some interesting reactions, most of which included the word “puzzled.” How were the cameras positioned? What sort of magic had to occur to edit 360 video? How can a viewer with so much freedom be directed to see what I want them to see? How do stories get told?

To me, filmmaking and editing are generally understandable. One thing is always true: The director and their team guide the viewer—sometimes forcefully, sometimes gently, sometimes away from what they want shown so as to mislead, set-up, surprise, or frighten. Editing extends that idea, guides the viewer, and helps them reach desired destinations.

I recently talked with Dipak Patel (Co-Founder and CEO), Adam Dubov (Chief Content Officer), Martin Christien (Director of Content Services), and Arlene Santos (Chief Operating Officer) from Zeality (Zeality.co).

I realized that I know nothing about their kind of filmmaking. Theirs is not filmmaking in the traditional sense; it’s not a new version of an old paradigm. Theirs is an invention, a brand-new creation.

We are sometimes on extremely tight schedules and things can get a little hectic…the nature of Final Cut Pro X keeps the chaos in line. – Martin Christien

Mike: Zeality—where is the company now and where is it going?

Dipak: We are on the frontier with VR, AR, 360, and we look at this time as the dawning of a new age of filmmaking. The language of this kind of storytelling is just being invented. Where we are now with 360/VR filmmaking is akin to where we were in the early 1900s when film language was being invented. The stories that we are telling now are about as simple as the stories they were telling then. We have a rare opportunity to explore every single phase of this kind of production. We are working to define what the exact experience is: What do we do from the technology and financial perspectives? What do we do from a creation and art perspective?

We chose sports to begin with because they are highly community driven, highly engaging, and topical. They are exciting and constantly changing. Sports is not just stats and scores: It is stories. It is life.

Mike: It sure is. I am from Green Bay, Wisconsin, and that town lives and dies by the Green Bay Packers. You can see the same passion any time you watch a football match in Europe or Mexico—the crowds are incredibly engaged and emotionally invested.

Dipak: That is true, although we are seeing that the population of sports fans is aging. For example, the average age for a baseball fan is 55—for NASCAR, older than that.

They have to figure out new ways to engage younger audiences and a more diverse set of audiences—whether demographically or geographically. The goals for all of our clients are to provide new ways to connect with fans. They are all trying to drive a deeper experience and ultimately find new models to convert new interactive capabilities into revenue.

So how do we extend that reach? How do we pull people in? How do we create interaction models that create lifetime-value for customers and fans?

We start with the experiential side—not just AR, VR or 360 photo video—because it may not be 360 photo or video. It may be some other really cool whiz-bang experiential kind of system.

Mike: So you are saying this might be a stepping-stone to the next thing?

Dipak: That’s right. There are requirements that are emerging almost real-time while we are iterating, and those requirements are emerging when we are actually in the field, doing the thing.

In the future, people won’t just watch video… they will interact with it. – Dipak Patel

Mike: I can relate to what you are saying about being there and watching it unfold as you are doing it. It is a journey of discovery.

I was on the first major feature film to be cut on Final Cut Pro X, and the pushback was substantial from everybody, other than the people who wanted to do it. The cries—“We haven’t done it this way before! This is impossible!”—were part of the conversation. We just made our best guesses, which sounds like what you guys are doing. You have your base knowledge and you are making your best guesses, knowing that the best guesses may not work out, or they may just lead to the answer, or an answer—until another best guess comes along.

Dipak: Yes. There are two sides of this experimentation phase of the industry. One side is financial: the investors in VR. The other side is creative: the filmmakers and directors who are constantly trying new techniques in VR filmmaking. They have to co-exist.

Mike: Why did Zeality choose Final Cut Pro X?

Martin: Aside from ease of use? Here’s my top three reasons…

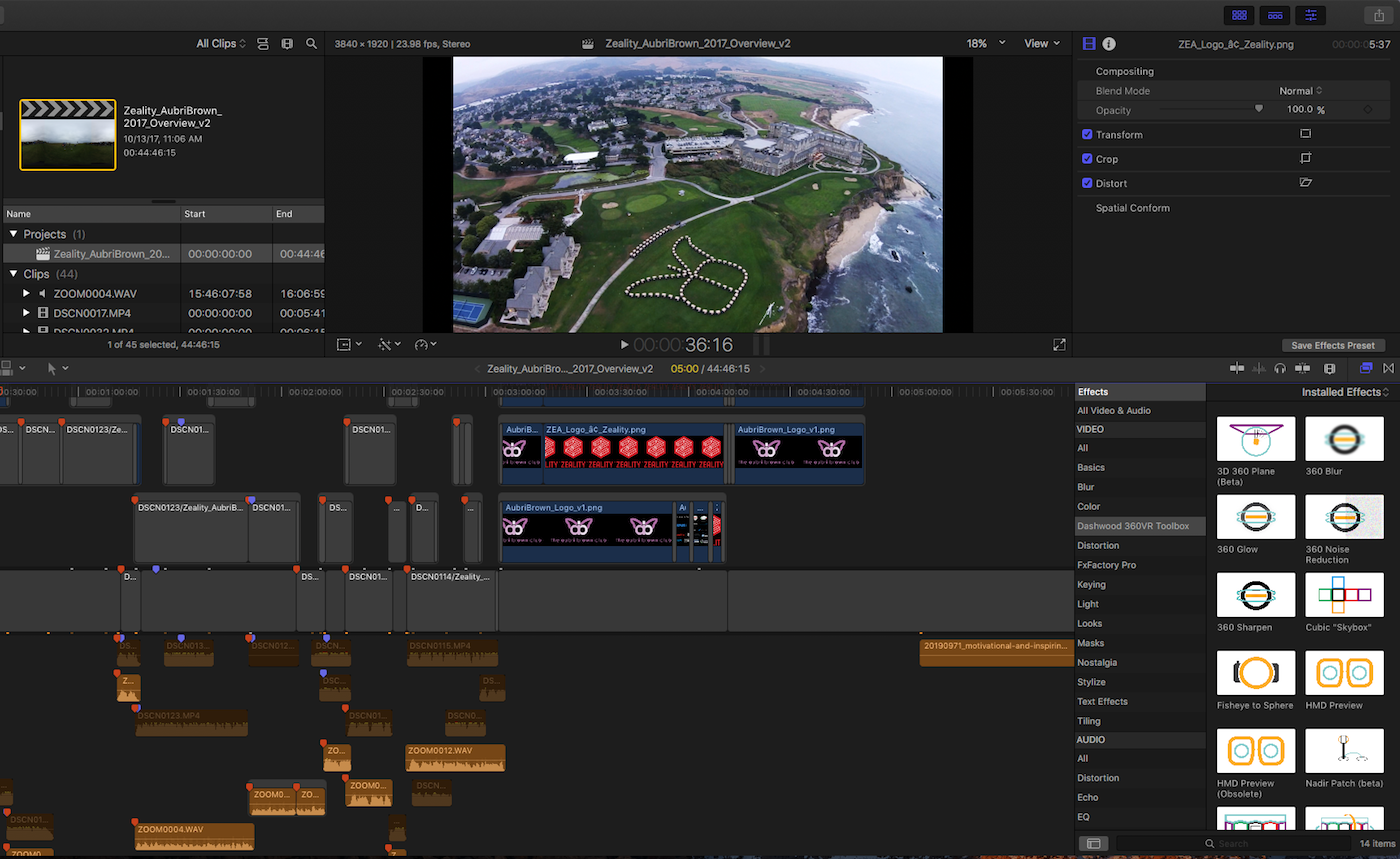

- Metadata tagging

- GPU acceleration

- Dashwood Plug-ins (Still stunned that they are free!)

One big challenge is simply staying organized over the long run. In the beginning, there are beautiful file structures, a folder for this, a folder for that. And then during the last days of editing, all of the file structures kind of go to crap. You just start chucking files anywhere, thinking you will remember to move them later. A platform, hardware or software, that avoids that is always welcome.

Final Cut Pro X and its metadata, tagging, and automation features allow for a bit of sloppiness while still keeping basic organization in place. Of course, you need to have the discipline to set a good base, but that’s true with any NLE. Because we are sometimes on extremely tight schedules, things can get a little frantic and our normal workflow turns into a flurry of activity. Fortunately, the nature of Final Cut Pro X keeps the chaos in line.

Graphics acceleration is a place Final Cut Pro X excels. It is, in fact, better than any other editing platform at utilizing the onboard AMD GPUs for normal functions such as scrubbing the timeline, playback, and rendering effects, but I have found it most impressive when adjusting a masked color grade. A lot of platforms struggle to render functions like that on the fly at higher resolutions, but Final Cut Pro X does it with ease.

The Dashwood plug-ins I use most often are “Project 2D on Sphere,” which corrects the distortion on any 2D graphic I edit into the timeline, and “Re-orient Sphere,” which adjusts the X, Y, and Z axes to level a shot or rotate the zero position (the starting direction of the viewer’s head). We are very excited about the upcoming version of Final Cut Pro X that Apple previewed at WWDC. While the Dashwood plug-ins are great, we’re eager for 360 features to be built into the app.
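As a rough illustration of what rotating the zero position means: in an equirectangular 360 frame, each pixel column corresponds to a compass direction, so turning the starting view about the vertical axis amounts to sliding the columns sideways with wrap-around. The sketch below is not how the Dashwood plug-in is implemented; the function name `reorient_yaw` and the list-of-rows frame format are assumptions made for the example, and only the vertical (yaw) axis is handled, since tilting or rolling the sphere requires resampling pixels.

```python
def reorient_yaw(frame, yaw_degrees):
    """Rotate an equirectangular frame about the vertical axis.

    `frame` is a list of rows, each row a list of pixel values
    (a hypothetical stand-in for real image data). A positive yaw
    moves the viewer's starting direction to the right, so the
    picture content slides left and wraps around the seam.
    """
    width = len(frame[0])
    # Convert the angle into a whole number of pixel columns.
    shift = round(yaw_degrees / 360.0 * width) % width
    return [row[shift:] + row[:shift] for row in frame]


# One row of eight "pixels": turning 90 degrees moves content by two columns.
print(reorient_yaw([[0, 1, 2, 3, 4, 5, 6, 7]], 90))
# [[2, 3, 4, 5, 6, 7, 0, 1]]
```

Because the image wraps at the seam, no pixels are lost in a pure yaw rotation; the frame is simply re-centered on a new direction.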

In 360, directing is more critical than ever. It’s just a lot more subtle. – Adam Dubov

Mike: Let’s run through your workflow. Start from the start.

Martin: We use auto-stitch cameras to eliminate the stitching process so that the content can be turned around faster to meet the deadline requirements of a sports media workflow. The camera we worked with most was the Nikon KeyMission 360, although since then I have had some experience with the Garmin Virb and believe it to be the best on the market at the moment.

I ingest everything into our central storage system whilst simultaneously backing up to a raw media archive and an edit backup that mirrors the edit file structure. Our main central storage is the G-Technology G-Rack12 and G-Speed Shuttle XL.

Mike: Are your editing station(s) hooked in to that storage too?

Martin: Generally, yes. Our New Mac Pro and 15″ MacBook Pro connect via Thunderbolt 2 and 10Gig Ethernet, but we’re always switching things up.

I go through all the footage, log all the shots, check for errors, and flag any shots that need color or brightness smoothing on one of the cameras if they were blown out by lights.

Mike: This is in Final Cut Pro X? If so, what specific features help you with this and how?

Martin: Yes, Final Cut Pro X. All the flagged shots that need fixing get a feathered mask applied to them so that brightness and contrast can be brought into line so both cameras match. I use native controls in Final Cut Pro X to draw a mask over the area that I need to adjust much like you would on a still image. Final Cut Pro X is very effective with this because of the way it utilizes the GPU, so you don’t have to wait to render or compromise on quality by playing something at lower resolution or reduced frame rate. Also, we are able to edit with full-res files directly on the timeline, which speeds things up rather than having to do an offline and re-conform later. When it is time for final color grade, we do a standard one-light color adjustment once we have signed off the content edit. We try not to mess around with the color grade too much so as to create a more real-world environment.

Mike: How is sound handled?

Martin: The cameras don’t pick up the best audio, so when we need focused audio recording, we use hardware with a small footprint like the Zoom H2n recorder.

During editing, we use standard controls, key-framing volume levels, etc. It rarely gets complicated. Most of the content we create is cut to music, but we always try to mix in atmospheric sounds. Because this is an immersive medium, even subtle atmospheric audio helps the viewer relax into the content.

Mike: So you deliver final picture from Final Cut Pro X?

Martin: Yes. For reviews we will output then upload to our platform on a private and secure channel. Our clients can view easily on our web player or on a mobile device. Then, upon approval, we deliver everything via our client’s preferred FTP provider.

Mike: Let’s get into the creative, the story-telling. This is not the narrative stuff people are used to looking at.

Martin: People ask, “How do we tell stories?”

We’ve got to figure out how a new story gets told. It is a brand new way of thinking. It is not a new version of something else.

I think that is what we stepped into first. When we were shooting, we went in there thinking, We are going to tell this, and we are going to tell that. We thought, Yeah, this is the story. Before we ended up getting there, we had to simplify what we were doing, go back to the beginning, and learn storytelling in this new medium. The first few bits that we did ended up just gathering environments.

Adam: I found myself having to unlearn a lot of habits from 2D linear filmmaking. For example, I remember shooting some stuff with the 49ers on the sidelines of players getting ready, practicing before a game, and throwing passes to each other. I remember subtly turning the monopod back and forth, something I would do with a still camera, like a tennis match, in this case two guys throwing the ball. Then realizing, I don’t have to do that. In fact this is going to look really weird. In fact this is going to be unusable!

We are very young in terms of narrative storytelling this way. Ultimately I think the appeal of immersive content and immersive media in general is really the opportunity to present the viewer with a chance to be present versus the kind of leaned-back subjective viewpoint that people have when they’re looking at a television or movie screen. They also have some sense of agency and participation because they are able to navigate that image, that environment, that sphere.

In the context of that, we are always trying to discover any tool, technology or software, that helps us through the process of making immersive media, 360 camera, 360 video and VR. Auto-stitch cameras and Final Cut Pro X are examples of those tools.

Mike: In traditional filmmaking you are directing. Traditional storytelling goes back to ancient Greeks, when they figured out how to effectively tell a story. It sounds like you are giving the opportunity to the viewer to create a story. But you still have to give them something story-worthy. How much do you guys direct what you want the story to be? There’s so much opportunity for the viewer’s attention to go, perhaps, where you don’t want it to go.

Adam: What is interesting to me, in coming from a linear TV and film storytelling background, there’s a tendency with people today with 360 cameras to think, Oh! You’re not directing at all. And, in fact, there are a lot of people who just think, Okay. We’ll just put on a monopod. Turn it on. Get the hell out of the way. That’s abdicating the role of the director in a traditional sense.

One challenge is the fact that the lenses are so wide angle (combinations of fisheye lenses) that things get small very quickly as they recede from the camera. Directing the viewer’s eyes becomes more challenging. In 360, directing is more critical than ever. It’s just a lot more subtle.

Martin: Also, we started experimenting with tools that we can use in post-production, like key-framing a rotation of the sphere. Not to force the viewer’s eye, but just gently putting a finger on the chin to say, “Just look over here, what’s happening here?” We try to guide attention without screaming, “Look over there!!” The viewer must be allowed to explore and look around with minimal encouragement.
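To make the idea of a gentle key-framed rotation concrete, here is a minimal sketch of easing a sphere's yaw angle between two keyframes. It is an illustration only, not Zeality's or Final Cut Pro X's method: the function name `eased_yaw`, the parameter names, and the choice of a smoothstep curve are all assumptions. The point is that the rotation starts and ends slowly, so the viewer is nudged rather than yanked.

```python
def eased_yaw(t, start_time, end_time, start_yaw, end_yaw):
    """Return the sphere's yaw (in degrees) at time t (in seconds).

    Before the first keyframe the yaw holds at start_yaw, after the
    second it holds at end_yaw, and in between it follows a smoothstep
    curve (an assumed easing choice) so the motion ramps in and out.
    """
    if t <= start_time:
        return start_yaw
    if t >= end_time:
        return end_yaw
    u = (t - start_time) / (end_time - start_time)  # progress from 0 to 1
    eased = u * u * (3 - 2 * u)                     # smoothstep easing
    return start_yaw + (end_yaw - start_yaw) * eased


# Turn the view 30 degrees between the 2 s and 6 s marks.
for second in (2, 3, 4, 5, 6):
    print(second, eased_yaw(second, 2, 6, 0, 30))
```

With these values the rotation covers fewer than five degrees in the first second and the same in the last, with most of the movement happening in the middle of the move.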

One of the tools that Zeality has put together is RE/LIVE, which records your view around the 360 while at the same time recording the reverse cameras. It’s a tool for reactions, and it’s interesting watching people’s Re/Lives. They help us figure out where our viewers are looking. With some people the first thing they did was look at the ground. “Oh look, my feet!” or “Look, something in the sky!” That disappears very quickly and then we see exploration: are you looking at what’s moving faster through the frame, or slower? It is very useful for us to watch back people’s Re/Lives and discover what is holding their attention and what would be a good place to draw the eye, where to gently direct their focus.
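One simple way to turn recorded viewing directions into a picture of where attention goes is to count how often viewers face each slice of the sphere. The sketch below assumes a made-up data format, a plain list of yaw angles in degrees sampled during playback; it does not reflect how RE/LIVE actually stores or analyzes its recordings, and the name `yaw_histogram` is hypothetical.

```python
from collections import Counter


def yaw_histogram(samples, bin_degrees=30):
    """Count view-direction samples per horizontal slice of the sphere.

    `samples` is a list of yaw angles in degrees (assumed format).
    Angles are wrapped into 0-360 first, so -5 and 355 land in the
    same slice. Keys are the starting angle of each slice.
    """
    counts = Counter()
    for yaw in samples:
        wrapped = yaw % 360
        counts[int(wrapped // bin_degrees) * bin_degrees] += 1
    return counts


# Hypothetical samples from one viewing session.
session = [5, 10, 355, -5, 95, 100, 29.9]
print(yaw_histogram(session).most_common(1))
# [(0, 3)]
```

Slices that collect the most samples suggest where the eye is already going, which in turn hints at where a subject or a gentle rotation might be placed.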

Mike: So when you look at this information, and you are editing something together, do you think it takes longer because you have to figure that stuff out?

Martin: That’s a tough one. When it comes to editing this type of content, Adam and I work side-by-side. I’m the one in the editor’s chair, and Adam is producing. We work out “content bullet points” together. We do some of the directing in post-production, and that can be more time consuming.

If we can understand what the viewer is looking at, we can figure out where to better place the camera. Ultimately, we are trying to take you somewhere that you have not been before—maybe inside a locker room or behind the bench of the San Jose Sharks or on the field with the 49ers. We are always learning more about where the viewers may want to go, and we try to get them there.

Mike: What techniques and technologies are you excited about?

Dipak: Anything that turns 360 photos and videos into interactive canvases!

One is a scavenger hunt game around 360 photo and video that we are working on. It allows customers to create a game around their 360 media. It’s a sponsored game, overlaid across prebuilt content, in which players look for labels, things, Coke bottles, mascots, etc. The first team to deploy this is the San Jose Sharks. The winner gets a Zamboni ride! Another is our Re/Lives, which allow users to create a reaction video of a 360 video to share a unique point of view.

Mike: Who is doing interesting and exciting 360 VR work these days?

Dipak: There is so much great work that is being done by the early adopters. We all are paving the way for the future of immersive media. In particular, Gabo Arora, for his empathic and deep storytelling, and Scott Kegley, who works with the Minnesota Vikings, for his amazingly quick turnaround workflow. The community is so willing to share and exchange ideas around what’s working or not.

Mike: What are your favorite ways to view 360?

Dipak: I personally enjoy experiencing 360 media on my iPad as it provides a nice big screen to explore. I also like to have my content be part of a richer user experience, which allows me to interact with the media more than simply “looking around.”

Mike: So we have this new world. What is the future of 360 video?

Dipak: The future is tied to the overall awareness and adoption of the media format. As social networks deploy 360 photos and videos to their applications, it will become more pervasive over time. We realize there is an adoption curve that is tied to the ability for fresh new content to be delivered through the social channels. In the future, people are not going to watch content; they will experience it. They will not watch stories. They will experience them. They will interact with them.

LINKS

https://www.oculus.com/experiences/gear-vr/1462012723846452/

https://www.sporttechie.com/san-jose-sharks-become-first-nhl-team-introduce-zeality-technology/

https://www.youtube.com/watch?v=xay3FLbtkMw

http://sanfrancisco.giants.mlb.com/mobile/ballpark/index.jsp?c_id=sf
