Given the resources put into upgrading film and TV equipment in the last couple of decades, it’d be easy to assume that we were working toward some sort of goal. For a while, in the early 2000s, that was perhaps true, although we stopped calling that goal “film look” pretty quickly. The problem is, even once we’d fulfilled that desire, which we arguably had by the late 2000s, we kept pushing toward… well… something, and that’s meant constantly-moving goalposts and at least a lessening of the feeling that one is part of a century-old artform.

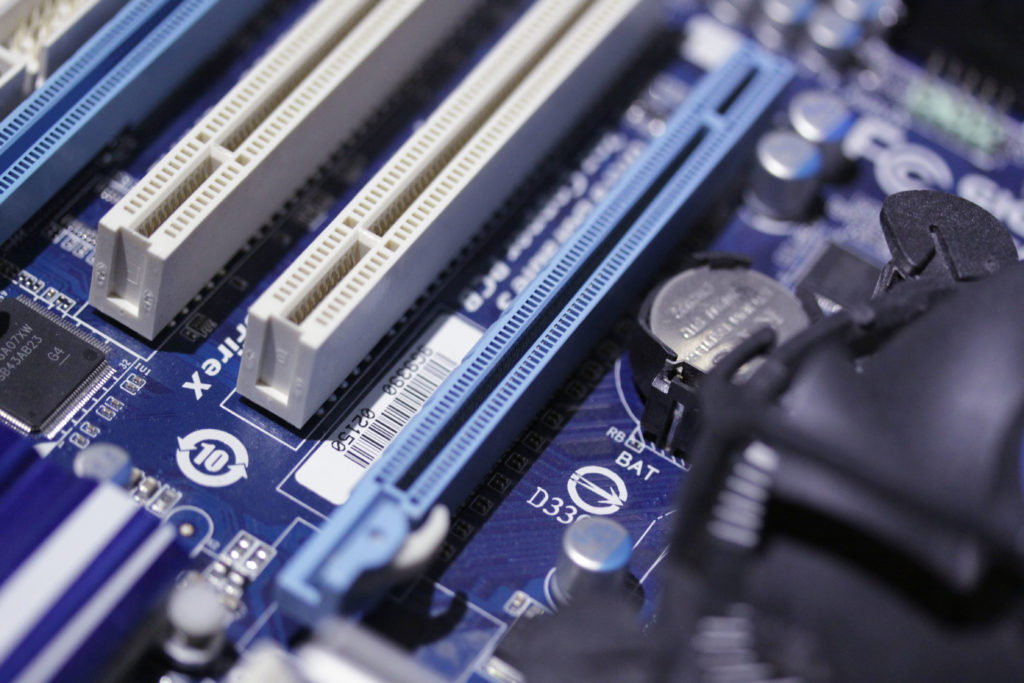

Once, the goals were well-established and progress toward them reliable. In 1990, a state-of-the-art Intel processor was a 25MHz 386SL with 855,000 transistors. Digital handling of film-resolution material was rare because the available computers could barely handle it. Four years later the 486DX4 had four times the clock rate, almost double the number of transistors and sundry other improvements. By 2001, the company was shipping 1.4GHz processors with 28 million transistors, for fifty-six times the performance of that 386 based on clock rate alone, and more besides.

And during that time, the requirements of film and TV had barely moved. Standard-definition was still common in broadcast production and film scans were routinely 2K, barely bigger than an HD frame (and they still are). Digital film finishing went from barely possible to merely difficult. Standard-definition editing went from possible to easy, and through easy to being an absolute pleasure, which matters, because this is an artform and the tools need to get out of the way.

Then, one of the world’s brainier trusts decided that HD was a good idea. It was hard to argue; the gulf in image quality between cinema and VHS is memorable to those of us with memories that long. The problem was that workstations which had zipped along happily in the days of conventional TV became sullen and unresponsive, and creative people found themselves gaining weight from all the donuts eaten while progress bars inched along.

Technological upgrades

Since then we’ve thrown some new technology at the problem. GPUs, and vector processing in general, are such a good fit for a lot of film and TV workloads that we could keep believing, for a while, that the glory days of computers being upgraded at a rate of 50% a year would continue. They haven’t. Meanwhile we’ve advanced through HD to UHD, with greater bit depths and higher frame rates; in 2021 even a YouTube video is considered backward if it isn’t produced in 4K at 60fps.

Similar things have happened to displays. For years, a reference display was inevitably a big glass bottle full of nothing but electrons. It wasn’t generally very bright, but the standards had been written around the technology (not the other way around). Displays capable of closely approximating those standards weren’t cheap, but at least they existed, and they were soon to be replaced by LCDs whose surging capability made such displays cheap and easy.

Then, someone invented HDR and defined it by a set of standards that are notoriously tricky to implement even with the best tech we have.

Everything became expensive and difficult again. We can say the same about imaging sensors. It’s been possible to make very respectable sensors the size of a super-35 film frame, with resolution similar to a super-35 film frame, for some time. Even now, though, it’s difficult to make 8K sensors that size without compromising something in terms of noise and dynamic range, so we make bigger 8K sensors, demanding larger lenses. That leads to issues with storage; as flash storage makes life easier and easier, we bump up resolutions and bitrates just enough that the tail is wagging the dog again. There are plenty more examples.

Losing something

But this isn’t really about computers. Films and TV shows are now made with vastly more sophistication than ever before, regardless of the mechanism for that happening. The image quality of even straightforward factual television is vastly better than what was possible even in the days of early 2/3” HD cameras. Excess resolution lets all the tools of post production work extra-well, from chromakey, stabilisation and reframing, to feature tracking for VFX, not to mention giving operators plenty of viewfinder look-around and focus pullers some warning as to when things are going soft.

The downside is that few people now have the sort of equipment familiarity that a mid-century cinematographer might have enjoyed in the days when a film stock and lens combination might remain cutting-edge for a decade. Something is lost in that. Still, the sound department, with its low data rates, crossed that technological singularity a while ago, and everything’s worked out quite well. Until the picture department does the same, all we can do is try to keep up any way we can.
